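CogVLM is a powerful pretrained model for understanding and generating text grounded in visual input, covering tasks such as image captioning and visual question answering. Pretrained on large-scale data, it fuses visual signals with language knowledge to improve cross-modal understanding and generation. The core of CogVLM is its multimodal fusion mechanism: the model takes text and images as input and learns a joint representation through a deep network, so that information from the two modalities can complement and interact with each other. As a result, the model not only understands language but can also "see" and interpret images, which lets it give more complete responses.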

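Hardware requirements (model inference):

INT4: RTX 3090 x1, 24 GB VRAM, 32 GB RAM, 200 GB system disk

INT4: RTX 4090 x1 or RTX 3090 x2, 24 GB VRAM, 32 GB RAM, 200 GB system disk

Fine-tuning has higher hardware requirements and is generally not recommended on a personal machine.

To run the official web UI (streamlit run composite_demo/main.py) you need more than 40 GB of VRAM, which means at least two RTX 3090 cards. With less, the demo is essentially unusable.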
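As a rough pre-flight check against the figures above, the following sketch sums the memory of the visible GPUs and compares it with a required amount. The function names and the 5% tolerance are assumptions made for illustration (a nominal 24 GB card typically reports slightly less), and summing across cards only makes sense when the workload can actually be split over several GPUs.

```python
from typing import List


def gpu_memory_gib() -> List[float]:
    """Return the total memory of each visible CUDA GPU in GiB.

    Returns an empty list when torch is not installed or CUDA is unavailable.
    """
    try:
        import torch
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    return [
        torch.cuda.get_device_properties(i).total_memory / 1024**3
        for i in range(torch.cuda.device_count())
    ]


def meets_requirement(mem_gib: List[float], required_gb: float,
                      tolerance: float = 0.05) -> bool:
    """True if the combined GPU memory reaches the requirement.

    The tolerance (an assumption) allows for cards that report a little
    less than their nominal size.
    """
    return sum(mem_gib) >= required_gb * (1.0 - tolerance)


if __name__ == "__main__":
    mem = gpu_memory_gib()
    print("GPUs (GiB):", [round(m, 1) for m in mem])
    print("INT4 inference (24 GB):", meets_requirement(mem, 24))
    print("Web demo (40 GB):", meets_requirement(mem, 40))
```

On a machine without a GPU the script simply prints an empty list and False for both checks.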

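Environment setup

Model preparation

Manually download the following models (you do not need all of them just to try things out).

Download address: https://hf-mirror.com/THUDM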

lmsys/vicuna-7b-v1.5

THUDM/cogagent-chat-hf

THUDM/cogvlm-chat-hf

THUDM/cogvlm-grounding-generalist-hf
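If you would rather script the download than fetch the files by hand, a minimal sketch using huggingface_hub could look like this. It assumes huggingface_hub is installed, that the mirror is selected through the HF_ENDPOINT environment variable, and that the models go under /u01/workspace/cogvlm/models (the directory used later in this post). The helper names and the --download flag are hypothetical.

```python
import os
import sys
from typing import Dict, List

# Assumption: the mirror is selected via HF_ENDPOINT, which has to be set
# before huggingface_hub is imported.
os.environ.setdefault("HF_ENDPOINT", "https://hf-mirror.com")

MODEL_ROOT = "/u01/workspace/cogvlm/models"

REPOS: List[str] = [
    "lmsys/vicuna-7b-v1.5",
    "THUDM/cogagent-chat-hf",
    "THUDM/cogvlm-chat-hf",
    "THUDM/cogvlm-grounding-generalist-hf",
]


def local_dirs(repos: List[str], root: str) -> Dict[str, str]:
    """Map each repo id to <root>/<repo name>."""
    base = root.rstrip("/")
    return {repo: f"{base}/{repo.split('/')[-1]}" for repo in repos}


def download_all(root: str, repos: List[str] = REPOS) -> None:
    """Download every repo into its own directory under root."""
    # Imported lazily so the plan can be printed without the package installed.
    from huggingface_hub import snapshot_download

    for repo_id, target in local_dirs(repos, root).items():
        snapshot_download(repo_id=repo_id, local_dir=target)


if __name__ == "__main__":
    for repo_id, target in local_dirs(REPOS, MODEL_ROOT).items():
        print(f"{repo_id} -> {target}")
    # Only download when explicitly asked to; the models are large.
    if "--download" in sys.argv:
        download_all(MODEL_ROOT)
```

Run without arguments, the script only prints where each model would be stored.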

Download the source code

git clone https://github.com/THUDM/CogVLM.git

cd CogVLM

Create the conda environment

conda create -n cogvlm python=3.11 -y

source activate cogvlm

Switch pip to a mirror in mainland China

pip config set global.index-url http://mirrors.aliyun.com/pypi/simple

pip config set install.trusted-host mirrors.aliyun.com

Install dependencies

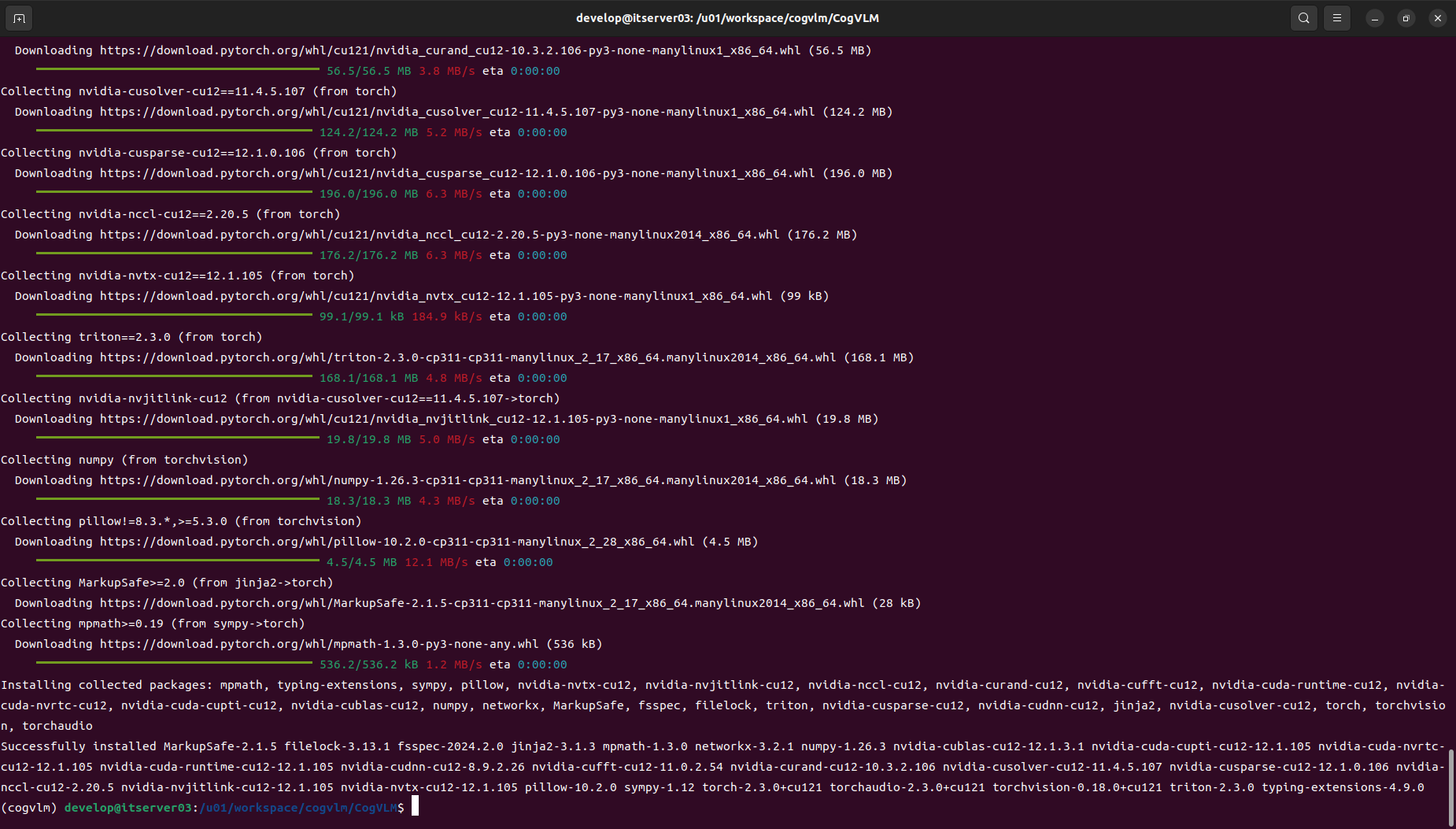

Install torch, torchvision, and torchaudio

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

Install cuda-runtime

(cogvlm) develop@itserver03:/u01/workspace/cogvlm/CogVLM$: conda install -y -c "nvidia/label/cuda-12.1.0" cuda-runtime

The following NEW packages will be INSTALLED:

cuda-cudart nvidia/label/cuda-12.1.0/linux-64::cuda-cudart-12.1.55-0

cuda-libraries nvidia/label/cuda-12.1.0/linux-64::cuda-libraries-12.1.0-0

cuda-nvrtc nvidia/label/cuda-12.1.0/linux-64::cuda-nvrtc-12.1.55-0

cuda-opencl nvidia/label/cuda-12.1.0/linux-64::cuda-opencl-12.1.56-0

cuda-runtime nvidia/label/cuda-12.1.0/linux-64::cuda-runtime-12.1.0-0

libcublas nvidia/label/cuda-12.1.0/linux-64::libcublas-12.1.0.26-0

libcufft nvidia/label/cuda-12.1.0/linux-64::libcufft-11.0.2.4-0

libcufile nvidia/label/cuda-12.1.0/linux-64::libcufile-1.6.0.25-0

libcurand nvidia/label/cuda-12.1.0/linux-64::libcurand-10.3.2.56-0

libcusolver nvidia/label/cuda-12.1.0/linux-64::libcusolver-11.4.4.55-0

libcusparse nvidia/label/cuda-12.1.0/linux-64::libcusparse-12.0.2.55-0

libnpp nvidia/label/cuda-12.1.0/linux-64::libnpp-12.0.2.50-0

libnvjitlink nvidia/label/cuda-12.1.0/linux-64::libnvjitlink-12.1.55-0

libnvjpeg nvidia/label/cuda-12.1.0/linux-64::libnvjpeg-12.1.0.39-0

Downloading and Extracting Packages:

libcublas-12.1.0.26 | 329.0 MB | | 0%

libcusparse-12.0.2.5 | 163.0 MB | | 0%

libnpp-12.0.2.50 | 139.8 MB | | 0%

libcufft-11.0.2.4 | 102.9 MB | | 0%

libcusolver-11.4.4.5 | 98.3 MB | | 0%

libcurand-10.3.2.56 | 51.7 MB | | 0%

cuda-nvrtc-12.1.55 | 19.7 MB | | 0%

libnvjitlink-12.1.55 | 16.9 MB | | 0%

libnvjpeg-12.1.0.39 | 2.5 MB | | 0%

libcufile-1.6.0.25 | 763 KB | | 0%

cuda-cudart-12.1.55 | 189 KB | | 0%

cuda-opencl-12.1.56 | 11 KB | | 0%

cuda-libraries-12.1. | 2 KB | | 0%

cuda-runtime-12.1.0 | 1 KB | | 0%

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

(cogvlm) develop@itserver03:/u01/workspace/cogvlm/CogVLM$
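After installing torch and cuda-runtime it is worth confirming that PyTorch can actually see the GPU. The sketch below is one way to do that; the function name and the report keys are made up for illustration, and it degrades to a minimal report when torch is not importable.

```python
from typing import Any, Dict


def torch_cuda_report() -> Dict[str, Any]:
    """Collect basic facts about the local torch/CUDA installation."""
    report: Dict[str, Any] = {"torch_installed": False}
    try:
        import torch
    except ImportError:
        return report
    report.update(
        torch_installed=True,
        torch_version=torch.__version__,
        # CUDA version the wheel was built against; None for CPU-only builds.
        built_for_cuda=torch.version.cuda,
        cuda_available=torch.cuda.is_available(),
        gpu_names=[
            torch.cuda.get_device_name(i)
            for i in range(torch.cuda.device_count())
        ],
    )
    return report


if __name__ == "__main__":
    for key, value in torch_cuda_report().items():
        print(f"{key}: {value}")
```

If cuda_available comes back False even though a GPU is present, the driver or the CUDA build of torch is the first thing to check.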

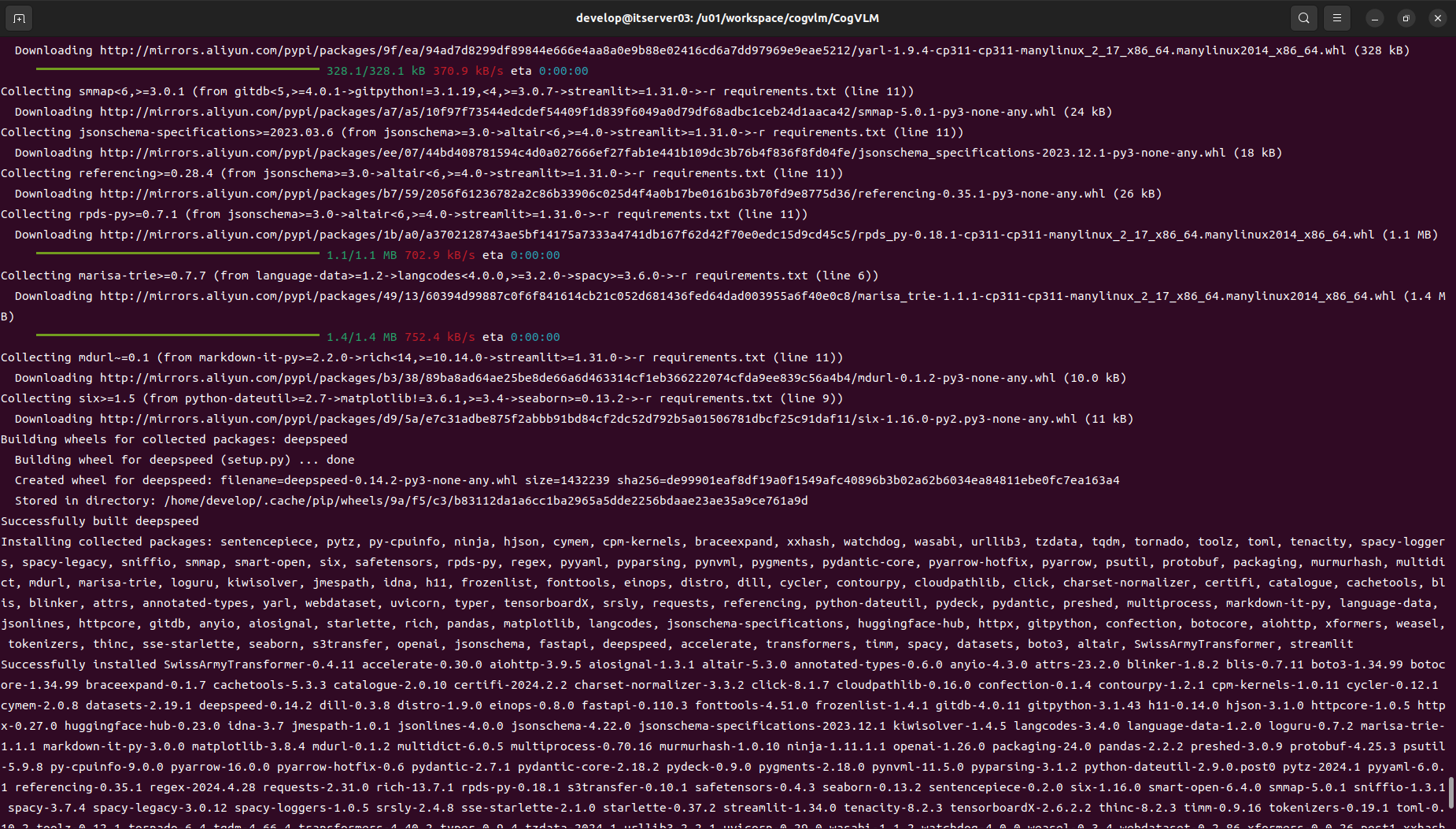

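Install the CogVLM dependencies

pip install -r requirements.txt

After installation, launching the web UI may fail with errors caused by package dependency problems. Do not use the latest huggingface_hub; pin it to huggingface_hub==0.21.4. bitsandbytes and chardet may also need to be installed again separately; at least that was the case in my environment.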

pip install bitsandbytes

pip install chardet

pip install huggingface_hub==0.21.4
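To confirm that the three packages ended up in the expected state, a small check based on the standard library's importlib.metadata can help. This is only a sketch: the PINS table and the check function are hypothetical names, and only huggingface_hub has a pinned version here because that is the only pin mentioned above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional

# Package name -> required version (None means any installed version is fine).
PINS: Dict[str, Optional[str]] = {
    "huggingface_hub": "0.21.4",
    "bitsandbytes": None,
    "chardet": None,
}


def check(pins: Dict[str, Optional[str]]) -> Dict[str, str]:
    """Return a short status string for each package in pins."""
    result: Dict[str, str] = {}
    for name, wanted in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            result[name] = "missing"
            continue
        if wanted is None or installed == wanted:
            result[name] = f"ok ({installed})"
        else:
            result[name] = f"mismatch (installed {installed}, wanted {wanted})"
    return result


if __name__ == "__main__":
    for name, status in check(PINS).items():
        print(f"{name}: {status}")
```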

Install the spaCy language model (optional)

python -m spacy download en_core_web_sm

Running

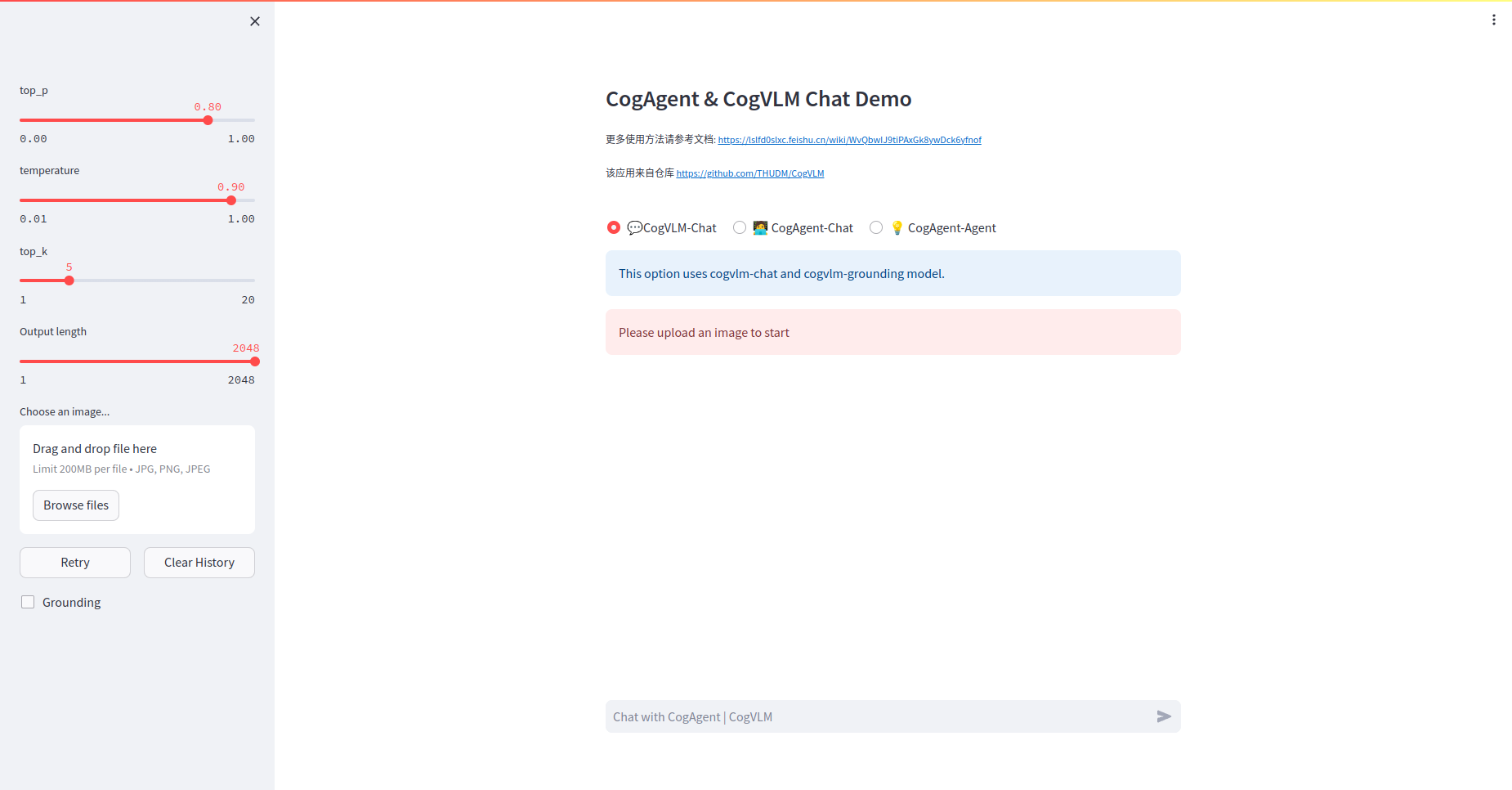

Running the web UI

Before running, change the model paths: edit the default paths in composite_demo/client.py.

models_info = {

'tokenizer': {

#'path': os.environ.get('TOKENIZER_PATH', 'lmsys/vicuna-7b-v1.5'),

'path': os.environ.get('TOKENIZER_PATH', '/u01/workspace/cogvlm/models/vicuna-7b-v1.5'),

},

'agent_chat': {

#'path': os.environ.get('MODEL_PATH_AGENT_CHAT', 'THUDM/cogagent-chat-hf'),

'path': os.environ.get('MODEL_PATH_AGENT_CHAT', '/u01/workspace/cogvlm/models/cogagent-chat-hf'),

'device': ['cuda:0']

},

'vlm_chat': {

#'path': os.environ.get('MODEL_PATH_VLM_CHAT', 'THUDM/cogvlm-chat-hf'),

'path': os.environ.get('MODEL_PATH_VLM_CHAT', '/u01/workspace/cogvlm/models/cogvlm-chat-hf'),

'device': ['cuda:0']

},

'vlm_grounding': {

#'path': os.environ.get('MODEL_PATH_VLM_GROUNDING','THUDM/cogvlm-grounding-generalist-hf'),

'path': os.environ.get('MODEL_PATH_VLM_GROUNDING','/u01/workspace/cogvlm/models/cogvlm-grounding-generalist-hf'),

'device': ['cuda:0']  # GPU index; adjust to your setup

}

}
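Because each path in models_info is read through os.environ.get, the same result can probably be had without editing client.py, by exporting the four variables before launching the demo. The following is a sketch under that assumption; demo_env and launch are hypothetical helper names, and the directory layout is the one used in this post.

```python
import os
import subprocess
from typing import Dict, Mapping

MODEL_ROOT = "/u01/workspace/cogvlm/models"


def demo_env(model_root: str, base: Mapping[str, str]) -> Dict[str, str]:
    """Copy base and add the four path variables read by client.py."""
    root = model_root.rstrip("/")
    env = dict(base)
    env.update({
        "TOKENIZER_PATH": f"{root}/vicuna-7b-v1.5",
        "MODEL_PATH_AGENT_CHAT": f"{root}/cogagent-chat-hf",
        "MODEL_PATH_VLM_CHAT": f"{root}/cogvlm-chat-hf",
        "MODEL_PATH_VLM_GROUNDING": f"{root}/cogvlm-grounding-generalist-hf",
    })
    return env


def launch(model_root: str = MODEL_ROOT) -> None:
    """Start the Streamlit demo with the model paths set via the environment.

    Must be called from the CogVLM repository root.
    """
    subprocess.run(
        ["streamlit", "run", "composite_demo/main.py"],
        env=demo_env(model_root, os.environ),
        check=True,
    )
```

Calling launch() from the repository root starts the demo with these paths; the device entries still have to be set in client.py itself.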

Run the launch command

streamlit run composite_demo/main.py

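Once it starts successfully, you can open the web UI.

Running interactively in the console

Run cli_demo_hf.py from the basic_demo directory, replacing the model path with your own: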

python cli_demo_hf.py --from_pretrained /u01/workspace/cogvlm/models/cogvlm-chat-hf --fp16 --quant 4

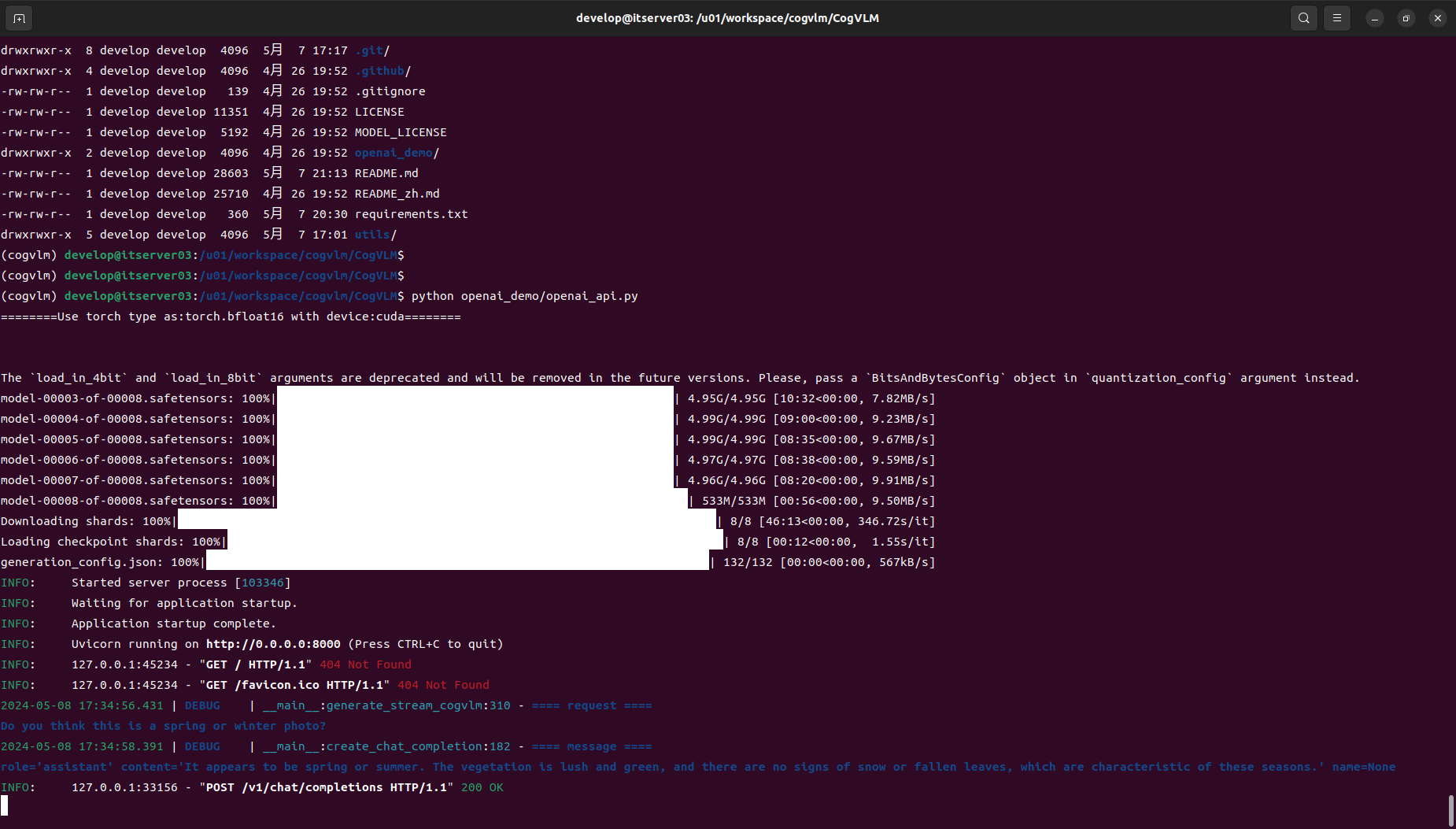

Running as an OpenAI-style RESTful API

Start the server

python openai_demo/openai_api.py

Client request

Edit the image path and the question you want to ask in openai_demo/openai_api_request.py, for example:

messages = [

{

"role": "user",

"content": [

{

"type": "text",

"text": "What’s in this image?",

},

{

"type": "image_url",

"image_url": {

"url": img_url

},

},

],

},

{

"role": "assistant",

"content": "The image displays a wooden boardwalk extending through a vibrant green grassy wetland. The sky is partly cloudy with soft, wispy clouds, indicating nice weather. Vegetation is seen on either side of the boardwalk, and trees are present in the background, suggesting that this area might be a natural reserve or park designed for ecological preservation and outdoor recreation. The boardwalk allows visitors to explore the area without disturbing the natural habitat.",

},

{

"role": "user",

"content": "Do you think this is a spring or winter photo?"

},

]

if __name__ == "__main__":
    simple_image_chat(use_stream=False, img_path="/u01/workspace/cogvlm/CogVLM/openai_demo/demo.jpg")

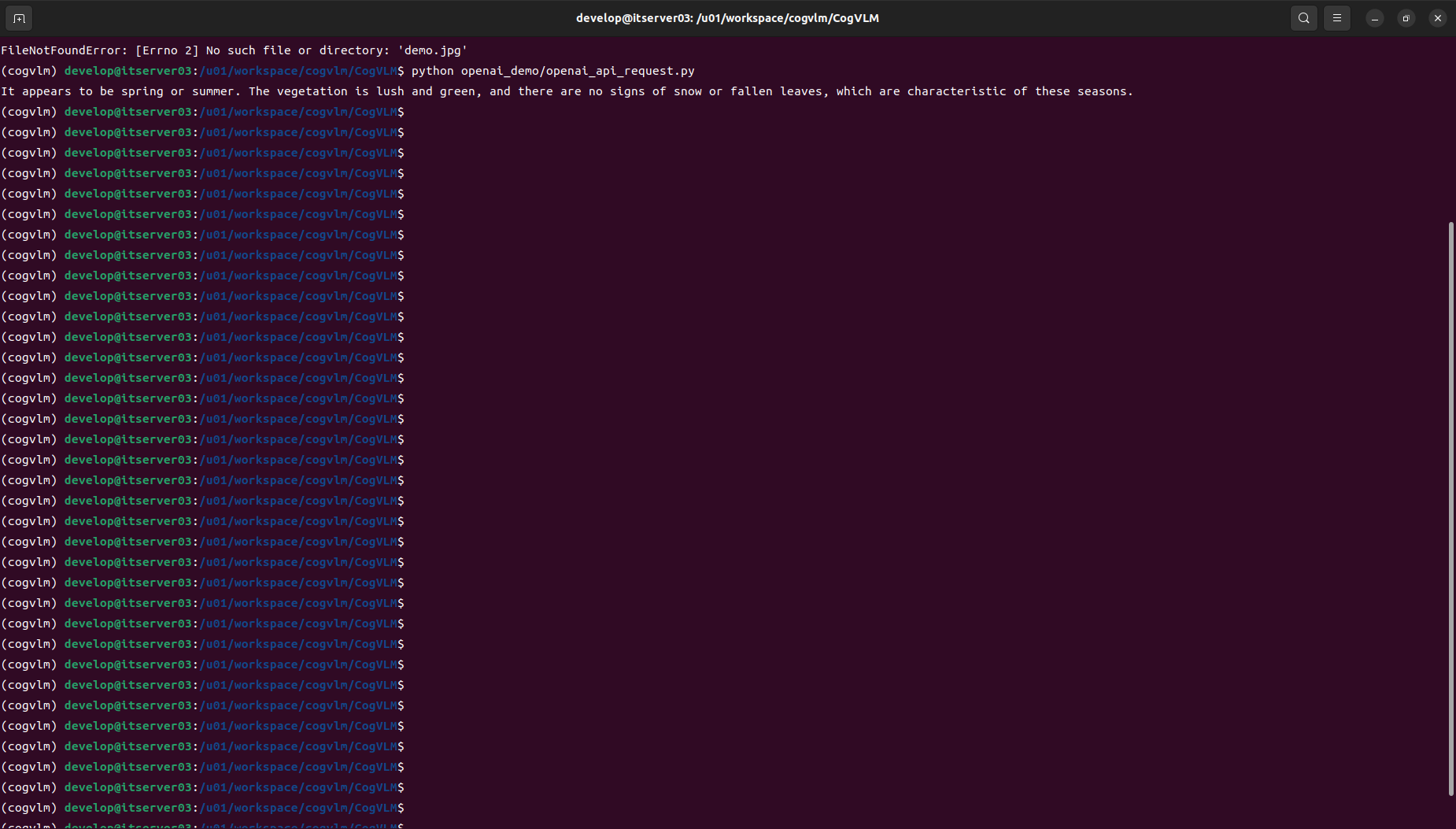

Run the client request

python openai_demo/openai_api_request.py

Docker containerized deployment

Sample Dockerfile

Note: adjust the line COPY CogVLM/ /app/CogVLM/ to match where the CogVLM source code is actually stored.

FROM pytorch/pytorch:2.2.1-cuda12.1-cudnn8-runtime

ARG DEBIAN_FRONTEND=noninteractive

WORKDIR /app

RUN pip config set global.index-url http://mirrors.aliyun.com/pypi/simple

RUN pip config set install.trusted-host mirrors.aliyun.com

COPY CogVLM/ /app/CogVLM/

WORKDIR /app/CogVLM

RUN pip install bitsandbytes

RUN pip install --use-pep517 -r requirements.txt

RUN pip install huggingface_hub==0.23.0

EXPOSE 8000 8051

CMD [ "python","openai_demo/openai_api.py" ]

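The pip packages that ship with the base image used here, pytorch/pytorch:2.2.1-cuda12.1-cudnn8-runtime, conflict with some of the versions pinned in the source code (xformers, torch, torchvision). After downloading the source code, modify requirements.txt as follows: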

SwissArmyTransformer>=0.4.9

transformers==4.36.2

xformers==0.0.25

#torch>=2.1.0

#torchvision>=0.16.2

spacy>=3.6.0

pillow>=10.2.0

deepspeed>=0.13.1

seaborn>=0.13.2

loguru~=0.7.2

streamlit>=1.31.0

timm>=0.9.12

accelerate>=0.26.1

pydantic>=2.6.0

# for openai demo

openai>=1.16.0

sse-starlette>=1.8.2

fastapi>=0.110.1

httpx>=0.27.0

uvicorn>=0.29.0

jsonlines>=4.0.0
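The torch and torchvision lines above are commented out so that pip leaves the versions from the base image alone. If you want to apply that change in a build script instead of by hand, a small helper along these lines could do it. The function name is hypothetical, and the xformers pin is left to be edited manually.

```python
import re
from typing import Iterable, List


def comment_out(lines: Iterable[str], packages: Iterable[str]) -> List[str]:
    """Prefix requirement lines for the given packages with '#'.

    Lines that are already comments, or that belong to other packages,
    are returned unchanged.
    """
    names = {p.lower() for p in packages}
    out: List[str] = []
    for line in lines:
        # The package name is the leading run of name characters,
        # e.g. "torchvision" in "torchvision>=0.16.2".
        match = re.match(r"[A-Za-z0-9_.\-]+", line.strip())
        if match and match.group(0).lower() in names:
            out.append("#" + line)
        else:
            out.append(line)
    return out


if __name__ == "__main__":
    with open("requirements.txt", encoding="utf-8") as fh:
        original = fh.read().splitlines()
    patched = comment_out(original, ["torch", "torchvision"])
    with open("requirements.txt", "w", encoding="utf-8") as fh:
        fh.write("\n".join(patched) + "\n")
```

Matching on the full package name keeps a line such as torchaudio from being caught by the torch rule.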

Build the image

docker build -t qingcloudtech/cogvlm:v1.1 .

Run the container

docker run -itd --gpus all \

-p 8000:8000 \

-v /u01/workspace/models:/u01/workspace/models \

-v /u01/workspace/cogvlm/images:/u01/workspace/images \

qingcloudtech/cogvlm:v1.1

Running in OpenAI API mode

docker run -itd --gpus all \

-p 8000:8000 \

-v /u01/workspace/models:/u01/workspace/models \

-v /u01/workspace/cogvlm/images:/u01/workspace/images \

qingcloudtech/cogvlm:v1.1

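Supported environment variables:

MODEL_PATH: model path, e.g. /u01/workspace/models/cogvlm-chat-hf

TOKENIZER_PATH: tokenizer path, e.g. /u01/workspace/models/vicuna-7b-v1.5

QUANT_ENABLED: defaults to true

Note: if the model paths in these environment variables point to directories mounted from the host, they must match the mounted path inside the container.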
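To make the rule about matching paths concrete, here is a sketch that assembles a docker run command with the environment variables passed through -e and refuses paths that fall outside the mounted directory. The function name is hypothetical, and it assumes the model directory is mounted at the same path inside the container, as in the commands above.

```python
import shlex
from typing import Dict, List


def docker_run_args(image: str, model_dir: str, env: Dict[str, str],
                    port: int = 8000) -> List[str]:
    """Build the argument list for docker run.

    Raises ValueError when a *_PATH variable points outside model_dir,
    since the container would not be able to see that path.
    """
    mount = model_dir.rstrip("/")
    for key, value in env.items():
        if key.endswith("_PATH") and not value.startswith(mount + "/"):
            raise ValueError(f"{key}={value} is not under the mount {mount}")
    args = [
        "docker", "run", "-itd", "--gpus", "all",
        "-p", f"{port}:{port}",
        # Same path on host and in the container keeps the variables valid.
        "-v", f"{mount}:{mount}",
    ]
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]
    args.append(image)
    return args


if __name__ == "__main__":
    cmd = docker_run_args(
        "qingcloudtech/cogvlm:v1.1",
        "/u01/workspace/models",
        {
            "MODEL_PATH": "/u01/workspace/models/cogvlm-chat-hf",
            "TOKENIZER_PATH": "/u01/workspace/models/vicuna-7b-v1.5",
            "QUANT_ENABLED": "true",
        },
    )
    # Print the command instead of running it, so it can be reviewed first.
    print(shlex.join(cmd))
```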

Test and verify

Replace 693cce5688f2 with your own container ID.

docker exec -it 693cce5688f2 python openai_demo/openai_api_request.py

root@itserver03:/u01/workspace/cogvlm/CogVLM/openai_demo# docker exec -it 693cce5688f2 python openai_demo/openai_api_request.py

This image captures a serene landscape featuring a wooden boardwalk that leads through a lush green field. The field is bordered by tall grasses, and the sky overhead is vast and blue, dotted with wispy clouds. The horizon reveals distant trees and a clear view of the sky, suggesting a calm and peaceful day.

root@itserver03:/u01/workspace/cogvlm/CogVLM/openai_demo#

Other access methods:

RESTful API address:

127.0.0.1:8000/v1/chat/completions
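The endpoint can also be called directly, without the bundled request script. The sketch below builds a chat payload in the same message format as the example earlier and posts it with the standard library. Two things are assumptions rather than facts taken from this post: that the server accepts the image as a base64 data URL, and that the model name is cogvlm-chat-17b. Check openai_demo/openai_api.py for the values your version expects.

```python
import base64
import json
import urllib.request
from typing import Any, Dict


def image_data_url(img_bytes: bytes, mime: str = "image/jpeg") -> str:
    """Encode raw image bytes as a data URL."""
    encoded = base64.b64encode(img_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_payload(img_bytes: bytes, question: str,
                  model: str = "cogvlm-chat-17b") -> Dict[str, Any]:
    """Build a single-turn request with one text part and one image part.

    The default model name is an assumption, not confirmed by this post.
    """
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url",
                 "image_url": {"url": image_data_url(img_bytes)}},
            ],
        }],
        "stream": False,
    }


def post_chat(payload: Dict[str, Any],
              base_url: str = "http://127.0.0.1:8000") -> Dict[str, Any]:
    """POST the payload to /v1/chat/completions and return the parsed JSON."""
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

A typical call reads the image file in binary mode, passes the bytes and a question to build_payload, and hands the result to post_chat while the server is running.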

