Autonomous Walking Robots

In this post, I address two questions:

- Is automation intelligence? Is one a prerequisite for the other?

- How do we train agents to act in ways that align with human goals? What limits does the standard model place on automation?

I am intentionally avoiding a couple of hot topics in reinforcement learning that pertain to generalization and task transfer (such as meta-learning or other generalizable methods). I think that most generalization to date is proportional to how well the data distribution covers multiple tasks, not to the ability of the same hyperparameters to solve numerically distinct tasks.

The good news: I think expanding our robot worldview can lead to robots that can generalize and help humans all the same.

Hardware-Task Relationship

To start, consider this video. In it, you’ll see an agent accomplishing a task: swimming upstream.

Now, I know some of you didn’t click the link, and that’s okay, but the catch is that it is a dead fish swimming upstream. Nature has figured out some things about design that humans creating products may never get to tap into: passive design.

Robots are designed with a task in mind, and this task can limit them. I start with hardware because

Automation is not always intelligence

One of the leaders in at-home robotics recently made a big shift from hardware-based development to software-based application development: iRobot (king of the Roomba). This is an interesting shift that in many ways aligns thematically with what I am writing about (and it happened just as I was writing about it). The TL;DR is that iRobot moved two-thirds of its engineering base to software and human-robot interaction development, but the quotes from the CEO are very telling for anyone either a) interested in making at-home robots for a career or b) wondering why the at-home robot market seems so bizarrely empty and shifty.

Design a robot vs design for users

The Roomba company is shifting from a robust-autonomy approach (i.e., how do we make the vacuum that’ll get the highest percentage of particles in any space with the most nines of reliability) to asking how we create happy customers who interact with their vacuum. Reflecting on what users want, iRobot’s CEO, Colin Angle, says the company needs a new direction to keep users engaged:

Autonomy is not intelligence. We need to do something more.

This dichotomy comes into play at many other companies, and I will take a little liberty in making these comparisons. A similar difference in self-driving cars is that Waymo is making an autonomy stack and Tesla is making a product people want to use. It can also be seen in the difference between a more hardware-focused company that famously went by the motto “do what the customer needs before they want it” and a company that copies technology trends to stay afloat.

The former stance — make what is right — is very hard in robotics. No one knows the answer, and what is right likely is not technologically feasible yet.

Robots are inherently hard and limited

Thinking about the above, I suspect a lot of people will say that they want to make agents that are intelligent, reflective of their environment, and interactive, but that’s really damn hard.

Think about what needs to be in place before a robot can even begin to reason about how to better serve you in your home. Again, from Angle:

“Until the robot proves that it knows enough about your home and about the way that you want your home cleaned, you can’t move forward.”

And more succinctly:

a robot can’t be responsive if it’s incompetent.

How iRobot is making this transition is very reminiscent of how I have done research: go around the lab taking photos of low-lying objects and see how well the model we create can capture them.

This is done through machine learning using a library of images of common household objects from a floor perspective that iRobot had to develop from scratch.

For the curious: we are working towards autonomous microrobots, and want to have them navigate the world. In our image-based navigation dataset, cubicles look like the giant, looming walls of a maze. iRobot is trying to re-create a navigation dataset for homes. This reminds me of how Covariant is equipping robots for pick-and-place in logistics: really, really robust data.

I leave you with a tangent that summarizes well the current state of personal electronics — what if my Roomba is stolen, will they know what kind of slippers I wear?

Worst case, if all the data iRobot has about your home gets somehow stolen, the hacker would only know that (for example) your dining room has a table in it and the approximate size and location of that table, because the map iRobot has of your place only stores symbolic representations rather than images.

The Standard Model

How do we make it easier for robots to bridge the gap from autonomy to intelligence? Change how the tasks are modeled.

Let’s take a step back, all the way back. Humans are called Homo sapiens, which can roughly be translated as “wise man.” Is more intelligence always the answer? Consider this in the example above: is a Roomba that continues to learn more about you and always gains more abilities always the answer? Surely not. Surely there is a limit to how much reasoning you want your vacuum to have.

Machines are intelligent to the extent by which they can achieve their outcomes

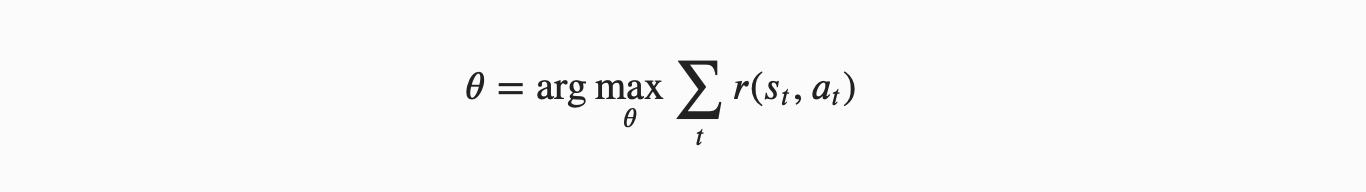

This is the definition of the standard model. All across robotics and machine learning, we measure the success of an agent by its performance on baselines: a small set of tasks (which is very troubling in my field). This results in the standard model shown in the figure: computers optimize the reward functions we give them.

(Credit to Stuart Russell on this naming convention).
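
To make the standard model concrete, here is a minimal sketch of an agent that simply maximizes a designer-supplied reward. The action names and reward values are hypothetical and chosen only for illustration; they are not taken from iRobot or from Human Compatible.

```python
from typing import Callable, Dict, Sequence


def best_action(actions: Sequence[str], reward: Callable[[str], float]) -> str:
    """Standard model: return the action with the highest fixed reward."""
    return max(actions, key=reward)


# Hypothetical reward table: the designer only counts dust collected.
dust_collected: Dict[str, float] = {
    "clean_kitchen": 5.0,
    "clean_hallway": 2.0,
    "wait_for_user": 0.0,
}

chosen = best_action(list(dust_collected), lambda a: dust_collected[a])
print(chosen)
```

Nothing in this loop asks whether the reward table matches what the person in the home actually wants; the agent is "intelligent" only with respect to the numbers it was handed.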

Humans in the loop

What we want is (from Human Compatible):

machines that are beneficial to the extent that their actions can be expected to achieve our outcomes.

The big caveat is that we by no means know how to model human rewards, so that should be the new assumption: uncertainty and exploration. What this translates to in the model above:

- assuming the previous set of parameters you chose is always imperfect, because your model of humans is imperfect,

- any model you use for one human does not transfer to another, because each human is different,

- weighing objectives that affect multiple people is nearly impossible (you can’t compare relative views),

- and finally: all humans operate on a distribution of rewards. Encoding it into a single function is always sub-optimal.
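
One way to picture the first and last bullets is an agent that keeps a belief over several candidate human reward functions instead of committing to one, and revises that belief from feedback. The sketch below is my own toy illustration under stated assumptions: the two hypotheses, the reward numbers, and the 0.9/0.1 feedback likelihoods are all made up, and this is not the formulation from Human Compatible.

```python
from typing import Dict

ACTIONS = ["vacuum_now", "vacuum_later"]

# Hypothetical candidate reward functions for one particular human.
CANDIDATES: Dict[str, Dict[str, float]] = {
    "likes_clean_floor": {"vacuum_now": 1.0, "vacuum_later": 0.2},
    "likes_quiet": {"vacuum_now": -1.0, "vacuum_later": 0.5},
}


def expected_reward(belief: Dict[str, float], action: str) -> float:
    """Average the reward of an action over the current belief."""
    return sum(p * CANDIDATES[h][action] for h, p in belief.items())


def choose(belief: Dict[str, float]) -> str:
    """Pick the action with the highest expected reward under the belief."""
    return max(ACTIONS, key=lambda a: expected_reward(belief, a))


def update(belief: Dict[str, float], action: str, approved: bool) -> Dict[str, float]:
    """Bayes update from a yes/no reaction to the chosen action.

    Assumed likelihood: a human matching hypothesis h approves an action
    with probability 0.9 if h gives it positive reward, else 0.1.
    """
    posterior = {}
    for h, p in belief.items():
        predicts_approval = CANDIDATES[h][action] > 0
        likelihood = 0.9 if predicts_approval == approved else 0.1
        posterior[h] = p * likelihood
    total = sum(posterior.values())
    return {h: p / total for h, p in posterior.items()}


# The prior is treated as imperfect from the start and revised with feedback.
belief = {"likes_clean_floor": 0.8, "likes_quiet": 0.2}
first = choose(belief)
belief = update(belief, first, approved=False)
second = choose(belief)
print(first, second, belief)
```

The belief here is specific to one person; per the second bullet, a different household would need its own belief rather than a copy of this one.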

The point of this post is to get to where we a) see that there is a different path forward and b) have a direction to point at. By no means do I expect anyone to solve this overnight, but it is a set of goalposts for us to aim at while we keep automating the world.

I recommend Stuart’s recent book Human Compatible if you would like to read more.

Hopefully, you find some of this interesting and fun. You can find more about the author here. Tweet at me @natolambert, reply here.

I write to learn and converse. Forwarded this? Subscribe here.

Translated from: https://medium.com/swlh/autonomy-vs-intelligence-in-robotics-ea80f8616c42
