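Background: for a multi-label problem, we want to run spectral clustering on the labels themselves and then inspect the resulting clusters.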

I. Installing sklearn

1.1 scikit-learn overview

sklearn is short for scikit-learn.

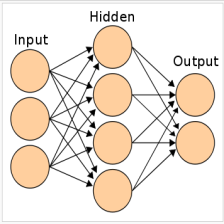

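Scikit-learn (sklearn) is positioned as a general-purpose machine learning library, while TensorFlow (tf) is mainly a deep learning library. One obvious difference: tf does not provide sklearn's extensive feature engineering tools, such as dimensionality reduction and feature selection. In my view, the root cause is that the two kinds of models process data in different ways:

- Traditional machine learning: relies on feature engineering, where people refine and clean the data by hand

- Deep learning: relies on representation learning, where the model itself learns how to refine the data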
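To make the feature engineering side concrete, here is a minimal sketch of the two sklearn tools mentioned above, dimensionality reduction and feature selection. The choice of PCA, SelectKBest with f_classif, and the iris data is an assumption made for illustration; the original post does not name specific transformers.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

iris = load_iris()
X, y = iris.data, iris.target

# Dimensionality reduction: project the 4 features onto 2 principal components.
X_pca = PCA(n_components=2).fit_transform(X)

# Feature selection: keep the 2 features with the highest ANOVA F-score
# with respect to the class label.
selector = SelectKBest(score_func=f_classif, k=2)
X_selected = selector.fit_transform(X, y)

print(X.shape, X_pca.shape, X_selected.shape)
print("selected feature indices:", selector.get_support(indices=True))
```

PCA builds new features as combinations of the old ones, while SelectKBest keeps a subset of the original columns unchanged.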

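1.2 Installation

In the torch031 environment, run pip install sklearn:

(torch031) [xingxiangrui@gzbh-mms-gpu55.gzbh.baidu.com chun-ML_GCN]$ pip install sklearn

In the env environment (as the log below shows, the sklearn package on PyPI is only a wrapper that pulls in scikit-learn, so installing scikit-learn directly has the same effect):

env/bin/python -m pip install sklearn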

Collecting sklearn

Using cached https://files.pythonhosted.org/packages/1e/7a/dbb3be0ce9bd5c8b7e3d87328e79063f8b263b2b1bfa4774cb1147bfcd3f/sklearn-0.0.tar.gz

Collecting scikit-learn (from sklearn)

Downloading https://files.pythonhosted.org/packages/5e/82/c0de5839d613b82bddd088599ac0bbfbbbcbd8ca470680658352d2c435bd/scikit_learn-0.20.3-cp36-cp36m-manylinux1_x86_64.whl (5.4MB)

100% |################################| 5.4MB 20kB/s

Requirement already satisfied: numpy>=1.8.2 in ./env/lib/python3.6/site-packages (from scikit-learn->sklearn) (1.16.3)

Requirement already satisfied: scipy>=0.13.3 in ./env/lib/python3.6/site-packages (from scikit-learn->sklearn) (1.2.1)

Building wheels for collected packages: sklearn

Running setup.py bdist_wheel for sklearn ... done

Stored in directory: /home/xingxiangrui/.cache/pip/wheels/76/03/bb/589d421d27431bcd2c6da284d5f2286c8e3b2ea3cf1594c074

Successfully built sklearn

Installing collected packages: scikit-learn, sklearn

Successfully installed scikit-learn-0.20.3 sklearn-0.0

II. Simple spectral clustering

2.1 Spectral clustering explained

Graph feature extraction methods: a detailed explanation of spectral clustering (Spectral Clustering)

2.2 A simple run

Reference examples:

https://www.bbsmax.com/A/NMAzABqJ9p/

https://blog.csdn.net/fjssharpsword/article/details/79198054

https://blog.csdn.net/weixin_40042143/article/details/82462804

Code:
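As a rough illustration of what spectral clustering does internally, the sketch below builds a Gaussian affinity matrix, forms the symmetric normalized graph Laplacian, embeds the samples with its lowest eigenvectors, and runs k-means on that embedding. This is a simplified textbook variant written for this note, not the exact procedure inside sklearn's SpectralClustering; the function name spectral_clustering_sketch and the make_blobs test data are assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics.pairwise import rbf_kernel


def spectral_clustering_sketch(X, n_clusters, gamma=1.0, random_state=0):
    """Cluster the rows of X with a basic normalized spectral clustering."""
    # 1. Affinity matrix W: Gaussian (RBF) similarity between every pair of samples.
    W = rbf_kernel(X, gamma=gamma)
    # 2. Symmetric normalized Laplacian L = I - D^{-1/2} W D^{-1/2}.
    degree = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    L = np.eye(len(X)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    # 3. Eigenvectors of the n_clusters smallest eigenvalues (eigh sorts ascending).
    _, eigvecs = np.linalg.eigh(L)
    embedding = eigvecs[:, :n_clusters]
    # 4. Normalize each row to unit length, then run k-means in the embedded space.
    norms = np.maximum(np.linalg.norm(embedding, axis=1, keepdims=True), 1e-12)
    embedding = embedding / norms
    kmeans = KMeans(n_clusters=n_clusters, n_init=10, random_state=random_state)
    return kmeans.fit_predict(embedding)


X, _ = make_blobs(n_samples=150, centers=3, cluster_std=0.5, random_state=0)
labels = spectral_clustering_sketch(X, n_clusters=3)
print(labels.shape, np.unique(labels))
```

The key design point is that k-means runs on the eigenvector embedding rather than on the raw features, which is what lets the method follow the graph structure of the data.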

# -*- coding: utf-8 -*-
"""
created by xingxinangrui on 2019.5.5
this is the simple demo that use sk-learn to run spectral clustering
"""
# demo one: use the iris dataset
import numpy as np
from sklearn import datasets, metrics
from sklearn.cluster import SpectralClustering

X = datasets.load_iris()
# print(X)

# Check the result of spectral clustering with default parameters
spectral = SpectralClustering()
pred_y = spectral.fit_predict(X.data)
# Note: current scikit-learn releases name this function calinski_harabasz_score;
# the calinski_harabaz_score spelling used here exists only in older versions
# such as the 0.20.3 installed above.
print("Calinski-Harabasz Score", metrics.calinski_harabaz_score(X.data, pred_y))
"""
Calinski-Harabasz Score 438.286953256
"""

# The default affinity is the Gaussian (RBF) kernel, so n_clusters and gamma need tuning
scores = []
s = dict()
for gamma in (0.01, 0.1, 1, 10):
    for k in (3, 4, 5, 6):
        # gamma must be passed explicitly; otherwise the default value is used on every iteration
        pred_y = SpectralClustering(n_clusters=k, gamma=gamma).fit_predict(X.data)
        # compute the score once and reuse it
        score = metrics.calinski_harabaz_score(X.data, pred_y)
        print("Calinski-Harabasz Score with gamma=", gamma, "n_cluster=", k, "score=", score)
        s[score] = {'gamma': gamma, 'n_cluster': k, 'score': score}
        scores.append(score)

print(np.max(scores))
print("max score:")
print(s.get(np.max(scores)))

Output (produced by the original script, which did not pass gamma to SpectralClustering; this is why the scores barely change across gamma values):

[xingxiangrui@gzbh-mms-gpu55.gzbh.baidu.com chun-ML_GCN]$ env/bin/python sk_spectral_cluster/demo_spectral_clustering.py

Calinski-Harabasz Score 412.6798087360221

Calinski-Harabasz Score with gamma= 0.01 n_cluster= 3 score= 556.1176919037501

Calinski-Harabasz Score with gamma= 0.01 n_cluster= 4 score= 467.76231583239655

Calinski-Harabasz Score with gamma= 0.01 n_cluster= 5 score= 432.63682714748126

Calinski-Harabasz Score with gamma= 0.01 n_cluster= 6 score= 410.18904618455855

Calinski-Harabasz Score with gamma= 0.1 n_cluster= 3 score= 556.11769190375

Calinski-Harabasz Score with gamma= 0.1 n_cluster= 4 score= 467.76231583239655

Calinski-Harabasz Score with gamma= 0.1 n_cluster= 5 score= 437.95873781534283

Calinski-Harabasz Score with gamma= 0.1 n_cluster= 6 score= 410.4013080804197

Calinski-Harabasz Score with gamma= 1 n_cluster= 3 score= 556.1176919037501

Calinski-Harabasz Score with gamma= 1 n_cluster= 4 score= 467.76231583239655

Calinski-Harabasz Score with gamma= 1 n_cluster= 5 score= 437.95873781534283

Calinski-Harabasz Score with gamma= 1 n_cluster= 6 score= 404.45914748471347

Calinski-Harabasz Score with gamma= 10 n_cluster= 3 score= 556.1176919037501

Calinski-Harabasz Score with gamma= 10 n_cluster= 4 score= 467.76231583239655

Calinski-Harabasz Score with gamma= 10 n_cluster= 5 score= 437.9587378153427

Calinski-Harabasz Score with gamma= 10 n_cluster= 6 score= 401.6775659923456

556.1176919037501

max score:

{'gamma': 10, 'n_cluster': 3, 'score': 556.1176919037501}

2.3 The iris dataset

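This demo uses the iris dataset.

The Iris dataset is a commonly used dataset for classification experiments, collected by Fisher in 1936. It is a multivariate dataset containing 150 samples in 3 classes, 50 samples per class, with 4 features per sample. The four features (sepal length, sepal width, petal length, petal width) can be used to predict which of the three species (Setosa, Versicolour, Virginica) an iris flower belongs to.

The data come from measurements of iris flowers and are often used for classification tasks. The dataset consists of 50 samples from each of 3 iris species. One species is linearly separable from the other two, while those two are not linearly separable from each other.

Each record has 5 fields, 4 features plus the class label:

- Sepal.Length (sepal length), in cm;

- Sepal.Width (sepal width), in cm;

- Petal.Length (petal length), in cm;

- Petal.Width (petal width), in cm;

- Species: Iris Setosa, Iris Versicolour, and Iris Virginica.

After loading the dataset, we print it: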
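The structure described above can be checked directly on the object returned by load_iris. The snippet is a small sketch that only reads attributes of the loaded dataset (feature_names, target_names, data, target).

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()

# The four measured features and the three species names.
print(iris.feature_names)
print(iris.target_names)

# 150 samples with 4 features each.
print(iris.data.shape)

# Number of samples in each of the 3 classes.
class_counts = np.bincount(iris.target)
print(class_counts)
```

Only iris.data is passed to the clustering step; iris.target is the ground-truth species and is not used for fitting.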

dataset iris:

{'data': array([[5.1, 3.5, 1.4, 0.2],

[4.9, 3. , 1.4, 0.2],

[6.4, 3.2, 4.5, 1.5],

[6.9, 3.1, 4.9, 1.5],

[5.5, 2.3, 4. , 1.3],

。。。

[6.3, 2.5, 5. , 1.9],

[6.5, 3. , 5.2, 2. ],

[6.2, 3.4, 5.4, 2.3],

[5.9, 3. , 5.1, 1.8]]), 'target': array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2]), 'target_names': array(['setosa', 'versicolor', 'virginica'], dtype='<U10'), 'DESCR': '.. _iris_dataset:\n\nIris plants dataset\

Print the shapes:

# demo one: use the iris dataset
import numpy as np
from sklearn import datasets
from sklearn.cluster import SpectralClustering

X = datasets.load_iris()
print('dataset iris .data shape:')
# print(X)
print(np.array(X.data).shape)

# Check the result of spectral clustering with default parameters
spectral = SpectralClustering()
pred_y = spectral.fit_predict(X.data)
print("pred_y")
print(pred_y)
print("pred_y.shape")
print(np.array(pred_y).shape)

"""

dataset iris .data shape:

(150, 4)

pred_y

[6 0 0 0 6 6 0 0 0 0 6 0 0 0 6 6 6 6 6 6 6 6 0 6 0 0 6 6 6 0 0 6 6 6 0 0 6

6 0 6 6 0 0 6 6 0 6 0 6 0 3 3 3 5 3 5 3 2 3 5 2 5 5 3 5 3 5 5 3 5 7 5 7 3

3 3 3 3 3 5 5 5 5 7 5 3 3 3 5 5 5 3 5 2 5 5 5 3 2 5 4 7 4 7 4 1 5 4 4 4 4

7 4 7 7 4 4 1 1 7 4 7 1 7 4 4 7 7 4 4 4 1 4 7 7 1 4 4 7 4 4 4 7 4 4 4 7 4

4 7]

pred_y.shape

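(150,)

"""

In other words, clustering is performed over the rows (one row per sample), and the result is a 1-D array holding one cluster label per sample. With the default n_clusters=8, the labels range from 0 to 7.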

III. The spectral clustering function

3.1 SpectralClustering

Parameters and usage:

http://www.cnblogs.com/pinard/p/6235920.html

https://blog.csdn.net/fjssharpsword/article/details/79198054

https://blog.csdn.net/weixin_40042143/article/details/82462804

https://www.bbsmax.com/A/NMAzABqJ9p/

from sklearn.cluster import SpectralClustering
spectral = SpectralClustering()
pred_y = spectral.fit_predict(X.data)

Or, with explicit parameters (k and gamma are the loop variables from section 2.2):

pred_y = SpectralClustering(n_clusters=k, gamma=gamma).fit_predict(X.data)
print("Calinski-Harabasz Score with gamma=", gamma, "n_cluster=", k, "score=",
      metrics.calinski_harabaz_score(X.data, pred_y))

3.2 Input

Input:

# generate our dataset
X, y = datasets.make_blobs(n_samples=500, n_features=6, centers=5, cluster_std=[0.4, 0.3, 0.4, 0.3, 0.4], random_state=11)
# print dataset shape
print("X.shape and y.shape:")
print(np.array(X).shape)
print(np.array(y).shape)
print("X is", X)

X is randomly generated: 500 data points, each with 6 dimensions.

X.shape and y.shape:

(500, 6)

(500,)

X is [[-7.76324808 -8.84656238 4.27901582 2.24092088 -9.01179124 -0.38420006]

[-6.7332813 -9.41315963 -0.7936109 4.74079464 -1.07047174 -0.74584275]

[-6.95129282 -9.51077056 -0.84525704 4.10274755 -1.28878541 0.14329266]

...

[-3.52246694 -6.80041267 4.91296278 6.27507964 -3.01961225 -3.42770556]

[ 7.76663892 7.82404263 -6.8318606 2.88728932 -9.76742041 -7.50407371]

[ 8.40228838 7.63184647 -6.50528954 3.36423644 -9.48793872 -7.73365915]]

For the iris dataset, the input is the data array of shape (150, 4) from the printout in section 2.3.

3.3 Output

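The output has one label per input sample, so its length equals the number of samples. The label values depend on the number of clusters: with n_clusters=5 they range from 0 to 4, and with n_clusters=3 from 0 to 2.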

Calinski-Harabasz Score with gamma= 0.01 n_clusters= 5 score: 23410.638949991386

(500,)

y_pred is [0 3 3 2 3 3 1 2 3 3 0 0 3 3 3 2 3 1 1 1 4 4 0 1 4 2 2 2 3 0 0 1 3 3 1 0 0

2 4 2 2 1 1 3 2 4 0 0 1 4 4 0 2 2 0 1 4 4 2 2 0 4 4 4 1 3 2 4 4 2 4 1 1 0

3 1 2 1 2 0 3 2 4 4 2 3 3 3 3 0 2 3 3 4 2 1 1 3 0 3 2 0 2 0 1 2 0 0 3 1 3

0 2 2 2 4 0 2 2 3 0 3 1 3 3 0 0 1 0 2 4 2 3 4 1 1 1 1 3 1 4 0 4 4 4 4 4 0

2 1 4 0 1 0 2 4 1 1 1 4 3 1 0 2 4 0 2 2 1 4 4 3 1 2 2 1 2 4 4 2 2 3 2 3 1

3 0 3 4 1 4 1 4 3 2 0 3 2 0 1 4 4 0 4 0 2 4 3 2 4 1 0 1 3 2 0 4 4 2 4 1 1

0 1 0 0 3 0 3 1 3 1 0 4 1 2 3 1 3 3 4 3 1 1 3 2 1 0 2 2 0 3 2 4 3 1 4 1 1

0 1 0 1 1 1 4 0 0 2 3 4 4 2 0 1 3 1 0 3 0 2 4 3 1 0 1 4 2 0 4 0 4 4 1 4 4

4 2 1 0 0 1 0 3 0 1 1 0 3 2 3 4 3 2 1 4 1 1 1 3 0 2 1 3 1 0 0 1 2 4 3 2 1

3 3 2 0 2 2 0 0 3 3 0 4 2 1 4 2 4 0 3 1 4 3 3 0 1 2 4 1 0 4 2 2 2 0 4 3 2

4 0 3 0 4 2 4 0 0 0 0 0 3 3 4 1 4 2 0 4 3 1 3 2 4 3 3 1 0 1 1 4 4 3 0 4 0

3 3 2 3 4 3 1 0 4 2 2 4 4 4 2 2 3 0 4 1 3 0 0 1 2 0 3 0 1 2 0 3 0 3 4 1 1

3 4 0 4 4 0 2 3 3 4 3 2 2 3 0 1 2 4 1 1 3 4 2 2 2 2 3 2 3 2 4 4 2 2 0 1 0

0 1 3 3 3 1 1 0 1 4 4 2 2 0 4 1 4 2 2]

Calinski-Harabasz Score with gamma= 0.1 n_clusters= 3 score: 1979.7709609161868

(500,)

y_pred is [1 1 1 2 1 1 0 2 1 1 1 1 1 1 1 2 1 0 0 0 1 1 1 0 1 2 2 2 1 1 1 0 1 1 0 1 1

2 1 2 2 0 0 1 2 1 1 1 0 1 1 1 2 2 1 0 1 1 2 2 1 1 1 1 0 1 2 1 1 2 1 0 0 1

1 0 2 0 2 1 1 2 1 1 2 1 1 1 1 1 2 1 1 1 2 0 0 1 1 1 2 1 2 1 0 2 1 1 1 0 1

1 2 2 2 1 1 2 2 1 1 1 0 1 1 1 1 0 1 2 1 2 1 1 0 0 0 0 1 0 1 1 1 1 1 1 1 1

2 0 1 1 0 1 2 1 0 0 0 1 1 0 1 2 1 1 2 2 0 1 1 1 0 2 2 0 2 1 1 2 2 1 2 1 0

1 1 1 1 0 1 0 1 1 2 1 1 2 1 0 1 1 1 1 1 2 1 1 2 1 0 1 0 1 2 1 1 1 2 1 0 0

1 0 1 1 1 1 1 0 1 0 1 1 0 2 1 0 1 1 1 1 0 0 1 2 0 1 2 2 1 1 2 1 1 0 1 0 0

1 0 1 0 0 0 1 1 1 2 1 1 1 2 1 0 1 0 1 1 1 2 1 1 0 1 0 1 2 1 1 1 1 1 0 1 1

1 2 0 1 1 0 1 1 1 0 0 1 1 2 1 1 1 2 0 1 0 0 0 1 1 2 0 1 0 1 1 0 2 1 1 2 0

1 1 2 1 2 2 1 1 1 1 1 1 2 0 1 2 1 1 1 0 1 1 1 1 0 2 1 0 1 1 2 2 2 1 1 1 2

1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 0 1 2 1 1 1 0 1 2 1 1 1 0 1 0 0 1 1 1 1 1 1

1 1 2 1 1 1 0 1 1 2 2 1 1 1 2 2 1 1 1 0 1 1 1 0 2 1 1 1 0 2 1 1 1 1 1 0 0

1 1 1 1 1 1 2 1 1 1 1 2 2 1 1 0 2 1 0 0 1 1 2 2 2 2 1 2 1 2 1 1 2 2 1 0 1

1 0 1 1 1 0 0 1 0 1 1 2 2 1 1 0 1 2 2]
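The relationship between n_clusters and the label range can be checked with a short sketch on the same make_blobs data. The gamma value of 0.1, the fixed random_state, and the use of adjusted_rand_score are choices made for this illustration; cluster ids are arbitrary, so a permutation-invariant score is used to compare predictions against the generating labels y.

```python
import numpy as np
from sklearn.cluster import SpectralClustering
from sklearn.datasets import make_blobs
from sklearn.metrics import adjusted_rand_score

X, y = make_blobs(n_samples=500, n_features=6, centers=5,
                  cluster_std=[0.4, 0.3, 0.4, 0.3, 0.4], random_state=11)

results = {}
for k in (3, 5):
    model = SpectralClustering(n_clusters=k, gamma=0.1, random_state=0)
    y_pred = model.fit_predict(X)
    results[k] = y_pred
    # One label per sample; label values lie in 0 .. k-1.
    print("n_clusters =", k, "shape =", y_pred.shape, "labels =", np.unique(y_pred))
    # Agreement with the generating labels, independent of how clusters are numbered.
    print("adjusted Rand index:", adjusted_rand_score(y, y_pred))
```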

IV. Spectral clustering on COCO labels

4.1 Saving the COCO correlation matrices

Save the COCO label correlation matrices to disk with pickle:

import pickle

# A_B, notA_B, A_notB, notA_notB and names are computed earlier (not shown here)
correlations = {}
correlations.update(pp=A_B)  # P(A|B)
correlations.update(fp=notA_B)  # P(not A|B)
correlations.update(pf=A_notB)  # P(A|not B)
correlations.update(ff=notA_notB)  # P(not A|not B)
with open('sk_spectral_cluster/coco_correlations.pkl', 'wb') as f:
    print("write correlations in sk_spectral_cluster/coco_correlations.pkl")
    pickle.dump(correlations, f)
with open('sk_spectral_cluster/coco_names.pkl','wb') as name_file:
    print("write names in sk_spectral_cluster/coco_names.pkl")
    pickle.dump(names, name_file)

4.3 Loading

Load them back:

# ----------------------load coco_correlations.pkl and load coco_names.pkl--------
with open('sk_spectral_cluster/coco_correlations.pkl', 'rb') as f:
    print("loading coco_correlations.pkl ")
    correlations = pickle.load(f)
with open('sk_spectral_cluster/coco_names.pkl', 'rb') as f:
    print("loading coco_names.pkl")
    names = pickle.load(f)
coco_correlation_A_B = correlations['pp']
print('coco label corrrelation matrix (80*80) : \n' , coco_correlation_A_B)

4.4 Predicting directly

Apply the SpectralClustering function described above:

#-----------------------spectral clustering----------------------------------------
y_pred = SpectralClustering().fit_predict(coco_correlation_A_B)
print ("Calinski-Harabasz Score:")
print(metrics.calinski_harabaz_score(coco_correlation_A_B, y_pred))
print("y_pred",y_pred)

This does, however, produce a warning:

/env/lib/python3.6/site-packages/sklearn/cluster/spectral.py:462:

UserWarning: The spectral clustering API has changed. ``fit``now constructs an affinity matrix from data.

To use a custom affinity matrix, set ``affinity=precomputed``.

warnings.warn("The spectral clustering API has changed. ``fit``"

Clustering result:

Calinski-Harabasz Score:

8.367635511918138

y_pred [1 2 6 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 7 2 7 7 2

2 2 4 0 4 0 0 0 4 2 2 0 2 2 2 2 0 2 0 4 0 0 2 4 2 5 5 5 0 5 2 3 3 2 3 3 0

2 0 2 2 2 2]

4.5 Affinity matrix and directed graphs

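This warning is very useful: it says that to use a custom affinity matrix, the code should set affinity=precomputed.

The difference between an adjacency matrix and an affinity matrix:

https://blog.csdn.net/songkun123/article/details/80720938

An adjacency matrix records which pairs of nodes are connected by an edge; an affinity matrix records how similar each pair of nodes is, so it can be read as a weighted adjacency matrix.

Changed code:

y_pred = SpectralClustering(affinity=precomputed).fit_predict(coco_correlation_A_B)

This does not work as written: precomputed is an undefined Python name, so the call fails. The parameter expects the string 'precomputed', and the matrix passed to fit_predict must then itself be a valid affinity matrix (symmetric and non-negative), which the asymmetric P(A|B) matrix is not. Using this option properly still requires understanding the details of the spectral clustering parameters.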
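One way the precomputed option could be used is sketched below. Since the COCO pickle files are not available here, cond_prob is a small synthetic stand-in for coco_correlation_A_B, and averaging the matrix with its transpose is just one possible symmetrization, chosen for this sketch; neither comes from the original post.

```python
import numpy as np
from sklearn.cluster import SpectralClustering

# Hypothetical stand-in for coco_correlation_A_B: entry [i, j] plays the
# role of P(label i | label j) and is not symmetric in general.
rng = np.random.RandomState(0)
cond_prob = rng.uniform(0.0, 0.2, size=(12, 12))
cond_prob[:6, :6] += 0.6   # labels 0-5 tend to co-occur
cond_prob[6:, 6:] += 0.6   # labels 6-11 tend to co-occur
np.fill_diagonal(cond_prob, 1.0)

# A precomputed affinity should be symmetric and non-negative, so the two
# conditional directions are averaged (an assumption of this sketch).
affinity = 0.5 * (cond_prob + cond_prob.T)

# The option is the string "precomputed"; the input is then treated as the
# affinity matrix itself instead of as feature vectors.
model = SpectralClustering(n_clusters=2, affinity="precomputed", random_state=0)
y_pred = model.fit_predict(affinity)
print(y_pred)
```

With the real data, affinity would be built from coco_correlation_A_B in the same way, and n_clusters would still need tuning.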

4.6 Tuning to find the best parameters

Choose the best parameters according to calinski_harabaz_score.

# ---------------choose parameters by calinski_harabaz_score
scores = []
best_parameters = dict()
for gamma in (0.01, 0.1, 1, 10):
    for k in (3, 4, 5, 6, 7, 8, 9, 10):
        pred_y = SpectralClustering(n_clusters=k, gamma=gamma).fit_predict(coco_correlation_A_B)
        # compute the score once and reuse it
        score = metrics.calinski_harabaz_score(coco_correlation_A_B, pred_y)
        print("Calinski-Harabasz Score with gamma=", gamma, "n_cluster=", k, "score=", score)
        best_parameters[score] = {'gamma': gamma, 'n_cluster': k, 'score': score}
        scores.append(score)
        print("pred_y:", pred_y)
print(np.max(scores))
print("max score:")
print(best_parameters.get(np.max(scores)))

Output (the log below reports only n_cluster, so it appears to come from a version of the script that looped over n_cluster alone):

env/bin/python sk_spectral_cluster/coco_spectral_cluster.py

loading coco_correlations.pkl

loading coco_names.pkl

coco label corrrelation matrix (80*80) :

[[1.00000000e+00 8.26410144e-01 7.04392284e-01 ... 4.03311258e-01

4.45312500e-01 5.40000000e-01]

[4.18382255e-02 1.00000000e+00 1.02719033e-01 ... 1.12582781e-02

0.00000000e+00 5.71428571e-03]

[1.34192234e-01 3.86532575e-01 1.00000000e+00 ... 3.84105960e-02

7.81250000e-03 8.57142857e-03]

...

[1.34812060e-02 7.43331876e-03 6.73948408e-03 ... 1.00000000e+00

2.34375000e-02 8.57142857e-03]

[1.26178775e-03 0.00000000e+00 1.16198001e-04 ... 1.98675497e-03

1.00000000e+00 2.57142857e-02]

[8.36764511e-03 1.74901618e-03 6.97188008e-04 ... 3.97350993e-03

1.40625000e-01 1.00000000e+00]]

Calinski-Harabasz Score without affinity:

7.791262553375102

y_pred [2 4 1 4 4 1 4 1 4 1 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 4 1 4 4 4 4 4 0 4 0 0 4

4 4 6 4 3 4 4 4 4 4 4 4 4 4 4 4 4 4 4 5 5 5 4 3 4 7 7 7 5 7 4 6 6 4 6 6 5

4 5 4 4 4 4]

Calinski-Harabasz Score with n_cluster= 3 score= 9.492174347024601

pred_y: [1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

0 0 1 0 1 0 0 0 1 0 0 0 0 0 0 0 0 0 0 1 2 0 0 1 0 2 2 2 0 2 0 0 0 0 0 0 2

0 0 0 0 0 0]

Calinski-Harabasz Score with n_cluster= 4 score= 8.725501530904658

pred_y: [1 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3

3 3 0 3 1 3 0 0 0 3 3 3 3 3 3 3 3 3 3 2 2 3 3 1 3 2 2 2 3 2 3 0 0 3 0 0 2

3 3 3 3 3 3]

Calinski-Harabasz Score with n_cluster= 5 score= 7.40234200110186

pred_y: [1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3 0 3 3 0

0 0 4 0 4 0 4 0 4 0 0 0 0 0 0 0 0 0 0 4 0 0 0 1 0 0 0 0 0 0 0 2 2 0 2 2 0

0 0 0 0 0 0]

Calinski-Harabasz Score with n_cluster= 6 score= 9.752144844448322

pred_y: [1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 4 0 4 4 0

0 0 2 0 2 0 0 0 2 0 0 0 0 0 0 0 0 0 0 2 5 0 0 2 0 5 5 5 0 5 0 3 3 0 3 3 5

0 0 0 0 0 0]

Calinski-Harabasz Score with n_cluster= 7 score= 8.785937326387648

pred_y: [1 0 6 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 2 0 2 2 0

0 0 3 0 3 0 0 0 3 0 0 0 0 0 0 0 0 0 0 3 0 0 0 3 0 4 4 4 0 4 0 5 5 0 5 5 4

0 0 0 0 0 0]

Calinski-Harabasz Score with n_cluster= 8 score= 7.506167403594684

pred_y: [1 0 3 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 6 0 6 6 0

0 0 4 0 2 0 0 0 0 0 0 0 0 0 0 0 0 0 0 7 7 7 0 2 0 5 5 5 7 5 0 4 4 0 4 4 7

0 7 0 0 0 0]

Calinski-Harabasz Score with n_cluster= 9 score= 7.752484557844312

pred_y: [1 0 6 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 7 0 7 7 0

0 0 3 0 2 5 5 5 5 0 0 0 0 0 0 0 0 0 0 4 4 4 0 2 0 8 8 8 4 8 0 3 3 0 3 3 4

0 4 0 0 0 0]

Calinski-Harabasz Score with n_cluster= 10 score= 7.382406625549057

pred_y: [1 2 5 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 6 2 6 6 2

2 2 8 7 3 7 7 7 7 9 9 2 9 2 2 2 2 2 7 0 0 0 2 3 2 4 4 4 0 4 2 8 8 2 8 8 0

2 0 2 2 2 2]

9.752144844448322

max score:

{'n_cluster': 6, 'score': 9.752144844448322}
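To read a result like this in terms of label names rather than indices, the predicted cluster ids can be grouped with the names list. The sketch below assumes that names is an ordered list of category names aligned with the rows of the correlation matrix; the six names and the y_pred values are made-up placeholders, not the actual COCO result.

```python
from collections import defaultdict

# Hypothetical placeholders: in the real script, names comes from
# coco_names.pkl and y_pred from SpectralClustering.fit_predict.
names = ["person", "bicycle", "car", "fork", "knife", "spoon"]
y_pred = [1, 0, 0, 2, 2, 2]

# Collect the label names that fall into each cluster.
clusters = defaultdict(list)
for name, cluster_id in zip(names, y_pred):
    clusters[int(cluster_id)].append(name)

for cluster_id in sorted(clusters):
    print(cluster_id, clusters[cluster_id])
```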
