
Feature Crosses

A feature cross is a synthetic feature formed by multiplying (crossing) two or more features. Crossing combinations of features can provide predictive abilities beyond what those features can provide individually.

Learning Objectives

- Build an understanding of feature crosses.

- Implement feature crosses in TensorFlow.

Encoding Nonlinearity

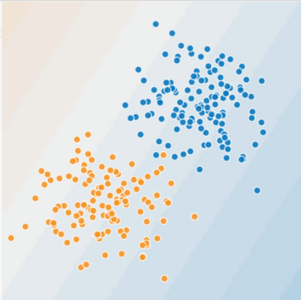

In Figures 1 and 2, imagine the following:

- The blue dots represent sick trees.

- The orange dots represent healthy trees.

Figure 1. Is this a linear problem?

Can you draw a line that neatly separates the sick trees from the healthy trees? Sure. This is a linear problem. The line won't be perfect. A sick tree or two might be on the "healthy" side, but your line will be a good predictor.

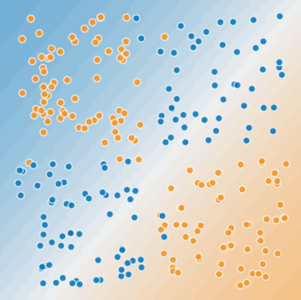

Now look at the following figure:

Figure 2. Is this a linear problem?

Can you draw a single straight line that neatly separates the sick trees from the healthy trees? No, you can't. This is a nonlinear problem. Any line you draw will be a poor predictor of tree health.

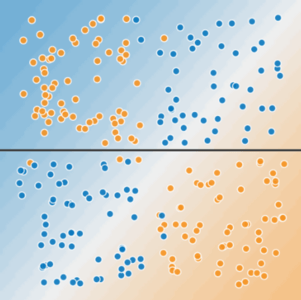

Figure 3. A single line can't separate the two classes.

To solve the nonlinear problem shown in Figure 2, create a feature cross.

A feature cross is a synthetic feature that encodes nonlinearity in the feature space by multiplying two or more input features together. (The term cross comes from cross product.) Let's create a feature cross named $x_3$ by crossing $x_1$ and $x_2$:

$$x_3 = x_1 x_2$$

We treat this newly minted $x_3$ feature cross just like any other feature. The linear formula becomes:

$$y = b + w_1 x_1 + w_2 x_2 + w_3 x_3$$

A linear algorithm can learn a value for $w_3$ just as it does for $w_1$ and $w_2$. In other words, although $w_3$ encodes nonlinear information, you don't need to change how the linear model trains to determine its value.
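The following minimal Python sketch (plain Python, not TensorFlow) makes this concrete. It assumes an XOR-like layout: one class in quadrants I and III, the other in quadrants II and IV. This layout is an illustrative assumption and was not read from Figure 2. With the hand-picked weights $b = w_1 = w_2 = 0$ and $w_3 = 1$, the sign of $y$ alone separates the classes. The data points and the helper names `cross` and `predict` are hypothetical.

```python
# Hypothetical toy data (x1, x2, label): label 1 in quadrants I and III,
# label 0 in quadrants II and IV. This XOR-like layout is an assumption.
points = [(1.0, 2.0, 1), (-1.5, -0.5, 1), (2.0, 0.5, 1), (-0.7, -2.2, 1),
          (-1.0, 1.5, 0), (1.2, -0.8, 0), (-2.0, 0.3, 0), (0.6, -1.9, 0)]


def cross(x1, x2):
    """Feature cross x3 = x1 * x2."""
    return x1 * x2


def predict(x1, x2, b=0.0, w1=0.0, w2=0.0, w3=1.0):
    """Linear formula y = b + w1*x1 + w2*x2 + w3*x3, thresholded at 0.

    The default weights are hand-picked for illustration, not learned.
    """
    x3 = cross(x1, x2)
    y = b + w1 * x1 + w2 * x2 + w3 * x3
    return 1 if y > 0 else 0


correct = sum(predict(x1, x2) == label for x1, x2, label in points)
print(f"{correct}/{len(points)} correct")
```

The model is still linear in its inputs $(x_1, x_2, x_3)$. The nonlinearity lives entirely in how $x_3$ is computed.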

Kinds of feature crosses

We can create many different kinds of feature crosses. For example:

- [A x B]: a feature cross formed by multiplying the values of two features.

- [A x B x C x D x E]: a feature cross formed by multiplying the values of five features.

- [A x A]: a feature cross formed by squaring a single feature.
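All three kinds reduce to one operation: a product over the chosen feature values. Here is a minimal sketch that uses Python's `math.prod` (Python 3.8+). The helper name `feature_cross` and the feature values are hypothetical.

```python
from math import prod


def feature_cross(*values):
    """Multiply the values of any number of features into one synthetic feature."""
    return prod(values)


# Hypothetical feature values for a single example.
a, b, c, d, e = 2.0, 3.0, 0.5, 4.0, -1.0

ab = feature_cross(a, b)              # [A x B]
abcde = feature_cross(a, b, c, d, e)  # [A x B x C x D x E]
aa = feature_cross(a, a)              # [A x A], i.e. A squared
print(ab, abcde, aa)                  # 6.0 -12.0 4.0
```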

Thanks to stochastic gradient descent, linear models can be trained efficiently. Consequently, supplementing scaled linear models with feature crosses has traditionally been an efficient way to train on massive-scale data sets.
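The sketch below trains the same logistic-regression model with plain per-example SGD twice: once on $(x_1, x_2)$ alone and once with the cross $x_1 x_2$ added. It is a minimal illustration in plain Python, not a TensorFlow implementation. The symmetric quadrant data, learning rate, epoch count, and helper names are all hypothetical choices.

```python
import math
import random


def make_quadrant_data():
    """Hypothetical XOR-like data: label 1 in quadrants I and III, 0 in II and IV."""
    data = []
    grid = [0.5, 1.0, 1.5, 2.0]
    for u in grid:
        for v in grid:
            data += [(u, v, 1), (-u, -v, 1), (-u, v, 0), (u, -v, 0)]
    return data


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_sgd(rows, epochs=100, lr=0.1, seed=0):
    """Per-example SGD on log loss; rows are (feature_tuple, label) pairs."""
    rng = random.Random(seed)
    w = [0.0] * len(rows[0][0])
    b = 0.0
    rows = list(rows)  # shuffle a copy, not the caller's list
    for _ in range(epochs):
        rng.shuffle(rows)
        for x, y in rows:
            p = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))
            g = p - y  # gradient of log loss with respect to the logit
            b -= lr * g
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
    return w, b


def accuracy(rows, w, b):
    """Fraction of rows where the sign of the logit matches the label."""
    hits = sum((b + sum(wi * xi for wi, xi in zip(w, x)) > 0) == (y == 1)
               for x, y in rows)
    return hits / len(rows)


data = make_quadrant_data()
plain = [((x1, x2), y) for x1, x2, y in data]
crossed = [((x1, x2, x1 * x2), y) for x1, x2, y in data]

w_p, b_p = train_sgd(plain)
w_c, b_c = train_sgd(crossed)
acc_without = accuracy(plain, w_p, b_p)
acc_with = accuracy(crossed, w_c, b_c)
print(f"without cross: {acc_without:.2f}, with cross: {acc_with:.2f}")
```

Without the cross, no weights can exceed 75% accuracy on this symmetric layout. Each group of points (u, v) and (-u, -v) in one class, plus (-u, v) and (u, -v) in the other, cannot all be classified correctly by a single line. With the cross, the same training loop can push $w_3$ up and separate the classes.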

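A feature cross is a way to create a synthetic feature by multiplying two or more features, which lets a model capture nonlinear relationships between features. This article introduces the concept and uses of feature crosses and how to implement them in TensorFlow. With feature crosses, linear models can learn more complex patterns and solve nonlinear problems.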
