Background

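AX7020 SDK: using lwIP with two bare-metal cores

CPU1 runs lwIP on eth0, but it never responds to ping. What could be the cause?

Can anyone solve this? I'm willing to pay.

I'm using the camera-data-over-Ethernet example from the SDK volume of the tutorials, running it on CPU1. CPU0 only initializes the interrupts and then sits in an empty while loop.

On CPU1, the changes I made were remapping the timer interrupt and the EMAC interrupt. Is there anything else I need to configure?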

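A friend was running lwIP on CPU1 in AMP mode. After several days she still couldn't get ping to work, so she came to me for paid help. Since money was on the table, I looked into it, and I'm sharing the result here.

Preface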

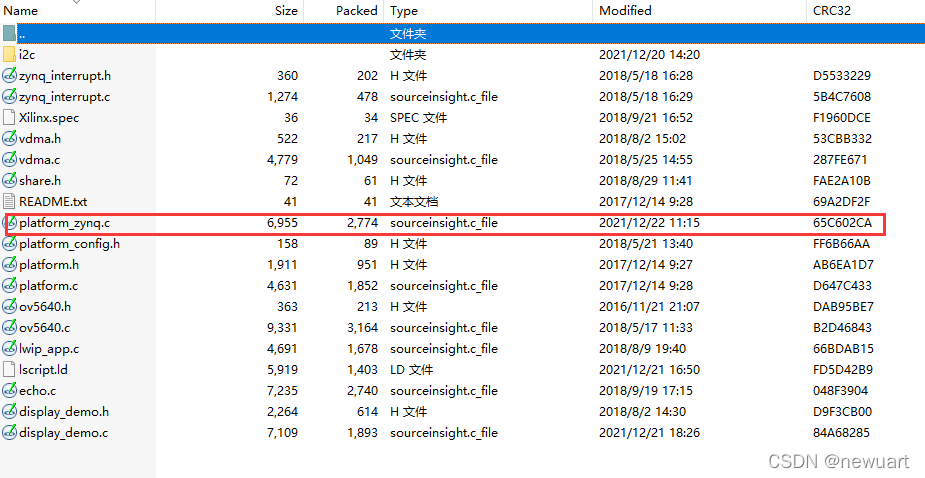

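She sent me her project. Looking it over, I saw she had modified platform_zynq.c.

The main change was to the platform_setup_interrupts(void) function, which now maps the interrupts to CPU1: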

```c
void platform_setup_interrupts(void)
{
    // Xil_ExceptionInit();
    //
    // XScuGic_DeviceInitialize(INTC_DEVICE_ID);
    //
    // /*
    //  * Connect the interrupt controller interrupt handler to the hardware
    //  * interrupt handling logic in the processor.
    //  */
    // Xil_ExceptionRegisterHandler(XIL_EXCEPTION_ID_IRQ_INT,
    //         (Xil_ExceptionHandler)XScuGic_DeviceInterruptHandler,
    //         (void *)INTC_DEVICE_ID);

    /*
     * Connect the device driver handler that will be called when an
     * interrupt for the device occurs, the handler defined above performs
     * the specific interrupt processing for the device.
     */
    // XScuGic_RegisterHandler(INTC_BASE_ADDR, TIMER_IRPT_INTR,
    //         (Xil_ExceptionHandler)timer_callback,
    //         (void *)&TimerInstance);
    // /*
    //  * Enable the interrupt for scu timer.
    //  */
    // XScuGic_EnableIntr(INTC_DIST_BASE_ADDR, TIMER_IRPT_INTR);

    // set up the timer interrupt
    XScuGic_Connect(&XScuGicInstance, TIMER_IRPT_INTR,
            (Xil_ExceptionHandler)timer_callback,
            (void *)&TimerInstance);

    // route the timer interrupt to CPU1, then enable it at the GIC
    XScuGic_InterruptMaptoCpu(&XScuGicInstance, 1, TIMER_IRPT_INTR);
    XScuGic_Enable(&XScuGicInstance, TIMER_IRPT_INTR);

    return;
}
```
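To see what "mapping an interrupt to CPU1" means at the register level, here is a small host-side model of the GIC distributor's interrupt target registers (ICDIPTR, assumed at offset 0x800 in the Zynq-7000 distributor). Each register holds four 8-bit CPU-target fields, and bit 0 of a field selects CPU0 while bit 1 selects CPU1. This is a sketch of the read-modify-write needed to route a shared peripheral interrupt (SPI) to one core. It is not the xscugic driver source, and the exact driver implementation may differ between versions. IDs 0 to 31 are banked per CPU and their target fields are read-only. This includes the SCU private timer (ID 29), so for those IDs the model leaves the register unchanged. The GEM0 interrupt is assumed here to be ID 54.

```c
#include <assert.h>
#include <stdint.h>

#define ICDIPTR_BASE_OFFSET 0x800u /* assumed: first SPI target register in the GIC distributor */

/* Byte offset of the target register that holds int_id's 8-bit CPU-target field. */
static uint32_t spi_target_offset(uint32_t int_id)
{
    return ICDIPTR_BASE_OFFSET + (int_id / 4u) * 4u;
}

/* Returns the register value after retargeting int_id to exactly one CPU
 * (cpu 0 -> field 0x01, cpu 1 -> field 0x02). IDs below 32 (SGIs/PPIs) are
 * banked per CPU with read-only targets, so writing them has no effect. */
static uint32_t map_to_cpu(uint32_t reg, uint32_t int_id, uint32_t cpu)
{
    assert(int_id < 96u && cpu < 2u); /* assumed: Zynq-7000 has 96 interrupt IDs and 2 cores */
    if (int_id < 32u) {
        return reg;
    }
    uint32_t shift = (int_id % 4u) * 8u; /* byte lane of this ID inside the register */
    reg &= ~(0xFFu << shift);            /* clear the old target field */
    reg |= (1u << cpu) << shift;         /* set the bit for the chosen CPU */
    return reg;
}
```

For example, spi_target_offset(54) is 0x834, and retargeting ID 54 to CPU1 in a register that currently reads 0x00010101 yields 0x00020101. The model also shows why the timer call in platform_setup_interrupts is harmless but not strictly needed: a private-timer PPI always belongs to the core that configures it.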

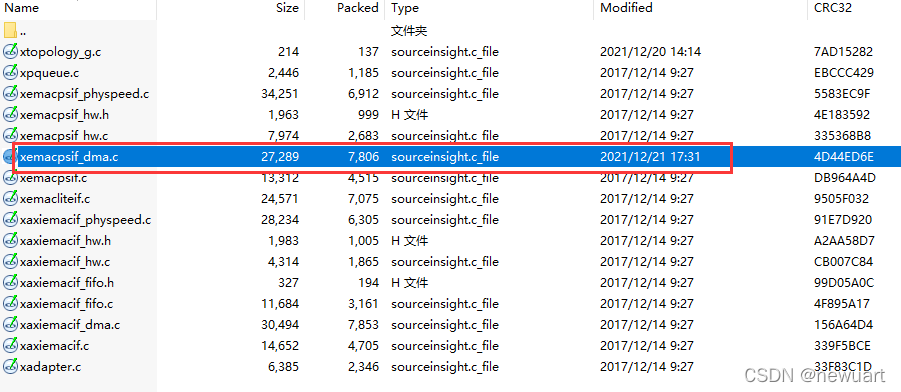

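Besides the timer interrupt, lwIP also relies on the MAC interrupt, so I asked whether she had mapped that one too. She had: she modified the BSP code, mainly the init_dma function: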

```c
XStatus init_dma(struct xemac_s *xemac)
{
    XEmacPs_Bd bdtemplate;
    XEmacPs_BdRing *rxringptr, *txringptr;
    XEmacPs_Bd *rxbd;
    struct pbuf *p;
    XStatus status;
    s32_t i;
    u32_t bdindex;
    volatile UINTPTR tempaddress;
    u32_t index;
    u32_t gigeversion;
    XEmacPs_Bd *bdtxterminate;
    XEmacPs_Bd *bdrxterminate;
    u32 *temp;

    /*
     * Disable L1 prefetch if the processor type is Cortex A53. It is
     * observed that the L1 prefetching for ARMv8 can cause issues while
     * dealing with cache memory on Rx path. On Rx path, the lwIP adapter
     * does a clean and invalidation of buffers (pbuf payload) before
     * allocating them to Rx BDs. However, there are chances that the
     * same cache line may get prefetched by the time Rx data is
     * DMAed to the same buffer. In such cases, CPU fetches stale data from
     * cache memory instead of getting them from memory. To avoid such
     * scenarios L1 prefetch is being disabled for ARMv8. That can cause
     * a performance degradation in the range of 3-5%. In tests, it is
     * generally observed that this performance degradation is quite
     * insignificant to be really visible.
     */
#if defined __aarch64__
    Xil_ConfigureL1Prefetch(0);
#endif

    xemacpsif_s *xemacpsif = (xemacpsif_s *)(xemac->state);
    struct xtopology_t *xtopologyp = &xtopology[xemac->topology_index];

    index = get_base_index_rxpbufsstorage (xemacpsif);
    gigeversion = ((Xil_In32(xemacpsif->emacps.Config.BaseAddress + 0xFC)) >> 16) & 0xFFF;

    /*
     * The BDs need to be allocated in uncached memory. Hence the 1 MB
     * address range allocated for Bd_Space is made uncached
     * by setting appropriate attributes in the translation table.
     * The Bd_Space is aligned to 1MB and has a size of 1 MB. This ensures
     * a reserved uncached area used only for BDs.
     */
    if (bd_space_attr_set == 0) {
#if defined (ARMR5)
        Xil_SetTlbAttributes((s32_t)bd_space, STRONG_ORDERD_SHARED | PRIV_RW_USER_RW); // addr, attr
#else
#if defined __aarch64__
        Xil_SetTlbAttributes((u64)bd_space, NORM_NONCACHE | INNER_SHAREABLE);
#else
        Xil_SetTlbAttributes((s32_t)bd_space, DEVICE_MEMORY); // addr, attr
#endif
#endif
        bd_space_attr_set = 1;
    }

    rxringptr = &XEmacPs_GetRxRing(&xemacpsif->emacps);
    txringptr = &XEmacPs_GetTxRing(&xemacpsif->emacps);
    LWIP_DEBUGF(NETIF_DEBUG, ("rxringptr: 0x%08x\r\n", rxringptr));
    LWIP_DEBUGF(NETIF_DEBUG, ("txringptr: 0x%08x\r\n", txringptr));

    /* Allocate 64k for Rx and Tx bds each to take care of extreme cases */
    tempaddress = (UINTPTR)&(bd_space[bd_space_index]);
    xemacpsif->rx_bdspace = (void *)tempaddress;
    bd_space_index += 0x10000;
    tempaddress = (UINTPTR)&(bd_space[bd_space_index]);
    xemacpsif->tx_bdspace = (void *)tempaddress;
    bd_space_index += 0x10000;
    if (gigeversion > 2) {
        tempaddress = (UINTPTR)&(bd_space[bd_space_index]);
        bdrxterminate = (XEmacPs_Bd *)tempaddress;
        bd_space_index += 0x10000;
        tempaddress = (UINTPTR)&(bd_space[bd_space_index]);
        bdtxterminate = (XEmacPs_Bd *)tempaddress;
        bd_space_index += 0x10000;
    }

    LWIP_DEBUGF(NETIF_DEBUG, ("rx_bdspace: %p \r\n", xemacpsif->rx_bdspace));
    LWIP_DEBUGF(NETIF_DEBUG, ("tx_bdspace: %p \r\n", xemacpsif->tx_bdspace));

    if (!xemacpsif->rx_bdspace || !xemacpsif->tx_bdspace) {
        xil_printf("%s@%d: Error: Unable to allocate memory for TX/RX buffer descriptors",
                __FILE__, __LINE__);
        return ERR_IF;
    }

    /*
     * Setup RxBD space.
     *
     * Setup a BD template for the Rx channel. This template will be copied to
     * every RxBD. We will not have to explicitly set these again.
     */
    XEmacPs_BdClear(&bdtemplate);

    /*
     * Create the RxBD ring
     */
    status = XEmacPs_BdRingCreate(rxringptr, (UINTPTR) xemacpsif->rx_bdspace,
            (UINTPTR) xemacpsif->rx_bdspace, BD_ALIGNMENT,
            XLWIP_CONFIG_N_RX_DESC);
    if (status != XST_SUCCESS) {
        LWIP_DEBUGF(NETIF_DEBUG, ("Error setting up RxBD space\r\n"));
        return ERR_IF;
    }

    status = XEmacPs_BdRingClone(rxringptr, &bdtemplate, XEMACPS_RECV);
    if (status != XST_SUCCESS) {
        LWIP_DEBUGF(NETIF_DEBUG, ("Error initializing RxBD space\r\n"));
        return ERR_IF;
    }

    XEmacPs_BdClear(&bdtemplate);
    XEmacPs_BdSetStatus(&bdtemplate, XEMACPS_TXBUF_USED_MASK);

    /*
     * Create the TxBD ring
     */
    status = XEmacPs_BdRingCreate(txringptr, (UINTPTR) xemacpsif->tx_bdspace,
            (UINTPTR) xemacpsif->tx_bdspace, BD_ALIGNMENT,
            XLWIP_CONFIG_N_TX_DESC);
    if (status != XST_SUCCESS) {
        return ERR_IF;
    }

    /* We reuse the bd template, as the same one will work for both rx and tx. */
    status = XEmacPs_BdRingClone(txringptr, &bdtemplate, XEMACPS_SEND);
    if (status != XST_SUCCESS) {
        return ERR_IF;
    }

    /*
     * Allocate RX descriptors, 1 RxBD at a time.
     */
    for (i = 0; i < XLWIP_CONFIG_N_RX_DESC; i++) {
#ifdef ZYNQMP_USE_JUMBO
        p = pbuf_alloc(PBUF_RAW, MAX_FRAME_SIZE_JUMBO, PBUF_POOL);
#else
        p = pbuf_alloc(PBUF_RAW, XEMACPS_MAX_FRAME_SIZE, PBUF_POOL);
#endif
        if (!p) {
#if LINK_STATS
            lwip_stats.link.memerr++;
            lwip_stats.link.drop++;
#endif
            printf("unable to alloc pbuf in init_dma\r\n");
            return ERR_IF;
        }

        status = XEmacPs_BdRingAlloc(rxringptr, 1, &rxbd);
        if (status != XST_SUCCESS) {
            LWIP_DEBUGF(NETIF_DEBUG, ("init_dma: Error allocating RxBD\r\n"));
            pbuf_free(p);
            return ERR_IF;
        }

        /* Enqueue to HW */
        status = XEmacPs_BdRingToHw(rxringptr, 1, rxbd);
        if (status != XST_SUCCESS) {
            LWIP_DEBUGF(NETIF_DEBUG, ("Error: committing RxBD to HW\r\n"));
            pbuf_free(p);
            XEmacPs_BdRingUnAlloc(rxringptr, 1, rxbd);
            return ERR_IF;
        }

        bdindex = XEMACPS_BD_TO_INDEX(rxringptr, rxbd);
        temp = (u32 *)rxbd;
        *temp = 0;
        if (bdindex == (XLWIP_CONFIG_N_RX_DESC - 1)) {
            *temp = 0x00000002;
        }
        temp++;
        *temp = 0;
        dsb();

#ifdef ZYNQMP_USE_JUMBO
        if (xemacpsif->emacps.Config.IsCacheCoherent == 0) {
            Xil_DCacheInvalidateRange((UINTPTR)p->payload, (UINTPTR)MAX_FRAME_SIZE_JUMBO);
        }
#else
        if (xemacpsif->emacps.Config.IsCacheCoherent == 0) {
            Xil_DCacheInvalidateRange((UINTPTR)p->payload, (UINTPTR)XEMACPS_MAX_FRAME_SIZE);
        }
#endif

        XEmacPs_BdSetAddressRx(rxbd, (UINTPTR)p->payload);
        rx_pbufs_storage[index + bdindex] = (UINTPTR)p;
    }

    XEmacPs_SetQueuePtr(&(xemacpsif->emacps), xemacpsif->emacps.RxBdRing.BaseBdAddr, 0, XEMACPS_RECV);
    if (gigeversion > 2) {
        XEmacPs_SetQueuePtr(&(xemacpsif->emacps), xemacpsif->emacps.TxBdRing.BaseBdAddr, 1, XEMACPS_SEND);
    } else {
        XEmacPs_SetQueuePtr(&(xemacpsif->emacps), xemacpsif->emacps.TxBdRing.BaseBdAddr, 0, XEMACPS_SEND);
    }

    if (gigeversion > 2)
    {
        /*
         * This version of GEM supports priority queuing and the current
         * driver is using tx priority queue 1 and normal rx queue for
         * packet transmit and receive. The below code ensures that the
         * other queue pointers are parked to known state for avoiding
         * the controller to malfunction by fetching the descriptors
         * from these queues.
         */
        XEmacPs_BdClear(bdrxterminate);
        XEmacPs_BdSetAddressRx(bdrxterminate, (XEMACPS_RXBUF_NEW_MASK |
                XEMACPS_RXBUF_WRAP_MASK));
        XEmacPs_Out32((xemacpsif->emacps.Config.BaseAddress + XEMACPS_RXQ1BASE_OFFSET),
                (UINTPTR)bdrxterminate);
        XEmacPs_BdClear(bdtxterminate);
        XEmacPs_BdSetStatus(bdtxterminate, (XEMACPS_TXBUF_USED_MASK |
                XEMACPS_TXBUF_WRAP_MASK));
        XEmacPs_Out32((xemacpsif->emacps.Config.BaseAddress + XEMACPS_TXQBASE_OFFSET),
                (UINTPTR)bdtxterminate);
    }

    /*
     * Connect the device driver handler that will be called when an
     * interrupt for the device occurs, the handler defined above performs
     * the specific interrupt processing for the device.
     */
    // XScuGic_RegisterHandler(INTC_BASE_ADDR, xtopologyp->scugic_emac_intr,
    //         (Xil_ExceptionHandler)XEmacPs_IntrHandler,
    //         (void *)&xemacpsif->emacps);
    // /*
    //  * Enable the interrupt for emacps.
    //  */
    // XScuGic_EnableIntr(INTC_DIST_BASE_ADDR, (u32) xtopologyp->scugic_emac_intr);
    // emac_intr_num = (u32) xtopologyp->scugic_emac_intr;

    // connect the EMAC handler, route the EMAC interrupt to CPU1, then enable it at the GIC
    XScuGic_Connect(&XScuGicInstance, (u32) xtopologyp->scugic_emac_intr,
            (Xil_ExceptionHandler)XEmacPs_IntrHandler,
            (void *)&xemacpsif->emacps);
    XScuGic_InterruptMaptoCpu(&XScuGicInstance, 1, (u32) xtopologyp->scugic_emac_intr);
    XScuGic_Enable(&XScuGicInstance, (u32) xtopologyp->scugic_emac_intr);
    emac_intr_num = (u32) xtopologyp->scugic_emac_intr;

    return 0;
}
```
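The raw writes through `temp` in the RX loop are easy to misread. The sketch below models, on the host, what ends up in word 0 of each RX buffer descriptor. Bit 0 is the ownership ("new"/used) bit, and it must be 0 for the GEM to fill the buffer. Bit 1 is the wrap bit, set only on the last BD. Bits [31:2] hold the buffer address, which XEmacPs_BdSetAddressRx ORs in while keeping bits [1:0]. Word 1 (status) is simply cleared. The mask values are restated from memory of xemacps_hw.h and should be treated as assumptions. `rx_bd_word0` is a hypothetical helper, not a BSP function.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed to match XEMACPS_RXBUF_NEW_MASK / _WRAP_MASK / _ADD_MASK in xemacps_hw.h */
#define RXBUF_NEW_MASK  0x00000001u /* bit 0: ownership; 0 = buffer free for the GEM to write */
#define RXBUF_WRAP_MASK 0x00000002u /* bit 1: last BD, the GEM wraps back to the first */
#define RXBUF_ADD_MASK  0xFFFFFFFCu /* bits [31:2]: buffer address */

/* Hypothetical helper: word 0 of RX BD `bdindex` in a ring of `n_desc` BDs,
 * following the same steps as the init_dma loop. */
static uint32_t rx_bd_word0(uint32_t bdindex, uint32_t n_desc, uint32_t buf_addr)
{
    assert(bdindex < n_desc);
    assert((buf_addr & ~RXBUF_ADD_MASK) == 0u); /* buffer must be 4-byte aligned */

    uint32_t word0 = 0u;                 /* "*temp = 0": owned by the GEM, no wrap */
    if (bdindex == n_desc - 1u) {
        word0 = RXBUF_WRAP_MASK;         /* "*temp = 0x00000002" on the last BD */
    }
    /* XEmacPs_BdSetAddressRx: replace bits [31:2], keep bits [1:0] */
    word0 = (word0 & ~RXBUF_ADD_MASK) | buf_addr;
    return word0;
}
```

With a 4-entry ring, BD 0 holding buffer 0x00100000 gets word 0 = 0x00100000, and BD 3 holding buffer 0x00101200 gets 0x00101202 because of the wrap bit. Because these BDs live in the uncached bd_space, writes to them are seen by the GEM directly. The pbuf payloads they point to, by contrast, are ordinary cached memory.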

Overall, the approach was sound and most of the work was done right. It just wouldn't answer ping.

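I. Diagnosing the problem

1. Check whether the interrupts are working

I set breakpoints in the timer and DMA interrupt service routines and found that both were being entered, so the interrupt mapping was correct. So why couldn't lwIP answer pings? Baffling, isn't it?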

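There are only two possibilities: (1) the Ethernet port really is broken, or (2) data is being read or written incorrectly.

Whether the port is broken is easy to check. I built an lwIP test on CPU0 and it pinged fine, which rules that out. That leaves incorrect data reads and writes. The GEM's DMA accesses DDR directly while the CPU goes through its caches, so the prime suspect is the cache. The first step, then, is to disable the L2 cache.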

2. Disable the L2 cache

I used the quick and blunt approach of calling Xil_L2CacheDisable() on CPU1. As soon as the L2 cache was disabled, ping worked. Problem solved.

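Summary:

When running ZYNQ dual cores in AMP mode, interrupts must be mapped to the core that services them (here, the timer and EMAC interrupts to CPU1).

The L2 cache is shared by both cores. In AMP mode, the CPU1 BSP leaves out its L2 cache maintenance operations, so cache invalidates and flushes never reach L2 and the CPU and the DMA see inconsistent data. The fix is to disable the L2 cache from CPU1.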
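The summary's reasoning can be illustrated with a toy host-side model. This is not Xilinx code, and it shrinks one 32-byte cache line down to a single byte value. It assumes three things:
- The GEM DMA writes DDR without snooping the caches, which is the `IsCacheCoherent == 0` path in init_dma.
- CPU1's private L1 sits in front of a shared L2.
- The CPU1 BSP's range invalidate skips L2. As far as I know, this is what the standalone BSP's `USE_AMP=1` compiler flag does in xil_cache.c.

The RX sequence mirrors init_dma: the buffer is still cached from earlier use, it is invalidated, the DMA writes a new frame, and the CPU reads it.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define LINE_BYTES 32 /* Cortex-A9 L1 and PL310 L2 line size */

typedef struct {
    unsigned char data[LINE_BYTES];
    bool valid;
} cache_line_t;

typedef struct {
    unsigned char ddr[LINE_BYTES]; /* DDR: what the GEM DMA reads and writes */
    cache_line_t l1;               /* CPU1's private L1 D-cache line */
    cache_line_t l2;               /* L2 line, shared by both cores */
    bool l2_enabled;
} mem_model_t;

/* The GEM DMA writes DDR directly; in this model it never touches either cache. */
static void dma_write(mem_model_t *m, unsigned char value)
{
    memset(m->ddr, value, LINE_BYTES);
}

/* CPU load: hit in L1, else in L2 (if enabled), else fetch from DDR and fill the caches. */
static unsigned char cpu_read(mem_model_t *m)
{
    if (!m->l1.valid) {
        if (m->l2_enabled && !m->l2.valid) {
            memcpy(m->l2.data, m->ddr, LINE_BYTES);
            m->l2.valid = true;
        }
        memcpy(m->l1.data, m->l2_enabled ? m->l2.data : m->ddr, LINE_BYTES);
        m->l1.valid = true;
    }
    return m->l1.data[0];
}

/* Range invalidate; invalidate_l2 == false models a BSP that skips L2 maintenance. */
static void invalidate_range(mem_model_t *m, bool invalidate_l2)
{
    m->l1.valid = false;
    if (invalidate_l2) {
        m->l2.valid = false;
    }
}

/* Returns the byte CPU1 sees after receiving a new frame (0x55) into a buffer
 * whose previous contents (0xAA) were still cached. */
static unsigned char rx_scenario(bool l2_enabled, bool invalidate_l2)
{
    mem_model_t m;
    memset(&m, 0, sizeof m);
    m.l2_enabled = l2_enabled;

    dma_write(&m, 0xAA);                 /* previous contents of the pbuf */
    (void)cpu_read(&m);                  /* earlier use left the line cached */
    invalidate_range(&m, invalidate_l2); /* done before handing the pbuf to a BD */
    dma_write(&m, 0x55);                 /* the GEM writes the new frame into DDR */
    unsigned char seen = cpu_read(&m);
    assert(seen == 0x55 || seen == 0xAA); /* only the old or the new data can be seen */
    return seen;
}
```

In this model:
- `rx_scenario(true, false)` (L2 on, BSP skipping L2) returns the stale 0xAA.
- `rx_scenario(true, true)` (non-AMP BSP) returns 0x55.
- `rx_scenario(false, false)` (L2 disabled) also returns 0x55, which is the effect the fix relies on.

Presumably the transmit path has the mirror-image problem, with flushes not reaching L2. One caveat: the L2 controller is a single shared block, so calling Xil_L2CacheDisable() from CPU1 also turns L2 off for CPU0.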
