
Step1:环境配置

获取代码

git clone https://github.com/hiroharu-kato/neural_renderer.git在bashrc中配置以下环境变量,并生效

export PYTHONPATH=/home/sqy/neural_renderer/neural_renderer创建conda环境

conda create -n neural_renderer python=3.8安装包

pip install chainer

pip install scikit-image

# (根据CUDA版本选择cupy,本句是针对11.4版本,所以是114,其他版本类似。请参考以下url)

# https://www.its404.com/searchArticle?qc=cuda11.4%E5%AF%B9%E5%BA%94cupy%E7%89%88%E6%9C%AC&page=1

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple cupy-cuda114执行安装

(编译需要很久,参数就是user,不是自己的用户名,不要改)

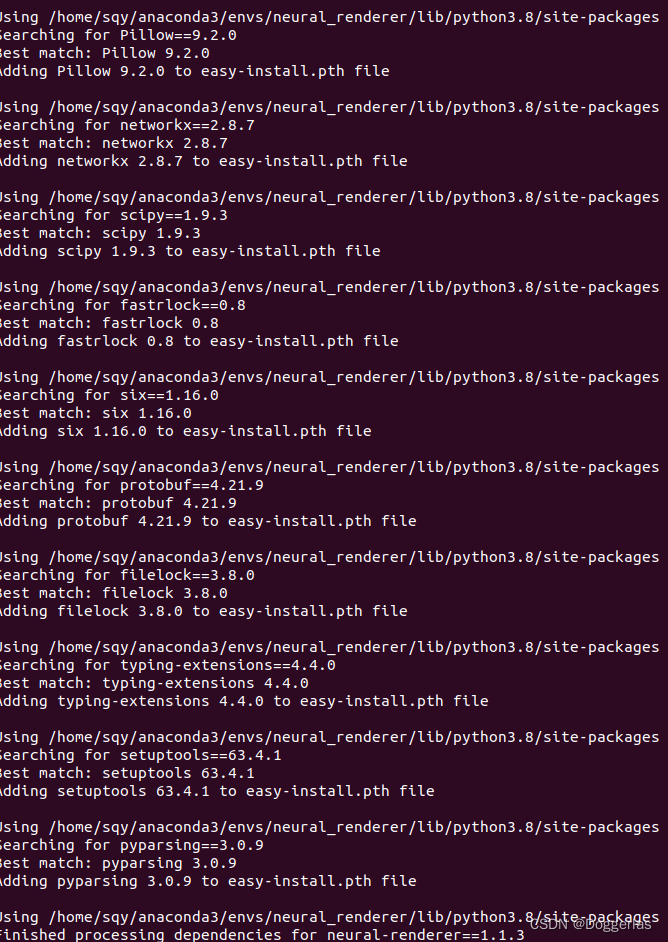

python setup.py install --user安装完毕
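Before moving on to the tests, it can help to confirm that the packages installed above are visible to the active Python interpreter. The following is a minimal sketch, not part of neural_renderer: the helper name find_missing_modules is hypothetical, and the import names (cupy for cupy-cuda114, skimage for scikit-image) are assumptions about how those pip packages are imported.

```python
import importlib.util

# Import names assumed from the packages installed above:
# chainer, cupy (from cupy-cuda114), skimage (from scikit-image), neural_renderer.
REQUIRED_MODULES = ["chainer", "cupy", "skimage", "neural_renderer"]


def find_missing_modules(names):
    """Return the top-level module names that cannot be located."""
    missing = []
    for name in names:
        # find_spec returns None when a top-level module is not importable.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

If neural_renderer is reported missing, the PYTHONPATH entry and the setup.py step are the first things to recheck.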

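Step 2: Testing the code

Change the permissions

sudo chmod 777 ./examples/run.sh

Test

The run fails

bash ./examples/run.sh

The code is several years old, so the following APIs have since been removed from SciPy

scipy.misc.imread

scipy.misc.toimage

To get the examples running, replace the corresponding statements in example1-4 with APIs that do the same job

imageio.imread

Image.fromarray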
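One difference is worth keeping in mind when swapping scipy.misc.toimage(image, cmin=0, cmax=1) for Image.fromarray: Image.fromarray does no rescaling, so the float image has to be converted to 8-bit values first. The sketch below shows one way to do that with clipping, assuming the rendered values are nominally in [0, 1]. The helper name float01_to_uint8 is hypothetical and is not part of SciPy, Pillow, or neural_renderer.

```python
import numpy as np


def float01_to_uint8(image):
    """Convert a float image with values nominally in [0, 1] to uint8 in [0, 255].

    Hypothetical helper, not part of neural_renderer or SciPy.
    """
    image = np.asarray(image, dtype=np.float32)
    # Clip first so that values slightly outside [0, 1] cannot overflow
    # when the array is cast to uint8.
    image = np.clip(image, 0.0, 1.0)
    return (image * 255).astype(np.uint8)
```

The returned array can be passed to Image.fromarray(...).save(...) in place of the separate multiply and astype steps.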

Below are a few sample modifications; I have tested these replacements and they work

#self.image_ref = (scipy.misc.imread(filename_ref).max(-1) != 0).astype('float32')

self.image_ref = (imageio.imread(filename_ref).max(-1) != 0).astype('float32')

#scipy.misc.toimage(image, cmin=0, cmax=1).save(filename_ref)

image = image[0] * 255  # this image is [RGB, height, width], so [0] is needed to take a single 2D channel

image = image.astype(np.uint8)

Image.fromarray(image).save(filename_ref)

image = images.data.get()[0].transpose((1, 2, 0))

# add by wlm 20221027

#scipy.misc.toimage(image, cmin=0, cmax=1).save('%s/_tmp_%04d.png' % (working_directory, num))

image = image * 255

image = image.astype(np.uint8)

Image.fromarray(image).save(f'{working_directory:s}/_tmp_{num:04d}.png', 'png')

The complete modified versions of example1-4 follow:

example1.py

"""
Example 1. Drawing a teapot from multiple viewpoints.
"""
import argparse
import glob
import os
import subprocess

import chainer
import numpy as np
import tqdm

import neural_renderer

# add by wlm 20221027
import imageio.v2 as imageio
from PIL import Image
import warnings
warnings.simplefilter("ignore")


def run():
    parser = argparse.ArgumentParser()
    parser.add_argument('-i', '--filename_input', type=str, default='./examples/data/teapot.obj')
    parser.add_argument('-o', '--filename_output', type=str, default='./examples/data/example1.gif')
    parser.add_argument('-g', '--gpu', type=int, default=0)
    args = parser.parse_args()
    working_directory = os.path.dirname(args.filename_output)

    # other settings
    camera_distance = 2.732
    elevation = 30
    texture_size = 2

    # load .obj
    vertices, faces = neural_renderer.load_obj(args.filename_input)
    vertices = vertices[None, :, :]  # [num_vertices, XYZ] -> [batch_size=1, num_vertices, XYZ]
    faces = faces[None, :, :]  # [num_faces, 3] -> [batch_size=1, num_faces, 3]

    # create texture [batch_size=1, num_faces, texture_size, texture_size, texture_size, RGB]
    textures = np.ones((1, faces.shape[1], texture_size, texture_size, texture_size, 3), 'float32')

    # to gpu
    chainer.cuda.get_device_from_id(args.gpu).use()
    vertices = chainer.cuda.to_gpu(vertices)
    faces = chainer.cuda.to_gpu(faces)
    textures = chainer.cuda.to_gpu(textures)

    # create renderer
    renderer = neural_renderer.Renderer()

    # draw object
    loop = tqdm.tqdm(range(0, 360, 4))
    for num, azimuth in enumerate(loop):
        loop.set_description('Drawing')
        renderer.eye = neural_renderer.get_points_from_angles(camera_distance, elevation, azimuth)
        images = renderer.render(vertices, faces, textures)  # [batch_size, RGB, image_size, image_size]
        image = images.data.get()[0].transpose((1, 2, 0))  # [image_size, image_size, RGB]
        # add by wlm 20221027
        #scipy.misc.toimage(image, cmin=0, cmax=1).save('%s/_tmp_%04d.png' % (working_directory, num))
        image = image * 255
        image = image.astype(np.uint8)
        Image.fromarray(image).save(f'{working_directory:s}/_tmp_{num:04d}.png', 'png')

    # generate gif (need ImageMagick)
    options = '-delay 8 -loop 0 -layers optimize'
    subprocess.call('convert %s %s/_tmp_*.png %s' % (options, working_directory, args.filename_output), shell=True)

    # remove temporary files
    for filename in glob.glob('%s/_tmp_*.png' % working_directory):
        os.remove(filename)


if __name__ == '__main__':
    run()
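The GIF step in example1.py builds a shell string for ImageMagick's convert and relies on the _tmp_*.png frames being inside the working directory. A possible variant is sketched below, assuming convert is on PATH: it builds the frame path with os.path.join, fails early when no frames are found, and passes the arguments as a list. The helper names build_convert_command and make_gif_checked are hypothetical; the flags simply mirror the options string used in the examples.

```python
import glob
import os
import shutil
import subprocess


def build_convert_command(working_directory, filename_output):
    """Return the ImageMagick argument list, or raise if no frames exist.

    Hypothetical helper; the flags mirror the options string in the examples.
    """
    # os.path.join inserts the path separator, so the frames are always
    # looked up inside working_directory.
    pattern = os.path.join(working_directory, "_tmp_*.png")
    frames = sorted(glob.glob(pattern))
    if not frames:
        raise FileNotFoundError("no frames match %s" % pattern)
    options = ["-delay", "8", "-loop", "0", "-layers", "optimize"]
    return ["convert"] + options + frames + [filename_output]


def make_gif_checked(working_directory, filename_output):
    """Run convert on the frames, with explicit errors for common failures."""
    command = build_convert_command(working_directory, filename_output)
    if shutil.which("convert") is None:
        raise RuntimeError("ImageMagick 'convert' was not found on PATH")
    # Passing a list avoids shell quoting issues with unusual paths.
    subprocess.check_call(command)
```

Failing before convert is invoked makes a misplaced frame path show up as a Python error that names the pattern that was searched.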

example2.py

"""
Example 2. Optimizing vertices.
"""
import argparse
import glob
import os
import subprocess

import chainer
import chainer.functions as cf
import numpy as np
import tqdm

import neural_renderer

# add by wlm 20221027
import imageio.v2 as imageio
from PIL import Image
import warnings
warnings.simplefilter("ignore")


class Model(chainer.Link):
    def __init__(self, filename_obj, filename_ref):
        super(Model, self).__init__()

        with self.init_scope():
            # load .obj
            vertices, faces = neural_renderer.load_obj(filename_obj)
            self.vertices = chainer.Parameter(vertices[None, :, :])
            self.faces = faces[None, :, :]

            # create textures
            texture_size = 2
            textures = np.ones((1, self.faces.shape[1], texture_size, texture_size, texture_size, 3), 'float32')
            self.textures = textures

            # load reference image
            # add by wlm 20221027
            #self.image_ref = scipy.misc.imread(filename_ref).astype('float32').mean(-1) / 255.
            self.image_ref = imageio.imread(filename_ref).astype('float32').mean(-1) / 255.

            # setup renderer
            renderer = neural_renderer.Renderer()
            self.renderer = renderer

    def to_gpu(self, device=None):
        super(Model, self).to_gpu(device)
        self.faces = chainer.cuda.to_gpu(self.faces, device)
        self.textures = chainer.cuda.to_gpu(self.textures, device)
        self.image_ref = chainer.cuda.to_gpu(self.image_ref, device)

    def __call__(self):
        self.renderer.eye = neural_renderer.get_points_from_angles(2.732, 0, 90)
        image = self.renderer.render_silhouettes(self.vertices, self.faces)
        loss = cf.sum(cf.square(image - self.image_ref[None, :, :]))
        return loss


def make_gif(working_directory, filename):
    # generate gif (need ImageMagick)
    options = '-delay 8 -loop 0 -layers optimize'
    subprocess.call('convert %s %s/_tmp_*.png %s' % (options, working_directory, filename), shell=True)
    for filename in glob.glob('%s/_tmp_*.png' % working_directory):
        os.remove(filename)


def run():
    parser = argparse.ArgumentParser()
    parser.add_argument('-io', '--filename_obj', type=str, default='./examples/data/teapot.obj')
    parser.add_argument('-ir', '--filename_ref', type=str, default='./examples/data/example2_ref.png')
    parser.add_argument(
        '-oo', '--filename_output_optimization', type=str, default='./examples/data/example2_optimization.gif')
    parser.add_argument(
        '-or', '--filename_output_result', type=str, default='./examples/data/example2_result.gif')
    parser.add_argument('-g', '--gpu', type=int, default=0)
    args = parser.parse_args()
    working_directory = os.path.dirname(args.filename_output_result)

    model = Model(args.filename_obj, args.filename_ref)
    model.to_gpu()

    optimizer = chainer.optimizers.Adam()
    optimizer.setup(model)
    loop = tqdm.tqdm(range(300))
    for i in loop:
        loop.set_description('Optimizing')
        optimizer.target.cleargrads()
        loss = model()
        loss.backward()
        optimizer.update()
        images = model.renderer.render_silhouettes(model.vertices, model.faces)
        image = images.data.get()[0]
        # add by wlm 20221027
        # scipy.misc.toimage(image, cmin=0, cmax=1).save('%s/_tmp_%04d.png' % (working_directory, i))
        image = image * 255
        image = image.astype(np.uint8)
        Image.fromarray(image).save(f'{working_directory:s}/_tmp_{i:04d}.png', 'png')
    make_gif(working_directory, args.filename_output_optimization)

    # draw object
    loop = tqdm.tqdm(range(0, 360, 4))
    for num, azimuth in enumerate(loop):
        loop.set_description('Drawing')
        model.renderer.eye = neural_renderer.get_points_from_angles(2.732, 0, azimuth)
        images = model.renderer.render(model.vertices, model.faces, model.textures)
        image = images.data.get()[0].transpose((1, 2, 0))
        # add by wlm 20221027
        #scipy.misc.toimage(image, cmin=0, cmax=1).save('%s/_tmp_%04d.png' % (working_directory, num))
        image = image * 255
        image = image.astype(np.uint8)
        Image.fromarray(image).save(f'{working_directory:s}/_tmp_{num:04d}.png', 'png')
    make_gif(working_directory, args.filename_output_result)


if __name__ == '__main__':
    run()
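The reference-image line in example2.py averages over the last axis with mean(-1), which assumes the PNG was read as a [height, width, channels] array. If a reference image were stored as single-channel grayscale, the same call would average over the width instead. A small sketch of a more defensive conversion follows; the helper name to_gray01 is hypothetical, and its input is assumed to be the array returned by imageio.imread.

```python
import numpy as np


def to_gray01(pixels):
    """Return a 2D float32 array in [0, 1] from 8-bit image data.

    Hypothetical helper. `pixels` is assumed to be what imageio.imread
    returned: shape [H, W] for grayscale or [H, W, C] for colour images.
    """
    pixels = np.asarray(pixels).astype("float32")
    if pixels.ndim == 3:
        # Average over the channel axis, as example2.py does with mean(-1).
        pixels = pixels.mean(-1)
    elif pixels.ndim != 2:
        raise ValueError("expected a 2D or 3D array, got %d dimensions" % pixels.ndim)
    return pixels / 255.0
```

For the reference images shipped with the examples the result should match the original expression; the extra branch only matters for other inputs.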

example3.py

"""
Example 3. Optimizing textures.
"""
import argparse
import glob
import os
import subprocess

import chainer
import chainer.functions as cf
import numpy as np
import tqdm

import neural_renderer

# add by wlm 20221027
import imageio.v2 as imageio
from PIL import Image
import warnings
warnings.simplefilter("ignore")


class Model(chainer.Link):
    def __init__(self, filename_obj, filename_ref):
        super(Model, self).__init__()

        with self.init_scope():
            # load .obj
            vertices, faces = neural_renderer.load_obj(filename_obj)
            self.vertices = vertices[None, :, :]
            self.faces = faces[None, :, :]

            # create textures
            texture_size = 4
            textures = np.zeros((1, self.faces.shape[1], texture_size, texture_size, texture_size, 3), 'float32')
            self.textures = chainer.Parameter(textures)

            # load reference image
            #self.image_ref = scipy.misc.imread(filename_ref).astype('float32') / 255.
            # add by wlm 20221027
            self.image_ref = imageio.imread(filename_ref).astype('float32') / 255.

            # setup renderer
            renderer = neural_renderer.Renderer()
            renderer.perspective = False
            renderer.light_intensity_directional = 0.0
            renderer.light_intensity_ambient = 1.0
            self.renderer = renderer

    def to_gpu(self, device=None):
        super(Model, self).to_gpu(device)
        self.faces = chainer.cuda.to_gpu(self.faces, device)
        self.vertices = chainer.cuda.to_gpu(self.vertices, device)
        self.image_ref = chainer.cuda.to_gpu(self.image_ref, device)

    def __call__(self):
        self.renderer.eye = neural_renderer.get_points_from_angles(2.732, 0, np.random.uniform(0, 360))
        image = self.renderer.render(self.vertices, self.faces, cf.tanh(self.textures))
        loss = cf.sum(cf.square(image - self.image_ref.transpose((2, 0, 1))[None, :, :, :]))
        return loss


def make_gif(working_directory, filename):
    # generate gif (need ImageMagick)
    options = '-delay 8 -loop 0 -layers optimize'
    subprocess.call('convert %s %s/_tmp_*.png %s' % (options, working_directory, filename), shell=True)
    for filename in glob.glob('%s/_tmp_*.png' % working_directory):
        os.remove(filename)


def run():
    parser = argparse.ArgumentParser()
    parser.add_argument('-io', '--filename_obj', type=str, default='./examples/data/teapot.obj')
    parser.add_argument('-ir', '--filename_ref', type=str, default='./examples/data/example3_ref.png')
    parser.add_argument('-or', '--filename_output', type=str, default='./examples/data/example3_result.gif')
    parser.add_argument('-g', '--gpu', type=int, default=0)
    args = parser.parse_args()
    working_directory = os.path.dirname(args.filename_output)

    model = Model(args.filename_obj, args.filename_ref)
    model.to_gpu()

    optimizer = chainer.optimizers.Adam(alpha=0.1, beta1=0.5)
    optimizer.setup(model)
    loop = tqdm.tqdm(range(300))
    for _ in loop:
        loop.set_description('Optimizing')
        optimizer.target.cleargrads()
        loss = model()
        loss.backward()
        optimizer.update()

    # draw object
    loop = tqdm.tqdm(range(0, 360, 4))
    for num, azimuth in enumerate(loop):
        loop.set_description('Drawing')
        model.renderer.eye = neural_renderer.get_points_from_angles(2.732, 0, azimuth)
        images = model.renderer.render(model.vertices, model.faces, cf.tanh(model.textures))
        image = images.data.get()[0].transpose((1, 2, 0))
        # add by wlm 20221027
        #scipy.misc.toimage(image, cmin=0, cmax=1).save('%s/_tmp_%04d.png' % (working_directory, num))
        image = image * 255
        image = image.astype(np.uint8)
        Image.fromarray(image).save(f'{working_directory:s}/_tmp_{num:04d}.png', 'png')
    make_gif(working_directory, args.filename_output)


if __name__ == '__main__':
    run()

example4.py

"""
Example 4. Finding camera parameters.
"""
import argparse
import glob
import os
import subprocess

import chainer
import chainer.functions as cf
import numpy as np
import tqdm
import warnings
warnings.simplefilter("ignore")

import neural_renderer

# add by wlm 20221027
import imageio.v2 as imageio
from PIL import Image


class Model(chainer.Link):
    def __init__(self, filename_obj, filename_ref=None):
        super(Model, self).__init__()

        with self.init_scope():
            # load .obj
            vertices, faces = neural_renderer.load_obj(filename_obj)
            self.vertices = vertices[None, :, :]
            self.faces = faces[None, :, :]

            # create textures
            texture_size = 2
            textures = np.ones((1, self.faces.shape[1], texture_size, texture_size, texture_size, 3), 'float32')
            self.textures = textures

            # load reference image
            if filename_ref is not None:
                # add by wlm 20221027
                #self.image_ref = (scipy.misc.imread(filename_ref).max(-1) != 0).astype('float32')
                self.image_ref = (imageio.imread(filename_ref).max(-1) != 0).astype('float32')
            else:
                self.image_ref = None

            # camera parameters
            self.camera_position = chainer.Parameter(np.array([6, 10, -14], 'float32'))

            # setup renderer
            renderer = neural_renderer.Renderer()
            renderer.eye = self.camera_position
            self.renderer = renderer

    def to_gpu(self, device=None):
        super(Model, self).to_gpu(device)
        self.faces = chainer.cuda.to_gpu(self.faces, device)
        self.vertices = chainer.cuda.to_gpu(self.vertices, device)
        self.textures = chainer.cuda.to_gpu(self.textures, device)
        if self.image_ref is not None:
            self.image_ref = chainer.cuda.to_gpu(self.image_ref)

    def __call__(self):
        image = self.renderer.render_silhouettes(self.vertices, self.faces)
        loss = cf.sum(cf.square(image - self.image_ref[None, :, :]))
        return loss


def make_gif(working_directory, filename):
    # generate gif (need ImageMagick)
    options = '-delay 8 -loop 0 -layers optimize'
    subprocess.call('convert %s %s/_tmp_*.png %s' % (options, working_directory, filename), shell=True)
    for filename in glob.glob('%s/_tmp_*.png' % working_directory):
        os.remove(filename)


def make_reference_image(filename_ref, filename_obj):
    model = Model(filename_obj)
    model.to_gpu()

    model.renderer.eye = neural_renderer.get_points_from_angles(2.732, 30, -15)
    images = model.renderer.render(model.vertices, model.faces, cf.tanh(model.textures))
    image = images.data.get()[0]
    # add by wlm 20221027
    #scipy.misc.toimage(image, cmin=0, cmax=1).save(filename_ref)
    image = image[0] * 255  # this image is [RGB, height, width], so [0] is needed to take a single 2D channel
    image = image.astype(np.uint8)
    Image.fromarray(image).save(filename_ref)


def run():
    parser = argparse.ArgumentParser()
    parser.add_argument('-io', '--filename_obj', type=str, default='./examples/data/teapot.obj')
    parser.add_argument('-ir', '--filename_ref', type=str, default='./examples/data/example4_ref.png')
    parser.add_argument('-or', '--filename_output', type=str, default='./examples/data/example4_result.gif')
    parser.add_argument('-mr', '--make_reference_image', type=int, default=0)
    parser.add_argument('-g', '--gpu', type=int, default=0)
    args = parser.parse_args()
    working_directory = os.path.dirname(args.filename_output)

    if args.make_reference_image:
        make_reference_image(args.filename_ref, args.filename_obj)

    model = Model(args.filename_obj, args.filename_ref)
    model.to_gpu()

    optimizer = chainer.optimizers.Adam(alpha=0.1)
    optimizer.setup(model)
    loop = tqdm.tqdm(range(1000))
    for i in loop:
        optimizer.target.cleargrads()
        loss = model()
        loss.backward()
        optimizer.update()
        images = model.renderer.render(model.vertices, model.faces, cf.tanh(model.textures))
        image = images.data.get()[0]
        # add by wlm 20221027
        #scipy.misc.toimage(image, cmin=0, cmax=1).save('%s/_tmp_%04d.png' % (working_directory, i))
        image = image[0] * 255  # this image is [RGB, height, width], so [0] is needed to take a single 2D channel
        image = image.astype(np.uint8)
        Image.fromarray(image).save(f'{working_directory:s}/_tmp_{i:04d}.png', 'png')
        loop.set_description('Optimizing (loss %.4f)' % loss.data)
        if loss.data < 70:
            break
    make_gif(working_directory, args.filename_output)


if __name__ == '__main__':
    run()
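The four examples prepare the rendered array for Image.fromarray in different ways: example1-3 transpose [RGB, height, width] to [height, width, RGB], silhouettes are already [height, width], and example4.py takes channel [0]. Since Image.fromarray expects [height, width] or [height, width, channels], an array still laid out as [3, height, width] is generally rejected. The sketch below puts the layout decision in one place; the helper name to_saveable_layout is hypothetical, and it assumes the batch axis has already been removed with [0].

```python
import numpy as np


def to_saveable_layout(image):
    """Rearrange a rendered array into a layout Image.fromarray accepts.

    Hypothetical helper. Assumes the batch axis was already removed:
    - [H, W]: silhouette, returned unchanged
    - [3, H, W]: colour image, transposed to [H, W, 3]
    """
    image = np.asarray(image)
    if image.ndim == 2:
        return image
    if image.ndim == 3 and image.shape[0] == 3:
        return image.transpose((1, 2, 0))
    raise ValueError("unexpected image shape %r" % (image.shape,))
```

Raising on any other shape turns a confusing PIL TypeError into a message that reports the shape that was actually passed.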

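Some minor problems encountered

Problem 1:

convert-im6.q16: unable to open image ........ @ error/blob.c/OpenBlob/2874.

convert-im6.q16: no images defined ...... error/convert.c/ConvertImageCommand/3258.

Cause: the output path was written incorrectly, so the frames were saved next to the working directory rather than inside it, and convert found no _tmp_*.png files to read

It was mistakenly written as

Image.fromarray(image).save(f'{working_directory:s}_tmp_{i:04d}.png', 'png')

Fix: change it to the following statement (a / was missing)

Image.fromarray(image).save(f'{working_directory:s}/_tmp_{i:04d}.png', 'png')

Problem 2: TypeError: Cannot handle this data type: (1, 1, 256), |u1

Cause: a shape mismatch. Image.fromarray expects a single image laid out as [height, width] or [height, width, RGB]. Here image was already a single image rather than a batch, so indexing it again with [0] was wrong. (In example4.py the rendered image is [RGB, height, width], which is why the [0] is kept there.)

It was mistakenly written as

image = image[0] * 255

Fix: change it to the following statement

image = image * 255

Step 3: Results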

Only the following files need to be kept in examples/data

example2_ref.png

example3_ref.png

example4_ref.png

and teapot.obj

Run

bash ./examples/run.sh

After it finishes, the following GIF files are generated in examples/data

example1

example2_optimization

example2_result

example3_result

example4_result
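To confirm the run produced everything, the output directory can be checked against the expected names. This is a minimal sketch: the .gif file names are taken from the default arguments of example1-4, and the helper name missing_outputs is hypothetical.

```python
import os

# File names taken from the default output arguments of example1-4.
EXPECTED_OUTPUTS = [
    "example1.gif",
    "example2_optimization.gif",
    "example2_result.gif",
    "example3_result.gif",
    "example4_result.gif",
]


def missing_outputs(data_directory, expected=EXPECTED_OUTPUTS):
    """Return the expected output files that are absent from data_directory."""
    return [
        name for name in expected
        if not os.path.isfile(os.path.join(data_directory, name))
    ]


if __name__ == "__main__":
    # Assumes the script is started from the repository root.
    print(missing_outputs("./examples/data"))
```

An empty list means all five GIFs were written.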

conda list

# packages in environment at /home/sqy/anaconda3/envs/neural_renderer:
#
# Name                    Version          Build             Channel
_libgcc_mutex             0.1              main
_openmp_mutex             5.1              1_gnu
ca-certificates           2022.07.19       h06a4308_0
certifi                   2022.9.24        py38h06a4308_0
chainer                   7.8.1            pypi_0            pypi
cupy-cuda114              9.3.0            pypi_0            pypi
fastrlock                 0.8              pypi_0            pypi
filelock                  3.8.0            pypi_0            pypi
imageio                   2.22.2           pypi_0            pypi
ld_impl_linux-64          2.38             h1181459_1
libffi                    3.3              he6710b0_2
libgcc-ng                 11.2.0           h1234567_1
libgomp                   11.2.0           h1234567_1
libstdcxx-ng              11.2.0           h1234567_1
ncurses                   6.3              h5eee18b_3
networkx                  2.8.7            pypi_0            pypi
numpy                     1.23.4           pypi_0            pypi
openssl                   1.1.1q           h7f8727e_0
packaging                 21.3             pypi_0            pypi
pillow                    9.2.0            pypi_0            pypi
pip                       22.2.2           py38h06a4308_0
protobuf                  4.21.9           pypi_0            pypi
pyparsing                 3.0.9            pypi_0            pypi
python                    3.8.13           haa1d7c7_1
pywavelets                1.4.1            pypi_0            pypi
readline                  8.2              h5eee18b_0
scikit-image              0.19.3           pypi_0            pypi
scipy                     1.9.3            pypi_0            pypi
setuptools                63.4.1           py38h06a4308_0
six                       1.16.0           pypi_0            pypi
sqlite                    3.39.3           h5082296_0
tifffile                  2022.10.10       pypi_0            pypi
tk                        8.6.12           h1ccaba5_0
typing-extensions         4.4.0            pypi_0            pypi
wheel                     0.37.1           pyhd3eb1b0_0
xz                        5.2.6            h5eee18b_0
zlib                      1.2.13           h5eee18b_0
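When an installation behaves differently, comparing its package versions with the list above is a quick first check. The sketch below parses conda list style text into a name-to-version mapping and reports mismatches. Both function names are hypothetical, and the parser assumes whitespace-separated columns with # header lines, as in the listing above.

```python
def parse_conda_list(text):
    """Map package name -> version from `conda list` style text."""
    versions = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and the '#' header comments.
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) >= 2:
            versions[fields[0]] = fields[1]
    return versions


def version_differences(reference, current):
    """Return {name: (reference_version, current_version)} for mismatches.

    Packages missing from `current` are reported with None.
    """
    return {
        name: (version, current.get(name))
        for name, version in reference.items()
        if current.get(name) != version
    }
```

Feeding it the listing above as the reference and the output of conda list from another machine as the current text highlights which packages to look at first, for example chainer, cupy-cuda114, pillow, or imageio.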
