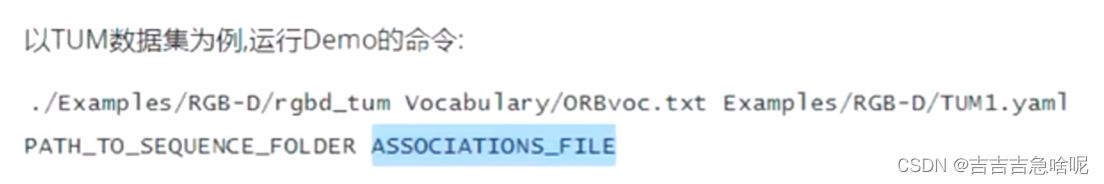

Examples

For example, rgbd_tum (a program under Examples) processes the TUM RGB-D dataset.

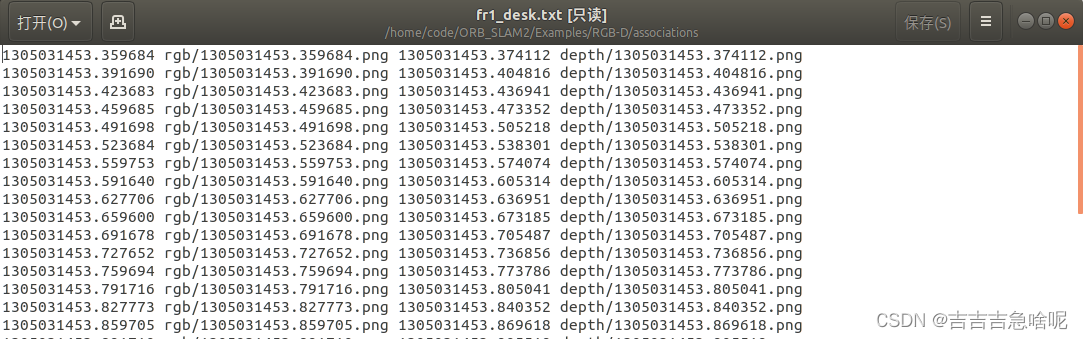

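Each line of the association file pairs two images: on the left an RGB image at some timestamp, on the right a depth image at some timestamp. The RGB and depth streams are not captured at exactly the same instants, so the file matches each RGB frame with the depth frame closest to it in time.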

rgbd_tum.cc

#include<iostream>

#include<algorithm>

#include<fstream>

#include<chrono>

#include<opencv2/core/core.hpp>

#include<unistd.h> // usleep()

#include<System.h>

using namespace std;

void LoadImages(const string &strAssociationFilename, vector<string> &vstrImageFilenamesRGB,

vector<string> &vstrImageFilenamesD, vector<double> &vTimestamps);

int main(int argc, char **argv)

{

if(argc != 5)

{

cerr << endl << "Usage: ./rgbd_tum path_to_vocabulary path_to_settings path_to_sequence path_to_association" << endl;

return 1;

}

// Retrieve paths to images

//step1. Load the image filenames and the RGB/depth association info

vector<string> vstrImageFilenamesRGB;

vector<string> vstrImageFilenamesD;

vector<double> vTimestamps;

string strAssociationFilename = string(argv[4]);

LoadImages(strAssociationFilename, vstrImageFilenamesRGB, vstrImageFilenamesD, vTimestamps);

// Check consistency in the number of images and depthmaps

//step2. Check that the RGB and depth file lists are consistent

int nImages = vstrImageFilenamesRGB.size();

if(vstrImageFilenamesRGB.empty())

{

cerr << endl << "No images found in provided path." << endl;

return 1;

}

else if(vstrImageFilenamesD.size()!=vstrImageFilenamesRGB.size())

{

cerr << endl << "Different number of images for rgb and depth." << endl;

return 1;

}

// Create SLAM system. It initializes all system threads and gets ready to process frames.

//step3. Create the SLAM object, of type ORB_SLAM2::System

//The whole SLAM pipeline is wrapped in the System class

ORB_SLAM2::System SLAM(argv[1],argv[2],ORB_SLAM2::System::RGBD,true);

// Vector for tracking time statistics

vector<float> vTimesTrack;

vTimesTrack.resize(nImages);

cout << endl << "-------" << endl;

cout << "Start processing sequence ..." << endl;

cout << "Images in the sequence: " << nImages << endl << endl;

// Main loop

cv::Mat imRGB, imD;

//step4. Loop over the images and run SLAM

for(int ni=0; ni<nImages; ni++)

{

// Read image and depthmap from file

//step4.1. Read the images

imRGB = cv::imread(string(argv[3])+"/"+vstrImageFilenamesRGB[ni],CV_LOAD_IMAGE_UNCHANGED);

imD = cv::imread(string(argv[3])+"/"+vstrImageFilenamesD[ni],CV_LOAD_IMAGE_UNCHANGED);

double tframe = vTimestamps[ni];

if(imRGB.empty())

{

cerr << endl << "Failed to load image at: "

<< string(argv[3]) << "/" << vstrImageFilenamesRGB[ni] << endl;

return 1;

}

#ifdef COMPILEDWITHC11

std::chrono::steady_clock::time_point t1 = std::chrono::steady_clock::now();

#else

std::chrono::monotonic_clock::time_point t1 = std::chrono::monotonic_clock::now();

#endif

// Pass the image to the SLAM system

SLAM.TrackRGBD(imRGB,imD,tframe);

#ifdef COMPILEDWITHC11

std::chrono::steady_clock::time_point t2 = std::chrono::steady_clock::now();

#else

std::chrono::monotonic_clock::time_point t2 = std::chrono::monotonic_clock::now();

#endif

double ttrack= std::chrono::duration_cast<std::chrono::duration<double> >(t2 - t1).count();

vTimesTrack[ni]=ttrack;

// Wait to load the next frame

double T=0;

if(ni<nImages-1)

T = vTimestamps[ni+1]-tframe;

else if(ni>0)

T = tframe-vTimestamps[ni-1];

if(ttrack<T)

usleep((T-ttrack)*1e6);

}

// Stop all threads

SLAM.Shutdown();

// Tracking time statistics

sort(vTimesTrack.begin(),vTimesTrack.end());

float totaltime = 0;

for(int ni=0; ni<nImages; ni++)

{

totaltime+=vTimesTrack[ni];

}

cout << "-------" << endl << endl;

cout << "median tracking time: " << vTimesTrack[nImages/2] << endl;

cout << "mean tracking time: " << totaltime/nImages << endl;

// Save camera trajectory

SLAM.SaveTrajectoryTUM("CameraTrajectory.txt");

SLAM.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectory.txt");

return 0;

}

void LoadImages(const string &strAssociationFilename, vector<string> &vstrImageFilenamesRGB,

vector<string> &vstrImageFilenamesD, vector<double> &vTimestamps)

{

ifstream fAssociation;

fAssociation.open(strAssociationFilename.c_str());

while(!fAssociation.eof())

{

string s;

getline(fAssociation,s);

if(!s.empty())

{

stringstream ss;

ss << s;

double t;

string sRGB, sD;

ss >> t;

vTimestamps.push_back(t);

ss >> sRGB;

vstrImageFilenamesRGB.push_back(sRGB);

ss >> t;

ss >> sD;

vstrImageFilenamesD.push_back(sD);

}

}

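}

Vocabulary

ORBvoc.txt: in ORB-SLAM2 the vocabulary is a text file; according to these notes, ORB-SLAM3 switched to a binary file while still keeping the text one. Each line holds one descriptor, and these descriptors are the result of clustering.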

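ORB-SLAM2 variable naming convention: Hungarian notation

If the first letter of a variable name is m, the variable is a member variable of some class.

The first one or two letters after that indicate the data type:

- p means a pointer type

- n means int

- b means bool

- s means std::set

- v means std::vector

- l means std::list

- kf means KeyFrame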
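To make the prefix rules concrete, here is a small sketch of how such names read in practice. The struct and all of its members are hypothetical; they are only modeled on the style of names that appear in the listings in these notes (mpParent, mnId, mbBad, mvpMapPoints).

```cpp
#include <list>
#include <set>
#include <vector>

struct MapPoint;  // placeholder type, only needed for the pointer declarations

// Hypothetical class used purely to illustrate the prefixes.
struct NamingDemo {
    int mnId = 0;                         // m + n     : member, int
    bool mbBad = false;                   // m + b     : member, bool
    NamingDemo* mpParent = nullptr;       // m + p     : member, pointer
    std::vector<MapPoint*> mvpMapPoints;  // m + v + p : member, vector of pointers
    std::set<NamingDemo*> mspChildren;    // m + s + p : member, set of pointers
    std::list<NamingDemo*> mlpPending;    // m + l + p : member, list of pointers
};
```

Reading the prefixes right to left usually recovers the type: mvpMapPoints is a member (m) vector (v) of pointers (p).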

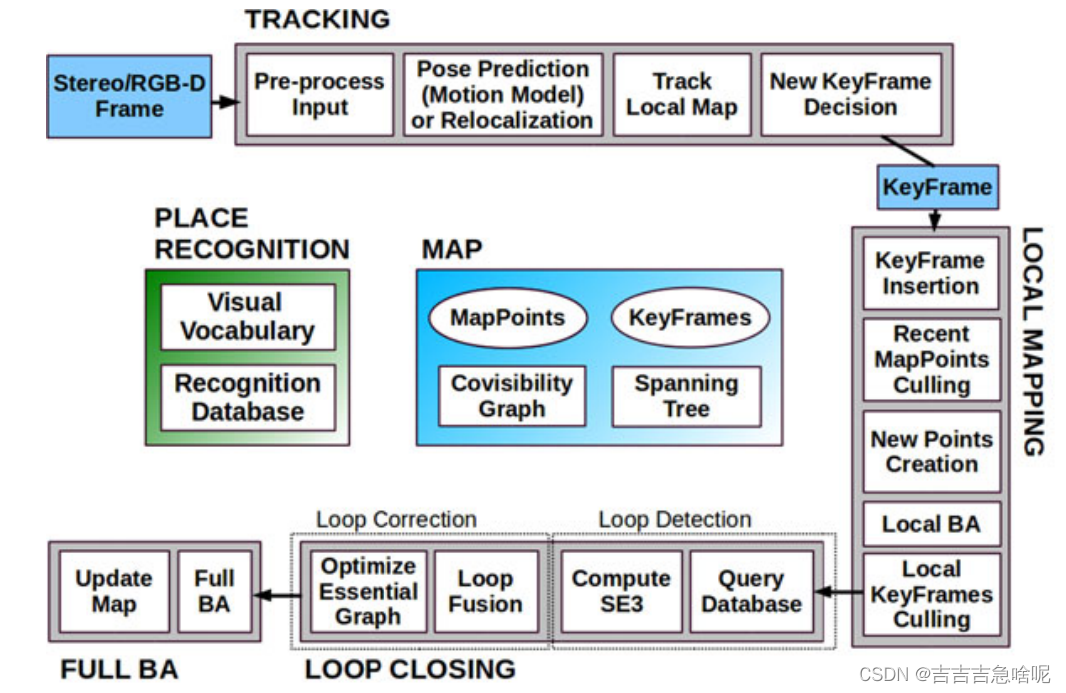

Multithreading

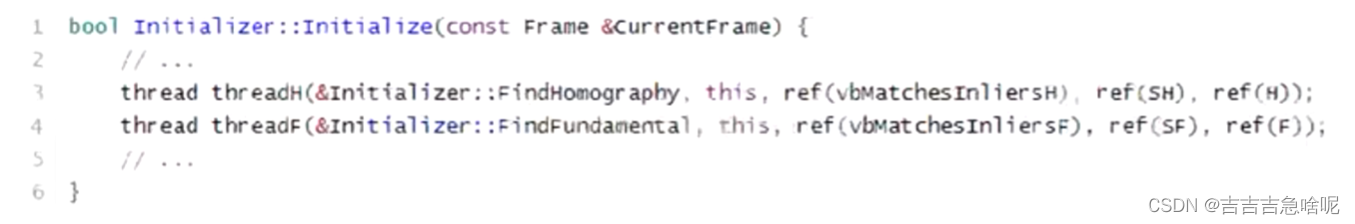

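Why multiple threads are used

1. To speed up computation:

For example, during monocular initialization it is not known in advance whether the homography or the fundamental matrix is the better model, so both are computed and whichever performs better is used. The SVD-based computation is not huge, but for compute-intensive tasks like this, giving each one its own thread speeds things up, so you do not end up with one core struggling while the other seven watch.

2. The non-determinism of the system

Because of this non-determinism, the order in which the steps run is not fixed.

When the Tracking thread produces no keyframes, the LocalMapping and LoopClosing threads are essentially idle.

The frequency and timing with which the Tracking thread produces keyframes are not fixed either. The three threads therefore run concurrently: the LocalMapping and LoopClosing threads keep polling for a new keyframe and process it as soon as one arrives.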

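// Main function of the Tracking thread

void Tracking::Track() {

// Track the current frame

// ...

// If tracking succeeded, decide whether a keyframe should be created

if (NeedNewKeyFrame()) {

// Create the keyframe and hand it to the LocalMapping thread

KeyFrame *pKF = new KeyFrame(mCurrentFrame, mpMap, mpKeyFrameDB);

mpLocalMapper->InsertKeyFrame(pKF);

}

}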

// Main function of the LocalMapping thread

void LocalMapping::Run() {

// Endless loop

while (1) {

// Check whether a keyframe has been received

if (CheckNewKeyFrames()) {

// Process the keyframe

// ...

// Pass the keyframe on to the LoopClosing thread

mpLoopCloser->InsertKeyFrame(mpCurrentKeyFrame);

}

// Sleep for 3 ms, then start again from the top of the while (1) loop

std::this_thread::sleep_for(std::chrono::milliseconds(3));

}

}

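// Main function of the LoopClosing thread

void LoopClosing::Run() {

// Endless loop

while (1) {

// Check whether a keyframe has been received

if (CheckNewKeyFrames()) {

// Process the keyframe

// ...

}

// Check whether another thread has requested a reset of this thread

ResetIfRequested();

// Sleep for 5 ms, then start again from the top of the while (1) loop

std::this_thread::sleep_for(std::chrono::milliseconds(5));

}

}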
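The polling loops above rely on a small thread-safe hand-off queue: one thread inserts keyframes, the other checks for them at the top of each iteration. The following is a minimal sketch of that idea, not the ORB-SLAM2 implementation; KeyFrameStub, KeyFrameQueue and Pop() are hypothetical names introduced for illustration.

```cpp
#include <list>
#include <mutex>

// Hypothetical stand-in for a keyframe; only an id is needed here.
struct KeyFrameStub { int id; };

// Minimal sketch of a hand-off queue shared by a producer and a consumer thread.
class KeyFrameQueue {
public:
    // Called by the producer (the Tracking side in the text above).
    void InsertKeyFrame(KeyFrameStub* pKF) {
        std::unique_lock<std::mutex> lock(mMutexNewKFs);
        mlNewKeyFrames.push_back(pKF);
    }

    // Polled by the consumer at the top of each loop iteration.
    bool CheckNewKeyFrames() {
        std::unique_lock<std::mutex> lock(mMutexNewKFs);
        return !mlNewKeyFrames.empty();
    }

    // Takes the oldest pending keyframe, or returns nullptr if there is none.
    KeyFrameStub* Pop() {
        std::unique_lock<std::mutex> lock(mMutexNewKFs);
        if (mlNewKeyFrames.empty()) return nullptr;
        KeyFrameStub* pKF = mlNewKeyFrames.front();
        mlNewKeyFrames.pop_front();
        return pKF;
    }

private:
    std::list<KeyFrameStub*> mlNewKeyFrames;  // pending keyframes, oldest first
    std::mutex mMutexNewKFs;                  // guards mlNewKeyFrames
};
```

Every access to the list happens under the same mutex, so the producer can insert while the consumer is sleeping or polling without corrupting the queue.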

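Locks in multithreaded code

To keep several threads from modifying the same variable at once and corrupting it, a locking mechanism is used:

The member variables themselves are made non-public (private or protected), and the public functions that operate on them take a lock.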

class KeyFrame {

protected:

KeyFrame* mpParent;

std::mutex mMutexConnections; // guards mpParent and the other connection data

public:

void ChangeParent(KeyFrame *pKF) {

unique_lock<mutex> lockCon(mMutexConnections); // acquire the lock

mpParent = pKF;

pKF->AddChild(this);

}

KeyFrame *GetParent() {

unique_lock<mutex> lockCon(mMutexConnections); // acquire the lock

return mpParent;

}

};

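unique_lock<mutex> lockCon(mMutexConnections): this statement acquires the lock, and the lock is held only within the enclosing braces. As soon as execution leaves the braces the lock is released, which is why some code contains braces that look unnecessary at first sight.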

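void KeyFrame::EraseConnection(KeyFrame *pKF) {

bool bUpdate = false;

// Part 1: hold the lock only while modifying the connection weights

{

unique_lock<mutex> lock(mMutexConnections);

if (mConnectedKeyFrameWeights.count(pKF)) {

mConnectedKeyFrameWeights.erase(pKF);

bUpdate = true;

}

}// The lock is released here; the code below runs without holding it

if (bUpdate)

UpdateBestCovisibles();

}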
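The effect of the extra braces can be checked in isolation. The sketch below uses a hypothetical Counter class (not part of ORB-SLAM2): the lock lives only inside the inner block, and the try_lock() afterwards succeeds because the mutex has already been released.

```cpp
#include <mutex>

// Hypothetical counter guarded by a mutex, used only to show lock scope.
class Counter {
public:
    int IncrementAndReport() {
        int snapshot;
        {
            std::unique_lock<std::mutex> lock(mMutex);  // lock acquired
            ++mnValue;
            snapshot = mnValue;
        }  // lock released here, when `lock` goes out of scope

        // Unlocked region: the mutex is free again, so try_lock() succeeds.
        mbWasFree = mMutex.try_lock();
        if (mbWasFree) mMutex.unlock();
        return snapshot;
    }

    bool mbWasFree = false;  // records whether the mutex was free after the block

private:
    int mnValue = 0;
    std::mutex mMutex;
};
```

Keeping the locked region this small matters when the work after it is slow: other threads are blocked only for the few statements that actually touch shared data.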

The System class

The System.h header

Constructor System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor, const bool bUseViewer = true)

System::System(const string &strVocFile, const string &strSettingsFile, const eSensor sensor,

const bool bUseViewer):mSensor(sensor), mpViewer(static_cast<Viewer*>(NULL)), mbReset(false),mbActivateLocalizationMode(false),

mbDeactivateLocalizationMode(false)

{

//step1. Initialize the member variables

//Check settings file

//step1.1. Read the settings file

cv::FileStorage fsSettings(strSettingsFile.c_str(), cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

cerr << "Failed to open settings file at: " << strSettingsFile << endl;

exit(-1);

}

//step1.2. Create the ORB bag-of-words vocabulary

mpVocabulary = new ORBVocabulary();

bool bVocLoad = mpVocabulary->loadFromTextFile(strVocFile);

if(!bVocLoad)

{

cerr << "Wrong path to vocabulary. " << endl;

cerr << "Falied to open at: " << strVocFile << endl;

exit(-1);

}

cout << "Vocabulary loaded!" << endl << endl;

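//step1.3. Create the keyframe database, which mainly stores an inverted index of ORB descriptors (i.e. looks up the keyframes that contain a given descriptor)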

mpKeyFrameDatabase = new KeyFrameDatabase(*mpVocabulary);

//step1.4. Create the map

mpMap = new Map();

//Create Drawers. These are used by the Viewer

mpFrameDrawer = new FrameDrawer(mpMap);

mpMapDrawer = new MapDrawer(mpMap, strSettingsFile);

//step2. Create the three main threads: Tracking, LocalMapping and LoopClosing

mpTracker = new Tracking(this, mpVocabulary, mpFrameDrawer, mpMapDrawer,

mpMap, mpKeyFrameDatabase, strSettingsFile, mSensor);

//Initialize the Local Mapping thread and launch

mpLocalMapper = new LocalMapping(mpMap, mSensor==MONOCULAR);

mptLocalMapping = new thread(&ORB_SLAM2::LocalMapping::Run,mpLocalMapper);

//Initialize the Loop Closing thread and launch

mpLoopCloser = new LoopClosing(mpMap, mpKeyFrameDatabase, mpVocabulary, mSensor!=MONOCULAR);

mptLoopClosing = new thread(&ORB_SLAM2::LoopClosing::Run, mpLoopCloser);

//Initialize the Viewer thread and launch

if(bUseViewer)

{

mpViewer = new Viewer(this, mpFrameDrawer,mpMapDrawer,mpTracker,strSettingsFile);

mptViewer = new thread(&Viewer::Run, mpViewer);

mpTracker->SetViewer(mpViewer);

}

//step3. Set up communication between the threads

//Set pointers between threads

mpTracker->SetLocalMapper(mpLocalMapper);

mpTracker->SetLoopClosing(mpLoopCloser);

mpLocalMapper->SetTracker(mpTracker);

mpLocalMapper->SetLoopCloser(mpLoopCloser);

mpLoopCloser->SetTracker(mpTracker);

mpLoopCloser->SetLocalMapper(mpLocalMapper);

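}

The LocalMapping and LoopClosing threads each have a corresponding std::thread member variable in the class; why does the Tracking thread have no std::thread member?

Because the Tracking thread is the main thread, while LocalMapping and LoopClosing are its child threads; the main thread controls the child threads through the pointers it holds to them.

Although the three threads are parent and children in the implementation, logically we regard them as concurrent; none of them controls the others.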

ORBextractor.cc

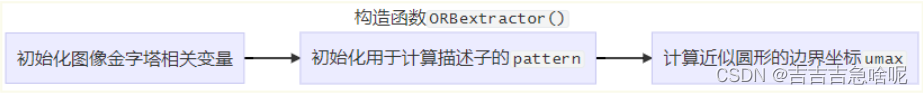

ORBextractor()

1. Initialize the variables related to the image pyramid

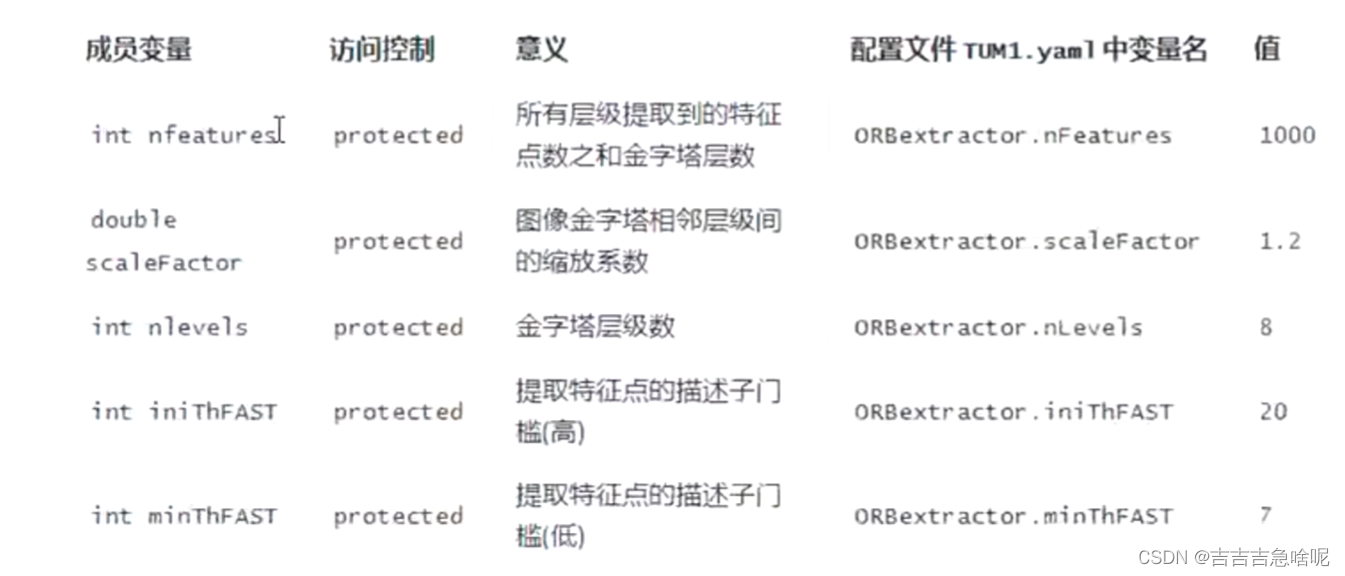

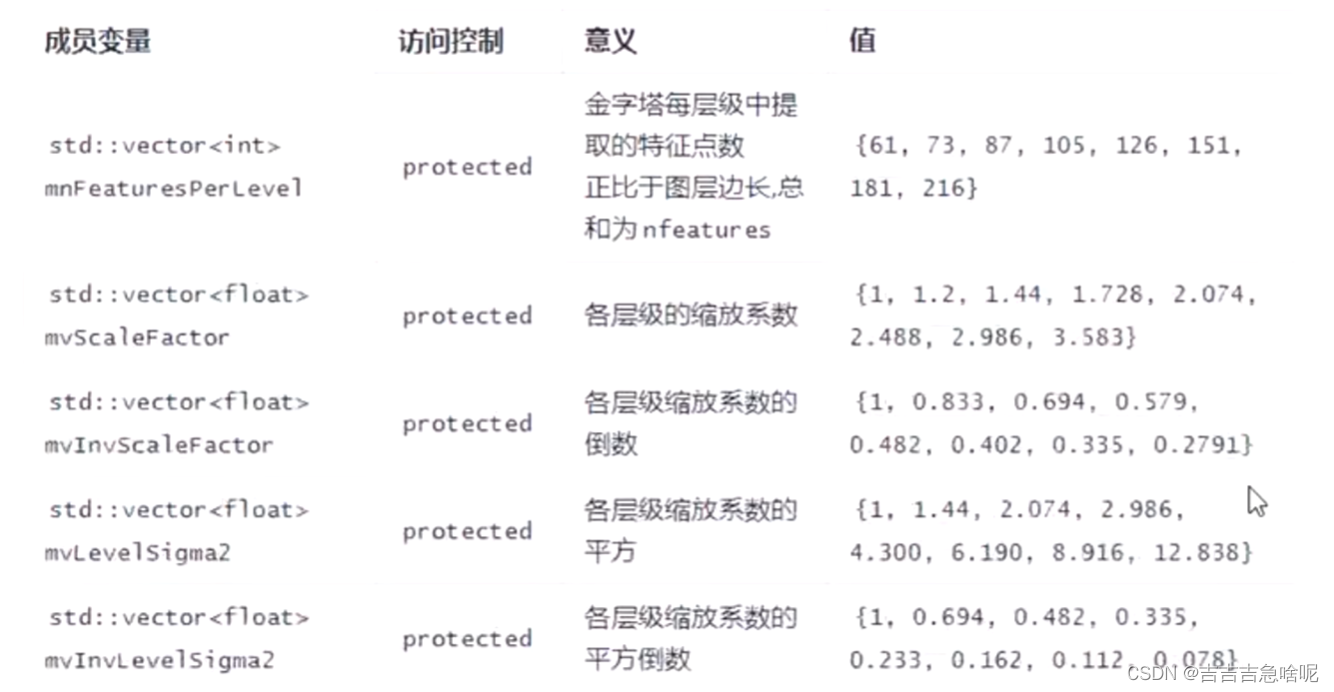

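The following member variables are read from the settings file TUM1.yaml.

The member variables below are computed from the ones above.

2. Initialize the pattern variable used for computing descriptors. pattern holds the 256 coordinate pairs used by the descriptor; the values are hard-coded in ORBextractor.cc, and the constructor casts them to a const cv::Point* variable.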

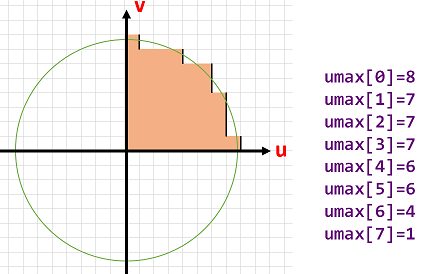

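3. Compute the approximate coordinates of a circle of radius HALF_PATCH_SIZE (the notes quote a radius of 16; umax has one entry per row v = 0..HALF_PATCH_SIZE)

Remembering the 8 black-bordered points is enough to remember the whole circle.

The member variable std::vector<int> umax actually stores, for the quarter of the approximated circle in the first quadrant, the u coordinate corresponding to each v coordinate. To guarantee strict symmetry, the points on the lower 45° arc are computed first, and the points on the upper 45° arc are then filled in by symmetry.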

// v coordinate of the intersection between the 45-degree ray and the circle

int v, v0, vmax = cvFloor(HALF_PATCH_SIZE * sqrt(2.f) / 2 + 1);

int vmin = cvCeil(HALF_PATCH_SIZE * sqrt(2.f) / 2);

const double hp2 = HALF_PATCH_SIZE*HALF_PATCH_SIZE;

// First compute umax for the lower 45-degree arc

for (v = 0; v <= vmax; ++v)

umax[v] = cvRound(sqrt(hp2 - v * v));

// Then fill in umax for the upper 45-degree arc by symmetry

for (v = HALF_PATCH_SIZE, v0 = 0; v >= vmin; --v)

{

while (umax[v0] == umax[v0 + 1])

++v0;

umax[v] = v0;

++v0;

}

ComputePyramid()

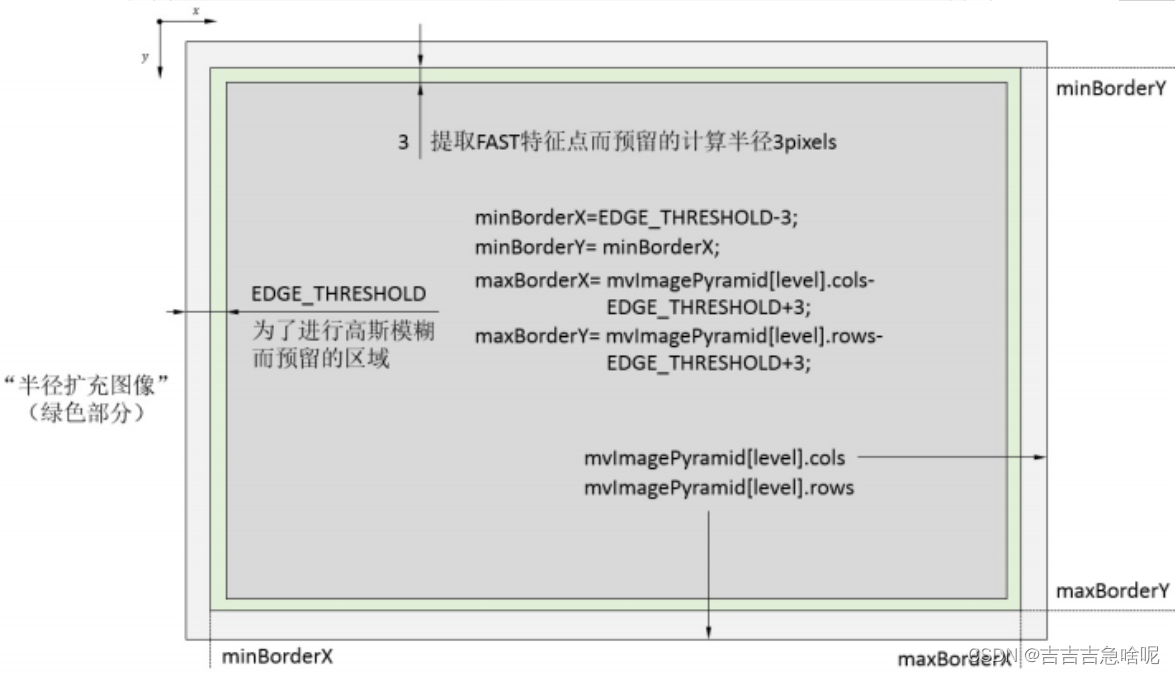

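| Member variable | Access | Meaning |
| --- | --- | --- |
| std::vector<cv::Mat> mvImagePyramid | public | Image of each pyramid level |
| const int EDGE_THRESHOLD | global variable | Thickness of the padding added for descriptor computation and feature extraction |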

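The function void ORBextractor::ComputePyramid(cv::Mat image) builds the image pyramid level by level. For each level it performs two steps:

First, resize the image to the size given by mvInvScaleFactor.

Then add a padding border of thickness 19 around the image (extracting FAST features needs a circular neighborhood of radius 3 around each point, and computing the ORB descriptor needs a circular neighborhood of radius 16).

The figure below shows the structure of each pyramid level:

Dark gray is the resized original image.

The rectangle including the green border is used for FAST feature extraction.

The whole rectangle including the light gray border is used for computing ORB descriptors.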
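As a worked example of the two steps, the sketch below computes the resized and padded size of every level without touching any image data. It assumes a constant scaleFactor between levels (so the inverse scale of level k is 1/scaleFactor^k) and EDGE_THRESHOLD = 19 as stated above; PyramidSizes and LevelSize are hypothetical names, and std::lround stands in for cvRound.

```cpp
#include <cmath>
#include <vector>

struct LevelSize { int width, height, paddedWidth, paddedHeight; };

// Sketch: per-level sizes for an input image of cols x rows pixels.
std::vector<LevelSize> PyramidSizes(int cols, int rows, float scaleFactor, int nLevels) {
    const int EDGE_THRESHOLD = 19;  // padding thickness on each side
    std::vector<LevelSize> sizes;
    float invScale = 1.0f;  // plays the role of mvInvScaleFactor[level]
    for (int level = 0; level < nLevels; ++level) {
        const int w = static_cast<int>(std::lround(cols * invScale));
        const int h = static_cast<int>(std::lround(rows * invScale));
        sizes.push_back({w, h, w + 2 * EDGE_THRESHOLD, h + 2 * EDGE_THRESHOLD});
        invScale /= scaleFactor;  // next level is smaller by scaleFactor
    }
    return sizes;
}
```

For a 640x480 input with scaleFactor 1.2, level 0 is 640x480 (678x518 with padding) and level 1 is 533x400.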

void ORBextractor::ComputePyramid(cv::Mat image)

{

for (int level = 0; level < nlevels; ++level)

{

// Size of this level after resizing and after adding the padding

float scale = mvInvScaleFactor[level];

Size sz(cvRound((float)image.cols*scale), cvRound((float)image.rows*scale));

Size wholeSize(sz.width + EDGE_THRESHOLD*2, sz.height + EDGE_THRESHOLD*2);

Mat temp(wholeSize, image.type()), masktemp;

// Resize the image, copy it into this level and add the border

mvImagePyramid[level] = temp(Rect(EDGE_THRESHOLD, EDGE_THRESHOLD, sz.width, sz.height));

// Compute the resized image

if( level != 0 )

{

resize(mvImagePyramid[level-1], mvImagePyramid[level], sz, 0, 0, INTER_LINEAR);

copyMakeBorder(mvImagePyramid[level], temp, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD,

BORDER_REFLECT_101+BORDER_ISOLATED);

}

else

{

copyMakeBorder(image, temp, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD, EDGE_THRESHOLD,

BORDER_REFLECT_101);

}

}

}

The copyMakeBorder function copies the image and fills the padding; its BORDER_REFLECT_101 argument fills the padding by mirroring.

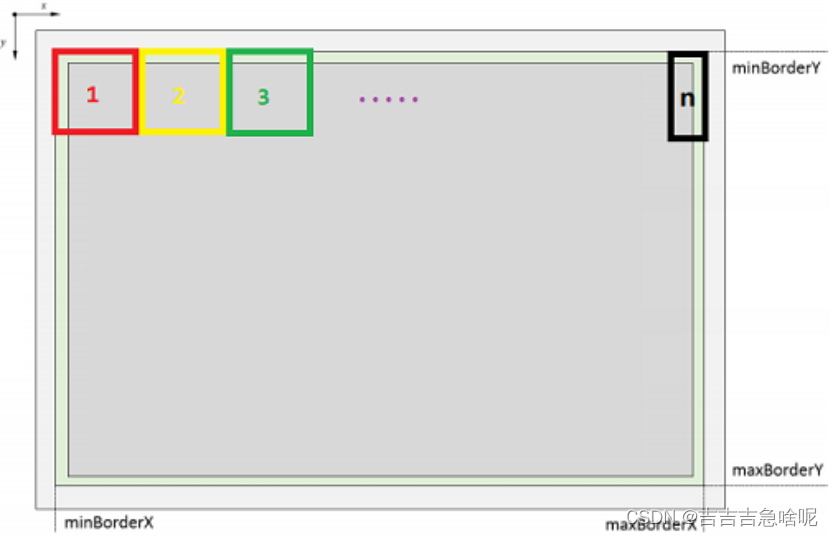

ComputeKeyPointsOctTree()

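The most important goal when extracting features is to spread them evenly over every part of the image. Two techniques are used to achieve this:

1. Search for features cell by cell; if the responses inside a cell are generally low, lower the threshold and search that cell again.

2. Filter all detected features with an octree (the function is named DistributeOctTree); where features are too dense in a region, keep only the one with the highest response (non-maximum suppression).

The cell search is sketched in the figure below. Each cell is roughly 30×30; at the image edge, when the remaining area is too small for a full cell, the last cell simply uses whatever area is left.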
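The cell layout follows from a few lines of arithmetic. The sketch below reproduces that arithmetic for one pyramid level, assuming EDGE_THRESHOLD = 19 and a target cell size W = 30 as in the notes; ComputeCellGrid and CellGrid are hypothetical names, and the level is assumed to be large enough to hold at least one cell in each direction.

```cpp
#include <cmath>

struct CellGrid { int nCols, nRows, wCell, hCell; };

// Sketch: cell layout for one pyramid level whose image is cols x rows pixels.
// Assumes the level is large enough that nCols and nRows are at least 1.
CellGrid ComputeCellGrid(int cols, int rows) {
    const int EDGE_THRESHOLD = 19;
    const float W = 30.0f;  // target cell size in pixels
    const int minBorderX = EDGE_THRESHOLD - 3;
    const int minBorderY = minBorderX;
    const int maxBorderX = cols - EDGE_THRESHOLD + 3;
    const int maxBorderY = rows - EDGE_THRESHOLD + 3;
    const float width = static_cast<float>(maxBorderX - minBorderX);
    const float height = static_cast<float>(maxBorderY - minBorderY);

    CellGrid g;
    g.nCols = static_cast<int>(width / W);   // number of cell columns
    g.nRows = static_cast<int>(height / W);  // number of cell rows
    g.wCell = static_cast<int>(std::ceil(width / g.nCols));   // cell width
    g.hCell = static_cast<int>(std::ceil(height / g.nRows));  // cell height
    return g;
}
```

For a 640x480 level this gives 20 columns and 14 rows of cells, each 31 pixels wide and 32 pixels high, which is why the notes describe the cells as "roughly" 30×30.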

void ORBextractor::ComputeKeyPointsOctTree(vector<vector<KeyPoint> >& allKeypoints)

{

allKeypoints.resize(nlevels);

const float W = 30;

for (int level = 0; level < nlevels; ++level)

{

// Compute the image borders

const int minBorderX = EDGE_THRESHOLD-3;

const int minBorderY = minBorderX;

const int maxBorderX = mvImagePyramid[level].cols-EDGE_THRESHOLD+3;

const int maxBorderY = mvImagePyramid[level].rows-EDGE_THRESHOLD+3;

// Features that still have to be distributed evenly

vector<cv::KeyPoint> vToDistributeKeys;

vToDistributeKeys.reserve(nfeatures*10);

const float width = (maxBorderX-minBorderX);

const float height = (maxBorderY-minBorderY);

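const int nCols = width/W; // number of cell columns

const int nRows = height/W; // number of cell rows

const int wCell = ceil(width/nCols); // width of each cell

const int hCell = ceil(height/nRows);// height of each cell

//step1. Visit every cell row by row and column by column, searching for FAST features with the high threshold first and then the low one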

for(int i=0; i<nRows; i++)

{

const float iniY =minBorderY+i*hCell;

float maxY = iniY+hCell+6;

if(iniY>=maxBorderY-3)

continue;

if(maxY>maxBorderY)

maxY = maxBorderY;

for(int j=0; j<nCols; j++)

{

const float iniX =minBorderX+j*wCell;

float maxX = iniX+wCell+6;

if(iniX>=maxBorderX-6)

continue;

if(maxX>maxBorderX)

maxX = maxBorderX;

vector<cv::KeyPoint> vKeysCell;

// First search for FAST features with the high threshold

FAST(mvImagePyramid[level].rowRange(iniY,maxY).colRange(iniX,maxX),

vKeysCell,iniThFAST,true);

// If nothing is found with the high threshold, search again with the low threshold

if(vKeysCell.empty())

{

FAST(mvImagePyramid[level].rowRange(iniY,maxY).colRange(iniX,maxX),

vKeysCell,minThFAST,true);

}

if(!vKeysCell.empty())

{

// Append all features found in vKeysCell to the container vToDistributeKeys

for(vector<cv::KeyPoint>::iterator vit=vKeysCell.begin(); vit!=vKeysCell.end();vit++)

{

(*vit).pt.x+=j*wCell;

(*vit).pt.y+=i*hCell;

vToDistributeKeys.push_back(*vit);

}

}

}

}

vector<KeyPoint> & keypoints = allKeypoints[level];

keypoints.reserve(nfeatures);

//step2. Filter the extracted features with the octree

keypoints = DistributeOctTree(vToDistributeKeys, minBorderX, maxBorderX,

minBorderY, maxBorderY,mnFeaturesPerLevel[level], level);

const int scaledPatchSize = PATCH_SIZE*mvScaleFactor[level];

// Add border to coordinates and scale information

const int nkps = keypoints.size();

for(int i=0; i<nkps ; i++)

{

keypoints[i].pt.x+=minBorderX;

keypoints[i].pt.y+=minBorderY;

keypoints[i].octave=level;

keypoints[i].size = scaledPatchSize;

}

}

// Compute the orientation of every feature

for (int level = 0; level < nlevels; ++level)

computeOrientation(mvImagePyramid[level], allKeypoints[level], umax);

}

static void computeOrbDescriptor(const KeyPoint& kpt,

const Mat& img, const Point* pattern,

uchar* desc)

{

float angle = (float)kpt.angle*factorPI;

float a = (float)cos(angle), b = (float)sin(angle);

const uchar* center = &img.at<uchar>(cvRound(kpt.pt.y), cvRound(kpt.pt.x));

const int step = (int)img.step;

#define GET_VALUE(idx) \

center[cvRound(pattern[idx].x*b + pattern[idx].y*a)*step + \

cvRound(pattern[idx].x*a - pattern[idx].y*b)]

for (int i = 0; i < 32; ++i, pattern += 16)

{

int t0, t1, val;

t0 = GET_VALUE(0); t1 = GET_VALUE(1);

val = t0 < t1; // bit 0 of this descriptor byte

t0 = GET_VALUE(2); t1 = GET_VALUE(3);

val |= (t0 < t1) << 1; // bit 1 of this descriptor byte

t0 = GET_VALUE(4); t1 = GET_VALUE(5);

val |= (t0 < t1) << 2; // bit 2 of this descriptor byte

t0 = GET_VALUE(6); t1 = GET_VALUE(7);

val |= (t0 < t1) << 3; // bit 3 of this descriptor byte

t0 = GET_VALUE(8); t1 = GET_VALUE(9);

val |= (t0 < t1) << 4; // bit 4 of this descriptor byte

t0 = GET_VALUE(10); t1 = GET_VALUE(11);

val |= (t0 < t1) << 5; // bit 5 of this descriptor byte

t0 = GET_VALUE(12); t1 = GET_VALUE(13);

val |= (t0 < t1) << 6; // bit 6 of this descriptor byte

t0 = GET_VALUE(14); t1 = GET_VALUE(15);

val |= (t0 < t1) << 7; // bit 7 of this descriptor byte

// Store this byte of the descriptor

desc[i] = (uchar)val;

}

#undef GET_VALUE

}

Main function of the ORBextractor class: void operator()()

ORBextractor is used as the first step of preprocessing each input image in the Tracking thread.

void ORBextractor::operator()( InputArray _image, InputArray _mask, vector<KeyPoint>& _keypoints,

OutputArray _descriptors)

{

//step1. Check that the image is valid

if(_image.empty())

return;

Mat image = _image.getMat();

assert(image.type() == CV_8UC1 );

//step2. Build the image pyramid

ComputePyramid(image);

//step3. Detect features and filter them with the octree

vector < vector<KeyPoint> > allKeypoints;

ComputeKeyPointsOctTree(allKeypoints);

//ComputeKeyPointsOld(allKeypoints);

Mat descriptors;

int nkeypoints = 0;

for (int level = 0; level < nlevels; ++level)

nkeypoints += (int)allKeypoints[level].size();

if( nkeypoints == 0 )

_descriptors.release();

else

{

_descriptors.create(nkeypoints, 32, CV_8U);

descriptors = _descriptors.getMat();

}

_keypoints.clear();

_keypoints.reserve(nkeypoints);

//step4. Loop over the pyramid levels and compute the descriptors

int offset = 0;

for (int level = 0; level < nlevels; ++level)

{

vector<KeyPoint>& keypoints = allKeypoints[level];

int nkeypointsLevel = (int)keypoints.size();

if(nkeypointsLevel==0)

continue;

// preprocess the resized image

Mat workingMat = mvImagePyramid[level].clone();

// Apply a Gaussian blur before computing the descriptors

GaussianBlur(workingMat, workingMat, Size(7, 7), 2, 2, BORDER_REFLECT_101);

// Compute the descriptors

Mat desc = descriptors.rowRange(offset, offset + nkeypointsLevel);

computeDescriptors(workingMat, keypoints, desc, pattern);

offset += nkeypointsLevel;

// Scale keypoint coordinates

if (level != 0)

{

float scale = mvScaleFactor[level]; //getScale(level, firstLevel, scaleFactor);

for (vector<KeyPoint>::iterator keypoint = keypoints.begin(),

keypointEnd = keypoints.end(); keypoint != keypointEnd; ++keypoint)

keypoint->pt *= scale;

}

// And add the keypoints to the output

_keypoints.insert(_keypoints.end(), keypoints.begin(), keypoints.end());

}

}

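This function overloads the () operator, so other classes can use an ORBextractor object as if it were a function. It is the main function of ORBextractor and calls each of the procedures described above in turn.

The overloaded () operator is used in Frame::ExtractORB(), which is the only place in the whole project where ORBextractor is invoked.

// In this function, `mpORBextractorLeft` and `mpORBextractorRight` are both pointers to `ORBextractor` objects

// (*ptr) dereferences the pointer to get the object itself, which is then called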

void Frame::ExtractORB(int flag, const cv::Mat &im) {

if(flag==0)

(*mpORBextractorLeft)(im, cv::Mat(), mvKeys, mDescriptors);

else

(*mpORBextractorRight)(im,cv::Mat(),mvKeysRight,mDescriptorsRight);

}
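The calling pattern (*ptr)(args) can be tried on a toy functor. The Scaler class below is hypothetical and has nothing to do with ORB features; it only shows that overloading operator() lets an object be invoked like a function, including through a dereferenced pointer as in ExtractORB().

```cpp
#include <vector>

// Hypothetical functor; it only illustrates operator() overloading.
class Scaler {
public:
    explicit Scaler(float factor) : mfFactor(factor) {}

    // Invoking an instance like a function runs this method.
    void operator()(const std::vector<float>& in, std::vector<float>& out) const {
        out.clear();
        for (float v : in) out.push_back(v * mfFactor);
    }

private:
    float mfFactor;
};

// Same pattern as (*mpORBextractorLeft)(...): dereference the pointer, then call.
std::vector<float> ScaleThroughPointer(const Scaler* pScaler, const std::vector<float>& in) {
    std::vector<float> out;
    (*pScaler)(in, out);
    return out;
}
```

The parentheses around *pScaler are required: without them the call operator would bind to the pointer before the dereference.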

// The two ORBextractor pointers of Frame are passed in through the constructor, which calls ExtractORB() to extract the features

Frame::Frame(ORBextractor *extractorLeft, ORBextractor *extractorRight)

: mpORBextractorLeft(extractorLeft), mpORBextractorRight(extractorRight) {

// ...

// Extract ORB features

thread threadLeft(&Frame::ExtractORB, this, 0, imLeft);

thread threadRight(&Frame::ExtractORB, this, 1, imRight);

threadLeft.join();

threadRight.join();

// Wait for both child threads to finish before continuing in the main thread

// ...

}

The objects that the two ORBextractor pointers of Frame point to are created in the constructor of the Tracking class.

// Tracking constructor

Tracking::Tracking() {

// ...

// Create the two ORB feature extractors

mpORBextractorLeft = new ORBextractor(nFeatures, fScaleFactor, nLevels, fIniThFAST, fMinThFAST);

if (sensor == System::STEREO)

mpORBextractorRight = new ORBextractor(nFeatures, fScaleFactor, nLevels, fIniThFAST, fMinThFAST);

// ...

}

// For every input image the Tracking thread creates a Frame object, passing the extractors mpORBextractorLeft and mpORBextractorRight to the constructor

cv::Mat Tracking::GrabImageStereo(const cv::Mat &imRectLeft, const cv::Mat &imRectRight, const double &timestamp) {

// ...

// Create the Frame object

mCurrentFrame = Frame(mImGray, imGrayRight, timestamp, mpORBextractorLeft, mpORBextractorRight);

// ...

}

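The code above shows that once ORB extraction for a frame is finished, its image pyramid is discarded: when the next frame arrives, ComputePyramid() overwrites the pyramid of the current frame. The features extracted from the pyramid, however, are stored in the Frame object. This is why ORB-SLAM2 is a sparse reconstruction: for each frame it keeps at most nfeatures features (and their corresponding map points).

nObs should be a private variable with a public getter.

The member variable std::map<KeyFrame*, size_t> mObservations records which keyframes observe the current map point. std::map is a key-value structure: the key is a keyframe, and the value is the index of the current map point in that keyframe (its index in the keyframe's member variable std::vector<MapPoint*> mvpMapPoints).

The member int nObs records how many keyframe cameras observe the current map point (each observation from a monocular keyframe counts as 1 camera, each observation from a stereo/RGB-D keyframe counts as 2 cameras).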

// Record that keyframe pKF observes this map point; idx is the index of the map point in pKF

void MapPoint::AddObservation(KeyFrame* pKF, size_t idx) {

unique_lock<mutex> lock(mMutexFeatures);

// If this observation was already added, return

if(mObservations.count(pKF))

return;

// Otherwise record the keyframe that observes this MapPoint and the MapPoint's index in that keyframe

mObservations[pKF]=idx;

// Update the observation counter nObs depending on whether the observation is monocular or stereo

if(pKF->mvuRight[idx]>=0)

nObs += 2;

else

nObs++;

}

// Remove the observation of this map point by keyframe pKF

void MapPoint::EraseObservation(KeyFrame* pKF) {

bool bBad=false;

{

unique_lock<mutex> lock(mMutexFeatures);

// Look up the observation to delete and decrease the observation count by 1 or 2, depending on whether it is monocular or stereo

if(mObservations.count(pKF)) {

if(pKF->mvuRight[mObservations[pKF]]>=0)

nObs-=2;

else

nObs--;

mObservations.erase(pKF);

// If this keyframe is the reference keyframe, choose a new reference keyframe after deleting it

if(mpRefKF == pKF)

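mpRefKF = mObservations.begin()->first; // ??? is it really fine to pick the reference keyframe this casually?

// When the point is observed by 2 or fewer cameras, discard it (at least two observations are needed for triangulation)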

if(nObs<=2)

bBad=true;

}

}

if(bBad)

// Tell the keyframes that observe this MapPoint that it has been deleted

SetBadFlag();

}

The functions GetIndexInKeyFrame() and IsInKeyFrame() are simple lookups in mObservations.

int MapPoint::GetIndexInKeyFrame(KeyFrame *pKF) {

unique_lock<mutex> lock(mMutexFeatures);

if(mObservations.count(pKF))

return mObservations[pKF];

else

return -1;

}

bool MapPoint::IsInKeyFrame(KeyFrame *pKF) {

unique_lock<mutex> lock(mMutexFeatures);

return (mObservations.count(pKF));

}

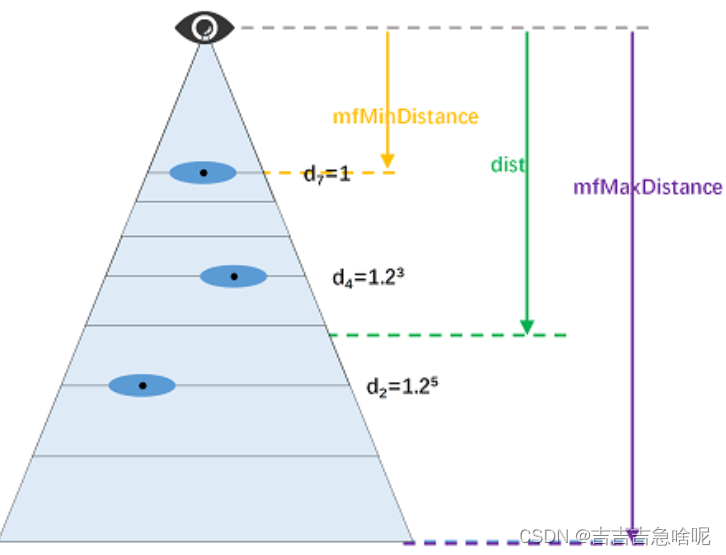

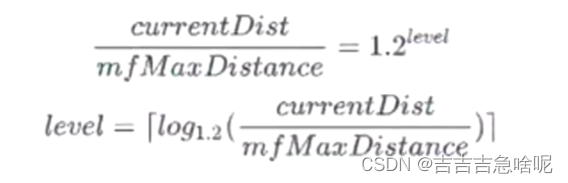

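Average observation distance: mfMinDistance and mfMaxDistance

The observation distance of a feature scales with the scale factor of the pyramid level it was detected on. Intuitively, if an image region can only be recognized after being enlarged, that region was observed from far away.

The upper and lower bounds of a feature's average observation distance are stored in the member variables mfMaxDistance and mfMinDistance:

mfMaxDistance is the observation distance at which the map point would match a feature on level 0 of the extractor's image pyramid.

mfMinDistance is the observation distance at which the map point would match a feature on level 7 of the extractor's image pyramid.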
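A quick numeric check of these two bounds, written as a sketch: it assumes a constant scaleFactor between levels, so that mvScaleFactors[level] equals scaleFactor^level. ObservationRange and DistanceRange are hypothetical names.

```cpp
#include <cmath>

struct DistanceRange { float maxDistance, minDistance; };

// Sketch: bounds on the observation distance of a map point seen at distance
// `dist` by a feature detected on pyramid level `level`.
DistanceRange ObservationRange(float dist, int level, float scaleFactor, int nLevels) {
    DistanceRange r;
    // Distance at which the point would appear on level 0.
    r.maxDistance = dist * std::pow(scaleFactor, static_cast<float>(level));
    // Distance at which the point would appear on the top level (nLevels - 1).
    r.minDistance = r.maxDistance / std::pow(scaleFactor, static_cast<float>(nLevels - 1));
    return r;
}
```

With dist = 5, level = 2, scaleFactor = 1.2 and 8 levels, the maximum is 5 × 1.2² = 7.2 and the minimum is 7.2 / 1.2⁷ ≈ 2.01.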

void MapPoint::UpdateNormalAndDepth() {

// step1. Take a snapshot of the map point's data

map<KeyFrame *, size_t> observations;

KeyFrame *pRefKF;

cv::Mat Pos;

{

unique_lock<mutex> lock1(mMutexFeatures);

unique_lock<mutex> lock2(mMutexPos);

observations = mObservations;

pRefKF = mpRefKF;

Pos = mWorldPos.clone();

}

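// step2. Average the unit viewing directions over all keyframes that observe this map point

cv::Mat normal = cv::Mat::zeros(3, 1, CV_32F);

int n = 0;

for (map<KeyFrame *, size_t>::iterator mit = observations.begin(), mend = observations.end(); mit != mend; mit++) {

KeyFrame *pKF = mit->first;

cv::Mat normali = Pos - pKF->GetCameraCenter(); // vector from the camera center to the point

normal = normal + normali / cv::norm(normali);

n++;

}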

// step3. Compute the average observation distance from the reference keyframe

cv::Mat PC = Pos - pRefKF->GetCameraCenter();

const float dist = cv::norm(PC);

const int level = pRefKF->mvKeysUn[observations[pRefKF]].octave;

const float levelScaleFactor = pRefKF->mvScaleFactors[level];

const int nLevels = pRefKF->mnScaleLevels;

{

unique_lock<mutex> lock3(mMutexPos);

mfMaxDistance = dist * levelScaleFactor;

mfMinDistance = mfMaxDistance / pRefKF->mvScaleFactors[nLevels - 1];

mNormalVector = normal / n;

}

}

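The average observation distance of a map point is computed from its reference keyframe, so how is the reference keyframe KeyFrame* mpRefKF chosen?

- In the constructor, the keyframe that creates the map point is set as its reference keyframe.

- If the observation of the current map point by its reference keyframe is deleted (EraseObservation(KeyFrame* pKF)), the first keyframe that observes the current map point becomes the reference keyframe.

When MapPoint::UpdateNormalAndDepth() is called:

1. When a map point is created, UpdateNormalAndDepth() is called to initialize its observation info.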

pNewMP->AddObservation(pKF, i);

pKF->AddMapPoint(pNewMP, i);

pNewMP->ComputeDistinctiveDescriptors();

pNewMP->UpdateNormalAndDepth(); // update the average viewing direction and distance

mpMap->AddMapPoint(pNewMP);

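2. When the observations mObservations of a map point change (when tracking the local map adds or removes keyframe observations, when the LocalMapping thread culls redundant keyframes, or during **loop correction in the LoopClosing thread**), UpdateNormalAndDepth() is called to update its observation info.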

pMP->AddObservation(mpCurrentKeyFrame, i);

pMP->UpdateNormalAndDepth();

3. When the world position mWorldPos of a map point changes (after BA optimization), UpdateNormalAndDepth() is called to update its observation info.

pMP->SetWorldPos(cvCorrectedP3Dw);

pMP->UpdateNormalAndDepth();

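In one sentence: whenever the map point itself or the keyframe observations of that map point change, MapPoint::UpdateNormalAndDepth() should be called to update its observation scale and direction.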

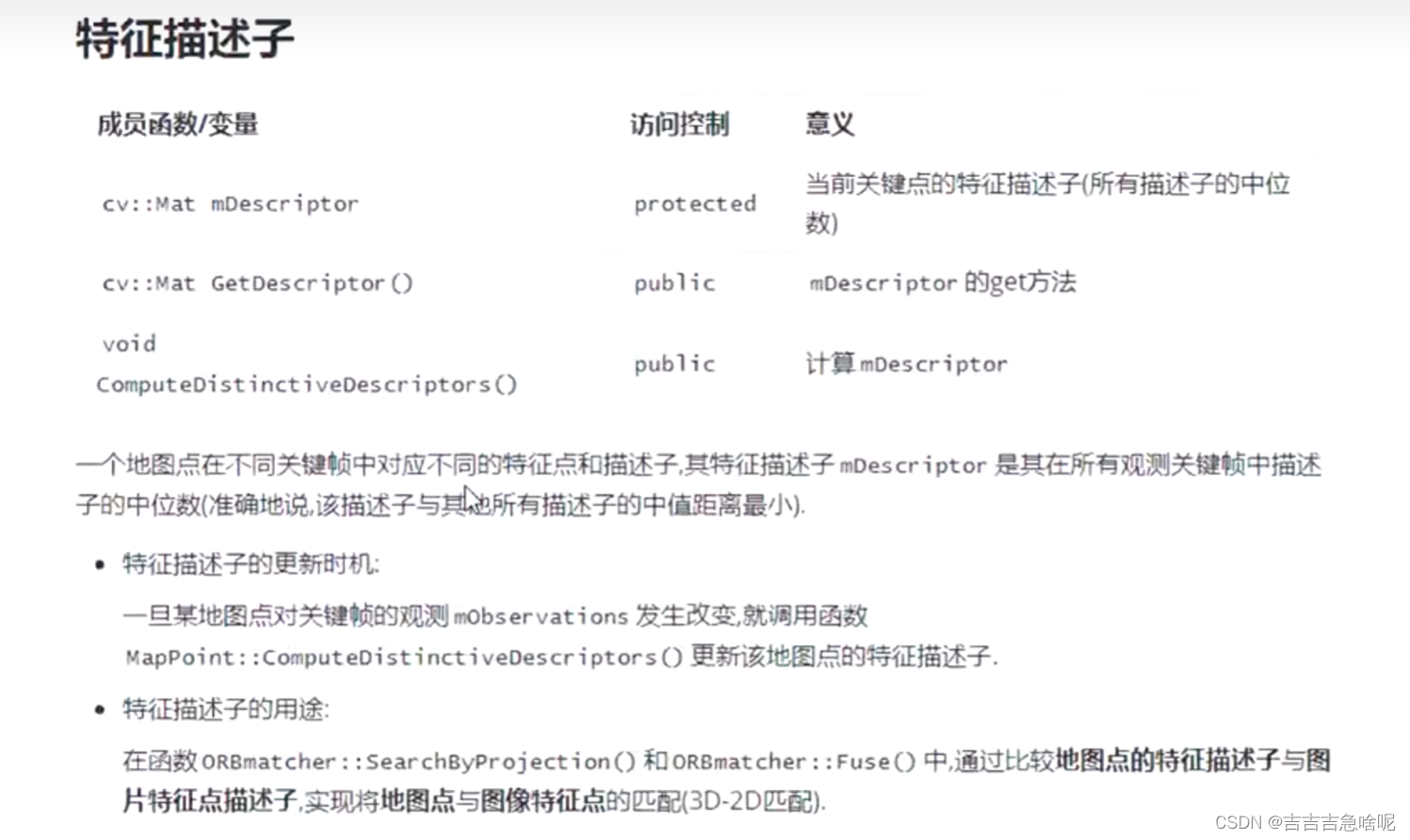

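The current map point corresponds to a feature in several keyframes, and each of those features has a descriptor. A representative descriptor is chosen among them: the one that is closest overall to the descriptors of the other features (smallest total distance in these notes).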
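The selection can be sketched without OpenCV by treating each ORB descriptor as 32 bytes and using the Hamming distance. Note one assumption: this sketch ranks candidates by their median distance to all descriptors (including the zero distance to themselves) rather than by the sum, which is, to the best of my knowledge, what the ORB-SLAM2 source does; the median is less sensitive to a single outlier. MostRepresentative and the Descriptor alias are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

using Descriptor = std::array<std::uint8_t, 32>;  // 256-bit ORB descriptor

// Hamming distance: number of bits that differ between two descriptors.
int HammingDistance(const Descriptor& a, const Descriptor& b) {
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    return dist;
}

// Index of the descriptor with the smallest median distance to all descriptors
// (the first such index on ties). Returns 0 for an empty input.
std::size_t MostRepresentative(const std::vector<Descriptor>& descs) {
    std::size_t bestIdx = 0;
    int bestMedian = std::numeric_limits<int>::max();
    for (std::size_t i = 0; i < descs.size(); ++i) {
        std::vector<int> dists;
        for (std::size_t j = 0; j < descs.size(); ++j)
            dists.push_back(HammingDistance(descs[i], descs[j]));
        std::sort(dists.begin(), dists.end());
        const int median = dists[(dists.size() - 1) / 2];
        if (median < bestMedian) {
            bestMedian = median;
            bestIdx = i;
        }
    }
    return bestIdx;
}
```

Swapping the median for a sum only changes the two lines that compute the score; the surrounding loop stays the same.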

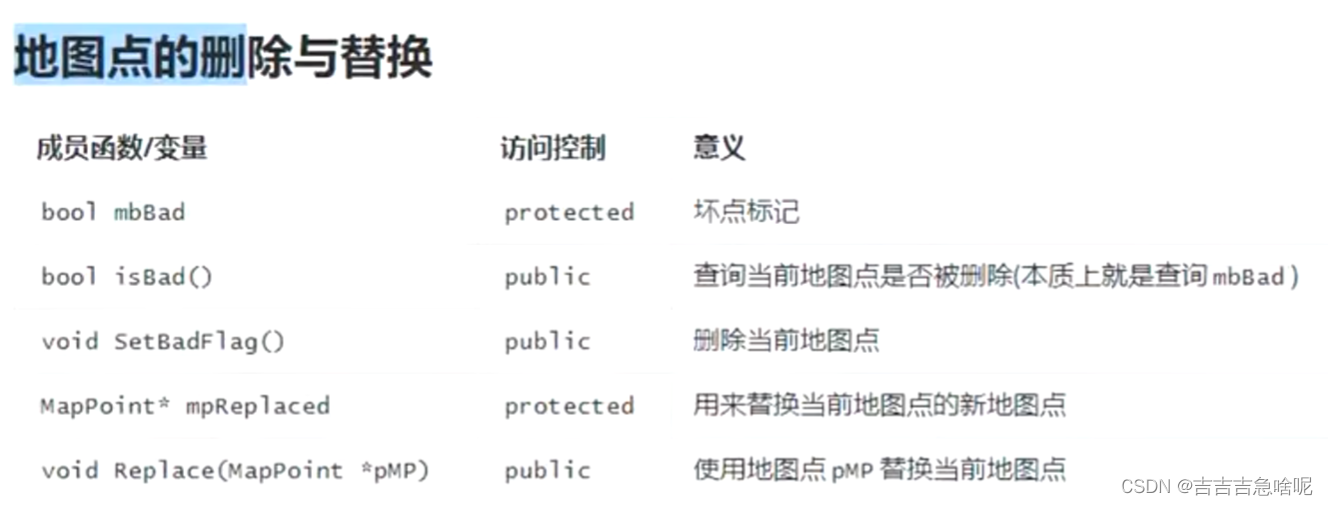

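Deleting a map point: SetBadFlag()

The variable mbBad indicates whether the current map point has been deleted.

Clearing the member variables of a map point is relatively time-consuming, so SetBadFlag() deletes a map point by marking it first and cleaning up afterwards. The deletion has two steps:

First set the bad flag mbBad to true, deleting the map point logically. (The map point's social death.)

Then clear the member variables of the map point one by one, deleting it physically. (The map point's physical death.)

This way a lock is needed only while setting the bad flag mbBad; the later operations do not need a lock.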

void MapPoint::SetBadFlag() {

map<KeyFrame *, size_t> obs;

{

unique_lock<mutex> lock1(mMutexFeatures);

unique_lock<mutex> lock2(mMutexPos);

mbBad = true; // set mbBad: the map point is now logically deleted

obs = mObservations;

mObservations.clear();

}

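// Remove this map point from every keyframe that observes it

for (map<KeyFrame *, size_t>::iterator mit = obs.begin(), mend = obs.end(); mit != mend; mit++) {

KeyFrame *pKF = mit->first;

pKF->EraseMapPointMatch(mit->second);

}

// Unregister this map point from the map; the MapPoint object itself is not freed here, so its memory leaks

mpMap->EraseMapPoint(this);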

}

int KeyFrame::TrackedMapPoints(const int &minObs) {

// ...

for (int i = 0; i < N; i++) {

MapPoint *pMP = mvpMapPoints[i];

if (pMP && !pMP->isBad()) { // check in turn whether the map point is physically and logically deleted; if so, skip it

// ...

}

}

// ...

}

Replacing a map point: Replace()

The function Replace(MapPoint* pMP) merges the member variables of the current map point into the new map point pMP.

void MapPoint::Replace(MapPoint *pMP) {

// Nothing to do if it is the same map point

if (pMP->mnId == this->mnId)

return;

// step1. Logically delete the current map point

int nvisible, nfound;

map<KeyFrame *, size_t> obs;

{

unique_lock<mutex> lock1(mMutexFeatures);

unique_lock<mutex> lock2(mMutexPos);

obs = mObservations;

mObservations.clear();

mbBad = true;

nvisible = mnVisible;

nfound = mnFound;

mpReplaced = pMP;

}

// step2. Merge the data of the current map point into the new map point

for (map<KeyFrame *, size_t>::iterator mit = obs.begin(), mend = obs.end(); mit != mend; mit++) {

KeyFrame *pKF = mit->first;

if (!pMP->IsInKeyFrame(pKF)) {

pKF->ReplaceMapPointMatch(mit->second, pMP);

pMP->AddObservation(pKF, mit->second);

} else {

pKF->EraseMapPointMatch(mit->second);

}

}

pMP->IncreaseFound(nfound);

pMP->IncreaseVisible(nvisible);

pMP->ComputeDistinctiveDescriptors();

// step3. Delete the current map point

mpMap->EraseMapPoint(this);

}

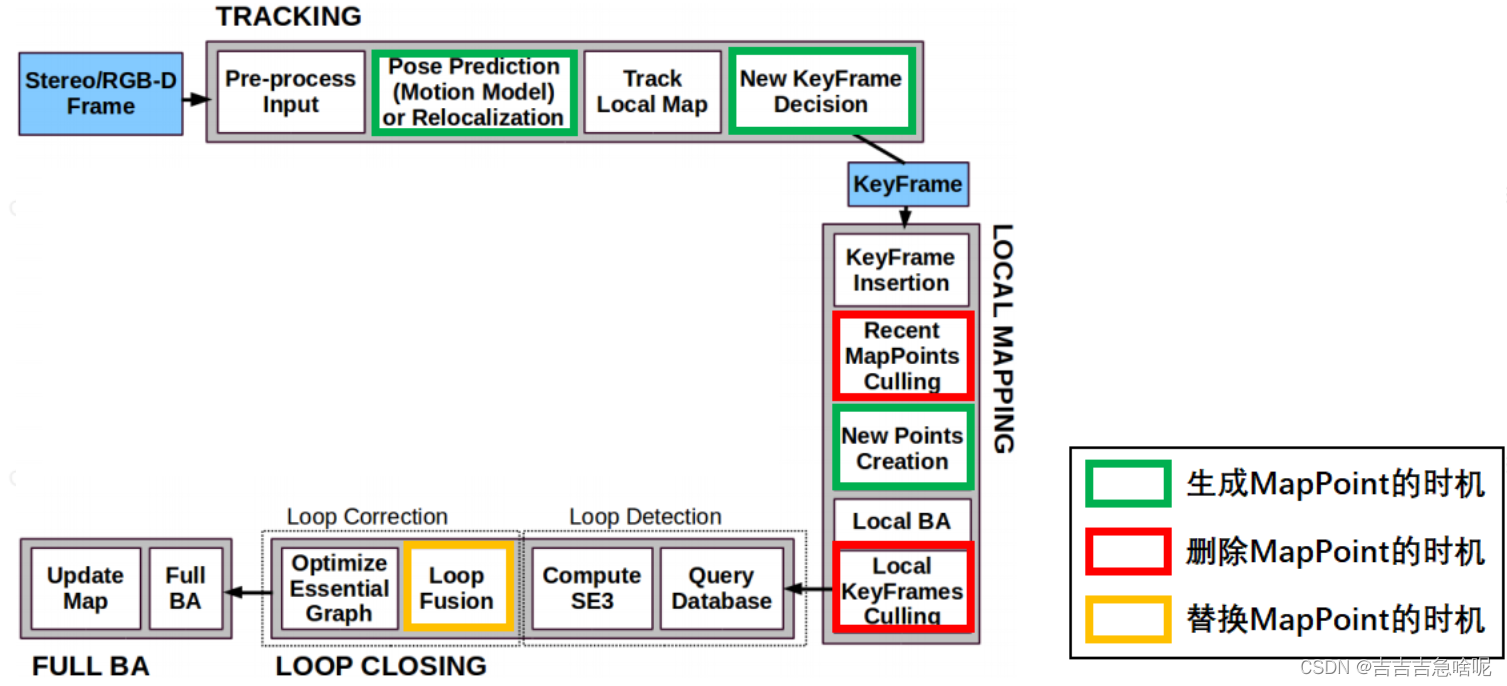

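What the MapPoint class is used for

The lifecycle of a MapPoint

Regarding the lifecycle of a MapPoint, three questions matter:

- When a MapPoint is created:

  - During initialization in the Tracking thread (Tracking::MonocularInitialization() and Tracking::StereoInitialization()).

  - When the Tracking thread creates a new keyframe (Tracking::CreateNewKeyFrame()).

  - Tracking with the constant-velocity motion model in the Tracking thread (Tracking::TrackWithMotionModel()) also creates temporary map points, but they are deleted right after tracking succeeds. (What if tracking fails? Then no keyframe is created, and these map points are never registered in the map.)

  - The map point creation step of the LocalMapping thread (LocalMapping::CreateNewMapPoints()) matches the current keyframe against the previous keyframe and generates new map points.

- When a MapPoint is deleted:

  - The bad map point culling step of the LocalMapping thread (LocalMapping::MapPointCulling()).

  - The keyframe deletion function KeyFrame::SetBadFlag() calls MapPoint::EraseObservation() to remove the keyframe's observation of the map point. If too few observations remain (nObs <= 2 in the code above), the map point can no longer be triangulated and is deleted.

- When a MapPoint is replaced:

  - During loop correction in the LoopClosing thread (LoopClosing::CorrectLoop()), when map points of the current keyframe conflict with those of the loop keyframe, the loop keyframe's map points replace the current keyframe's map points.

  - The loop correction function LoopClosing::CorrectLoop() calls LoopClosing::SearchAndFuse(), which projects all map points of the loop keyframe's covisible keyframe group into the current keyframe's covisible keyframe group; conflicting map points are replaced.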

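Frame

In fact, every attribute of an ordinary frame also exists in a keyframe.

In the design, however, the author wrote ordinary frames and keyframes as two unrelated classes. This is not ideal because it produces a lot of redundant code; composition would have been better, with the ordinary frame embedded in the keyframe.

Whether a feature counts as a stereo feature or a monocular feature is decided by comparing its depth with baseline × ThDepth: if the depth is larger, the depth is considered too inaccurate and is discarded, and the feature is treated as a monocular feature.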

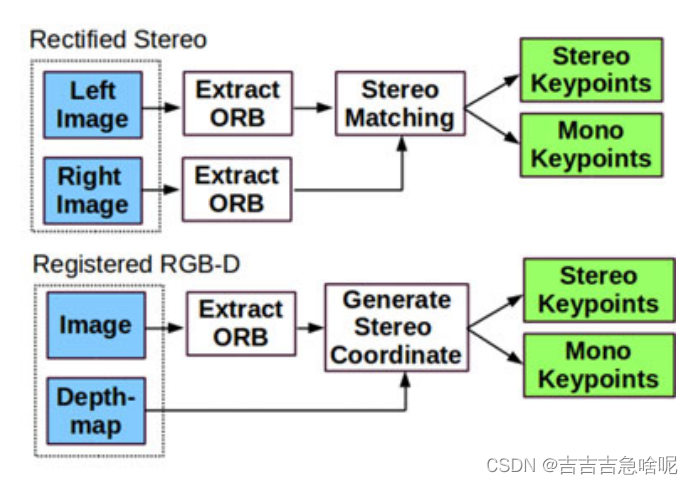

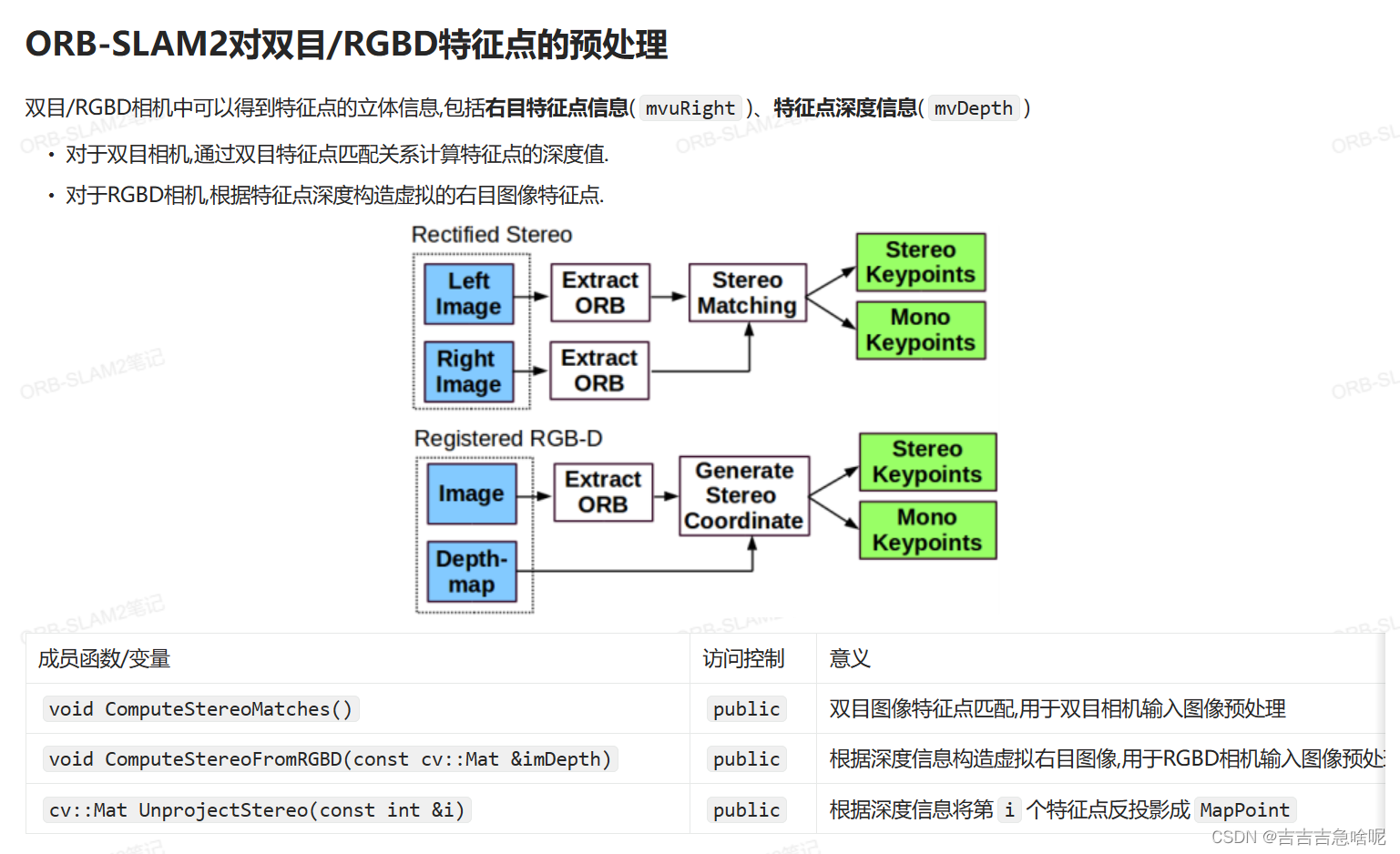

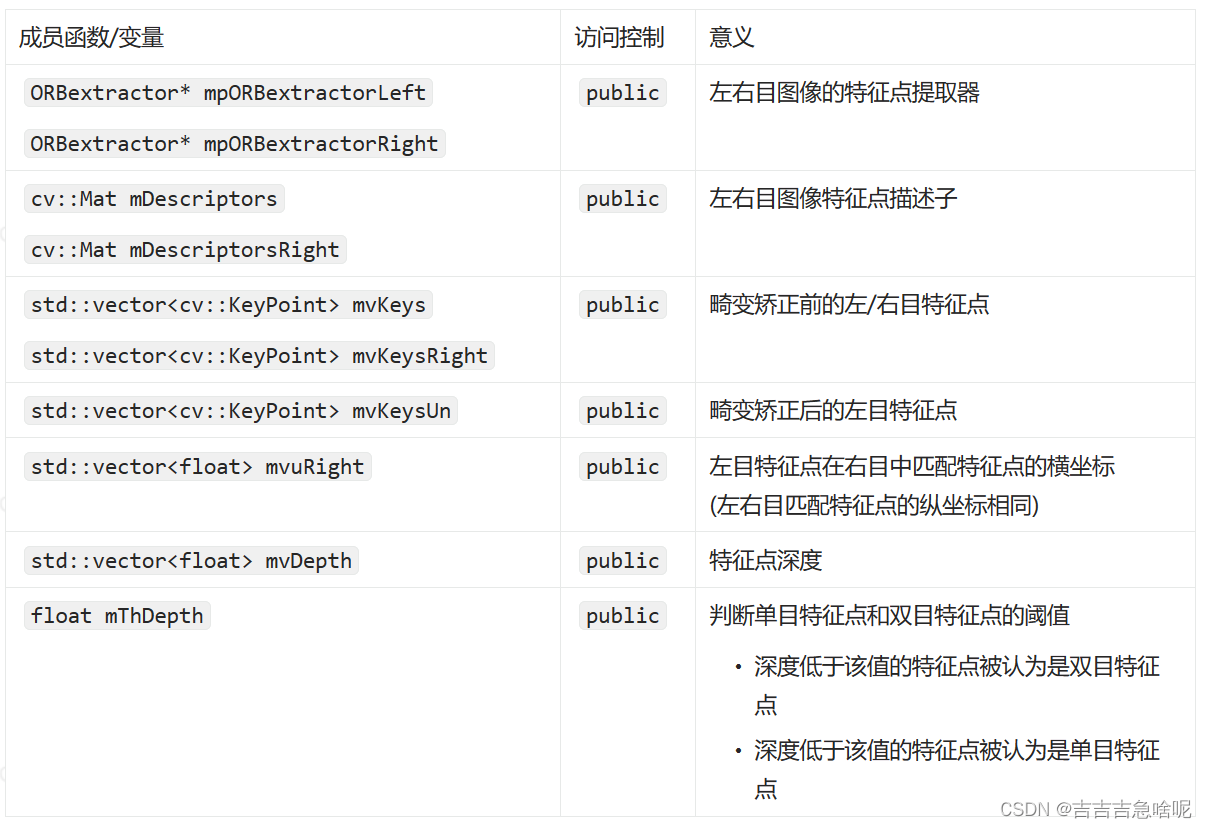

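For an RGB-D camera, the left image is the color image from which features are extracted and the right image is the depth map; the disparity can be computed backwards from the depth.

In other words, stereo and RGB-D input are both treated as stereo.

mK, mb and mbf should presumably all be static, assigned once when the first Frame object is created.

bf is used in the stereo disparity formula.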

Tracking::Tracking(const string &strSettingPath, ...) {

// Read the camera parameters from the settings file and build the intrinsic matrix

cv::FileStorage fSettings(strSettingPath, cv::FileStorage::READ);

float fx = fSettings["Camera.fx"];

float fy = fSettings["Camera.fy"];

float cx = fSettings["Camera.cx"];

float cy = fSettings["Camera.cy"];

cv::Mat K = cv::Mat::eye(3, 3, CV_32F);

K.at<float>(0, 0) = fx;

K.at<float>(1, 1) = fy;

K.at<float>(0, 2) = cx;

K.at<float>(1, 2) = cy;

K.copyTo(mK);

// ...

}

// This function is called once for every incoming image

cv::Mat Tracking::GrabImageStereo(..., const cv::Mat &imRectLeft, const cv::Mat &imRectRight, const double &timestamp) {

mCurrentFrame = Frame(mImGray, mK, mDistCoef, mbf, mThDepth);

Track();

// ...

}

// Frame constructor

Frame::Frame(cv::Mat &K, cv::Mat &distCoef, const float &bf, const float &thDepth)

: mK(K.clone()), mDistCoef(distCoef.clone()), mbf(bf), mThDepth(thDepth) {

// ...

// On the first call of the Frame() constructor, assign all static variables

if (mbInitialComputations) {

fx = K.at<float>(0, 0);

fy = K.at<float>(1, 1);

cx = K.at<float>(0, 2);

cy = K.at<float>(1, 2);

invfx = 1.0f / fx;

invfy = 1.0f / fy;

// ...

mbInitialComputations = false; // clear the flag once the static variables are assigned

}

mb = mbf / fx;

}

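Feature extraction

The Frame constructor calls the () operator of the members mpORBextractorLeft and mpORBextractorRight to extract features.

mvKeys, mvKeysUn, mvuRight and mvDepth share the same indexing, i.e. for the i-th image feature:

- Its left-image keypoint before undistortion is mvKeys[i].

- Its left-image keypoint after undistortion is mvKeysUn[i].

- The x coordinate of its matching feature in the right image is mvuRight[i]; the y coordinate is the same as that of mvKeys[i].

- Its depth is mvDepth[i].

For monocular features (features from a monocular camera, or left-image features with no match in the right image), mvuRight and mvDepth are both -1.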

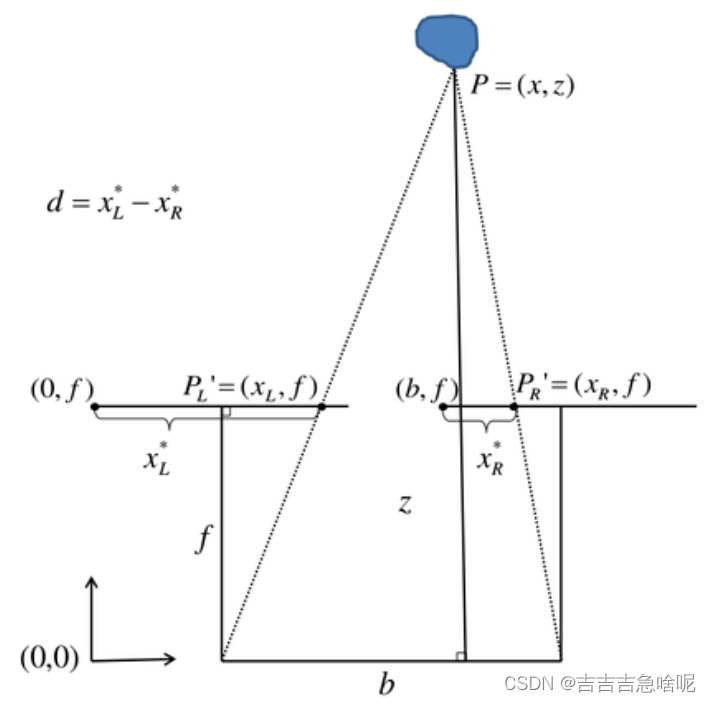

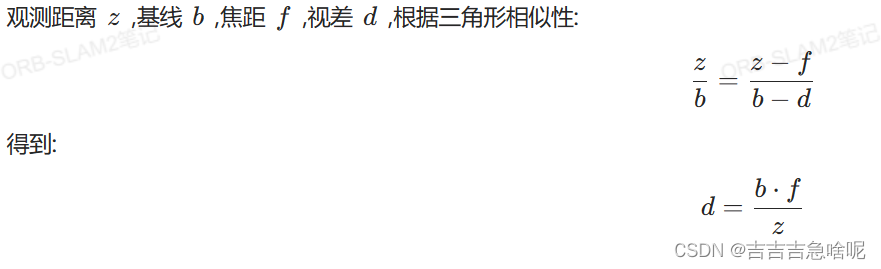

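The difference between the two x coordinates is called the disparity.

After features are extracted from the left and right images separately, a stereo camera matches them and estimates feature depth from the stereo disparity. The stereo matching steps are:

- Coarse matching: decide matches by descriptor distance and pyramid level. The search is done row by row: for each feature in the left image, candidate matches are looked up on the corresponding rows of the right image.

- Fine matching: decide matches by the similarity of the image window around the feature.

- Sub-pixel interpolation: fit a parabola to the similarity as a function of the match position and take its minimum as the best match position (a fractional value).

- Record the right-image match mvuRight and the depth mvDepth.

- Outlier filtering: reject matches whose distance exceeds 2.1 times the median matching distance.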

void Frame::ComputeStereoMatches() {

mvuRight = vector<float>(N, -1.0f);

mvDepth = vector<float>(N, -1.0f);

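// step0. Per-row bookkeeping of right-image features: register each right-image feature on the rows near it

const int nRows = mpORBextractorLeft->mvImagePyramid[0].rows;

vector<vector<size_t> > vRowIndices(nRows, vector<size_t>()); // indices of the right-image features registered on each image row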

for (int iR = 0; iR < mvKeysRight.size(); iR++) {

const cv::KeyPoint &kp = mvKeysRight[iR];

const float &kpY = kp.pt.y;

const int maxr = ceil(kpY + 2.0f * mvScaleFactors[mvKeysRight[iR].octave]);

const int minr = floor(kpY - 2.0f * mvScaleFactors[mvKeysRight[iR].octave]);

for (int yi = minr; yi <= maxr; yi++)

vRowIndices[yi].push_back(iR);

}

// step1. + 2. Coarse matching + fine matching

const float minZ = mb, minD = 0, maxD = mbf / minZ; // disparity search range, from the disparity formula

const int thOrbDist = (ORBmatcher::TH_HIGH + ORBmatcher::TH_LOW) / 2;

vector<pair<int, int> > vDistIdx; // stores the feature matches as (distance, left index)

for (int iL = 0; iL < N; iL++) {

const cv::KeyPoint &kpL = mvKeys[iL];

const int &levelL = kpL.octave;

const float &vL = kpL.pt.y, &uL = kpL.pt.x;

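const vector<size_t> &vCandidates = vRowIndices[vL];

if (vCandidates.empty()) continue;

// Horizontal search range in the right image, derived from the disparity limits

const float minU = uL - maxD, maxU = uL - minD;

if (maxU < 0) continue;

// step1. Coarse matching by descriptor distance and pyramid level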

int bestDist = ORBmatcher::TH_HIGH;

size_t bestIdxR = 0;

const cv::Mat &dL = mDescriptors.row(iL);

for (size_t iC = 0; iC < vCandidates.size(); iC++) {

const size_t iR = vCandidates[iC];

const cv::KeyPoint &kpR = mvKeysRight[iR];

if (kpR.octave < levelL - 1 || kpR.octave > levelL + 1)

continue;

const float &uR = kpR.pt.x;

if (uR >= minU && uR <= maxU) {

const cv::Mat &dR = mDescriptorsRight.row(iR);

const int dist = ORBmatcher::DescriptorDistance(dL, dR);

if (dist < bestDist) {

bestDist = dist;

bestIdxR = iR;

}

}

}

// step2. Fine matching: sliding-window search comparing the (2w+1)×(2w+1) patch (w = 5) around the coarse match

if (bestDist < thOrbDist) {

const float uR0 = mvKeysRight[bestIdxR].pt.x;

const float scaleFactor = mvInvScaleFactors[kpL.octave];

const float scaleduL = round(kpL.pt.x * scaleFactor);

const float scaledvL = round(kpL.pt.y * scaleFactor);

const float scaleduR0 = round(uR0 * scaleFactor);

const int w = 5;

cv::Mat IL = mpORBextractorLeft->mvImagePyramid[kpL.octave].rowRange(scaledvL - w, scaledvL + w + 1).colRange(scaleduL - w, scaleduL + w + 1);

IL.convertTo(IL, CV_32F);

IL = IL - IL.at<float>(w, w) * cv::Mat::ones(IL.rows, IL.cols, CV_32F);

int bestDist = INT_MAX;

int bestincR = 0;

const int L = 5;

vector<float> vDists;

vDists.resize(2 * L + 1);

const float iniu = scaleduR0 + L - w;

const float endu = scaleduR0 + L + w + 1;

for (int incR = -L; incR <= +L; incR++) {

cv::Mat IR = mpORBextractorRight->mvImagePyramid[kpL.octave].rowRange(scaledvL - w, scaledvL + w + 1).colRange(scaleduR0 + incR - w, scaleduR0 + incR + w + 1);

IR.convertTo(IR, CV_32F);

IR = IR - IR.at<float>(w, w) * cv::Mat::ones(IR.rows, IR.cols, CV_32F);

float dist = cv::norm(IL, IR, cv::NORM_L1);

if (dist < bestDist) {

bestDist = dist;

bestincR = incR;

}

vDists[L + incR] = dist;

}

// step3. Sub-pixel interpolation: fit a parabola to the matching distances and take its minimum (a fractional value) as the best match position

const float dist1 = vDists[L + bestincR - 1];

const float dist2 = vDists[L + bestincR];

const float dist3 = vDists[L + bestincR + 1];

const float deltaR = (dist1 - dist3) / (2.0f * (dist1 + dist3 - 2.0f * dist2));

// step4. Record the right-image coordinate and the depth of the feature

float bestuR = mvScaleFactors[kpL.octave] * ((float) scaleduR0 + (float) bestincR + deltaR);

float disparity = (uL - bestuR);

if (disparity >= minD && disparity < maxD) {

mvDepth[iL] = mbf / disparity;

mvuRight[iL] = bestuR;

vDistIdx.push_back(pair<int, int>(bestDist, iL));

}

}

}

// step5. Remove outliers: matches whose distance exceeds 2.1 times (1.5 × 1.4) the median matching distance are treated as mismatches

sort(vDistIdx.begin(), vDistIdx.end());

const float median = vDistIdx[vDistIdx.size() / 2].first;

const float thDist = 1.5f * 1.4f * median;

for (int i = vDistIdx.size() - 1; i >= 0; i--) {

if (vDistIdx[i].first < thDist)

break;

else {

mvuRight[vDistIdx[i].second] = -1;

mvDepth[vDistIdx[i].second] = -1;

}

}

}

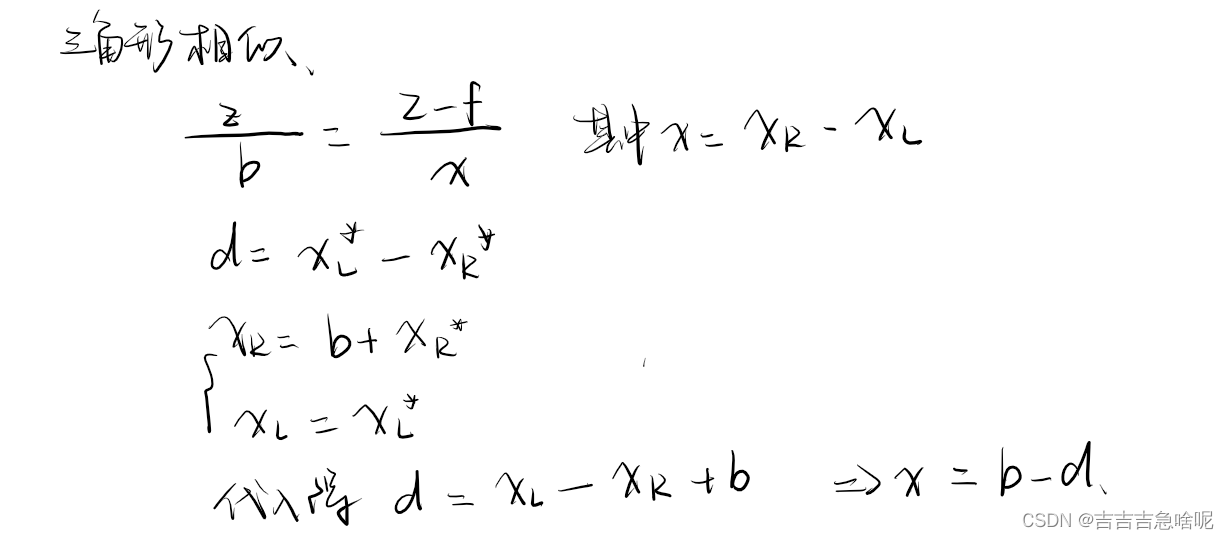

The stereo disparity formula
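For reference, the relation used in ComputeStereoMatches() can be written out as follows. The symbols are assumptions made for this note: $b$ is the baseline (mb), $f_x$ the focal length in pixels, $bf = b f_x$ the stored product (mbf), $u_L$ and $u_R$ the x coordinates of the matched feature in the left and right image, and $z$ the depth.

```latex
d = u_L - u_R, \qquad
z = \frac{f_x \, b}{d} = \frac{bf}{d}
\quad\Longleftrightarrow\quad
u_R = u_L - \frac{bf}{z}
```

The left form matches `mvDepth[iL] = mbf / disparity` in the listing above. The right form shows how a virtual right-image coordinate can be derived from a known depth, which is the sense in which RGB-D input can be treated as stereo. With minZ = mb, the largest admissible disparity is maxD = mbf / mb = $f_x$.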

void Frame::UndistortKeyPoints() {

// step1. If the input is a stereo pair, it has already been rectified and its distortion coefficients are 0

if (mDistCoef.at<float>(0) == 0.0) {

mvKeysUn = mvKeys;

return;

}

// Convert the keypoint coordinates to the layout required by undistortPoints()

cv::Mat mat(N, 2, CV_32F);

for (int i = 0; i < N; i++) {

mat.at<float>(i, 0) = mvKeys[i].pt.x;

mat.at<float>(i, 1) = mvKeys[i].pt.y;

}

mat = mat.reshape(2);

// Undistort the points

cv::undistortPoints(mat, mat, mK, mDistCoef, cv::Mat(), mK);

// Store the undistorted keypoints

mat = mat.reshape(1);

mvKeysUn.resize(N);

for (int i = 0; i < N; i++) {

cv::KeyPoint kp = mvKeys[i];

mvKeysUn[i].pt.x = mat.at<float>(i, 0);

mvKeysUn[i].pt.y = mat.at<float>(i, 1);

}

}

// Compute the valid image area after undistortion from the undistorted coordinates of the four image corners

void Frame::ComputeImageBounds(const cv::Mat &imLeft) {

if (mDistCoef.at<float>(0) != 0.0) {

// Coordinates of the 4 corners

cv::Mat mat(4, 2, CV_32F);

mat.at<float>(0, 0) = 0.0; // top-left

mat.at<float>(0, 1) = 0.0;

mat.at<float>(1, 0) = imLeft.cols; // top-right

mat.at<float>(1, 1) = 0.0;

mat.at<float>(2, 0) = 0.0; // bottom-left

mat.at<float>(2, 1) = imLeft.rows;

mat.at<float>(3, 0) = imLeft.cols; // bottom-right

mat.at<float>(3, 1) = imLeft.rows;

// Undistort the corners

mat = mat.reshape(2);

cv::undistortPoints(mat, mat, mK, mDistCoef, cv::Mat(), mK);

mat = mat.reshape(1);

// Store the valid image area

mnMinX = min(mat.at<float>(0, 0), mat.at<float>(2, 0)); // smaller x of top-left and bottom-left

mnMaxX = max(mat.at<float>(1, 0), mat.at<float>(3, 0)); // larger x of top-right and bottom-right

mnMinY = min(mat.at<float>(0, 1), mat.at<float>(1, 1)); // smaller y of top-left and top-right

mnMaxY = max(mat.at<float>(2, 1), mat.at<float>(3, 1)); // larger y of bottom-left and bottom-right

} else {

mnMinX = 0.0f;

mnMaxX = imLeft.cols;

mnMinY = 0.0f;

mnMaxY = imLeft.rows;

}

}

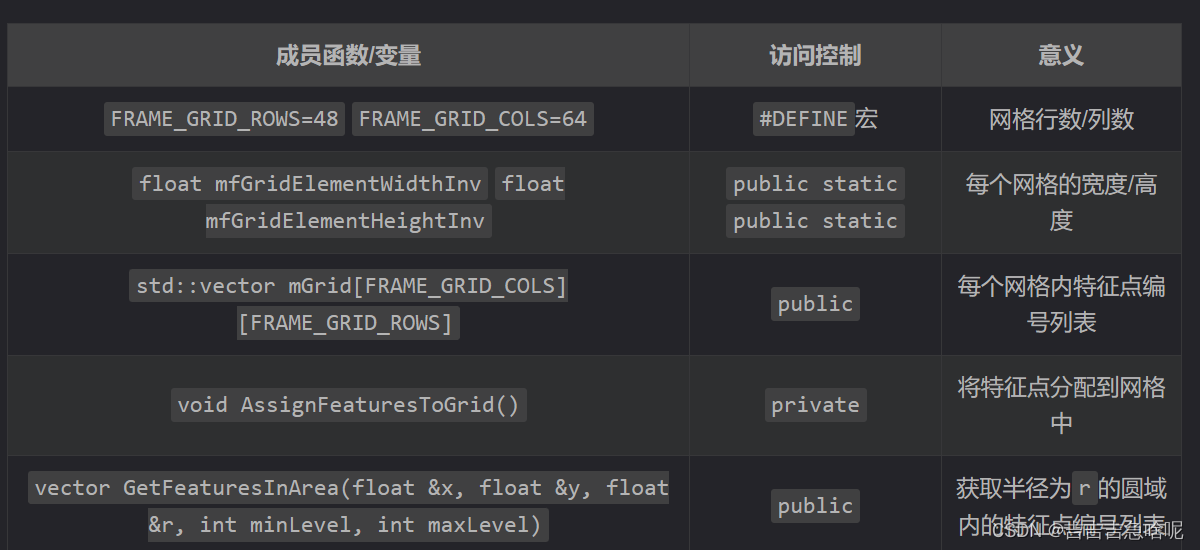

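Assigning keypoints to the grid: AssignFeaturesToGrid()

After the keypoints have been preprocessed, they are assigned to a grid of 48 rows by 64 columns to speed up matching.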

void Frame::AssignFeaturesToGrid() {

for (int i = 0; i < N; i++) {

// Iterate over the keypoints and add each keypoint index to its grid cell

const cv::KeyPoint &kp = mvKeysUn[i];

int nGridPosX, nGridPosY;

if (PosInGrid(kp, nGridPosX, nGridPosY))

mGrid[nGridPosX][nGridPosY].push_back(i);

}

}
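The listing above relies on PosInGrid() to turn a pixel position into a cell index. A self-contained sketch of that mapping could look as follows. The names `Bounds`, `CellOf`, `kGridCols` and `kGridRows` are made up for this example, and the use of `std::floor` is an assumption of the sketch; the real PosInGrid() may round differently.

```cpp
#include <cmath>

// Grid size, assumed to correspond to FRAME_GRID_COLS and FRAME_GRID_ROWS.
constexpr int kGridCols = 64;
constexpr int kGridRows = 48;

// Valid image area, playing the role of mnMinX, mnMaxX, mnMinY, mnMaxY.
struct Bounds {
    float minX, maxX, minY, maxY;
};

// Hypothetical helper: maps a pixel (x, y) to a grid cell (col, row).
// Returns false if the pixel falls outside the grid.
bool CellOf(float x, float y, const Bounds& b, int& col, int& row) {
    // Cells per pixel, the counterpart of mfGridElementWidthInv / HeightInv.
    const float invW = kGridCols / (b.maxX - b.minX);
    const float invH = kGridRows / (b.maxY - b.minY);

    col = static_cast<int>(std::floor((x - b.minX) * invW));
    row = static_cast<int>(std::floor((y - b.minY) * invH));

    return col >= 0 && col < kGridCols && row >= 0 && row < kGridRows;
}
```

With a 640 x 480 image every cell covers 10 x 10 pixels, so a later search around a pixel only has to visit the few cells that overlap the search window instead of all N keypoints.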

Constructor: Frame()

The Frame() constructor runs the steps described above in order:

// Frame constructor for stereo cameras

Frame::Frame(const cv::Mat &imLeft, const cv::Mat &imRight, const double &timeStamp, ORBextractor *extractorLeft, ORBextractor *extractorRight, ORBVocabulary *voc, cv::Mat &K, cv::Mat &distCoef, const float &bf, const float &thDepth)

: mpORBvocabulary(voc), mpORBextractorLeft(extractorLeft), mpORBextractorRight(extractorRight), mTimeStamp(timeStamp), mK(K.clone()), mDistCoef(distCoef.clone()), mbf(bf), mThDepth(thDepth), mpReferenceKF(static_cast<KeyFrame *>(NULL)) {

// step0. Increment the frame ID

mnId = nNextId++;

// step1. Read the image pyramid parameters

mnScaleLevels = mpORBextractorLeft->GetLevels();

mfScaleFactor = mpORBextractorLeft->GetScaleFactor();

mfLogScaleFactor = log(mfScaleFactor);

mvScaleFactors = mpORBextractorLeft->GetScaleFactors();

mvInvScaleFactors = mpORBextractorLeft->GetInverseScaleFactors();

mvLevelSigma2 = mpORBextractorLeft->GetScaleSigmaSquares();

mvInvLevelSigma2 = mpORBextractorLeft->GetInverseScaleSigmaSquares();

// step2. Extract ORB features from the left and right images in parallel

thread threadLeft(&Frame::ExtractORB, this, 0, imLeft);

thread threadRight(&Frame::ExtractORB, this, 1, imRight);

threadLeft.join();

threadRight.join();

N = mvKeys.size();

if (mvKeys.empty())

return;

// step3. Undistort the keypoints; in practice UndistortKeyPoints() does nothing for stereo input because the images are already rectified

UndistortKeyPoints();

// step4. Match keypoints between the left and right images

ComputeStereoMatches();

// step5. Compute the static members the first time the constructor is called

if (mbInitialComputations) {

ComputeImageBounds(imLeft);

mfGridElementWidthInv = static_cast<float>(FRAME_GRID_COLS) / static_cast<float>(mnMaxX - mnMinX);

mfGridElementHeightInv = static_cast<float>(FRAME_GRID_ROWS) / static_cast<float>(mnMaxY - mnMinY);

fx = K.at<float>(0, 0);

fy = K.at<float>(1, 1);

cx = K.at<float>(0, 2);

cy = K.at<float>(1, 2);

invfx = 1.0f / fx;

invfy = 1.0f / fy;

// Done, reset the flag

mbInitialComputations = false;

}

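mvpMapPoints = vector<MapPoint *>(N, static_cast<MapPoint *>(NULL)); // initialize the map points of this frame

mvbOutlier = vector<bool>(N, false); // initially, no map point of this frame is marked as an outlier

mb = mbf / fx; // stereo baseline length

// step6. Assign the keypoints to the grid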

AssignFeaturesToGrid();

}

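Uses of the Frame class

The Tracking class has two member variables of type Frame: mCurrentFrame and mLastFrame.

Each time the Tracking thread receives an image, it calls Tracking::GrabImageMonocular(), Tracking::GrabImageStereo() or Tracking::GrabImageRGBD() to create a Frame object and assigns it to mCurrentFrame.

// Called once for every incoming image

cv::Mat Tracking::GrabImageMonocular(const cv::Mat &im, const double &timestamp) {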

mImGray = im;

// Convert the image to grayscale

if (mImGray.channels() == 3) {

if (mbRGB)

cvtColor(mImGray, mImGray, CV_RGB2GRAY);

else

cvtColor(mImGray, mImGray, CV_BGR2GRAY);

} else if (mImGray.channels() == 4) {

if (mbRGB)

cvtColor(mImGray, mImGray, CV_RGBA2GRAY);

else

cvtColor(mImGray, mImGray, CV_BGRA2GRAY);

}

// Construct the Frame

if (mState == NOT_INITIALIZED || mState == NO_IMAGES_YET) // NO_IMAGES_YET is the state that precedes NOT_INITIALIZED

mCurrentFrame = Frame(mImGray, timestamp, mpIniORBextractor, mpORBVocabulary, mK, mDistCoef, mbf, mThDepth);

else

mCurrentFrame = Frame(mImGray, timestamp, mpORBextractorLeft, mpORBVocabulary, mK, mDistCoef, mbf, mThDepth);

// Track

Track();

// Return the pose of the current frame

return mCurrentFrame.mTcw.clone();

}

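When Track() finishes, mCurrentFrame is assigned to mLastFrame.

Apart from the few frames that are promoted to KeyFrame, most Frame objects only serve to track the current pose inside the Tracking thread. They have no effect on the LocalMapping and LoopClosing threads and are destroyed once mLastFrame and mCurrentFrame have been updated.

Keyframes: KeyFrame

Member functions and variables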

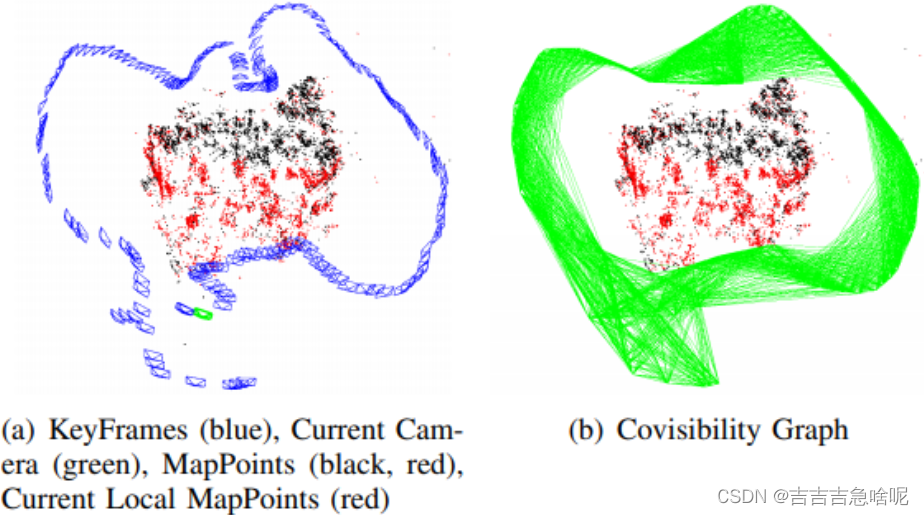

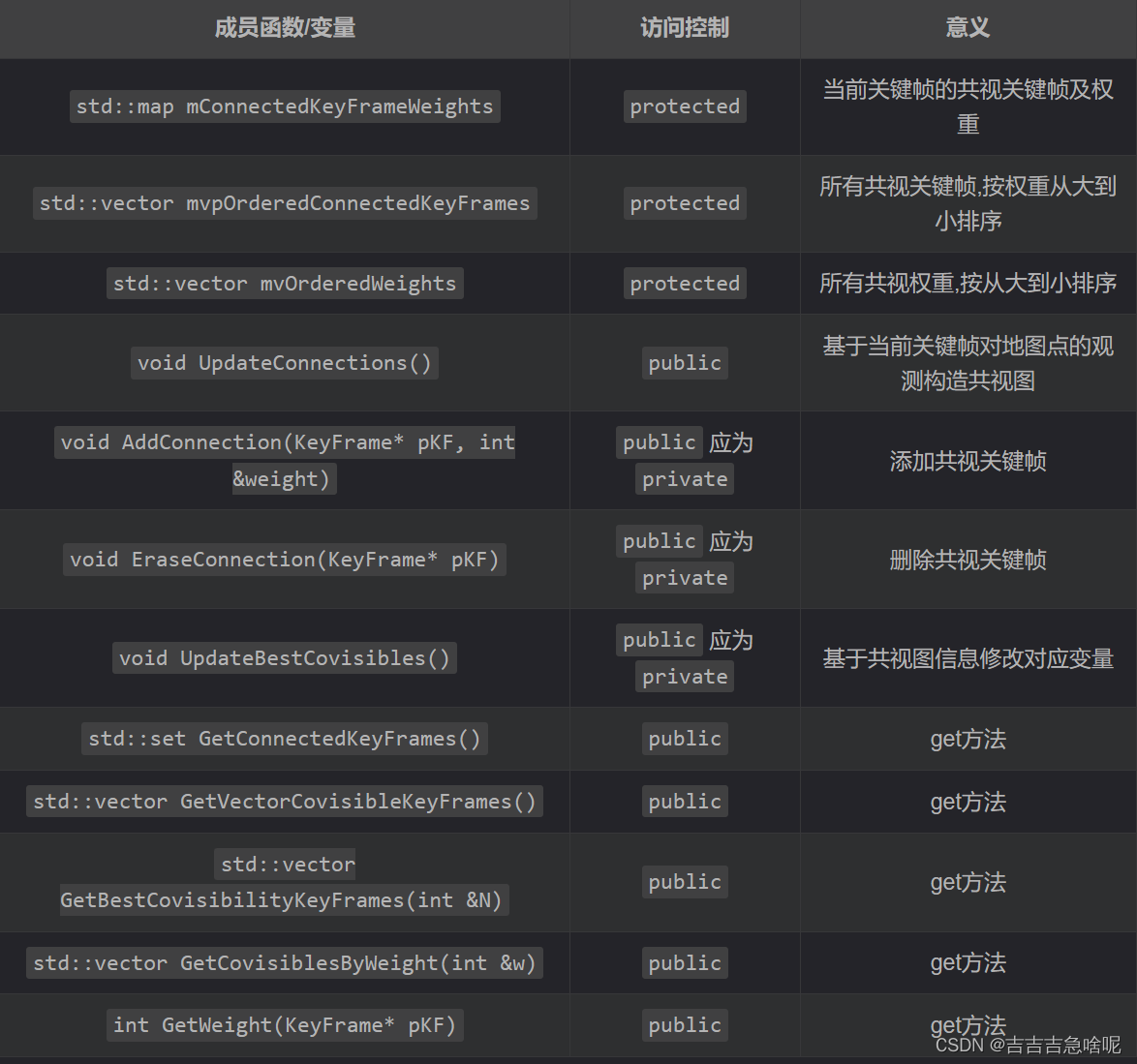

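Covisibility graph: mConnectedKeyFrameWeights

Two keyframes that observe the same map point are covisible; the number of map points they share is called the weight.

The covisibility graph is maintained by 3 member variables:

- mConnectedKeyFrameWeights is a std::map that stores the covisible keyframes of the current keyframe together with their weights. It is keyed by keyframe pointer, so it is not sorted by weight.

- mvpOrderedConnectedKeyFrames and mvOrderedWeights store the covisible keyframes and their weights, respectively, in descending order of weight.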
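To make the relation between the three containers concrete, here is a minimal sketch that rebuilds the two ordered lists from the map. It is an illustration only: the struct `Covisibility` and its members are hypothetical names, and keyframes are represented by plain integer IDs instead of `KeyFrame *` pointers.

```cpp
#include <algorithm>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Hypothetical stand-in for the three KeyFrame members described above.
struct Covisibility {
    std::map<int, int> weights;       // keyframe id -> number of shared map points
    std::vector<int> orderedIds;      // keyframe ids, highest weight first
    std::vector<int> orderedWeights;  // the matching weights, descending

    // Rebuilds both ordered lists from the map.
    void Rebuild() {
        std::vector<std::pair<int, int>> pairs;  // (weight, id) so that sorting compares weights first
        pairs.reserve(weights.size());
        for (const auto& kv : weights)
            pairs.emplace_back(kv.second, kv.first);

        // Sort in descending order directly instead of sorting ascending and reversing.
        std::sort(pairs.begin(), pairs.end(), std::greater<std::pair<int, int>>());

        orderedIds.clear();
        orderedWeights.clear();
        for (const auto& p : pairs) {
            orderedIds.push_back(p.second);
            orderedWeights.push_back(p.first);
        }
    }
};
```

Keeping a sorted copy next to the map trades some memory and rebuild time for cheap "best N neighbors" queries, which only need to read a prefix of the ordered vectors.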

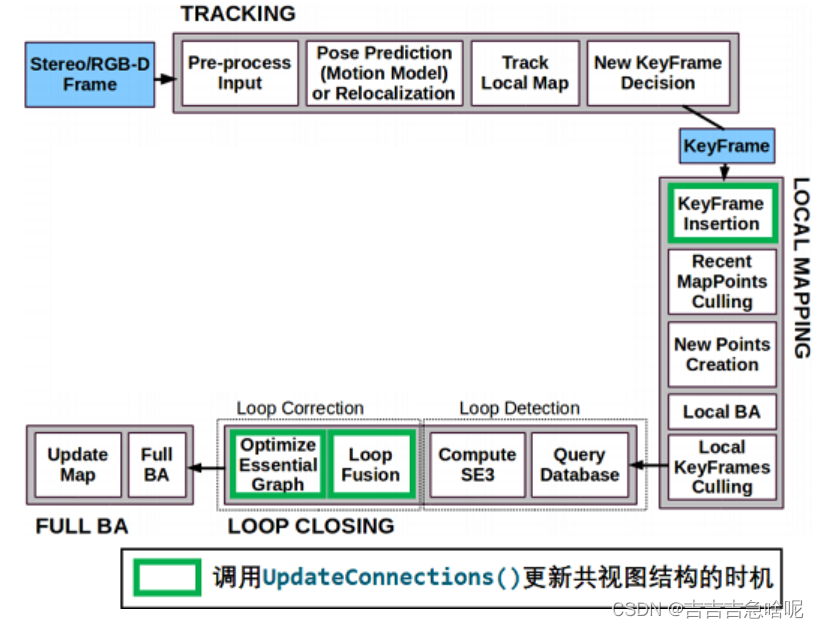

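Rebuilding the covisibility graph from map point observations: UpdateConnections()

These 3 variables are initialized and maintained by KeyFrame::UpdateConnections(), which regenerates the covisible keyframes from the map points observed by the current keyframe.

void KeyFrame::UpdateConnections() {

// step1. Iterate over the map points of the current keyframe and count how many of them each other keyframe also observes; the counts are stored in KFcounter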

vector<MapPoint *> vpMP;

{

unique_lock<mutex> lockMPs(mMutexFeatures);

vpMP = mvpMapPoints;

}

map<KeyFrame *, int> KFcounter;

for (MapPoint *pMP : vpMP) {

map<KeyFrame *, size_t> observations = pMP->GetObservations();

for (map<KeyFrame *, size_t>::iterator mit = observations.begin(); mit != observations.end(); mit++) {

if (mit->first->mnId == mnId) // a keyframe is not covisible with itself

continue;

KFcounter[mit->first]++;

}

}

// step2. Collect the keyframes that share at least 15 map points with the current keyframe in vPairs

vector<pair<int, KeyFrame *> > vPairs;

int th = 15;

int nmax = 0;

KeyFrame *pKFmax = NULL;

for (map<KeyFrame *, int>::iterator mit = KFcounter.begin(), mend = KFcounter.end(); mit != mend; mit++) {

if (mit->second > nmax) {

nmax = mit->second;

pKFmax = mit->first;

}

if (mit->second >= th) {

vPairs.push_back(make_pair(mit->second, mit->first));

(mit->first)->AddConnection(this, mit->second); // create the connection for every edge that reaches the threshold

}

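}

// If no keyframe reaches the threshold, fall back to the keyframe with the highest weight so that vPairs is not left empty

if (vPairs.empty() && pKFmax) {

vPairs.push_back(make_pair(nmax, pKFmax));

pKFmax->AddConnection(this, nmax);

}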

// step3. Sort the keyframes by covisibility weight in descending order and store the result in mvpOrderedConnectedKeyFrames and mvOrderedWeights

sort(vPairs.begin(), vPairs.end());

list<KeyFrame *> lKFs;

list<int> lWs;

for (size_t i = 0; i < vPairs.size(); i++) {

lKFs.push_front(vPairs[i].second);

lWs.push_front(vPairs[i].first);

}

{

unique_lock<mutex> lockCon(mMutexConnections);

mConnectedKeyFrameWeights = KFcounter;

mvpOrderedConnectedKeyFrames = vector<KeyFrame *>(lKFs.begin(), lKFs.end());

mvOrderedWeights = vector<int>(lWs.begin(), lWs.end());

// step4. For a keyframe that joins the spanning tree for the first time, use the keyframe with the highest covisibility as its parent

if (mbFirstConnection && mnId != 0) {

mpParent = mvpOrderedConnectedKeyFrames.front();

mpParent->AddChild(this);

mbFirstConnection = false;

}

}

}
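Stripped of locking and pointers, the first two steps of UpdateConnections() amount to a counting pass followed by a threshold filter. The sketch below illustrates this under simplifying assumptions: keyframes are integer IDs, each map point is reduced to the list of keyframe IDs that observe it, and `CountShared` and `SelectNeighbors` are hypothetical names that do not exist in ORB-SLAM2.

```cpp
#include <map>
#include <vector>

// observations[i] lists the ids of all keyframes that observe the i-th map
// point of the current keyframe. Returns: other keyframe id -> shared points.
std::map<int, int> CountShared(int selfId,
                               const std::vector<std::vector<int>>& observations) {
    std::map<int, int> counter;
    for (const auto& obs : observations)
        for (int kf : obs)
            if (kf != selfId)  // a keyframe is not covisible with itself
                ++counter[kf];
    return counter;
}

// Keeps every keyframe whose weight reaches th. If none does, falls back to
// the single keyframe with the highest weight, so the result is only empty
// when counter itself is empty.
std::vector<int> SelectNeighbors(const std::map<int, int>& counter, int th) {
    std::vector<int> keep;
    int bestId = -1;
    int bestWeight = 0;
    for (const auto& kv : counter) {
        if (kv.second > bestWeight) {
            bestWeight = kv.second;
            bestId = kv.first;
        }
        if (kv.second >= th)
            keep.push_back(kv.first);
    }
    if (keep.empty() && bestId >= 0)
        keep.push_back(bestId);
    return keep;
}
```

The fallback matters for step4 of the listing: the parent is taken from the front of the ordered list, which is only safe if at least one neighbor has been kept.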

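- In the Tracking thread, the initialization functions Tracking::StereoInitialization() and Tracking::MonocularInitialization() call KeyFrame::UpdateConnections() after creating keyframes to initialize the covisibility graph.

- When the LocalMapping thread receives a new keyframe, it calls LocalMapping::ProcessNewKeyFrame() to process the map points added during tracking and then calls KeyFrame::UpdateConnections() to initialize the covisibility graph. (The keyframes handled here are the ones created by Tracking::CreateNewKeyFrame() in the Tracking thread.)

- After the LocalMapping thread has processed all keyframes in its buffer queue, it calls LocalMapping::SearchInNeighbors() to fuse duplicate map points between the current keyframe and its covisible keyframes and then calls KeyFrame::UpdateConnections() to update the covisibility graph.

- In the LoopClosing thread, the loop correction function LoopClosing::CorrectLoop() calls KeyFrame::UpdateConnections() several times to update the covisibility graph.

AddConnection(KeyFrame* pKF, const int &weight) and EraseConnection(KeyFrame* pKF) first modify mConnectedKeyFrameWeights and then call UpdateBestCovisibles() to update mvpOrderedConnectedKeyFrames and mvOrderedWeights.

All three functions are only called from inside the KeyFrame class (AddConnection() from KeyFrame::UpdateConnections(), UpdateBestCovisibles() from the other two), so they could reasonably be made private member functions.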

void KeyFrame::AddConnection(KeyFrame *pKF, const int &weight) {

// step1. Update mConnectedKeyFrameWeights

{

unique_lock<mutex> lock(mMutexConnections);

if (!mConnectedKeyFrameWeights.count(pKF) || mConnectedKeyFrameWeights[pKF] != weight)

mConnectedKeyFrameWeights[pKF] = weight;

else

return;

}

// step2. Call UpdateBestCovisibles() to update mvpOrderedConnectedKeyFrames and mvOrderedWeights

UpdateBestCovisibles();

}

void KeyFrame::EraseConnection(KeyFrame *pKF) {

// step1. Update mConnectedKeyFrameWeights

bool bUpdate = false;

{

unique_lock<mutex> lock(mMutexConnections);

if (mConnectedKeyFrameWeights.count(pKF)) {

mConnectedKeyFrameWeights.erase(pKF);

bUpdate = true;

}

}

// step2. Call UpdateBestCovisibles() to update mvpOrderedConnectedKeyFrames and mvOrderedWeights

if (bUpdate)

UpdateBestCovisibles();

}

void KeyFrame::UpdateBestCovisibles() {

unique_lock<mutex> lock(mMutexConnections);

// Collect all connected keyframes, sort them by weight, and store the result in mvpOrderedConnectedKeyFrames and mvOrderedWeights

vector<pair<int, KeyFrame *> > vPairs;

vPairs.reserve(mConnectedKeyFrameWeights.size());

for (map<KeyFrame *, int>::iterator mit = mConnectedKeyFrameWeights.begin(), mend = mConnectedKeyFrameWeights.end(); mit != mend; mit++)

vPairs.push_back(make_pair(mit->second, mit->first));

sort(vPairs.begin(), vPairs.end());

list<KeyFrame *> lKFs;

list<int> lWs;

for (size_t i = 0, iend = vPairs.size(); i < iend; i++) {

lKFs.push_front(vPairs[i].second);

lWs.push_front(vPairs[i].first);

}

mvpOrderedConnectedKeyFrames = vector<KeyFrame *>(lKFs.begin(), lKFs.end());

mvOrderedWeights = vector<int>(lWs.begin(), lWs.end());

}
