
Exercise 1

Set test to “Hello World” in the cell below to print “Hello World” and run the two cells below.

# (≈ 1 line of code)

# test =

# YOUR CODE STARTS HERE

test = "Hello World"

# YOUR CODE ENDS HERE

Exercise 2 basic_sigmoid

Build a function that returns the sigmoid of a real number x. Use math.exp(x) for the exponential function.

To refer to a function belonging to a specific package you could call it using package_name.function(). Run the code below to see an example with math.exp().

import math
from public_tests import *

# GRADED FUNCTION: basic_sigmoid

def basic_sigmoid(x):
    """
    Compute sigmoid of x.

    Arguments:
    x -- A scalar

    Return:
    s -- sigmoid(x)
    """
    # (≈ 1 line of code)
    # s =
    # YOUR CODE STARTS HERE
    s = 1/(1 + math.exp(-x))
    # YOUR CODE ENDS HERE

    return s

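Next comes an error message:

The x passed to basic_sigmoid cannot be a list. Converting x to an array does not help either: math.exp accepts only a single number, so x cannot be an entire array.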
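The failure described above can be reproduced with a short sketch. The helper name `try_sigmoid` is hypothetical and exists only for this illustration; `basic_sigmoid` is repeated so that the block runs on its own.

```python
import math
import numpy as np

def basic_sigmoid(x):
    """Sigmoid of a single real number, using math.exp."""
    return 1 / (1 + math.exp(-x))

def try_sigmoid(x):
    """Hypothetical helper: return the result, or the name of the exception raised."""
    try:
        return basic_sigmoid(x)
    except TypeError as err:
        return type(err).__name__

scalar_result = try_sigmoid(1)                    # works: x is a plain number
list_result = try_sigmoid([1, 2, 3])              # fails: unary minus is undefined for a list
array_result = try_sigmoid(np.array([1, 2, 3]))   # fails: math.exp needs a single number

print(scalar_result, list_result, array_result)
```

Both the list and the three-element array end in a `TypeError`, which is why the next exercise switches to `np.exp`.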

The result after the fix is as follows:

Exercise 3 sigmoid

Implement the sigmoid function using numpy.

import numpy as np

# GRADED FUNCTION: sigmoid

def sigmoid(x):
    """
    Compute the sigmoid of x

    Arguments:
    x -- A scalar or numpy array of any size

    Return:
    s -- sigmoid(x)
    """
    # (≈ 1 line of code)
    # s =
    # YOUR CODE STARTS HERE
    s = 1/(1 + np.exp(-x))
    # YOUR CODE ENDS HERE

    return s

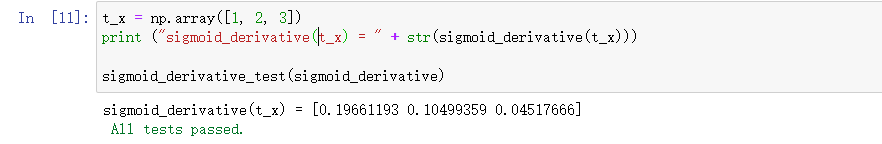
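As a quick check of the numpy version, the sketch below applies `sigmoid` to an array and to a scalar. The input values are made up for illustration, and the function is repeated so the block is self-contained.

```python
import numpy as np

def sigmoid(x):
    """Element-wise sigmoid for a scalar or a numpy array."""
    return 1 / (1 + np.exp(-x))

t_x = np.array([1, 2, 3])      # illustrative input
array_out = sigmoid(t_x)       # one output value per input element
scalar_out = sigmoid(0)        # sigmoid(0) is exactly 0.5

print(array_out)
print(scalar_out)
```

Because `np.exp` works element-wise, the same one-line formula covers scalars, vectors, and matrices.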

Exercise 4 sigmoid_derivative

Implement the function sigmoid_derivative() to compute the gradient of the sigmoid function with respect to its input x.

# GRADED FUNCTION: sigmoid_derivative

def sigmoid_derivative(x):
    """
    Compute the gradient (also called the slope or derivative) of the sigmoid function with respect to its input x.
    You can store the output of the sigmoid function into variables and then use it to calculate the gradient.

    Arguments:
    x -- A scalar or numpy array

    Return:
    ds -- Your computed gradient.
    """
    # (≈ 2 lines of code)
    # s =
    # ds =
    # YOUR CODE STARTS HERE
    s = sigmoid(x)
    ds = s * (1 - s)
    # YOUR CODE ENDS HERE

    return ds

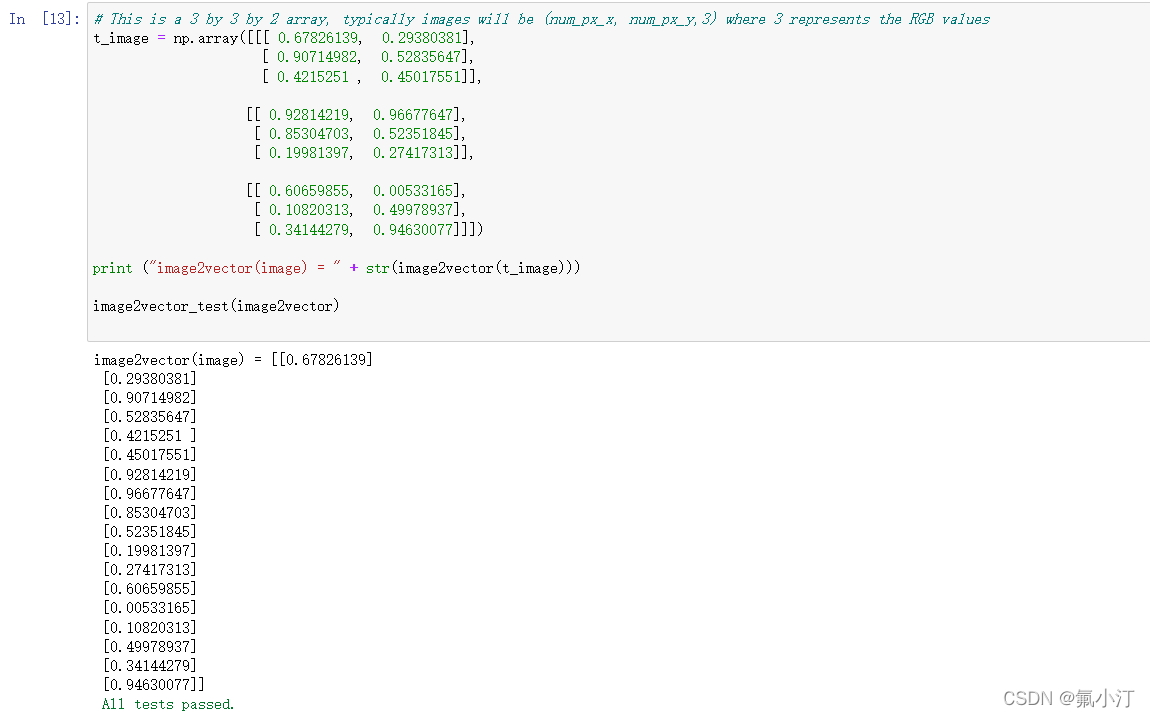
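One way to gain confidence in the formula s * (1 - s) is to compare it with a central finite difference. This is only a sanity-check sketch: the test points and the step size `eps` are assumptions chosen for illustration, not part of the assignment.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1 - s)

x = np.array([-2.0, 0.0, 1.0, 3.0])   # illustrative test points
eps = 1e-5                            # assumed finite-difference step

# Central difference approximation of d(sigmoid)/dx
numeric = (sigmoid(x + eps) - sigmoid(x - eps)) / (2 * eps)
analytic = sigmoid_derivative(x)

max_abs_diff = np.max(np.abs(numeric - analytic))
print(max_abs_diff)   # should be very small if the analytic gradient is right
```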

Exercise 5 image2vector

Implement image2vector() that takes an input of shape (length, height, 3) and returns a vector of shape (length*height*3, 1).

# GRADED FUNCTION: image2vector

def image2vector(image):
    """
    Argument:
    image -- a numpy array of shape (length, height, depth)

    Returns:
    v -- a vector of shape (length*height*depth, 1)
    """
    # (≈ 1 line of code)
    # v =
    # YOUR CODE STARTS HERE
    v = image.reshape(image.shape[0] * image.shape[1] * image.shape[2], 1)
    # YOUR CODE ENDS HERE

    return v
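The reshape can be checked on a small made-up array. The sketch below also shows an equivalent spelling, `reshape(-1, 1)`, where numpy infers the first dimension; this is an alternative offered here, not the form used in the solution above.

```python
import numpy as np

# Made-up "image" of shape (3, 3, 2); real images would have depth 3
image = np.arange(18).reshape(3, 3, 2)

# Explicit product of the three dimensions, as in the solution
v_explicit = image.reshape(image.shape[0] * image.shape[1] * image.shape[2], 1)

# -1 asks numpy to infer the size of that dimension
v_inferred = image.reshape(-1, 1)

print(v_explicit.shape)
print(np.array_equal(v_explicit, v_inferred))
```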

Exercise 6 normalize_rows

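Unlike the min-max normalization that Andrew Ng uses in the Deep Learning course, this exercise uses L2-norm normalization.

np.linalg.norm (computes a norm)

Explanation of the np.linalg.norm parameters (blog)

Explanation in the official NumPy documentation

ord=1: for a matrix, the maximum column sum of absolute values

ord=2: for a matrix, solve |λE - AᵀA| = 0 for the eigenvalues, then take the square root of the largest eigenvalue (the largest singular value of A)

ord=∞ (np.inf): for a matrix, the maximum row sum of absolute values

ord=None: the default. For a matrix this is the square root of the sum of the squares of all elements (the Frobenius norm), not the matrix 2-norm as I first assumed. For a vector, or when axis is an integer, it is the ordinary 2-norm of each vector.

axis: which direction to process

axis=1 treats each row as a vector and returns one norm per row

axis=0 treats each column as a vector and returns one norm per column

axis=None computes a single norm of the whole matrix.

keepdims: whether to keep the two-dimensional shape of the matrix

True keeps the reduced axis with length 1, so the result stays two-dimensional; False drops that axis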
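The parameter descriptions above can be tried out on a small matrix. The matrix `A` below is made up for illustration; the calls themselves are standard `np.linalg.norm` usage.

```python
import numpy as np

A = np.array([[0.0, 3.0, 4.0],
              [1.0, 6.0, 4.0]])   # illustrative 2 x 3 matrix

# axis=1: one 2-norm per row; keepdims controls whether the result stays 2-D
row_norms = np.linalg.norm(A, axis=1, keepdims=True)   # shape (2, 1)
row_norms_flat = np.linalg.norm(A, axis=1)             # shape (2,)

# axis=0: one 2-norm per column
col_norms = np.linalg.norm(A, axis=0)                  # shape (3,)

# No axis: matrix norms
fro = np.linalg.norm(A)                       # default: Frobenius norm
max_col_sum = np.linalg.norm(A, ord=1)        # largest column sum of |a_ij|
max_row_sum = np.linalg.norm(A, ord=np.inf)   # largest row sum of |a_ij|
spectral = np.linalg.norm(A, ord=2)           # largest singular value

# Cross-check ord=2 against the eigenvalue description
largest_eig = np.max(np.linalg.eigvalsh(A.T @ A))

print(row_norms.shape, row_norms_flat.shape, col_norms.shape)
print(fro, max_col_sum, max_row_sum)
print(spectral, np.sqrt(largest_eig))
```

For normalize_rows, the relevant combination is `axis=1` with `keepdims=True`, since the resulting (n, 1) shape broadcasts cleanly against the (n, m) input.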

# GRADED FUNCTION: normalize_rows

def normalize_rows(x):
    """
    Implement a function that normalizes each row of the matrix x (to have unit length).

    Argument:
    x -- A numpy matrix of shape (n, m)

    Returns:
    x -- The normalized (by row) numpy matrix. You are allowed to modify x.
    """
    # (≈ 2 lines of code)
    # Compute x_norm as the norm 2 of x. Use np.linalg.norm(..., ord = 2, axis = ..., keepdims = True)
    x_norm = np.linalg.norm(x, axis = 1, keepdims = True)
    # Divide x by its norm.
    # x =
    # YOUR CODE STARTS HERE
    x = x / x_norm
    # YOUR CODE ENDS HERE

    return x
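A short usage sketch, with a made-up input, confirms that every row ends up with length 1. The function is repeated in compact form so the block runs on its own; `ord=2` is written out here to match the hint in the comment and gives the same result as the default when `axis=1`.

```python
import numpy as np

def normalize_rows(x):
    # One 2-norm per row, kept as shape (n, 1) so it broadcasts against (n, m)
    x_norm = np.linalg.norm(x, ord=2, axis=1, keepdims=True)
    return x / x_norm

x = np.array([[3.0, 4.0],
              [6.0, 8.0],
              [5.0, 12.0]])   # illustrative input; row lengths are 5, 10, 13

normalized = normalize_rows(x)
row_lengths = np.linalg.norm(normalized, axis=1)

print(normalized)
print(row_lengths)   # each entry should be 1 up to rounding
```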

Exercise 7 softmax

Implement a softmax function using numpy. You can think of softmax as a normalizing function used when your algorithm needs to classify two or more classes. You will learn more about softmax in the second course of this specialization.

# GRADED FUNCTION: softmax

def softmax(x):
    """Calculates the softmax for each row of the input x.

    Your code should work for a row vector and also for matrices of shape (m,n).

    Argument:
    x -- A numpy matrix of shape (m,n)

    Returns:
    s -- A numpy matrix equal to the softmax of x, of shape (m,n)
    """
    # (≈ 3 lines of code)
    # Apply exp() element-wise to x. Use np.exp(...).
    # Create a vector x_sum that sums each row of x_exp. Use np.sum(..., axis = 1, keepdims = True).
    # Compute softmax(x) by dividing x_exp by x_sum. It should automatically use numpy broadcasting.
    # YOUR CODE STARTS HERE
    x_exp = np.exp(x)
    x_sum = np.sum(x_exp, axis = 1, keepdims = True)
    s = x_exp / x_sum
    # YOUR CODE ENDS HERE

    return s
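The sketch below checks that each row of the softmax output sums to 1. It also adds `softmax_stable`, a variant that is not part of the assignment: it subtracts each row's maximum before exponentiating, a common trick that leaves the result unchanged mathematically while avoiding overflow for large inputs. All input values are made up.

```python
import numpy as np

def softmax(x):
    """Row-wise softmax, as in the exercise."""
    x_exp = np.exp(x)
    return x_exp / np.sum(x_exp, axis=1, keepdims=True)

def softmax_stable(x):
    """Variant (not from the assignment): shift each row by its max before exp."""
    shifted = x - np.max(x, axis=1, keepdims=True)
    x_exp = np.exp(shifted)
    return x_exp / np.sum(x_exp, axis=1, keepdims=True)

x = np.array([[1.0, 2.0, 3.0],
              [0.0, 0.0, 0.0]])   # illustrative input
row_sums = softmax(x).sum(axis=1)
same = np.allclose(softmax(x), softmax_stable(x))

big = np.array([[1000.0, 1001.0]])   # np.exp(1000.0) would overflow to inf
big_out = softmax_stable(big)

print(row_sums)
print(same)
print(big_out)
```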

Exercise 8 L1

Implement the numpy vectorized version of the L1 loss. You may find the function abs(x) (absolute value of x) useful.

# GRADED FUNCTION: L1

def L1(yhat, y):
    """
    Arguments:
    yhat -- vector of size m (predicted labels)
    y -- vector of size m (true labels)

    Returns:
    loss -- the value of the L1 loss function defined above
    """
    # (≈ 1 line of code)
    # YOUR CODE STARTS HERE
    loss = np.sum(np.abs(yhat - y))
    # YOUR CODE ENDS HERE

    return loss
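As the code shows, the L1 loss is the sum of the absolute differences between predictions and labels. A small worked example follows, with values chosen only for illustration:

```python
import numpy as np

def L1(yhat, y):
    """Sum of absolute differences between predictions and labels."""
    return np.sum(np.abs(yhat - y))

yhat = np.array([0.9, 0.2, 0.1, 0.4, 0.9])   # illustrative predictions
y = np.array([1, 0, 0, 1, 1])                # illustrative true labels

# |0.9-1| + |0.2-0| + |0.1-0| + |0.4-1| + |0.9-1| = 0.1 + 0.2 + 0.1 + 0.6 + 0.1
l1_loss = L1(yhat, y)
print(l1_loss)   # approximately 1.1
```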

Exercise 9 L2

# GRADED FUNCTION: L2

def L2(yhat, y):
    """
    Arguments:
    yhat -- vector of size m (predicted labels)
    y -- vector of size m (true labels)

    Returns:
    loss -- the value of the L2 loss function defined above
    """
    # (≈ 1 line of code)
    # loss = ...
    # YOUR CODE STARTS HERE
    loss = np.dot((yhat - y),(yhat - y).T)
    # Alternative: loss = np.sum(np.square((yhat - y)))
    # YOUR CODE ENDS HERE

    return loss
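The two ways of writing the L2 loss give the same value for 1-D vectors, which the sketch below checks on made-up data. It also shows one difference worth knowing: if the inputs are 2-D row vectors of shape (1, m), the `np.dot` form returns a (1, 1) array rather than a scalar, while `np.sum(np.square(...))` still returns a scalar.

```python
import numpy as np

yhat = np.array([0.9, 0.2, 0.1, 0.4, 0.9])   # illustrative predictions
y = np.array([1, 0, 0, 1, 1])                # illustrative true labels

diff = yhat - y
loss_dot = np.dot(diff, diff.T)       # .T is a no-op on a 1-D array
loss_sum = np.sum(np.square(diff))

# Same data as 2-D row vectors of shape (1, 5)
diff_2d = diff.reshape(1, -1)
loss_dot_2d = np.dot(diff_2d, diff_2d.T)   # shape (1, 1)
loss_sum_2d = np.sum(np.square(diff_2d))   # scalar

print(loss_dot, loss_sum)                  # both approximately 0.43
print(loss_dot_2d.shape, np.ndim(loss_sum_2d))
```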
