Predicting Gold Prices with an LSTM

Download links for the data and code are at the end of the article.

Data description

Date: trading date

Close/Last: closing price

Volume: trading volume

Open: opening price

High: highest price

Low: lowest price

Importing the data

import os,math

from tensorflow.keras.layers import Dropout, Dense, LSTM, LayerNormalization

from sklearn.preprocessing import StandardScaler,MinMaxScaler

from sklearn import metrics

import numpy as np

import tensorflow as tf

from matplotlib import pyplot as plt

import pandas as pd

%matplotlib inline

from tensorflow.keras.models import Sequential

plt.rcParams['font.sans-serif'] = ['SimHei']

plt.rcParams['axes.unicode_minus'] = False

df = pd.read_csv('/home/mw/input/data2152/gold_data.csv')

df.head()

         Date  Close/Last    Volume    Open    High     Low
0  10/28/2022      1648.3  186519.0  1667.2  1670.9  1640.7
1  10/27/2022      1668.8  180599.0  1668.8  1674.8  1658.5
2  10/26/2022      1669.2  183453.0  1657.7  1679.4  1653.8
3  10/25/2022      1658.0  178706.0  1654.5  1666.8  1641.2
4  10/24/2022      1654.1  167448.0  1662.9  1675.5  1648.0

df.isnull().sum()

Date 0

Close/Last 0

Volume 39

Open 0

High 0

Low 0

dtype: int64

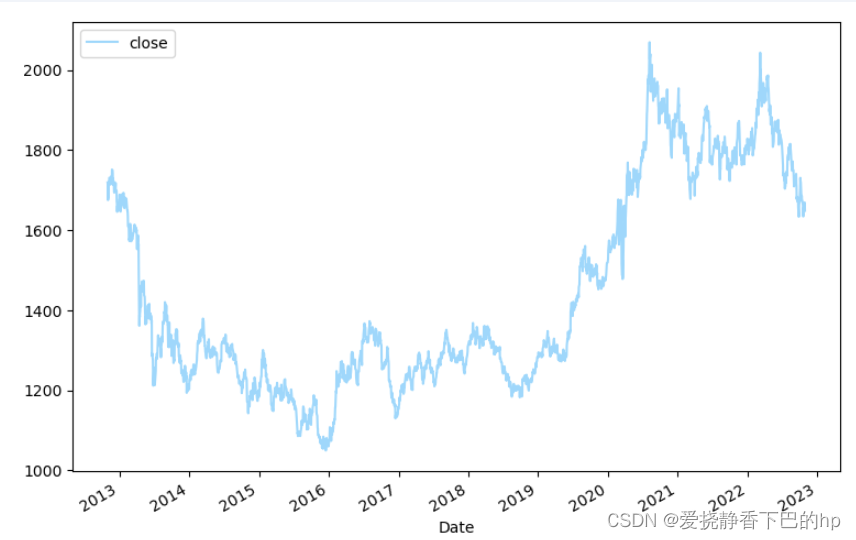

A look at the trend

# Set up the figure

fig,ax = plt.subplots(figsize=(9,6), dpi=100)

# Extract the date and closing-price columns

Date = df['Date']

stock_Close = df['Close/Last']

ax.plot(Date, stock_Close, '-', color='lightskyblue', alpha=0.8, label='close')

ax.legend(loc="best")

ax.set_xlabel('Date')

fig.autofmt_xdate()

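Splitting the data

# Parse the MM/DD/YYYY strings and sort oldest-first. The original listing does not show
# this step, but without it the comparison below would compare strings, not dates.

df['Date'] = pd.to_datetime(df['Date'])

df = df.sort_values('Date').reset_index(drop=True)

# .copy() avoids pandas' SettingWithCopyWarning when the columns are rescaled later

train, test = df.loc[df['Date'] <= '2021-01-01'].copy(), df.loc[df['Date'] > '2021-01-01'].copy()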

train.shape, test.shape

((2087, 6), (460, 6))
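The split against '2021-01-01' only works as a date split when the Date column holds real datetimes. The raw CSV stores text such as 10/28/2022, and comparing text is lexicographic. The sketch below uses only the standard library and the first dates shown by df.head(); the variable names are illustrative and not part of the original notebook.

```python
from datetime import datetime

# Sample values copied from the df.head() output earlier in the article.
raw_dates = ["10/28/2022", "10/27/2022", "10/26/2022"]
cutoff_text = "2021-01-01"
cutoff_date = datetime(2021, 1, 1)

# Lexicographic comparison: "1" sorts before "2", so every 2022 row
# wrongly appears to fall on or before the cutoff.
as_strings = [d <= cutoff_text for d in raw_dates]

# Parsing the MM/DD/YYYY text first gives the intended chronological result.
parsed = [datetime.strptime(d, "%m/%d/%Y") for d in raw_dates]
as_dates = [d <= cutoff_date for d in parsed]

print(as_strings)  # [True, True, True]
print(as_dates)    # [False, False, False]
```

In pandas, the equivalent is to call pd.to_datetime on the Date column before comparing.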

Normalizing the data

scaler = MinMaxScaler(feature_range=(0, 1))

train['Close/Last'] = scaler.fit_transform(train[['Close/Last']])

test['Close/Last'] = scaler.transform(test[['Close/Last']])
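The scaler is fitted on the training prices only and then reused for the test prices, so the test set does not leak into the scaling range. As a rough illustration of what min-max scaling to (0, 1) computes, here is a hand-written sketch in plain Python. The helper names and the prices are made up for the example, and it is not the scikit-learn implementation.

```python
def fit_min_max(values):
    """Return the (min, max) pair learned from the training values."""
    return min(values), max(values)

def transform(values, lo, hi):
    """Map values to [0, 1] relative to the training range."""
    return [(v - lo) / (hi - lo) for v in values]

def inverse_transform(scaled, lo, hi):
    """Undo the scaling to get back to price units."""
    return [s * (hi - lo) + lo for s in scaled]

# Made-up prices, chosen so the arithmetic is easy to follow.
train_prices = [1200.0, 1500.0, 1800.0]
test_prices = [1650.0, 1950.0]

lo, hi = fit_min_max(train_prices)              # learned from train only
train_scaled = transform(train_prices, lo, hi)  # [0.0, 0.5, 1.0]
test_scaled = transform(test_prices, lo, hi)    # [0.75, 1.25]
restored = inverse_transform(test_scaled, lo, hi)

print(train_scaled, test_scaled, restored)
```

A test price above the training maximum maps to a value greater than 1. That is expected when the scaler is fitted on the training period alone.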

Building the sequences

TIME_STEPS=365

def create_sequences(X, y, time_steps=TIME_STEPS):
    Xs, ys = [], []
    for i in range(len(X)-time_steps):
        Xs.append(X.iloc[i:(i+time_steps)].values)
        ys.append(y.iloc[i+time_steps])
    return np.array(Xs), np.array(ys)

X_train, y_train = create_sequences(train[['Close/Last']], train['Close/Last'])

X_test, y_test = create_sequences(test[['Close/Last']], test['Close/Last'])

print(f'Training shape: {X_train.shape}')

print(f'Testing shape: {X_test.shape}')

Training shape: (1722, 365, 1)

Testing shape: (95, 365, 1)
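To see why 2087 training rows become 1722 samples and 460 test rows become only 95, it helps to run the same sliding-window idea on a toy list. The function below is a simplified plain-Python stand-in for create_sequences, written for illustration only.

```python
def make_windows(series, time_steps):
    """Pair each run of `time_steps` values with the value that follows it."""
    xs, ys = [], []
    for i in range(len(series) - time_steps):
        xs.append(series[i:i + time_steps])
        ys.append(series[i + time_steps])
    return xs, ys

toy = [10, 11, 12, 13, 14, 15]
xs, ys = make_windows(toy, time_steps=3)
print(xs)  # [[10, 11, 12], [11, 12, 13], [12, 13, 14]]
print(ys)  # [13, 14, 15]

# The same arithmetic applied to the row counts reported in the article:
train_rows, test_rows, time_steps = 2087, 460, 365
print(train_rows - time_steps)  # 1722
print(test_rows - time_steps)   # 95
```

The first 365 rows of each split serve only as context for the first prediction, which is why the test set shrinks so sharply.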

Building the model

model = Sequential()

model.add(LSTM(50, return_sequences=True, input_shape=(X_train.shape[1], X_train.shape[2])))

model.add(Dropout(rate=0.2))

model.add(LSTM(40, return_sequences=True))

model.add(Dropout(rate=0.2))

model.add(LSTM(30))

model.add(Dropout(rate=0.2))

model.add(Dense(1))

model.summary()

Model: "sequential_14"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

lstm_42 (LSTM) (None, 365, 50) 10400

_________________________________________________________________

dropout_42 (Dropout) (None, 365, 50) 0

_________________________________________________________________

lstm_43 (LSTM) (None, 365, 40) 14560

_________________________________________________________________

dropout_43 (Dropout) (None, 365, 40) 0

_________________________________________________________________

lstm_44 (LSTM) (None, 30) 8520

_________________________________________________________________

dropout_44 (Dropout) (None, 30) 0

_________________________________________________________________

dense_14 (Dense) (None, 1) 31

=================================================================

Total params: 33,511

Trainable params: 33,511

Non-trainable params: 0

_________________________________________________________________
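The parameter counts in the summary can be checked by hand. An LSTM layer has four gates, each with input weights, recurrent weights and a bias. The helper functions below are written for this check and are not Keras APIs.

```python
def lstm_params(units, input_dim):
    # Four gates, each with (input_dim + units) weights per unit plus one bias.
    return 4 * (units * (input_dim + units) + units)

def dense_params(units, input_dim):
    # One weight per input per unit, plus one bias per unit.
    return units * input_dim + units

layer_params = [
    lstm_params(50, 1),    # first LSTM sees 1 feature per time step
    lstm_params(40, 50),   # second LSTM sees the 50 outputs of the first
    lstm_params(30, 40),   # third LSTM sees the 40 outputs of the second
    dense_params(1, 30),   # final Dense layer
]

print(layer_params)       # [10400, 14560, 8520, 31]
print(sum(layer_params))  # 33511
```

Dropout layers add no parameters, so the total matches the 33,511 reported by model.summary().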

Compiling the model

lr = 1e-2

optimizer = tf.keras.optimizers.Adam(learning_rate=lr)

model.compile(loss='mean_squared_error',
              optimizer=optimizer)

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

epochs = 80

# Save the weights of the best model (lowest validation loss)

checkpointer = ModelCheckpoint('best_model.h5',
                               monitor='val_loss',
                               verbose=1,
                               save_best_only=True,
                               save_weights_only=True)

# Configure early stopping

earlystopper = EarlyStopping(monitor='val_loss',
                             min_delta=0.001,
                             patience=10,
                             verbose=1)

Training

history = model.fit(X_train, y_train,
                    batch_size=64,
                    epochs=epochs,
                    validation_split=0.2,
                    validation_freq=1,
                    callbacks=[checkpointer, earlystopper])

Epoch 1/80

22/22 [==============================] - 41s 2s/step - loss: 0.0064 - val_loss: 0.0053

Epoch 00001: val_loss improved from inf to 0.00529, saving model to best_model.h5

Epoch 2/80

22/22 [==============================] - 35s 2s/step - loss: 0.0011 - val_loss: 0.0128

Epoch 00002: val_loss did not improve from 0.00529

Epoch 3/80

22/22 [==============================] - 35s 2s/step - loss: 8.6890e-04 - val_loss: 0.0015

Epoch 00003: val_loss improved from 0.00529 to 0.00153, saving model to best_model.h5

Epoch 4/80

22/22 [==============================] - 35s 2s/step - loss: 7.4595e-04 - val_loss: 0.0020

Epoch 00004: val_loss did not improve from 0.00153

Epoch 5/80

22/22 [==============================] - 35s 2s/step - loss: 6.1666e-04 - val_loss: 0.0038

Epoch 00005: val_loss did not improve from 0.00153

Epoch 6/80

22/22 [==============================] - 35s 2s/step - loss: 6.9752e-04 - val_loss: 0.0166

Epoch 00006: val_loss did not improve from 0.00153

Epoch 7/80

22/22 [==============================] - 35s 2s/step - loss: 7.1338e-04 - val_loss: 0.0026

Epoch 00007: val_loss did not improve from 0.00153

Epoch 8/80

22/22 [==============================] - 35s 2s/step - loss: 6.1054e-04 - val_loss: 0.0035

Epoch 00008: val_loss did not improve from 0.00153

Epoch 9/80

22/22 [==============================] - 35s 2s/step - loss: 6.3556e-04 - val_loss: 0.0038

Epoch 00009: val_loss did not improve from 0.00153

Epoch 10/80

22/22 [==============================] - 35s 2s/step - loss: 4.9548e-04 - val_loss: 0.0013

Epoch 00010: val_loss improved from 0.00153 to 0.00131, saving model to best_model.h5

Epoch 11/80

22/22 [==============================] - 35s 2s/step - loss: 4.7562e-04 - val_loss: 0.0064

Epoch 00011: val_loss did not improve from 0.00131

Epoch 12/80

22/22 [==============================] - 35s 2s/step - loss: 4.8332e-04 - val_loss: 0.0013

Epoch 00012: val_loss improved from 0.00131 to 0.00130, saving model to best_model.h5

Epoch 13/80

22/22 [==============================] - 35s 2s/step - loss: 4.2576e-04 - val_loss: 0.0013

Epoch 00013: val_loss did not improve from 0.00130

Epoch 00013: early stopping
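Training stops at epoch 13 even though the checkpoint was still reporting small improvements. The likely reason is that min_delta=0.001 is about as large as the validation loss itself, so after epoch 3 no later epoch counts as an improvement for early stopping. The sketch below is a simplified re-implementation of the patience logic, not the Keras source, and replays the val_loss values from the log above.

```python
def simulate_early_stopping(val_losses, min_delta, patience):
    """Return the 1-based epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            # Counts as an improvement: remember it and reset the counter.
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# val_loss per epoch, taken from the training log above.
val_losses = [0.00529, 0.0128, 0.00153, 0.0020, 0.0038, 0.0166, 0.0026,
              0.0035, 0.0038, 0.00131, 0.0064, 0.00130, 0.0013]

stop_with_delta = simulate_early_stopping(val_losses, min_delta=0.001, patience=10)
stop_without_delta = simulate_early_stopping(val_losses, min_delta=0.0, patience=10)

print(stop_with_delta)     # 13
print(stop_without_delta)  # None
```

With min_delta=0.0, the small gains at epochs 10 and 12 would reset the counter and training would continue past epoch 13. A smaller min_delta may therefore suit losses of this magnitude better.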

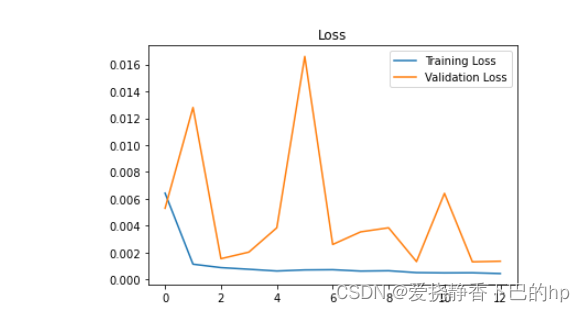

Visualizing the loss during training

plt.plot(history.history['loss'], label='Training Loss')

plt.plot(history.history['val_loss'], label='Validation Loss')

plt.title('Loss')

plt.legend()

The validation loss fluctuates considerably from epoch to epoch.

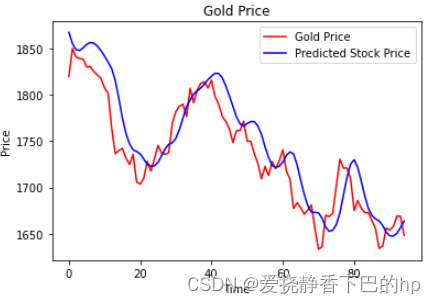

Prediction

model.load_weights('/home/mw/project/best_model.h5')

pre = model.predict(X_test, verbose =1)

predicted_price = scaler.inverse_transform(pre)

real_price = scaler.inverse_transform(test[['Close/Last']].iloc[TIME_STEPS:])

3/3 [==============================] - 1s 169ms/step

plt.plot(real_price, color='red', label='Gold Price')

plt.plot(predicted_price, color='blue', label='Predicted Gold Price')

plt.title('Gold Price')

plt.xlabel('Time')

plt.ylabel('Price')

plt.legend()

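# r2_score expects (y_true, y_pred), so the real prices go first

R2 = metrics.r2_score(real_price, predicted_price)

print('R2: %.5f' % R2)

R2: 0.81060

Note: the value above was recorded with the arguments in the order r2_score(predicted_price, real_price); with the corrected order the score will generally differ.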
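R² is not symmetric in its two arguments, because the total sum of squares is computed around the mean of the true values. The sketch below is a hand-written version of the standard formula on made-up numbers, not the scikit-learn function, and shows how swapping the arguments changes the score.

```python
def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

# Made-up values for illustration only.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 3.0, 5.5]

correct_order = r2(actual, predicted)
swapped_order = r2(predicted, actual)

print(round(correct_order, 4))  # 0.5
print(round(swapped_order, 4))  # 0.7368
```

Here the swapped call gives a noticeably higher score, so the argument order can change the conclusion drawn from the metric.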

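Data and code download

Earlier articles are available on my CSDN page,

or in my column on the Heywhale (和鲸) community, 下巴同学的数据加油小站.

From time to time I share learning projects on data mining, machine learning, risk-control modeling, deep learning, NLP and related topics.

Code: follow the link, then click Fork in the top-right corner to get a copy.

Data: the code link above includes the data; I have also uploaded a copy to CSDN (CSDN data download).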
