基本信息:

-

源码:GitHub - QwenLM/Qwen2.5-VL: Qwen2.5-VL is the multimodal large language model series developed by Qwen team, Alibaba Cloud.

-

博客:Qwen2.5 VL! Qwen2.5 VL! Qwen2.5 VL!

QWen2.5-VL 相比 QWen2-VL 主要改进之处:

-

强大的文档解析能力:将文本识别升级为全文档解析,在处理多场景、多语言以及各类内置元素(手写内容、表格、图表、化学公式和乐谱)的文档方面表现卓越。

-

跨格式的精准目标定位:在检测、定位和统计目标方面实现更高的准确性,支持绝对坐标和 JSON 格式,以进行更高级的空间推理。

-

超长视频理解与细粒度视频定位:将原生动态分辨率扩展到时间维度,增强对长达数小时视频的理解能力,同时能够在数秒内提取事件片段。

-

计算机和移动设备上增强的智能体功能:借助先进的定位、推理和决策能力,提升模型在智能手机和计算机上的智能体功能表现。

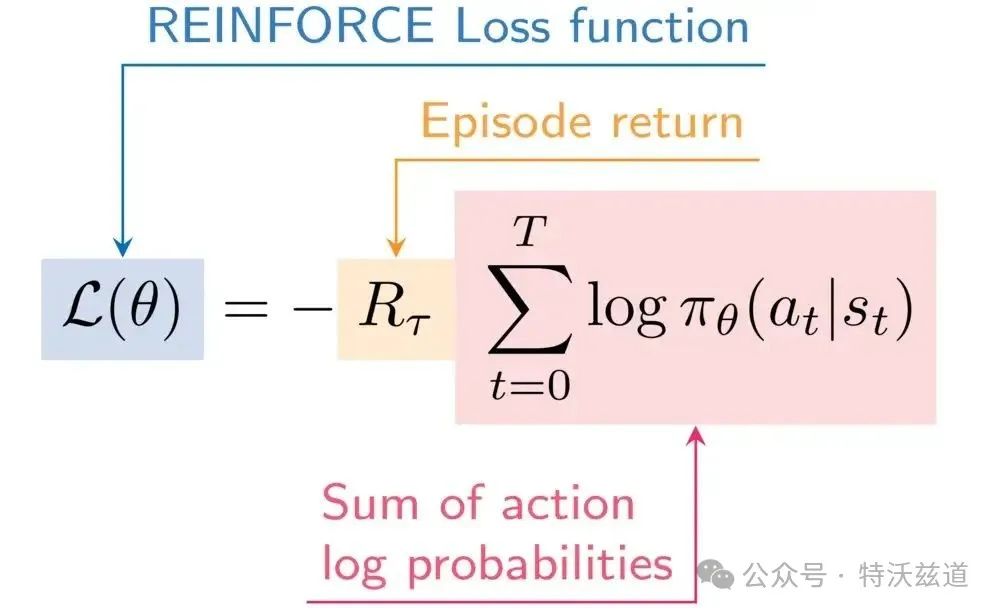

先来看下效果,访问 Qwen2.5-VL-72B-Instruct展示,上传如下图片:

输入提示词:

这张图是什么意思?请识别并提取图中的公式为 latex 文本

点击下方的 Submit(发送) 按钮。

下面是 QWen2.5-VL 的回答:

这张图展示了REINFORCE算法的损失函数。REINFORCE是一种策略梯度方法,用于解决强化学习问题。图中的公式表示的是该算法中损失函数的具体形式。

根据图片中的信息,我们可以提取出以下LaTeX格式的公式:

其中:

-

表示参数为(\theta)的损失函数。

-

表示一个episode(即一次完整的运行过程)的回报(return),它衡量了在该episode中采取一系列动作后的累积奖励。

-

表示从时间步0到T对所有时间步上的项求和。

-

表示在状态 下,根据当前策略 选择动作 的概率的对数。

这个损失函数的目标是通过最大化累积奖励来优化策略参数 。在实际应用中,我们会使用这个损失函数来更新策略网络的参数,以期望在未来能够获得更高的累积奖励。

docker部署

docker run -it \-p 7860:7860 \-p 7869:7869 \-v /path/to/models:/models \-v /dev/shm:/dev/shm \qwenllm/qwenvl:2.5-cu121 \vllm serve /models/qwen/Qwen2___5-VL-7B-Instruct \--served-model-name qwen2.5-VL-7B-Instruct \--api-key "sk-你想设置的的apikey" \--port 7869 \--dtype bfloat16 \--limit-mm-per-prompt "image=5,video=0" \--gpu-memory-utilization 0.9 \--swap-space 20 \--max-model-len 24576 \--block-size 16

k8s 部署

关键配置:

ec:containers:- name: qwen-vl-vllmimage: qwenllm/qwenvl:2.5-cu121ports:- containerPort: 7860- containerPort: 7869volumeMounts:- name: models-volumemountPath: /models- name: dshmmountPath: /dev/shmcommand:- "vllm"- "serve"- "/models/qwen/Qwen2___5-VL-7B-Instruct"- "--served-model-name"- "qwen2.5-VL-7B-Instruct"- "--api-key"- "sk-你想设置的的apikey"- "--port"- "7869"- "--dtype"- "bfloat16"- "--limit-mm-per-prompt"- "image=1,video=0"- "--gpu-memory-utilization"- "0.9"- "--swap-space"- "20"- "--max-model-len"- "24576" # 介于16384和32768之间- "--block-size"- "16" # 减小KV缓存块大小(默认16,可选8)

启动和测试

启动日志

成功启动的日志:

02-16 10:02:59 __init__.py:186] Automatically detected platform cuda.INFO 02-16 10:03:00 api_server.py:840] vLLM API server version 0.7.2.dev56+gbf3b79efINFO 02-16 10:03:00 api_server.py:841] args: Namespace(。。。)INFO 02-16 10:03:00 api_server.py:206] Started engine process with PID 77WARNING 02-16 10:03:00 config.py:2387] Casting torch.bfloat16 to torch.float16.INFO 02-16 10:03:04 __init__.py:186] Automatically detected platform cuda.WARNING 02-16 10:03:05 config.py:2387] Casting torch.bfloat16 to torch.float16.INFO 02-16 10:03:07 config.py:542] This model supports multiple tasks: {'embed', 'reward', 'score', 'generate', 'classify'}. Defaulting to 'generate'.INFO 02-16 10:03:11 config.py:542] This model supports multiple tasks: {'classify', 'embed', 'score', 'generate', 'reward'}. Defaulting to 'generate'.INFO 02-16 10:03:11 llm_engine.py:234] Initializing a V0 LLM engine (v0.7.2.dev56+gbf3b79ef) with config: model='/models/qwen/Qwen2___5-VL-7B-Instruct', 。。。, use_cached_outputs=True,INFO 02-16 10:03:12 cuda.py:230] Using Flash Attention backend.INFO 02-16 10:03:13 model_runner.py:1110] Starting to load model /models/qwen/Qwen2___5-VL-7B-Instruct...INFO 02-16 10:03:13 config.py:2993] cudagraph sizes specified by model runner [1, 2, 4, 8, 16, 24, 32, 40, 48, 56, 64, 72, 80, 88, 96, 104, 112, 120, 128, 136, 144, 152, 160, 168, 176, 184, 192, 200, 208, 216, 224, 232, 240, 248, 256] is overridden by config [256, 128, 2, 1, 4, 136, 8, 144, 16, 152, 24, 160, 32, 168, 40, 176, 48, 184, 56, 192, 64, 200, 72, 208, 80, 216, 88, 120, 224, 96, 232, 104, 240, 112, 248]Loading safetensors checkpoint shards: 0% Completed | 0/5 [00:00<?, ?it/s]Loading safetensors checkpoint shards: 20% Completed | 1/5 [00:05<00:20, 5.08s/it]Loading safetensors checkpoint shards: 40% Completed | 2/5 [00:05<00:07, 2.60s/it]Loading safetensors checkpoint shards: 60% Completed | 3/5 [00:11<00:07, 3.80s/it]Loading safetensors checkpoint shards: 80% Completed | 4/5 [00:16<00:04, 4.47s/it]Loading safetensors checkpoint shards: 100% Completed | 5/5 [00:22<00:00, 5.10s/it]Loading safetensors checkpoint shards: 100% Completed | 5/5 [00:22<00:00, 4.58s/it]INFO 02-16 10:03:36 model_runner.py:1115] Loading model weights took 15.6270 GBINFO 02-16 10:03:49 worker.py:267] Memory profiling takes 12.83 secondsINFO 02-16 10:03:49 worker.py:267] the current vLLM instance can use total_gpu_memory (23.65GiB) x gpu_memory_utilization (0.70) = 16.55GiBINFO 02-16 10:03:49 worker.py:267] model weights take 15.63GiB; non_torch_memory takes 0.08GiB; PyTorch activation peak memory takes 0.69GiB; the rest of the memory reserved for KV Cache is 0.16GiB.INFO 02-16 10:03:50 executor_base.py:110] # CUDA blocks: 186, # CPU blocks: 23405INFO 02-16 10:03:50 executor_base.py:115] Maximum concurrency for 1024 tokens per request: 2.91xINFO 02-16 10:04:05 model_runner.py:1434] Capturing cudagraphs for decoding. This may lead to unexpected consequences if the model is not static. To run the model in eager mode, set 'enforce_eager=True' or use '--enforce-eager' in the CLI. If out-of-memory error occurs during cudagraph capture, consider decreasing `gpu_memory_utilization` or switching to eager mode. You can also reduce the `max_num_seqs` as needed to decrease memory usage.Capturing CUDA graph shapes: 100%|██████████| 35/35 [00:17<00:00, 1.97it/s]INFO 02-16 10:04:23 model_runner.py:1562] Graph capturing finished in 18 secs, took 1.91 GiBINFO 02-16 10:04:23 llm_engine.py:431] init engine (profile, create kv cache, warmup model) took 46.92 secondsINFO 02-16 10:04:24 api_server.py:756] Using supplied chat template:INFO 02-16 10:04:24 api_server.py:756] NoneINFO 02-16 10:04:24 launcher.py:21] Available routes are:INFO 02-16 10:04:24 launcher.py:29] Route: /openapi.json, Methods: HEAD, GETINFO 02-16 10:04:24 launcher.py:29] Route: /docs, Methods: HEAD, GETINFO 02-16 10:04:24 launcher.py:29] Route: /docs/oauth2-redirect, Methods: HEAD, GETINFO 02-16 10:04:24 launcher.py:29] Route: /redoc, Methods: HEAD, GETINFO 02-16 10:04:24 launcher.py:29] Route: /health, Methods: GETINFO 02-16 10:04:24 launcher.py:29] Route: /ping, Methods: GET, POSTINFO 02-16 10:04:24 launcher.py:29] Route: /tokenize, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /detokenize, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /v1/models, Methods: GETINFO 02-16 10:04:24 launcher.py:29] Route: /version, Methods: GETINFO 02-16 10:04:24 launcher.py:29] Route: /v1/chat/completions, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /v1/completions, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /v1/embeddings, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /pooling, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /score, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /v1/score, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /rerank, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /v1/rerank, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /v2/rerank, Methods: POSTINFO 02-16 10:04:24 launcher.py:29] Route: /invocations, Methods: POSTINFO: Started server process [1]INFO: Waiting for application startup.INFO: Application startup complete.INFO: Uvicorn running on http://0.0.0.0:7869 (Press CTRL+C to quit)

API访问

以这个图片为例:

curl -X POST http://host:port/v1/chat/completions \-H "Content-Type: application/json" \-H "Authorization: Bearer 在命令行设置的api-key" \-d '{"model": "qwen2.5-VL-7B-Instruct","messages": [{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": [{"type": "image_url", "image_url": {"url": "https://modelscope.oss-cn-beijing.aliyuncs.com/resource/qwen.png"}},{"type": "text", "text": "图片里的文字是啥?"}]}]}'{"id":"chatcmpl-11cc39af-26d3-99e7-82a3-0ff4e93e4db8","object":"chat.completion","created":1739715835,"model":"qwen2.5-VL-7B-Instruct","choices":[{"index":0,"message":{"role":"assistant","reasoning_content":null,"content":"图片中的文字是“TONGYIQwen”。","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":72,"total_tokens":84,"completion_tokens":12,"prompt_tokens_details":null},"prompt_logprobs":null}%

base64方式:

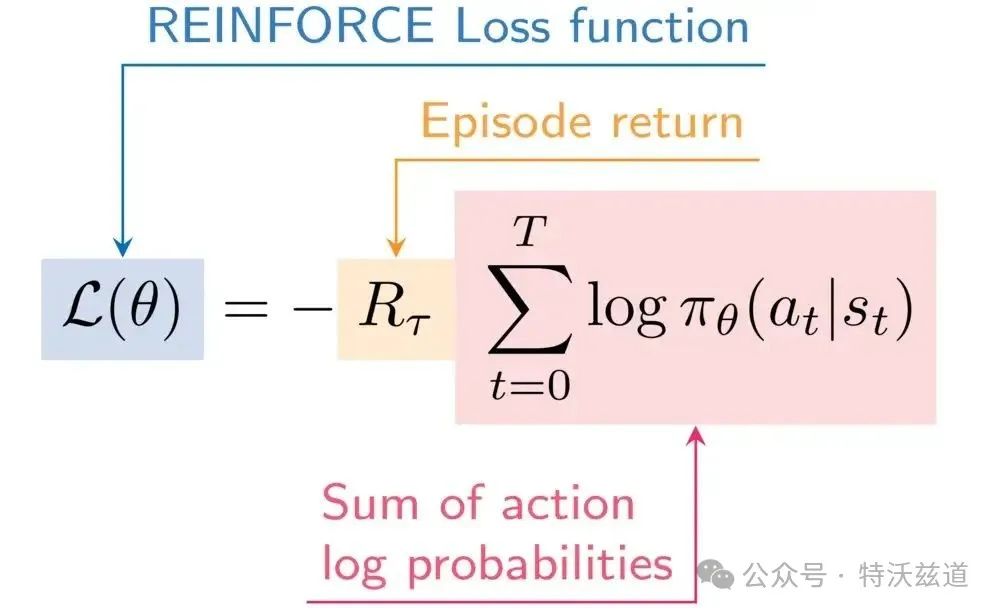

将图片

做base64编码后的内容,填入如下命令:

curl -X POST http://host:port/v1/chat/completions \-H "Content-Type: application/json" \-H "Authorization: Bearer 在命令行设置的api-key" \-d '{"model": "qwen2.5-VL-7B-Instruct","messages": [{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": [{"type": "image_url", "image_url": {"url": "data:image;base64,图片文件base64后的内容"}},{"type": "text", "text": "图片里的文字是啥?"}]}]}'{"id":"chatcmpl-ad67cdd7-e2ce-92a3-aa94-1bfbede01e58","object":"chat.completion","created":1739757353,"model":"qwen2.5-VL-7B-Instruct","choices":[{"index":0,"message":{"role":"assistant","reasoning_content":null,"content":"这张图展示了 REINFORCE 算法中的损失函数(Loss function),具体公式如下:\n\n\\[\n\\mathcal{L}(\\theta) = - R_{\\tau} \\sum_{t=0}^{T} \\log \\pi_{\\theta}(a_t | s_t)\n\\]\n\n其中:\n- \\( \\mathcal{L}(\\theta) \\) 表示损失函数。\n- \\( R_{\\tau} \\) 表示该episode的回报。\n- \\(\\sum_{t=0}^{T} \\log \\pi_{\\theta}(a_t | s_t)\\) 表示每个动作的概率的对数和。","tool_calls":[]},"logprobs":null,"finish_reason":"stop","stop_reason":null}],"usage":{"prompt_tokens":830,"total_tokens":972,"completion_tokens":142,"prompt_tokens_details":null},"prompt_logprobs":null}%

返回内容:

这张图展示了 REINFORCE 算法中的损失函数(Loss function),具体公式如下:

其中:

-

表示损失函数。

-

表示该episode的回报。

-

表示每个动作的概率的对数和。

webui访问

将官网的 web_demo_mm.py 修改下,改为请求直接转发到 api 接口,且将图片本地地址 file:///path/to/upload/filename 中的 /path/to/upload/filename 本地路径的文件 base64 结果保证为 base64 格式的 url 发送给 api 接口。这样即有 api 服务,又有 web 服务的容器就可用了。k8s 配置中的关键启动命令:

command:- "sh"- "-c"- |vllm serve /models/qwen/Qwen2___5-VL-7B-Instruct \--served-model-name qwen2.5-VL-7B-Instruct \--api-key sk-mOzPiNBIJFM4msQktNypl9PolWL58NDc47MF8szfSRE2wskM \--port 7869 \--dtype bfloat16 \--limit-mm-per-prompt image=1,video=0 \--gpu-memory-utilization 0.9 \--swap-space 20 \--max-model-len 24576 \--block-size 16 & # 放到后台,否则会阻塞后面的命令执行python webui-call-api-only.py --server-port 7860

遇到的问题

transformers 不识别 qwen2_5_vl

➜ k logs -f -n aitryouts qwen-vl-5fcb84cf6-ldtdjINFO 02-16 08:31:21 __init__.py:190] Automatically detected platform cuda.INFO 02-16 08:31:21 api_server.py:840] vLLM API server version 0.7.2。。。INFO 02-16 08:31:21 api_server.py:206] Started engine process with PID 77Traceback (most recent call last):File "/opt/conda/lib/python3.11/site-packages/transformers/models/auto/configuration_auto.py", line 1071, in from_pretrainedconfig_class = CONFIG_MAPPING[config_dict["model_type"]]~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^^^^^^^^^File "/opt/conda/lib/python3.11/site-packages/transformers/models/auto/configuration_auto.py", line 773, in __getitem__raise KeyError(key)KeyError: 'qwen2_5_vl'During handling of the above exception, another exception occurred:Traceback (most recent call last):File "/opt/conda/bin/vllm", line 8, in <module>sys.exit(main())^^^^^^。。。File "/opt/conda/lib/python3.11/site-packages/vllm/transformers_utils/config.py", line 245, in get_configraise eFile "/opt/conda/lib/python3.11/site-packages/vllm/transformers_utils/config.py", line 225, in get_configconfig = AutoConfig.from_pretrained(^^^^^^^^^^^^^^^^^^^^^^^^^^^File "/opt/conda/lib/python3.11/site-packages/transformers/models/auto/configuration_auto.py", line 1073, in from_pretrainedraise ValueError(ValueError: The checkpoint you are trying to load has model type `qwen2_5_vl` but Transformers does not recognize this architecture. This could be because of an issue with the checkpoint, or because your version of Transformers is out of date.You can update Transformers with the command `pip install --upgrade transformers`. If this does not work, and the checkpoint is very new, then there may not be a release version that supports this model yet. In this case, you can get the most up-to-date code by installing Transformers from source with the command `pip install git+https://github.com/huggingface/transformers.git`

这就是官网中提到的 KeyError: 'qwen2_5_vl'; 但是镜像中的 vLLM 和 tansformers 库已经都很新了:

root@10-9-30-34:~# docker run --rm -it r.lccomputing.com/lcc/vllm:0.7.2_cu121 bashroot@a5c01aea0b47:/home# pip list | grep -E 'vllm|transformers'transformers 4.48.3vllm 0.7.2

用qwen-vl官方的镜像 qwenllm/qwenvl:2.5-cu121 后,好了:

root@qwen-vl-84f5f8b99f-j7hfx:/data/shared/Qwen# pip list | grep -E 'vllm|transformers'transformers 4.49.0.dev0transformers-stream-generator 0.0.4vllm 0.7.2.dev56+gbf3b79ef

CUDA OOM

ERROR 02-16 09:04:35 engine.py:389] CUDA out of memory. Tried to allocate 9.03 GiB. GPU 0 has a total capacity of 23.65 GiB of which 5.69 GiB is free. Process 698854 has 17.95 GiB memory in use. Of the allocated memory 17.35 GiB is allocated by PyTorch, and 152.49 MiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True to avoid fragmentation. See documentation for Memory Management (https://pytorch.org/docs/stable/notes/cuda.html#environment-variables)ERROR 02-16 09:04:35 engine.py:389] Traceback (most recent call last):Process SpawnProcess-1:

以下参数调整无效,仍然OOM:

-

--dtype bfloat16 改成 float16

-

--limit-mm-per-prompt image=5,video=5 改为 image=3,video=3

直到修改 max_model_len=4096 等值,才没有 OOM 了

context length 警告

WARNING 02-16 10:03:38 model_runner.py:1288] Computed max_num_seqs (min(256, 5120 // 98304)) to be less than 1. Setting it to the minimum value of 1.It looks like you are trying to rescale already rescaled images. If the input images have pixel values between 0 and 1, set `do_rescale=False` to avoid rescaling them again.WARNING 02-16 10:03:48 profiling.py:187] The context length (5120) of the model is too short to hold the multi-modal embeddings in the worst case (98304 tokens in total, out of which {'image': 49152, 'video': 49152} are reserved for multi-modal embeddings). This may cause certain multi-modal inputs to fail during inference, even when the input text is short. To avoid this, you should increase `max_model_len`, reduce `max_num_seqs`, and/or reduce `mm_counts`.

根据 Deepseek 的回答解决了, 以下是 Deepseek 的回答内容, 一开头即切中要害:

根据最新的错误日志和测试结果,问题核心在于 多模态嵌入的显存需求与KV缓存容量之间的冲突。以下是分步优化方案:

关键矛盾分析

-

多模态嵌入需求:

-

模型要求为图像和视频预留

16384 + 32768 = 49152 tokens,但max_model_len=32768的上下文长度不足(需满足总需求 <= max_model_len)。

-

-

KV缓存容量限制:

-

当设置

max_model_len=32768时,KV缓存所需显存超过GPU容量(24GB),导致OOM。

-

优化策略

1. 显存分配平衡

目标:在模型权重加载(约15.6GB)和KV缓存之间合理分配显存。

ounter(lineounter(lineounter(lineounter(line# 调整参数示例--max-model-len 24576 \ # 介于16384和32768之间--gpu-memory-utilization 0.9 \ # 提升显存利用率至90%(24GB * 0.9 = 21.6GB)--block-size 16 \ # 减小KV缓存块大小(默认16,可选8)

2. 严格限制多模态Token

ounter(lineounter(line# 进一步降低图像/视频的Token配额(默认值可能过高)--limit-mm-per-prompt image=1024,video=2048 \ # 总配额从49152降到3072

3. 强制关闭视频支持(若无视频输入)

ounter(lineounter(line# 如果不需要处理视频,直接禁用视频Token预留--limit-mm-per-prompt video=0

4. 使用量化技术(终极方案)

ounter(lineounter(lineounter(lineounter(line# 加载AWQ量化模型(显存需求降低30-40%)--model /path/to/qwen2.5-vl-7b-instruct-awq \--quantization awq \--max-model-len 32768 # 量化后显存足够支持更大上下文

完整启动命令示例

ounter(lineounter(lineounter(lineounter(lineounter(lineounter(lineounter(lineounter(lineounter(lineounter(lineounter(linevllm serve /models/qwen/Qwen2___5-VL-7B-Instruct \--served-model-name qwen2.5-VL-7B-Instruct \--api-key sk-mOzPiNBIJFM4msQktNypl9PolWL58NDc47MF8szfSRE2wskM \--port 7869 \--dtype bfloat16 \--max-model-len 24576 \ # 平衡上下文长度和显存--gpu-memory-utilization 0.9 \ # 显存利用率提升到90%--block-size 8 \ # 减小KV缓存块大小(可选)--limit-mm-per-prompt image=1024,video=0 \ # 仅支持图像,严格限制Token--max-num-seqs 2 \ # 限制并发请求数--enforce-eager # 避免动态批处理开销

参数调整验证逻辑

-

KV缓存容量计算:

kv_cache_size = 2 * num_layers * hidden_size * block_size * dtype_size# 假设模型参数:# num_layers=32, hidden_size=4096, block_size=8, dtype_size=2 (bfloat16)kv_cache_size = 2 * 32 * 4096 * 8 * 2 = 4.19 MB/blocktotal_blocks = 23008 // 8 = 2876 blocks # 日志中显示23008 tokenstotal_kv_cache = 2876 * 4.19 MB ≈ 11.8 GB-

总显存占用:模型权重(15.6GB) + KV缓存(11.8GB)≈ 27.4GB(超过24GB)

-

必须通过量化或降低

block_size/max_model_len减少显存需求。

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

-

多模态Token容量验证:

required_tokens = image_tokens + video_tokens + text_tokens# 调整后:1024(image) + 0(video) + 2048(text) = 3072 tokensassert required_tokens <= max_model_len # 3072 <= 24576 ✅- ounter(line

- ounter(line

- ounter(line

附加调试建议

-

显存实时监控:

watch -n 0.5 nvidia-smi # 高频率监控显存波动- ounter(line

-

最小化输入测试:

# 使用极简输入验证功能curl ... -d '{"messages": [{"role": "user","content": [{"type": "text", "text": "<image> Describe briefly"},{"type": "image_url", "image_url": {"url": "URL"}}]}]}'- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

- ounter(line

-

升级vLLM:

pip install -U vllm # 确保版本≥0.4.2(修复多模态内存计算)- ounter(line

最终选择

-

若需完整功能:使用量化模型 +

max_model_len=32768。 -

若接受功能裁剪:当前参数组合可运行,但需严格限制输入复杂度。

API文件参数

不用webui,命令行直接发起如下 curl 请求没响应:

curl -X POST http://host:port/v1/chat/completions \-H "Content-Type: application/json" \-H "Authorization: Bearer sk-设置的apikey" \-d '{"model": "qwen2.5-VL-7B-Instruct","messages": [{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": [{"type": "image_url", "image_url": {"url": "file:///tmp/gradio/94106baa19cfb3a9ce9acd4c5a47eae99d40d0e6e457291abefdaf00fdeeee54/ReinforceLossFunction.jpeg"}},{"type": "text", "text": "图片里的文字是啥?"}]}]}'

API服务端报错:

INFO: 10.196.84.25:51066 - "POST /v1/chat/completions HTTP/1.1" 500 Internal Server Error服务端的文件 /tmp/gradio/94106baa19cfb3a9ce9acd4c5a47eae99d40d0e6e457291abefdaf00fdeeee54/ReinforceLossFunction.jpeg 是在的。

--- 以上是 Deepseek 的回答

然后实测配置成如下是没有这个警告的:

vllm serve /models/qwen/Qwen2___5-VL-7B-Instruct \--served-model-name qwen2.5-VL-7B-Instruct \--api-key sk-mOzPiNBIJFM4msQktNypl9PolWL58NDc47MF8szfSRE2wskM \--port 7869 \--dtype bfloat16 \--limit-mm-per-prompt image=1,video=0 \--gpu-memory-utilization 0.9 \--swap-space 20 \--max-model-len 24576 \--block-size 16 || true &&

但是把 limit-mm-per-prompt 从 image=1,video=0 调整为 image=5,video=0

警告又出现了:

ounter(lineounter(lineWARNING 02-17 03:21:07 model_runner.py:1288] Computed max_num_seqs (min(256, 24576 // 81920)) to be less than 1. Setting it to the minimum value of 1.WARNING 02-17 03:21:12 profiling.py:187] The context length (24576) of the model is too short to hold the multi-modal embeddings in the worst case (81920 tokens in total, out of which {'image': 81920} are reserved for multi-modal embeddings). This may cause certain multi-modal inputs to fail during inference, even when the input text is short. To avoid this, you should increase `max_model_len`, reduce `max_num_seqs`, and/or reduce `mm_counts`.

可见 worst case 是根据 limit-mm-per-prompt 计算得来的

如何学习AI大模型?

我在一线互联网企业工作十余年里,指导过不少同行后辈。帮助很多人得到了学习和成长。

我意识到有很多经验和知识值得分享给大家,也可以通过我们的能力和经验解答大家在人工智能学习中的很多困惑,所以在工作繁忙的情况下还是坚持各种整理和分享。但苦于知识传播途径有限,很多互联网行业朋友无法获得正确的资料得到学习提升,故此将并将重要的AI大模型资料包括AI大模型入门学习思维导图、精品AI大模型学习书籍手册、视频教程、实战学习等录播视频免费分享出来。

第一阶段: 从大模型系统设计入手,讲解大模型的主要方法;

第二阶段: 在通过大模型提示词工程从Prompts角度入手更好发挥模型的作用;

第三阶段: 大模型平台应用开发借助阿里云PAI平台构建电商领域虚拟试衣系统;

第四阶段: 大模型知识库应用开发以LangChain框架为例,构建物流行业咨询智能问答系统;

第五阶段: 大模型微调开发借助以大健康、新零售、新媒体领域构建适合当前领域大模型;

第六阶段: 以SD多模态大模型为主,搭建了文生图小程序案例;

第七阶段: 以大模型平台应用与开发为主,通过星火大模型,文心大模型等成熟大模型构建大模型行业应用。

👉学会后的收获:👈

• 基于大模型全栈工程实现(前端、后端、产品经理、设计、数据分析等),通过这门课可获得不同能力;

• 能够利用大模型解决相关实际项目需求: 大数据时代,越来越多的企业和机构需要处理海量数据,利用大模型技术可以更好地处理这些数据,提高数据分析和决策的准确性。因此,掌握大模型应用开发技能,可以让程序员更好地应对实际项目需求;

• 基于大模型和企业数据AI应用开发,实现大模型理论、掌握GPU算力、硬件、LangChain开发框架和项目实战技能, 学会Fine-tuning垂直训练大模型(数据准备、数据蒸馏、大模型部署)一站式掌握;

• 能够完成时下热门大模型垂直领域模型训练能力,提高程序员的编码能力: 大模型应用开发需要掌握机器学习算法、深度学习框架等技术,这些技术的掌握可以提高程序员的编码能力和分析能力,让程序员更加熟练地编写高质量的代码。

1.AI大模型学习路线图

2.100套AI大模型商业化落地方案

3.100集大模型视频教程

4.200本大模型PDF书籍

5.LLM面试题合集

6.AI产品经理资源合集

👉获取方式:

😝有需要的小伙伴,可以保存图片到wx扫描二v码免费领取【保证100%免费】🆓

666

666

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?