https://dev.intelrealsense.com/docs/rs-hello-realsense

👆 The code on the page above is for reference only; don't use it. Download the source code instead (link below 👇).

First install the Intel RealSense SDK 2.0 and download the source code.


rs-hello-realsense (C++) (open the camera and print the depth at the image center)

Demonstrates the basics of connecting to a RealSense device and using depth data

realsense_test.cpp

#include <stdio.h>

#include <iostream>

#include <librealsense2/rs.hpp> // Include Intel RealSense Cross Platform API

int main() {

// Create a Pipeline - this serves as a top-level API for streaming and processing frames

rs2::pipeline p;

// Configure and start the pipeline

p.start();

// Block program until frames arrive

rs2::frameset frames = p.wait_for_frames();

// Try to get a frame of a depth image

rs2::depth_frame depth = frames.get_depth_frame();

// Get the depth frame's dimensions

int width = depth.get_width(); // pixel dimensions are integers; get_distance() takes int coordinates

int height = depth.get_height();

// Query the distance from the camera to the object in the center of the image

float dist_to_center = depth.get_distance(width / 2, height / 2);

// Print the distance

std::cout << "The camera is facing an object " << dist_to_center << " meters away" << std::endl;

return 0;

}

Output:

The camera is facing an object 3.602 meters away

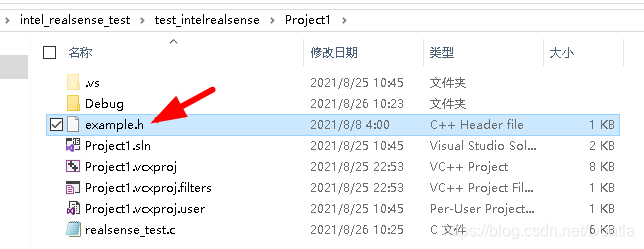
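For the Z16 depth format, depth_frame::get_distance(x, y) amounts to reading the 16-bit value stored at pixel (x, y) and multiplying it by the sensor's depth scale (meters per raw unit). The sketch below reproduces that arithmetic on a plain buffer so it can be tried without a camera. The 0.001 m/unit scale used in the example is an assumption (a common default on D400-series cameras); on real hardware, query it with rs2::depth_sensor::get_depth_scale(). The function name center_distance_m is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: distance in meters at the image center of a Z16 depth buffer.
// Z16 stores one uint16_t per pixel, row by row, so pixel (x, y) sits at y * width + x.
float center_distance_m(const std::vector<std::uint16_t>& z16,
                        int width, int height, float depth_scale)
{
    assert(width > 0 && height > 0);
    assert(z16.size() == static_cast<std::size_t>(width) * height);
    const int x = width / 2;   // integer pixel coordinates, as in the example above
    const int y = height / 2;
    const std::uint16_t raw = z16[static_cast<std::size_t>(y) * width + x];
    return raw * depth_scale;  // raw units -> meters; a raw value of 0 means "no depth"
}
```

For example, a 640x480 frame whose center pixel (320, 240) holds 3602 gives 3602 × 0.001 = 3.602 m, the kind of value printed above.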

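Distance (C) (testing the depth stream) (the installed SDK lacks example.h, so it wouldn't build at first [argh, the file isn't bundled with the installer; you only get it by downloading the source code])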

Equivalent to hello-realsense but rewritten for C users

Intel® RealSense™ SDK 2.0 (v2.49.0)
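The main practical difference from the C++ version is error handling: C functions report failures through a trailing rs2_error** argument instead of throwing, and the check_error() helper from example.h inspects that pointer after each call (roughly: print the failed function and message, then exit). The sketch below mimics the same out-parameter convention in self-contained C++; demo_error, demo_divide and demo_check are hypothetical names, not librealsense APIs (in the real SDK an error object is freed with rs2_free_error).

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical stand-in for rs2_error: the name of the failed call and a message.
struct demo_error {
    std::string function;
    std::string message;
};

// A "C-style" call: the result is the return value, failures are reported through *error.
int demo_divide(int a, int b, demo_error** error)
{
    assert(error != nullptr);
    *error = nullptr;  // clear any previous error first
    if (b == 0) {
        *error = new demo_error{"demo_divide", "division by zero"};
        return 0;
    }
    return a / b;
}

// Mirrors the idea of check_error(): report and signal failure if an error was set.
// Unlike the example.h helper, this returns false instead of calling exit().
bool demo_check(demo_error* e)
{
    if (!e) return true;
    std::fprintf(stderr, "error calling %s: %s\n", e->function.c_str(), e->message.c_str());
    delete e;  // the caller owns the error object
    return false;
}
```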

Just copy example.h (from the source code) into the project folder, next to the .c file.

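Then build and run. It worked with essentially no changes, apart from one small hiccup: my realsense2.dll was version 2.48, but the newly built program required 2.49, so the first run failed. Copying the 2.49 realsense2.dll over fixed it.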
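The 2.48 vs 2.49 hiccup is a version check at work: RS2_API_VERSION (from the headers you compile against) encodes the version as major × 10000 + minor × 100 + patch and is passed to rs2_create_context(), while rs2_get_api_version() reports the version of the realsense2.dll actually loaded. The sketch decodes that integer and applies a compatibility rule as I understand it from the librealsense sources (same major version, and the headers' minor version not newer than the DLL's); treat that rule as an assumption and confirm it in the SDK source if it matters. ApiVersion, decode_api_version and is_runtime_compatible are hypothetical names.

```cpp
#include <cassert>

// Hypothetical helper type: a decoded librealsense API version.
struct ApiVersion {
    int major;
    int minor;
    int patch;
};

// Decode an integer encoded as major * 10000 + minor * 100 + patch (e.g. 24900 -> 2.49.0).
ApiVersion decode_api_version(int encoded)
{
    assert(encoded >= 0);
    return ApiVersion{encoded / 10000, (encoded / 100) % 100, encoded % 100};
}

// Assumed rule: the runtime DLL must have the same major version and a minor version
// at least as new as the headers the program was compiled against.
bool is_runtime_compatible(int compiled_version, int runtime_version)
{
    const ApiVersion built = decode_api_version(compiled_version);
    const ApiVersion runtime = decode_api_version(runtime_version);
    return built.major == runtime.major && built.minor <= runtime.minor;
}
```

Under this rule, a program built against 2.49 headers running with the 2.48 DLL gives is_runtime_compatible(24900, 24800) == false, which matches the failed first run.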

realsense_test.c

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

/* Include the librealsense C header files */

#include <librealsense2/rs.h>

#include <librealsense2/h/rs_pipeline.h>

#include <librealsense2/h/rs_option.h>

#include <librealsense2/h/rs_frame.h>

#include "example.h"

#include <stdlib.h>

#include <stdint.h>

#include <stdio.h>

// These parameters are reconfigurable //

#define STREAM RS2_STREAM_DEPTH // rs2_stream is a type of data provided by a RealSense device //

#define FORMAT RS2_FORMAT_Z16 // rs2_format identifies how binary data is encoded within a frame //

#define WIDTH 640 // Defines the number of columns for each frame or zero for auto resolve//

#define HEIGHT 0 // Defines the number of lines for each frame or zero for auto resolve //

#define FPS 30 // Defines the rate of frames per second //

#define STREAM_INDEX 0 // Defines the stream index, used for multiple streams of the same type //

int main()

{

rs2_error* e = 0;

// Create a context object. This object owns the handles to all connected realsense devices.

// The returned object should be released with rs2_delete_context(...)

rs2_context* ctx = rs2_create_context(RS2_API_VERSION, &e);

check_error(e);

/* Get a list of all the connected devices. */

// The returned object should be released with rs2_delete_device_list(...)

rs2_device_list* device_list = rs2_query_devices(ctx, &e);

check_error(e);

int dev_count = rs2_get_device_count(device_list, &e);

check_error(e);

printf("There are %d connected RealSense devices.\n", dev_count);

if (0 == dev_count)

return EXIT_FAILURE;

// Get the first connected device

// The returned object should be released with rs2_delete_device(...)

rs2_device* dev = rs2_create_device(device_list, 0, &e);

check_error(e);

print_device_info(dev);

// Create a pipeline to configure, start and stop camera streaming

// The returned object should be released with rs2_delete_pipeline(...)

rs2_pipeline* pipeline = rs2_create_pipeline(ctx, &e);

check_error(e);

// Create a config instance, used to specify hardware configuration

// The returned object should be released with rs2_delete_config(...)

rs2_config* config = rs2_create_config(&e);

check_error(e);

// Request a specific configuration

rs2_config_enable_stream(config, STREAM, STREAM_INDEX, WIDTH, HEIGHT, FORMAT, FPS, &e);

check_error(e);

// Start the pipeline streaming

// The returned object should be released with rs2_delete_pipeline_profile(...)

rs2_pipeline_profile* pipeline_profile = rs2_pipeline_start_with_config(pipeline, config, &e);

if (e)

{

printf("The connected device doesn't support depth streaming!\n");

exit(EXIT_FAILURE);

}

while (1)

{

// This call waits until a new composite_frame is available

// composite_frame holds a set of frames. It is used to prevent frame drops

// The returned object should be released with rs2_release_frame(...)

rs2_frame* frames = rs2_pipeline_wait_for_frames(pipeline, RS2_DEFAULT_TIMEOUT, &e);

check_error(e);

// Returns the number of frames embedded within the composite frame

int num_of_frames = rs2_embedded_frames_count(frames, &e);

check_error(e);

int i;

for (i = 0; i < num_of_frames; ++i)

{

// The returned object should be released with rs2_release_frame(...)

rs2_frame* frame = rs2_extract_frame(frames, i, &e);

check_error(e);

// Check if the given frame can be extended to depth frame interface

// Accept only depth frames and skip other frames

if (0 == rs2_is_frame_extendable_to(frame, RS2_EXTENSION_DEPTH_FRAME, &e))

{

rs2_release_frame(frame); // non-depth frames must be released too, otherwise they leak

continue;

}

// Get the depth frame's dimensions

int width = rs2_get_frame_width(frame, &e);

check_error(e);

int height = rs2_get_frame_height(frame, &e);

check_error(e);

// Query the distance from the camera to the object in the center of the image

float dist_to_center = rs2_depth_frame_get_distance(frame, width / 2, height / 2, &e);

check_error(e);

// Print the distance

printf("The camera is facing an object %.3f meters away.\n", dist_to_center);

rs2_release_frame(frame);

}

rs2_release_frame(frames);

}

// Stop the pipeline streaming

rs2_pipeline_stop(pipeline, &e);

check_error(e);

// Release resources

rs2_delete_pipeline_profile(pipeline_profile);

rs2_delete_config(config);

rs2_delete_pipeline(pipeline);

rs2_delete_device(dev);

rs2_delete_device_list(device_list);

rs2_delete_context(ctx);

return EXIT_SUCCESS;

}

Output:
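Every rs2_frame* returned by rs2_extract_frame() has to be handed back with rs2_release_frame(), including on the path that skips non-depth frames. In C++ this bookkeeping can be made automatic with std::unique_ptr and a custom deleter. To keep the sketch compilable without the SDK, fake_frame and fake_release_frame are hypothetical stand-ins for rs2_frame and rs2_release_frame; with the real SDK the deleter would call rs2_release_frame instead.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical stand-in for rs2_frame.
struct fake_frame {
    bool is_depth;
};

int g_released = 0;  // counts releases so the behavior can be checked

// Hypothetical stand-in for rs2_release_frame().
void fake_release_frame(fake_frame* f)
{
    ++g_released;
    delete f;
}

// Owning handle: the frame is released automatically when the handle goes out of scope.
using frame_handle = std::unique_ptr<fake_frame, void (*)(fake_frame*)>;

// Counts the depth frames in a "composite" set, skipping the others with continue.
// Each extracted frame is released on every path, including the continue path.
int count_depth_frames(const std::vector<bool>& composite)
{
    int depth_frames = 0;
    for (bool is_depth : composite) {
        frame_handle frame(new fake_frame{is_depth}, &fake_release_frame);
        assert(frame != nullptr);
        if (!frame->is_depth)
            continue;  // the handle's destructor still releases the frame here
        ++depth_frames;
    }
    return depth_frames;
}
```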

Color (C) (testing the color stream)

Demonstrates how to stream color data and print some frame information

realsense_test.c

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

/* Include the librealsense C header files */

#include <librealsense2/rs.h>

#include <librealsense2/h/rs_pipeline.h>

#include <librealsense2/h/rs_frame.h>

#include <stdlib.h>

#include <stdint.h>

#include <stdio.h>

#include "example.h"

// These parameters are reconfigurable //

#define STREAM RS2_STREAM_COLOR // rs2_stream is a type of data provided by a RealSense device //

#define FORMAT RS2_FORMAT_RGB8 // rs2_format identifies how binary data is encoded within a frame //

#define WIDTH 640 // Defines the number of columns for each frame //

#define HEIGHT 480 // Defines the number of lines for each frame //

#define FPS 30 // Defines the rate of frames per second //

#define STREAM_INDEX 0 // Defines the stream index, used for multiple streams of the same type //

int main()

{

rs2_error* e = 0;

// Create a context object. This object owns the handles to all connected realsense devices.

// The returned object should be released with rs2_delete_context(...)

rs2_context* ctx = rs2_create_context(RS2_API_VERSION, &e);

check_error(e);

/* Get a list of all the connected devices. */

// The returned object should be released with rs2_delete_device_list(...)

rs2_device_list* device_list = rs2_query_devices(ctx, &e);

check_error(e);

int dev_count = rs2_get_device_count(device_list, &e);

check_error(e);

printf("There are %d connected RealSense devices.\n", dev_count);

if (0 == dev_count)

return EXIT_FAILURE;

// Get the first connected device

// The returned object should be released with rs2_delete_device(...)

rs2_device* dev = rs2_create_device(device_list, 0, &e);

check_error(e);

print_device_info(dev);

// Create a pipeline to configure, start and stop camera streaming

// The returned object should be released with rs2_delete_pipeline(...)

rs2_pipeline* pipeline = rs2_create_pipeline(ctx, &e);

check_error(e);

// Create a config instance, used to specify hardware configuration

// The returned object should be released with rs2_delete_config(...)

rs2_config* config = rs2_create_config(&e);

check_error(e);

// Request a specific configuration

rs2_config_enable_stream(config, STREAM, STREAM_INDEX, WIDTH, HEIGHT, FORMAT, FPS, &e);

check_error(e);

// Start the pipeline streaming

// The returned object should be released with rs2_delete_pipeline_profile(...)

rs2_pipeline_profile* pipeline_profile = rs2_pipeline_start_with_config(pipeline, config, &e);

if (e)

{

printf("The connected device doesn't support color streaming!\n");

exit(EXIT_FAILURE);

}

while (1)

{

// This call waits until a new composite_frame is available

// composite_frame holds a set of frames. It is used to prevent frame drops

// The returned object should be released with rs2_release_frame(...)

rs2_frame* frames = rs2_pipeline_wait_for_frames(pipeline, RS2_DEFAULT_TIMEOUT, &e);

check_error(e);

// Returns the number of frames embedded within the composite frame

int num_of_frames = rs2_embedded_frames_count(frames, &e);

check_error(e);

int i;

for (i = 0; i < num_of_frames; ++i)

{

// The returned object should be released with rs2_release_frame(...)

rs2_frame* frame = rs2_extract_frame(frames, i, &e);

check_error(e);

const uint8_t* rgb_frame_data = (const uint8_t*)(rs2_get_frame_data(frame, &e));

check_error(e);

unsigned long long frame_number = rs2_get_frame_number(frame, &e);

check_error(e);

rs2_time_t frame_timestamp = rs2_get_frame_timestamp(frame, &e);

check_error(e);

// Specifies the clock in relation to which the frame timestamp was measured

rs2_timestamp_domain frame_timestamp_domain = rs2_get_frame_timestamp_domain(frame, &e);

check_error(e);

const char* frame_timestamp_domain_str = rs2_timestamp_domain_to_string(frame_timestamp_domain);

rs2_metadata_type frame_metadata_time_of_arrival = rs2_get_frame_metadata(frame, RS2_FRAME_METADATA_TIME_OF_ARRIVAL, &e);

check_error(e);

printf("RGB frame arrived.\n");

printf("First 10 bytes: ");

int j; // separate counter so the outer loop's i is not shadowed

for (j = 0; j < 10; ++j)

printf("%02x ", rgb_frame_data[j]);

printf("\nFrame No: %llu\n", frame_number);

printf("Timestamp: %f\n", frame_timestamp);

printf("Timestamp domain: %s\n", frame_timestamp_domain_str);

printf("Time of arrival: %lld\n\n", frame_metadata_time_of_arrival);

rs2_release_frame(frame);

}

rs2_release_frame(frames);

}

// Stop the pipeline streaming

rs2_pipeline_stop(pipeline, &e);

check_error(e);

// Release resources

rs2_delete_pipeline_profile(pipeline_profile);

rs2_delete_config(config);

rs2_delete_pipeline(pipeline);

rs2_delete_device(dev);

rs2_delete_device_list(device_list);

rs2_delete_context(ctx);

return EXIT_SUCCESS;

}

Output:
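With RS2_FORMAT_RGB8 the frame data is packed row by row, three bytes per pixel in R, G, B order, so the "First 10 bytes" line shows the R, G, B values of the first three pixels plus the red byte of the fourth. The sketch below shows that byte-offset arithmetic and reproduces the "%02x " formatting. rgb8_offset and hex_bytes are hypothetical helpers, and the tightly packed layout (stride = width × 3) is an assumption; a real frame reports its stride via rs2_get_frame_stride_in_bytes().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <string>
#include <vector>

// Byte offset of channel c (0 = R, 1 = G, 2 = B) of pixel (x, y) in a tightly packed RGB8 image.
std::size_t rgb8_offset(int x, int y, int c, int width)
{
    assert(x >= 0 && y >= 0 && c >= 0 && c < 3 && x < width);
    return (static_cast<std::size_t>(y) * width + x) * 3 + c;
}

// Formats the first n bytes the same way as the C example: "%02x " per byte.
std::string hex_bytes(const std::vector<std::uint8_t>& data, std::size_t n)
{
    std::string out;
    char buf[4];  // two hex digits, a space and the terminating NUL
    for (std::size_t i = 0; i < n && i < data.size(); ++i) {
        std::snprintf(buf, sizeof buf, "%02x ", data[i]);
        out += buf;
    }
    return out;
}
```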

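Capture (C++) (displays the color and depth images) (ugh, one of the libraries the official example links against has to be compiled yourself!) (and again, don't use the incomplete code on the website; use the code from the source tree!)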

Shows how to synchronize and render multiple streams: left, right, depth and RGB streams

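example.hpp can't simply be copied into the source folder; it has to be included from its location by specifying its path.

Ugh, all kinds of errors.

So I stopped using the files from the installer and used the source code directly.

A little tip: a folder named include is very likely one you need to add to your include paths... 😅 for example, this one.

With a colleague's help I finally figured out the cause of the errors: headers and libraries for other dependencies, such as OpenCV, had not been added...

The contents of these three files all need to be added. To save effort, rather than entering them by hand, I added all three directly in the Property Manager (the order doesn't matter!).

It still failed to build, with errors!

Judging from the error messages, the problem was related to GLFW.

Looking at the third-party libraries in the SDK, I found that this one had not been built at all (no .lib or .dll had been generated).

Fortunately it comes with a project file, so I opened it and built it directly in Visual Studio.

The build produced glfw-imgui.lib.

In the project's Property Pages, add the file name to Linker > Input > Additional Dependencies and its directory to Linker > General > Additional Library Directories.

Then build and run the program. Done!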

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include "example.hpp" // Include short list of convenience functions for rendering

// Capture Example demonstrates how to

// capture depth and color video streams and render them to the screen

int main(int argc, char* argv[]) try

{

rs2::log_to_console(RS2_LOG_SEVERITY_ERROR);

// Create a simple OpenGL window for rendering:

window app(1280, 720, "RealSense Capture Example");

// Declare depth colorizer for pretty visualization of depth data

rs2::colorizer color_map;

// Declare rates printer for showing streaming rates of the enabled streams.

rs2::rates_printer printer;

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Start streaming with default recommended configuration

// The default video configuration contains Depth and Color streams

// If a device is capable of streaming IMU data, both Gyro and Accelerometer are enabled by default

pipe.start();

while (app) // Application still alive?

{

rs2::frameset data = pipe.wait_for_frames(). // Wait for next set of frames from the camera

apply_filter(printer). // Print each enabled stream frame rate

apply_filter(color_map); // Find and colorize the depth data

// The show method, when applied to a frameset, breaks it into frames and uploads each frame into a GL texture

// Each texture is displayed in a different viewport according to its unique stream id

app.show(data);

}

return EXIT_SUCCESS;

}

catch (const rs2::error& e)

{

std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl;

return EXIT_FAILURE;

}

catch (const std::exception& e)

{

std::cerr << e.what() << std::endl;

return EXIT_FAILURE;

}

Output:
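rs2::colorizer converts 16-bit depth into an 8-bit RGB image that can be uploaded as a texture. Its real algorithm (by default a histogram-equalized color map) is more elaborate; the sketch below only illustrates the basic idea with a linear grayscale mapping over an assumed near/far range given in raw depth units. depth_to_gray and its parameters are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Maps a raw Z16 depth value to an 8-bit intensity: near -> 0, far -> 255, linear in between.
// A raw value of 0 means "no depth data" and is mapped to 0 (black).
std::uint8_t depth_to_gray(std::uint16_t raw, std::uint16_t near_raw, std::uint16_t far_raw)
{
    assert(far_raw > near_raw);
    if (raw == 0 || raw <= near_raw) return 0;
    if (raw >= far_raw) return 255;
    // Integer arithmetic: (raw - near) / (far - near) scaled to 0..255, truncated.
    return static_cast<std::uint8_t>((raw - near_raw) * 255 / (far_raw - near_raw));
}
```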

imshow (C++)

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include <opencv2/opencv.hpp> // Include OpenCV API

int main(int argc, char* argv[]) try

{

// Declare depth colorizer for pretty visualization of depth data

//rs2::colorizer color_map;

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Start streaming with default recommended configuration

pipe.start();

using namespace cv;

const auto window_name = "Display Image";

namedWindow(window_name, WINDOW_AUTOSIZE);

while (waitKey(1) < 0 && getWindowProperty(window_name, WND_PROP_AUTOSIZE) >= 0)

{

rs2::frameset data = pipe.wait_for_frames(); // Wait for next set of frames from the camera

//rs2::frame depth = data.get_depth_frame().apply_filter(color_map);

rs2::frame color = data.get_color_frame();

// Query frame size (width and height)

//const int w = depth.as<rs2::video_frame>().get_width();

const int w = color.as<rs2::video_frame>().get_width();

//const int h = depth.as<rs2::video_frame>().get_height();

const int h = color.as<rs2::video_frame>().get_height();

// Create OpenCV matrix of size (w,h) from the color data

//Mat image(Size(w, h), CV_8UC3, (void*)depth.get_data(), Mat::AUTO_STEP);

Mat image(Size(w, h), CV_8UC3, (void*)color.get_data(), Mat::AUTO_STEP);

// Update the window with new data

imshow(window_name, image);

}

return EXIT_SUCCESS;

}

catch (const rs2::error& e)

{

std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl;

return EXIT_FAILURE;

}

catch (const std::exception& e)

{

std::cerr << e.what() << std::endl;

return EXIT_FAILURE;

}

Why is the channel order reversed?

After adding a cvtColor call (converting the channels from RGB to BGR), the image displays correctly.
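OpenCV treats 3-channel 8-bit images as BGR, while the color frame here arrives as RGB, so red and blue end up swapped. Converting with cvtColor simply exchanges the first and third byte of every pixel; the dependency-free sketch below performs the same swap in place on a packed buffer (rgb_to_bgr_inplace is a hypothetical name).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Swaps R and B in every pixel of a tightly packed 3-byte-per-pixel buffer (RGB <-> BGR).
// The operation is its own inverse, which is why RGB2BGR and BGR2RGB give the same result.
void rgb_to_bgr_inplace(std::vector<std::uint8_t>& pixels)
{
    assert(pixels.size() % 3 == 0);
    for (std::size_t i = 0; i + 2 < pixels.size(); i += 3)
        std::swap(pixels[i], pixels[i + 2]);
}
```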

// License: Apache 2.0. See LICENSE file in root directory.

// Copyright(c) 2017 Intel Corporation. All Rights Reserved.

#include <librealsense2/rs.hpp> // Include RealSense Cross Platform API

#include <opencv2/opencv.hpp> // Include OpenCV API

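//Dontla 20210827 (cvtColor function)

// Type the function name, right-click it and use Peek Definition to find the directory of its header; add that directory to Additional Include Directories and #include the header in the code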

#include <opencv2/imgproc.hpp>

int main(int argc, char* argv[]) try

{

// Declare depth colorizer for pretty visualization of depth data

//rs2::colorizer color_map;

// Declare RealSense pipeline, encapsulating the actual device and sensors

rs2::pipeline pipe;

// Start streaming with default recommended configuration

pipe.start();

using namespace cv;

const auto window_name = "Display Image";

namedWindow(window_name, WINDOW_AUTOSIZE);

while (waitKey(1) < 0 && getWindowProperty(window_name, WND_PROP_AUTOSIZE) >= 0)

{

rs2::frameset data = pipe.wait_for_frames(); // Wait for next set of frames from the camera

//rs2::frame depth = data.get_depth_frame().apply_filter(color_map);

rs2::frame color = data.get_color_frame();

// Query frame size (width and height)

//const int w = depth.as<rs2::video_frame>().get_width();

const int w = color.as<rs2::video_frame>().get_width();

//const int h = depth.as<rs2::video_frame>().get_height();

const int h = color.as<rs2::video_frame>().get_height();

// Create OpenCV matrix of size (w,h) from the color data

//Mat image(Size(w, h), CV_8UC3, (void*)depth.get_data(), Mat::AUTO_STEP);

Mat image(Size(w, h), CV_8UC3, (void*)color.get_data(), Mat::AUTO_STEP);

//Dontla 20210827

Mat output_image;

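cvtColor(image, output_image, COLOR_RGB2BGR); // convert RGB (RealSense) to BGR (OpenCV); the legacy CV_BGR2RGB constant may be missing in OpenCV 4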

// Update the window with new data

imshow(window_name, output_image);

}

return EXIT_SUCCESS;

}

catch (const rs2::error& e)

{

std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl;

return EXIT_FAILURE;

}

catch (const std::exception& e)

{

std::cerr << e.what() << std::endl;

return EXIT_FAILURE;

}

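Why does OpenCV show the colors swapped? OpenCV assumes 3-channel images are stored in BGR order, while the color stream here arrives as RGB, so red and blue are exchanged. Instead of converting with cvtColor, you can have RealSense deliver BGR directly by configuring the stream with enable_stream (the key part is RS2_FORMAT_BGR8):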

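rs2::config tempcfg;

tempcfg.enable_stream(RS2_STREAM_DEPTH, 640, 480, RS2_FORMAT_Z16, 30);

tempcfg.enable_stream(RS2_STREAM_COLOR, 640, 480, RS2_FORMAT_BGR8, 30); // BGR8 matches OpenCV's channel order

pipe.start(tempcfg); // start the pipeline with this config instead of the plain pipe.start()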
