You are viewing the description of the latest version of Open Images (V7 - released Oct 2022),

if you would like to view the description of a previous version, please select it here:

Contents

- Download Dense annotations over 1.9M images

- Download image labels over 9M images

- Download all images

- Open Images extended

- Data formats

- References

Download dense annotations over 1.9M images

Out of the 9M images, a subset of 1.9M images have been annotated with: bounding boxes, object segmentations, visual relationships, localized narratives, point-level labels, and image-level labels. (The remaining images have only image-level labels).

This subset of images and dense annotations are available via three data channels:

Manual download of the images and raw annotations.

Access to all annotations via Tensorflow datasets.

Access to a subset of annotations (images, image labels, boxes, relationships, masks, and point labels) via FiftyOne thirtd-party open source library.

Trouble accessing the data? Let us know.

Download using Tensorflow Datasets

2022-09:To be released. Please +1 and subscribe to this Github issue if you want TFDS support.

Tensorflow datasets provides an unified API to access hundreds of datasets.

Once installed Open Images data can be directly accessed via:

dataset = tfds.load(‘open_images/v7’, split='train') for datum in dataset: image, bboxes = datum["image"], example["bboxes"]

Previous versions open_images/v6, /v5, and /v4 are also available.

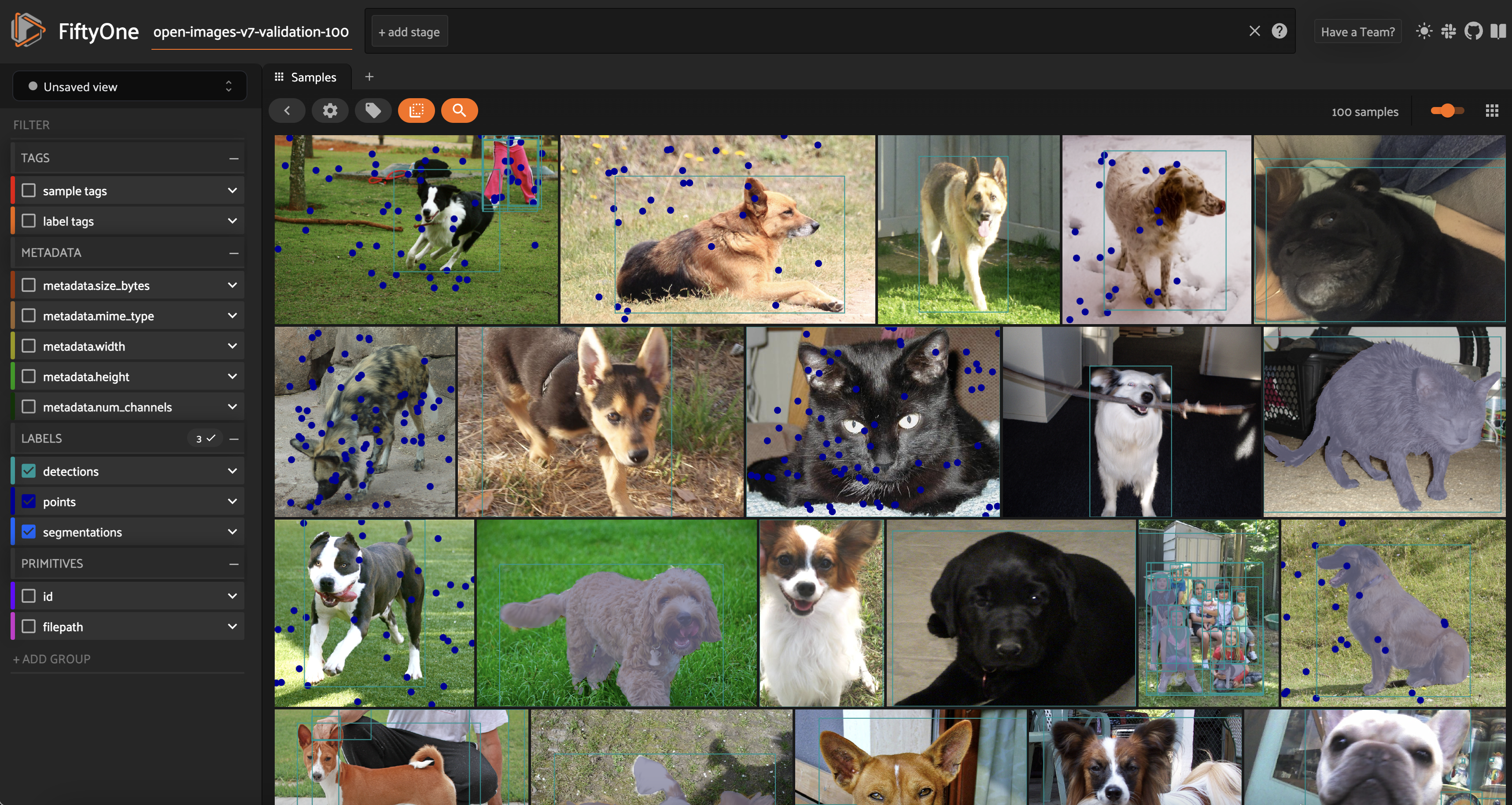

Download and Visualize using FiftyOne

We have collaborated with the team at Voxel51 to make downloading and visualizing (a subset of) Open Images a breeze using their open-source tool FiftyOne.

As with any other dataset in the FiftyOne Dataset Zoo, downloading it is as easy as calling:

dataset = fiftyone.zoo.load_zoo_dataset("open-images-v7", split="validation")

The function allows you to:

- Choose which split to download.

- Choose which types of annotations to download ("detections", "classifications", "relationships", "segmentations", or "points").

- Choose which classes of objects to download (e.g. cats and dogs).

- Limit the number of samples, to do a first exploration of the data.

- Download specific images by ID.

These properties give you the ability to quickly download subsets of the dataset that are relevant to you.

dataset = fiftyone.zoo.load_zoo_dataset(

"open-images-v7",

split="validation",

label_types=["detections", "segmentations", "points"],

classes=["Cat", "Dog"],

max_samples=100,

)

FiftyOne also provides native support for Open Images-style evaluation to compute mAP, plot PR curves, interact with confusion matrices, and explore individual label-level results.

results = dataset.evaluate_detections("predictions", gt_field="detections", method="open-images")

Get started!

Download Manually

Images

If you're interested in downloading the full set of training, test, or validation images (1.7M, 125k, and 42k, respectively; annotated with bounding boxes, etc.), you can download them packaged in various compressed files from CVDF's site:

Download from CVDF

If you only need a certain subset of these images and you'd rather avoid downloading the full 1.9M images, we provide a Python script that downloads images from CVDF.

- Download the file downloader.py (open and press

Ctrl + S), or directly run:wget https://raw.githubusercontent.com/openimages/dataset/master/downloader.py

- Create a text file containing all the image IDs that you're interested in downloading. It can come from filtering the annotations with certain classes, those annotated with a certain type of annotations (e.g., MIAP). Each line should follow the format

$SPLIT/$IMAGE_ID, where$SPLITis either "train", "test", "validation", or "challenge2018"; and$IMAGE_IDis the image ID that uniquely identifies the image. A sample file could be:train/f9e0434389a1d4dd train/1a007563ebc18664 test/ea8bfd4e765304db

- Run the following script, making sure you have the dependencies installed:

python downloader.py $IMAGE_LIST_FILE --download_folder=$DOWNLOAD_FOLDER --num_processes=5

For help, run:python downloader.py -h

Annotations and metadata

Image IDs

Train

Validation

Test

Image labels

Train

Validation

Test

Boxes

Train

Validation

Test

Segmentations

Train

Validation

Test

Relationships

Train

Validation

Test

Train

Validation

Test

Localized narratives voice recordings

Train

Validation

Test

Point-labels

Train

Validation

Test

Metadata

Boxable class names

Relationship names

Attribute names

Relationship triplets

Segmentation classes

Point-label classes

Download image labels over 9M images

These image-label annotation files provide annotations for all images over 20,638 classes. In the train set, the human-verified labels span 7,337,077 images, while the machine-generated labels span 8,949,445 images. The image IDs below list all images that have human-verified labels. The annotation files span the full validation (41,620 images) and test (125,436 images) sets.

Images

Download from CVDF

Human-verified labels

Train

Validation

Test

Machine-generated labels

Train

Validation

Test

Image IDs

Train

Validation

Test

Metadata

Class Names (all sets)

Trainable Classes

Trouble downloading the data? Let us know.

Download all Open Images images

The full set of 9,178,275 images.

Images

Download from CVDF

Image IDs

Full Set

Trouble downloading the pixels? Let us know.

Open Images Extended

Open Images extended complements the existing dataset with additional images and annotations.

More people box annotations

Crowdsourced images

Data formats

The Open Image annotations come in diverse text, csv, audio, and image files. These file formats are documented below. \

Bounding boxes

Each row defines one bounding box.

ImageID,Source,LabelName,Confidence,XMin,XMax,YMin,YMax,IsOccluded,IsTruncated,IsGroupOf,IsDepiction,IsInside,XClick1X,XClick2X,XClick3X,XClick4X,XClick1Y,XClick2Y,XClick3Y,XClick4Y

000002b66c9c498e,xclick,/m/04bcr3,1,0.312500,0.578125,0.351562,0.464063,0,0,0,0,0,0.312500,0.578125,0.385937,0.576562,0.454688,0.364063,0.351562,0.464063

3550e6ef5d44d91a,activemil,/m/0220r2,1,0.022461,0.865234,0.234375,0.986979,1,0,0,0,0,-1.000000,-1.000000,-1.000000,-1.000000,-1.000000,-1.000000,-1.000000,-1.000000

880b4b00f75260ec,xclick,/m/0ch_cf,1,0.641875,0.693125,0.818333,0.878333,1,0,0,0,0,0.641875,0.643125,0.678750,0.693125,0.818333,0.842500,0.871667,0.878333

b17e3f11cb77c7c8,xclick,/m/0dzct,1,0.510156,0.664844,0.337632,0.623681,1,0,0,0,0,0.573438,0.510156,0.664844,0.587500,0.337632,0.438453,0.468933,0.623681

[...]

ImageID: the image this box lives in.Source: indicates how the box was made:xclickare manually drawn boxes using the method presented in [1], were the annotators click on the four extreme points of the object. In V6 we release the actual 4 extreme points for all xclick boxes in train (13M), see below.activemilare boxes produced using an enhanced version of the method [2]. These are human verified to be accurate at IoU>0.7.

LabelName: the MID of the object class this box belongs to.Confidence: a dummy value, always 1.XMin,XMax,YMin,YMax: coordinates of the box, in normalized image coordinates. XMin is in [0,1], where 0 is the leftmost pixel, and 1 is the rightmost pixel in the image. Y coordinates go from the top pixel (0) to the bottom pixel (1).XClick1X,XClick2X,XClick3X,XClick4X,XClick1Y,XClick2Y,XClick3Y,XClick4Y: normalized image coordinates (asXMin, etc.) of the four extreme points of the object that produced the box using [1] in the case ofxclickboxes. Dummy values of -1 in the case ofactivemilboxes.

The attributes have the following definitions:

IsOccluded: Indicates that the object is occluded by another object in the image.IsTruncated: Indicates that the object extends beyond the boundary of the image.IsGroupOf: Indicates that the box spans a group of objects (e.g., a bed of flowers or a crowd of people). We asked annotators to use this tag for cases with more than 5 instances which are heavily occluding each other and are physically touching.IsDepiction: Indicates that the object is a depiction (e.g., a cartoon or drawing of the object, not a real physical instance).IsInside: Indicates a picture taken from the inside of the object (e.g., a car interior or inside of a building).

For each of them, value 1 indicates present, 0 not present, and -1 unknown.

Instance segmentation masks

The masks information is stored in two files:

- Individual mask images, with information encoded in the filename.

- A comma-separated-values (CSV) file with additional information (

masks_data.csv).

The masks images are PNG binary images, where non-zero pixels belong to a single object instance and zero pixels are background. The file names look as follows (random 5 examples): e88da03f2d80f1a1_m019jd_e16d01b9.png 540c5536e95a3282_m014j1m_b00fa52e.png 1c84bdd61fa3b883_m06m11_62ef2388.png 663389d2c9d562d8_m04_sv_7e23f2a5.png 072b8fd82919ab3e_m06mf6_dd70f221.png

The format of .zip archives names is the following: each <subset>_<suffix>.zip contains all masks for all images with the first characted of ImageID equal to <suffix>. The value of <suffix> is from 0-9 and a-f.

Each row in masks_data.csv describes one instance, using similar conventions as the boxes CSV data file.

MaskPath,ImageID,LabelName,BoxID,BoxXMin,BoxXMax,BoxYMin,BoxYMax,PredictedIoU,Clicks

25adb319ebc72921_m02mqfb_8423aba8.png,25adb319ebc72921,/m/02mqfb,8423aba8,0.000000,0.998438,0.089062,0.770312,0.62821,0.15808 0.26206 1;0.90333 0.41076 0;0.17578 0.66566 1;0.00761 0.23197 1;0.07918 0.26058 0;0.31792 0.47737 1;0.12858 0.59262 0;0.73229 0.34016 1;0.01865 0.20001 1;0.52214 0.31037 0;0.83596 0.28105 1;0.23418 0.60177 0

0a419be97dec2fa3_m02mqfb_8ad2c442.png,0a419be97dec2fa3,/m/02mqfb,8ad2c442,0.057813,0.943750,0.056250,0.960938,0.87836,0.89971 0.08481 1;0.20175 0.90471 0;0.11511 0.89990 0;0.94728 0.28410 0;0.19611 0.85369 0;0.07672 0.87857 1;0.82215 0.62642 0;0.13916 0.92650 1;0.51738 0.48419 1

8eef6e54789ce66d_m02mqfb_83dae39c.png,8eef6e54789ce66d,/m/02mqfb,83dae39c,0.037500,0.978750,0.129688,0.925000,0.70206,0.40219 0.16838 1;0.56758 0.65286 1;0.08311 0.90762 1;0.20840 0.56515 1;0.43336 0.23679 0;0.24689 0.43426 0;0.49292 0.65762 1;0.31383 0.51431 0;0.07137 0.86214 0;0.68160 0.38210 1;0.69462 0.59568 0

...

MaskPath: name of the corresponding mask image.ImageID: the image this mask lives in.LabelName: the MID of the object class this mask belongs to.BoxID: an identifier for the box within the image.BoxXMin,BoxXMax,BoxYMin,BoxYMax: coordinates of the box linked to the mask, in normalized image coordinates. Note that this is not the bounding box of the mask, but the starting box from which the mask was annotated. These coordinates can be used to relate the mask data with the boxes data.PredictedIoU: if present, indicates a predicted IoU value with respect to ground-truth. This quality estimate is machine-generated based on human annotator behaviour. See [3] for details.Clicks: if present, indicates the human annotator clicks, which provided guidance during the annotation process we carried out (See [3] for details). This field is encoded using the following format:X1 Y1 T1;X2 Y2 T2;X3 Y3 T3;....Xi Yiare the coordinates of the click in normalized image coordinates.Tiis the click type, value0indicates the annotator marks the point as background, value1as part of the object instance (foreground). These clicks can be interesting for researchers in the field of interactive segmentation. They are not necessary for users interested in the final masks only.

Visual relationships

Each row in the file corresponds to a single annotation.

ImageID,LabelName1,LabelName2,XMin1,XMax1,YMin1,YMax1,XMin2,XMax2,YMin2,YMax2,RelationLabel

0009fde62ded08a6,/m/0342h,/m/01d380,0.2682927,0.78549093,0.4977778,0.8288889,0.2682927,0.78549093,0.4977778,0.8288889,is

00198353ef684011,/m/01mzpv,/m/04bcr3,0.23779725,0.30162704,0.6500938,0.7335835,0,0.5819775,0.6482176,0.99906194,at

001e341dd7456c72,/m/04yx4,/m/01mzpv,0.07009346,0.2859813,0.2332708,0.5203252,0.14018692,0.31588784,0.32082552,0.48405254,on

001e341dd7456c72,/m/04yx4,/m/01mzpv,0,0.28317758,0.26454034,0.5540963,0.2224299,0.3411215,0.3908693,0.4859287,on

001e341dd7456c72,/m/01599,/m/04bcr3,0.5551402,0.6084112,0.50343966,0.5490932,0.5411215,0.95981306,0.5090682,0.78361475,on

001e341dd7456c72,/m/04bcr3,/m/01d380,0.7392523,0.9990654,0.3889931,0.518449,0.7392523,0.9990654,0.3889931,0.518449,is

...

ImageID: the image this relationship instance lives in.LabelName1: the label of the first object in the relationship triplet.XMin1,XMax1,YMin1,YMax1: normalized bounding box coordinates of the bounding box of the first object.LabelName2: the label of the second object in the relationship triplet, or an attribute.XMin2,XMax2,YMin2,YMax2: If the relationship is between a pair of objects: normalized bounding box coordinates of the bounding box of the second object. For an object-attribute relationship (RelationLabel="is"): normalized bounding box of the first object (repeated). In this case, LabelName2 is an attribute.RelationLabel: the label of the relationship ("is" in case of attributes).

Localized narratives

The Localized Narrative annotations are in JSON Lines format, that is, each line of the file is an independent valid JSON-encoded object. The largest files are split into smaller sub-files (shards) for ease of download. Since each line of the file is independent, the whole file can be reconstructed by simply concatenating the contents of the shards.

Each line represents one Localized Narrative annotation on one image by one annotator and has the following fields:

dataset_id: String identifying the dataset and split where the image belongs, e.g.openimages-train.image_id: String identifier of the image, as specified on each dataset.annotator_id: Integer number uniquely identifying each annotator.caption: Image caption as a string of characters.timed_caption: List of timed utterances, i.e.{utterance, start_time, end_time}whereutteranceis a word (or group of words) and(start_time, end_time)is the time during which it was spoken, with respect to the start of the recording.traces: List of trace segments, one between each time the mouse pointer enters the image and goes away from it. Each trace segment is represented as a list of timed points, i.e.{x, y, t}, wherexandyare the normalized image coordinates (with origin at the top-left corner of the image) andtis the time in seconds since the start of the recording. Please note that the coordinates can go a bit beyond the image, i.e. <0 or >=1, as we recorded the mouse traces including a small band around the image.voice_recording: Relative URL path with respect tohttps://storage.googleapis.com/localized-narratives/voice-recordingswhere to find the voice recording (in OGG format) for that particular image.

Below a sample of one Localized Narrative in this format:

{

dataset_id: 'open_image',

image_id: 'abe9ff8763cdcc5d',

annotator_id: 93,

caption: 'In this image there are group of cows standing and eating th...',

timed_caption: [{'utterance': 'In this', 'start_time': 0.0, 'end_time': 0.4}, ...],

traces: [[{'x': 0.2086, 'y': -0.0533, 't': 0.022}, ...], ...],

voice_recording: "open_images_validation/open_images_validation_abe9ff8763cdcc5d_110.ogg",

}

For more information, additional download files, and annotations over other datasets, consult the localized narratives website.

Point Labels

The point label data is contained in two types of comma separated value files. One per-split file containing the point labels, and one dataset-wide file describing the annotated classes.

Per-split point labels data looks as follows:

ImageId,X,Y,Label,EstimatedYesNo,Source,YesVotes,NoVotes,UnsureVotes,TextLabel

00c73a28068f9b33,0.32324219,0.22656250,/m/01280g,no,ih,0,3,0,

00c73a28068f9b33,0.43164062,0.74869792,/m/01280g,unsure,ih,2,1,0,

00c73a28068f9b33,0.81737432,0.42705993,/m/096mb,no,cc,0,1,0,

00c73a28068f9b33,0.25814126,0.98454896,/m/096mb,yes,cc,1,0,0,

00c73a28068f9b33,0.53840625,0.62643750,/m/06q74,yes,ff,1,0,0,ship

00ccda615ec9731d,0.44140625,0.43652344,/g/11bc5yhfnk,unsure,ih,2,1,0,

00ccda615ec9731d,0.81510417,0.59082031,/g/11bc5yhfnk,yes,ih,3,0,0,

00ccda615ec9731d,0.46223958,0.91894531,/m/01z562,no,ih,0,3,0,

...

Where:

ImageID: the image this point lives in.X,Y: coordinates of the point, in normalized image coordinates. Where X=0 is the leftmost pixel, and 1 is the rightmost pixel in the image. Y coordinates go from the top pixel (0) to the bottom pixel (1).Label: the MID of the thing or stuff class this point belongs to.EstimatedYesNo: estimated yes/no/unsure label for this point, based on the available votes.Source: source of the votes available:ih: stands for "in-house verification", where internal human raters voted for the point-label.cc: stands for points collected via corrective clicks (see box annotations), and converted to point-labels using the box annotations.ff: point-labels annotated via free-from input (see 4 ). This process only provides yes annotations.cs: stands for "crowdsource verification", where external contributors voted for the point-label via the CrowdSource application.

YesVotes: number of yes votes received.NoVotes: number of no votes received.Unsure: number of unsure votes received.TextLabel: forffannotations, provides the specific text label provided.

Otherwise consult the class-description for MID->human name mapping.

The class description data looks as follows:

LabelName,DisplayName,YesPoints,Points,TextMentions,...

/m/016q19,Petal,2681,5656,petals,petal,with petals,with petals and,flower petal,they have pink petals,color petals,are petals,some petals of flowers,see petals,petals which are in,petal and

/m/01qr50,Mud,2871,14850,mud,on the mud,the mud,is mud,a mud,mud and,the mud and in,see mud,see the mud,we can see mud,there is mud,in the mud

/m/0cyhj_,Orange (fruit),25002,55836,orange,oranges,an orange,the orange,an orange on this,can see orange,and orange,an oranges,halved orange,wearing orange,orange slice,oranges and some

Where:

MID: machine identifier of the thing or stuff class.ClassName: canonical humane name for this class.YesPoints: total of yes point-labels for this class (based onEstimatedYesNo, across all splits and vote sources).Points: total of point-labels for this class (with at least one vote; across all splits, vote sources).TextMentions: examples of text mentions from free-form labels or localized narratives captions parsing, sorted by frequency of appearance (with removal of repeated entries). These give an idea of the kind of text mentions used to detect the class presence.

(Forih/csquestions theClassNamewas used, not the mention)

For more details about these annotations, see 5.

Image Labels

Human-verified and machine-generated image-level labels:

ImageID,Source,LabelName,Confidence

000026e7ee790996,verification,/m/04hgtk,0

000026e7ee790996,verification,/m/07j7r,1

000026e7ee790996,crowdsource-verification,/m/01bqvp,1

000026e7ee790996,crowdsource-verification,/m/0csby,1

000026e7ee790996,verification,/m/01_m7,0

000026e7ee790996,verification,/m/01cbzq,1

000026e7ee790996,verification,/m/01czv3,0

000026e7ee790996,verification,/m/01v4jb,0

000026e7ee790996,verification,/m/03d1rd,0

...

Source: indicates how the annotation was created:

verificationare labels verified by in-house annotators at Google.crowdsource-verificationare labels verified from the Crowdsource app.machineare machine-generated labels.

Confidence: Labels that are human-verified to be present in an image have confidence = 1 (positive labels). Labels that are human-verified to be absent from an image have confidence = 0 (negative labels). Machine-generated labels have fractional confidences, generally >= 0.5. The higher the confidence, the smaller the chance for the label to be a false positive.

Class names

The class names in MID format can be converted to their short descriptions by looking into class-descriptions.csv:

...

/m/0pc9,Alphorn

/m/0pckp,Robin

/m/0pcm_,Larch

/m/0pcq81q,Soccer player

/m/0pcr,Alpaca

/m/0pcvyk2,Nem

/m/0pd7,Army

/m/0pdnd2t,Bengal clockvine

/m/0pdnpc9,Bushwacker

/m/0pdnsdx,Enduro

/m/0pdnymj,Gekkonidae

...

Note the presence of characters like commas and quotes. The file follows standard CSV escaping rules. e.g.:

/m/02wvth,"Fiat 500 ""topolino"""

/m/03gtp5,Lamb's quarters

/m/03hgsf0,"Lemon, lime and bitters"

Image information

It has image URLs, their OpenImages IDs, the rotation information, titles, authors, and license information:

ImageID,Subset,OriginalURL,OriginalLandingURL,License,AuthorProfileURL,Author,Title,

OriginalSize,OriginalMD5,Thumbnail300KURL,Rotation

...

000060e3121c7305,train,https://c1.staticflickr.com/5/4129/5215831864_46f356962f_o.jpg,\

https://www.flickr.com/photos/brokentaco/5215831864,\

https://creativecommons.org/licenses/by/2.0/,\

"https://www.flickr.com/people/brokentaco/","David","28 Nov 2010 Our new house."\

211079,0Sad+xMj2ttXM1U8meEJ0A==,https://c1.staticflickr.com/5/4129/5215831864_ee4e8c6535_z.jpg,0

...

Each image has a unique 64-bit ID assigned. In the CSV files they appear as zero-padded hex integers, such as 000060e3121c7305.

The data is as it appears on the destination websites.

OriginalSizeis the download size of the original image.OriginalMD5is base64-encoded binary MD5, as described here.Thumbnail300KURLis an optional URL to a thumbnail with ~300K pixels (~640x480). It is provided for the convenience of downloading the data in the absence of more convenient ways to get the images. If missing,OriginalURLmust be used (and then resized to the same size, if needed). These thumbnails are generated on the fly and their contents and even resolution might be different every day.Rotationis the number of degrees that the image should be rotated counterclockwise to match the Flickr user intended orientation (0,90,180,270).nanmeans that this information is not available. Check this announcement for more information about the issue.

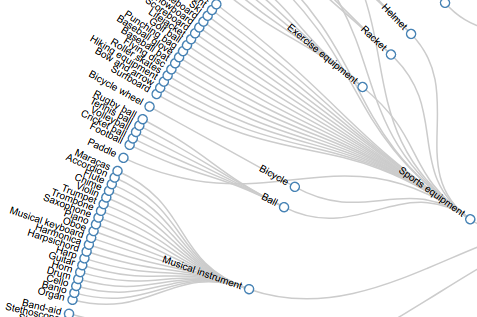

Hierarchy for 600 boxable classes

View the set of boxable classes as a hierarchy here or download it as a JSON file:

References

-

"Extreme clicking for efficient object annotation", Papadopolous et al., ICCV 2017.

-

"We don't need no bounding-boxes: Training object class detectors using only human verification, Papadopolous et al., CVPR 2016.

-

"Large-scale interactive object segmentation with human annotators", Benenson et al., CVPR 2019.

-

"Natural Vocabulary Emerges from Free-Form Annotations", Pont-Tuset et al., arXiv 2019.

-

"From couloring-in to pointillism: revisiting semantic segmentation supervision", Benenson et al., arXiv 2022.

1595

1595

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?