需求

原本想给视频中的人物批量打码,百度之后发现大部分教程都是使用pr之类的工具进行遮罩处理,由于许久没碰pr,故想着做个自动化处理人脸的工具,编写代码的过程中使用了lindevs大佬训练好的模型,lindevs大佬的项目地址如下:

GitHub - lindevs/yolov8-face: Pre-trained YOLOv8-Face models.

对应的预训练模型链接:https://download.csdn.net/download/Huang_X_H/89813266

以下是该工具的主要代码。

代码实现

代码结构

project

----block_face

----block_images

----block_image.png

----input_files

----test_image.jpg

----test.mp4

----models

----yolov8s-face-lindevs.pt

----output_files

----block_face.pyblock_face.py

导入对应库

主要使用ultralytics(即YOLOV8)来识别人脸,使用moviepy来剪辑视频

import os

import time

from abc import ABCMeta, abstractmethod

from datetime import datetime

import cv2

import numpy as np

from moviepy.audio.io.AudioFileClip import AudioFileClip

from moviepy.video.io.VideoFileClip import VideoFileClip

from ultralytics import YOLO抽象工具类

此处有抽象类是因为原本想着可以改为有多种具体的人脸检测方法,可以自行合并为一个类

class BlockFaceUtil(metaclass=ABCMeta):

input_file_path = ""

output_file_path = None

flag_mosaic = True

block_image_path = ""

input_file_type = "unknown"

input_file_extension = None

mosaic_block_size = 10

block_image = None

block_image_opacity = 1

flag_stretch = False

flag_over_full = True

flag_show_box = False

flag_initialed = False

def get_file_type(self, file_path):

"""

获取文件类型,图片还是视频

:param file_path:

:return:

"""

if not os.path.exists(file_path):

return "unknown", None

image_extensions = ['.jpg', '.jpeg', '.png', '.bmp', '.gif', '.tiff']

video_extensions = ['.mp4', '.avi', '.mkv', '.mov', '.flv', '.wmv']

_, file_extension = os.path.splitext(file_path)

if file_extension.lower() in image_extensions:

return "image", file_extension

elif file_extension.lower() in video_extensions:

return "video", file_extension

else:

return "unknown", None

def initial_params(self, input_file_path, output_file_path=None, flag_mosaic=True, block_image_path="",

mosaic_block_size=10, block_image_opacity=1, flag_stretch=False, flag_over_full=True,

flag_show_box=False):

"""

初始化目录等基本信息

:param input_file_path:

:param output_file_path:

:param flag_mosaic:

:param block_image_path:

:param mosaic_block_size:

:param block_image_opacity:

:param flag_stretch:

:param flag_over_full:

:param flag_show_box:

:return:

"""

self.input_file_path = input_file_path

self.output_file_path = output_file_path

self.flag_mosaic = flag_mosaic

self.block_image_path = block_image_path

self.mosaic_block_size = mosaic_block_size

self.block_image_opacity = block_image_opacity

self.flag_stretch = flag_stretch

self.flag_over_full = flag_over_full

self.flag_show_box = flag_show_box

if not self.flag_mosaic and (not os.path.exists(self.block_image_path) or self.get_file_type(self.block_image_path)[0] != "image"):

print(f"invalid block_image_path: {self.block_image_path}")

else:

self.input_file_type, self.input_file_extension = self.get_file_type(self.input_file_path)

if self.input_file_type == "image" or self.input_file_type == "video":

if self.output_file_path is None or self.output_file_path == "" or not os.path.exists(os.path.dirname(self.output_file_path)) or os.path.exists(self.output_file_path):

if not os.path.exists("block_face"):

os.mkdir("block_face")

if not os.path.exists(os.path.join("block_face", "output_files")):

os.mkdir(os.path.join("block_face", "output_files"))

now = datetime.now()

self.output_file_path = os.path.join("block_face", "output_files", f"output_{now.strftime('%Y%m%d%H%M%S')}{self.input_file_extension}")

# 加载遮挡图片

self.block_image = cv2.imread(block_image_path, cv2.IMREAD_UNCHANGED) if not self.flag_mosaic else None

self.flag_initialed = True

else:

print("Unsupported file type")

@abstractmethod

def block_face(self):

"""

遮挡人脸

:return:

"""

pass

@abstractmethod

def handle_frame(self, input_image):

"""

处理单个图像

:param input_image:

:return:

"""

pass

@abstractmethod

def block_face_in_image(self):

"""

遮挡图片中的人脸

:return:

"""

pass

@abstractmethod

def block_face_in_video(self):

"""

遮挡视频中的人脸

:return:

"""

pass

@abstractmethod

def detect_face(self, original_image):

"""

检测人脸

:param original_image:

:return:

"""

pass

@abstractmethod

def block_image_area_with_mosaic(self, original_image, area):

"""

使用马赛克对指定区域进行遮挡

:param original_image:

:param area:

:return:

"""

pass

@abstractmethod

def block_image_area_with_image(self, original_image, block_image, block_area):

"""

使用图片对指定区域进行遮挡

:param original_image:

:param block_image:

:param block_area:

:return:

"""

pass具体工具类

class YoloV8FaceBlockFaceUtil(BlockFaceUtil):

def __init__(self):

super().__init__()

self.model_path = ""

self.yolo_model = None

self.flag_yolo_initialed = False

self.conf = 0.6

def initial_yolo(self, model_path, conf=0.6):

"""

除了默认的参数,还需要设置一下yolov8相关的参数

:param model_path:

:param conf:

:return:

"""

self.model_path = model_path

self.yolo_model = YOLO(self.model_path)

self.conf = conf

self.flag_yolo_initialed = True

def block_face(self):

if self.flag_initialed:

if self.flag_yolo_initialed:

if self.input_file_type == "image":

self.block_face_in_image()

elif self.input_file_type == "video":

self.block_face_in_video()

else:

print("未初始化YOLO参数")

else:

print("未初始化参数")

def handle_frame(self, input_image):

face_locations = self.detect_face(input_image)

if self.flag_mosaic:

for face_location in face_locations:

x1, y1, x2, y2 = face_location

block_area = (int(x1), int(y1), int(x2), int(y2)) # x1, y1, x2, y2 左上角坐标和右下角坐标

# 应用马赛克

input_image = self.block_image_area_with_mosaic(input_image, block_area)

else:

for face_location in face_locations:

x1, y1, x2, y2 = face_location

block_area = (int(x1), int(y1), int(x2), int(y2)) # x1, y1, x2, y2 左上角坐标和右下角坐标

# 应用遮挡

input_image = self.block_image_area_with_image(

original_image=input_image,

block_image=self.block_image,

block_area=block_area)

if self.flag_show_box:

# 对于每张脸,画一个矩形框

for face_location in face_locations:

x1, y1, x2, y2 = face_location

cv2.rectangle(input_image, (int(x1), int(y1)), (int(x2), int(y2)), (0, 255, 0), 2)

def block_face_in_image(self):

input_image = cv2.imread(self.input_file_path)

self.handle_frame(input_image)

# 保存结果

cv2.imwrite(self.output_file_path, input_image)

print(f"已输出文件:{self.output_file_path}")

def block_face_in_video(self):

output_file_name, file_extension = os.path.splitext(os.path.basename(self.output_file_path))

output_file_temp_path = os.path.join(os.path.dirname(self.output_file_path),

f"{output_file_name}-temp{file_extension}")

# 读取视频

cap = cv2.VideoCapture(self.input_file_path)

# 获取视频的基本信息

frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))

frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

fps = cap.get(cv2.CAP_PROP_FPS)

# 创建视频写入器

fourcc = cv2.VideoWriter_fourcc(*'mp4v')

out = cv2.VideoWriter(output_file_temp_path, fourcc, fps, (frame_width, frame_height))

print("开始生成画面")

while cap.isOpened():

ret, frame = cap.read()

if not ret:

break

self.handle_frame(frame)

# 写入帧

out.write(frame)

# 释放资源

cap.release()

out.release()

print("已创建画面,正在合并音频")

# 加载没有声音的新视频和原视频的声音

video_without_audio = VideoFileClip(output_file_temp_path)

audio = AudioFileClip(self.input_file_path)

# 将音频添加到新视频中

final_video = video_without_audio.set_audio(audio)

# 写入最终结果

final_video.write_videofile(self.output_file_path, codec='libx264')

print(f"已输出文件:{self.output_file_path}")

def detect_face(self, original_image):

# 使用YOLOv8进行人脸检测,conf 参数用于设置置信度阈值,verbose 参数用于在控制台显示预测信息

results = self.yolo_model.predict(source=original_image, conf=self.conf, verbose=False) # conf 参数用于设置置信度阈值

face_locations = []

# 提取检测结果

for result in results:

boxes = result.boxes.cpu().numpy()

for box in boxes:

x1, y1, x2, y2 = box.xyxy[0].astype(int)

face_locations.append((x1, y1, x2, y2))

return face_locations

def block_image_area_with_mosaic(self, original_image, area):

# 获取图片的宽度和高度

height = original_image.shape[0]

width = original_image.shape[1]

# 检查坐标是否超出范围

x1 = max(0, min(area[0], width))

y1 = max(0, min(area[1], height))

x2 = max(x1, min(area[2], width))

y2 = max(y1, min(area[3], height))

# 提取指定区域

region = original_image[y1:y2, x1:x2]

# 计算马赛克块的大小

h, w, _ = region.shape

nh = h // self.mosaic_block_size

nw = w // self.mosaic_block_size

# 创建一个空的数组用于存储马赛克后的区域

mosaic_region = np.zeros_like(region)

# 对每个马赛克块求平均值并填充

for i in range(nh):

for j in range(nw):

y_start, y_end = i * self.mosaic_block_size, (i + 1) * self.mosaic_block_size

x_start, x_end = j * self.mosaic_block_size, (j + 1) * self.mosaic_block_size

# 计算当前块的平均颜色

avg_color = np.mean(region[y_start:y_end, x_start:x_end], axis=(0, 1)).astype(np.uint8)

# 将当前块填充为平均颜色

mosaic_region[y_start:y_end, x_start:x_end] = avg_color

# 处理最后一行

if h % self.mosaic_block_size != 0:

y_start = nh * self.mosaic_block_size

for j in range(nw):

x_start, x_end = j * self.mosaic_block_size, (j + 1) * self.mosaic_block_size

# 计算当前块的平均颜色

avg_color = np.mean(region[y_start:h, x_start:x_end], axis=(0, 1)).astype(np.uint8)

# 填充剩余部分

mosaic_region[y_start:h, x_start:x_end] = avg_color

# 处理最后一列

if w % self.mosaic_block_size != 0:

x_start = nw * self.mosaic_block_size

for i in range(nh):

y_start, y_end = i * self.mosaic_block_size, (i + 1) * self.mosaic_block_size

# 计算当前块的平均颜色

avg_color = np.mean(region[y_start:y_end, x_start:w], axis=(0, 1)).astype(np.uint8)

# 填充剩余部分

mosaic_region[y_start:y_end, x_start:w] = avg_color

# 如果最后一行和最后一列都有剩余,则单独处理最后一个色块

if h % self.mosaic_block_size != 0 and w % self.mosaic_block_size != 0:

y_start = nh * self.mosaic_block_size

x_start = nw * self.mosaic_block_size

# 计算当前块的平均颜色

avg_color = np.mean(region[y_start:h, x_start:w], axis=(0, 1)).astype(np.uint8)

# 填充剩余部分

mosaic_region[y_start:h, x_start:w] = avg_color

# 将马赛克后的区域放回原图

original_image[y1:y2, x1:x2] = mosaic_region

return original_image

def block_image_area_with_image(self, original_image, block_image, block_area):

# 获取背景图片的尺寸

bg_height, bg_width = original_image.shape[:2]

# 获取前景图片的尺寸

fg_height, fg_width = block_image.shape[:2]

# 计算指定区域的中心

region_center = ((block_area[0] + block_area[2]) // 2,

(block_area[1] + block_area[3]) // 2)

# 计算前景图片的中心

fg_center = (fg_width // 2, fg_height // 2)

# 获取指定区域的尺寸

region_width = block_area[2] - block_area[0]

region_height = block_area[3] - block_area[1]

if self.flag_stretch:

new_height = int(region_height)

new_width = int(region_width)

else:

# 调整前景图片大小以完全覆盖指定区域

aspect_ratio = fg_width / fg_height

if self.flag_over_full:

if region_width / region_height < aspect_ratio:

new_height = int(region_height)

new_width = int(new_height * aspect_ratio)

else:

new_width = int(region_width)

new_height = int(new_width / aspect_ratio)

else:

if region_width / region_height > aspect_ratio:

new_height = int(region_height)

new_width = int(new_height * aspect_ratio)

else:

new_width = int(region_width)

new_height = int(new_width / aspect_ratio)

# 保持长宽比,调整前景图片大小

resized_foreground = cv2.resize(block_image, (new_width, new_height))

# 计算调整后前景图片的中心

resized_fg_center = (new_width // 2, new_height // 2)

# 计算调整后前景图片在背景图片中的左上角坐标

bg_x_start = region_center[0] - resized_fg_center[0]

bg_y_start = region_center[1] - resized_fg_center[1]

# 计算调整后前景图片在背景图片中的右下角坐标

bg_x_end = bg_x_start + new_width

bg_y_end = bg_y_start + new_height

# 获取背景图片的重叠区域

bg_overlap = original_image[max(0, bg_y_start):min(bg_y_end, bg_height), max(0, bg_x_start):min(bg_x_end, bg_width)]

# 获取前景图片的重叠区域

fg_overlap = resized_foreground[max(0, -bg_y_start):min(new_height, bg_y_end - bg_y_start),

max(0, -bg_x_start):min(new_width, bg_x_end - bg_x_start)]

# 处理超出区域的部分

if fg_overlap.shape[2] == 4:

# 前景图片有Alpha通道

alpha_channel = fg_overlap[:, :, 3] / 255.0

# 对超出区域的部分设置透明度

alpha_channel[alpha_channel > 0] = self.block_image_opacity

# 确保 fg_overlap 和 bg_overlap 的形状匹配

if fg_overlap.shape[:2] != bg_overlap.shape[:2]:

# 裁剪或填充 fg_overlap 以匹配 bg_overlap

fg_overlap = fg_overlap[:bg_overlap.shape[0], :bg_overlap.shape[1]]

alpha_channel = alpha_channel[:bg_overlap.shape[0], :bg_overlap.shape[1]]

# 应用Alpha混合

blended = (alpha_channel[..., np.newaxis] * fg_overlap[:, :, :3] + (

1 - alpha_channel[..., np.newaxis]) * bg_overlap).astype(np.uint8)

# 替换背景图片的重叠区域

original_image[max(0, bg_y_start):min(bg_y_end, bg_height),

max(0, bg_x_start):min(bg_x_end, bg_width)] = blended

else:

# 前景图片没有Alpha通道

# 应用透明度

blended = (self.block_image_opacity * fg_overlap + (1 - self.block_image_opacity) * bg_overlap).astype(np.uint8)

# 替换背景图片的重叠区域

original_image[max(0, bg_y_start):min(bg_y_end, bg_height),

max(0, bg_x_start):min(bg_x_end, bg_width)] = blended

return original_image程序入口

def main():

start_time = time.time()

print("程序开始运行")

block_face_util = YoloV8FaceBlockFaceUtil()

# 遮挡图片中的人脸,只设置了必要的参数

block_face_util.initial_params(input_file_path="block_face/input_files/test_image.png",

output_file_path="block_face/output_files/test_image_yolov8_face.png",

flag_mosaic=False,

block_image_path="block_face/block_images/block_image.png",)

# 遮挡视频中的人脸

block_face_util.initial_params(input_file_path="block_face/input_files/test.mp4",

output_file_path="block_face/output_files/output_yolo_face.mp4",

flag_mosaic=False,

block_image_path="block_face/block_images/block_image.png",

mosaic_block_size=10,

block_image_opacity=1,

flag_stretch=False,

flag_over_full=True,

flag_show_box=False)

block_face_util.initial_yolo(model_path="block_face/models/yolov8s-face-lindevs.pt", conf=0.6)

block_face_util.block_face()

print(f"耗时:{time.time() - start_time}秒")

if __name__ == '__main__':

main()效果展示

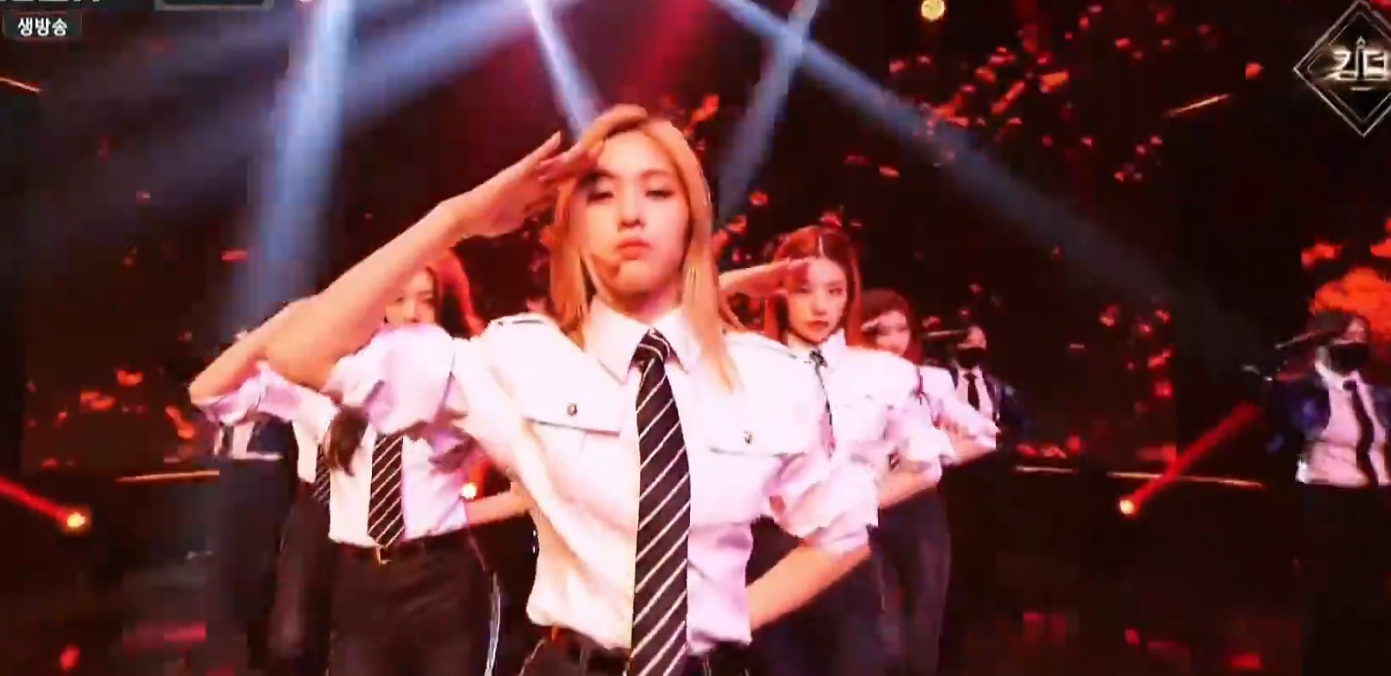

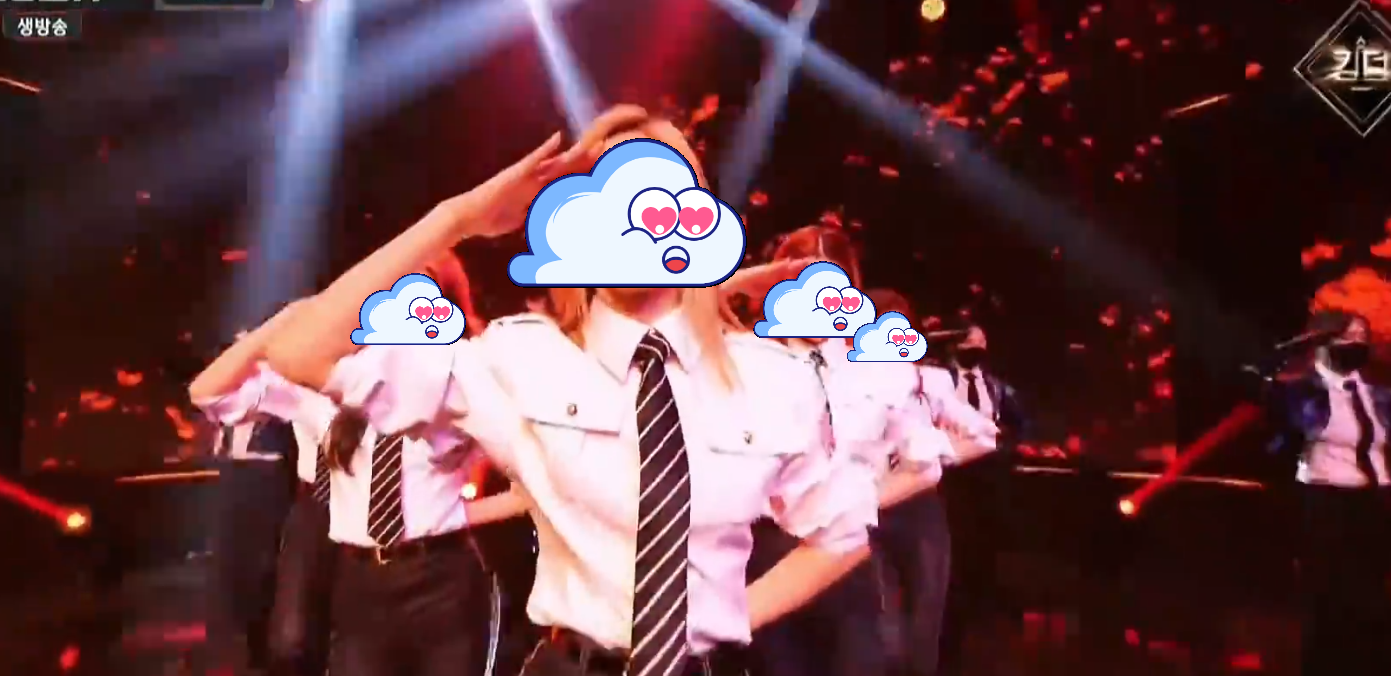

原图

程序处理之后,人脸露出较多的都能检测到了

849

849

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?