1. Prior work, open challenges, and contributions of this paper

1.1 Direction of prior work

To capture 3D geometries, prior works mainly rely on exploring sophisticated local geometric extractors built on convolution, graph, or attention mechanisms.

1.2 Challenges

These methods, however, incur (bring about, cause) unfavorable latency during inference, and their performance has saturated over the past few years.

1.3 Contributions of this paper

In this paper, we present a novel perspective on this task. We notice that detailed local geometric information is probably not the key to point cloud analysis; we introduce a pure residual MLP network, called PointMLP, which integrates no “sophisticated” local geometric extractors but still performs very competitively. Equipped with a proposed lightweight geometric affine module, PointMLP delivers new state-of-the-art results on multiple datasets.

1.4 Performance of PointMLP

On the real-world ScanObjectNN dataset, our method even surpasses the prior best method by 3.3% accuracy. We emphasize that PointMLP achieves this strong performance without any sophisticated operations, which leads to superior inference speed. Compared to the most recent CurveNet, PointMLP trains 2× faster, tests 7× faster, and is more accurate on the ModelNet40 benchmark.

1.5 Code

https://github.com/ma-xu/pointMLP-pytorch

2. Origin of the paper:

3. Method: DEEP RESIDUAL MLP FOR POINT CLOUD

We begin by representing local points with simple residual MLPs, as they are permutation-invariant and straightforward. Then we introduce a lightweight geometric affine module to boost the performance. To further improve efficiency, we also introduce a lightweight counterpart, dubbed PointMLP-elite.

3.1 REVISITING POINT-BASED METHODS

The design of point-based methods for point cloud analysis dates back to the PointNet and PointNet++ papers (Qi et al., 2017a;b), if not earlier. The motivation behind this direction is to directly consume point clouds from the beginning and avoid unnecessary rendering processes.

The paper briefly reviews PointNet++, RSCNN, and Point Transformer.

It then points out the problems these methods leave unsolved:

While these methods can easily take advantage of detailed local geometric information and usually exhibit promising results, two issues limit their development.

- First, with the introduction of delicate extractors, the computational complexity increases substantially, leading to prohibitive inference latency. For example, the FLOPs of Equation 3 in Point Transformer would be 14Kd², ignoring the summation and subtraction operations. Compared with a conventional FC layer, which costs 2Kd² FLOPs, this increases the computation by 7×. Notice that the memory access cost is not even considered yet.

- Second, as local feature extractors have developed, the performance gain has started to saturate on popular benchmarks. Moreover, the empirical analysis in Liu et al. (2020) reveals that most sophisticated local extractors make surprisingly similar contributions to network performance under the same network input. Both limitations encourage us to develop a new method that avoids sophisticated local extractors and still provides gratifying results.
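The 7× overhead in the first point follows directly from the two FLOP counts: K and d cancel, so the ratio does not depend on neighbourhood size or channel width. A minimal Python check; the values of K and d below are arbitrary and only for illustration:

```python
def flops_ratio(k: int, d: int) -> float:
    """Cost of Point Transformer's Equation 3 (14*K*d^2 FLOPs) relative to an FC layer (2*K*d^2 FLOPs)."""
    attention_flops = 14 * k * d * d
    fc_flops = 2 * k * d * d
    return attention_flops / fc_flops


# Illustrative sizes only: K = 16 neighbours, d = 64 channels
k, d = 16, 64
print(14 * k * d * d, 2 * k * d * d, flops_ratio(k, d))  # 917504 131072 7.0
```

Any other choice of K and d gives the same 7.0, before memory access cost is even counted.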

3.2 FRAMEWORK OF POINTMLP

We propose to learn the point cloud representation with a simple feed-forward residual MLP network (named PointMLP), which hierarchically aggregates the local features extracted by MLPs and abandons the use of delicate local geometric extractors.

Structure of PointMLP

In order to get rid of the restrictions mentioned above, we present a simple yet effective MLP-based

network for point cloud analysis that no sophisticated or heavy operations are introduced. The key

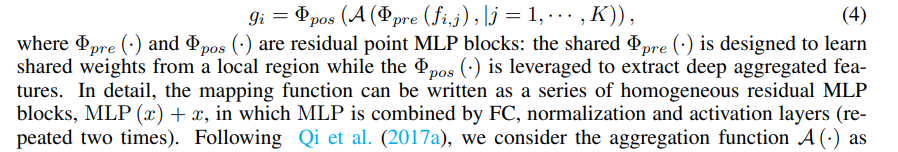

operation of our PointMLP can be formulated as:

A (·) :max-pooling operation. Equation 4 describes one stage of of PointMLP. For a hierarchical and deep network, we recursively repeat the operation by s stages.

A (·) :max-pooling operation. Equation 4 describes one stage of of PointMLP. For a hierarchical and deep network, we recursively repeat the operation by s stages.
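One stage of Equation 4 can be sketched in plain Python to make the data flow concrete: a shared Φpre is applied to every neighbour, A(·) max-pools channel-wise over the K neighbours, and Φpos refines the pooled vector. This is a toy under stated assumptions: each residual block is a single hypothetical layer ReLU(Wx + x) with hand-picked weights and no bias or BatchNorm, whereas the real blocks (ConvBNReLURes1D in Section 5) stack Conv1d and BatchNorm layers.

```python
from typing import List

Vector = List[float]


def residual_mlp(weights: List[Vector], x: Vector) -> Vector:
    """Toy residual point-MLP block: ReLU(W x + x), shared across all points (W is square)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + x_c)
            for row, x_c in zip(weights, x)]


def pointmlp_stage(groups: List[List[Vector]], w_pre: List[Vector], w_pos: List[Vector]) -> List[Vector]:
    """g_i = Phi_pos(A(Phi_pre(f_ij) | j = 1..K)), with A = channel-wise max-pooling."""
    outputs = []
    for neighbours in groups:                               # one group per sampled point i
        pre = [residual_mlp(w_pre, f) for f in neighbours]  # shared Phi_pre on each f_ij
        pooled = [max(channel) for channel in zip(*pre)]    # A(.): max over the K neighbours
        outputs.append(residual_mlp(w_pos, pooled))         # Phi_pos on the pooled feature
    return outputs


groups = [[[1.0, 2.0], [3.0, -1.0]]]  # n = 1 sampled point, K = 2 neighbours, d = 2
w_pre = [[0.5, 0.0], [0.0, 0.5]]      # hypothetical weights
w_pos = [[0.0, 0.0], [0.0, 0.0]]      # hypothetical weights
print(pointmlp_stage(groups, w_pre, w_pos))  # [[4.5, 3.0]]
```

Because Φpre is shared and max-pooling ignores order, listing the two neighbours in the opposite order yields the same [[4.5, 3.0]]; this is the permutation invariance credited to PointMLP below.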

Advantages of PointMLP

Albeit (although) the framework of PointMLP is succinct (concise), it exhibits some prominent merits.

- Since PointMLP only leverages MLPs, it is naturally invariant to permutation, which perfectly fits the characteristic of point cloud.

- By incorporating residual connections, PointMLP can easily be extended to dozens of layers, resulting in deep feature representations.

- In addition, since no sophisticated extractors are included and the main operation is only highly optimized feed-forward MLPs, PointMLP still runs efficiently even when more layers are introduced.

3.3 GEOMETRIC AFFINE MODULE

To further improve robustness and performance, we also introduce a lightweight geometric affine module that transforms the local points to a normal distribution.

Problems caused by simply stacking more blocks to increase depth

While it may be easy to simply increase the depth by considering more stages or stacking more blocks in Φpre and Φpos, we notice that a simple deep MLP structure decreases accuracy and stability, making the model less robust. This is perhaps caused by the sparse and irregular geometric structures in local regions: diverse geometric structures among different local regions may require different extractors, but shared residual MLPs struggle to achieve this.

Solution

We flesh out (develop, make concrete) this intuition and develop a lightweight geometric affine module to tackle this problem.

That is, a geometric affine module is added to solve the problem.
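Read off the LocalGrouper code in Section 5 (normalize="anchor"), the module shifts each neighbour feature f_{i,j} by its anchor feature f_i, divides by one shared scale σ, and applies a learnable per-channel scale α and shift β. Assuming σ is the root mean square of all n × k × d offsets of one point cloud, this reads

$$\hat{f}_{i,j} = \alpha \odot \frac{f_{i,j} - f_i}{\sigma + \epsilon} + \beta, \qquad \sigma = \sqrt{\frac{1}{k \times n \times d}\sum_{i=1}^{n}\sum_{j=1}^{k}\left(f_{i,j} - f_i\right)^2}$$

A pure-Python sketch of that transform for a single point cloud, with α and β fixed instead of learned. Note that the PyTorch code calls torch.std, which additionally subtracts the mean of the offsets and by default divides by (count − 1), so its numbers agree with this sketch only approximately.

```python
import math
from typing import List

Vector = List[float]


def geometric_affine(groups: List[List[Vector]], anchors: List[Vector],
                     alpha: Vector, beta: Vector, eps: float = 1e-5) -> List[List[Vector]]:
    """Normalise every neighbour f_ij by its anchor f_i and one shared sigma, then scale/shift.

    groups[i][j] is the d-dim feature of neighbour j of sampled point i; anchors[i] is f_i.
    sigma is the root mean square of all n*k*d offsets of this point cloud (assumption).
    alpha and beta are per-channel; learnable in the real model, fixed here.
    """
    offsets = [[[v - a for v, a in zip(f, anchors[i])] for f in neighbours]
               for i, neighbours in enumerate(groups)]
    flat = [v for neighbours in offsets for f in neighbours for v in f]
    sigma = math.sqrt(sum(v * v for v in flat) / len(flat))
    return [[[al * v / (sigma + eps) + be for v, al, be in zip(f, alpha, beta)]
             for f in neighbours] for neighbours in offsets]


out = geometric_affine(groups=[[[1.0, 1.0], [3.0, 3.0]]], anchors=[[2.0, 2.0]],
                       alpha=[2.0, 2.0], beta=[0.5, 0.5])
print(out)  # approximately [[[-1.5, -1.5], [2.5, 2.5]]]
```

Because σ is shared across the whole point cloud rather than computed per region, relative differences in local spread survive the normalisation, while α and β let the network re-scale each channel.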

4. Results achieved by the paper

5. Key code

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

try:
    # CUDA farthest point sampling kernel used by the original repository (pointnet2_ops_lib)
    from pointnet2_ops import pointnet2_utils
except ImportError:
    pointnet2_utils = None


# Helpers the classes below rely on. They are written here in plain PyTorch so the snippet
# runs on its own; they are assumed to behave like the helpers defined in the repository.
def get_activation(activation):
    """Map an activation name to a module; unknown names fall back to ReLU."""
    name = activation.lower()
    if name == "gelu":
        return nn.GELU()
    if name == "leakyrelu":
        return nn.LeakyReLU(inplace=True)
    return nn.ReLU(inplace=True)


def square_distance(src, dst):
    """Pairwise squared Euclidean distance: src [B, N, C], dst [B, M, C] -> [B, N, M]."""
    dist = -2 * torch.matmul(src, dst.permute(0, 2, 1))
    dist += torch.sum(src ** 2, -1).unsqueeze(-1)
    dist += torch.sum(dst ** 2, -1).unsqueeze(1)
    return dist


def index_points(points, idx):
    """Gather points [B, N, C] with indices idx [B, ...] -> [B, ..., C]."""
    B = points.shape[0]
    view_shape = [B] + [1] * (idx.dim() - 1)
    batch_indices = torch.arange(B, device=points.device).view(view_shape).expand_as(idx)
    return points[batch_indices, idx, :]


def farthest_point_sample(xyz, npoint):
    """Iterative farthest point sampling: xyz [B, N, 3] -> indices [B, npoint]."""
    B, N, _ = xyz.shape
    centroids = torch.zeros(B, npoint, dtype=torch.long, device=xyz.device)
    distance = torch.full((B, N), 1e10, device=xyz.device)
    farthest = torch.randint(0, N, (B,), device=xyz.device)
    batch_indices = torch.arange(B, device=xyz.device)
    for i in range(npoint):
        centroids[:, i] = farthest
        centroid = xyz[batch_indices, farthest, :].view(B, 1, 3)
        distance = torch.min(distance, torch.sum((xyz - centroid) ** 2, -1))
        farthest = torch.max(distance, -1)[1]
    return centroids


def knn_point(nsample, xyz, new_xyz):
    """Indices of the nsample nearest neighbours in xyz [B, N, 3] for each point of new_xyz [B, S, 3]."""
    sqrdists = square_distance(new_xyz, xyz)
    _, group_idx = torch.topk(sqrdists, nsample, dim=-1, largest=False, sorted=False)
    return group_idx


class LocalGrouper(nn.Module):
    def __init__(self, channel, groups, kneighbors, use_xyz=True, normalize="center", **kwargs):
        """
        Give xyz[b,p,3] and fea[b,p,d], return new_xyz[b,g,3] and new_fea[b,g,k,d]
        :param groups: groups number
        :param kneighbors: k-neighbors
        :param kwargs: others
        """
        super(LocalGrouper, self).__init__()
        self.groups = groups
        self.kneighbors = kneighbors
        self.use_xyz = use_xyz
        self.normalize = normalize.lower() if normalize is not None else None
        if self.normalize is not None and self.normalize not in ["center", "anchor"]:
            print(f"Unrecognized normalize parameter ({self.normalize}), set to None. Should be one of [center, anchor].")
            self.normalize = None
        if self.normalize is not None:
            # Geometric affine parameters: one learnable scale (alpha) and shift (beta) per channel
            add_channel = 3 if self.use_xyz else 0
            self.affine_alpha = nn.Parameter(torch.ones([1, 1, 1, channel + add_channel]))
            self.affine_beta = nn.Parameter(torch.zeros([1, 1, 1, channel + add_channel]))

    def forward(self, xyz, points):
        B, N, C = xyz.shape
        S = self.groups
        xyz = xyz.contiguous()  # xyz [batch, points, xyz]

        # Sample S anchor points by farthest point sampling (CUDA kernel when available)
        if pointnet2_utils is not None and xyz.is_cuda:
            fps_idx = pointnet2_utils.furthest_point_sample(xyz, self.groups).long()  # [B, npoint]
        else:
            fps_idx = farthest_point_sample(xyz, self.groups).long()  # [B, npoint]
        new_xyz = index_points(xyz, fps_idx)  # [B, npoint, 3]
        new_points = index_points(points, fps_idx)  # [B, npoint, d]

        # Group the k nearest neighbours of every anchor
        idx = knn_point(self.kneighbors, xyz, new_xyz)
        # idx = query_ball_point(radius, nsample, xyz, new_xyz)  # ball-query alternative
        grouped_xyz = index_points(xyz, idx)  # [B, npoint, k, 3]
        grouped_points = index_points(points, idx)  # [B, npoint, k, d]
        if self.use_xyz:
            grouped_points = torch.cat([grouped_points, grouped_xyz], dim=-1)  # [B, npoint, k, d+3]

        # Geometric affine module: normalise the neighbours, then scale/shift with alpha and beta
        if self.normalize is not None:
            if self.normalize == "center":
                mean = torch.mean(grouped_points, dim=2, keepdim=True)
            if self.normalize == "anchor":
                mean = torch.cat([new_points, new_xyz], dim=-1) if self.use_xyz else new_points
                mean = mean.unsqueeze(dim=-2)  # [B, npoint, 1, d+3]
            std = torch.std((grouped_points - mean).reshape(B, -1), dim=-1, keepdim=True).unsqueeze(dim=-1).unsqueeze(dim=-1)
            grouped_points = (grouped_points - mean) / (std + 1e-5)
            grouped_points = self.affine_alpha * grouped_points + self.affine_beta

        # Concatenate every neighbour with its anchor feature -> [B, npoint, k, 2d(+3)]
        new_points = torch.cat([grouped_points, new_points.view(B, S, 1, -1).repeat(1, 1, self.kneighbors, 1)], dim=-1)
        return new_xyz, new_points


class ConvBNReLU1D(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size=1, bias=True, activation='relu'):
        super(ConvBNReLU1D, self).__init__()
        self.act = get_activation(activation)
        self.net = nn.Sequential(
            nn.Conv1d(in_channels=in_channels, out_channels=out_channels, kernel_size=kernel_size, bias=bias),
            nn.BatchNorm1d(out_channels),
            self.act
        )

    def forward(self, x):
        return self.net(x)


class ConvBNReLURes1D(nn.Module):
    """Residual point-MLP block: act(net2(net1(x)) + x)."""

    def __init__(self, channel, kernel_size=1, groups=1, res_expansion=1.0, bias=True, activation='relu'):
        super(ConvBNReLURes1D, self).__init__()
        self.act = get_activation(activation)
        self.net1 = nn.Sequential(
            nn.Conv1d(in_channels=channel, out_channels=int(channel * res_expansion),
                      kernel_size=kernel_size, groups=groups, bias=bias),
            nn.BatchNorm1d(int(channel * res_expansion)),
            self.act
        )
        if groups > 1:
            self.net2 = nn.Sequential(
                nn.Conv1d(in_channels=int(channel * res_expansion), out_channels=channel,
                          kernel_size=kernel_size, groups=groups, bias=bias),
                nn.BatchNorm1d(channel),
                self.act,
                nn.Conv1d(in_channels=channel, out_channels=channel,
                          kernel_size=kernel_size, bias=bias),
                nn.BatchNorm1d(channel),
            )
        else:
            self.net2 = nn.Sequential(
                nn.Conv1d(in_channels=int(channel * res_expansion), out_channels=channel,
                          kernel_size=kernel_size, bias=bias),
                nn.BatchNorm1d(channel)
            )

    def forward(self, x):
        return self.act(self.net2(self.net1(x)) + x)


class PreExtraction(nn.Module):
    """Phi_pre followed by max-pooling over the k neighbours."""

    def __init__(self, channels, out_channels, blocks=1, groups=1, res_expansion=1, bias=True,
                 activation='relu', use_xyz=True):
        """
        input: [b,g,k,d]: output:[b,d,g]
        :param channels:
        :param blocks:
        """
        super(PreExtraction, self).__init__()
        in_channels = 3 + 2 * channels if use_xyz else 2 * channels
        self.transfer = ConvBNReLU1D(in_channels, out_channels, bias=bias, activation=activation)
        operation = []
        for _ in range(blocks):
            operation.append(
                ConvBNReLURes1D(out_channels, groups=groups, res_expansion=res_expansion,
                                bias=bias, activation=activation)
            )
        self.operation = nn.Sequential(*operation)

    def forward(self, x):
        b, n, s, d = x.size()  # torch.Size([32, 512, 32, 6])
        x = x.permute(0, 1, 3, 2)
        x = x.reshape(-1, d, s)
        x = self.transfer(x)
        batch_size, _, _ = x.size()
        x = self.operation(x)  # [b, d, k]
        x = F.adaptive_max_pool1d(x, 1).view(batch_size, -1)  # max over the k neighbours
        x = x.reshape(b, n, -1).permute(0, 2, 1)
        return x


class PosExtraction(nn.Module):
    """Phi_pos applied to the aggregated features."""

    def __init__(self, channels, blocks=1, groups=1, res_expansion=1, bias=True, activation='relu'):
        """
        input[b,d,g]; output[b,d,g]
        :param channels:
        :param blocks:
        """
        super(PosExtraction, self).__init__()
        operation = []
        for _ in range(blocks):
            operation.append(
                ConvBNReLURes1D(channels, groups=groups, res_expansion=res_expansion, bias=bias, activation=activation)
            )
        self.operation = nn.Sequential(*operation)

    def forward(self, x):  # [b, d, g]
        return self.operation(x)


class Model(nn.Module):
    def __init__(self, points=1024, class_num=40, embed_dim=64, groups=1, res_expansion=1.0,
                 activation="relu", bias=True, use_xyz=True, normalize="center",
                 dim_expansion=[2, 2, 2, 2], pre_blocks=[2, 2, 2, 2], pos_blocks=[2, 2, 2, 2],
                 k_neighbors=[32, 32, 32, 32], reducers=[2, 2, 2, 2], **kwargs):
        super(Model, self).__init__()
        self.stages = len(pre_blocks)
        self.class_num = class_num
        self.points = points
        self.embedding = ConvBNReLU1D(3, embed_dim, bias=bias, activation=activation)
        assert len(pre_blocks) == len(k_neighbors) == len(reducers) == len(pos_blocks) == len(dim_expansion), \
            "Please check stage number consistent for pre_blocks, pos_blocks k_neighbors, reducers."
        self.local_grouper_list = nn.ModuleList()
        self.pre_blocks_list = nn.ModuleList()
        self.pos_blocks_list = nn.ModuleList()
        last_channel = embed_dim
        anchor_points = self.points
        for i in range(len(pre_blocks)):
            out_channel = last_channel * dim_expansion[i]
            pre_block_num = pre_blocks[i]
            pos_block_num = pos_blocks[i]
            kneighbor = k_neighbors[i]
            reduce = reducers[i]
            anchor_points = anchor_points // reduce
            # append local_grouper_list
            local_grouper = LocalGrouper(last_channel, anchor_points, kneighbor, use_xyz, normalize)  # [b,g,k,d]
            self.local_grouper_list.append(local_grouper)
            # append pre_block_list
            pre_block_module = PreExtraction(last_channel, out_channel, pre_block_num, groups=groups,
                                             res_expansion=res_expansion,
                                             bias=bias, activation=activation, use_xyz=use_xyz)
            self.pre_blocks_list.append(pre_block_module)
            # append pos_block_list
            pos_block_module = PosExtraction(out_channel, pos_block_num, groups=groups,
                                             res_expansion=res_expansion, bias=bias, activation=activation)
            self.pos_blocks_list.append(pos_block_module)
            last_channel = out_channel

        self.act = get_activation(activation)
        self.classifier = nn.Sequential(
            nn.Linear(last_channel, 512),
            nn.BatchNorm1d(512),
            self.act,
            nn.Dropout(0.5),
            nn.Linear(512, 256),
            nn.BatchNorm1d(256),
            self.act,
            nn.Dropout(0.5),
            nn.Linear(256, self.class_num)
        )

    def forward(self, x):
        xyz = x.permute(0, 2, 1)
        batch_size, _, _ = x.size()
        x = self.embedding(x)  # B,D,N
        for i in range(self.stages):
            # Give xyz[b, p, 3] and fea[b, p, d], return new_xyz[b, g, 3] and new_fea[b, g, k, d]
            xyz, x = self.local_grouper_list[i](xyz, x.permute(0, 2, 1))  # [b,g,3] [b,g,k,d]
            x = self.pre_blocks_list[i](x)  # [b,d,g]
            x = self.pos_blocks_list[i](x)  # [b,d,g]
        x = F.adaptive_max_pool1d(x, 1).squeeze(dim=-1)  # global max-pooling over remaining points
        x = self.classifier(x)
        return x


def pointMLP(num_classes=40, **kwargs) -> Model:
    return Model(points=1024, class_num=num_classes, embed_dim=64, groups=1, res_expansion=1.0,
                 activation="relu", bias=False, use_xyz=False, normalize="anchor",
                 dim_expansion=[2, 2, 2, 2], pre_blocks=[2, 2, 2, 2], pos_blocks=[2, 2, 2, 2],
                 k_neighbors=[24, 24, 24, 24], reducers=[2, 2, 2, 2], **kwargs)


def pointMLPElite(num_classes=40, **kwargs) -> Model:
    return Model(points=1024, class_num=num_classes, embed_dim=32, groups=1, res_expansion=0.25,
                 activation="relu", bias=False, use_xyz=False, normalize="anchor",
                 dim_expansion=[2, 2, 2, 1], pre_blocks=[1, 1, 2, 1], pos_blocks=[1, 1, 2, 1],
                 k_neighbors=[24, 24, 24, 24], reducers=[2, 2, 2, 2], **kwargs)


if __name__ == '__main__':
    data = torch.rand(2, 3, 1024)
    print("===> testing pointMLP ...")
    model = pointMLP()
    out = model(data)
    print(out.shape)  # torch.Size([2, 40])
```
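To see how shapes evolve across the four stages, the loop in Model.__init__ can be traced without PyTorch. The helper stage_schedule below is hypothetical (it is not part of the repository); it only mirrors that loop's arithmetic for the two configurations above:

```python
from typing import List, Tuple


def stage_schedule(points: int, embed_dim: int, dim_expansion: List[int],
                   reducers: List[int], use_xyz: bool = False) -> List[Tuple[int, int, int]]:
    """Per stage: (anchor points kept by FPS, PreExtraction input channels, stage output channels)."""
    schedule = []
    last_channel, anchor_points = embed_dim, points
    for expand, reduce in zip(dim_expansion, reducers):
        anchor_points //= reduce
        # LocalGrouper concatenates each normalised neighbour with its repeated anchor feature,
        # so PreExtraction sees 2*C input channels (+3 when xyz is appended).
        in_channels = 3 + 2 * last_channel if use_xyz else 2 * last_channel
        out_channel = last_channel * expand
        schedule.append((anchor_points, in_channels, out_channel))
        last_channel = out_channel
    return schedule


print(stage_schedule(1024, 64, [2, 2, 2, 2], [2, 2, 2, 2]))
# [(512, 128, 128), (256, 256, 256), (128, 512, 512), (64, 1024, 1024)]
print(stage_schedule(1024, 32, [2, 2, 2, 1], [2, 2, 2, 2]))
# [(512, 64, 64), (256, 128, 128), (128, 256, 256), (64, 512, 256)]
```

The last output channel is what the classifier's first nn.Linear receives: 1024 for pointMLP and 256 for pointMLPElite, which is one reason the elite variant is much lighter.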

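6. Useful words, collocations, and expressions learned from this paper

Albeit (although)

opt to (choose to)

succinct (concise)

flesh out (develop, make concrete) this intuition

tackle this problem (solve this problem)

incur (bring about) unfavorable latency (cause undesirable latency)

prior works

Empirically (based on observation or experiment)

performs very competitively

we empirically found that... (in our experiments, we found that...)

we emphasize that... (we stress that...)

largely hamper (impede) the performance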
