效果

实现

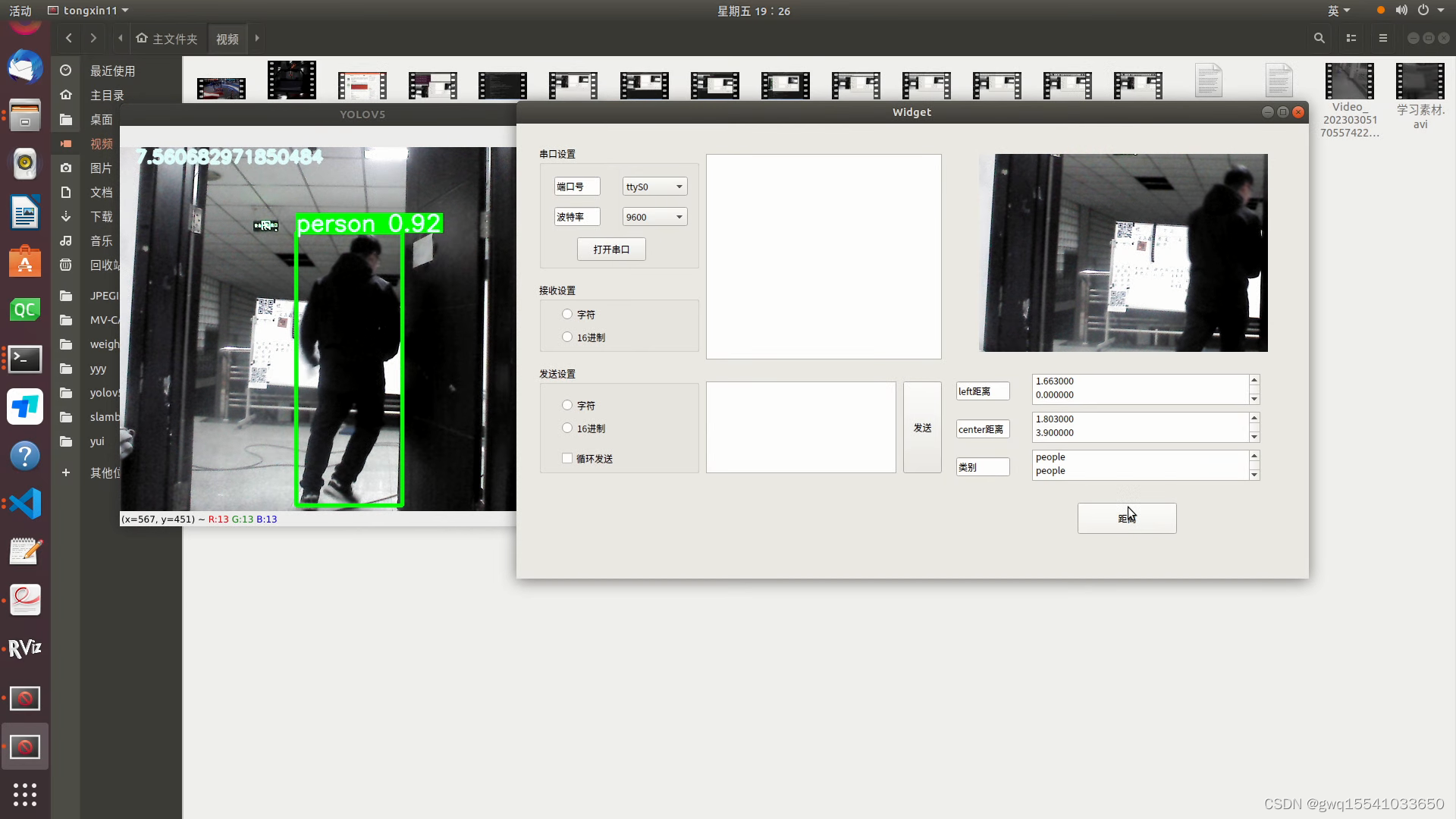

本项目采用ros框架,用yolov5作为识别模块,采用奥比中光astra深度相机测距,ui界面显示实时图像,点击显示距离。

代码解释:

思路是把yolov5封装成功能包,用python写一个ros收发接口调用模型。主函数接受yolov5模块识别到的数据,调用深度图像得到距离,再把图像和距离显示在ui界面上。

本程序预留串口通信,可与嵌入式端进行通信,扩展成更多项目。

main.cpp

#include <ros/ros.h>

#include <sensor_msgs/Image.h>

#include <sensor_msgs/image_encodings.h>

#include <cv_bridge/cv_bridge.h>

#include <iostream>

#include <opencv2/opencv.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <image_transport/image_transport.h>

#include <sensor_msgs/Image.h>

#include <sensor_msgs/CameraInfo.h>

#include <opencv2/highgui/highgui.hpp>

#include <cv_bridge/cv_bridge.h>

#include <message_filters/subscriber.h>

#include <message_filters/time_synchronizer.h>

#include<message_filters/sync_policies/approximate_time.h>

#include <pcl/point_cloud.h>

#include<thread>

#include<pcl/point_types.h>

#include<pcl_conversions/pcl_conversions.h>

#include<pcl/visualization/cloud_viewer.h>

#include <sensor_msgs/PointCloud2.h>

#include "widget.h"

#include <QApplication>

#include "std_msgs/String.h"

using namespace std;

using namespace sensor_msgs;

using namespace message_filters;

using namespace cv;

typedef pcl::PointXYZRGB PointT;

typedef pcl::PointCloud<PointT> testcloud;

//#include<realsense_dev/depth_value.h> //自定义一个消息类型

cv::Mat depth_pic; //定义全局变量,图像矩阵Mat形式

String some="someee";

char some1[30];

//realsense_dev::depth_value command_to_pub; //待发布数据

double x,y,z;

int n=0;

void depthCallback(const sensor_msgs::Image::ConstPtr&msg)

{

cv_bridge::CvImagePtr Dest ;

Dest = cv_bridge::toCvCopy(msg,sensor_msgs::image_encodings::TYPE_16UC1);

depth_pic = Dest->image;

//cout<<"Output some info about the depth image in cv format"<<endl;

//cout<< "Rows of the depth iamge = "<<depth_pic.rows<<endl; //获取深度图的行数height

//cout<< "Cols of the depth iamge = "<<depth_pic.cols<<endl; //获取深度图的列数width

//cout<< "Type of depth_pic's element = "<<depth_pic.type()<<endl; //深度图的类型

ushort Ld = depth_pic.at<ushort>(command_to_pub2.x+5,command_to_pub2.y+5); //读取深度值,数据类型为ushort单位为mm

ushort Rd = depth_pic.at<ushort>((command_to_pub2.x1+command_to_pub2.x)/2,(command_to_pub2.y1+command_to_pub2.y)/2); //读取深度值,数据类型为ushort单位为mm

//ushort Ld = depth_pic.at<ushort>(400,788); //读取深度值,数据类型为ushort单位为mm

//ushort Rd = depth_pic.at<ushort>(406,800); //读取深度值,数据类型为ushort单位为mm

Rd_value = float(Rd)/1000 ; //强制转换

Ld_value = float(Ld)/1000 ; //强制转换

cout<< "Value of Rdepth_pic's pixel= "<<Rd_value<< "Value of Ldepth_pic's pixel= "<<Ld_value<<endl; //读取深度值

}

void imageCallback(const sensor_msgs::ImageConstPtr& msg)

{

// clock_t start,ends;

// start=clock();

try

{

cv::Mat image = cv_bridge::toCvShare(msg, "bgra8")->image; //image_raw就是我们得到的图像了

int rows = image.rows;//std::cout<<"rows="<<rows<<std::endl;

int cols = image.cols;//std::cout<<"cols="<<cols<<std::endl;

cvimage=image;

}

catch (cv_bridge::Exception &e)

{

ROS_ERROR("Could not conveextrinsicMat_RT from '%s' to 'rgb8'.", msg->encoding.c_str());

}

// ends=clock();

// std::cout<<"img call:"<<(double (ends-start)/CLOCKS_PER_SEC)<<std::endl;

}

void leidaCallback(const std_msgs::String::ConstPtr& msg_p)

{

ROS_INFO("%s",msg_p->data.c_str());

some=msg_p->data.c_str();

//ROS_INFO("我听见:%s",(*msg_p).data.c_str());

char x[6],y[6],x1[6],y1[6];

int i;

for(i=0;i<some.length();i++)

{

if(some[i-1]=='F' && some[i]=='C')

{

command_to_pub1.Value[0]=some[i+1];

command_to_pub1.Value[1]=some[i+2];

}

if(some[i-1]=='y') //x y q w h

{

for(int q=0;q<6;q++)

{

if(some[i+q]!= '.' )

{

y[q]=some[i+q];

if(some[i+q]== 'q' )

{

break;

}

}

else

{

continue;

}

}

}

if(some[i-1]=='x')

{

for(int q=0;q<6;q++)

{

if(some[i+q]!= '.' )

{

x[q]=some[i+q];

if(some[i+q]== 'y' )

{

break;

}

}

else

{

continue;

}

}

}

if(some[i-1]== 'w' )

{

for(int q=0;q<6;q++)

{

if(some[i+q]!= '.' )

{

y1[q]=some[i+q];

if(some[i+q]== 'h' )

{

break;

}

}

else

{

continue;

}

}

}

if( some[i-1]== 'q' )

{

for(int q=0;q<6;q++)

{

if(some[i+q]!= '.' )

{

x1[q]=some[i+q];

if(some[i+q]== 'w' )

{

break;

}

}

else

{

continue;

}

}

}

}

command_to_pub2.x = atoi(x);

command_to_pub2.y= atoi(y);

command_to_pub2.x1= atoi(x1);

command_to_pub2.y1= atoi(y1);

printf("\nsome:%d,%d,%d,%d",command_to_pub2.x,command_to_pub2.y,command_to_pub2.x1,command_to_pub2.y1);

}

int uuu(int argc, char *argv[])

{

QApplication a(argc, argv);

Widget w;

w.show();

return a.exec();

}

void ttt(int argc, char *argv[])

{

ros::init(argc, argv, "stream_pub"); // ros节点初始化

ros::NodeHandle nh; //创建节点句柄

image_transport::ImageTransport it(nh);

ros::AsyncSpinner spinner(1);

spinner.start();

ros::Subscriber sub1 = nh.subscribe<std_msgs::String>("/leida_num",1,leidaCallback);

ros::Subscriber image_sub = nh.subscribe<sensor_msgs::Image>("/camera/depth/image_raw",1,depthCallback); //订阅深度图像

image_transport::Subscriber sub = it.subscribe("/camera/color/image_raw", 1, &imageCallback);

//ros::Subscriber element_sub = nh.subscribe<sensor_msgs::Image>("/camera/aligned_depth_to_color/image_raw",100,pixelCallback); //订阅像素点坐标

//ros::Publisher mode_pub = nh.advertise<realsense_dev::depth_value>("/depth_info", 10);

//command_to_pub.Value = 0; //初始化深度值

ros::Rate rate(20.0); //设定自循环的频率

while(ros::ok)

{

//command_to_pub.header.stamp = ros::Time::now();

//command_to_pub.Value = d_value; //depth_pic.rows/2,depth_pic.cols/2 为像素点

}

ros::Duration(10).sleep(); //设定自循环的频率

}

int main(int argc,char *argv[])

{

int yt;

thread th2(uuu,argc, argv);

thread th3(ttt,argc, argv);

//uuu(argc, argv);

th2.join();

th3.join();

return yt;

}

widget.cpp片段

void Widget::showww()

{

Rect rect(command_to_pub2.x,command_to_pub2.y,

command_to_pub2.x1-command_to_pub2.x,command_to_pub2.y1-command_to_pub2.y);

Mat mat = cvimage;

//cvtColor( mat, mat, COLOR_BGR2RGB);//BGR转化为RGB

QImage::Format format = QImage::Format_ARGB32;

switch ( mat.type())

{

case CV_8UC1:

format = QImage::Format_Indexed8;

break;

case CV_8UC3:

format = QImage::Format_RGB888;

break;

case CV_8UC4:

format = QImage::Format_ARGB32;

break;

}

QImage image = QImage((const uchar*) mat.data, mat.cols, mat.rows,

mat.cols * mat.channels(), format);

/*cvtColor(mat, mat, CV_BGR2RGB);

QImage image(mat.data,

mat.cols,

mat.rows,

mat.step,

QImage::Format_RGB888);*/

//QString filename("/home/q/图片/标定二维码/image/1.bmp");

QImage* img=new QImage;

img=ℑ

ui->labImgShow->setPixmap(QPixmap::fromImage(*img));

timer->start(20);//启动计时器

}

void Widget::text()

{

float uio=5.8;

String utf5=to_string( Ld_value);

char utf8[20];

strcpy( utf8,utf5.c_str());

QString str2=QString::fromUtf8(utf8);

ui->Ldepth_textEdit->append(str2); //接收区显示 -- 追加方式

ui->Ldepth_textEdit->show();

String utf6=to_string( Rd_value);

char utf9[20];

strcpy( utf9,utf6.c_str());

QString str3=QString::fromUtf8(utf9);

ui->Rdepth_textEdit->append(str3); //接收区显示 -- 追加方式

ui->Rdepth_textEdit->show();

String utf59= "people";

char utf89[20];

strcpy( utf89,utf59.c_str());

QString str26=QString::fromUtf8(utf89);

ui->name_textEdit->append(str26); //接收区显示 -- 追加方式

ui->name_textEdit->show();

}yolov5识别片段

# src=cv2.imread('biye.jpg')

def detect(img):

time1 = time.time()

global ros_image

global xytoxy

global label_name

cudnn.benchmark = True

dataset = loadimg(img)

# print(dataset[3])

# plt.imshow(dataset[2][:, :, ::-1])

names = model.module.names if hasattr(model, 'module') else model.names

# colors = [[random.randint(0, 255) for _ in range(3)] for _ in range(len(names))]

# colors=[[0,255,0]]

augment = 'store_true'

conf_thres = 0.3

iou_thres = 0.45

classes = (0, 1, 2, 3, 5, 7)

agnostic_nms = 'store_true'

img = torch.zeros((1, 3, imgsz, imgsz), device=device) # init img

_ = model(img.half() if half else img) if device.type != 'cpu' else None # run once

path = dataset[0]

img = dataset[1]

im0s = dataset[2]

vid_cap = dataset[3]

img = torch.from_numpy(img).to(device)

img = img.half() if half else img.float() # uint8 to fp16/32

img /= 255.0 # 0 - 255 to 0.0 - 1.0

time2 = time.time()

if img.ndimension() == 3:

img = img.unsqueeze(0)

# Inference

pred = model(img, augment=augment)[0]

# Apply NMS

pred = non_max_suppression(pred, conf_thres, iou_thres, classes=classes, agnostic=agnostic_nms)

view_img = 1

save_txt = 1

save_conf = 'store_true'

time3 = time.time()

for i, det in enumerate(pred): # detections per image

p, s, im0 = path, '', im0s

s += '%gx%g ' % img.shape[2:] # print string

gn = torch.tensor(im0.shape)[[1, 0, 1, 0]] # normalization gain whwh

if det is not None:

# print(det)

# Rescale boxes from img_size to im0 size

det[:, :4] = scale_coords(img.shape[2:], det[:, :4], im0.shape).round()

# Print results

for c in det[:, -1].unique():

n = (det[:, -1] == c).sum() # detections per class

s += '%g %ss, ' % (n, names[int(c)]) # add to string

# Write results

for *xyxy, conf, cls in reversed(det):

if save_txt: # Write to file

xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist() # normalized xywh

line = (cls, conf, *xywh) if save_conf else (cls, *xywh) # label format

y = torch.tensor(xyxy).view(1, 4)

xy = y.clone() if isinstance(y, torch.Tensor) else np.copy(y)

xytoxy = xy.view(-1).tolist()

if view_img: # Add bbox to image

label = '%s %.2f' % (names[int(cls)], conf)

label_name = names[int(cls)]

plot_one_box(xyxy, im0, label=label, color=[0, 255, 0], line_thickness=3)

time4 = time.time()

cv2.putText(im0, str(fps), (20, 20), 0, 0.75, [225, 255, 255], thickness=3, lineType=cv2.LINE_AA)

print('************')

print('2-1', time2 - time1)

print('3-2', time3 - time2)

print('4-3', time4 - time3)

print('total', time4 - time1)

print('xy2xy', xytoxy[0])

print("label_name:", label_name)

some = "FC" + str(label_name) + "x" + str(xytoxy[0]) + "y" + str(xytoxy[1]) + "q" + str(xytoxy[2]) + "w" + str(xytoxy[3]) +"h"+ "RA"

print("label_name:", some)

out_img = im0[:, :, [2, 1, 0]]

ros_image = out_img

cv2.imshow('YOLOV5', out_img)

a = cv2.waitKey(1)

#### Create CompressedIamge ####

# publish_image(im0)

publish_image1(some)CmakeList.cpp

cmake_minimum_required(VERSION 3.5)

project(picture2pcl)

## Compile as C++11, supported in ROS Kinetic and newer

#add_compile_options(-std=c++11)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_CXX_STANDARD_REQUIRED True)

set(CMAKE_AUTOUIC ON)

set(CMAKE_AUTOMOC ON)

set(CMAKE_AUTORCC ON)

find_library(librealsense REQUIRED)

find_package(catkin REQUIRED COMPONENTS

geometry_msgs

roscpp

rospy

std_msgs

cv_bridge

message_generation

image_transport

pcl_ros

sensor_msgs

tf

)

find_package(Eigen3 REQUIRED)

find_package(PCL REQUIRED)

#find_package(realsense2 REQUIRED)

find_package(OpenCV REQUIRED)

catkin_package(

# INCLUDE_DIRS include

# LIBRARIES realsense_dev

CATKIN_DEPENDS roscpp std_msgs

DEPENDS EIGEN3 PCL OpenCV

INCLUDE_DIRS

# DEPENDS system_lib

)

include_directories(

# include

${catkin_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

${EIGEN3_INCLUDE_DIR}

${PCL_INCLUDE_DIRS}

)

#add_executable(stream_pub src/stream_pub.cpp)

#target_link_libraries(stream_pub ${catkin_LIBRARIES} ${OpenCV_LIBS})

#add_executable(main src/main.cpp src/serial_communication.cpp )

#find_package(Qt5 COMPONENTS Widgets REQUIRED)

#find_package(Qt5 COMPONENTS Core SerialPort REQUIRED)

find_package(Qt5Widgets REQUIRED)

find_package(Qt5Core REQUIRED)

find_package(Qt5Gui REQUIRED)

find_package(Qt5SerialPort REQUIRED)

if(ANDROID)

add_library(tongxin11 SHARED

src/main.cpp

src/serial_communication.cpp

src/serial_communication.h

src/main.cpp

src/widget.cpp

src/widget.h

src/widget.ui

)

else()

add_executable(tongxin11

src/main.cpp

src/serial_communication.cpp

src/serial_communication.h

src/main.cpp

src/main.cpp

src/widget.cpp

src/widget.h

src/widget.ui

)

endif()

target_link_libraries(tongxin11 ${Qt5Core_LIBRARIES} ${Qt5Widgets_LIBRARIES} ${Qt5SerialPort_LIBRARIES} ${catkin_LIBRARIES} ${PCL_LIBRARIES} ${OpenCV_LIBS} -lpthread -luuid)

#target_link_libraries(serialportLIB ${Qt5Core_LIBRARIES} ${Qt5Widgets_LIBRARIES} ${Qt5SerialPort_LIBRARIES})

#target_link_libraries(tongxin11 ${catkin_LIBRARIES} ${PCL_LIBRARIES} ${OpenCV_LIBS})

奥比中光深度相机驱动

2388

2388

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?