简介

地图优化实现在mapOptmization.cpp 中。

进行的内容主要是地图优化,将得到的局部地图信息融合到全局地图中去。

论文原理

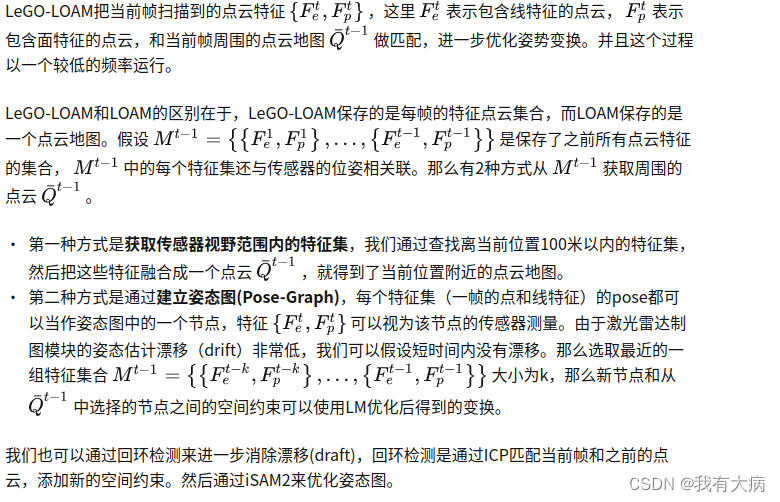

以下内容引自:LeGO-LOAM分析之建图(三)

源码解读

老样子先来看看main函数:

loopthread主要是实现闭环检测的功能,visualizeMapThread实现可视化的功能,

主要功能都在run函数中。

int main(int argc, char** argv)

{

ros::init(argc, argv, "lego_loam");

ROS_INFO("\033[1;32m---->\033[0m Map Optimization Started.");

mapOptimization MO;

// std::thread 构造函数,将MO作为参数传入构造的线程中使用

// 进行闭环检测与闭环的功能

std::thread loopthread(&mapOptimization::loopClosureThread, &MO);

// 该线程中进行的工作是publishGlobalMap(),将数据发布到ros中,可视化

std::thread visualizeMapThread(&mapOptimization::visualizeGlobalMapThread, &MO);

ros::Rate rate(200);

while (ros::ok())

{

ros::spinOnce();

MO.run();

rate.sleep();

}

loopthread.join();

visualizeMapThread.join();

return 0;

}

进入到loopClosureThread闭环线程中,闭环没开启直接返回,否则以一定频率运行performLoopClosure(),执行闭环。

void loopClosureThread(){

if (loopClosureEnableFlag == false)

return;

ros::Rate rate(1);

while (ros::ok()){

rate.sleep();

performLoopClosure();

}

}

先进行闭环检测detectLoopClosure(),如果返回true,则可能可以进行闭环,否则直接返回,程序结束。

void performLoopClosure(){

if (cloudKeyPoses3D->points.empty() == true)

return;

if (potentialLoopFlag == false){

if (detectLoopClosure() == true){

potentialLoopFlag = true;

timeSaveFirstCurrentScanForLoopClosure = timeLaserOdometry;

}

if (potentialLoopFlag == false)

return;

}

闭环检测初始化:

bool detectLoopClosure(){

latestSurfKeyFrameCloud->clear();

nearHistorySurfKeyFrameCloud->clear();

nearHistorySurfKeyFrameCloudDS->clear();

// 资源分配时初始化

// 在互斥量被析构前不解锁

std::lock_guard<std::mutex> lock(mtx);

std::vector<int> pointSearchIndLoop;

std::vector<float> pointSearchSqDisLoop;

kdtreeHistoryKeyPoses->setInputCloud(cloudKeyPoses3D);

cloudKeyPoses3D保存的是点在xyz三轴上的位移以及一个索引cloudKeyPoses3D->points.size()

搜索邻域点:

// 进行半径historyKeyframeSearchRadius内的邻域搜索,

// currentRobotPosPoint:需要查询的点,

// pointSearchIndLoop:搜索完的邻域点对应的索引

// pointSearchSqDisLoop:搜索完的每个邻域点与当前点之间的欧式距离

// 0:返回的邻域个数,为0表示返回全部的邻域点

kdtreeHistoryKeyPoses->radiusSearch(currentRobotPosPoint, historyKeyframeSearchRadius, pointSearchIndLoop, pointSearchSqDisLoop, 0);

筛选邻域内的关键点,首先时间太短不能满足闭环条件,如果邻域内没有满足条件的点,直接返回。

closestHistoryFrameID = -1;

for (int i = 0; i < pointSearchIndLoop.size(); ++i){

int id = pointSearchIndLoop[i];

// 两个时间差值大于30秒

if (abs(cloudKeyPoses6D->points[id].time - timeLaserOdometry) > 30.0){

closestHistoryFrameID = id;

break;

}

}

if (closestHistoryFrameID == -1){

// 找到的点和当前时间上没有超过30秒的

return false;

}

点云坐标转换,旋转rpy:

latestFrameIDLoopCloure = cloudKeyPoses3D->points.size() - 1;

// 点云的xyz坐标进行坐标系变换(分别绕xyz轴旋转)

*latestSurfKeyFrameCloud += *transformPointCloud(cornerCloudKeyFrames[latestFrameIDLoopCloure], &cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

*latestSurfKeyFrameCloud += *transformPointCloud(surfCloudKeyFrames[latestFrameIDLoopCloure], &cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

过滤点云无用点(intensity是-1,说明这些点前面根本没进行处理,当然不能用来闭环):

for (int i = 0; i < cloudSize; ++i){

// intensity不小于0的点放进hahaCloud队列

// 初始化时intensity是-1,滤掉那些点

if ((int)latestSurfKeyFrameCloud->points[i].intensity >= 0){

hahaCloud->push_back(latestSurfKeyFrameCloud->points[i]);

}

}

latestSurfKeyFrameCloud->clear();

*latestSurfKeyFrameCloud = *hahaCloud;

历史关键帧点坐标变换:

// historyKeyframeSearchNum在utility.h中定义为25,前后25个点进行变换

for (int j = -historyKeyframeSearchNum; j <= historyKeyframeSearchNum; ++j){

if (closestHistoryFrameID + j < 0 || closestHistoryFrameID + j > latestFrameIDLoopCloure)

continue;

// 要求closestHistoryFrameID + j在0到cloudKeyPoses3D->points.size()-1之间,不能超过索引

*nearHistorySurfKeyFrameCloud += *transformPointCloud(cornerCloudKeyFrames[closestHistoryFrameID+j], &cloudKeyPoses6D->points[closestHistoryFrameID+j]);

*nearHistorySurfKeyFrameCloud += *transformPointCloud(surfCloudKeyFrames[closestHistoryFrameID+j], &cloudKeyPoses6D->points[closestHistoryFrameID+j]);

}

下采样,以提高系统效率:

// 下采样滤波减少数据量

downSizeFilterHistoryKeyFrames.setInputCloud(nearHistorySurfKeyFrameCloud);

downSizeFilterHistoryKeyFrames.filter(*nearHistorySurfKeyFrameCloudDS);

if (pubHistoryKeyFrames.getNumSubscribers() != 0){

sensor_msgs::PointCloud2 cloudMsgTemp;

pcl::toROSMsg(*nearHistorySurfKeyFrameCloudDS, cloudMsgTemp);

cloudMsgTemp.header.stamp = ros::Time().fromSec(timeLaserOdometry);

cloudMsgTemp.header.frame_id = "/camera_init";

pubHistoryKeyFrames.publish(cloudMsgTemp);

}

return true;

}

回到performLoopClosure中,能进行闭环检测后,进行icp配准

pcl::IterativeClosestPoint<PointType, PointType> icp;

icp.setMaxCorrespondenceDistance(100);

icp.setMaximumIterations(100);

icp.setTransformationEpsilon(1e-6);

icp.setEuclideanFitnessEpsilon(1e-6);

// 设置RANSAC运行次数

icp.setRANSACIterations(0);

icp.setInputSource(latestSurfKeyFrameCloud);

// 使用detectLoopClosure()函数中下采样刚刚更新nearHistorySurfKeyFrameCloudDS

icp.setInputTarget(nearHistorySurfKeyFrameCloudDS);

pcl::PointCloud<PointType>::Ptr unused_result(new pcl::PointCloud<PointType>());

// 进行icp点云对齐

icp.align(*unused_result);

// 为什么匹配分数高直接返回???分数高代表噪声太多

if (icp.hasConverged() == false || icp.getFitnessScore() > historyKeyframeFitnessScore)

return;

// 以下在点云icp收敛并且噪声量在一定范围内进行

if (pubIcpKeyFrames.getNumSubscribers() != 0){

pcl::PointCloud<PointType>::Ptr closed_cloud(new pcl::PointCloud<PointType>());

// icp.getFinalTransformation()的返回值是Eigen::Matrix<Scalar, 4, 4>

pcl::transformPointCloud (*latestSurfKeyFrameCloud, *closed_cloud, icp.getFinalTransformation());

sensor_msgs::PointCloud2 cloudMsgTemp;

pcl::toROSMsg(*closed_cloud, cloudMsgTemp);

cloudMsgTemp.header.stamp = ros::Time().fromSec(timeLaserOdometry);

cloudMsgTemp.header.frame_id = "/camera_init";

pubIcpKeyFrames.publish(cloudMsgTemp);

}

得到配准后的四元数并利用gtsam的方法优化地图:

float x, y, z, roll, pitch, yaw;

Eigen::Affine3f correctionCameraFrame;

correctionCameraFrame = icp.getFinalTransformation();

// 得到平移和旋转的角度

pcl::getTranslationAndEulerAngles(correctionCameraFrame, x, y, z, roll, pitch, yaw);

Eigen::Affine3f correctionLidarFrame = pcl::getTransformation(z, x, y, yaw, roll, pitch);

Eigen::Affine3f tWrong = pclPointToAffine3fCameraToLidar(cloudKeyPoses6D->points[latestFrameIDLoopCloure]);

Eigen::Affine3f tCorrect = correctionLidarFrame * tWrong;

pcl::getTranslationAndEulerAngles (tCorrect, x, y, z, roll, pitch, yaw);

gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(roll, pitch, yaw), Point3(x, y, z));

gtsam::Pose3 poseTo = pclPointTogtsamPose3(cloudKeyPoses6D->points[closestHistoryFrameID]);

gtsam::Vector Vector6(6);

float noiseScore = icp.getFitnessScore();

Vector6 << noiseScore, noiseScore, noiseScore, noiseScore, noiseScore, noiseScore;

constraintNoise = noiseModel::Diagonal::Variances(Vector6);

std::lock_guard<std::mutex> lock(mtx);

gtSAMgraph.add(BetweenFactor<Pose3>(latestFrameIDLoopCloure, closestHistoryFrameID, poseFrom.between(poseTo), constraintNoise));

isam->update(gtSAMgraph);

isam->update();

gtSAMgraph.resize(0);

aLoopIsClosed = true;

}

以上是loopClosureThread闭环线程,下面是可视化线程,这个没什么好理解的

通过KDTree进行最近邻搜索;

通过搜索得到的索引放进队列;

通过两次下采样,减小数据量;:

void visualizeGlobalMapThread(){

ros::Rate rate(0.2);

while (ros::ok()){

rate.sleep();

publishGlobalMap();

}

}

void publishGlobalMap(){

if (pubLaserCloudSurround.getNumSubscribers() == 0)

return;

if (cloudKeyPoses3D->points.empty() == true)

return;

std::vector<int> pointSearchIndGlobalMap;

std::vector<float> pointSearchSqDisGlobalMap;

mtx.lock();

kdtreeGlobalMap->setInputCloud(cloudKeyPoses3D);

// 通过KDTree进行最近邻搜索

kdtreeGlobalMap->radiusSearch(currentRobotPosPoint, globalMapVisualizationSearchRadius, pointSearchIndGlobalMap, pointSearchSqDisGlobalMap, 0);

mtx.unlock();

for (int i = 0; i < pointSearchIndGlobalMap.size(); ++i)

globalMapKeyPoses->points.push_back(cloudKeyPoses3D->points[pointSearchIndGlobalMap[i]]);

// 对globalMapKeyPoses进行下采样

downSizeFilterGlobalMapKeyPoses.setInputCloud(globalMapKeyPoses);

downSizeFilterGlobalMapKeyPoses.filter(*globalMapKeyPosesDS);

for (int i = 0; i < globalMapKeyPosesDS->points.size(); ++i){

int thisKeyInd = (int)globalMapKeyPosesDS->points[i].intensity;

*globalMapKeyFrames += *transformPointCloud(cornerCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

*globalMapKeyFrames += *transformPointCloud(surfCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

*globalMapKeyFrames += *transformPointCloud(outlierCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);

}

// 对globalMapKeyFrames进行下采样

downSizeFilterGlobalMapKeyFrames.setInputCloud(globalMapKeyFrames);

downSizeFilterGlobalMapKeyFrames.filter(*globalMapKeyFramesDS);

sensor_msgs::PointCloud2 cloudMsgTemp;

pcl::toROSMsg(*globalMapKeyFramesDS, cloudMsgTemp);

cloudMsgTemp.header.stamp = ros::Time().fromSec(timeLaserOdometry);

cloudMsgTemp.header.frame_id = "/camera_init";

pubLaserCloudSurround.publish(cloudMsgTemp);

globalMapKeyPoses->clear();

globalMapKeyPosesDS->clear();

globalMapKeyFrames->clear();

globalMapKeyFramesDS->clear();

}

然后分析run函数,首先判断是否有新的数据到来并且时间差值小于0.005;

void run(){

if (newLaserCloudCornerLast && std::abs(timeLaserCloudCornerLast - timeLaserOdometry) < 0.005 &&

newLaserCloudSurfLast && std::abs(timeLaserCloudSurfLast - timeLaserOdometry) < 0.005 &&

newLaserCloudOutlierLast && std::abs(timeLaserCloudOutlierLast - timeLaserOdometry) < 0.005 &&

newLaserOdometry)

{

满足条件后,再将新数据标志位复位:

newLaserCloudCornerLast = false; newLaserCloudSurfLast = false; newLaserCloudOutlierLast = false; newLaserOdometry = false;

案然后对mtx上锁,如果当前数据距离上一次处理数据的时间间隔大于设设定的阈值则进行以下操作:

1 将坐标转移到世界坐标系下,得到可用于建图的Lidar坐标;

2 提取周围的关键帧;

3 下采样当前scan;

4 当前scan进行图优化过程;

5 保存关键帧和因子;

6 校正位姿;

7 发布Tf;

8 发布关键位姿和帧数据;

std::lock_guard<std::mutex> lock(mtx);

if (timeLaserOdometry - timeLastProcessing >= mappingProcessInterval) {

timeLastProcessing = timeLaserOdometry;

transformAssociateToMap();

extractSurroundingKeyFrames();

downsampleCurrentScan();

scan2MapOptimization();

saveKeyFramesAndFactor();

correctPoses();

publishTF();

publishKeyPosesAndFrames();

clearCloud();

}

}

}

};

将坐标转移到世界坐标系下,得到可用于建图的Lidar坐标,即修改了transformTobeMapped的值

根据当前和上一次全局姿态优化时的里程计 transformSum transformBefMapped,transformSum是odometry计算得到的到世界坐标系下的转移矩阵,transformBefMapped存放mapping之前的Odometry计算的世界坐标系的转换矩阵(注:低频量,不一定与transformSum一样)

以及上一次全局姿态优化的结果 transformAftMapped,transformAftMapped存放mapping之后的经过mapping微调之后的转换矩阵

计算当前姿态优化的初始值,赋值给 transformTobeMapped, transformTobeMapped以起始位置为原点的世界坐标系下的转换矩阵(猜测与调整的对象)

void transformAssociateToMap()

{

float x1 = cos(transformSum[1]) * (transformBefMapped[3] - transformSum[3])

- sin(transformSum[1]) * (transformBefMapped[5] - transformSum[5]);

float y1 = transformBefMapped[4] - transformSum[4];

float z1 = sin(transformSum[1]) * (transformBefMapped[3] - transformSum[3])

+ cos(transformSum[1]) * (transformBefMapped[5] - transformSum[5]);

float x2 = x1;

float y2 = cos(transformSum[0]) * y1 + sin(transformSum[0]) * z1;

float z2 = -sin(transformSum[0]) * y1 + cos(transformSum[0]) * z1;

transformIncre[3] = cos(transformSum[2]) * x2 + sin(transformSum[2]) * y2;

transformIncre[4] = -sin(transformSum[2]) * x2 + cos(transformSum[2]) * y2;

transformIncre[5] = z2;

float sbcx = sin(transformSum[0]);

float cbcx = cos(transformSum[0]);

float sbcy = sin(transformSum[1]);

float cbcy = cos(transformSum[1]);

float sbcz = sin(transformSum[2]);

float cbcz = cos(transformSum[2]);

float sblx = sin(transformBefMapped[0]);

float cblx = cos(transformBefMapped[0]);

float sbly = sin(transformBefMapped[1]);

float cbly = cos(transformBefMapped[1]);

float sblz = sin(transformBefMapped[2]);

float cblz = cos(transformBefMapped[2]);

float salx = sin(transformAftMapped[0]);

float calx = cos(transformAftMapped[0]);

float saly = sin(transformAftMapped[1]);

float caly = cos(transformAftMapped[1]);

float salz = sin(transformAftMapped[2]);

float calz = cos(transformAftMapped[2]);

float srx = -sbcx*(salx*sblx + calx*cblx*salz*sblz + calx*calz*cblx*cblz)

- cbcx*sbcy*(calx*calz*(cbly*sblz - cblz*sblx*sbly)

- calx*salz*(cbly*cblz + sblx*sbly*sblz) + cblx*salx*sbly)

- cbcx*cbcy*(calx*salz*(cblz*sbly - cbly*sblx*sblz)

- calx*calz*(sbly*sblz + cbly*cblz*sblx) + cblx*cbly*salx);

transformTobeMapped[0] = -asin(srx);

float srycrx = sbcx*(cblx*cblz*(caly*salz - calz*salx*saly)

- cblx*sblz*(caly*calz + salx*saly*salz) + calx*saly*sblx)

- cbcx*cbcy*((caly*calz + salx*saly*salz)*(cblz*sbly - cbly*sblx*sblz)

+ (caly*salz - calz*salx*saly)*(sbly*sblz + cbly*cblz*sblx) - calx*cblx*cbly*saly)

+ cbcx*sbcy*((caly*calz + salx*saly*salz)*(cbly*cblz + sblx*sbly*sblz)

+ (caly*salz - calz*salx*saly)*(cbly*sblz - cblz*sblx*sbly) + calx*cblx*saly*sbly);

float crycrx = sbcx*(cblx*sblz*(calz*saly - caly*salx*salz)

- cblx*cblz*(saly*salz + caly*calz*salx) + calx*caly*sblx)

+ cbcx*cbcy*((saly*salz + caly*calz*salx)*(sbly*sblz + cbly*cblz*sblx)

+ (calz*saly - caly*salx*salz)*(cblz*sbly - cbly*sblx*sblz) + calx*caly*cblx*cbly)

- cbcx*sbcy*((saly*salz + caly*calz*salx)*(cbly*sblz - cblz*sblx*sbly)

+ (calz*saly - caly*salx*salz)*(cbly*cblz + sblx*sbly*sblz) - calx*caly*cblx*sbly);

transformTobeMapped[1] = atan2(srycrx / cos(transformTobeMapped[0]),

crycrx / cos(transformTobeMapped[0]));

float srzcrx = (cbcz*sbcy - cbcy*sbcx*sbcz)*(calx*salz*(cblz*sbly - cbly*sblx*sblz)

- calx*calz*(sbly*sblz + cbly*cblz*sblx) + cblx*cbly*salx)

- (cbcy*cbcz + sbcx*sbcy*sbcz)*(calx*calz*(cbly*sblz - cblz*sblx*sbly)

- calx*salz*(cbly*cblz + sblx*sbly*sblz) + cblx*salx*sbly)

+ cbcx*sbcz*(salx*sblx + calx*cblx*salz*sblz + calx*calz*cblx*cblz);

float crzcrx = (cbcy*sbcz - cbcz*sbcx*sbcy)*(calx*calz*(cbly*sblz - cblz*sblx*sbly)

- calx*salz*(cbly*cblz + sblx*sbly*sblz) + cblx*salx*sbly)

- (sbcy*sbcz + cbcy*cbcz*sbcx)*(calx*salz*(cblz*sbly - cbly*sblx*sblz)

- calx*calz*(sbly*sblz + cbly*cblz*sblx) + cblx*cbly*salx)

+ cbcx*cbcz*(salx*sblx + calx*cblx*salz*sblz + calx*calz*cblx*cblz);

transformTobeMapped[2] = atan2(srzcrx / cos(transformTobeMapped[0]),

crzcrx / cos(transformTobeMapped[0]));

x1 = cos(transformTobeMapped[2]) * transformIncre[3] - sin(transformTobeMapped[2]) * transformIncre[4];

y1 = sin(transformTobeMapped[2]) * transformIncre[3] + cos(transformTobeMapped[2]) * transformIncre[4];

z1 = transformIncre[5];

x2 = x1;

y2 = cos(transformTobeMapped[0]) * y1 - sin(transformTobeMapped[0]) * z1;

z2 = sin(transformTobeMapped[0]) * y1 + cos(transformTobeMapped[0]) * z1;

transformTobeMapped[3] = transformAftMapped[3]

- (cos(transformTobeMapped[1]) * x2 + sin(transformTobeMapped[1]) * z2);

transformTobeMapped[4] = transformAftMapped[4] - y2;

transformTobeMapped[5] = transformAftMapped[5]

- (-sin(transformTobeMapped[1]) * x2 + cos(transformTobeMapped[1]) * z2);

}

extractSurroundingKeyFrames()提取周围关键帧。

// 函数功能:根据当前位置,提取局部关键帧集合,以及对应的三个关键帧点云集合

// 步骤:

// 1. 在 关键帧位置集合cloudKeyPoses3D 中

// 检索 当前位置currentRobotPosPoint 附近的姿态点

// 获得局部位置点,赋值给 局部位置点集合surroundingKeyPoses

// 2. 根据 局部位置点集合surroundingKeyPoses 更新

// 局部关键帧集合 surroundingExistingKeyPosesID

// 局部关键帧 角点点云集合surroundingCornerCloudKeyFrames

// 局部关键帧 平面点点云集合surroundingSurfCloudKeyFrames

// 局部关键帧 离群点点云集合surroundingOutlierCloudKeyFrames

// 增加新进入局部的关键帧、并删除离开局部的关键帧。

// 3. 为局部点云地图赋值

// laserCloudCornerFromMap 所有局部关键帧的角点集合

// laserCloudSurfFromMap 所有局部关键帧平面点和离群点的集合

extractSurroundingKeyFrames(){

if(cloudKeyPoses3D为空) return;

void extractSurroundingKeyFrames(){

if (cloudKeyPoses3D->points.empty() == true)

return;

if(进行闭环过程){

1.若recentCornerCloudKeyFrames中的点云数量不够, 清空后重新塞入新的点云直至数量够。

// loopClosureEnableFlag 这个变量另外只在loopthread这部分中有用到

if (loopClosureEnableFlag == true){

// recentCornerCloudKeyFrames保存的点云数量太少,则清空后重新塞入新的点云直至数量够

if (recentCornerCloudKeyFrames.size() < surroundingKeyframeSearchNum){

recentCornerCloudKeyFrames. clear();

recentSurfCloudKeyFrames. clear();

recentOutlierCloudKeyFrames.clear();

int numPoses = cloudKeyPoses3D->points.size();

for (int i = numPoses-1; i >= 0; --i){

// cloudKeyPoses3D的intensity中存的是索引值?

// 保存的索引值从1开始编号;

int thisKeyInd = (int)cloudKeyPoses3D->points[i].intensity;

PointTypePose thisTransformation = cloudKeyPoses6D->points[thisKeyInd];

updateTransformPointCloudSinCos(&thisTransformation);

// 依据上面得到的变换thisTransformation,对cornerCloudKeyFrames,surfCloudKeyFrames,surfCloudKeyFrames

// 进行坐标变换

recentCornerCloudKeyFrames. push_front(transformPointCloud(cornerCloudKeyFrames[thisKeyInd]));

recentSurfCloudKeyFrames. push_front(transformPointCloud(surfCloudKeyFrames[thisKeyInd]));

recentOutlierCloudKeyFrames.push_front(transformPointCloud(outlierCloudKeyFrames[thisKeyInd]));

if (recentCornerCloudKeyFrames.size() >= surroundingKeyframeSearchNum)

break;

}

2.否则pop队列recentCornerCloudKeyFrames最前端的一个,再往队列尾部push一个;

}else{

// recentCornerCloudKeyFrames中点云保存的数量较多

// pop队列最前端的一个,再push后面一个

if (latestFrameID != cloudKeyPoses3D->points.size() - 1){

recentCornerCloudKeyFrames. pop_front();

recentSurfCloudKeyFrames. pop_front();

recentOutlierCloudKeyFrames.pop_front();

// 为什么要把recentCornerCloudKeyFrames最前面第一个元素弹出?

// (1个而不是好几个或者是全部)?

latestFrameID = cloudKeyPoses3D->points.size() - 1;

PointTypePose thisTransformation = cloudKeyPoses6D->points[latestFrameID];

updateTransformPointCloudSinCos(&thisTransformation);

recentCornerCloudKeyFrames. push_back(transformPointCloud(cornerCloudKeyFrames[latestFrameID]));

recentSurfCloudKeyFrames. push_back(transformPointCloud(surfCloudKeyFrames[latestFrameID]));

recentOutlierCloudKeyFrames.push_back(transformPointCloud(outlierCloudKeyFrames[latestFrameID]));

}

}

*laserCloudCornerFromMap += *recentCornerCloudKeyFrames[i];

*laserCloudSurfFromMap += *recentSurfCloudKeyFrames[i];

*laserCloudSurfFromMap += *recentOutlierCloudKeyFrames[i];

}else{

/这里不进行闭环过程/

surroundingKeyPoses->clear();

surroundingKeyPosesDS->clear();

kdtreeSurroundingKeyPoses->setInputCloud(cloudKeyPoses3D);

1.进行半径surroundingKeyframeSearchRadius内的邻域搜索

// 进行半径surroundingKeyframeSearchRadius内的邻域搜索,

// currentRobotPosPoint:需要查询的点,

// pointSearchInd:搜索完的邻域点对应的索引

// pointSearchSqDis:搜索完的每个领域点点与传讯点之间的欧式距离

// 0:返回的邻域个数,为0表示返回全部的邻域点

kdtreeSurroundingKeyPoses->radiusSearch(currentRobotPosPoint, (double)surroundingKeyframeSearchRadius, pointSearchInd, pointSearchSqDis, 0);

for (int i = 0; i < pointSearchInd.size(); ++i)

surroundingKeyPoses->points.push_back(cloudKeyPoses3D->points[pointSearchInd[i]]);

downSizeFilterSurroundingKeyPoses.setInputCloud(surroundingKeyPoses);

downSizeFilterSurroundingKeyPoses.filter(*surroundingKeyPosesDS);

2.双重循环,不断对比surroundingExistingKeyPosesID和surroundingKeyPosesDS中点的index,

如果能够找到一样,说明存在关键帧。然后在队列中去掉找不到的元素,留下可以找到的。

int numSurroundingPosesDS = surroundingKeyPosesDS->points.size();

for (int i = 0; i < surroundingExistingKeyPosesID.size(); ++i){

bool existingFlag = false;

for (int j = 0; j < numSurroundingPosesDS; ++j){

// 双重循环,不断对比surroundingExistingKeyPosesID[i]和surroundingKeyPosesDS的点的index

// 如果能够找到一样的,说明存在相同的关键点(因为surroundingKeyPosesDS从cloudKeyPoses3D中筛选而来)

if (surroundingExistingKeyPosesID[i] == (int)surroundingKeyPosesDS->points[j].intensity){

existingFlag = true;

break;

}

}

if (existingFlag == false){

// 如果surroundingExistingKeyPosesID[i]对比了一轮的已经存在的关键位姿的索引后(intensity保存的就是size())

// 没有找到相同的关键点,那么把这个点从当前队列中删除

// 否则的话,existingFlag为true,该关键点就将它留在队列中

surroundingExistingKeyPosesID. erase(surroundingExistingKeyPosesID. begin() + i);

surroundingCornerCloudKeyFrames. erase(surroundingCornerCloudKeyFrames. begin() + i);

surroundingSurfCloudKeyFrames. erase(surroundingSurfCloudKeyFrames. begin() + i);

surroundingOutlierCloudKeyFrames.erase(surroundingOutlierCloudKeyFrames.begin() + i);

--i;

}

}

3.再来一次双重循环,这部分比较有技巧,

这里把surroundingExistingKeyPosesID内没有对应的点放进一个队列里,

这个队列专门存放周围存在的关键帧,

但是和surroundingExistingKeyPosesID的点不在同一行。

关于行,需要参考intensity数据的存放格式,

整数部分和小数部分代表不同意义。

// 上一个两重for循环主要用于删除数据,此处的两重for循环用来添加数据

for (int i = 0; i < numSurroundingPosesDS; ++i) {

bool existingFlag = false;

for (auto iter = surroundingExistingKeyPosesID.begin(); iter != surroundingExistingKeyPosesID.end(); ++iter){

// *iter就是不同的cloudKeyPoses3D->points.size(),

// 把surroundingExistingKeyPosesID内没有对应的点放进一个队列里

// 这个队列专门存放周围存在的关键帧,但是和surroundingExistingKeyPosesID的点没有对应的,也就是新的点

if ((*iter) == (int)surroundingKeyPosesDS->points[i].intensity){

existingFlag = true;

break;

}

}

if (existingFlag == true){

continue;

}else{

int thisKeyInd = (int)surroundingKeyPosesDS->points[i].intensity;

PointTypePose thisTransformation = cloudKeyPoses6D->points[thisKeyInd];

updateTransformPointCloudSinCos(&thisTransformation);

surroundingExistingKeyPosesID. push_back(thisKeyInd);

surroundingCornerCloudKeyFrames. push_back(transformPointCloud(cornerCloudKeyFrames[thisKeyInd]));

surroundingSurfCloudKeyFrames. push_back(transformPointCloud(surfCloudKeyFrames[thisKeyInd]));

surroundingOutlierCloudKeyFrames.push_back(transformPointCloud(outlierCloudKeyFrames[thisKeyInd]));

}

}

for (int i = 0; i < surroundingExistingKeyPosesID.size(); ++i) {

*laserCloudCornerFromMap += *surroundingCornerCloudKeyFrames[i];

*laserCloudSurfFromMap += *surroundingSurfCloudKeyFrames[i];

*laserCloudSurfFromMap += *surroundingOutlierCloudKeyFrames[i];

}

}

不管是否进行闭环过程,最后的输出都要进行一次下采样减小数据量的过程。

最后的输出结果是laserCloudCornerFromMapDS和laserCloudSurfFromMapDS。

// 进行两次下采样

// 最后的输出结果是laserCloudCornerFromMapDS和laserCloudSurfFromMapDS

downSizeFilterCorner.setInputCloud(laserCloudCornerFromMap);

downSizeFilterCorner.filter(*laserCloudCornerFromMapDS);

laserCloudCornerFromMapDSNum = laserCloudCornerFromMapDS->points.size();

downSizeFilterSurf.setInputCloud(laserCloudSurfFromMap);

downSizeFilterSurf.filter(*laserCloudSurfFromMapDS);

laserCloudSurfFromMapDSNum = laserCloudSurfFromMapDS->points.size();

}

downsampleCurrentScan函数是将当前各类点云降采样,其中laserCloudSurfTotalLast是平面部分与异常部分的叠加。

void downsampleCurrentScan(){

laserCloudCornerLastDS->clear();

downSizeFilterCorner.setInputCloud(laserCloudCornerLast);

downSizeFilterCorner.filter(*laserCloudCornerLastDS);

laserCloudCornerLastDSNum = laserCloudCornerLastDS->points.size();

laserCloudSurfLastDS->clear();

downSizeFilterSurf.setInputCloud(laserCloudSurfLast);

downSizeFilterSurf.filter(*laserCloudSurfLastDS);

laserCloudSurfLastDSNum = laserCloudSurfLastDS->points.size();

laserCloudOutlierLastDS->clear();

downSizeFilterOutlier.setInputCloud(laserCloudOutlierLast);

downSizeFilterOutlier.filter(*laserCloudOutlierLastDS);

laserCloudOutlierLastDSNum = laserCloudOutlierLastDS->points.size();

laserCloudSurfTotalLast->clear();

laserCloudSurfTotalLastDS->clear();

*laserCloudSurfTotalLast += *laserCloudSurfLastDS;

*laserCloudSurfTotalLast += *laserCloudOutlierLastDS;

downSizeFilterSurf.setInputCloud(laserCloudSurfTotalLast);

downSizeFilterSurf.filter(*laserCloudSurfTotalLastDS);

laserCloudSurfTotalLastDSNum = laserCloudSurfTotalLastDS->points.size();

}

scan2MapOptimization函数是根据现有地图与最新点云数据进行配准从而更新机器人精确位姿与融合建图,它分为角点优化、平面点优化、配准与更新等部分。

void scan2MapOptimization(){

// laserCloudCornerFromMapDSNum是extractSurroundingKeyFrames()函数最后降采样得到的coner点云数

// laserCloudSurfFromMapDSNum是extractSurroundingKeyFrames()函数降采样得到的surface点云数

if (laserCloudCornerFromMapDSNum > 10 && laserCloudSurfFromMapDSNum > 100) {

// laserCloudCornerFromMapDS和laserCloudSurfFromMapDS的来源有2个:

// 当有闭环时,来源是:recentCornerCloudKeyFrames,没有闭环时,来源surroundingCornerCloudKeyFrames

kdtreeCornerFromMap->setInputCloud(laserCloudCornerFromMapDS);

kdtreeSurfFromMap->setInputCloud(laserCloudSurfFromMapDS);

for (int iterCount = 0; iterCount < 10; iterCount++) {

// 用for循环控制迭代次数,最多迭代10次

laserCloudOri->clear();

coeffSel->clear();

cornerOptimization(iterCount);

surfOptimization(iterCount);

if (LMOptimization(iterCount) == true)

break;

}

// 迭代结束更新相关的转移矩阵

transformUpdate();

}

cornerOptimization

函数分成了几个部分:

进行坐标变换,转换到全局坐标中去;

void cornerOptimization(int iterCount){

updatePointAssociateToMapSinCos();

for (int i = 0; i < laserCloudCornerLastDSNum; i++) {

pointOri = laserCloudCornerLastDS->points[i];

// 进行坐标变换,转换到全局坐标中去(世界坐标系)

// pointSel:表示选中的点,point select

// 输入是pointOri,输出是pointSel

pointAssociateToMap(&pointOri, &pointSel);

进行5邻域搜索,得到结果后对搜索得到的5点求平均值;

// 进行5邻域搜索,

// pointSel为需要搜索的点,

// pointSearchInd搜索完的邻域对应的索引

// pointSearchSqDis 邻域点与查询点之间的距离

kdtreeCornerFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);

// 只有当最远的那个邻域点的距离pointSearchSqDis[4]小于1m时才进行下面的计算

// 以下部分的计算是在计算点集的协方差矩阵,Zhang Ji的论文中有提到这部分

if (pointSearchSqDis[4] < 1.0) {

// 先求5个样本的平均值

float cx = 0, cy = 0, cz = 0;

for (int j = 0; j < 5; j++) {

cx += laserCloudCornerFromMapDS->points[pointSearchInd[j]].x;

cy += laserCloudCornerFromMapDS->points[pointSearchInd[j]].y;

cz += laserCloudCornerFromMapDS->points[pointSearchInd[j]].z;

}

cx /= 5; cy /= 5; cz /= 5;

求矩阵matA1=[ax,ay,az]t*[ax,ay,az],例如ax代表的是x-cx,表示均值与每个实际值的差值,求取5个之后再次取平均,得到matA1;

// 下面在求矩阵matA1=[ax,ay,az]^t*[ax,ay,az]

// 更准确地说应该是在求协方差matA1

float a11 = 0, a12 = 0, a13 = 0, a22 = 0, a23 = 0, a33 = 0;

for (int j = 0; j < 5; j++) {

// ax代表的是x-cx,表示均值与每个实际值的差值,求取5个之后再次取平均,得到matA1

float ax = laserCloudCornerFromMapDS->points[pointSearchInd[j]].x - cx;

float ay = laserCloudCornerFromMapDS->points[pointSearchInd[j]].y - cy;

float az = laserCloudCornerFromMapDS->points[pointSearchInd[j]].z - cz;

a11 += ax * ax; a12 += ax * ay; a13 += ax * az;

a22 += ay * ay; a23 += ay * az;

a33 += az * az;

}

a11 /= 5; a12 /= 5; a13 /= 5; a22 /= 5; a23 /= 5; a33 /= 5;

matA1.at<float>(0, 0) = a11; matA1.at<float>(0, 1) = a12; matA1.at<float>(0, 2) = a13;

matA1.at<float>(1, 0) = a12; matA1.at<float>(1, 1) = a22; matA1.at<float>(1, 2) = a23;

matA1.at<float>(2, 0) = a13; matA1.at<float>(2, 1) = a23; matA1.at<float>(2, 2) = a33;

求正交阵的特征值和特征向量,特征值:matD1,特征向量:保存在矩阵matV1中。

// 求正交阵的特征值和特征向量

// 特征值:matD1,特征向量:matV1中

cv::eigen(matA1, matD1, matV1);

因为求取的特征值是按照降序排列的,所以根据论文里面提到的:

1.如果这是一个边缘特征,则它的一个特征值远大于其余两个;

2.如果这是一个平面特征,那么其中一个特征值远小于其余两个特征值;

根据上面两个原则进行判断要不要进行优化。 如果没有满足条件1,就不进行优化过程,因为这不是一个边缘特征。

// 边缘:与较大特征值相对应的特征向量代表边缘线的方向(一大两小,大方向)

// 以下这一大块是在计算点到边缘的距离,最后通过系数s来判断是否距离很近

// 如果距离很近就认为这个点在边缘上,需要放到laserCloudOri中

if (matD1.at<float>(0, 0) > 3 * matD1.at<float>(0, 1)) {

float x0 = pointSel.x;

float y0 = pointSel.y;

float z0 = pointSel.z;

float x1 = cx + 0.1 * matV1.at<float>(0, 0);

float y1 = cy + 0.1 * matV1.at<float>(0, 1);

float z1 = cz + 0.1 * matV1.at<float>(0, 2);

float x2 = cx - 0.1 * matV1.at<float>(0, 0);

float y2 = cy - 0.1 * matV1.at<float>(0, 1);

float z2 = cz - 0.1 * matV1.at<float>(0, 2);

// 这边是在求[(x0-x1),(y0-y1),(z0-z1)]与[(x0-x2),(y0-y2),(z0-z2)]叉乘得到的向量的模长

// 这个模长是由0.2*V1[0]和点[x0,y0,z0]构成的平行四边形的面积

// 因为[(x0-x1),(y0-y1),(z0-z1)]x[(x0-x2),(y0-y2),(z0-z2)]=[XXX,YYY,ZZZ],

// [XXX,YYY,ZZZ]=[(y0-y1)(z0-z2)-(y0-y2)(z0-z1),-(x0-x1)(z0-z2)+(x0-x2)(z0-z1),(x0-x1)(y0-y2)-(x0-x2)(y0-y1)]

float a012 = sqrt(((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

* ((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

+ ((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))

* ((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))

+ ((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))

* ((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1)));

// l12表示的是0.2*(||V1[0]||)

// 也就是平行四边形一条底的长度

float l12 = sqrt((x1 - x2)*(x1 - x2) + (y1 - y2)*(y1 - y2) + (z1 - z2)*(z1 - z2));

// 求叉乘结果[la',lb',lc']=[(x1-x2),(y1-y2),(z1-z2)]x[XXX,YYY,ZZZ]

// [la,lb,lc]=[la',lb',lc']/a012/l12

// LLL=[la,lb,lc]是0.2*V1[0]这条高上的单位法向量。||LLL||=1;

float la = ((y1 - y2)*((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

+ (z1 - z2)*((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))) / a012 / l12;

float lb = -((x1 - x2)*((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1))

- (z1 - z2)*((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))) / a012 / l12;

float lc = -((x1 - x2)*((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))

+ (y1 - y2)*((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))) / a012 / l12;

// 计算点pointSel到直线的距离

// 这里需要特别说明的是ld2代表的是点pointSel到过点[cx,cy,cz]的方向向量直线的距离

float ld2 = a012 / l12;

// 如果在最理想的状态的话,ld2应该为0,表示点在直线上

// 最理想状态s=1;

float s = 1 - 0.9 * fabs(ld2);

// coeff代表系数的意思

// coff用于保存距离的方向向量

coeff.x = s * la;

coeff.y = s * lb;

coeff.z = s * lc;

// intensity本质上构成了一个核函数,ld2越接近于1,增长越慢

// intensity=(1-0.9*ld2)*ld2=ld2-0.9*ld2*ld2

coeff.intensity = s * ld2;

// 所以就应该认为这个点是边缘点

// s>0.1 也就是要求点到直线的距离ld2要小于1m

// s越大说明ld2越小(离边缘线越近),这样就说明点pointOri在直线上

if (s > 0.1) {

laserCloudOri->push_back(pointOri);

coeffSel->push_back(coeff);

}

}

}

}

}

void surfOptimization(int)函数进行面优化,内容和函数cornerOptimization(int)的内容基本相同。

步骤如下:

进行坐标变换,转换到全局坐标中去;

进行5邻域搜索,得到结果后判断搜索结果是否满足条件(pointSearchSqDis[4] < 1.0),不满足条件就不需要进行优化;

void surfOptimization(int iterCount){

updatePointAssociateToMapSinCos();

for (int i = 0; i < laserCloudSurfTotalLastDSNum; i++) {

pointOri = laserCloudSurfTotalLastDS->points[i];

pointAssociateToMap(&pointOri, &pointSel);

kdtreeSurfFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);

if (pointSearchSqDis[4] < 1.0) {

for (int j = 0; j < 5; j++) {

matA0.at<float>(j, 0) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].x;

matA0.at<float>(j, 1) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].y;

matA0.at<float>(j, 2) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].z;

}

将搜索结果全部保存到matA0中,形成一个5x3的矩阵;

解这个矩阵cv::solve(matA0, matB0, matX0, cv::DECOMP_QR);。

关于cv::solve函数,参考官网

matB0是一个5x1的矩阵,需要求解的matX0是3x1的矩阵;

这个公式其实是在求由matA0中的点构成的平面的法向量matX0。

求解得到的matX0=[pa,pb,pc,pd],对[pa,pb,pc,pd]进行单位化,

误差在允许的范围内的话把这个点放到点云laserCloudOri中去,把对应的向量coeff放到coeffSel中。

// matB0是一个5x1的矩阵

// matB0 = cv::Mat (5, 1, CV_32F, cv::Scalar::all(-1));

// matX0是3x1的矩阵

// 求解方程matA0*matX0=matB0

// 公式其实是在求由matA0中的点构成的平面的法向量matX0

cv::solve(matA0, matB0, matX0, cv::DECOMP_QR);

// [pa,pb,pc,pd]=[matX0,pd]

// 正常情况下(见后面planeValid判断条件),应该是

// pa * laserCloudSurfFromMapDS->points[pointSearchInd[j]].x +

// pb * laserCloudSurfFromMapDS->points[pointSearchInd[j]].y +

// pc * laserCloudSurfFromMapDS->points[pointSearchInd[j]].z = -1

// 所以pd设置为1

float pa = matX0.at<float>(0, 0);

float pb = matX0.at<float>(1, 0);

float pc = matX0.at<float>(2, 0);

float pd = 1;

// 对[pa,pb,pc,pd]进行单位化

float ps = sqrt(pa * pa + pb * pb + pc * pc);

pa /= ps; pb /= ps; pc /= ps; pd /= ps;

// 求解后再次检查平面是否是有效平面

bool planeValid = true;

for (int j = 0; j < 5; j++) {

if (fabs(pa * laserCloudSurfFromMapDS->points[pointSearchInd[j]].x +

pb * laserCloudSurfFromMapDS->points[pointSearchInd[j]].y +

pc * laserCloudSurfFromMapDS->points[pointSearchInd[j]].z + pd) > 0.2) {

planeValid = false;

break;

}

}

if (planeValid) {

float pd2 = pa * pointSel.x + pb * pointSel.y + pc * pointSel.z + pd;

// 后面部分相除求的是[pa,pb,pc,pd]与pointSel的夹角余弦值(两个sqrt,其实并不是余弦值)

// 这个夹角余弦值越小越好,越小证明所求的[pa,pb,pc,pd]与平面越垂直

float s = 1 - 0.9 * fabs(pd2) / sqrt(sqrt(pointSel.x * pointSel.x

+ pointSel.y * pointSel.y + pointSel.z * pointSel.z));

coeff.x = s * pa;

coeff.y = s * pb;

coeff.z = s * pc;

coeff.intensity = s * pd2;

// 判断是否是合格平面,是就加入laserCloudOri

if (s > 0.1) {

laserCloudOri->push_back(pointOri);

coeffSel->push_back(coeff);

}

}

}

}

}

LMOptimization:

这部分的代码是基于高斯牛顿法的优化,不是zhang ji论文中提到的基于L-M的优化方法

这部分的代码使用旋转矩阵对欧拉角求导,优化欧拉角,不是zhang ji论文中提到的使用angle-axis的优化

bool LMOptimization(int iterCount){

float srx = sin(transformTobeMapped[0]);

float crx = cos(transformTobeMapped[0]);

float sry = sin(transformTobeMapped[1]);

float cry = cos(transformTobeMapped[1]);

float srz = sin(transformTobeMapped[2]);

float crz = cos(transformTobeMapped[2]);

int laserCloudSelNum = laserCloudOri->points.size();

// laser cloud original 点云太少,就跳过这次循环

if (laserCloudSelNum < 50) {

return false;

}

cv::Mat matA(laserCloudSelNum, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matAt(6, laserCloudSelNum, CV_32F, cv::Scalar::all(0));

cv::Mat matAtA(6, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matB(laserCloudSelNum, 1, CV_32F, cv::Scalar::all(0));

cv::Mat matAtB(6, 1, CV_32F, cv::Scalar::all(0));

cv::Mat matX(6, 1, CV_32F, cv::Scalar::all(0));

for (int i = 0; i < laserCloudSelNum; i++) {

pointOri = laserCloudOri->points[i];

coeff = coeffSel->points[i];

// 求雅克比矩阵中的元素,距离d对roll角度的偏导量即d(d)/d(roll)

// 更详细的数学推导参看wykxwyc.github.io

float arx = (crx*sry*srz*pointOri.x + crx*crz*sry*pointOri.y - srx*sry*pointOri.z) * coeff.x

+ (-srx*srz*pointOri.x - crz*srx*pointOri.y - crx*pointOri.z) * coeff.y

+ (crx*cry*srz*pointOri.x + crx*cry*crz*pointOri.y - cry*srx*pointOri.z) * coeff.z;

// 同上,求解的是对pitch的偏导量

float ary = ((cry*srx*srz - crz*sry)*pointOri.x

+ (sry*srz + cry*crz*srx)*pointOri.y + crx*cry*pointOri.z) * coeff.x

+ ((-cry*crz - srx*sry*srz)*pointOri.x

+ (cry*srz - crz*srx*sry)*pointOri.y - crx*sry*pointOri.z) * coeff.z;

float arz = ((crz*srx*sry - cry*srz)*pointOri.x + (-cry*crz-srx*sry*srz)*pointOri.y)*coeff.x

+ (crx*crz*pointOri.x - crx*srz*pointOri.y) * coeff.y

+ ((sry*srz + cry*crz*srx)*pointOri.x + (crz*sry-cry*srx*srz)*pointOri.y)*coeff.z;

/*

在求点到直线的距离时,coeff表示的是如下内容

[la,lb,lc]表示的是点到直线的垂直连线方向,s是长度

coeff.x = s * la;

coeff.y = s * lb;

coeff.z = s * lc;

coeff.intensity = s * ld2;

在求点到平面的距离时,coeff表示的是

[pa,pb,pc]表示过外点的平面的法向量,s是线的长度

coeff.x = s * pa;

coeff.y = s * pb;

coeff.z = s * pc;

coeff.intensity = s * pd2;

*/

matA.at<float>(i, 0) = arx;

matA.at<float>(i, 1) = ary;

matA.at<float>(i, 2) = arz;

// 这部分是雅克比矩阵中距离对平移的偏导

matA.at<float>(i, 3) = coeff.x;

matA.at<float>(i, 4) = coeff.y;

matA.at<float>(i, 5) = coeff.z;

// 残差项

matB.at<float>(i, 0) = -coeff.intensity;

}

// 将矩阵由matA转置生成matAt

// 先进行计算,以便于后边调用 cv::solve求解

cv::transpose(matA, matAt);

matAtA = matAt * matA;

matAtB = matAt * matB;

// 利用高斯牛顿法进行求解,

// 高斯牛顿法的原型是J^(T)*J * delta(x) = -J*f(x)

// J是雅克比矩阵,这里是A,f(x)是优化目标,这里是-B(符号在给B赋值时候就放进去了)

// 通过QR分解的方式,求解matAtA*matX=matAtB,得到解matX

cv::solve(matAtA, matAtB, matX, cv::DECOMP_QR);

// iterCount==0 说明是第一次迭代,需要初始化

if (iterCount == 0) {

cv::Mat matE(1, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matV(6, 6, CV_32F, cv::Scalar::all(0));

cv::Mat matV2(6, 6, CV_32F, cv::Scalar::all(0));

// 对近似的Hessian矩阵求特征值和特征向量,

cv::eigen(matAtA, matE, matV);

matV.copyTo(matV2);

isDegenerate = false;

float eignThre[6] = {100, 100, 100, 100, 100, 100};

for (int i = 5; i >= 0; i--) {

if (matE.at<float>(0, i) < eignThre[i]) {

for (int j = 0; j < 6; j++) {

matV2.at<float>(i, j) = 0;

}

isDegenerate = true;

} else {

break;

}

}

matP = matV.inv() * matV2;

}

if (isDegenerate) {

cv::Mat matX2(6, 1, CV_32F, cv::Scalar::all(0));

matX.copyTo(matX2);

matX = matP * matX2;

}

transformTobeMapped[0] += matX.at<float>(0, 0);

transformTobeMapped[1] += matX.at<float>(1, 0);

transformTobeMapped[2] += matX.at<float>(2, 0);

transformTobeMapped[3] += matX.at<float>(3, 0);

transformTobeMapped[4] += matX.at<float>(4, 0);

transformTobeMapped[5] += matX.at<float>(5, 0);

float deltaR = sqrt(

pow(pcl::rad2deg(matX.at<float>(0, 0)), 2) +

pow(pcl::rad2deg(matX.at<float>(1, 0)), 2) +

pow(pcl::rad2deg(matX.at<float>(2, 0)), 2));

float deltaT = sqrt(

pow(matX.at<float>(3, 0) * 100, 2) +

pow(matX.at<float>(4, 0) * 100, 2) +

pow(matX.at<float>(5, 0) * 100, 2));

// 旋转或者平移量足够小就停止这次迭代过程

if (deltaR < 0.05 && deltaT < 0.05) {

return true;

}

return false;

}

void saveKeyFramesAndFactor()保存关键帧和进行优化的功能。

程序开始:

1.把上次优化得到的transformAftMapped(3:5)坐标点作为当前的位置,

void saveKeyFramesAndFactor(){

currentRobotPosPoint.x = transformAftMapped[3];

currentRobotPosPoint.y = transformAftMapped[4];

currentRobotPosPoint.z = transformAftMapped[5];

计算和再之前的位置的欧拉距离,距离太小并且cloudKeyPoses3D不为空(初始化时为空),则结束;

bool saveThisKeyFrame = true;

if (sqrt((previousRobotPosPoint.x-currentRobotPosPoint.x)*(previousRobotPosPoint.x-currentRobotPosPoint.x)

+(previousRobotPosPoint.y-currentRobotPosPoint.y)*(previousRobotPosPoint.y-currentRobotPosPoint.y)

+(previousRobotPosPoint.z-currentRobotPosPoint.z)*(previousRobotPosPoint.z-currentRobotPosPoint.z)) < 0.3){

saveThisKeyFrame = false;

}

if (saveThisKeyFrame == false && !cloudKeyPoses3D->points.empty())

return;

2.如果是刚刚初始化,cloudKeyPoses3D为空,

那么NonlinearFactorGraph增加一个PriorFactor因子,

initialEstimate的数据类型是Values(其实就是一个map),这里在0对应的值下面保存一个Pose3,

本次的transformTobeMapped参数保存到transformLast中去。

if (cloudKeyPoses3D->points.empty()){

// static Rot3 RzRyRx (double x, double y, double z),Rotations around Z, Y, then X axes

// RzRyRx依次按照z(transformTobeMapped[2]),y(transformTobeMapped[0]),x(transformTobeMapped[1])坐标轴旋转

// Point3 (double x, double y, double z) Construct from x(transformTobeMapped[5]), y(transformTobeMapped[3]), and z(transformTobeMapped[4]) coordinates.

// Pose3 (const Rot3 &R, const Point3 &t) Construct from R,t. 从旋转和平移构造姿态

// NonlinearFactorGraph增加一个PriorFactor因子

gtSAMgraph.add(PriorFactor<Pose3>(0, Pose3(Rot3::RzRyRx(transformTobeMapped[2], transformTobeMapped[0], transformTobeMapped[1]),

Point3(transformTobeMapped[5], transformTobeMapped[3], transformTobeMapped[4])), priorNoise));

// initialEstimate的数据类型是Values,其实就是一个map,这里在0对应的值下面保存了一个Pose3

initialEstimate.insert(0, Pose3(Rot3::RzRyRx(transformTobeMapped[2], transformTobeMapped[0], transformTobeMapped[1]),

Point3(transformTobeMapped[5], transformTobeMapped[3], transformTobeMapped[4])));

for (int i = 0; i < 6; ++i)

transformLast[i] = transformTobeMapped[i];

}

3.如果本次不是刚刚初始化,从transformLast得到上一次位姿,

从transformAftMapped得到本次位姿,

gtSAMgraph.add(BetweenFactor),到它的约束中去,

initialEstimate.insert(序号,位姿)。

else{

gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(transformLast[2], transformLast[0], transformLast[1]),

Point3(transformLast[5], transformLast[3], transformLast[4]));

gtsam::Pose3 poseTo = Pose3(Rot3::RzRyRx(transformAftMapped[2], transformAftMapped[0], transformAftMapped[1]),

Point3(transformAftMapped[5], transformAftMapped[3], transformAftMapped[4]));

// 构造函数原型:BetweenFactor (Key key1, Key key2, const VALUE &measured, const SharedNoiseModel &model)

gtSAMgraph.add(BetweenFactor<Pose3>(cloudKeyPoses3D->points.size()-1, cloudKeyPoses3D->points.size(), poseFrom.between(poseTo), odometryNoise));

initialEstimate.insert(cloudKeyPoses3D->points.size(), Pose3(Rot3::RzRyRx(transformAftMapped[2], transformAftMapped[0], transformAftMapped[1]),

Point3(transformAftMapped[5], transformAftMapped[3], transformAftMapped[4])));

}

4.不管是否是初始化,都进行优化,isam->update(gtSAMgraph, initialEstimate);

得到优化的结果:latestEstimate = isamCurrentEstimate.at(isamCurrentEstimate.size()-1),

将结果保存,cloudKeyPoses3D->push_back(thisPose3D);

cloudKeyPoses6D->push_back(thisPose6D);

// gtsam::ISAM2::update函数原型:

// ISAM2Result gtsam::ISAM2::update ( const NonlinearFactorGraph & newFactors = NonlinearFactorGraph(),

// const Values & newTheta = Values(),

// const std::vector< size_t > & removeFactorIndices = std::vector<size_t>(),

// const boost::optional< FastMap< Key, int > > & constrainedKeys = boost::none,

// const boost::optional< FastList< Key > > & noRelinKeys = boost::none,

// const boost::optional< FastList< Key > > & extraReelimKeys = boost::none,

// bool force_relinearize = false )

// gtSAMgraph是新加到系统中的因子

// initialEstimate是加到系统中的新变量的初始点

isam->update(gtSAMgraph, initialEstimate);

// update 函数为什么需要调用两次?

isam->update();

// 删除内容?

gtSAMgraph.resize(0);

initialEstimate.clear();

PointType thisPose3D;

PointTypePose thisPose6D;

Pose3 latestEstimate;

// Compute an estimate from the incomplete linear delta computed during the last update.

isamCurrentEstimate = isam->calculateEstimate();

latestEstimate = isamCurrentEstimate.at<Pose3>(isamCurrentEstimate.size()-1);

thisPose3D.x = latestEstimate.translation().y();

thisPose3D.y = latestEstimate.translation().z();

thisPose3D.z = latestEstimate.translation().x();

thisPose3D.intensity = cloudKeyPoses3D->points.size();

cloudKeyPoses3D->push_back(thisPose3D);

thisPose6D.x = thisPose3D.x;

thisPose6D.y = thisPose3D.y;

thisPose6D.z = thisPose3D.z;

thisPose6D.intensity = thisPose3D.intensity;

thisPose6D.roll = latestEstimate.rotation().pitch();

thisPose6D.pitch = latestEstimate.rotation().yaw();

thisPose6D.yaw = latestEstimate.rotation().roll();

thisPose6D.time = timeLaserOdometry;

cloudKeyPoses6D->push_back(thisPose6D);

5.对transformAftMapped进行更新;

if (cloudKeyPoses3D->points.size() > 1){

transformAftMapped[0] = latestEstimate.rotation().pitch();

transformAftMapped[1] = latestEstimate.rotation().yaw();

transformAftMapped[2] = latestEstimate.rotation().roll();

transformAftMapped[3] = latestEstimate.translation().y();

transformAftMapped[4] = latestEstimate.translation().z();

transformAftMapped[5] = latestEstimate.translation().x();

for (int i = 0; i < 6; ++i){

transformLast[i] = transformAftMapped[i];

transformTobeMapped[i] = transformAftMapped[i];

}

}

6.最后保存最终的结果:

pcl::PointCloud<PointType>::Ptr thisCornerKeyFrame(new pcl::PointCloud<PointType>());

pcl::PointCloud<PointType>::Ptr thisSurfKeyFrame(new pcl::PointCloud<PointType>());

pcl::PointCloud<PointType>::Ptr thisOutlierKeyFrame(new pcl::PointCloud<PointType>());

// PCL::copyPointCloud(const pcl::PCLPointCloud2 &cloud_in,pcl::PCLPointCloud2 &cloud_out )

pcl::copyPointCloud(*laserCloudCornerLastDS, *thisCornerKeyFrame);

pcl::copyPointCloud(*laserCloudSurfLastDS, *thisSurfKeyFrame);

pcl::copyPointCloud(*laserCloudOutlierLastDS, *thisOutlierKeyFrame);

cornerCloudKeyFrames.push_back(thisCornerKeyFrame);

surfCloudKeyFrames.push_back(thisSurfKeyFrame);

outlierCloudKeyFrames.push_back(thisOutlierKeyFrame);

}

void correctPoses()的调用只在回环结束时进行(aLoopIsClosed == true)

校正位姿的过程主要是将isamCurrentEstimate的x,y,z平移坐标更新到cloudKeyPoses3D中,另外还需要更新cloudKeyPoses6D的姿态角。

关于isamCurrentEstimate:

isamCurrentEstimate是gtsam库中的Values类。

void correctPoses(){

if (aLoopIsClosed == true){

recentCornerCloudKeyFrames. clear();

recentSurfCloudKeyFrames. clear();

recentOutlierCloudKeyFrames.clear();

int numPoses = isamCurrentEstimate.size();

for (int i = 0; i < numPoses; ++i)

{

cloudKeyPoses3D->points[i].x = isamCurrentEstimate.at<Pose3>(i).translation().y();

cloudKeyPoses3D->points[i].y = isamCurrentEstimate.at<Pose3>(i).translation().z();

cloudKeyPoses3D->points[i].z = isamCurrentEstimate.at<Pose3>(i).translation().x();

cloudKeyPoses6D->points[i].x = cloudKeyPoses3D->points[i].x;

cloudKeyPoses6D->points[i].y = cloudKeyPoses3D->points[i].y;

cloudKeyPoses6D->points[i].z = cloudKeyPoses3D->points[i].z;

//

cloudKeyPoses6D->points[i].roll = isamCurrentEstimate.at<Pose3>(i).rotation().pitch();

cloudKeyPoses6D->points[i].pitch = isamCurrentEstimate.at<Pose3>(i).rotation().yaw();

cloudKeyPoses6D->points[i].yaw = isamCurrentEstimate.at<Pose3>(i).rotation().roll();

}

aLoopIsClosed = false;

}

}

void publishTF()是进行发布坐标变换信息的函数,发布的消息类型是nav_msgs::Odometry.

void publishTF(){

geometry_msgs::Quaternion geoQuat = tf::createQuaternionMsgFromRollPitchYaw

(transformAftMapped[2], -transformAftMapped[0], -transformAftMapped[1]);

odomAftMapped.header.stamp = ros::Time().fromSec(timeLaserOdometry);

odomAftMapped.pose.pose.orientation.x = -geoQuat.y;

odomAftMapped.pose.pose.orientation.y = -geoQuat.z;

odomAftMapped.pose.pose.orientation.z = geoQuat.x;

odomAftMapped.pose.pose.orientation.w = geoQuat.w;

odomAftMapped.pose.pose.position.x = transformAftMapped[3];

odomAftMapped.pose.pose.position.y = transformAftMapped[4];

odomAftMapped.pose.pose.position.z = transformAftMapped[5];

odomAftMapped.twist.twist.angular.x = transformBefMapped[0];

odomAftMapped.twist.twist.angular.y = transformBefMapped[1];

odomAftMapped.twist.twist.angular.z = transformBefMapped[2];

odomAftMapped.twist.twist.linear.x = transformBefMapped[3];

odomAftMapped.twist.twist.linear.y = transformBefMapped[4];

odomAftMapped.twist.twist.linear.z = transformBefMapped[5];

pubOdomAftMapped.publish(odomAftMapped);

aftMappedTrans.stamp_ = ros::Time().fromSec(timeLaserOdometry);

aftMappedTrans.setRotation(tf::Quaternion(-geoQuat.y, -geoQuat.z, geoQuat.x, geoQuat.w));

aftMappedTrans.setOrigin(tf::Vector3(transformAftMapped[3], transformAftMapped[4], transformAftMapped[5]));

tfBroadcaster.sendTransform(aftMappedTrans);

}

如果有节点订阅"/key_pose_origin"这个话题,则用pubKeyPoses发布cloudKeyPoses3D;

如果有节点订阅"/recent_cloud"这个话题,则用pubRecentKeyFrames发布laserCloudSurfFromMapDS;

void publishKeyPosesAndFrames(){

if (pubKeyPoses.getNumSubscribers() != 0){

sensor_msgs::PointCloud2 cloudMsgTemp;

pcl::toROSMsg(*cloudKeyPoses3D, cloudMsgTemp);

cloudMsgTemp.header.stamp = ros::Time().fromSec(timeLaserOdometry);

cloudMsgTemp.header.frame_id = "/camera_init";

pubKeyPoses.publish(cloudMsgTemp);

}

if (pubRecentKeyFrames.getNumSubscribers() != 0){

sensor_msgs::PointCloud2 cloudMsgTemp;

pcl::toROSMsg(*laserCloudSurfFromMapDS, cloudMsgTemp);

cloudMsgTemp.header.stamp = ros::Time().fromSec(timeLaserOdometry);

cloudMsgTemp.header.frame_id = "/camera_init";

pubRecentKeyFrames.publish(cloudMsgTemp);

}

}

完成一次建图后,清除相关数据以便下一次的建图。

void clearCloud(){

laserCloudCornerFromMap->clear();

laserCloudSurfFromMap->clear();

laserCloudCornerFromMapDS->clear();

laserCloudSurfFromMapDS->clear();

}

大部分内容参考了LeGo-LOAM源码阅读笔记(四)—mapOptmization.cpp

参考:

LeGO-LOAM分析之建图(三)

LeGo-LOAM源码阅读笔记(四)—mapOptmization.cpp

1823

1823

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?