This post introduces the function for matching image features.

Functions

Function name: matchFeatures

Purpose: matches two sets of feature descriptors

Syntax:

indexPairs = matchFeatures(features1,features2);

[indexPairs,matchmetric] = matchFeatures(features1,features2);

[indexPairs,matchmetric] = matchFeatures(features1,features2,Name,Value);

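Here, features1 and features2 can be binary feature descriptor objects (binaryFeatures objects) or matrices. indexPairs is a P-by-2 matrix of the indices of the P matched features: column 1 indexes features1 and column 2 indexes features2. matchmetric holds the metric value (distance) between each pair of matched descriptors. Name is a parameter name enclosed in single quotes, and Value is the value for that Name.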
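To make the P-by-2 layout of indexPairs concrete, here is a small base-MATLAB sketch of how each row selects one matched pair. The coordinate matrices pts1 and pts2 and the indexPairs values are hypothetical stand-ins, not real output of matchFeatures.

```matlab
% Hypothetical data: each row is the [x y] location of one feature.
pts1 = [10 20; 30 40; 50 60];
pts2 = [11 21; 49 61; 31 39; 70 80];

% Hypothetical indexPairs in the P-by-2 layout:
% column 1 indexes features1 (pts1), column 2 indexes features2 (pts2).
indexPairs = [1 1; 2 3; 3 2];

% Each row of indexPairs picks out one matched pair of locations.
matched1 = pts1(indexPairs(:,1), :);
matched2 = pts2(indexPairs(:,2), :);
disp([matched1 matched2]);
```

Row k of matched1 corresponds to row k of matched2, which is how the example further down pairs up valid_points1 and valid_points2.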

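| Name | Value |
|---|---|
| 'Method' | Default 'NearestNeighborRatio'. The method used for matching; see the "Method and its meaning" table for details. |
| 'MatchThreshold' | Default 10.0 for binary feature vectors and 1.0 for non-binary feature vectors; range (0,100]. A percentage value that selects the strongest matches; larger values return more matches. |
| 'Metric' | Applies only to non-binary feature vectors. The feature-matching metric: 'SSD' (sum of squared differences, the default), 'SAD' (sum of absolute differences), or 'normxcorr' (normalized cross-correlation). Note: binary feature vectors are always compared with the Hamming distance. |
| 'Prenormalized' | Applies only to non-binary feature vectors. Default false. If true, the function assumes the features were already normalized before matching (if they were not, the matching results may be wrong); if false, the function normalizes the features itself before matching. |
| 'MaxRatio' | Default 0.6; range (0,1]. Used together with 'Method' set to 'NearestNeighborRatio' to reject ambiguous matches; larger values return more matches. |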
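The distance measures named in the table can be illustrated on small hand-made vectors. This is a minimal base-MATLAB sketch, not the toolbox code: the vectors a, b, u and v are hypothetical, the binary descriptors are written as logical vectors for readability (the binaryFeatures class stores packed bits instead), and treating the normalization behind 'Prenormalized' as unit-length L2 normalization is an assumption.

```matlab
% Two hypothetical non-binary descriptors.
a = [1 2 3 4];
b = [2 2 1 4];

ssd = sum((a - b).^2);   % 'SSD': sum of squared differences
sad = sum(abs(a - b));   % 'SAD': sum of absolute differences

% Assumed normalization: scale each descriptor to unit L2 length.
% With 'Prenormalized' = true the caller is expected to have done this already.
an = a / norm(a);
bn = b / norm(b);

% Two hypothetical binary descriptors, written as logical bit vectors.
u = logical([1 0 1 1 0 0 1 0]);
v = logical([1 1 1 0 0 0 1 1]);
hamming = sum(xor(u, v)); % Hamming distance: number of differing bits

fprintf('SSD = %g, SAD = %g, Hamming = %d\n', ssd, sad, hamming);
```

Smaller values mean more similar descriptors for all three measures, which is why the matching step looks for the smallest distances.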

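| Method | Meaning |
|---|---|
| 'Threshold' | Uses only the match threshold; a feature may be matched to more than one feature. |
| 'NearestNeighborSymmetric' | Applies the match threshold and returns only one-to-one matches. |
| 'NearestNeighborRatio' | Applies the match threshold and also eliminates ambiguous matches. A match is ambiguous when the best match is not clearly better than the second-best match. The decision uses the ratio test: 1. Compute the distance D from a feature in features1 to its nearest neighbor in features2. 2. Compute the distance d from the same feature in features1 to its second-nearest neighbor in features2. 3. If the ratio D/d is greater than MaxRatio, the match is rejected as ambiguous. Note: this method produces more robust matches, but if the images contain repeating patterns it may also discard valid matches as ambiguous. |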
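The three Method values can be compared on a toy example. The sketch below is plain base MATLAB and is not the toolbox implementation: the descriptors f1 and f2 and the cutoff value are hypothetical, MatchThreshold is left out entirely, the symmetric variant is reduced to a mutual-nearest-neighbor check, and the ratio test is applied directly to SSD values (whether the toolbox uses squared or unsquared distances here is an assumption left open).

```matlab
% Hypothetical descriptors, one feature per row.
f1 = [0 0; 5 5; 20 19];
f2 = [0 1; 5 6; 6 5; 20 20];
maxRatio = 0.6;
n1 = size(f1, 1);
n2 = size(f2, 1);

% Pairwise SSD distances: D(i,j) compares f1(i,:) with f2(j,:).
D = zeros(n1, n2);
for i = 1:n1
  for j = 1:n2
    D(i, j) = sum((f1(i, :) - f2(j, :)).^2);
  end
end

% 'Threshold'-style: every pair under a hypothetical cutoff (may give 1-to-many).
cutoff = 2;
[r, c] = find(D <= cutoff);
thresholdPairs = [r, c];

% 'NearestNeighborRatio'-style: nearest (D) vs second-nearest (d) distance.
[sortedD, order] = sort(D, 2);
ratio = sortedD(:, 1) ./ sortedD(:, 2);
keep = ratio <= maxRatio;              % reject when D/d > maxRatio
ratioPairs = [find(keep), order(keep, 1)];

% 'NearestNeighborSymmetric'-style: keep i -> j only if j's best match is i.
[~, best12] = min(D, [], 2);           % best f2 index for each f1 feature
[~, best21] = min(D, [], 1);           % best f1 index for each f2 feature
back = best21(best12);
mutual = back(:) == (1:n1)';
symPairs = [find(mutual), best12(mutual)];

disp(thresholdPairs); disp(ratioPairs); disp(symPairs);
```

With these numbers, feature 2 of f1 is equally close to rows 2 and 3 of f2. The threshold version keeps both candidates, the mutual check keeps only the first one that min returns, and the ratio test rejects the feature because D/d = 1.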

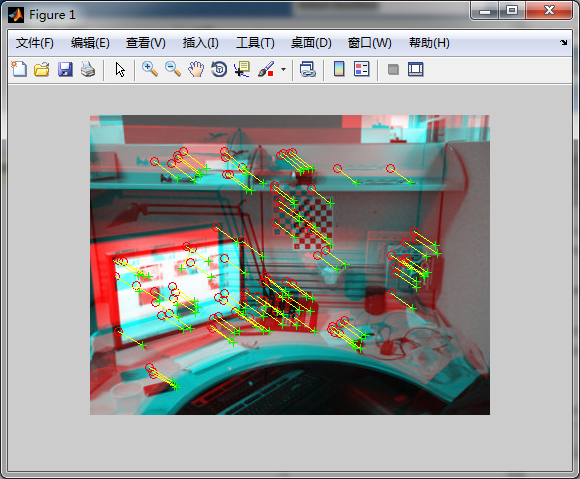

Example:

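```matlab
close all;
clear all;
clc;

% Read the left and right images and convert them to grayscale
I1 = rgb2gray(imread('viprectification_deskLeft.png'));
I2 = rgb2gray(imread('viprectification_deskRight.png'));

% Detect Harris corners in both images
points1 = detectHarrisFeatures(I1);
points2 = detectHarrisFeatures(I2);

% Extract descriptors; valid_points1/valid_points2 are the points
% for which a descriptor could actually be extracted
[features1,valid_points1] = extractFeatures(I1,points1);
[features2,valid_points2] = extractFeatures(I2,points2);

% Match the two descriptor sets with the default options
indexPairs = matchFeatures(features1,features2);

% Column 1 of indexPairs indexes features1, column 2 indexes features2
matchedPoints1 = valid_points1(indexPairs(:,1));
matchedPoints2 = valid_points2(indexPairs(:,2));

% Show the matched point pairs
figure;
showMatchedFeatures(I1,I2,matchedPoints1,matchedPoints2);
```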
