ChatGPT 的训练,共三阶段,

- 预训练阶段:在大量文本数据上进行无监督学习

- 监督微调阶段(SFT):在人工标注的对话数据上进行有监督学习

- RLHF阶段:使用PPO基于人类反馈优化模型

SFT

1.预训练模型=毛坯房

开发商(预训练)用通用材料(海量无标签数据)盖好毛坯房,具备基本结构(通用语言理解能力)。

2.监督微调 =个性化装修

房主(用户)根据需求(特定任务)用少量定制家具(标注数据)改造厨房(调整模型参数),使其适合烹饪(如生成医疗报告)。

LoRA, Adapter 就属于 SFT

Pytorch 代码

数据集处理

import json import random with open("ruozhiba_dataset/ruozhiba_qa.json", "r", encoding="utf-8") as f: data = json.load(f) # 只保留需要的字段 processed = [] for item in data: if item.get("instruction") and item.get("output"): processed.append({ "instruction": item["instruction"], "input": "", # 通常为空 "output": item["output"], }) # 打乱数据 random.shuffle(processed) # 划分训练与验证集 train_data = processed[:1343] # 90% 用于训练 val_data = processed[1343:] # 10% 用于验证 # 保存 with open("ruozhiba_train_2449.json", "w", encoding="utf-8") as f: json.dump(train_data, f, ensure_ascii=False, indent=2) with open("ruozhiba_val_153.json", "w", encoding="utf-8") as f: json.dump(val_data, f, ensure_ascii=False, indent=2)SFT

这是全量微调,没有用 LoRA

import json import numpy as np import torch from torch.utils.data import Dataset from torch.utils.data import DataLoader from torch.utils.tensorboard import SummaryWriter from transformers import AutoModelForCausalLM, AutoTokenizer from tqdm import tqdm import time, sys import os os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com' os.environ['HF_HOME'] = '/nfs/volume-74-1/haoxixuan/hf_cache' os.environ['TRANSFORMERS_CACHE'] = '/nfs/volume-74-1/haoxixuan/hf_cache' os.environ['HF_DATASETS_CACHE'] = '/nfs/volume-74-1/haoxixuan/hf_cache' os.environ['HF_HUB_CACHE'] = '/nfs/volume-74-1/haoxixuan/hf_cache' class NerDataset(Dataset): def __init__(self, data_path, tokenizer, max_source_length, max_target_length) -> None: super().__init__() self.tokenizer = tokenizer self.max_source_length = max_source_length self.max_target_length = max_target_length self.max_seq_length = self.max_source_length + self.max_target_length self.data = [] if data_path: with open(data_path, 'r', encoding='utf-8') as file: data = json.load(file) for obj in data: instruction = obj["instruction"] output = obj["output"] self.data.append({ "instruction": instruction, "output": output }) print("data load , size:", len(self.data)) def preprocess(self, instruction, output): messages = [ {"role": "system", "content": "你是一个有帮助的助手"}, {"role": "user", "content": instruction} ] prompt = self.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True) instruction = self.tokenizer(prompt, add_special_tokens=False, max_length=self.max_source_length, padding="max_length", truncation=True) response = self.tokenizer(output, add_special_tokens=False, max_length=self.max_target_length, padding="max_length", truncation=True) input_ids = instruction["input_ids"] + response["input_ids"] + [self.tokenizer.pad_token_id] attention_mask = (instruction["attention_mask"] + response["attention_mask"] + [1]) labels = [-100] * len(instruction["input_ids"]) + response["input_ids"] + [self.tokenizer.pad_token_id] return input_ids, attention_mask, labels def __getitem__(self, index): item_data = self.data[index] input_ids, attention_mask, labels = self.preprocess(**item_data) return { "input_ids": torch.LongTensor(np.array(input_ids)), "attention_mask": torch.LongTensor(np.array(attention_mask)), "labels": torch.LongTensor(np.array(labels)) } def __len__(self): return len(self.data) def train_model(model, train_loader, val_loader, optimizer, device, num_epochs, model_output_dir, writer): batch_step = 0 for epoch in range(num_epochs): time1 = time.time() model.train() for index, data in enumerate(tqdm(train_loader, file=sys.stdout, desc="Train Epoch: " + str(epoch))): input_ids = data['input_ids'].to(device, dtype=torch.long) attention_mask = data['attention_mask'].to(device, dtype=torch.long) labels = data['labels'].to(device, dtype=torch.long) optimizer.zero_grad() outputs = model(input_ids=input_ids, attention_mask=attention_mask, labels=labels) loss = outputs.loss loss.backward() optimizer.step() writer.add_scalar('Loss/train', loss, batch_step) batch_step += 1 # 100轮打印一次 loss if index % 100 == 0 or index == len(train_loader) - 1: time2 = time.time() tqdm.write( f"{index}, epoch: {epoch} -loss: {str(loss)} ; each step's time spent: {(str(float(time2 - time1) / float(index + 0.0001)))}") # 验证 model.eval() val_loss = validate_model(model, device, val_loader) writer.add_scalar('Loss/val', val_loss, epoch) print(f"val loss: {val_loss} , epoch: {epoch}") print("Save Model To ", model_output_dir) model.save_pretrained(model_output_dir) def validate_model(model, device, val_loader): running_loss = 0.0 with torch.no_grad(): for _, data in enumerate(tqdm(val_loader, file=sys.stdout, desc="Validation Data")): input_ids = data['input_ids'].to(device, dtype=torch.long) attention_mask = data['attention_mask'].to(device, dtype=torch.long) labels = data['labels'].to(device, dtype=torch.long) outputs = model(input_ids=input_ids, attention_mask=attention_mask, labels=labels, ) loss = outputs.loss running_loss += loss.item() return running_loss / len(val_loader) def main(): model_name = "Qwen/Qwen2.5-0.5B-Instruct" # model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct", cache_dir=cache_dir, device_map="auto") train_json_path = "./ruozhiba_train_2449.json" val_json_path = "./ruozhiba_val_153.json" max_source_length = 62 # text 样本 i_95 是 32 ,模板自身大约 30 个,总共 62 个 max_target_length = 151 # label 样本 o_95 是 151 epochs = 3 batch_size = 12 lr = 1e-4 model_output_dir = "sft_ruozhi" logs_dir = "logs" device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") cache_dir = '/nfs/volume-74-1/haoxixuan/hf_cache' # tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True) tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct", cache_dir=cache_dir) # model = AutoModelForCausalLM.from_pretrained(model_name, trust_remote_code=True) model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct", cache_dir=cache_dir) model = model.to(device) # 设置pad_token_id if tokenizer.pad_token is None: tokenizer.pad_token = tokenizer.eos_token print("Start Load Train Data...") training_set = NerDataset(train_json_path, tokenizer, max_source_length, max_target_length) training_loader = DataLoader(training_set, batch_size=batch_size, shuffle=True, num_workers=4,) print("Start Load Validation Data...") val_set = NerDataset(val_json_path, tokenizer, max_source_length, max_target_length) # 检查验证数据是否为空 if len(val_set) == 0: print("Warning: Validation dataset is empty! Using training data for validation.") val_loader = DataLoader(training_set, batch_size=batch_size, shuffle=False, num_workers=4) else: val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False, num_workers=4) writer = SummaryWriter(logs_dir) optimizer = torch.optim.AdamW(params=model.parameters(), lr=lr) model = model.to(device) print("Start Training...") train_model(model=model, train_loader=training_loader, val_loader=val_loader, optimizer=optimizer, device=device, num_epochs=epochs, model_output_dir=model_output_dir, writer=writer ) writer.close() if __name__ == '__main__': main()inference

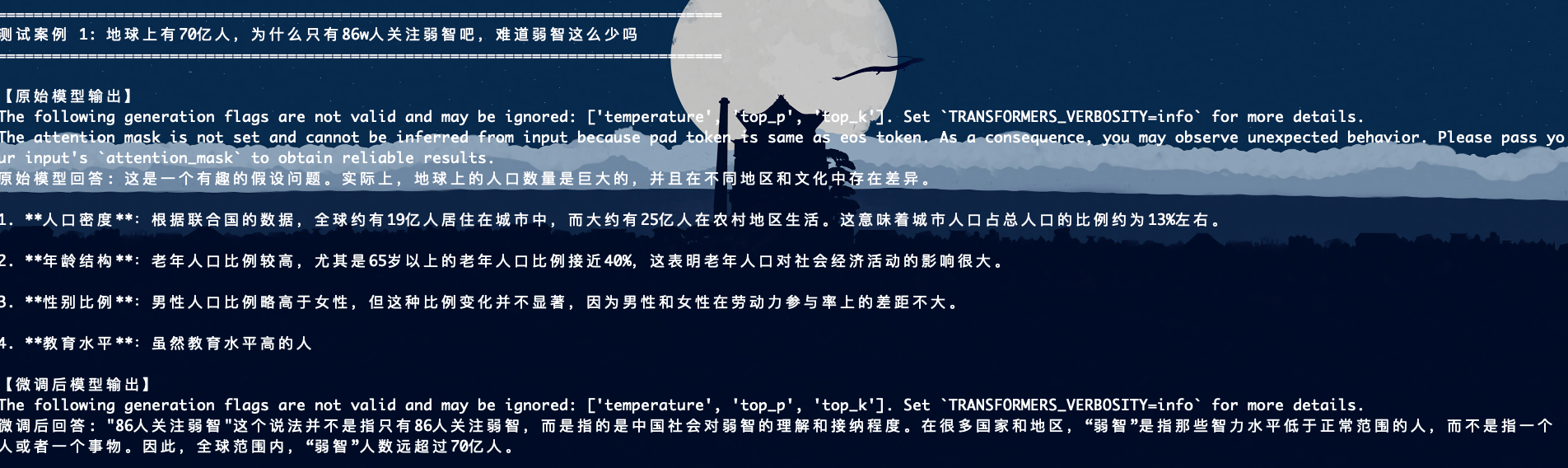

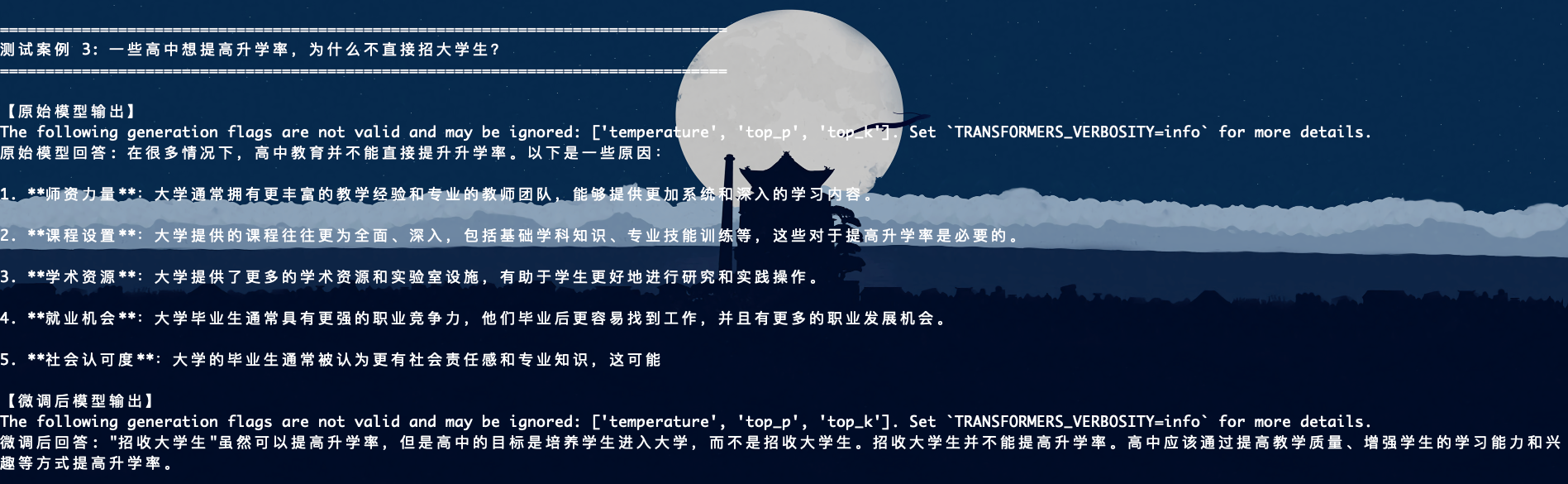

from transformers import AutoModelForCausalLM, AutoTokenizer import torch def main(): # model_path = "D:\Qwen2.5-0.5B-Instruct" train_model_path = "sft_ruozhi" cache_dir = '/nfs/volume-74-1/haoxixuan/hf_cache' device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu") print("Loading tokenizer...") tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct", cache_dir=cache_dir) print("Loading original model (before fine-tuning)...") original_model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen2.5-0.5B-Instruct", cache_dir=cache_dir) original_model.to(device) original_model.eval() print("Loading fine-tuned model...") finetuned_model = AutoModelForCausalLM.from_pretrained(train_model_path, trust_remote_code=True) finetuned_model.to(device) finetuned_model.eval() print("Models loaded successfully") test_case = [ "地球上有70亿人,为什么只有86w人关注弱智吧,难道弱智这么少吗", "游泳比赛时把水喝光后跑步犯规吗", "一些高中想提高升学率,为什么不直接招大学生?" ] for i, case in enumerate(test_case): print(f"\n{'='*80}") print(f"测试案例 {i+1}: {case}") print(f"{'='*80}") messages = [ {"role": "system", "content": "你是一个有帮助的助手"}, {"role": "user", "content": case}] text = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True) model_inputs = tokenizer([text], return_tensors="pt").to(device) # 原始模型输出 print("\n【原始模型输出】") with torch.no_grad(): generated_ids_original = original_model.generate( model_inputs.input_ids, max_new_tokens=151, top_k=1, do_sample=False ) generated_ids_original = [output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids_original)] response_original = tokenizer.batch_decode(generated_ids_original, skip_special_tokens=True)[0] print(f"原始模型回答: {response_original}") # 微调后模型输出 print("\n【微调后模型输出】") with torch.no_grad(): generated_ids_finetuned = finetuned_model.generate( model_inputs.input_ids, max_new_tokens=151, top_k=1, do_sample=False ) generated_ids_finetuned = [output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids_finetuned)] response_finetuned = tokenizer.batch_decode(generated_ids_finetuned, skip_special_tokens=True)[0] print(f"微调后回答: {response_finetuned}") print(f"\n{'-'*80}") if __name__ == '__main__': main()

570

570

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?