(四)PointPillars论文的MMDetection3D代码解读——网络结构篇

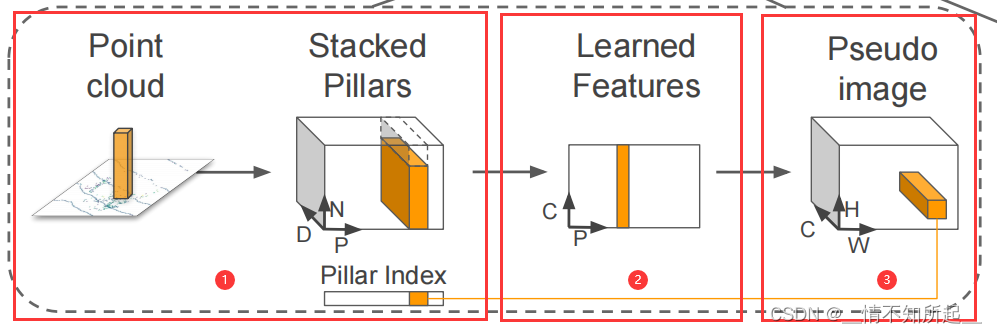

PointPillars 是一个来自工业界的模型,整体的思想是基于图片的处理框架,直接将点云从俯视图的视角划分为一个个的立方柱体(Pillars),从而构成了伪图片数据,然后再使用2D检测框架进行特征提取和预测得到检测框,从而使得该模型在速度和精度都达到了一个很好的平衡。 PointPillars 的网络结构如图所示:

本文将会以 MMDetection3D 的代码为基础,详细解读 PointPillars 的每一行代码实现以及原因。这是本人的第一篇代码讲解,解读中难免会出现不足之处,欢迎各位的批评指正,如果有好的意见大家都可以在评论区留言。感谢大家!

承接上文 PointPillars论文的MMDetection3D代码解读——数据处理篇,接下来将进入到我们 PointPillars 的网络结构部分。

第三章 PointPillars的点云数据处理

PointPillars 的点云数据处理过程是将 3D 的点云信息从俯视图的形式进行获取,假设在点云中有

N

∗

4

N * 4

N∗4 个点的信息,所有的这些点都位于 KITTI Lidar 坐标系

x

y

z

xyz

xyz 中(单位是米,其中

x

x

x向前,

y

y

y向左,

z

z

z向上),并且所有的这些点都会被分配到均等大小的x-y平面的立方柱体中,这些立方柱就被称为 pillar。KITTI 的点云数据是一个4维度的数据 (

x

x

x,

y

y

y,

z

z

z,

r

r

r),其中

x

y

z

xyz

xyz 表示该点在点云中的坐标,

r

r

r 表示该点的反射强度,并且在将所有点放入每个 pillar 中的时候不需要像 voxel 那样考虑高度,可以将一个 pillar 理解为就是一个z轴上所有 voxel 组合在一起的。

在进行 PointPillars 的数据增强时,需要对 pillar 中的数据进行增强操作,也就是将每个 pillar 中的点再增加 6 个维度的数据,包含 x c x_c xc, y c y_c yc, z c z_c zc, x p x_p xp, y p y_p yp 和 z p z_p zp,其中下标 c c c 表示每个点到该点所对应的 pillar 中所有点平均值的偏移量,下标 p p p 代表了每个点到所对应的 pillar 几何中心点的 x x x, y y y 和 z z z的偏移量,所有经过数据增强操作后每个点的维度是 10 维:包含了( x x x, y y y, z z z, r r r, x c x_c xc, y c y_c yc, z c z_c zc, x p x_p xp, y p y_p yp, z p z_p zp)。

经过上述操作之后,我们可以把原始的点云结构 ( N ∗ 4 ) (N * 4) (N∗4)变换成了 ( D , P , N ) (D,P,N) (D,P,N),其中 D D D 代表了每个点云的特征维度,也就是每个点云的10个特征, P P P 代表了所有非空的立方柱体, N N N 代表了每个pillar中最多会有多少个点。

PointPillars 的点云数据处理流程如下:

- 经过上图 红色标记的①后,我们得到了一个 ( D , P , N ) (D,P,N) (D,P,N)的张量;

- 接下来经过上图 红色标记的②,通过使用了一个简化版的 PointNet 网络对点云的数据进行特征提取(将这些点通过MLP先升维,然后跟着BatchNormalization层和Relu激活层),得到一个 ( C , P , N ) (C,P,N) (C,P,N)形状的张量,然后再使用 MaxPooling 操作提取每个 pillar 中最能代表该 pillar 的点,那么输出会从 ( C , P , N ) (C,P,N) (C,P,N)变成 ( C , P ) (C,P) (C,P),其中 C C C 代表了每个点云的特征维度, P P P 代表了所有非空的立方柱体;

- 在经过上述操作编码后的点,需要经过上图 红色标记的③,再重新放回到原来对应pillar的 x x x, y y y 位置上,最终生成我们的伪图象数据 ( C , H , W ) (C,H,W) (C,H,W)。

下面看这一部分的 MMDetection3D 代码实现:

3.1 从 Det3DDataPreprocessor 的 forward() 函数开始解读:

点云数据预处理 Det3DDataPreprocessor 实现代码在 mmdetection3d/mmdet3d/models/data_preprocessors/data_preprocessor.py

# Copyright (c) OpenMMLab. All rights reserved.

import math

from numbers import Number

from typing import Dict, List, Optional, Sequence, Union

import numpy as np

import torch

from mmdet.models import DetDataPreprocessor

from mmengine.model import stack_batch

from mmengine.utils import is_list_of

from torch.nn import functional as F

from mmdet3d.registry import MODELS

from mmdet3d.structures.det3d_data_sample import SampleList

from mmdet3d.utils import OptConfigType

from .utils import multiview_img_stack_batch

from .voxelize import VoxelizationByGridShape, dynamic_scatter_3d

@MODELS.register_module()

class Det3DDataPreprocessor(DetDataPreprocessor):

"""Points / Image pre-processor for point clouds / vision-only / multi-

modality 3D detection tasks.

It provides the data pre-processing as follows

- Collate and move image and point cloud data to the target device.

- 1) For image data:

- Pad images in inputs to the maximum size of current batch with defined

``pad_value``. The padding size can be divisible by a defined

``pad_size_divisor``.

- Stack images in inputs to batch_imgs.

- Convert images in inputs from bgr to rgb if the shape of input is

(3, H, W).

- Normalize images in inputs with defined std and mean.

- Do batch augmentations during training.

- 2) For point cloud data:

- If no voxelization, directly return list of point cloud data.

- If voxelization is applied, voxelize point cloud according to

``voxel_type`` and obtain ``voxels``.

Args:

voxel (bool): Whether to apply voxelization to point cloud.

Defaults to False.

voxel_type (str): Voxelization type. Two voxelization types are

provided: 'hard' and 'dynamic', respectively for hard

voxelization and dynamic voxelization. Defaults to 'hard'.

voxel_layer (dict or :obj:`ConfigDict`, optional): Voxelization layer

config. Defaults to None.

mean (Sequence[Number], optional): The pixel mean of R, G, B channels.

Defaults to None.

std (Sequence[Number], optional): The pixel standard deviation of

R, G, B channels. Defaults to None.

pad_size_divisor (int): The size of padded image should be

divisible by ``pad_size_divisor``. Defaults to 1.

pad_value (Number): The padded pixel value. Defaults to 0.

pad_mask (bool): Whether to pad instance masks. Defaults to False.

mask_pad_value (int): The padded pixel value for instance masks.

Defaults to 0.

pad_seg (bool): Whether to pad semantic segmentation maps.

Defaults to False.

seg_pad_value (int): The padded pixel value for semantic

segmentation maps. Defaults to 255.

bgr_to_rgb (bool): Whether to convert image from BGR to RGB.

Defaults to False.

rgb_to_bgr (bool): Whether to convert image from RGB to BGR.

Defaults to False.

boxtype2tensor (bool): Whether to keep the ``BaseBoxes`` type of

bboxes data or not. Defaults to True.

batch_augments (List[dict], optional): Batch-level augmentations.

Defaults to None.

"""

def __init__(self,

voxel: bool = False,

voxel_type: str = 'hard',

voxel_layer: OptConfigType = None,

mean: Sequence[Number] = None,

std: Sequence[Number] = None,

pad_size_divisor: int = 1,

pad_value: Union[float, int] = 0,

pad_mask: bool = False,

mask_pad_value: int = 0,

pad_seg: bool = False,

seg_pad_value: int = 255,

bgr_to_rgb: bool = False,

rgb_to_bgr: bool = False,

boxtype2tensor: bool = True,

batch_augments: Optional[List[dict]] = None) -> None:

super(Det3DDataPreprocessor, self).__init__(

mean=mean,

std=std,

pad_size_divisor=pad_size_divisor,

pad_value=pad_value,

pad_mask=pad_mask,

mask_pad_value=mask_pad_value,

pad_seg=pad_seg,

seg_pad_value=seg_pad_value,

bgr_to_rgb=bgr_to_rgb,

rgb_to_bgr=rgb_to_bgr,

batch_augments=batch_augments)

self.voxel = voxel

self.voxel_type = voxel_type

if voxel:

self.voxel_layer = VoxelizationByGridShape(**voxel_layer)

def forward(self,

data: Union[dict, List[dict]],

training: bool = False) -> Union[dict, List[dict]]:

"""Perform normalization, padding and bgr2rgb conversion based on

``BaseDataPreprocessor``.

Args:

data (dict or List[dict]): Data from dataloader.

The dict contains the whole batch data, when it is

a list[dict], the list indicate test time augmentation.

training (bool): Whether to enable training time augmentation.

Defaults to False.

Returns:

dict or List[dict]: Data in the same format as the model input.

"""

if isinstance(data, list):

num_augs = len(data)

aug_batch_data = []

for aug_id in range(num_augs):

single_aug_batch_data = self.simple_process(

data[aug_id], training)

aug_batch_data.append(single_aug_batch_data)

return aug_batch_data

else:

return self.simple_process(data, training)

def simple_process(self, data: dict, training: bool = False) -> dict:

"""Perform normalization, padding and bgr2rgb conversion for img data

based on ``BaseDataPreprocessor``, and voxelize point cloud if `voxel`

is set to be True.

Args:

data (dict): Data sampled from dataloader.

training (bool): Whether to enable training time augmentation.

Defaults to False.

Returns:

dict: Data in the same format as the model input.

"""

if 'img' in data['inputs']:

batch_pad_shape = self._get_pad_shape(data)

data = self.collate_data(data)

inputs, data_samples = data['inputs'], data['data_samples']

batch_inputs = dict()

if 'points' in inputs:

batch_inputs['points'] = inputs['points']

if self.voxel:

voxel_dict = self.voxelize(inputs['points'], data_samples)

batch_inputs['voxels'] = voxel_dict

if 'imgs' in inputs:

imgs = inputs['imgs']

if data_samples is not None:

# NOTE the batched image size information may be useful, e.g.

# in DETR, this is needed for the construction of masks, which

# is then used for the transformer_head.

batch_input_shape = tuple(imgs[0].size()[-2:])

for data_sample, pad_shape in zip(data_samples,

batch_pad_shape):

data_sample.set_metainfo({

'batch_input_shape': batch_input_shape,

'pad_shape': pad_shape

})

if hasattr(self, 'boxtype2tensor') and self.boxtype2tensor:

from mmdet.models.utils.misc import \

samplelist_boxtype2tensor

samplelist_boxtype2tensor(data_samples)

elif hasattr(self, 'boxlist2tensor') and self.boxlist2tensor:

from mmdet.models.utils.misc import \

samplelist_boxlist2tensor

samplelist_boxlist2tensor(data_samples)

if self.pad_mask:

self.pad_gt_masks(data_samples)

if self.pad_seg:

self.pad_gt_sem_seg(data_samples)

if training and self.batch_augments is not None:

for batch_aug in self.batch_augments:

imgs, data_samples = batch_aug(imgs, data_samples)

batch_inputs['imgs'] = imgs

return {'inputs': batch_inputs, 'data_samples': data_samples}

def preprocess_img(self, _batch_img: torch.Tensor) -> torch.Tensor:

# channel transform

if self._channel_conversion:

_batch_img = _batch_img[[2, 1, 0], ...]

# Convert to float after channel conversion to ensure

# efficiency

_batch_img = _batch_img.float()

# Normalization.

if self._enable_normalize:

if self.mean.shape[0] == 3:

assert _batch_img.dim() == 3 and _batch_img.shape[0] == 3, (

'If the mean has 3 values, the input tensor '

'should in shape of (3, H, W), but got the '

f'tensor with shape {_batch_img.shape}')

_batch_img = (_batch_img - self.mean) / self.std

return _batch_img

def collate_data(self, data: dict) -> dict:

"""Copying data to the target device and Performs normalization,

padding and bgr2rgb conversion and stack based on

``BaseDataPreprocessor``.

Collates the data sampled from dataloader into a list of dict and

list of labels, and then copies tensor to the target device.

Args:

data (dict): Data sampled from dataloader.

Returns:

dict: Data in the same format as the model input.

"""

data = self.cast_data(data) # type: ignore

if 'img' in data['inputs']:

_batch_imgs = data['inputs']['img']

# Process data with `pseudo_collate`.

if is_list_of(_batch_imgs, torch.Tensor):

batch_imgs = []

img_dim = _batch_imgs[0].dim()

for _batch_img in _batch_imgs:

if img_dim == 3: # standard img

_batch_img = self.preprocess_img(_batch_img)

elif img_dim == 4:

_batch_img = [

self.preprocess_img(_img) for _img in _batch_img

]

_batch_img = torch.stack(_batch_img, dim=0)

batch_imgs.append(_batch_img)

# Pad and stack Tensor.

if img_dim == 3:

batch_imgs = stack_batch(batch_imgs, self.pad_size_divisor,

self.pad_value)

elif img_dim == 4:

batch_imgs = multiview_img_stack_batch(

batch_imgs, self.pad_size_divisor, self.pad_value)

# Process data with `default_collate`.

elif isinstance(_batch_imgs, torch.Tensor):

assert _batch_imgs.dim() == 4, (

'The input of `ImgDataPreprocessor` should be a NCHW '

'tensor or a list of tensor, but got a tensor with '

f'shape: {_batch_imgs.shape}')

if self._channel_conversion:

_batch_imgs = _batch_imgs[:, [2, 1, 0], ...]

# Convert to float after channel conversion to ensure

# efficiency

_batch_imgs = _batch_imgs.float()

if self._enable_normalize:

_batch_imgs = (_batch_imgs - self.mean) / self.std

h, w = _batch_imgs.shape[2:]

target_h = math.ceil(

h / self.pad_size_divisor) * self.pad_size_divisor

target_w = math.ceil(

w / self.pad_size_divisor) * self.pad_size_divisor

pad_h = target_h - h

pad_w = target_w - w

batch_imgs = F.pad(_batch_imgs, (0, pad_w, 0, pad_h),

'constant', self.pad_value)

else:

raise TypeError(

'Output of `cast_data` should be a list of dict '

'or a tuple with inputs and data_samples, but got'

f'{type(data)}: {data}')

data['inputs']['imgs'] = batch_imgs

data.setdefault('data_samples', None)

return data

def _get_pad_shape(self, data: dict) -> List[tuple]:

"""Get the pad_shape of each image based on data and

pad_size_divisor."""

# rewrite `_get_pad_shape` for obtaining image inputs.

_batch_inputs = data['inputs']['img']

# Process data with `pseudo_collate`.

if is_list_of(_batch_inputs, torch.Tensor):

batch_pad_shape = []

for ori_input in _batch_inputs:

if ori_input.dim() == 4:

# mean multiview input, select one of the

# image to calculate the pad shape

ori_input = ori_input[0]

pad_h = int(

np.ceil(ori_input.shape[1] /

self.pad_size_divisor)) * self.pad_size_divisor

pad_w = int(

np.ceil(ori_input.shape[2] /

self.pad_size_divisor)) * self.pad_size_divisor

batch_pad_shape.append((pad_h, pad_w))

# Process data with `default_collate`.

elif isinstance(_batch_inputs, torch.Tensor):

assert _batch_inputs.dim() == 4, (

'The input of `ImgDataPreprocessor` should be a NCHW tensor '

'or a list of tensor, but got a tensor with shape: '

f'{_batch_inputs.shape}')

pad_h = int(

np.ceil(_batch_inputs.shape[1] /

self.pad_size_divisor)) * self.pad_size_divisor

pad_w = int(

np.ceil(_batch_inputs.shape[2] /

self.pad_size_divisor)) * self.pad_size_divisor

batch_pad_shape = [(pad_h, pad_w)] * _batch_inputs.shape[0]

else:

raise TypeError('Output of `cast_data` should be a list of dict '

'or a tuple with inputs and data_samples, but got '

f'{type(data)}: {data}')

return batch_pad_shape

@torch.no_grad()

def voxelize(self, points: List[torch.Tensor],

data_samples: SampleList) -> Dict[str, torch.Tensor]:

"""Apply voxelization to point cloud.

Args:

points (List[Tensor]): Point cloud in one data batch.

data_samples: (list[:obj:`Det3DDataSample`]): The annotation data

of every samples. Add voxel-wise annotation for segmentation.

Returns:

Dict[str, Tensor]: Voxelization information.

- voxels (Tensor): Features of voxels, shape is MxNxC for hard

voxelization, NxC for dynamic voxelization.

- coors (Tensor): Coordinates of voxels, shape is Nx(1+NDim),

where 1 represents the batch index.

- num_points (Tensor, optional): Number of points in each voxel.

- voxel_centers (Tensor, optional): Centers of voxels.

"""

voxel_dict = dict()

if self.voxel_type == 'hard':

voxels, coors, num_points, voxel_centers = [], [], [], []

for i, res in enumerate(points):

res_voxels, res_coors, res_num_points = self.voxel_layer(res)

res_voxel_centers = (

res_coors[:, [2, 1, 0]] + 0.5) * res_voxels.new_tensor(

self.voxel_layer.voxel_size) + res_voxels.new_tensor(

self.voxel_layer.point_cloud_range[0:3])

res_coors = F.pad(res_coors, (1, 0), mode='constant', value=i)

voxels.append(res_voxels)

coors.append(res_coors)

num_points.append(res_num_points)

voxel_centers.append(res_voxel_centers)

voxels = torch.cat(voxels, dim=0)

coors = torch.cat(coors, dim=0)

num_points = torch.cat(num_points, dim=0)

voxel_centers = torch.cat(voxel_centers, dim=0)

voxel_dict['num_points'] = num_points

voxel_dict['voxel_centers'] = voxel_centers

elif self.voxel_type == 'dynamic':

coors = []

# dynamic voxelization only provide a coors mapping

for i, res in enumerate(points):

res_coors = self.voxel_layer(res)

res_coors = F.pad(res_coors, (1, 0), mode='constant', value=i)

coors.append(res_coors)

voxels = torch.cat(points, dim=0)

coors = torch.cat(coors, dim=0)

elif self.voxel_type == 'cylindrical':

voxels, coors = [], []

for i, (res, data_sample) in enumerate(zip(points, data_samples)):

rho = torch.sqrt(res[:, 0]**2 + res[:, 1]**2)

phi = torch.atan2(res[:, 1], res[:, 0])

polar_res = torch.stack((rho, phi, res[:, 2]), dim=-1)

min_bound = polar_res.new_tensor(

self.voxel_layer.point_cloud_range[:3])

max_bound = polar_res.new_tensor(

self.voxel_layer.point_cloud_range[3:])

try: # only support PyTorch >= 1.9.0

polar_res_clamp = torch.clamp(polar_res, min_bound,

max_bound)

except TypeError:

polar_res_clamp = polar_res.clone()

for coor_idx in range(3):

polar_res_clamp[:, coor_idx][

polar_res[:, coor_idx] >

max_bound[coor_idx]] = max_bound[coor_idx]

polar_res_clamp[:, coor_idx][

polar_res[:, coor_idx] <

min_bound[coor_idx]] = min_bound[coor_idx]

res_coors = torch.floor(

(polar_res_clamp - min_bound) / polar_res_clamp.new_tensor(

self.voxel_layer.voxel_size)).int()

self.get_voxel_seg(res_coors, data_sample)

res_coors = F.pad(res_coors, (1, 0), mode='constant', value=i)

res_voxels = torch.cat((polar_res, res[:, :2], res[:, 3:]),

dim=-1)

voxels.append(res_voxels)

coors.append(res_coors)

voxels = torch.cat(voxels, dim=0)

coors = torch.cat(coors, dim=0)

elif self.voxel_type == 'minkunet':

voxels, coors = [], []

voxel_size = points[0].new_tensor(self.voxel_layer.voxel_size)

for i, (res, data_sample) in enumerate(zip(points, data_samples)):

res_coors = torch.round(res[:, :3] / voxel_size).int()

res_coors -= res_coors.min(0)[0]

res_coors_numpy = res_coors.cpu().numpy()

inds, voxel2point_map = self.sparse_quantize(

res_coors_numpy, return_index=True, return_inverse=True)

voxel2point_map = torch.from_numpy(voxel2point_map).cuda()

if self.training:

if len(inds) > 80000:

inds = np.random.choice(inds, 80000, replace=False)

inds = torch.from_numpy(inds).cuda()

data_sample.gt_pts_seg.voxel_semantic_mask \

= data_sample.gt_pts_seg.pts_semantic_mask[inds]

res_voxel_coors = res_coors[inds]

res_voxels = res[inds]

res_voxel_coors = F.pad(

res_voxel_coors, (0, 1), mode='constant', value=i)

data_sample.voxel2point_map = voxel2point_map.long()

voxels.append(res_voxels)

coors.append(res_voxel_coors)

voxels = torch.cat(voxels, dim=0)

coors = torch.cat(coors, dim=0)

else:

raise ValueError(f'Invalid voxelization type {self.voxel_type}')

voxel_dict['voxels'] = voxels

voxel_dict['coors'] = coors

return voxel_dict

def get_voxel_seg(self, res_coors: torch.Tensor, data_sample: SampleList):

"""Get voxel-wise segmentation label and point2voxel map.

Args:

res_coors (Tensor): The voxel coordinates of points, Nx3.

data_sample: (:obj:`Det3DDataSample`): The annotation data of

every samples. Add voxel-wise annotation forsegmentation.

"""

if self.training:

pts_semantic_mask = data_sample.gt_pts_seg.pts_semantic_mask

voxel_semantic_mask, _, point2voxel_map = dynamic_scatter_3d(

F.one_hot(pts_semantic_mask.long()).float(), res_coors, 'mean',

True)

voxel_semantic_mask = torch.argmax(voxel_semantic_mask, dim=-1)

data_sample.gt_pts_seg.voxel_semantic_mask = voxel_semantic_mask

data_sample.gt_pts_seg.point2voxel_map = point2voxel_map

else:

pseudo_tensor = res_coors.new_ones([res_coors.shape[0], 1]).float()

_, _, point2voxel_map = dynamic_scatter_3d(pseudo_tensor,

res_coors, 'mean', True)

data_sample.gt_pts_seg.point2voxel_map = point2voxel_map

def ravel_hash(self, x: np.ndarray) -> np.ndarray:

"""Get voxel coordinates hash for np.unique().

Args:

x (np.ndarray): The voxel coordinates of points, Nx3.

Returns:

np.ndarray: Voxels coordinates hash.

"""

assert x.ndim == 2, x.shape

x = x - np.min(x, axis=0)

x = x.astype(np.uint64, copy=False)

xmax = np.max(x, axis=0).astype(np.uint64) + 1

h = np.zeros(x.shape[0], dtype=np.uint64)

for k in range(x.shape[1] - 1):

h += x[:, k]

h *= xmax[k + 1]

h += x[:, -1]

return h

def sparse_quantize(self,

coords: np.ndarray,

return_index: bool = False,

return_inverse: bool = False) -> List[np.ndarray]:

"""Sparse Quantization for voxel coordinates used in Minkunet.

Args:

coords (np.ndarray): The voxel coordinates of points, Nx3.

return_index (bool): Whether to return the indices of the

unique coords, shape (M,).

return_inverse (bool): Whether to return the indices of the

original coords shape (N,).

Returns:

List[np.ndarray] or None: Return index and inverse map if

return_index and return_inverse is True.

"""

_, indices, inverse_indices = np.unique(

self.ravel_hash(coords), return_index=True, return_inverse=True)

coords = coords[indices]

outputs = []

if return_index:

outputs += [indices]

if return_inverse:

outputs += [inverse_indices]

return outputs

- 第125行代码,

data 是一个字典,if 条件不成立,所以跳过 if 后面的语句,进入 else 后面的语句;

- 第135行代码,调用

simple_process()函数; - 第150行代码,

data['input'] 中不包含 'img' 字段,if 条件不成立,所以跳过 if 后面的语句; - 第153行代码,调用

self.collate_data()函数对数据进行打包,由于data['input'] 中不包含 'img' 字段,调用self.collate_data()函数后 data 没有改变; - 第154行代码,通过

data['inputs'], data['data_samples'] 分别来设置 inputs, data_samples;

- 第155行代码,将

batch_inputs 初始化为空字典;

- 第157行代码,

data['input'] 中包含 'points' 字段,if 条件成立,所以进入 if 后面的语句; - 第158行代码,通过

inputs['points'] 来设置 batch_inputs['points'];

- 第160行代码,

self.voxel = True,if 条件成立,所以进入 if 后面的语句; - 第161行代码,调用

self.voxelize(inputs['points'], data_samples)函数来设置voxel_dict; - 第353行代码,将

voxel_dict 初始化为空字典;

- 第355行代码,这里以

self.voxel_type = 'hard'举例,if 条件成立,所以进入 if 后面的语句; - 第356行代码,将

voxels,coors,num_points,voxel_centers 初始化为空列表; - 第357行代码,进入 for 循环,

遍历这里的 points;

- 第358行代码,

调用 self.voxel_layer(res) 函数对每一个点云数据提取出res_voxels,res_coors,res_num_points;

- 第359行代码,通过

res_coors、voxel_size和point_cloud_range[0:3]来设置 res_voxel_centers`;

- 第363行代码,设置

F.pad 函数对 res_coors 进行 batch 填充,也就是给每个生成的 pillar 打上其对于的 batch 标签;

- 第364行代码,

在 voxels 列表中添加当前点云的数据 res_voxels; - 第365行代码,

在 coors 列表中添加当前点云的数据 res_coors; - 第366行代码,

在 num_points 列表中添加当前点云的数据 res_num_points; - 第367行代码,

在 voxel_centers 列表中添加当前点云的数据 res_voxels_centers;

- 第358行代码,

- 第357行代码,for 循环结束,

遍历完这里的 points 中所有的点云;

- 第369行代码,

将 voxels 在 dim=0 维度上进行拼接;

- 第370行代码,

将 coors 在 dim=0 维度上进行拼接;

- 第371行代码,

将 num_points 在 dim=0 维度上进行拼接;

- 第372行代码,

将 voxel_centers 在 dim=0 维度上进行拼接;

- 第374行代码,

通过 num_points 来设置 voxel_dict['num_points']; - 第375行代码,

通过 voxel_centers 来设置 voxel_dict['voxel_centers']; - 第448行代码,

通过 voxels 来设置 voxel_dict['voxels']; - 第449行代码,

通过 coors 来设置 voxel_dict['coors']; - 第451行代码,返回得到的

voxel_dict给前面调用的函数中;

这里解释一下每个数据到底是什么,后面提取特征时需要使用:

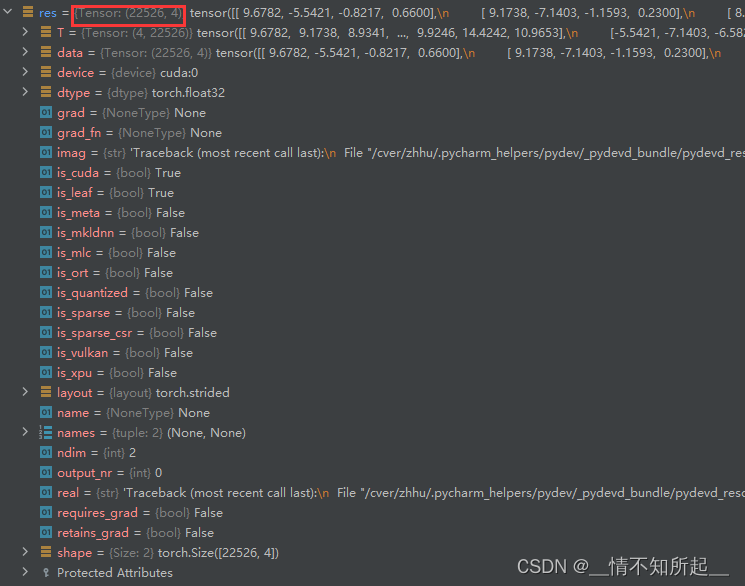

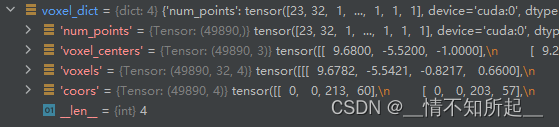

voxels, 维度是[M,32,4]: 表示生成了 M 个 pillar 数据,其中每个 pillar 中的最大点云数量是 32,

如果一个 pillar 中的点云数量超过 32,那么便会进行随机采样选取 32 个点,

如果一个 pillar 中的点云数量少于 32,那么便会对这个 pillar 使用 0 样本填充,

4 表示点云的 xyzr,其中 xyz 表示点云的坐标,r 表示强度或反射值。

coors, 维度是[M,4]: 表示每个生成的 pillar 所在的 batch 编号和 zyx 轴坐标,

如果 batch_size = 8,那么 coors 的第一个维度的取值范围便是 [0, 7],

而且 coors 的第二个维度 z 恒为0,coors 的第三四个维度表示的是该 pillar 在激光点云坐标系下的 x 和 y 坐标。

num_points, 维度是[M,]: 代表了每个生成的 pillar 中实际有多少个有效的点,,因为 pillar 中点云不满足 32 会被 0 填充;

voxel_centers, 维度是[M,3]: 代表了每个生成的 pillar 的中心点所在的 zxy 坐标;

- 第162行代码,通过

voxel_dict 来设置 batch_inputs['voxels'];

- 第164行代码,

input 中不包含 'img' 字段,if 条件不成立,所以跳过 if 后面的语句; - 第198行代码,返回

字典 {'inputs': batch_inputs, 'data_samples': data_samples}给前面的forward()函数;

- 第135行代码,调用

simple_process()函数结束,得到的返回结果是一个字典 {'inputs': batch_inputs, 'data_samples': data_samples},最终得到的 data 数据如下图所示;

3.2 从 VoxelNet 的 forward() 函数开始解读:

实现代码在 mmdetection/mmdet3d/models/detectors/voxelnet.py

# Copyright (c) OpenMMLab. All rights reserved.

from typing import Tuple

from torch import Tensor

from mmdet3d.registry import MODELS

from mmdet3d.utils import ConfigType, OptConfigType, OptMultiConfig

from .single_stage import SingleStage3DDetector

@MODELS.register_module()

class VoxelNet(SingleStage3DDetector):

r"""`VoxelNet <https://arxiv.org/abs/1711.06396>`_ for 3D detection."""

def __init__(self,

voxel_encoder: ConfigType,

middle_encoder: ConfigType,

backbone: ConfigType,

neck: OptConfigType = None,

bbox_head: OptConfigType = None,

train_cfg: OptConfigType = None,

test_cfg: OptConfigType = None,

data_preprocessor: OptConfigType = None,

init_cfg: OptMultiConfig = None) -> None:

super().__init__(

backbone=backbone,

neck=neck,

bbox_head=bbox_head,

train_cfg=train_cfg,

test_cfg=test_cfg,

data_preprocessor=data_preprocessor,

init_cfg=init_cfg)

self.voxel_encoder = MODELS.build(voxel_encoder)

self.middle_encoder = MODELS.build(middle_encoder)

def extract_feat(self, batch_inputs_dict: dict) -> Tuple[Tensor]:

"""Extract features from points."""

voxel_dict = batch_inputs_dict['voxels']

voxel_features = self.voxel_encoder(voxel_dict['voxels'],

voxel_dict['num_points'],

voxel_dict['coors'])

batch_size = voxel_dict['coors'][-1, 0].item() + 1

x = self.middle_encoder(voxel_features, voxel_dict['coors'],

batch_size)

x = self.backbone(x)

if self.with_neck:

x = self.neck(x)

return x

-

第38行代码,通过

batch_inputs_dict['voxels'] 来设置 voxel_dict;

-

第39行代码,调用

self.voxel_encoder(voxel_dict['voxels'], voxel_dict['num_points'], voxel_dict['coors']) 来获得 voxel_features,这里的 voxel_encoder 便是调用PillarFeatureNet 中的 forward() 函数,详细见 3.3 节内容; -

第42行代码,

batch_size = voxel_dict['coors'][-1, 0].item() + 1 = 7 + 1 = 8; -

第43行代码,调用

self.middle_encoder(voxel_features, voxel_dict['coors'], batch_size) 获得特征 x,这里的 middle_encoder 便是调用PointPillarsScatter 中的 forward() 函数,详细见 3.4 节内容; -

第45行代码,调用

self.backbone(x) 处理特征 x,这里的 backbone 便是调用SECOND 中的 forward() 函数,详细见 4.1 节内容; -

第46行代码,

self.neck = True, if 条件成立,所以进入后面的语句; -

第47行代码,调用

self.neck(x) 处理特征 x,这里的 neck 便是调用SECONDFPN 中的 forward() 函数,详细见 4.2 节内容; -

经过上述网络结构后得到的特征 x 再送入 Anchor3DHead 的检测头获得最终的类别、检测框和方向,详细见 4.3 节内容。

3.3 从 PillarFeatureNet 的 forward() 函数开始解读:

实现代码在 mmdetection/mmdet3d/models/voxel_encoders/pillar_encoder.py

# Copyright (c) OpenMMLab. All rights reserved.

import torch

from mmcv.cnn import build_norm_layer

from mmcv.ops import DynamicScatter

from torch import nn

from mmdet3d.registry import MODELS

from .utils import PFNLayer, get_paddings_indicator

@MODELS.register_module()

class PillarFeatureNet(nn.Module):

"""Pillar Feature Net.

The network prepares the pillar features and performs forward pass

through PFNLayers.

Args:

in_channels (int, optional): Number of input features,

either x, y, z or x, y, z, r. Defaults to 4.

feat_channels (tuple, optional): Number of features in each of the

N PFNLayers. Defaults to (64, ).

with_distance (bool, optional): Whether to include Euclidean distance

to points. Defaults to False.

with_cluster_center (bool, optional): [description]. Defaults to True.

with_voxel_center (bool, optional): [description]. Defaults to True.

voxel_size (tuple[float], optional): Size of voxels, only utilize x

and y size. Defaults to (0.2, 0.2, 4).

point_cloud_range (tuple[float], optional): Point cloud range, only

utilizes x and y min. Defaults to (0, -40, -3, 70.4, 40, 1).

norm_cfg ([type], optional): [description].

Defaults to dict(type='BN1d', eps=1e-3, momentum=0.01).

mode (str, optional): The mode to gather point features. Options are

'max' or 'avg'. Defaults to 'max'.

legacy (bool, optional): Whether to use the new behavior or

the original behavior. Defaults to True.

"""

def __init__(self,

in_channels=4,

feat_channels=(64, ),

with_distance=False,

with_cluster_center=True,

with_voxel_center=True,

voxel_size=(0.2, 0.2, 4),

point_cloud_range=(0, -40, -3, 70.4, 40, 1),

norm_cfg=dict(type='BN1d', eps=1e-3, momentum=0.01),

mode='max',

legacy=True):

super(PillarFeatureNet, self).__init__()

assert len(feat_channels) > 0

self.legacy = legacy

if with_cluster_center:

in_channels += 3

if with_voxel_center:

in_channels += 3

if with_distance:

in_channels += 1

self._with_distance = with_distance

self._with_cluster_center = with_cluster_center

self._with_voxel_center = with_voxel_center

self.fp16_enabled = False

# Create PillarFeatureNet layers

self.in_channels = in_channels

feat_channels = [in_channels] + list(feat_channels)

pfn_layers = []

for i in range(len(feat_channels) - 1):

in_filters = feat_channels[i]

out_filters = feat_channels[i + 1]

if i < len(feat_channels) - 2:

last_layer = False

else:

last_layer = True

pfn_layers.append(

PFNLayer(

in_filters,

out_filters,

norm_cfg=norm_cfg,

last_layer=last_layer,

mode=mode))

self.pfn_layers = nn.ModuleList(pfn_layers)

# Need pillar (voxel) size and x/y offset in order to calculate offset

self.vx = voxel_size[0]

self.vy = voxel_size[1]

self.vz = voxel_size[2]

self.x_offset = self.vx / 2 + point_cloud_range[0]

self.y_offset = self.vy / 2 + point_cloud_range[1]

self.z_offset = self.vz / 2 + point_cloud_range[2]

self.point_cloud_range = point_cloud_range

def forward(self, features, num_points, coors, *args, **kwargs):

"""Forward function.

Args:

features (torch.Tensor): Point features or raw points in shape

(N, M, C).

num_points (torch.Tensor): Number of points in each pillar.

coors (torch.Tensor): Coordinates of each voxel.

Returns:

torch.Tensor: Features of pillars.

"""

features_ls = [features]

# Find distance of x, y, and z from cluster center

if self._with_cluster_center:

points_mean = features[:, :, :3].sum(

dim=1, keepdim=True) / num_points.type_as(features).view(

-1, 1, 1)

f_cluster = features[:, :, :3] - points_mean

features_ls.append(f_cluster)

# Find distance of x, y, and z from pillar center

dtype = features.dtype

if self._with_voxel_center:

if not self.legacy:

f_center = torch.zeros_like(features[:, :, :3])

f_center[:, :, 0] = features[:, :, 0] - (

coors[:, 3].to(dtype).unsqueeze(1) * self.vx +

self.x_offset)

f_center[:, :, 1] = features[:, :, 1] - (

coors[:, 2].to(dtype).unsqueeze(1) * self.vy +

self.y_offset)

f_center[:, :, 2] = features[:, :, 2] - (

coors[:, 1].to(dtype).unsqueeze(1) * self.vz +

self.z_offset)

else:

f_center = features[:, :, :3]

f_center[:, :, 0] = f_center[:, :, 0] - (

coors[:, 3].type_as(features).unsqueeze(1) * self.vx +

self.x_offset)

f_center[:, :, 1] = f_center[:, :, 1] - (

coors[:, 2].type_as(features).unsqueeze(1) * self.vy +

self.y_offset)

f_center[:, :, 2] = f_center[:, :, 2] - (

coors[:, 1].type_as(features).unsqueeze(1) * self.vz +

self.z_offset)

features_ls.append(f_center)

if self._with_distance:

points_dist = torch.norm(features[:, :, :3], 2, 2, keepdim=True)

features_ls.append(points_dist)

# Combine together feature decorations

features = torch.cat(features_ls, dim=-1)

# The feature decorations were calculated without regard to whether

# pillar was empty. Need to ensure that

# empty pillars remain set to zeros.

voxel_count = features.shape[1]

mask = get_paddings_indicator(num_points, voxel_count, axis=0)

mask = torch.unsqueeze(mask, -1).type_as(features)

features *= mask

for pfn in self.pfn_layers:

features = pfn(features, num_points)

return features.squeeze(1)

@MODELS.register_module()

class DynamicPillarFeatureNet(PillarFeatureNet):

"""Pillar Feature Net using dynamic voxelization.

The network prepares the pillar features and performs forward pass

through PFNLayers. The main difference is that it is used for

dynamic voxels, which contains different number of points inside a voxel

without limits.

Args:

in_channels (int, optional): Number of input features,

either x, y, z or x, y, z, r. Defaults to 4.

feat_channels (tuple, optional): Number of features in each of the

N PFNLayers. Defaults to (64, ).

with_distance (bool, optional): Whether to include Euclidean distance

to points. Defaults to False.

with_cluster_center (bool, optional): [description]. Defaults to True.

with_voxel_center (bool, optional): [description]. Defaults to True.

voxel_size (tuple[float], optional): Size of voxels, only utilize x

and y size. Defaults to (0.2, 0.2, 4).

point_cloud_range (tuple[float], optional): Point cloud range, only

utilizes x and y min. Defaults to (0, -40, -3, 70.4, 40, 1).

norm_cfg ([type], optional): [description].

Defaults to dict(type='BN1d', eps=1e-3, momentum=0.01).

mode (str, optional): The mode to gather point features. Options are

'max' or 'avg'. Defaults to 'max'.

legacy (bool, optional): Whether to use the new behavior or

the original behavior. Defaults to True.

"""

def __init__(self,

in_channels=4,

feat_channels=(64, ),

with_distance=False,

with_cluster_center=True,

with_voxel_center=True,

voxel_size=(0.2, 0.2, 4),

point_cloud_range=(0, -40, -3, 70.4, 40, 1),

norm_cfg=dict(type='BN1d', eps=1e-3, momentum=0.01),

mode='max',

legacy=True):

super(DynamicPillarFeatureNet, self).__init__(

in_channels,

feat_channels,

with_distance,

with_cluster_center=with_cluster_center,

with_voxel_center=with_voxel_center,

voxel_size=voxel_size,

point_cloud_range=point_cloud_range,

norm_cfg=norm_cfg,

mode=mode,

legacy=legacy)

self.fp16_enabled = False

feat_channels = [self.in_channels] + list(feat_channels)

pfn_layers = []

# TODO: currently only support one PFNLayer

for i in range(len(feat_channels) - 1):

in_filters = feat_channels[i]

out_filters = feat_channels[i + 1]

if i > 0:

in_filters *= 2

norm_name, norm_layer = build_norm_layer(norm_cfg, out_filters)

pfn_layers.append(

nn.Sequential(

nn.Linear(in_filters, out_filters, bias=False), norm_layer,

nn.ReLU(inplace=True)))

self.num_pfn = len(pfn_layers)

self.pfn_layers = nn.ModuleList(pfn_layers)

self.pfn_scatter = DynamicScatter(voxel_size, point_cloud_range,

(mode != 'max'))

self.cluster_scatter = DynamicScatter(

voxel_size, point_cloud_range, average_points=True)

def map_voxel_center_to_point(self, pts_coors, voxel_mean, voxel_coors):

"""Map the centers of voxels to its corresponding points.

Args:

pts_coors (torch.Tensor): The coordinates of each points, shape

(M, 3), where M is the number of points.

voxel_mean (torch.Tensor): The mean or aggregated features of a

voxel, shape (N, C), where N is the number of voxels.

voxel_coors (torch.Tensor): The coordinates of each voxel.

Returns:

torch.Tensor: Corresponding voxel centers of each points, shape

(M, C), where M is the number of points.

"""

# Step 1: scatter voxel into canvas

# Calculate necessary things for canvas creation

canvas_y = int(

(self.point_cloud_range[4] - self.point_cloud_range[1]) / self.vy)

canvas_x = int(

(self.point_cloud_range[3] - self.point_cloud_range[0]) / self.vx)

canvas_channel = voxel_mean.size(1)

batch_size = pts_coors[-1, 0] + 1

canvas_len = canvas_y * canvas_x * batch_size

# Create the canvas for this sample

canvas = voxel_mean.new_zeros(canvas_channel, canvas_len)

# Only include non-empty pillars

indices = (

voxel_coors[:, 0] * canvas_y * canvas_x +

voxel_coors[:, 2] * canvas_x + voxel_coors[:, 3])

# Scatter the blob back to the canvas

canvas[:, indices.long()] = voxel_mean.t()

# Step 2: get voxel mean for each point

voxel_index = (

pts_coors[:, 0] * canvas_y * canvas_x +

pts_coors[:, 2] * canvas_x + pts_coors[:, 3])

center_per_point = canvas[:, voxel_index.long()].t()

return center_per_point

def forward(self, features, coors):

"""Forward function.

Args:

features (torch.Tensor): Point features or raw points in shape

(N, M, C).

coors (torch.Tensor): Coordinates of each voxel

Returns:

torch.Tensor: Features of pillars.

"""

features_ls = [features]

# Find distance of x, y, and z from cluster center

if self._with_cluster_center:

voxel_mean, mean_coors = self.cluster_scatter(features, coors)

points_mean = self.map_voxel_center_to_point(

coors, voxel_mean, mean_coors)

# TODO: maybe also do cluster for reflectivity

f_cluster = features[:, :3] - points_mean[:, :3]

features_ls.append(f_cluster)

# Find distance of x, y, and z from pillar center

if self._with_voxel_center:

f_center = features.new_zeros(size=(features.size(0), 3))

f_center[:, 0] = features[:, 0] - (

coors[:, 3].type_as(features) * self.vx + self.x_offset)

f_center[:, 1] = features[:, 1] - (

coors[:, 2].type_as(features) * self.vy + self.y_offset)

f_center[:, 2] = features[:, 2] - (

coors[:, 1].type_as(features) * self.vz + self.z_offset)

features_ls.append(f_center)

if self._with_distance:

points_dist = torch.norm(features[:, :3], 2, 1, keepdim=True)

features_ls.append(points_dist)

# Combine together feature decorations

features = torch.cat(features_ls, dim=-1)

for i, pfn in enumerate(self.pfn_layers):

point_feats = pfn(features)

voxel_feats, voxel_coors = self.pfn_scatter(point_feats, coors)

if i != len(self.pfn_layers) - 1:

# need to concat voxel feats if it is not the last pfn

feat_per_point = self.map_voxel_center_to_point(

coors, voxel_feats, voxel_coors)

features = torch.cat([point_feats, feat_per_point], dim=1)

return voxel_feats, voxel_coors

- 第104行代码,将

voxel_dict['voxels'], voxel_dict['num_points'], voxel_dict['coors']作为实参传入 PointPillarsScatter 的forward()函数中的features, num_points, coors, 再用features 形成一个列表来设置 features_ls;

- 第106行代码,

self._with_cluster_center=True,if 条件成立,所以进入后面的语句; - 第107行代码,

通过 features 和 num_points 来获得 points_mean;

- 第110行代码,

通过 features 和 points_mean 来获得获得 f_cluster;

- 第111行代码,

将 f_cluster 添加进 features_ls 列表中;

- 第114行代码,

将 dtype 设置成 features.dtype=torch.float32;

- 第115行代码,

self._with_voxel_center=True,if 条件成立,所以进入后面的语句; - 第116行代码,

self.legacy=True,if not 条件不成立,所以进入后面的 else 语句; - 第128行代码,

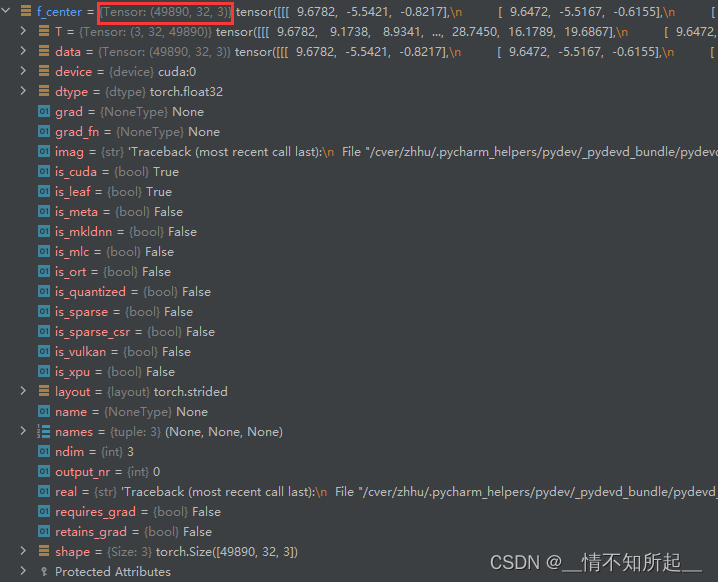

通过 features[:, :, :3] 来设置 f_center;

- 第129行代码,

重新计算 f_center[:, :, 0],后面的132行和135行代码的与129行代码的计算方法相同;

- 第138行代码,

将 f_center 添加进 features_ls 列表中;

- 第140行代码,

self._with_distance=False,if 条件不成立,所以跳过后面的语句; - 第145行代码,

通过 torch.cat() 函数在最后一个维度上拼接 features_ls 得到 features;

- 第149行代码,

通过 features.shape[1] 来设置 voxel_count;

- 第150行代码,

通过 num_points 来获得掩码 mask;

- 第151行代码,

通过 torch.unsqueeze() 函数给 mask 增加一个维度;

- 第152行代码,

将 features 再乘以 mask 重新设置 features;

- 第154行代码,PFNLayer 的代码如下所示,就是将 features 依次经过 线性层 ➱ 标准化层 ➱ RELU激活函数 ➱ dim=1的维度取最大值 的过程,最终获得

features;

class PFNLayer(nn.Module):

"""Pillar Feature Net Layer.

The Pillar Feature Net is composed of a series of these layers, but the

PointPillars paper results only used a single PFNLayer.

Args:

in_channels (int): Number of input channels.

out_channels (int): Number of output channels.

norm_cfg (dict, optional): Config dict of normalization layers.

Defaults to dict(type='BN1d', eps=1e-3, momentum=0.01).

last_layer (bool, optional): If last_layer, there is no

concatenation of features. Defaults to False.

mode (str, optional): Pooling model to gather features inside voxels.

Defaults to 'max'.

"""

def __init__(self,

in_channels,

out_channels,

norm_cfg=dict(type='BN1d', eps=1e-3, momentum=0.01),

last_layer=False,

mode='max'):

super().__init__()

self.fp16_enabled = False

self.name = 'PFNLayer'

self.last_vfe = last_layer

if not self.last_vfe:

out_channels = out_channels // 2

self.units = out_channels

self.norm = build_norm_layer(norm_cfg, self.units)[1]

self.linear = nn.Linear(in_channels, self.units, bias=False)

assert mode in ['max', 'avg']

self.mode = mode

def forward(self, inputs, num_voxels=None, aligned_distance=None):

"""Forward function.

Args:

inputs (torch.Tensor): Pillar/Voxel inputs with shape (N, M, C).

N is the number of voxels, M is the number of points in

voxels, C is the number of channels of point features.

num_voxels (torch.Tensor, optional): Number of points in each

voxel. Defaults to None.

aligned_distance (torch.Tensor, optional): The distance of

each points to the voxel center. Defaults to None.

Returns:

torch.Tensor: Features of Pillars.

"""

x = self.linear(inputs)

x = self.norm(x.permute(0, 2, 1).contiguous()).permute(0, 2,

1).contiguous()

x = F.relu(x)

if self.mode == 'max':

if aligned_distance is not None:

x = x.mul(aligned_distance.unsqueeze(-1))

x_max = torch.max(x, dim=1, keepdim=True)[0]

elif self.mode == 'avg':

if aligned_distance is not None:

x = x.mul(aligned_distance.unsqueeze(-1))

x_max = x.sum(

dim=1, keepdim=True) / num_voxels.type_as(inputs).view(

-1, 1, 1)

if self.last_vfe:

return x_max

else:

x_repeat = x_max.repeat(1, inputs.shape[1], 1)

x_concatenated = torch.cat([x, x_repeat], dim=2)

return x_concatenated

- PFNLayer 的第54行代码,

首先将 inputs 经过一个线性层;

- PFNLayer 的第55行代码,

然后将得到的 x 经过一个标准化层,注意这里的 x 经过了两次 permute 调整维度;- 经过第一次 permute 调整维度的 x:

- 经过第二次 permute 调整维度的 x:

- 经过第一次 permute 调整维度的 x:

- PFNLayer 的第57行代码,

之后将得到的 x 经过一个 RELU 激活函数层,

- PFNLayer 的第59行代码,

self.mode=max, if 条件满足,所以进入后面的语句; - PFNLayer 的第60行代码,

aligned_distance is None, if 条件不满足,所以跳过后面的语句; - PFNLayer 的第62行代码,

将 x 在 dim=1 的维度上进行拼接,取出每个 pillar 中的最大值;

- PFNLayer 的第70行代码,

self.last_vfe=True, if 条件满足,所以进入后面的语句; - PFNLayer 的第71行代码,

返回 x_max 给前面调用的函数; - 第155代码,获得经过 PFNLayers 处理之后的

features;

- 第157行代码,

最终返回 features.squeeze(1),这里最终得到的特征 features 相对于给每个 pillar 提取出来了一个 64 维的特征;

3.4 从 PointPillarsScatter 的 forward() 函数开始解读:

实现代码在 mmdetection/mmdet3d/models/middle_encoders/pillar_scatter.py

# Copyright (c) OpenMMLab. All rights reserved.

import torch

from torch import nn

from mmdet3d.registry import MODELS

@MODELS.register_module()

class PointPillarsScatter(nn.Module):

"""Point Pillar's Scatter.

Converts learned features from dense tensor to sparse pseudo image.

Args:

in_channels (int): Channels of input features.

output_shape (list[int]): Required output shape of features.

"""

def __init__(self, in_channels, output_shape):

super().__init__()

self.output_shape = output_shape

self.ny = output_shape[0]

self.nx = output_shape[1]

self.in_channels = in_channels

self.fp16_enabled = False

def forward(self, voxel_features, coors, batch_size=None):

"""Foraward function to scatter features."""

# TODO: rewrite the function in a batch manner

# no need to deal with different batch cases

if batch_size is not None:

return self.forward_batch(voxel_features, coors, batch_size)

else:

return self.forward_single(voxel_features, coors)

def forward_single(self, voxel_features, coors):

"""Scatter features of single sample.

Args:

voxel_features (torch.Tensor): Voxel features in shape (N, M, C).

coors (torch.Tensor): Coordinates of each voxel.

The first column indicates the sample ID.

"""

# Create the canvas for this sample

canvas = torch.zeros(

self.in_channels,

self.nx * self.ny,

dtype=voxel_features.dtype,

device=voxel_features.device)

indices = coors[:, 2] * self.nx + coors[:, 3]

indices = indices.long()

voxels = voxel_features.t()

# Now scatter the blob back to the canvas.

canvas[:, indices] = voxels

# Undo the column stacking to final 4-dim tensor

canvas = canvas.view(1, self.in_channels, self.ny, self.nx)

return canvas

def forward_batch(self, voxel_features, coors, batch_size):

"""Scatter features of single sample.

Args:

voxel_features (torch.Tensor): Voxel features in shape (N, M, C).

coors (torch.Tensor): Coordinates of each voxel in shape (N, 4).

The first column indicates the sample ID.

batch_size (int): Number of samples in the current batch.

"""

# batch_canvas will be the final output.

batch_canvas = []

for batch_itt in range(batch_size):

# Create the canvas for this sample

canvas = torch.zeros(

self.in_channels,

self.nx * self.ny,

dtype=voxel_features.dtype,

device=voxel_features.device)

# Only include non-empty pillars

batch_mask = coors[:, 0] == batch_itt

this_coors = coors[batch_mask, :]

indices = this_coors[:, 2] * self.nx + this_coors[:, 3]

indices = indices.type(torch.long)

voxels = voxel_features[batch_mask, :]

voxels = voxels.t()

# Now scatter the blob back to the canvas.

canvas[:, indices] = voxels

# Append to a list for later stacking.

batch_canvas.append(canvas)

# Stack to 3-dim tensor (batch-size, in_channels, nrows*ncols)

batch_canvas = torch.stack(batch_canvas, 0)

# Undo the column stacking to final 4-dim tensor

batch_canvas = batch_canvas.view(batch_size, self.in_channels, self.ny,

self.nx)

return batch_canvas

- 第31行代码,将

voxel_features, voxel_dict['coors'], batch_size作为实参传入 PointPillarsScatter 的forward()函数中的voxel_features, coors, batch_size, 这里的batch_size is not None,所以 if 条件成立,进入后面的语句。注意:这里的 51679 和前面得到的 49890 其实都是指的是batch_size=8的情况下所有的 pillar 数量,这里是因为我中间 debug 的时候中断了,所以重新 debug 一次,所以两者不一样了;

- 第32行代码,调用批处理函数

self.forward_batch(voxel_features, coors, batch_size); - 第70行代码,

初始化 batch_canvas 为空列表,最终返回的就是batch_canvas; - 第71行代码,进入 for 循环,这里以

batch_itt = 0进行举例,剩下的依次类推;

- 第73行代码,

设置画布 canvas,大小为(self.in_channels, self.nx * self.ny)=(64, 432*496)=(64, 214272);

- 第80行代码,

设置掩码 batch_mask;

- 第81行代码,

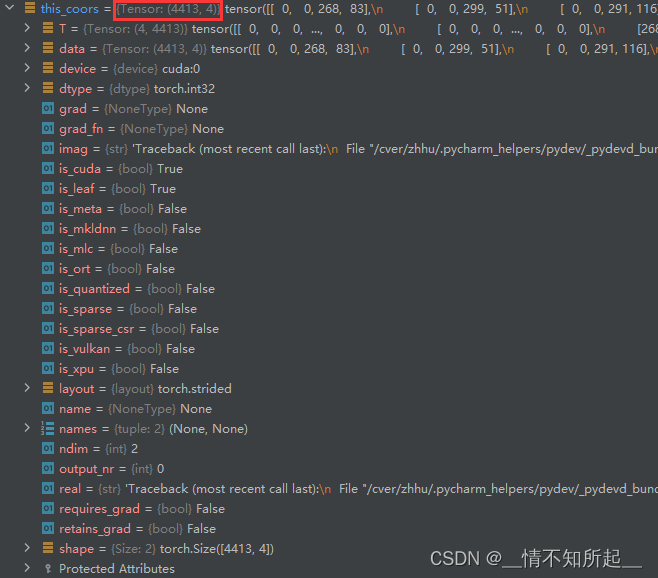

通过第 batch_itt=0 批次的 coors 来设置 this_coors;

- 第82行代码,

通过 this_coors 和 self.nx 来设置 indices;

- 第83行代码,

设置 indices 的类型;

- 第84行代码,

通过第 batch_itt=0 批次的 voxel_features 来设置 voxels;

- 第85行代码,

将 voxels 进行转置;

- 第88行代码,设置 canvas;

- 第91行代码,

在 batch_canvas 列表中添加 canvas;

- 第73行代码,

- 第71行代码,结束 for 循环,

获得 batch_canvas;

- 第94行代码,

通过 torch.stack 函数堆叠 batch_canvas;

- 第97行代码,

通过 view 函数展开 batch_canvas;

- 第32行代码,

最终返回 batch_canvas,这里最终得到的特征 batch_canvas 相对于是总共8个点云场景打包成一个 batch,每个点云场景都提取到了(C, H, W)= (64, 496, 432)的特征,相当于伪图像了,后面再送入2D检测框架;

第四章 PointPillars的2D检测框架使用

经过 PointPillars 的点云数据处理过程后,我们得到类似于图像的数据,因为只有 pillar 是非空的坐标处有提取的点云数据特征,其余地方都是 0 数据,所以我们得到的一个(batch_size,64, 496, 432)的张量还是很稀疏的,我们再将这个张量送入上图所示的 2D 检测框架。

PiontPillars 中的检测头采用了类似SSD的检测头设置,在检测头的先验框设置上一共有三个类别的先验框,每个类别的先验框只有一种尺度信息,分别是人[0.8, 0.6, 1.73]、自行车[1.76, 0.6, 1.73] 和车 [3.9, 1.6, 1.56](单位:米),而且每个先验框都有两个方向,分别是BEV视角下的0度和90度,所以总共有 6 个 anchor。

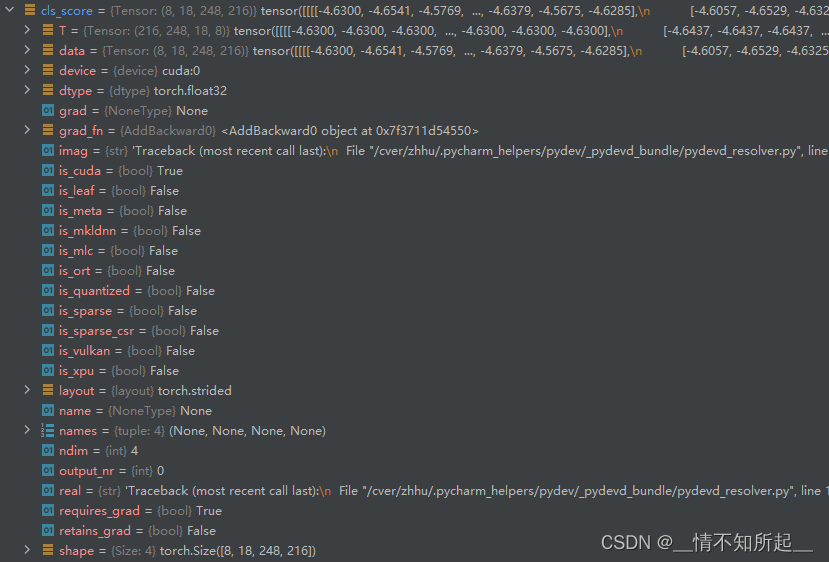

- 类别的预测:每个 anchor 的 c l s _ p r e d cls\_pred cls_pred 需要预测3个参数,分别表示该 anchor 是人、自行车和车的概率。

- 边界框的预测:每个 anchor 的 b b o x _ p r e d bbox\_pred bbox_pred 需要预测7个参数 ( x x x, y y y, z z z, w w w, l l l, h h h, θ θ θ),其中 x x x, y y y, z z z 预测一个 anchor 的中心坐标在点云中的位置, w w w, l l l, h h h预测了一个 anchor 的长宽高数据, θ θ θ 预测了 box 的旋转角度。

- 方向的预测:每个 anchor 的 d i r _ c l s _ p r e d dir\_cls\_pred dir_cls_pred 需要预测2个参数,因为在角度 θ θ θ 预测时候不可以区分两个完全相反的 box,所以 PiontPillars 的检测头中还添加了对一个 anchor 的方向预测。

PointPillars 的2D检测框架使用流程如下:

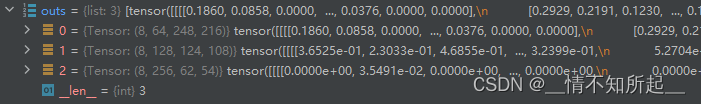

- 经过上图 红色标记的④后,我们得到了三个多尺度的特征,大小从上到下依次为: ( b a t c h _ s i z e , 64 , 248 , 216 ) (batch\_size,64, 248, 216) (batch_size,64,248,216)、 ( b a t c h _ s i z e , 128 , 124 , 108 ) (batch\_size,128, 124, 108) (batch_size,128,124,108)和 ( b a t c h _ s i z e , 256 , 62 , 54 ) (batch\_size,256, 62, 54) (batch_size,256,62,54)的张量;

- 接下来经过上图 红色标记的⑤,通过分别对多尺度特征进行上采样(将这些点通过 ConvTranspose2d 先升维,然后跟着 BatchNormalization 层和 Relu 激活层),得到相同大小的 ( b a t c h _ s i z e , 128 , 248 , 216 ) (batch\_size,128, 248, 216) (batch_size,128,248,216)形状的张量,再将其拼接在一起得到最终的特征 ( b a t c h _ s i z e , 384 , 248 , 216 ) (batch\_size,384, 248, 216) (batch_size,384,248,216);

- 最后将最终的特征 ( b a t c h _ s i z e , 384 , 248 , 216 ) (batch\_size,384, 248, 216) (batch_size,384,248,216)送入到三个卷积层分别预测 c l s _ s c o r e cls\_score cls_score, b b o x _ p r e d bbox\_pred bbox_pred, d i r _ c l s _ p r e d dir\_cls\_pred dir_cls_pred。

下面看这一部分的 MMDetection3D 代码实现:

4.1 从 SECOND 的 forward() 函数开始解读:

实现代码在 mmdetection/mmdet3d/models/backbones/second.py

# Copyright (c) OpenMMLab. All rights reserved.

import warnings

from mmcv.cnn import build_conv_layer, build_norm_layer

from mmengine.model import BaseModule

from torch import nn as nn

from mmdet3d.registry import MODELS

@MODELS.register_module()

class SECOND(BaseModule):

"""Backbone network for SECOND/PointPillars/PartA2/MVXNet.

Args:

in_channels (int): Input channels.

out_channels (list[int]): Output channels for multi-scale feature maps.

layer_nums (list[int]): Number of layers in each stage.

layer_strides (list[int]): Strides of each stage.

norm_cfg (dict): Config dict of normalization layers.

conv_cfg (dict): Config dict of convolutional layers.

"""

def __init__(self,

in_channels=128,

out_channels=[128, 128, 256],

layer_nums=[3, 5, 5],

layer_strides=[2, 2, 2],

norm_cfg=dict(type='BN', eps=1e-3, momentum=0.01),

conv_cfg=dict(type='Conv2d', bias=False),

init_cfg=None,

pretrained=None):

super(SECOND, self).__init__(init_cfg=init_cfg)

assert len(layer_strides) == len(layer_nums)

assert len(out_channels) == len(layer_nums)

in_filters = [in_channels, *out_channels[:-1]]

# note that when stride > 1, conv2d with same padding isn't

# equal to pad-conv2d. we should use pad-conv2d.

blocks = []

for i, layer_num in enumerate(layer_nums):

block = [

build_conv_layer(

conv_cfg,

in_filters[i],

out_channels[i],

3,

stride=layer_strides[i],

padding=1),

build_norm_layer(norm_cfg, out_channels[i])[1],

nn.ReLU(inplace=True),

]

for j in range(layer_num):

block.append(

build_conv_layer(

conv_cfg,

out_channels[i],

out_channels[i],

3,

padding=1))

block.append(build_norm_layer(norm_cfg, out_channels[i])[1])

block.append(nn.ReLU(inplace=True))

block = nn.Sequential(*block)

blocks.append(block)

self.blocks = nn.ModuleList(blocks)

assert not (init_cfg and pretrained), \

'init_cfg and pretrained cannot be setting at the same time'

if isinstance(pretrained, str):

warnings.warn('DeprecationWarning: pretrained is a deprecated, '

'please use "init_cfg" instead')

self.init_cfg = dict(type='Pretrained', checkpoint=pretrained)

else:

self.init_cfg = dict(type='Kaiming', layer='Conv2d')

def forward(self, x):

"""Forward function.

Args:

x (torch.Tensor): Input with shape (N, C, H, W).

Returns:

tuple[torch.Tensor]: Multi-scale features.

"""

outs = []

for i in range(len(self.blocks)):

x = self.blocks[i](x)

outs.append(x)

return tuple(outs)

- 第87行代码,

初始化 out 为空列表; - 第88行代码,

遍历 blocks,得到 multi-scale features;

注意:3个 block 的内部组成如下所示:

ModuleList(

(0): Sequential(

(0): Conv2d(64, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(7): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(8): ReLU(inplace=True)

(9): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(10): BatchNorm2d(64, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(11): ReLU(inplace=True)

)

(1): Sequential(

(0): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(7): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(8): ReLU(inplace=True)

(9): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(10): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(11): ReLU(inplace=True)

(12): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(13): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(14): ReLU(inplace=True)

(15): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(16): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(17): ReLU(inplace=True)

)

(2): Sequential(

(0): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(4): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): ReLU(inplace=True)

(6): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(7): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(8): ReLU(inplace=True)

(9): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(10): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(13): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(14): ReLU(inplace=True)

(15): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(16): BatchNorm2d(256, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(17): ReLU(inplace=True)

)

)

- 第91行代码,

最终返回 tuple(outs);

4.2 从 SECONDFPN 的 forward() 函数开始解读:

实现代码在 mmdetection/mmdet3d/models/necks/second_fpn.py

# Copyright (c) OpenMMLab. All rights reserved.

import numpy as np

import torch

from mmcv.cnn import build_conv_layer, build_norm_layer, build_upsample_layer

from mmengine.model import BaseModule

from torch import nn as nn

from mmdet3d.registry import MODELS

@MODELS.register_module()

class SECONDFPN(BaseModule):

"""FPN used in SECOND/PointPillars/PartA2/MVXNet.

Args:

in_channels (list[int]): Input channels of multi-scale feature maps.

out_channels (list[int]): Output channels of feature maps.

upsample_strides (list[int]): Strides used to upsample the

feature maps.

norm_cfg (dict): Config dict of normalization layers.

upsample_cfg (dict): Config dict of upsample layers.

conv_cfg (dict): Config dict of conv layers.

use_conv_for_no_stride (bool): Whether to use conv when stride is 1.

"""

def __init__(self,

in_channels=[128, 128, 256],

out_channels=[256, 256, 256],

upsample_strides=[1, 2, 4],

norm_cfg=dict(type='BN', eps=1e-3, momentum=0.01),

upsample_cfg=dict(type='deconv', bias=False),

conv_cfg=dict(type='Conv2d', bias=False),

use_conv_for_no_stride=False,

init_cfg=None):

# if for GroupNorm,

# cfg is dict(type='GN', num_groups=num_groups, eps=1e-3, affine=True)

super(SECONDFPN, self).__init__(init_cfg=init_cfg)

assert len(out_channels) == len(upsample_strides) == len(in_channels)

self.in_channels = in_channels

self.out_channels = out_channels

self.fp16_enabled = False

deblocks = []

for i, out_channel in enumerate(out_channels):

stride = upsample_strides[i]

if stride > 1 or (stride == 1 and not use_conv_for_no_stride):

upsample_layer = build_upsample_layer(

upsample_cfg,

in_channels=in_channels[i],

out_channels=out_channel,

kernel_size=upsample_strides[i],

stride=upsample_strides[i])

else:

stride = np.round(1 / stride).astype(np.int64)

upsample_layer = build_conv_layer(

conv_cfg,

in_channels=in_channels[i],

out_channels=out_channel,

kernel_size=stride,

stride=stride)

deblock = nn.Sequential(upsample_layer,

build_norm_layer(norm_cfg, out_channel)[1],

nn.ReLU(inplace=True))

deblocks.append(deblock)

self.deblocks = nn.ModuleList(deblocks)

if init_cfg is None:

self.init_cfg = [

dict(type='Kaiming', layer='ConvTranspose2d'),

dict(type='Constant', layer='NaiveSyncBatchNorm2d', val=1.0)

]

def forward(self, x):

"""Forward function.

Args:

x (torch.Tensor): 4D Tensor in (N, C, H, W) shape.

Returns:

list[torch.Tensor]: Multi-level feature maps.

"""

assert len(x) == len(self.in_channels)

ups = [deblock(x[i]) for i, deblock in enumerate(self.deblocks)]

if len(ups) > 1:

out = torch.cat(ups, dim=1)

else:

out = ups[0]

return [out]

- 第83行代码,

判断 x 的长度和 self.in_channels 的长度是否相同,两者都为 3,程序继续运行; - 第84行代码,

遍历 deblocks,对通过 backbone 得到的 multi-scale features 经过 upsample_layer,上采样到相同大小的特征图 ups;

注意:3个 deblock 的内部组成如下所示:

ModuleList(

(0): Sequential(

(0): ConvTranspose2d(64, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(1): Sequential(

(0): ConvTranspose2d(128, 128, kernel_size=(2, 2), stride=(2, 2), bias=False)

(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

(2): Sequential(

(0): ConvTranspose2d(256, 128, kernel_size=(4, 4), stride=(4, 4), bias=False)

(1): BatchNorm2d(128, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

- 第86行代码,

len(ups) = 3,if 条件满足,所以进入后面的语句; - 第87行代码,

将 特征ups 在dim=1 的维度上进行拼接得到 out;

- 第90行代码,

返回最终得到的 [out];

4.3 从 Anchor3DHead 的 forward() 函数开始解读:

实现代码在 mmdetection/mmdet3d/models/dense_heads/anchor3d_head.py

# Copyright (c) OpenMMLab. All rights reserved.

import warnings

from typing import List, Tuple

import numpy as np

import torch

from mmdet.models.utils import multi_apply

from torch import Tensor

from torch import nn as nn

from mmdet3d.models.task_modules import PseudoSampler

from mmdet3d.models.test_time_augs import merge_aug_bboxes_3d

from mmdet3d.registry import MODELS, TASK_UTILS

from mmdet3d.utils.typing_utils import (ConfigType, InstanceList,

OptConfigType, OptInstanceList)

from .base_3d_dense_head import Base3DDenseHead

from .train_mixins import AnchorTrainMixin

@MODELS.register_module()

class Anchor3DHead(Base3DDenseHead, AnchorTrainMixin):

"""Anchor-based head for SECOND/PointPillars/MVXNet/PartA2.

Args:

num_classes (int): Number of classes.

in_channels (int): Number of channels in the input feature map.

feat_channels (int): Number of channels of the feature map.

use_direction_classifier (bool): Whether to add a direction classifier.

anchor_generator(dict): Config dict of anchor generator.

assigner_per_size (bool): Whether to do assignment for each separate

anchor size.

assign_per_class (bool): Whether to do assignment for each class.

diff_rad_by_sin (bool): Whether to change the difference into sin

difference for box regression loss.

dir_offset (float | int): The offset of BEV rotation angles.

(TODO: may be moved into box coder)

dir_limit_offset (float | int): The limited range of BEV

rotation angles. (TODO: may be moved into box coder)

bbox_coder (dict): Config dict of box coders.

loss_cls (dict): Config of classification loss.

loss_bbox (dict): Config of localization loss.

loss_dir (dict): Config of direction classifier loss.

train_cfg (dict): Train configs.

test_cfg (dict): Test configs.

init_cfg (dict or list[dict], optional): Initialization config dict.

"""

def __init__(self,

num_classes: int,

in_channels: int,

feat_channels: int = 256,

use_direction_classifier: bool = True,

anchor_generator: ConfigType = dict(

type='Anchor3DRangeGenerator',

range=[0, -39.68, -1.78, 69.12, 39.68, -1.78],

strides=[2],

sizes=[[3.9, 1.6, 1.56]],

rotations=[0, 1.57],

custom_values=[],

reshape_out=False),

assigner_per_size: bool = False,

assign_per_class: bool = False,

diff_rad_by_sin: bool = True,

dir_offset: float = -np.pi / 2,

dir_limit_offset: int = 0,

bbox_coder: ConfigType = dict(type='DeltaXYZWLHRBBoxCoder'),

loss_cls: ConfigType = dict(

type='mmdet.CrossEntropyLoss',

use_sigmoid=True,

loss_weight=1.0),

loss_bbox: ConfigType = dict(

type='mmdet.SmoothL1Loss',

beta=1.0 / 9.0,

loss_weight=2.0),

loss_dir: ConfigType = dict(

type='mmdet.CrossEntropyLoss', loss_weight=0.2),

train_cfg: OptConfigType = None,

test_cfg: OptConfigType = None,

init_cfg: OptConfigType = None) -> None:

super().__init__(init_cfg=init_cfg)

self.in_channels = in_channels

self.num_classes = num_classes

self.feat_channels = feat_channels

self.diff_rad_by_sin = diff_rad_by_sin

self.use_direction_classifier = use_direction_classifier

self.train_cfg = train_cfg

self.test_cfg = test_cfg

self.assigner_per_size = assigner_per_size

self.assign_per_class = assign_per_class

self.dir_offset = dir_offset

self.dir_limit_offset = dir_limit_offset

warnings.warn(

'dir_offset and dir_limit_offset will be depressed and be '

'incorporated into box coder in the future')

self.fp16_enabled = False

# build anchor generator

self.prior_generator = TASK_UTILS.build(anchor_generator)

# In 3D detection, the anchor stride is connected with anchor size

self.num_anchors = self.prior_generator.num_base_anchors

# build box coder

self.bbox_coder = TASK_UTILS.build(bbox_coder)

self.box_code_size = self.bbox_coder.code_size

# build loss function

self.use_sigmoid_cls = loss_cls.get('use_sigmoid', False)

self.sampling = loss_cls['type'] not in [

'mmdet.FocalLoss', 'mmdet.GHMC'

]

if not self.use_sigmoid_cls:

self.num_classes += 1

self.loss_cls = MODELS.build(loss_cls)

self.loss_bbox = MODELS.build(loss_bbox)

self.loss_dir = MODELS.build(loss_dir)

self.fp16_enabled = False

self._init_layers()

self._init_assigner_sampler()

if init_cfg is None:

self.init_cfg = dict(

type='Normal',

layer='Conv2d',

std=0.01,

override=dict(

type='Normal', name='conv_cls', std=0.01, bias_prob=0.01))

def _init_assigner_sampler(self):

"""Initialize the target assigner and sampler of the head."""

if self.train_cfg is None:

return

if self.sampling:

self.bbox_sampler = TASK_UTILS.build(self.train_cfg.sampler)

else:

self.bbox_sampler = PseudoSampler()

if isinstance(self.train_cfg.assigner, dict):

self.bbox_assigner = TASK_UTILS.build(self.train_cfg.assigner)

elif isinstance(self.train_cfg.assigner, list):

self.bbox_assigner = [

TASK_UTILS.build(res) for res in self.train_cfg.assigner

]

def _init_layers(self):

"""Initialize neural network layers of the head."""

self.cls_out_channels = self.num_anchors * self.num_classes

self.conv_cls = nn.Conv2d(self.feat_channels, self.cls_out_channels, 1)

self.conv_reg = nn.Conv2d(self.feat_channels,

self.num_anchors * self.box_code_size, 1)

if self.use_direction_classifier:

self.conv_dir_cls = nn.Conv2d(self.feat_channels,

self.num_anchors * 2, 1)

def forward_single(self, x: Tensor) -> Tuple[Tensor, Tensor, Tensor]:

"""Forward function on a single-scale feature map.

Args:

x (Tensor): Features of a single scale level.

Returns:

tuple:

cls_score (Tensor): Cls scores for a single scale level

the channels number is num_base_priors * num_classes.

bbox_pred (Tensor): Box energies / deltas for a single scale

level, the channels number is num_base_priors * C.

dir_cls_pred (Tensor | None): Direction classification

prediction for a single scale level, the channels

number is num_base_priors * 2.

"""

cls_score = self.conv_cls(x)

bbox_pred = self.conv_reg(x)

dir_cls_pred = None

if self.use_direction_classifier:

dir_cls_pred = self.conv_dir_cls(x)

return cls_score, bbox_pred, dir_cls_pred

def forward(self, x: Tuple[Tensor]) -> Tuple[List[Tensor]]:

"""Forward pass.

Args:

x (tuple[Tensor]): Features from the upstream network,

each is a 4D-tensor.

Returns:

tuple: A tuple of classification scores, bbox and direction

classification prediction.

- cls_scores (list[Tensor]): Classification scores for all

scale levels, each is a 4D-tensor, the channels number

is num_base_priors * num_classes.

- bbox_preds (list[Tensor]): Box energies / deltas for all

scale levels, each is a 4D-tensor, the channels number

is num_base_priors * C.

- dir_cls_preds (list[Tensor|None]): Direction classification

predictions for all scale levels, each is a 4D-tensor,

the channels number is num_base_priors * 2.

"""

return multi_apply(self.forward_single, x)

# TODO: Support augmentation test

def aug_test(self,

aug_batch_feats,

aug_batch_input_metas,

rescale=False,

**kwargs):

aug_bboxes = []

# only support aug_test for one sample

for x, input_meta in zip(aug_batch_feats, aug_batch_input_metas):

outs = self.forward(x)

bbox_list = self.get_results(*outs, [input_meta], rescale=rescale)

bbox_dict = dict(

bboxes_3d=bbox_list[0].bboxes_3d,

scores_3d=bbox_list[0].scores_3d,

labels_3d=bbox_list[0].labels_3d)

aug_bboxes.append(bbox_dict)

# after merging, bboxes will be rescaled to the original image size

merged_bboxes = merge_aug_bboxes_3d(aug_bboxes, aug_batch_input_metas,

self.test_cfg)

return [merged_bboxes]

def get_anchors(self,

featmap_sizes: List[tuple],

input_metas: List[dict],

device: str = 'cuda') -> list:

"""Get anchors according to feature map sizes.

Args:

featmap_sizes (list[tuple]): Multi-level feature map sizes.

input_metas (list[dict]): contain pcd and img's meta info.

device (str): device of current module.

Returns:

list[list[torch.Tensor]]: Anchors of each image, valid flags

of each image.

"""

num_imgs = len(input_metas)

# since feature map sizes of all images are the same, we only compute

# anchors for one time

multi_level_anchors = self.prior_generator.grid_anchors(

featmap_sizes, device=device)

anchor_list = [multi_level_anchors for _ in range(num_imgs)]

return anchor_list

def _loss_by_feat_single(self, cls_score: Tensor, bbox_pred: Tensor,

dir_cls_pred: Tensor, labels: Tensor,

label_weights: Tensor, bbox_targets: Tensor,

bbox_weights: Tensor, dir_targets: Tensor,

dir_weights: Tensor, num_total_samples: int):

"""Calculate loss of Single-level results.

Args:

cls_score (Tensor): Class score in single-level.

bbox_pred (Tensor): Bbox prediction in single-level.

dir_cls_pred (Tensor): Predictions of direction class

in single-level.

labels (Tensor): Labels of class.

label_weights (Tensor): Weights of class loss.

bbox_targets (Tensor): Targets of bbox predictions.

bbox_weights (Tensor): Weights of bbox loss.

dir_targets (Tensor): Targets of direction predictions.

dir_weights (Tensor): Weights of direction loss.

num_total_samples (int): The number of valid samples.

Returns:

tuple[torch.Tensor]: Losses of class, bbox

and direction, respectively.

"""

# classification loss

if num_total_samples is None:

num_total_samples = int(cls_score.shape[0])

labels = labels.reshape(-1)

label_weights = label_weights.reshape(-1)

cls_score = cls_score.permute(0, 2, 3, 1).reshape(-1, self.num_classes)

assert labels.max().item() <= self.num_classes

loss_cls = self.loss_cls(

cls_score, labels, label_weights, avg_factor=num_total_samples)

# regression loss

bbox_pred = bbox_pred.permute(0, 2, 3,

1).reshape(-1, self.box_code_size)

bbox_targets = bbox_targets.reshape(-1, self.box_code_size)

bbox_weights = bbox_weights.reshape(-1, self.box_code_size)

bg_class_ind = self.num_classes

pos_inds = ((labels >= 0)

& (labels < bg_class_ind)).nonzero(

as_tuple=False).reshape(-1)

num_pos = len(pos_inds)

pos_bbox_pred = bbox_pred[pos_inds]

pos_bbox_targets = bbox_targets[pos_inds]

pos_bbox_weights = bbox_weights[pos_inds]

# dir loss

if self.use_direction_classifier:

dir_cls_pred = dir_cls_pred.permute(0, 2, 3, 1).reshape(-1, 2)

dir_targets = dir_targets.reshape(-1)

dir_weights = dir_weights.reshape(-1)

pos_dir_cls_pred = dir_cls_pred[pos_inds]

pos_dir_targets = dir_targets[pos_inds]

pos_dir_weights = dir_weights[pos_inds]

if num_pos > 0:

code_weight = self.train_cfg.get('code_weight', None)

if code_weight:

pos_bbox_weights = pos_bbox_weights * bbox_weights.new_tensor(

code_weight)

if self.diff_rad_by_sin:

pos_bbox_pred, pos_bbox_targets = self.add_sin_difference(

pos_bbox_pred, pos_bbox_targets)

loss_bbox = self.loss_bbox(

pos_bbox_pred,

pos_bbox_targets,

pos_bbox_weights,

avg_factor=num_total_samples)

# direction classification loss

loss_dir = None

if self.use_direction_classifier:

loss_dir = self.loss_dir(

pos_dir_cls_pred,

pos_dir_targets,

pos_dir_weights,

avg_factor=num_total_samples)

else:

loss_bbox = pos_bbox_pred.sum()

if self.use_direction_classifier:

loss_dir = pos_dir_cls_pred.sum()

return loss_cls, loss_bbox, loss_dir

@staticmethod

def add_sin_difference(boxes1: Tensor, boxes2: Tensor) -> tuple:

"""Convert the rotation difference to difference in sine function.

Args:

boxes1 (torch.Tensor): Original Boxes in shape (NxC), where C>=7

and the 7th dimension is rotation dimension.

boxes2 (torch.Tensor): Target boxes in shape (NxC), where C>=7 and

the 7th dimension is rotation dimension.

Returns:

tuple[torch.Tensor]: ``boxes1`` and ``boxes2`` whose 7th

dimensions are changed.

"""

rad_pred_encoding = torch.sin(boxes1[..., 6:7]) * torch.cos(

boxes2[..., 6:7])

rad_tg_encoding = torch.cos(boxes1[..., 6:7]) * torch.sin(boxes2[...,

6:7])

boxes1 = torch.cat(

[boxes1[..., :6], rad_pred_encoding, boxes1[..., 7:]], dim=-1)

boxes2 = torch.cat([boxes2[..., :6], rad_tg_encoding, boxes2[..., 7:]],

dim=-1)

return boxes1, boxes2

def loss_by_feat(

self,

cls_scores: List[Tensor],

bbox_preds: List[Tensor],

dir_cls_preds: List[Tensor],

batch_gt_instances_3d: InstanceList,

batch_input_metas: List[dict],

batch_gt_instances_ignore: OptInstanceList = None) -> dict:

"""Calculate the loss based on the features extracted by the detection

head.

Args:

cls_scores (list[torch.Tensor]): Multi-level class scores.

bbox_preds (list[torch.Tensor]): Multi-level bbox predictions.

dir_cls_preds (list[torch.Tensor]): Multi-level direction

class predictions.

batch_gt_instances_3d (list[:obj:`InstanceData`]): Batch of

gt_instances. It usually includes ``bboxes_3d``

and ``labels_3d`` attributes.

batch_input_metas (list[dict]): Contain pcd and img's meta info.

batch_gt_instances_ignore (list[:obj:`InstanceData`], optional):

Batch of gt_instances_ignore. It includes ``bboxes`` attribute

data that is ignored during training and testing.

Defaults to None.

Returns:

dict[str, list[torch.Tensor]]: Classification, bbox, and

direction losses of each level.

- loss_cls (list[torch.Tensor]): Classification losses.

- loss_bbox (list[torch.Tensor]): Box regression losses.

- loss_dir (list[torch.Tensor]): Direction classification

losses.

"""

featmap_sizes = [featmap.size()[-2:] for featmap in cls_scores]

assert len(featmap_sizes) == self.prior_generator.num_levels

device = cls_scores[0].device

anchor_list = self.get_anchors(

featmap_sizes, batch_input_metas, device=device)

label_channels = self.cls_out_channels if self.use_sigmoid_cls else 1

cls_reg_targets = self.anchor_target_3d(

anchor_list,

batch_gt_instances_3d,

batch_input_metas,

batch_gt_instances_ignore=batch_gt_instances_ignore,

num_classes=self.num_classes,

label_channels=label_channels,

sampling=self.sampling)

if cls_reg_targets is None:

return None

(labels_list, label_weights_list, bbox_targets_list, bbox_weights_list,

dir_targets_list, dir_weights_list, num_total_pos,

num_total_neg) = cls_reg_targets

num_total_samples = (

num_total_pos + num_total_neg if self.sampling else num_total_pos)

# num_total_samples = None

losses_cls, losses_bbox, losses_dir = multi_apply(

self._loss_by_feat_single,

cls_scores,

bbox_preds,

dir_cls_preds,

labels_list,

label_weights_list,

bbox_targets_list,

bbox_weights_list,

dir_targets_list,

dir_weights_list,

num_total_samples=num_total_samples)

return dict(

loss_cls=losses_cls, loss_bbox=losses_bbox, loss_dir=losses_dir)

- 第198行代码,

调用 self.forward_single 函数处理 x;

- 第170行代码,

调用 self.conv_cls = nn.Conv2d(self.feat_channels, self.cls_out_channels, 1) 一个二维卷积层处理 x 来得到 cls_score;

- 第171行代码,

调用 self.conv_reg = nn.Conv2d(self.feat_channels, self.num_anchors * self.box_code_size, 1) 一个二维卷积层处理 x 来得到 bbox_pred;

- 第174行代码,

调用 self.conv_dir_cls = nn.Conv2d(self.feat_channels, self.num_anchors * 2, 1)一个二维卷积层处理 x 来得到 dir_cls_pred;

- 第175行代码,

返回得到的 cls_score, bbox_pred, dir_cls_pred 给前面调用的函数; - 第198行代码,

调用 self.forward_single 函数处理 x 结束;

至此,我们的 网络结构篇 完满结束,接下来将进入到我们 PointPillars 的损失计算部分。

3万+

3万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?