小觅双目深度版性能分析

今年(18年)11月9号小觅智能科技的深度版双目相机上市,于是我在12月初花了2999软妹币购买了120度视角的相机。

其中我比较感兴趣的是 双目+惯导+结构光 的多传感器融合,这样跑单目,双目,RGBD,VIO都没问题。

关于深度计算

内置了一颗“深度计算芯片”,可以在设备端完成双目深度计算,通常双目深度计算还是很耗时间的,之前的标准版需要性能很强的计算平台才能实时获得深度图。双目的最大测量距离在20米。

关于IMU

内部采用博世的MBI088高性能惯性测量单元,由一个三轴16位加速度传感器和一个三轴16位陀螺仪组成。这款IMU可在温度波动环境下提供低噪音和低漂移特性。非常适合在高振动环境下使用。这一款IMU还是比较先进的。

关于IR红外

采用红外主动光技术,在黑暗的环境中通过投射出红外结构光到物体上,然后反射回到相机感光芯片中(双目的感光芯片的可识别波段包括红外,具体波段不清楚)。第二个作用是由于我们获取深度图是通过双目计算得到的,不像kinect通过TOF技术获取深度图,单独使用双目测距技术无法获取类似白墙的深度信息,小觅采用的红外主动光技术可以在白墙上投射一些云纹,这种人为的制造纹理的方法可以让双目通过匹配计算出白墙的深度信息。

其他特点

提供了可选择的帧率,分辨率大小等,RGB全彩色,相机支持双目同步曝光和全局曝光,还有一个测距功能。最高支持帧率为80fps,识别最远距离为20m,精度为mm到cm级别。

安装好相关的SDK,进行VIO测试。

VIO测试

修改相机配置

打开 MYNT-EYE-D-SDK/wrappers/ros/src/mynteye_wrapper_d/launch路径下的 mynteye.launch 文件

里面可以修改一些默认参数。

我修改了其中部分数据

<arg name="framerate" default="30" />

<arg name="dev_index" default="0" />

<arg name="color_mode" default="$(arg color_raw)" />

<arg name="depth_mode" default="$(arg depth_raw)" />

<arg name="stream_mode" default="$(arg stream_1280x720)" />

修改VINS-Mono

第一步:在config文件下建立一个名为mynteye的文件夹,添加mynteye_config.yaml文件。

第二步:新建一个mynteye.launch文件。参考euroc.launch修改即可。

imu_topic订阅Image话题 /mynteye/left/image_color

imu_topic订阅IMU话题 /mynteye/imu/data_raw

image_width和image_height修改为对应的大小

projection_parameters和distortion_parameters 按照获取的image_params.params对应修改

output_path和pose_graph_save_path 保存路径和输出路径改为自己对应的路径下

extrinsicRotation和extrinsicTranslation 按照获取的imu_params.params对应修改,这两个矩阵代表IMU和相机之间的位姿关系。

下面是详细的代码

%YAML:1.0

#common parameters

imu_topic: "/mynteye/imu/data_raw"

imu_topic: "/mynteye/left/image_color"

output_path: "/home/wpr/catkin_vins/src/VINS-Mono/config/output_path/"

#camera calibration

model_type: PINHOLE

camera_name: camera

image_width: 1280

image_height: 720

distortion_parameters:

k1: -0.29416275024414062

k2: 0.07796478271484375

p1: -0.00074768066406250

p2: 0.00035476684570312

projection_parameters:

fx: 700.96905517578125000

fy: 701.55493164062500000

cx: 642.62341308593750000

cy: 341.66424560546875000

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.

# 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

# 2 Don't know anything about extrinsic parameters. You don't need to give R,T. We will try to calibrate it. Do some rotation movement at beginning.

#If you choose 0 or 1, you should write down the following matrix.

#Rotation from camera frame to imu frame, imu^R_cam

extrinsicRotation: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [0.99996651999999997, 0.00430873000000000, 0.00695718000000000, 0.00434878000000000, -0.99997400999999997, -0.00575128000000000, 0.00693222000000000, 0.00578135000000000, -0.99995926000000002]

#Translation from camera frame to imu frame, imu^T_cam

extrinsicTranslation: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [-0.04777362000000000108, -0.00223730999999999991, -0.00160071000000000008]

#feature traker paprameters

max_cnt: 150 # max feature number in feature tracking

min_dist: 30 # min distance between two features

freq: 10 # frequence (Hz) of publish tracking result. At least 10Hz for good estimation. If set 0, the frequence will be same as raw image

F_threshold: 1.0 # ransac threshold (pixel)

show_track: 1 # publish tracking image as topic

equalize: 1 # if image is too dark or light, trun on equalize to find enough features

fisheye: 0 # if using fisheye, trun on it. A circle mask will be loaded to remove edge noisy points

#optimization parameters

max_solver_time: 0.04 # max solver itration time (ms), to guarantee real time

max_num_iterations: 8 # max solver itrations, to guarantee real time

keyframe_parallax: 10.0 # keyframe selection threshold (pixel)

#imu parameters The more accurate parameters you provide, the better performance

acc_n: 0.08 # accelerometer measurement noise standard deviation. #0.2 0.04

gyr_n: 0.004 # gyroscope measurement noise standard deviation. #0.05 0.004

acc_w: 0.00004 # accelerometer bias random work noise standard deviation. #0.02

gyr_w: 2.0e-6 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.81007 # gravity magnitude

#loop closure parameters

loop_closure: 1 # start loop closure

load_previous_pose_graph: 0 # load and reuse previous pose graph; load from 'pose_graph_save_path'

fast_relocalization: 0 # useful in real-time and large project

pose_graph_save_path: "/home/wpr/catkin_vins/src/VINS-Mono/config/output_path/" # save and load path

#unsynchronization parameters

estimate_td: 0 # online estimate time offset between camera and imu

td: 0.0 # initial value of time offset. unit: s. readed image clock + td = real image clock (IMU clock)

#rolling shutter parameters

rolling_shutter: 0 # 0: global shutter camera, 1: rolling shutter camera

rolling_shutter_tr: 0 # unit: s. rolling shutter read out time per frame (from data sheet).

#visualization parameters

save_image: 1 # save image in pose graph for visualization prupose; you can close this function by setting 0

visualize_imu_forward: 0 # output imu forward propogation to achieve low latency and high frequence results

visualize_camera_size: 0.4 # size of camera marker in RVIZ

<launch>

<arg name="config_path" default = "$(find feature_tracker)/../config/mynteye/mynteye_config.yaml" />

<arg name="vins_path" default = "$(find feature_tracker)/../config/../" />

<node name="feature_tracker" pkg="feature_tracker" type="feature_tracker" output="log">

<param name="config_file" type="string" value="$(arg config_path)" />

<param name="vins_folder" type="string" value="$(arg vins_path)" />

</node>

<node name="vins_estimator" pkg="vins_estimator" type="vins_estimator" output="screen">

<param name="config_file" type="string" value="$(arg config_path)" />

<param name="vins_folder" type="string" value="$(arg vins_path)" />

</node>

<node name="pose_graph" pkg="pose_graph" type="pose_graph" output="screen">

<param name="config_file" type="string" value="$(arg config_path)" />

<param name="visualization_shift_x" type="int" value="0" />

<param name="visualization_shift_y" type="int" value="0" />

<param name="skip_cnt" type="int" value="0" />

<param name="skip_dis" type="double" value="0" />

</node>

</launch>

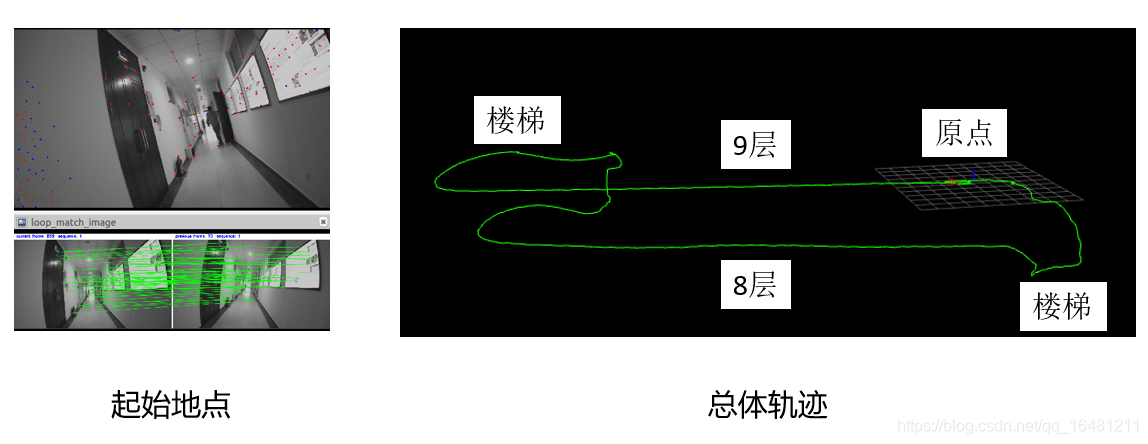

运行VINS测试轨迹

source ./wrappers/ros/devel/setup.bash

roslaunch mynteye_wrapper_d mynteye.launch

roslaunch vins_estimator mynteye.launch

roslaunch vins_estimator vins_rviz.launch

运行中遇到的一些小问题以及解决方法:

一开始由于IMU的extrinsicTranslation和extrinsicRotation写错,导致IMU初始化后就立马跑飞,轨迹图很杂乱,小觅sdk生成的矩阵的元素单位为豪米,配置文件为米,导致差别特别大,修改之后就不会乱飘啦。

另外初始化的时候尽量对着特征点比较多的方向,否则初始化生成的不好的参数会影响后面的效果。

测试结果

1552

1552

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?