记录|深度学习100例-卷积神经网络(CNN)minist数字分类 | 第2天

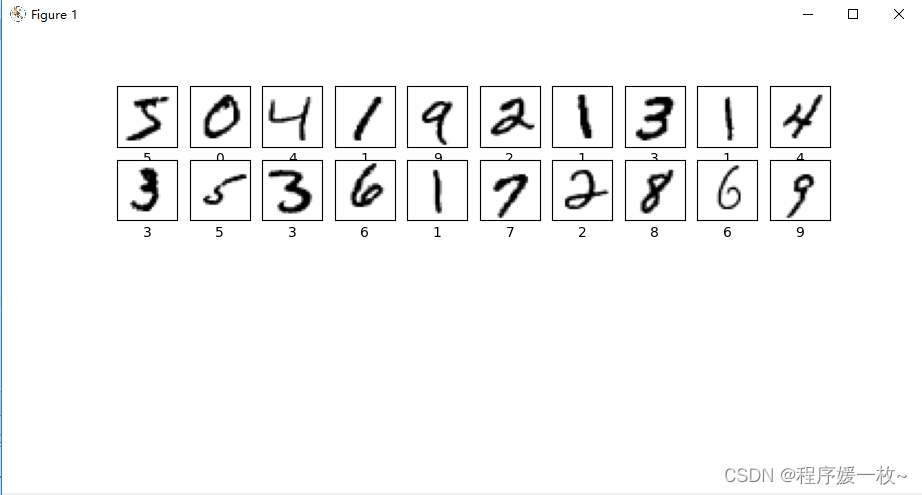

1. minist0-9数字分类效果图

数据集如下:

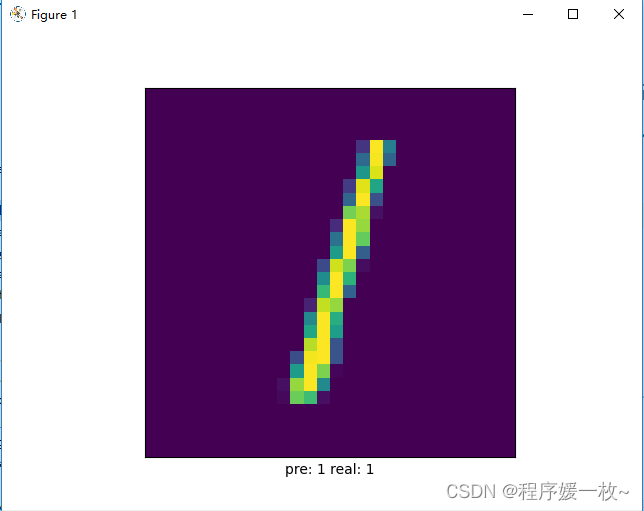

分类及预测图如下:预测标签值和真实标签值如下图所示,成功预测

分类及预测图如下:预测标签值和真实标签值如下图所示,成功预测

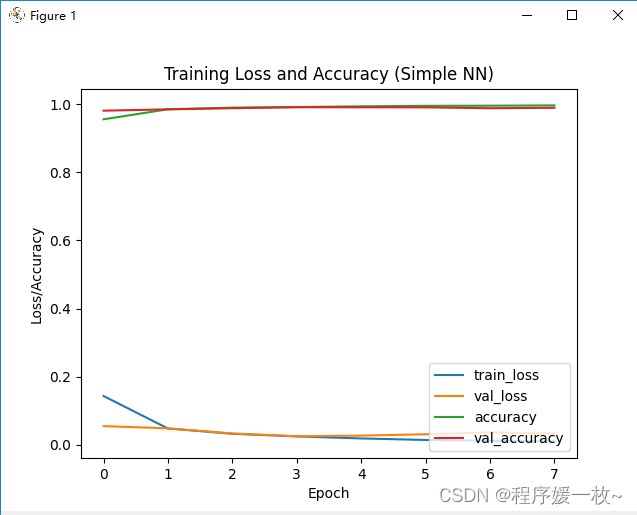

训练Loss/Accuracy图如下:

源码

# 深度学习100例-卷积神经网络(CNN)实现mnist手写数字识别 | 第1天

# USAGE

# python img_digit1.py

import matplotlib.pyplot as plt

import numpy as np

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

gpus = tf.config.list_physical_devices("GPU")

if gpus:

gpu0 = gpus[0] # 如果有多个GPU,仅使用第0个GPU

tf.config.experimental.set_memory_growth(gpu0, True) # 设置GPU显存用量按需使用

tf.config.set_visible_devices([gpu0], "GPU")

# 导入数据

(train_images, train_labels), (test_images, test_labels) = datasets.mnist.load_data()

# 将像素的值标准化至0到1的区间内。

train_images, test_images = train_images / 255.0, test_images / 255.0

print(train_images.shape, test_images.shape, train_labels.shape, test_labels.shape)

# 调整数据到我们需要的格式

train_images = train_images.reshape((60000, 28, 28, 1))

test_images = test_images.reshape((10000, 28, 28, 1))

print(train_images.shape, test_images.shape, train_labels.shape, test_labels.shape)

# 可视化

plt.figure(figsize=(20, 10))

for i in range(20):

plt.subplot(5, 10, i + 1)

plt.xticks([])

plt.yticks([])

plt.grid(False)

plt.imshow(train_images[i], cmap=plt.cm.binary)

plt.xlabel(train_labels[i])

plt.show()

# 构建网络

model = models.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)), # 卷积层1,卷积核3*3

layers.MaxPooling2D((2, 2)), # 池化层1,2*2采样

layers.Conv2D(64, (3, 3), activation='relu'), # 卷积层2,卷积核3*3

layers.MaxPooling2D((2, 2)), # 池化层2,2*2采样

layers.Flatten(), # Flatten层,连接卷积层与全连接层

layers.Dense(64, activation='relu'), # 全连接层,特征进一步提取

layers.Dense(10) # 输出层,输出预期结果

])

# 打印网络结构

model.summary()

# 编译模型

"""

设置优化器、损失函数以及metrics

这三者具体介绍可参考:https://blog.csdn.net/qq_38251616/category_10258234.html

"""

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

# 训练模型

"""

这里设置输入训练数据集(图片及标签)、验证数据集(图片及标签)以及迭代次数epochs

关于model.fit()函数的具体介绍可参考:https://blog.csdn.net/qq_38251616/category_10258234.html

"""

history = model.fit(train_images, train_labels, epochs=8,

validation_data=(test_images, test_labels))

pre = model.predict(test_images)

print('pre: ' + str(np.argmax(pre[2])) + ' real: ' + str(test_labels[2]))

plt.imshow(test_images[2])

plt.xticks([])

plt.yticks([])

plt.xlabel('pre: ' + str(np.argmax(pre[2])) + ' real: ' + str(test_labels[2]))

plt.show()

plt.plot(history.history["loss"], label="train_loss")

plt.plot(history.history["val_loss"], label="val_loss")

plt.plot(history.history['accuracy'], label='accuracy')

plt.plot(history.history['val_accuracy'], label='val_accuracy')

plt.title("Training Loss and Accuracy (Simple NN)")

plt.xlabel('Epoch')

plt.ylabel('Loss/Accuracy')

# plt.ylim([0.5, 1])

plt.legend(loc='lower right')

plt.show()

test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)

print(test_acc)

9万+

9万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?