①yolov7的默认锚框

yolov7的默认锚框都在 .\cfg\training 的yaml文件里面,比如yolov7-tiny.yaml

# anchors

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32其中, 每一行代表应用不同的特征图;一行最大,二行中等,三行最小。

②自定义锚框

yolov5和yolov7带有自适应锚框

具体代码在 ..\utils\autoanchor.py 文件里面,

代码如下:

1.训练时自动计算锚框:

def check_anchors(dataset, model, thr=4.0, imgsz=640):

# Check anchor fit to data, recompute if necessary

prefix = colorstr('autoanchor: ')

print(f'\n{prefix}Analyzing anchors... ', end='')

m = model.module.model[-1] if hasattr(model, 'module') else model.model[-1] # Detect()

shapes = imgsz * dataset.shapes / dataset.shapes.max(1, keepdims=True)

scale = np.random.uniform(0.9, 1.1, size=(shapes.shape[0], 1)) # augment scale

wh = torch.tensor(np.concatenate([l[:, 3:5] * s for s, l in zip(shapes * scale, dataset.labels)])).float() # wh

def metric(k): # compute metric

r = wh[:, None] / k[None]

x = torch.min(r, 1. / r).min(2)[0] # ratio metric

best = x.max(1)[0] # best_x

aat = (x > 1. / thr).float().sum(1).mean() # anchors above threshold

bpr = (best > 1. / thr).float().mean() # best possible recall

return bpr, aat

anchors = m.anchor_grid.clone().cpu().view(-1, 2) # current anchors

bpr, aat = metric(anchors)

print(f'anchors/target = {aat:.2f}, Best Possible Recall (BPR) = {bpr:.4f}', end='')

if bpr < 0.98: # threshold to recompute

print('. Attempting to improve anchors, please wait...')

na = m.anchor_grid.numel() // 2 # number of anchors

try:

anchors = kmean_anchors(dataset, n=na, img_size=imgsz, thr=thr, gen=1000, verbose=False)

except Exception as e:

print(f'{prefix}ERROR: {e}')

new_bpr = metric(anchors)[0]

if new_bpr > bpr: # replace anchors

anchors = torch.tensor(anchors, device=m.anchors.device).type_as(m.anchors)

m.anchor_grid[:] = anchors.clone().view_as(m.anchor_grid) # for inference

check_anchor_order(m)

m.anchors[:] = anchors.clone().view_as(m.anchors) / m.stride.to(m.anchors.device).view(-1, 1, 1) # loss

print(f'{prefix}New anchors saved to model. Update model *.yaml to use these anchors in the future.')

else:

print(f'{prefix}Original anchors better than new anchors. Proceeding with original anchors.')

print('') # newline其中的主要参数是 bpr和aat

其中bpr 参数就是判断是否需要重新计算锚定框的依据(是否小于 0.98)。

2.重新计算符合数据集的锚框代码如下:

def kmean_anchors(path='./data/coco.yaml', n=9, img_size=640, thr=4.0, gen=1000, verbose=True):

""" Creates kmeans-evolved anchors from training dataset

Arguments:

path: path to dataset *.yaml, or a loaded dataset

n: number of anchors

img_size: image size used for training

thr: anchor-label wh ratio threshold hyperparameter hyp['anchor_t'] used for training, default=4.0

gen: generations to evolve anchors using genetic algorithm

verbose: print all results

Return:

k: kmeans evolved anchors

Usage:

from utils.autoanchor import *; _ = kmean_anchors()

"""

thr = 1. / thr

prefix = colorstr('autoanchor: ')

def metric(k, wh): # compute metrics

r = wh[:, None] / k[None]

x = torch.min(r, 1. / r).min(2)[0] # ratio metric

# x = wh_iou(wh, torch.tensor(k)) # iou metric

return x, x.max(1)[0] # x, best_x

def anchor_fitness(k): # mutation fitness

_, best = metric(torch.tensor(k, dtype=torch.float32), wh)

return (best * (best > thr).float()).mean() # fitness

def print_results(k):

k = k[np.argsort(k.prod(1))] # sort small to large

x, best = metric(k, wh0)

bpr, aat = (best > thr).float().mean(), (x > thr).float().mean() * n # best possible recall, anch > thr

print(f'{prefix}thr={thr:.2f}: {bpr:.4f} best possible recall, {aat:.2f} anchors past thr')

print(f'{prefix}n={n}, img_size={img_size}, metric_all={x.mean():.3f}/{best.mean():.3f}-mean/best, '

f'past_thr={x[x > thr].mean():.3f}-mean: ', end='')

for i, x in enumerate(k):

print('%i,%i' % (round(x[0]), round(x[1])), end=', ' if i < len(k) - 1 else '\n') # use in *.cfg

return k

if isinstance(path, str): # *.yaml file

with open(path) as f:

data_dict = yaml.load(f, Loader=yaml.SafeLoader) # model dict

from utils.datasets import LoadImagesAndLabels

dataset = LoadImagesAndLabels(data_dict['train'], augment=True, rect=True)

else:

dataset = path # dataset

# Get label wh

shapes = img_size * dataset.shapes / dataset.shapes.max(1, keepdims=True)

wh0 = np.concatenate([l[:, 3:5] * s for s, l in zip(shapes, dataset.labels)]) # wh

# Filter

i = (wh0 < 3.0).any(1).sum()

if i:

print(f'{prefix}WARNING: Extremely small objects found. {i} of {len(wh0)} labels are < 3 pixels in size.')

wh = wh0[(wh0 >= 2.0).any(1)] # filter > 2 pixels

# wh = wh * (np.random.rand(wh.shape[0], 1) * 0.9 + 0.1) # multiply by random scale 0-1

# Kmeans calculation

print(f'{prefix}Running kmeans for {n} anchors on {len(wh)} points...')

s = wh.std(0) # sigmas for whitening

k, dist = kmeans(wh / s, n, iter=30) # points, mean distance

assert len(k) == n, print(f'{prefix}ERROR: scipy.cluster.vq.kmeans requested {n} points but returned only {len(k)}')

k *= s

wh = torch.tensor(wh, dtype=torch.float32) # filtered

wh0 = torch.tensor(wh0, dtype=torch.float32) # unfiltered

k = print_results(k)

# Plot

# k, d = [None] * 20, [None] * 20

# for i in tqdm(range(1, 21)):

# k[i-1], d[i-1] = kmeans(wh / s, i) # points, mean distance

# fig, ax = plt.subplots(1, 2, figsize=(14, 7), tight_layout=True)

# ax = ax.ravel()

# ax[0].plot(np.arange(1, 21), np.array(d) ** 2, marker='.')

# fig, ax = plt.subplots(1, 2, figsize=(14, 7)) # plot wh

# ax[0].hist(wh[wh[:, 0]<100, 0],400)

# ax[1].hist(wh[wh[:, 1]<100, 1],400)

# fig.savefig('wh.png', dpi=200)

# Evolve

npr = np.random

f, sh, mp, s = anchor_fitness(k), k.shape, 0.9, 0.1 # fitness, generations, mutation prob, sigma

pbar = tqdm(range(gen), desc=f'{prefix}Evolving anchors with Genetic Algorithm:') # progress bar

for _ in pbar:

v = np.ones(sh)

while (v == 1).all(): # mutate until a change occurs (prevent duplicates)

v = ((npr.random(sh) < mp) * npr.random() * npr.randn(*sh) * s + 1).clip(0.3, 3.0)

kg = (k.copy() * v).clip(min=2.0)

fg = anchor_fitness(kg)

if fg > f:

f, k = fg, kg.copy()

pbar.desc = f'{prefix}Evolving anchors with Genetic Algorithm: fitness = {f:.4f}'

if verbose:

print_results(k)

return print_results(k)对 kmean_anchors()函数中的参数做一下简单解释(代码中已经有了英文注释):

🔆path:包含数据集文件路径等相关信息的 yaml 文件(比如 coco128.yaml), 或者 数据集张量(yolov5 自动计算锚定框时就是用的这种方式,先把数据集标签信息读取再处理)

🔆n:锚定框的数量,即有几组;默认值是9

🔆img_size:图像尺寸。计算数据集样本标签框的宽高比时,是需要缩放到 img_size 大小后再计算的;默认值是640

🔆thr:数据集中标注框宽高比最大阈值,默认是使用 超参文件 hyp.scratch.yaml 中的 “anchor_t” 参数值;默认值是4.0;自动计算时,会自动根据你所使用的数据集,来计算合适的阈值。

🔆gen:kmean聚类算法迭代次数,默认值是1000

🔆verbose:是否打印输出所有计算结果,默认值是true。

不自动计算锚框可以在训练时设置 default=False

parser.add_argument('--noautoanchor', action='store_true', help='disable autoan③如果bpr小于0.98,没有自动计算锚框,可以手动计算锚框

新建一个py文件,手动计算锚框:

# 从utils.autoanchor 导入kmean_anchors函数

import utils.autoanchor as autoAnchor

'''

path: 储存yaml文件路径,yaml文件中应包含数据集文件路径

n : 生成锚框的数量

img_size : 图片分辨率尺寸,需要将图片缩放到img_size大小尺寸后再进行锚框计算

thr : 数据集中标注框宽高比最大阈值,默认是使用 超参文件 hyp.scratch.yaml 中的 “anchor_t” 参数值;默认值是 4.0;自动计算时,会自动根据你所使用的数据集,来计算合适的阈值。

gen : kmean 聚类算法迭代次数,默认值是 1000

verbose : 打印所有结果

'''

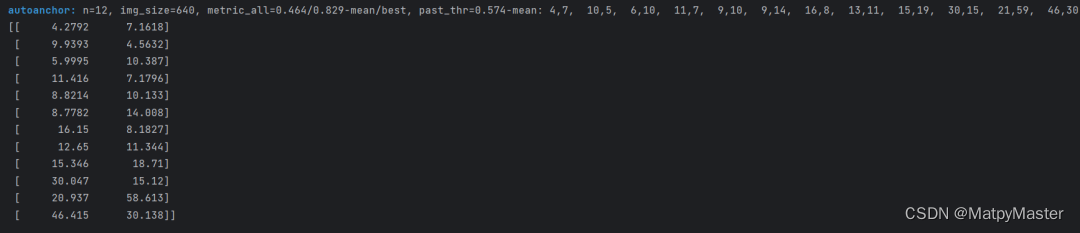

new_anchors = autoAnchor.kmean_anchors(path=r'D:\yolov7-main\data\K-means.yaml', n=12, img_size=640, thr=3, gen=1000, verbose=False)

print(new_anchors)

最后:

如果你想要进一步了解更多的相关知识,可以关注下面公众号联系~会不定期发布相关设计内容包括但不限于如下内容:信号处理、通信仿真、算法设计、matlab appdesigner,gui设计、simulink仿真......希望能帮到你!

6809

6809

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?