Ceres实现位姿优化代码详解(基于BA求解pnp问题)

Ceres计算最小化重投影误差:重载operator()

-

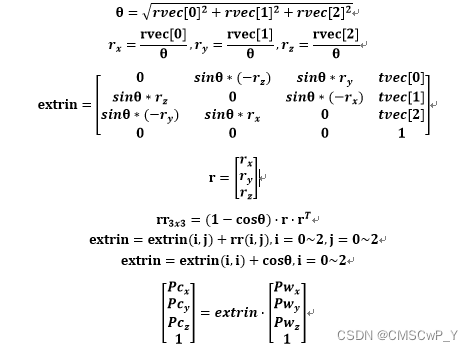

对3D坐标Pw进行坐标变换,换算到相机坐标系下

-

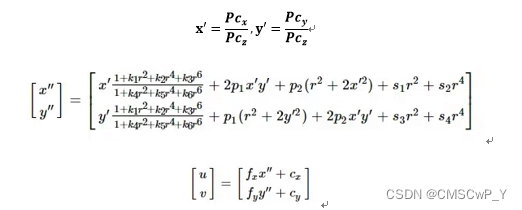

将3D坐标投影至图像坐标系下(畸变矫正)

-

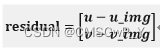

计算投影点与图像点的误差

-

最小化30个投影点与图像点的重投影误差之和

参考:

ceres实现的pnp解算后的位姿优化代码详解

Ceres的Problem::AddResidualBlock()函数

Ceres Solver 入门教程

【SLAM】Ceres优化库超详细解析

struct ZCostFunctorPnP

{

std::vector<float> object_point_;

std::vector<float> image_point_;

std::vector< std::vector<double> > K_; // fx, fy, cx, cy

std::vector<double> D_; // k1, k2, p1, p2, k3, k4, k5, k6

double *tra_;

ZCostFunctorPnP( std::vector<float> objpoint, std::vector<float> imgpoint, std::vector< std::vector<double> > camera_intrinsic, std::vector<double> dist_coeffs,double *tra):

object_point_(objpoint), image_point_(imgpoint), K_(camera_intrinsic), D_(dist_coeffs),tra_(tra){}

template<typename T>

bool operator()(const T* const rot, const T* const tra, T* residual) const

{

T point_in[3]; // 真实世界3D点坐标

T point_out[3]; // 相机坐标系3D点坐标

point_in[0] = T(object_point_[0]);

point_in[1] = T(object_point_[1]);

point_in[2] = T(object_point_[2]);

// 对3D点坐标进行坐标变换,换算到相机坐标系下

// rotation

T theta=sqrt(rot[0]*rot[0]+rot[1]*rot[1]+rot[2]*rot[2]);

T rx=rot[0]/theta;

T ry=rot[1]/theta;

T rz=rot[2]/theta;

Eigen::Matrix<T,4,4> extrin;

extrin(0,0)=T(0.0);

extrin(0,1)=sin(theta)*(-rz);

extrin(0,2)=sin(theta)*(ry);

extrin(0,3)=T(tra_[0]);

extrin(1,0)=sin(theta)*(rz);

extrin(1,1)=T(0.0);

extrin(1,2)=sin(theta)*(-rx);

extrin(1,3)=T(tra_[1]);

extrin(2,0)=sin(theta)*(-ry);

extrin(2,1)=sin(theta)*(rx);

extrin(2,2)=T(0.0);

extrin(2,3)=T(tra_[2]);

extrin(3,0)=T(0.0);

extrin(3,1)=T(0.0);

extrin(3,2)=T(0.0);

extrin(3,3)=T(1.0);

Eigen::Matrix<T,3,1> r;

r(0,0)=rx;

r(1,0)=ry;

r(2,0)=rz;

Eigen::Matrix<T,3,3> rr=(T(1.0)-cos(theta))*r*r.transpose();

for(int i=0;i<3;i++)

for(int j=0;j<3;j++)

extrin(i,j)+=rr(i,j);

for(int i=0;i<3;i++)

extrin(i,i)+=cos(theta);

extrin=extrin.inverse().eval();

Eigen::Matrix<T,4,1> pt_in;

pt_in(0,0)=point_in[0];

pt_in(1,0)=point_in[1];

pt_in(2,0)=point_in[2];

pt_in(3,0)=T(1.0);

Eigen::Matrix<T,4,1> pt_out=extrin*pt_in;

point_out[0]=pt_out(0,0);

point_out[1]=pt_out(1,0);

point_out[2]=pt_out(2,0);

// projection

T x = point_out[0] / point_out[2];

T y = point_out[1] / point_out[2];

// undistortation with dist coefficients as [k1, k2, p1, p2, k3, k4, k5, k6]

// if (!D_empty())

T r2 = x * x + y * y;

T xy = x * y;

// 畸变矫正计算公式

x = x * (1.0 + D_[0] * r2 + D_[1] * r2 * r2 + D_[4] * r2* r2* r2)/(1.0 + D_[5] * r2 + D_[6] * r2 * r2 + D_[7] * r2* r2* r2) + 2.0 * xy * D_[2]

+ (r2 + 2.0*x * x) * D_[3];

y = y * (1.0 + D_[0] * r2 + D_[1] * r2 * r2 + D_[4] * r2* r2* r2)/(1.0 + D_[5] * r2 + D_[6] * r2 * r2 + D_[7] * r2* r2* r2) + 2.0 * xy * D_[3]

+ (r2 + 2.0*y * y) * D_[2];

// 坐标归一化并转换到图像坐标系下

// to image plane

T u = x * K_[0][0] + K_[0][2]; // x * fx + cx

T v = y * K_[1][1] + K_[1][2]; // x * fy + cy

T u_img = T(image_point_[0]);

T v_img = T(image_point_[1]);

// 计算投影点与图像点的误差

residual[0] = u - u_img;

residual[1] = v - v_img;

// std::cout<<"residual: "<<residual[0]<<','<<residual[1]<<std::endl;

return true;

}

static ceres::CostFunction* create( std::vector<float> objpoint,

std::vector<float> imgpoint, std::vector< std::vector<double> > camera_intrinsic, std::vector<double> dist_coeffs,double *tra)

{

return new ceres::AutoDiffCostFunction<ZCostFunctorPnP, 2, 3, 3> // Dimension of residual; Dimension of x(rvec); Dimension of y(tvec)

(new ZCostFunctorPnP(objpoint, imgpoint, camera_intrinsic, dist_coeffs,tra));

}

};

/*

obj_pts: 圆心的真实世界坐标

imgpts2d:圆心的图像坐标

intrinsic: 内参矩阵[[fx, 0, cx], [0, fy, cy], [0, 0, 1]]

distortion_coeffs: 畸变矫正参数[k1, k2, p1, p2, k3, k4, k5, k6]

rvec: 旋转向量,需要随机初始化,且初始值不能为0

tvec: 平移向量,需要随机初始化,且初始值不能为0

*/

bool solveCamPnP(std::vector< std::vector<float> >& objpoints, std::vector< std::vector<float> >& imgpoints,

std::vector< std::vector<double> >& camera_intrinsic, std::vector<double>& dist_coeffs, std::vector<float>& rvec, std::vector<float>& tvec){

double rot[3];

double tra[3];

rot[0] = rvec[0];

rot[1] = rvec[1];

rot[2] = rvec[2];

tra[0] = tvec[0];

tra[1] = tvec[1];

tra[2] = tvec[2];

ceres::Problem problem;

for (int i = 0; i < imgpoints.size(); i++) // 要求每行存储一个关键点的坐标

{

// 使用30个圆心构建最小二乘问题

ceres::CostFunction* cost = ZCostFunctorPnP::create(objpoints[i], imgpoints[i], camera_intrinsic, dist_coeffs, tra);

problem.AddResidualBlock(cost, nullptr, rot, tra); // costFunction, lossFunction,parameterBlock

}

// 设置优化器参数及优化方法

ceres::Solver::Options options;

options.linear_solver_type = ceres::DENSE_SCHUR;

options.max_num_iterations = 80;

options.trust_region_strategy_type = ceres::LEVENBERG_MARQUARDT;

options.minimizer_progress_to_stdout = false;

// 调用求解器进行优化

ceres::Solver::Summary summary; // 用于存放日志

ceres::Solve(options, &problem, &summary); // 构建雅可比矩阵

std::cout << summary.FullReport() << std::endl;

// 对优化后的rvec和tvec进行赋值还原

rvec[0] = rot[0];

rvec[1] = rot[1];

rvec[2] = rot[2];

tvec[0] = tra[0];

tvec[1] = tra[1];

tvec[2] = tra[2];

return true;

}

bool solveLidarPnP(std::vector< std::vector<float> >& objpoints, std::vector< std::vector<float> >& lidarpoints,

std::vector<float>& rvec, std::vector<float>& tvec){

double rot[3];

double tra[3];

rot[0] = rvec[0];

rot[1] = rvec[1];

rot[2] = rvec[2];

tra[0] = tvec[0];

tra[1] = tvec[1];

tra[2] = tvec[2];

ceres::Problem problem;

for (int i = 0; i < lidarpoints.size(); i++)

{

ceres::CostFunction* cost = YCostFunctorPnP::create(objpoints[i], lidarpoints[i],tra);

problem.AddResidualBlock(cost, nullptr, rot, tra);

}

ceres::Solver::Options options;

options.linear_solver_type = ceres::DENSE_SCHUR;

options.max_num_iterations = 80;

options.trust_region_strategy_type = ceres::LEVENBERG_MARQUARDT;

options.minimizer_progress_to_stdout = false;

ceres::Solver::Summary summary;

ceres::Solve(options, &problem, &summary);

rvec[0] = rot[0];

rvec[1] = rot[1];

rvec[2] = rot[2];

tvec[0] = tra[0];

tvec[1] = tra[1];

tvec[2] = tra[2];

return true;

}

605

605

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?