1 Instrument dynamic-tracking algorithm

1.1 Locate the program

It is already included in the archive.

The code of main.cpp is:

#include <opencv2/video/video.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/core/core.hpp>

#include <iostream>

#include <cstdio>

#include "stdafx.h"

#include <math.h>

using namespace std;

using namespace cv;

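Mat frame, gray;// current frame

Mat dst;

Mat prev_frame, prev_gray;// previous frame

vector<Point2f> features;// detected feature points

vector<Point2f> inPoints;// initial feature points

vector<Point2f> fpts[2];// feature positions in the current and previous frame: an array of two vectors of Point2f (2-D point with float x and y)

vector<uchar> status;// tracking status flag of each feature point

vector<float> err;// tracking error

double pixel[550];

int k = 2;// index k used for optical_flow[k]

double optical_flow[550];

int const maxCorners = 200;// maximum number of feature points (= fpts[1].size())

double x, y, z;// x, y: position in the first frame; z: rectangle width

Point2f pointA;// starting point

const double g_dReferWidth=2.3;//mm

double g_dPixelsPerMetric = 10;// pixels per millimetre

double distance1;

Scalar scalarL = Scalar(0, 25, 0);// lower HSV bound for inRange

Scalar scalarH = Scalar(26, 150, 255);// upper HSV bound for inRange

int flag_start = 0;// flag marking the start of detection

bool flag_firstframe = true;// flag marking detection of the first frame

bool flag_end = false;

void delectFeature(Mat &inFrame, Mat &ingray);// corner detection

//void drawFeature(Mat &inFrame);// draw points

void track();// motion tracking

void drawLine();// draw the motion trajectory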

// Point2f midpoint(Point2f& ptA, Point2f& ptB);

void delectFirstFrame(Mat &frame, Mat &dst);

void delectFeature_single(Mat &inFrame,Mat &indst);

//void findContours(const Mat& src, vector<vector<Point>>& contours, vector<Vec4i>& hierarchy,// overload of findContours

// int retr = RETR_LIST, int method = CHAIN_APPROX_SIMPLE, Point offset = Point(0, 0))// overloads findContours to prevent a crash when the program exits

//{

// //CvMat c_image = src;

// Mat c_image = src;

// MemStorage storage(cvCreateMemStorage());

// CvSeq* _ccontours = 0;

// //cvFindContours(&c_image, storage, &_ccontours, sizeof(CvContour), retr, method, CvPoint(offset));

// cvFindContours(&c_image, storage, &_ccontours, sizeof(CvContour), retr, method, Point(offset));

// if (!_ccontours)

// {

// contours.clear();

// return;

// }

// Seq<CvSeq*> all_contours(cvTreeToNodeSeq(_ccontours, sizeof(CvSeq), storage));

// int total = (int)all_contours.size();

// contours.resize(total);

//

// SeqIterator<CvSeq*> it = all_contours.begin();

// for (int i = 0; i < total; i++, ++it)

// {

// CvSeq* c = *it;

// ((CvContour*)c)->color = (int)i;

// int count = (int)c->total;

// int* data = new int[count * 2];

// cvCvtSeqToArray(c, data);

// for (int j = 0; j < count; j++)

// {

// contours[i].push_back(Point(data[j * 2], data[j * 2 + 1]));

// }

// delete[] data;

// }

//

// hierarchy.resize(total);

// it = all_contours.begin();

// for (int i = 0; i < total; i++, ++it)

// {

// CvSeq* c = *it;

// int h_next = c->h_next ? ((CvContour*)c->h_next)->color : -1;

// int h_prev = c->h_prev ? ((CvContour*)c->h_prev)->color : -1;

// int v_next = c->v_next ? ((CvContour*)c->v_next)->color : -1;

// int v_prev = c->v_prev ? ((CvContour*)c->v_prev)->color : -1;

// hierarchy[i] = Vec4i(h_next, h_prev, v_next, v_prev);

// }

// storage.release();

//}

//2022.9.24 (morning)

//Point2f midpoint(Point2f& ptA, Point2f& ptB) {

// return Point2f((ptA.x+ ptB.x)*0.5,(ptA.y + ptB.y)*0.5);

//}

//double length(Point2f& ptA, Point2f& ptB) {

// return sqrtf( powf((ptA.x - ptB.x), 2) + powf((ptA.y - ptB.y), 2) );

//}

Point2f midpoint(Point2f ptA, Point2f ptB) {

return Point2f((ptA.x+ ptB.x)*0.5,(ptA.y + ptB.y)*0.5);

}

double length(Point2f ptA, Point2f ptB) {

return sqrtf( powf((ptA.x - ptB.x), 2) + powf((ptA.y - ptB.y), 2) );

}

int main(){

//VideoCapture capture("/media/smile/Moving HHD 1.8T/佳豪师兄项目/3tjh01.mp4");

//VideoCapture capture("D:\\佳豪师兄项目\\3tjh.mp4");

VideoCapture capture("/media/smile/Moving HHD 1.8T/佳豪师兄项目/3.将vs2022_win10下的程序迁移到ubuntu下Qt5.9.2/1.将我之前在vs2022跑起来的程序在vs2022中转为qt文件并迁移到ubuntu下/1.成功在ubuntu-qt下运行的迁移程序/segmentation_only_qt/3tjhx0.5.mp4");

//J:\佳豪师兄项目\分割演示220815\光流法\新数据12.22

// VideoCapture capture(1);

//VideoCapture cap(0);

//VideoCapture capture("H:\ev_recode/20220813_163511.mp4");

//VideoCapture capture("D:/VSc++code/Vs2012code/实验数据1.5/4n.mp4");

/* get the frame rate and frame size of the video (cv::CAP_PROP_* constants, OpenCV 3/4) */

double rate = capture.get(CAP_PROP_FPS);

int width = static_cast<int>(capture.get(CAP_PROP_FRAME_WIDTH));

int height = static_cast<int>(capture.get(CAP_PROP_FRAME_HEIGHT));

if (capture.isOpened())

{

while (capture.read(frame)) {

cvtColor(frame, gray, COLOR_BGR2GRAY);

delectFeature_single(frame,dst);

imshow("Output video", frame);

waitKey(30);

}

}

}

void delectFeature_single(Mat &frame, Mat &dst){

double largest_area = 0;

int largest_contour_index = 0;

Point2f pointO = Point2f(frame.cols, frame.rows);// reference point: bottom-right corner of the frame

Point2f pointB;

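// colour-space conversion: dst = HSV

cvtColor(frame, dst, COLOR_BGR2HSV);

//Scalar scalarL = Scalar(0, 25, 48);

//Scalar scalarH = Scalar(40, 145, 236);

// mask: dst = 255 where the HSV value lies in [scalarL, scalarH], 0 elsewhere

inRange(dst, scalarL, scalarH, dst);

threshold(dst, dst, 160, 255, THRESH_BINARY_INV);// cv::THRESH_BINARY_INV inverts the mask

// build structuring elements; MORPH_RECT gives a rectangular kernel

Mat kernel3 = getStructuringElement(MORPH_RECT, Size(1, 1));// 1x1 kernel: erode() with it leaves the image unchanged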

Mat kernel2 = getStructuringElement(MORPH_RECT, Size(2, 2));

Mat kernel0 = getStructuringElement(MORPH_RECT, Size(3, 3));

Mat kernel = getStructuringElement(MORPH_RECT, Size(4, 4));

erode(dst, dst, kernel3);

dilate(dst, dst, kernel0);

//morphologyEx(dst, dst, MORPH_OPEN, kernel3);// MORPH_OPEN: morphological opening

//imshow("After dilation (+ opening)", dst);

vector<vector<Point> > contours; // Vector for storing contours

vector<Vec4i> hierarchy;

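findContours( dst, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE ); // Find the contours in the image RETR_CCOMP

if (contours.empty()) return; // nothing detected in this frame; drawContours below needs a valid index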

Rect boundRect; // upright bounding rectangle

vector<RotatedRect> box(contours.size()); // minimum-area (rotated) bounding rectangles

Point2f rect[4];

size_t i;

for( i = 0; i< contours.size(); i++ ) // iterate through each contour.

{

double area = contourArea( contours[i] ); // Find the area of contour

if( area > largest_area )

{

largest_area = area;

largest_contour_index = i; //Store the index of largest contour

//boundRect[i] = boundingRect(Mat(contours[i]));

boundRect = boundingRect( contours[i] ); // upright bounding rectangle of the contour

box[i] = minAreaRect(contours[i]); // minimum-area bounding rectangle of the contour

box[i].points(rect);// copy its four corner points into rect

}

}

//cout<<"Largest area in this frame: "<<largest_area<<endl;

//rectangle(frame, Point(boundRect.x, boundRect.y), Point(boundRect.x + boundRect.width, boundRect.y + boundRect.height), Scalar(255, 255, 255), 1, 8);// draw the upright bounding rectangle

for (int j = 0; j < 4; j++)

{

line(frame, rect[j], rect[(j + 1) % 4], Scalar(0, 0, 255), 2, 8); // draw each edge of the minimum-area rectangle

}

drawContours( frame, contours,largest_contour_index, Scalar( 0, 255, 0 ), 2 ); // draw the largest contour using largest_contour_index

// determine the midpoint of the device's end (pointB)

if(length(rect[0], rect[3])<length(rect[0], rect[1])){// short sides are 0-3 and 1-2

if(length(pointO, midpoint(rect[0], rect[3]))<length(pointO, midpoint(rect[1], rect[2]))){

pointB = midpoint(rect[0], rect[3]);// midpoint

}else{

pointB = midpoint(rect[1], rect[2]);

}

}else{// short sides are 0-1 and 2-3

if(length(pointO, midpoint(rect[0], rect[1]))<length(pointO, midpoint(rect[2], rect[3]))){

pointB = midpoint(rect[0], rect[1]);// midpoint

}else{

pointB = midpoint(rect[2], rect[3]);

}

}


//Point2f pointB = midpoint(rect[0], rect[3]);// midpoint

circle( frame, pointB, 4, Scalar(255, 0, 0), -1);

//distance1 = sqrtf( powf((x - pointB.x), 2) + powf((y - pointB.y), 2) );

distance1 = sqrtf( powf((601 - pointB.x), 2) + powf((192 - pointB.y), 2) );// pixel distance from the hard-coded reference point (601, 192)

putText(frame, "The test has started:", Point(20, 10),FONT_HERSHEY_COMPLEX,0.5,Scalar(0,0,255));

putText(frame, cv::format("Device end point: X = %.0f pixels, Y = %.0f pixels", pointB.x, pointB.y), Point(20, 30),FONT_HERSHEY_COMPLEX,0.5,Scalar(0,0,255));

putText(frame, cv::format("The deformation is %.4f mm",-(distance1 / g_dPixelsPerMetric-130)), Point(20, 50),FONT_HERSHEY_COMPLEX,0.5,Scalar(0,0,255));

//if(distance1/g_dPixelsPerMetric > 40)

if ((110 > (distance1 / g_dPixelsPerMetric)) && ((distance1 / g_dPixelsPerMetric) > 100)) {// warn when 100 mm < distance < 110 mm

putText(frame, cv::format("Attention: Not Safe!!"), Point(20, 70),FONT_HERSHEY_COMPLEX,0.5,Scalar(0,0,255));

}

//line(frame, pointA, pointB, Scalar(0, 0, 255), 1, 8, 0);

circle( frame, pointA, 4, Scalar(0, 255, 0), -1);// starting point (pointA is never assigned, so it stays at (0, 0))

//cout << "The deformation is"<<distance1/g_dPixelsPerMetric << " mm"<<endl;

}
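The end-point logic in `delectFeature_single` can be checked in isolation. The sketch below re-implements it in plain C++ without OpenCV, under these assumptions: `Pt`, `dist`, `mid`, `endPoint`, `deformationMm` and `notSafe` are hypothetical names for illustration only; the four corners arrive in the order `RotatedRect::points()` returns them; and the 130 mm offset and the 100 to 110 mm warning band are copied from the code above.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical stand-in for cv::Point2f so the sketch builds without OpenCV.
struct Pt { double x, y; };

double dist(Pt a, Pt b) { return std::hypot(a.x - b.x, a.y - b.y); }
Pt mid(Pt a, Pt b) { return {(a.x + b.x) * 0.5, (a.y + b.y) * 0.5}; }

// Same rule as the code above: take the midpoints of the two short sides of the
// rotated rectangle and return the one closer to the reference point ref.
Pt endPoint(const std::array<Pt, 4>& r, Pt ref) {
    Pt m1, m2;
    if (dist(r[0], r[3]) < dist(r[0], r[1])) {  // short sides are 0-3 and 1-2
        m1 = mid(r[0], r[3]);
        m2 = mid(r[1], r[2]);
    } else {                                    // short sides are 0-1 and 2-3
        m1 = mid(r[0], r[1]);
        m2 = mid(r[2], r[3]);
    }
    return dist(ref, m1) < dist(ref, m2) ? m1 : m2;
}

// Displayed deformation: -(distancePx / pixelsPerMm - 130) = 130 - distance in mm.
double deformationMm(double distancePx, double pixelsPerMm) {
    assert(pixelsPerMm > 0.0);
    return 130.0 - distancePx / pixelsPerMm;
}

// Warning band from the code above: 100 mm < distance < 110 mm.
bool notSafe(double distancePx, double pixelsPerMm) {
    assert(pixelsPerMm > 0.0);
    double mm = distancePx / pixelsPerMm;
    return mm > 100.0 && mm < 110.0;
}
```

For example, with 10 pixels per millimetre a distance of 1050 pixels is 105 mm, so the displayed deformation is 25 mm and the warning is shown.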

1.2 Put this part into your main program

int main(){

VideoCapture capture("path of the video to be analyzed");

/* get the frame rate and frame size of the video (cv::CAP_PROP_* constants, OpenCV 3/4) */

double rate = capture.get(CAP_PROP_FPS);

int width = static_cast<int>(capture.get(CAP_PROP_FRAME_WIDTH));

int height = static_cast<int>(capture.get(CAP_PROP_FRAME_HEIGHT));

if (capture.isOpened())

{

while (capture.read(frame)) {

cvtColor(frame, gray, COLOR_BGR2GRAY);

delectFeature_single(frame,dst);

imshow("Output video", frame);

waitKey(30);

}

}

}

1.3 并且将其他部分放入您的主函数中;

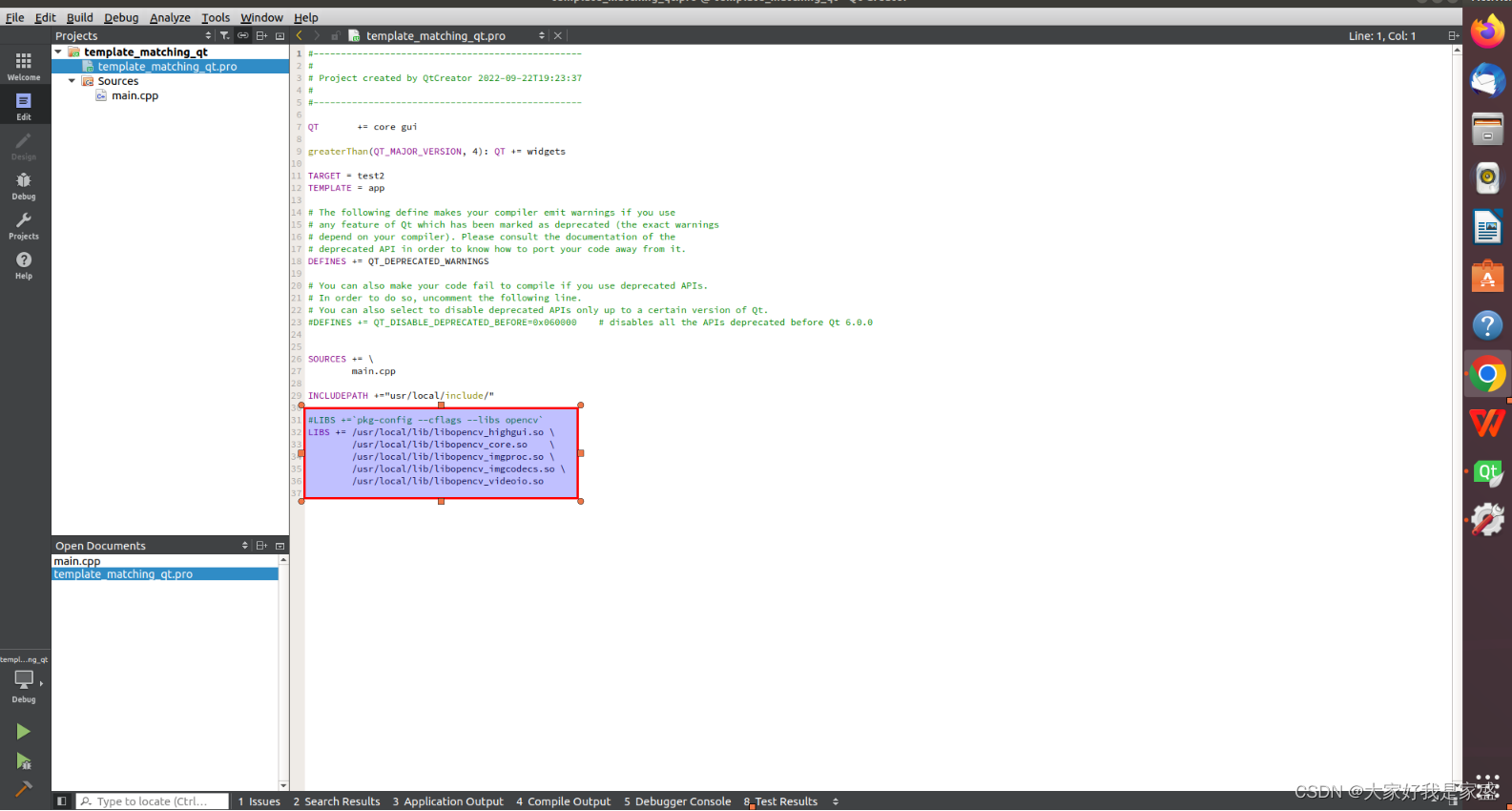

1.4将本工程文件中的.pro文件配置到您的qt工程文件的pro中

如果这些库的索引你没有配置,请配置一下

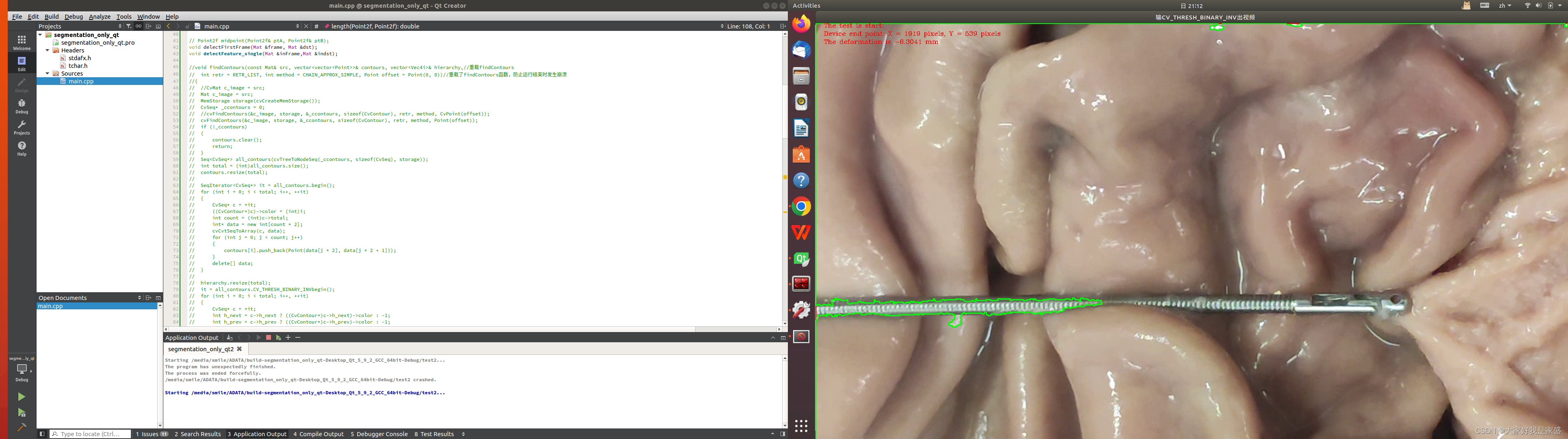

最后的效果

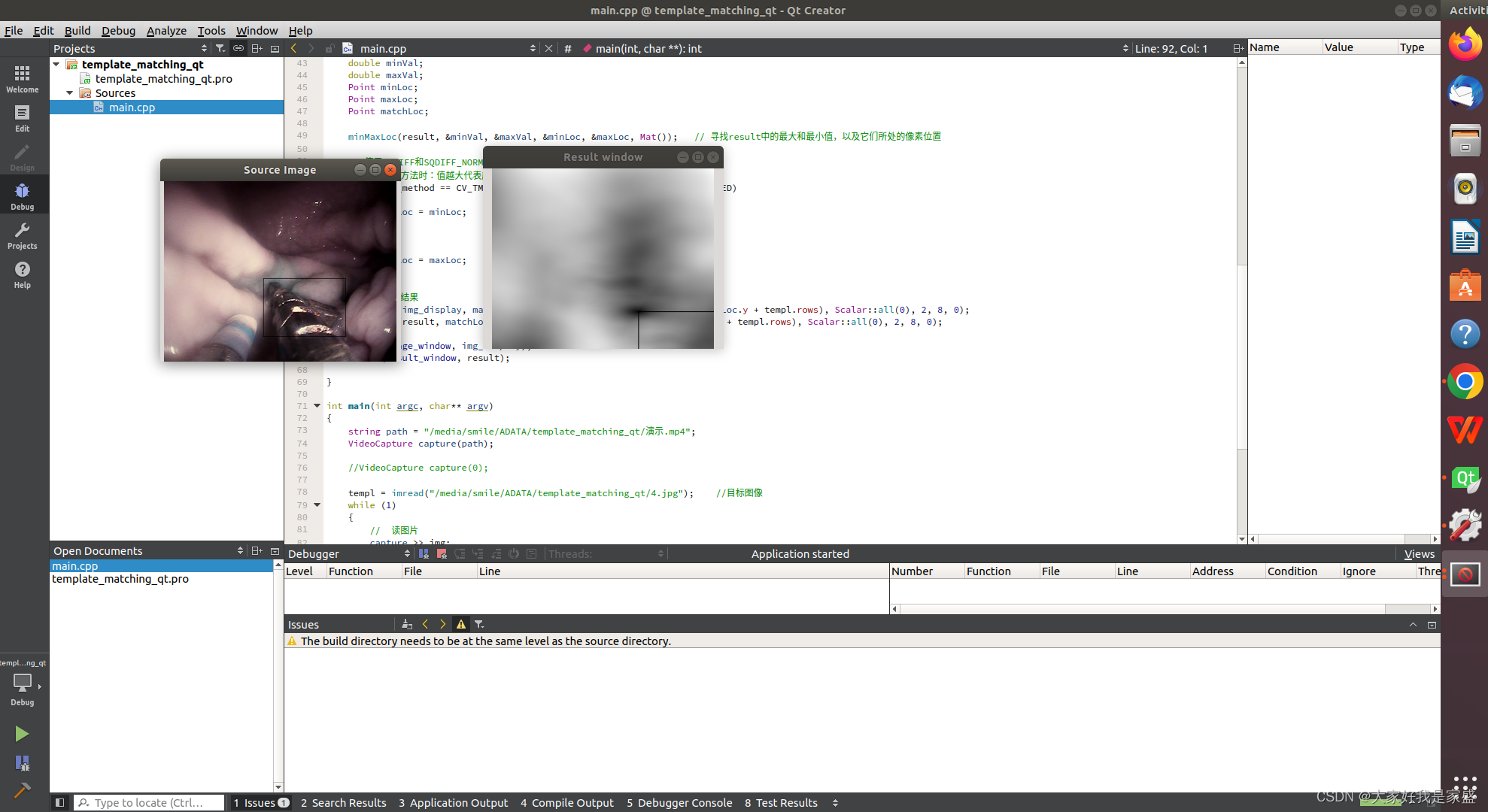

2 Instrument recognition algorithm

2.1 Locate the program

It is already included in the archive.

The code of main.cpp is:

#include <opencv2/imgcodecs.hpp>

#include <opencv2/highgui.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/videoio.hpp> // VideoCapture

#include <iostream>

using namespace std;

using namespace cv;

/// Global variables

Mat img; Mat templ; Mat result;

//char* image_window = "Source Image";

//char* result_window = "Result window";

#define image_window "Source Image" // macro for the window title

#define result_window "Result window" // macro for the window title

int match_method; // set by the trackbar: 0..5 = TM_SQDIFF, TM_SQDIFF_NORMED, TM_CCORR, TM_CCORR_NORMED, TM_CCOEFF, TM_CCOEFF_NORMED

int max_Trackbar = 5;

/// Functions

// Template matching (also used as the trackbar callback)

void MatchingMethod(int, void*)

{

// image used to display the result

Mat img_display;

img.copyTo(img_display);//img_display=img

// matrix that stores the matching result

int result_cols = img.cols - templ.cols + 1;

int result_rows = img.rows - templ.rows + 1;

result.create(result_rows, result_cols, CV_32FC1); // Mat::create takes (rows, cols, type)

// run template matching

matchTemplate(img, templ, result, match_method);

// normalize the result to [0, 1] (for display)

normalize(result, result, 0, 1, NORM_MINMAX, -1, Mat());

// find the best match location

double minVal;

double maxVal;

Point minLoc;

Point maxLoc;

Point matchLoc;

minMaxLoc(result, &minVal, &maxVal, &minLoc, &maxLoc, Mat()); // minimum and maximum of result and their pixel locations

// For TM_SQDIFF and TM_SQDIFF_NORMED a smaller value means a better match

// for the other methods a larger value means a better match

if (match_method == TM_SQDIFF || match_method == TM_SQDIFF_NORMED)

{

matchLoc = minLoc;

}

else

{

matchLoc = maxLoc;

}

// show the matching result

rectangle(img_display, matchLoc, Point(matchLoc.x + templ.cols, matchLoc.y + templ.rows), Scalar::all(0), 2, 8, 0);

rectangle(result, matchLoc, Point(matchLoc.x + templ.cols, matchLoc.y + templ.rows), Scalar::all(0), 2, 8, 0);

imshow(image_window, img_display);

imshow(result_window, result);

}

int main(int argc, char** argv)

{

string path = "/media/smile/ADATA/template_matching_qt/演示.mp4";

VideoCapture capture(path);

//VideoCapture capture(0);

templ = imread("/media/smile/ADATA/template_matching_qt/4.jpg"); // template (target) image

if (!capture.isOpened() || templ.empty()) { cerr << "Cannot open the video or the template image" << endl; return -1; }

// create the display windows and the trackbar once, before the loop

namedWindow(image_window, WINDOW_AUTOSIZE);

namedWindow(result_window, WINDOW_AUTOSIZE);

string trackbar_label = "Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED";

createTrackbar(trackbar_label, image_window, &match_method, max_Trackbar, MatchingMethod);

while (true)

{

// read a frame; stop when the video ends

capture >> img;

if (img.empty()) break;

MatchingMethod(0, 0);

if (waitKey(1) == 27) break; // Esc quits

}

return 0;

}
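To see what `matchTemplate` computes for method 0 (`TM_SQDIFF`), and why `minLoc` is taken for the SQDIFF methods, here is a brute-force sketch without OpenCV. `Gray`, `Match` and `matchSqdiff` are hypothetical names for illustration; OpenCV's own implementation is optimized and also covers the normalized and correlation-based methods. The score at offset (x, y) is the sum over the template of (T(x', y') - I(x + x', y + y'))², so an exact match scores 0.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical row-major grayscale image, a simplified stand-in for cv::Mat.
struct Gray {
    int rows, cols;
    std::vector<double> px;
    double at(int r, int c) const { return px[static_cast<std::size_t>(r) * cols + c]; }
};

// Best placement found: top-left corner (x, y) and its SQDIFF score.
struct Match { int x, y; double score; };

// Brute-force TM_SQDIFF over all (img.rows - templ.rows + 1) x (img.cols - templ.cols + 1)
// placements, the same result size as in MatchingMethod. Smaller score = better match.
Match matchSqdiff(const Gray& img, const Gray& templ) {
    assert(templ.rows <= img.rows && templ.cols <= img.cols);
    Match best{0, 0, std::numeric_limits<double>::max()};
    for (int y = 0; y <= img.rows - templ.rows; ++y) {
        for (int x = 0; x <= img.cols - templ.cols; ++x) {
            double s = 0.0;
            for (int r = 0; r < templ.rows; ++r) {
                for (int c = 0; c < templ.cols; ++c) {
                    double d = templ.at(r, c) - img.at(y + r, x + c);
                    s += d * d;
                }
            }
            if (s < best.score) best = {x, y, s};  // keep the minimum, like minLoc
        }
    }
    return best;
}
```

With a correlation method (`TM_CCORR`, `TM_CCOEFF` and their normalized forms) the score grows with similarity instead, which is why the code above switches to `maxLoc` for those methods.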

2.2 Put this part into your main program

int main(int argc, char** argv)

{

string path = "/media/smile/ADATA/template_matching_qt/演示.mp4";

VideoCapture capture(path);

//VideoCapture capture(0);

templ = imread("/media/smile/ADATA/template_matching_qt/4.jpg"); // template (target) image

if (!capture.isOpened() || templ.empty()) { cerr << "Cannot open the video or the template image" << endl; return -1; }

// create the display windows and the trackbar once, before the loop

namedWindow(image_window, WINDOW_AUTOSIZE);

namedWindow(result_window, WINDOW_AUTOSIZE);

string trackbar_label = "Method: \n 0: SQDIFF \n 1: SQDIFF NORMED \n 2: TM CCORR \n 3: TM CCORR NORMED \n 4: TM COEFF \n 5: TM COEFF NORMED";

createTrackbar(trackbar_label, image_window, &match_method, max_Trackbar, MatchingMethod);

while (true)

{

// read a frame; stop when the video ends

capture >> img;

if (img.empty()) break;

MatchingMethod(0, 0);

if (waitKey(1) == 27) break; // Esc quits

}

return 0;

}

2.3 并且将其他部分放入您的主函数中;

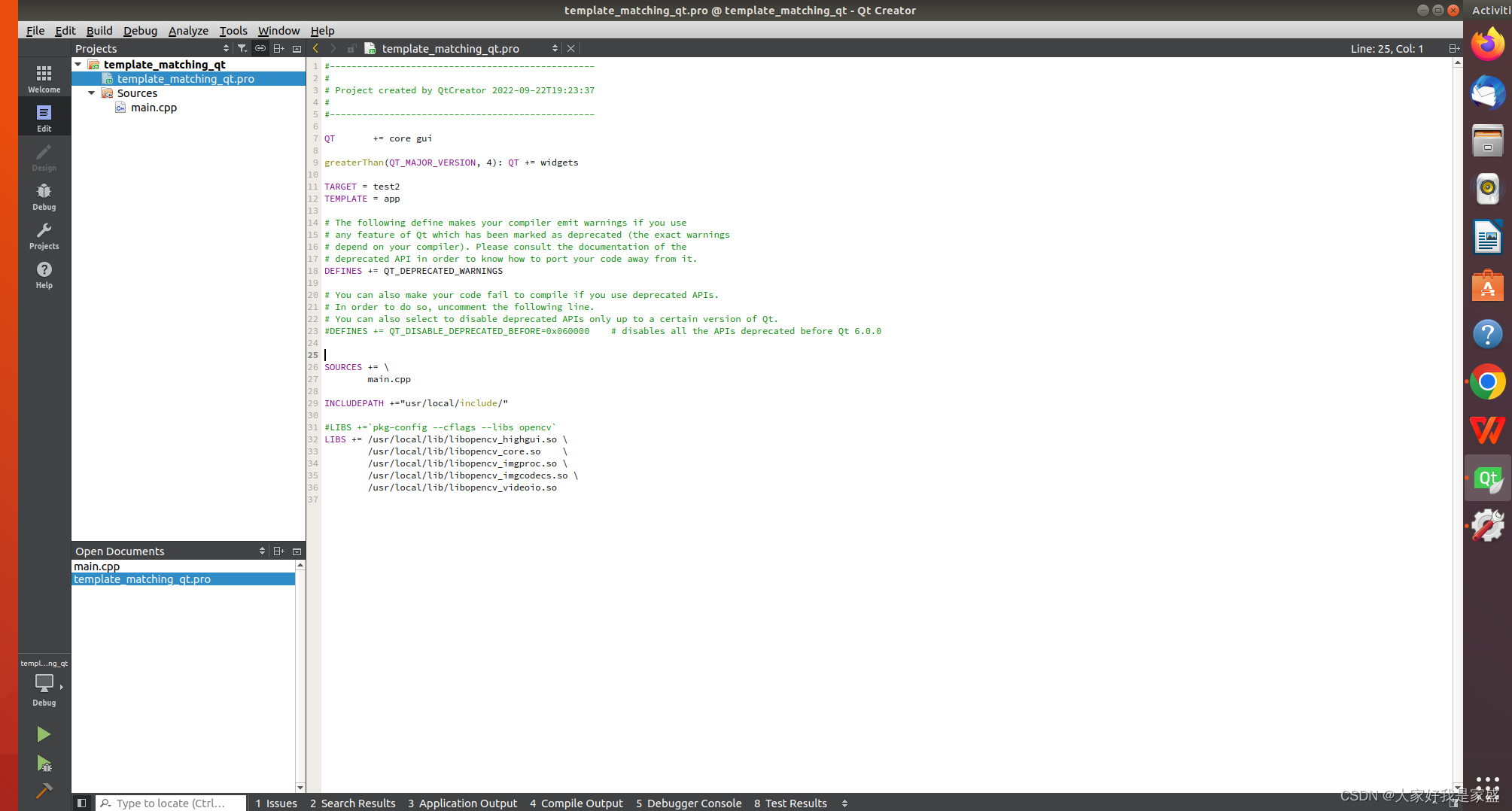

2.4将本工程文件中的.pro文件配置到您的qt工程文件的pro中

如果这些库的索引你没有配置,请配置一下

最后的效果
