Residual Network ResNet50

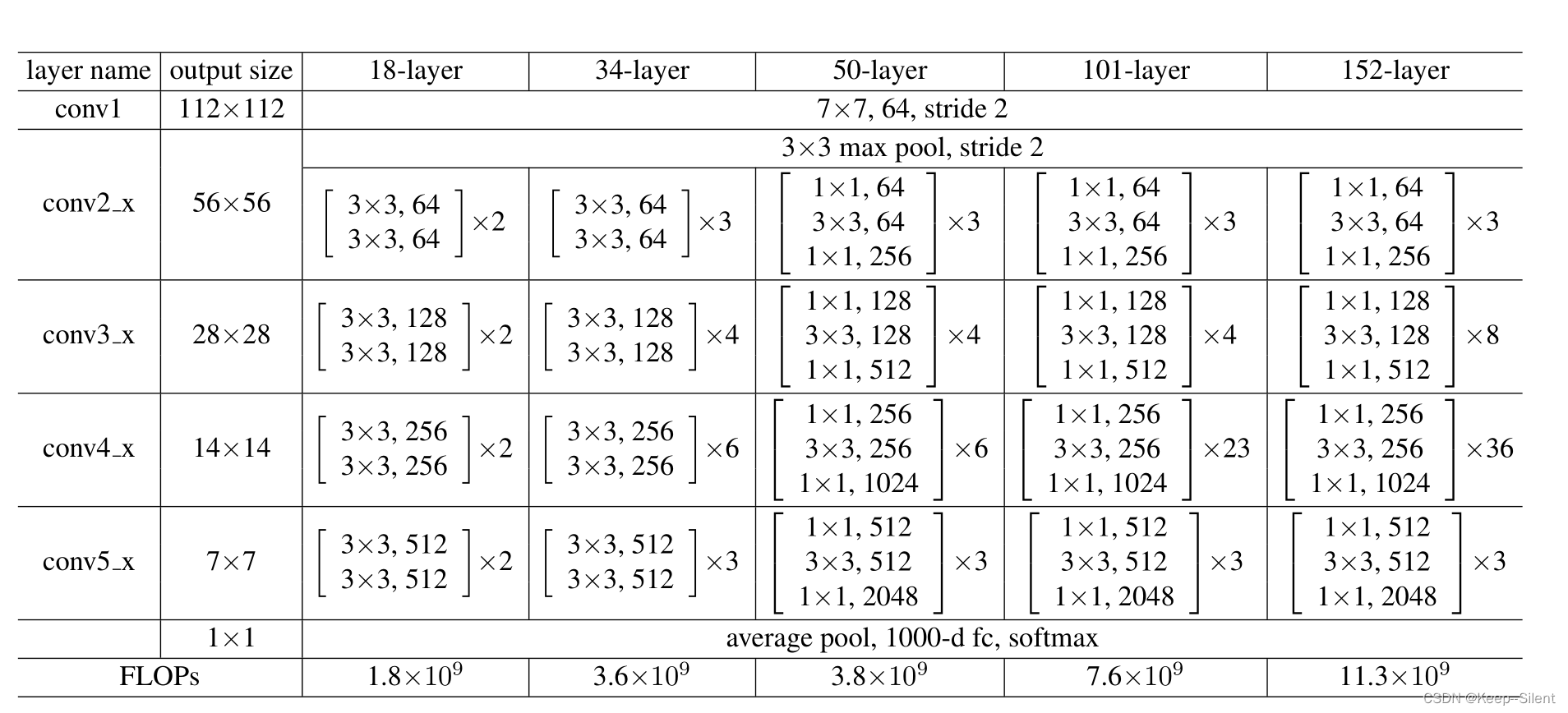

Network structure

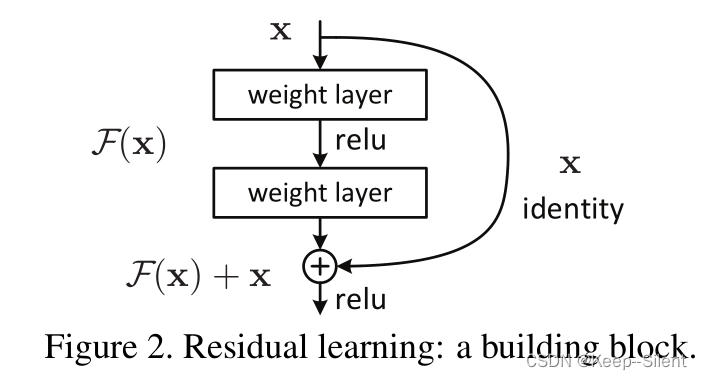

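As more convolutional layers are stacked, information from earlier layers can be lost in later ones, and accuracy drops. To prevent this, ResNet adds the input of a group of layers directly to that group's output (a shortcut, or skip, connection), so the earlier information is carried forward instead of being lost.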
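In formula form, a residual block computes $y = \mathcal{F}(x) + x$: the stacked layers only have to learn the residual $\mathcal{F}(x)$, and the input is added back unchanged. Below is a minimal PyTorch sketch of that idea, assuming the residual branch keeps the shape of its input; the wrapper name `Residual` is hypothetical and chosen only for illustration.

```python
import torch
import torch.nn as nn


class Residual(nn.Module):
    """Hypothetical wrapper: y = F(x) + x, where F must preserve the shape of x."""

    def __init__(self, branch: nn.Module):
        super().__init__()
        self.branch = branch

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Add the unchanged input back onto the branch output (identity shortcut)
        return self.branch(x) + x


# Residual branch F: two 3x3 convolutions with padding=1 keep the spatial size
branch = nn.Sequential(
    nn.Conv2d(64, 64, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(64, 64, kernel_size=3, padding=1),
)
block = Residual(branch)
x = torch.randn(1, 64, 56, 56)
y = block(x)
print(y.shape)  # torch.Size([1, 64, 56, 56])
```

If the branch learns to output zero, the block reduces to the identity, which is why adding more such blocks should not, in principle, make the network worse than a shallower one.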

ResNet architecture:

ResNet50 architecture:

| Layer | Input | Kernel (size × filters) | Stride | Output | Stage |
|---|---|---|---|---|---|
| 1 | 224×224×3 | 7×7×64 | 2 | 112×112×64 | |
| MaxPool | 112×112×64 | 3×3 max pool | 2 | 56×56×64 | |
| 2 | 56×56×64 | 1×1×64 | 1 | 56×56×64 | 1 |
| 3 | 56×56×64 | 3×3×64 | 1 | 56×56×64 | |
| 4 | 56×56×64 | 1×1×256 | 1 | 56×56×256 | |
| 5 | 56×56×256 | 1×1×64 | 1 | 56×56×64 | 1 |
| 6 | 56×56×64 | 3×3×64 | 1 | 56×56×64 | |
| 7 | 56×56×64 | 1×1×256 | 1 | 56×56×256 | |
| 8 | 56×56×256 | 1×1×64 | 1 | 56×56×64 | 1 |
| 9 | 56×56×64 | 3×3×64 | 1 | 56×56×64 | |
| 10 | 56×56×64 | 1×1×256 | 1 | 56×56×256 | |
| 11 | 56×56×256 | 1×1×128 | 1 | 56×56×128 | 2 |
| 12 | 56×56×128 | 3×3×128 | 2 | 28×28×128 | |
| 13 | 28×28×128 | 1×1×512 | 1 | 28×28×512 | |
| 14 | 28×28×512 | 1×1×128 | 1 | 28×28×128 | 2 |
| 15 | 28×28×128 | 3×3×128 | 1 | 28×28×128 | |
| 16 | 28×28×128 | 1×1×512 | 1 | 28×28×512 | |
| 17 | 28×28×512 | 1×1×128 | 1 | 28×28×128 | 2 |
| 18 | 28×28×128 | 3×3×128 | 1 | 28×28×128 | |
| 19 | 28×28×128 | 1×1×512 | 1 | 28×28×512 | |
| 20 | 28×28×512 | 1×1×128 | 1 | 28×28×128 | 2 |
| 21 | 28×28×128 | 3×3×128 | 1 | 28×28×128 | |
| 22 | 28×28×128 | 1×1×512 | 1 | 28×28×512 | |
| 23 | 28×28×512 | 1×1×256 | 1 | 28×28×256 | 3 |
| 24 | 28×28×256 | 3×3×256 | 2 | 14×14×256 | |
| 25 | 14×14×256 | 1×1×1024 | 1 | 14×14×1024 | |
| 26 | 14×14×1024 | 1×1×256 | 1 | 14×14×256 | 3 |
| 27 | 14×14×256 | 3×3×256 | 1 | 14×14×256 | |
| 28 | 14×14×256 | 1×1×1024 | 1 | 14×14×1024 | |
| 29 | 14×14×1024 | 1×1×256 | 1 | 14×14×256 | 3 |
| 30 | 14×14×256 | 3×3×256 | 1 | 14×14×256 | |
| 31 | 14×14×256 | 1×1×1024 | 1 | 14×14×1024 | |
| 32 | 14×14×1024 | 1×1×256 | 1 | 14×14×256 | 3 |
| 33 | 14×14×256 | 3×3×256 | 1 | 14×14×256 | |
| 34 | 14×14×256 | 1×1×1024 | 1 | 14×14×1024 | |
| 35 | 14×14×1024 | 1×1×256 | 1 | 14×14×256 | 3 |
| 36 | 14×14×256 | 3×3×256 | 1 | 14×14×256 | |
| 37 | 14×14×256 | 1×1×1024 | 1 | 14×14×1024 | |
| 38 | 14×14×1024 | 1×1×256 | 1 | 14×14×256 | 3 |
| 39 | 14×14×256 | 3×3×256 | 1 | 14×14×256 | |
| 40 | 14×14×256 | 1×1×1024 | 1 | 14×14×1024 | |
| 41 | 14×14×1024 | 1×1×512 | 1 | 14×14×512 | 4 |
| 42 | 14×14×512 | 3×3×512 | 2 | 7×7×512 | |
| 43 | 7×7×512 | 1×1×2048 | 1 | 7×7×2048 | |
| 44 | 7×7×2048 | 1×1×512 | 1 | 7×7×512 | 4 |
| 45 | 7×7×512 | 3×3×512 | 1 | 7×7×512 | |
| 46 | 7×7×512 | 1×1×2048 | 1 | 7×7×2048 | |
| 47 | 7×7×2048 | 1×1×512 | 1 | 7×7×512 | 4 |
| 48 | 7×7×512 | 3×3×512 | 1 | 7×7×512 | |
| 49 | 7×7×512 | 1×1×2048 | 1 | 7×7×2048 | |
| AvgPool | 7×7×2048 | global average pool | | 1×1×2048 | |
| 50 (FC) | 2048 | fully connected | | num_classes (1000 on ImageNet, 5 in this post) | |

In the Stage column, the number marks the first layer of each bottleneck block (1×1 → 3×3 → 1×1). Stages 1 to 4 contain 3, 4, 6 and 3 blocks, so the network has 1 + 3 × 16 + 1 = 50 weighted layers.
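Every spatial size in the table follows the usual output-size formula for convolution and pooling, $\lfloor (n + 2p - k)/s \rfloor + 1$ for input size $n$, kernel $k$, stride $s$ and padding $p$. The sketch below replays the table's downsampling steps, assuming the paddings used in the code later in this post (padding 3 for the 7×7 conv, padding 1 for the 3×3 max pool and 3×3 convs); the helper name `out_size` is hypothetical.

```python
def out_size(n: int, k: int, s: int, p: int) -> int:
    """Output height/width of a conv or pooling layer (no dilation); hypothetical helper."""
    return (n + 2 * p - k) // s + 1


n = 224
n = out_size(n, k=7, s=2, p=3)  # stem: 7x7 conv, stride 2, padding 3 -> 112
n = out_size(n, k=3, s=2, p=1)  # 3x3 max pool, stride 2, padding 1 -> 56
sizes = [n]
# Stages 2-4 each start with a stride-2 3x3 conv (padding 1 assumed)
for _ in range(3):
    n = out_size(n, k=3, s=2, p=1)
    sizes.append(n)
print(sizes)  # [56, 28, 14, 7]

# Layer count: stem conv + 3 convs per bottleneck block + final FC
blocks_per_stage = [3, 4, 6, 3]
num_layers = 1 + 3 * sum(blocks_per_stage) + 1
print(num_layers)  # 50
```

The 1×1 convolutions with stride 1 leave the size unchanged, since $(n - 1)/1 + 1 = n$.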

With ResNet50 as the backbone and a single fully connected layer as the head, we build a CNN for flower classification.

Load the dataset and split it into training and test sets

```python
import torch
from torchvision import datasets, transforms
from torch.utils.data import DataLoader, Subset
import numpy as np  # used by the visualization code below

# Preprocessing (no data augmentation is applied)
transform = transforms.Compose([
    transforms.Resize((224, 224)),  # resize every image to 224x224 pixels
    transforms.ToTensor(),          # PIL image -> float tensor in [0, 1], shape CxHxW
    transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])  # (x - 0.5) / 0.5: [0, 1] -> [-1, 1]
])

# Load the dataset; ImageFolder expects one sub-folder per class under 'flower'
dataset = datasets.ImageFolder('flower', transform=transform)

# Optionally use only a small subset for quick experiments
# set_size = 200  # number of samples to use
# if len(dataset) > set_size:
#     dataset = Subset(dataset, range(set_size))

# Split into training (70%) and test (30%) sets
train_size = int(0.7 * len(dataset))
test_size = len(dataset) - train_size
train_dataset, test_dataset = torch.utils.data.random_split(dataset, [train_size, test_size])

# Create the data loaders
train_loader = DataLoader(train_dataset, batch_size=8, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=8, shuffle=False)

# Number of samples in each split
print('Training samples:', len(train_loader.dataset))
print('Test samples:', len(test_loader.dataset))

class_labels = dataset.classes
print('Flower classes:', dataset.classes)
```

```
Training samples: 3021
Test samples: 1296
Flower classes: ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']
```
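`random_split` draws a fresh random permutation on every run, so which images land in the training or test set changes between runs. Below is a minimal sketch of a reproducible 70/30 split. It assumes a fixed seed of 42, which is an arbitrary choice, and uses a dummy `TensorDataset` of 4317 items in place of the flower `ImageFolder`, since 3021 + 1296 = 4317 images.

```python
import torch
from torch.utils.data import TensorDataset, random_split

# Stand-in for datasets.ImageFolder('flower', ...): 4317 items, as in the output above
dataset = TensorDataset(torch.arange(4317))

train_size = int(0.7 * len(dataset))
test_size = len(dataset) - train_size

# A seeded generator makes the permutation, and hence the split, repeatable
# (seed 42 is an arbitrary assumption)
gen = torch.Generator().manual_seed(42)
train_dataset, test_dataset = random_split(dataset, [train_size, test_size], generator=gen)
print(len(train_dataset), len(test_dataset))  # 3021 1296
```

With the same seed the split is identical on every run, so test accuracy measured after reloading a saved model is computed on images the model never trained on.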

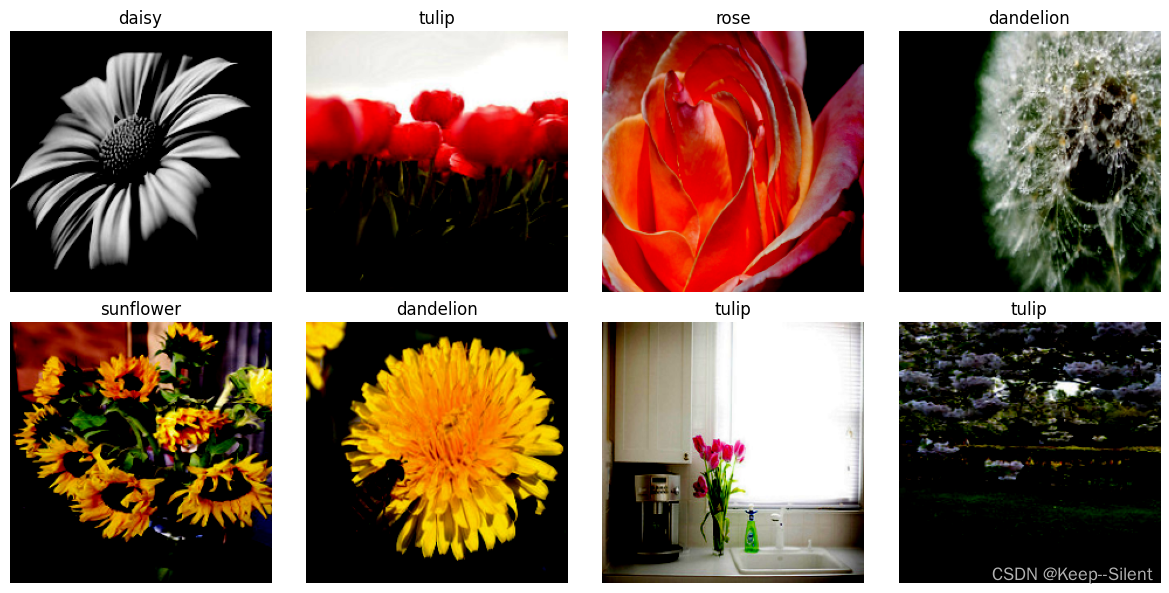

Visualize a batch from the dataset

```python
import matplotlib.pyplot as plt
import numpy as np

def visualize_loader(batch, predicted=None):
    # batch = (images, labels): images has shape [8, 3, 224, 224], labels has shape [8]
    imgs = batch[0]
    labels = batch[1].numpy()
    if predicted is None:
        predicted = labels  # no predictions given: just show the true labels
    fig, axes = plt.subplots(2, 4, figsize=(12, 6))
    for i, ax in enumerate(axes.flat):
        # CxHxW -> HxWxC, then undo Normalize(mean=0.5, std=0.5) to get back to [0, 1]
        img = imgs[i].permute(1, 2, 0).numpy()
        img = np.clip(img * 0.5 + 0.5, 0, 1)
        ax.imshow(img)
        title = class_labels[predicted[i]]
        color = 'black'
        if predicted[i] != labels[i]:
            # Wrong prediction: append the true label in parentheses and show it in red
            title += '(' + str(class_labels[labels[i]]) + ')'
            color = 'red'
        ax.set_title(title, color=color)
        ax.axis('off')
    plt.tight_layout()
    plt.show()

# Take the first batch of the training loader and display it
batch = next(iter(train_loader))
visualize_loader(batch)
```
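`Normalize(mean=0.5, std=0.5)` maps each pixel from $[0, 1]$ to $x' = (x - 0.5)/0.5 \in [-1, 1]$, so the inverse $x = 0.5x' + 0.5$ has to be applied before display; clipping the normalized values straight to $[0, 1]$ would turn every pixel originally below 0.5 black. A small sketch of that inverse in tensor form; `denormalize` is a hypothetical helper name.

```python
import torch

# Same mean/std as the Normalize transform above, shaped to broadcast over CxHxW
MEAN = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)
STD = torch.tensor([0.5, 0.5, 0.5]).view(3, 1, 1)


def denormalize(img: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: undo transforms.Normalize for a CxHxW tensor, clamp to [0, 1]."""
    return (img * STD + MEAN).clamp(0, 1)


original = torch.rand(3, 4, 4)             # pixel values in [0, 1], as after ToTensor()
normalized = (original - MEAN) / STD       # what Normalize produces, in [-1, 1]
restored = denormalize(normalized)
print(torch.allclose(restored, original))  # True
# For plt.imshow, move channels last: restored.permute(1, 2, 0).numpy()
```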

Residual block (ResidualBlock) and the ResNet50 network

```python
# Residual block and ResNet50
import torch
import torch.nn as nn

# Residual block
class ResidualBlock(nn.Module):
    def __init__(self, in_channels, out_channels, stride=1):
        super(ResidualBlock, self).__init__()
        # Note: all three convolutions here are 3x3 (the table above uses 1x1 -> 3x3 -> 1x1),
        # and the middle width is in_channels // stride
        self.conv1 = nn.Conv2d(in_channels, in_channels//stride, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(in_channels//stride)
        self.relu = nn.ReLU(inplace=True)
        # The stride (1 or 2) is applied here, so this conv does the downsampling
        self.conv2 = nn.Conv2d(in_channels//stride, in_channels//stride, kernel_size=3, stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(in_channels//stride)
        self.conv3 = nn.Conv2d(in_channels//stride, out_channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channels)
        # Identity shortcut by default
        self.shortcut = nn.Sequential()
        # If the spatial size or channel count changes, pass the input through a 1x1 conv
        # so that it has the same shape as the block output and can be added to it
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels)
            )

    def forward(self, x):
        residual = x
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        # (the standard bottleneck block also applies a ReLU here)
        out = self.conv3(out)
        out = self.bn3(out)
        out += self.shortcut(residual)  # skip connection
        out = self.relu(out)
        return out

# ResNet50 backbone: outputs a 2048-d feature vector per image (no classification layer)
class ResNet50(nn.Module):
    def __init__(self, num_classes=1000):  # num_classes is unused; the head in CNN classifies
        super(ResNet50, self).__init__()
        # Stem: 7x7 conv, stride 2 (224 -> 112), then 3x3 max pool, stride 2 (112 -> 56)
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        # Four stages with 3, 4, 6 and 3 residual blocks
        self.layer1 = self._make_layer(64, 256, blocks=3, stride=1)     # 56x56
        self.layer2 = self._make_layer(256, 512, blocks=4, stride=2)    # 28x28
        self.layer3 = self._make_layer(512, 1024, blocks=6, stride=2)   # 14x14
        self.layer4 = self._make_layer(1024, 2048, blocks=3, stride=2)  # 7x7
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.flatten = nn.Flatten()

    def _make_layer(self, in_channels, out_channels, blocks, stride):
        layers = []
        # Only the first block of a stage changes the stride and channel count
        layers.append(ResidualBlock(in_channels, out_channels, stride))
        for _ in range(1, blocks):
            layers.append(ResidualBlock(out_channels, out_channels))
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)  # [N, 2048, 1, 1]
        x = self.flatten(x)  # [N, 2048]
        return x
```
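The `ResidualBlock` above differs from the bottleneck listed in the table: all three of its convolutions are 3×3, its middle width is `in_channels // stride` (so in every block except the first of each stage, the middle width equals the full output width, e.g. 256 instead of 64 in stage 1), and there is no ReLU between `bn2` and `conv3`. The model printed and trained below uses that version. For reference, here is a sketch of the 1×1 → 3×3 → 1×1 bottleneck that the table describes, with the stride on the 3×3 convolution as in the table; the names `Bottleneck`, `make_stage` and `mid_channels` are illustrative choices, not part of the original code.

```python
import torch
import torch.nn as nn


class Bottleneck(nn.Module):
    """Illustrative 1x1 reduce -> 3x3 -> 1x1 expand block with a shortcut (as in the table)."""

    def __init__(self, in_channels: int, mid_channels: int, stride: int = 1):
        super().__init__()
        out_channels = mid_channels * 4  # expansion factor 4, e.g. 64 -> 256
        self.conv1 = nn.Conv2d(in_channels, mid_channels, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(mid_channels)
        self.conv2 = nn.Conv2d(mid_channels, mid_channels, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(mid_channels)
        self.conv3 = nn.Conv2d(mid_channels, out_channels, kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU(inplace=True)
        # Projection shortcut only when the shape changes; identity otherwise
        self.shortcut = nn.Identity()
        if stride != 1 or in_channels != out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels),
            )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.relu(self.bn2(self.conv2(out)))  # ReLU after bn2 as well
        out = self.bn3(self.conv3(out))
        return self.relu(out + self.shortcut(x))


def make_stage(in_channels: int, mid_channels: int, blocks: int, stride: int) -> nn.Sequential:
    # Illustrative helper: only the first block changes stride and width
    layers = [Bottleneck(in_channels, mid_channels, stride)]
    layers += [Bottleneck(mid_channels * 4, mid_channels) for _ in range(blocks - 1)]
    return nn.Sequential(*layers)


# Stage 2 of the table (layers 11-22): 56x56x256 in, 28x28x512 out
stage = make_stage(256, 128, blocks=4, stride=2)
out = stage(torch.randn(1, 256, 56, 56))
print(out.shape)  # torch.Size([1, 512, 28, 28])
```

Reducing the width with the first 1×1 conv keeps the expensive 3×3 conv at a quarter of the block's output width, which is what makes the bottleneck design cheap enough for a 50-layer network.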

CNN (ResNet50 as the backbone, Linear as the head)

```python
import torch
import torch.nn as nn

class CNN(nn.Module):
    def __init__(self, num_classes=5):
        super(CNN, self).__init__()
        # Backbone (ResNet50): image -> 2048-d feature vector
        self.backbone = ResNet50()
        # Head: 2048 features -> one score (logit) per flower class
        self.head = nn.Sequential(
            # The backbone output is already non-negative (ReLU followed by average pooling),
            # so this ReLU has no effect on the values
            nn.ReLU(inplace=True),
            nn.Linear(2048, num_classes)
        )

    def forward(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x

model = CNN()
print(model)
```

```
CNN(
  (backbone): ResNet50(
    (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
    (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    (relu): ReLU(inplace=True)
    (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
    (layer1): Sequential(
      (0): ResidualBlock(
        (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(64, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential(
          (0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)
          (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (1): ResidualBlock(
        (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (2): ResidualBlock(
        (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
    )
    (layer2): Sequential(
      (0): ResidualBlock(
        (conv1): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(128, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential(
          (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (1): ResidualBlock(
        (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (2): ResidualBlock(
        (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (3): ResidualBlock(
        (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
    )
    (layer3): Sequential(
      (0): ResidualBlock(
        (conv1): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(256, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential(
          (0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (1): ResidualBlock(
        (conv1): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (2): ResidualBlock(
        (conv1): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (3): ResidualBlock(
        (conv1): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (4): ResidualBlock(
        (conv1): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (5): ResidualBlock(
        (conv1): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(1024, 1024, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
    )
    (layer4): Sequential(
      (0): ResidualBlock(
        (conv1): Conv2d(1024, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(512, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential(
          (0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)
          (1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        )
      )
      (1): ResidualBlock(
        (conv1): Conv2d(2048, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(2048, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(2048, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
      (2): ResidualBlock(
        (conv1): Conv2d(2048, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (relu): ReLU(inplace=True)
        (conv2): Conv2d(2048, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn2): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (conv3): Conv2d(2048, 2048, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
        (bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (shortcut): Sequential()
      )
    )
    (avgpool): AdaptiveAvgPool2d(output_size=(1, 1))
    (flatten): Flatten(start_dim=1, end_dim=-1)
  )
  (head): Sequential(
    (0): ReLU(inplace=True)
    (1): Linear(in_features=2048, out_features=5, bias=True)
  )
)
```
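The printout shows the layer structure but not how large the model is. A common way to count trainable parameters is to sum `numel()` over `parameters()`. The sketch below applies it to the 5-way head and to two single convolutions; calling the same helper on `model` gives the total for the whole CNN, a number this post does not report. `count_params` is a hypothetical helper name.

```python
import torch.nn as nn


def count_params(module: nn.Module) -> int:
    """Hypothetical helper: number of trainable parameters (weights + biases)."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad)


head = nn.Sequential(nn.ReLU(inplace=True), nn.Linear(2048, 5))
print(count_params(head))  # 2048 * 5 weights + 5 biases = 10245

# A 3x3 conv holds 9x the weights of a 1x1 conv with the same channel counts
print(count_params(nn.Conv2d(64, 64, kernel_size=3, bias=False)))  # 36864
print(count_params(nn.Conv2d(64, 64, kernel_size=1, bias=False)))  # 4096
```

The 9× factor per layer is one reason a residual block made of three 3×3 convolutions is considerably heavier than the 1×1 → 3×3 → 1×1 bottleneck of the same width.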

Train the model

```python
import torch.optim as optim
import time

num_epochs = 7
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.003)

for epoch in range(num_epochs):
    model.train()
    running_loss = 0.0
    correct = 0
    total = 0
    start_time = time.time()
    for images, labels in train_loader:
        images = images.to(device)
        labels = labels.to(device)
        # Forward pass
        outputs = model(images)
        loss = criterion(outputs, labels)
        # Backward pass and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        # Accumulate accuracy statistics
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
        running_loss += loss.item()
    train_loss = running_loss / len(train_loader)
    train_accuracy = correct / total

    # Evaluate the model on the test set
    model.eval()
    test_loss = 0.0
    correct = 0
    total = 0
    with torch.no_grad():
        for images, labels in test_loader:
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            loss = criterion(outputs, labels)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
            test_loss += loss.item()
    end_time = time.time()
    duration = int(end_time - start_time)  # covers both training and evaluation
    m, s = duration // 60, duration % 60
    test_loss = test_loss / len(test_loader)
    test_accuracy = correct / total
    # Save the weights after every epoch (overwrites the previous file)
    torch.save(model.state_dict(), 'FlowerRecognitionModel.pth')
    # Print the loss and accuracy for this epoch
    print(f"Epoch [{epoch+1}/{num_epochs}] :{m}minutes{s}seconds")
    print(f" Train Loss: {train_loss:.4f}, Train Accuracy: {train_accuracy:.4f}")
    print(f" Test Loss: {test_loss:.4f}, Test Accuracy: {test_accuracy:.4f}")
```

```
Epoch [1/7] :3minutes5seconds
 Train Loss: 1.1862, Train Accuracy: 0.4826
 Test Loss: 1.1131, Test Accuracy: 0.5154
Epoch [2/7] :3minutes11seconds
 Train Loss: 1.1405, Train Accuracy: 0.5008
 Test Loss: 1.0442, Test Accuracy: 0.5409
Epoch [3/7] :3minutes11seconds
 Train Loss: 1.1196, Train Accuracy: 0.5167
 Test Loss: 1.1199, Test Accuracy: 0.5386
Epoch [4/7] :3minutes11seconds
 Train Loss: 1.0836, Train Accuracy: 0.5468
 Test Loss: 0.9931, Test Accuracy: 0.6119
Epoch [5/7] :3minutes10seconds
 Train Loss: 1.0372, Train Accuracy: 0.5922
 Test Loss: 0.9380, Test Accuracy: 0.6312
Epoch [6/7] :3minutes9seconds
 Train Loss: 0.9958, Train Accuracy: 0.6087
 Test Loss: 0.9079, Test Accuracy: 0.6535
Epoch [7/7] :3minutes9seconds
 Train Loss: 0.9515, Train Accuracy: 0.6316
 Test Loss: 0.8918, Test Accuracy: 0.6543
```
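The evaluation half of the loop can be factored into a reusable function, which is also handy for re-checking a reloaded model later. Below is a sketch that follows the same conventions as the loop (mean loss per batch, accuracy over samples). The name `evaluate` and the toy data in the usage lines are illustrative, not part of the original code.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate(model: nn.Module, loader: DataLoader, criterion: nn.Module, device: torch.device):
    """Illustrative helper: return (mean batch loss, accuracy) of `model` on `loader`."""
    model.eval()  # BatchNorm uses its running statistics
    total_loss, correct, total = 0.0, 0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        outputs = model(images)
        total_loss += criterion(outputs, labels).item()
        correct += (outputs.argmax(dim=1) == labels).sum().item()
        total += labels.size(0)
    return total_loss / len(loader), correct / total


# Toy usage: a linear "model" on random 8-d features with 5 classes
torch.manual_seed(0)
data = TensorDataset(torch.randn(20, 8), torch.randint(0, 5, (20,)))
loader = DataLoader(data, batch_size=8)
loss, acc = evaluate(nn.Linear(8, 5), loader, nn.CrossEntropyLoss(), torch.device("cpu"))
print(f"Test Loss: {loss:.4f}, Test Accuracy: {acc:.4f}")
```

The function leaves the model in evaluation mode, so the training loop must call `model.train()` again before the next epoch, as the loop above already does at the start of each epoch.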

Save and load the model

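```python
# Save the model (already done at the end of every epoch during training)
# torch.save(model.state_dict(), 'FlowerRecognitionModel.pth')

# Create a new model instance
model = CNN()

# Load the saved parameters; map_location='cpu' also allows weights saved on a GPU
# to be loaded on a CPU-only machine
model.load_state_dict(torch.load('FlowerRecognitionModel.pth', map_location='cpu'))
```

```
<All keys matched successfully>
```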
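A `state_dict` file holds only the model weights. To resume training later, a common pattern is to save a dictionary that also contains the optimizer state and the epoch number, and to pass `map_location` when loading. Below is a sketch with a tiny stand-in model. The file name `checkpoint.pth` and the dictionary keys are assumptions chosen for illustration.

```python
import os
import tempfile

import torch
import torch.nn as nn
import torch.optim as optim

model = nn.Linear(4, 5)  # stand-in for CNN()
optimizer = optim.Adam(model.parameters(), lr=0.003)

# Hypothetical file name; the keys "epoch", "model", "optimizer" are also assumptions
path = os.path.join(tempfile.gettempdir(), "checkpoint.pth")
# Save weights, optimizer state (e.g. Adam moment estimates) and the epoch counter together
torch.save({"epoch": 7,
            "model": model.state_dict(),
            "optimizer": optimizer.state_dict()}, path)

# Later: rebuild the objects first, then restore their state
restored = nn.Linear(4, 5)
restored_opt = optim.Adam(restored.parameters(), lr=0.003)
ckpt = torch.load(path, map_location="cpu")
restored.load_state_dict(ckpt["model"])
restored_opt.load_state_dict(ckpt["optimizer"])
start_epoch = ckpt["epoch"]
print(start_epoch, torch.equal(restored.weight, model.weight))  # 7 True
```

Training would then continue with `for epoch in range(start_epoch, num_epochs): ...`, so the Adam state does not restart from zero.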

Test the model

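```python
# Take the first batch of the test set
batch = next(iter(test_loader))
imgs = batch[0]
model.eval()  # use BatchNorm running statistics instead of per-batch statistics
with torch.no_grad():
    outputs = model(imgs)
_, predicted = torch.max(outputs, 1)
predicted = predicted.numpy()
# Titles show the predicted class; wrong predictions are red, with the true class in parentheses
visualize_loader(batch, predicted)
```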
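Besides the argmax, a softmax over the logits gives a per-class probability that indicates how confident the model is in each prediction. Below is a sketch that turns a batch of logits into labels with confidences. It assumes the class order printed earlier. `top_predictions` is a hypothetical helper, and the logits are made-up values for illustration, not outputs of the trained model.

```python
import torch

class_labels = ['daisy', 'dandelion', 'rose', 'sunflower', 'tulip']


def top_predictions(logits: torch.Tensor, labels):
    """Hypothetical helper: map (batch, num_classes) logits to (label, probability) pairs."""
    probs = torch.softmax(logits, dim=1)
    conf, idx = probs.max(dim=1)
    return [(labels[i], round(c, 3)) for i, c in zip(idx.tolist(), conf.tolist())]


# Illustrative logits, not produced by the trained model
logits = torch.tensor([[2.0, 0.0, 0.0, 0.0, 0.0],
                       [0.0, 0.0, 0.0, 3.0, 0.0]])
print(top_predictions(logits, class_labels))
```

With the real model, `top_predictions(outputs, class_labels)` can be applied to the `outputs` computed in the cell above to see which test images the model is unsure about.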

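The model has reached a reasonable accuracy (65.4% on the test set after 7 epochs) and can roughly recognize the flowers. Because of limited computing resources, I did not continue training.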
