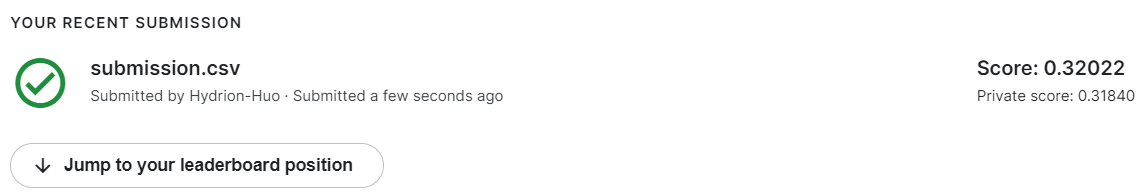

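Here is the final result first. The competition is Kaggle's Classify Leaves, hosted by Mu Li (李沐). It has already closed, but it is still a good way to get familiar with the basic workflow. There are 176 classes in total, so random guessing would score about 1/176 ≈ 0.0057. My final predictions reached roughly 30% accuracy using the plain AlexNet with only a little preprocessing (random horizontal flips and the like). I'm fairly happy with that result; next I plan to keep improving the model and hopefully push the accuracy to around 70%.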
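The chance baseline is simply 1/176. A quick check, where 0.30 is the accuracy reported above and the variable names are illustrative only:

```python
# Baseline accuracy of uniform random guessing over the 176 leaf classes
num_classes = 176
random_acc = 1 / num_classes
print(f"random guess accuracy: {random_acc:.4f}")  # random guess accuracy: 0.0057

# How far above chance a 30% accuracy is
my_acc = 0.30
print(f"{my_acc / random_acc:.1f}x better than chance")  # 52.8x better than chance
```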

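I'm really excited. It took me about a week to write my first working model from start to finish. I started out not knowing how to load the data, let alone how to load, train, and run a model, and ended up generating a prediction file. No matter how much you read, nothing beats typing it out yourself.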

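I had gone through Andrew Ng's Machine Learning course and part of his Deep Learning course, then switched to Mu Li's Dive into Deep Learning. Andrew Ng's videos are all theory; there are exercises, but I only did some of them. With Mu Li's course I typed along with the code in the early chapters and assumed I had at least the basics down, but actually doing it on my own was torture. For one, I had always used Java and only knew a little Python; for another, I wasn't familiar with PyTorch; and I had only ever followed someone else's workflow step by step, never written anything from scratch. So, um, the whole process was very, very painful.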

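All I could do was ask classmates for a rough direction and then read documentation, blog posts, source code, and comments, chewing through it bit by bit until I finally ground out this model. Plenty of the code is surely pretty outrageous, but at the time I just didn't know how else to get it working; I'll clean it up when I revise it later. I hope to write code like Mu Li someday. Not even the same level: 60% of his quality would already be great. His code is really elegant. Hats off.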

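Here is the link to the column for the Dive into Deep Learning series. It uses PyTorch and is excellent; if you stick with it all the way through, you will definitely learn a lot.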

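The code below is just a personal keepsake, so there's no need to read any further. I don't know if anyone will read it, but as a newcomer to this field I feel that, although it isn't very good, it does implement part of the functionality, and I'll improve it later. Probably nobody will read it and it will just sit here as a memento, hhhh. If you've made it this far, I wish you a smooth research journey, lots of ideas, and lots of papers!!!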

    import os

    os.chdir('/learn/dive-into-deep-learning/src/competition_2')

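    # -*- coding: utf-8 -*-
    """
    Author: Jia
    Date: 2022-03-14
    Description: custom Dataset class for reading in the data
    """
    import matplotlib.pyplot as plt
    import numpy as np
    import torch
    from PIL import Image
    from torch.utils.data import Dataset


    class CustomDataSet(Dataset):
        def __init__(self, image_path, image_class, transform=None, device="cpu"):
            self.image_path = image_path    # array of image file paths
            self.image_class = image_class  # one-hot label rows, one per image
            self.transform = transform
            self.device = device            # device that __getitem__ moves tensors to

        def show_img(self, index):
            # Display the raw image; a PIL Image can't be indexed, so pass it to imshow directly
            plt.subplots(1, 1)
            img = Image.open(self.image_path[index])
            plt.imshow(img)
            plt.show()

        def __getitem__(self, index):
            img = Image.open(self.image_path[index])
            if img.mode != 'RGB':
                raise ValueError("image:{} isn't RGB mode.".format(self.image_path[index]))
            # Turn the one-hot row into the integer class index that CrossEntropyLoss expects
            label = torch.tensor(np.argmax(self.image_class[index])).to(self.device)
            if self.transform is not None:
                img = self.transform(img)
            # Moving tensors to the GPU inside __getitem__ only works with num_workers=0
            return img.to(self.device), label

        def __len__(self):
            return len(self.image_path)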
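`CustomDataSet` follows PyTorch's map-style dataset protocol: `__len__` gives the sample count and `__getitem__` returns one (input, label) pair. The sketch below mimics that protocol without PyTorch so the one-hot-to-index decoding can be checked in isolation; `ToyLeafDataset`, the `argmax` helper, and the sample paths are hypothetical.

```python
def argmax(values):
    """Index of the largest element (first one on ties), like np.argmax on a 1-D input."""
    return max(range(len(values)), key=lambda i: values[i])


class ToyLeafDataset:
    """Hypothetical stand-in for CustomDataSet: returns paths instead of image tensors."""

    def __init__(self, image_paths, one_hot_labels):
        self.image_paths = image_paths
        self.one_hot_labels = one_hot_labels

    def __len__(self):
        return len(self.image_paths)

    def __getitem__(self, index):
        # Decode the one-hot row back into an integer class index
        label = argmax(self.one_hot_labels[index])
        return self.image_paths[index], label


ds = ToyLeafDataset(
    ["images/0.jpg", "images/1.jpg"],
    [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
)
print(len(ds))  # 2
print(ds[1])    # ('images/1.jpg', 2)
```

Storing integer labels directly would make the decoding step unnecessary; the one-hot form is kept here only to mirror how the post builds its labels.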

    import numpy as np
    import pandas as pd
    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torchvision.models as model
    from torch.utils.data import DataLoader
    from torchvision import transforms

    first_train = True  # True: train and save the model; False: load alexNet.params instead

    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

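    # Training transform: random square crop at a random scale, random horizontal flip,
    # then convert to a tensor and normalize (these mean/std values are the ones commonly
    # used for CIFAR-10, not statistics computed on this dataset)
    train_transform = transforms.Compose([
        transforms.RandomResizedCrop(224, scale=(0.64, 1.0), ratio=(1, 1)),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.4914, 0.4822, 0.4465], [0.2023, 0.1994, 0.2010])
    ])

    # Test transform: no augmentation, only tensor conversion and the same normalization
    test_transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize([0.4914, 0.4822, 0.4465], [0.2023, 0.1994, 0.2010])
    ])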
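To see what the two transforms do numerically, here is a small arithmetic sketch. It ASSUMES 224x224 source images (an assumption, not something the post states). In `RandomResizedCrop`, `scale` bounds the crop's area as a fraction of the image area and `ratio=(1, 1)` forces a square, so the crop side is roughly `sqrt(fraction * area)` before being resized back to 224. `Normalize` then applies `(x - mean) / std` per channel to the `[0, 1]` values produced by `ToTensor()`. The helper `normalize_pixel` is hypothetical.

```python
import math

# Crop side range for RandomResizedCrop(224, scale=(0.64, 1.0), ratio=(1, 1)),
# assuming a 224x224 input image
h = w = 224
area = h * w
min_side = round(math.sqrt(0.64 * area))
max_side = round(math.sqrt(1.0 * area))
print(min_side, max_side)  # 179 224

# Per-channel normalization as done by transforms.Normalize(mean, std)
mean = [0.4914, 0.4822, 0.4465]
std = [0.2023, 0.1994, 0.2010]


def normalize_pixel(rgb):
    """Normalize one RGB pixel whose channels are already scaled to [0, 1]."""
    return [(x - m) / s for x, m, s in zip(rgb, mean, std)]


print(normalize_pixel([0.5, 0.5, 0.5]))
```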

    train_data = pd.read_csv('train.csv')
    # Total number of classes, which sets the size of the network's output layer
    num_classes = len(train_data['label'].unique())

    # One-hot encode the label column (one column per class name)
    train_data = pd.get_dummies(train_data, columns=['label'], prefix="", prefix_sep="")
    print(train_data.shape)

(18353, 177)
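The 177 columns are `image` plus one column per class. `pd.get_dummies` orders the new columns by the sorted class names, which is what later lets a predicted index `i` be mapped back to `labels[i]`. A rough pure-Python equivalent on a hypothetical four-row sample (not the real data):

```python
# Hypothetical label column with a repeated class
labels = ["quercus_alba", "acer_rubrum", "quercus_alba", "ginkgo_biloba"]

classes = sorted(set(labels))  # column order after get_dummies
one_hot = [[1 if c == y else 0 for c in classes] for y in labels]

print(classes)     # ['acer_rubrum', 'ginkgo_biloba', 'quercus_alba']
print(one_hot[0])  # [0, 0, 1]

# Because the columns are sorted, class index i always means classes[i]
index_of = {c: i for i, c in enumerate(classes)}
print(index_of["ginkgo_biloba"])  # 1
```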

    # Build the Dataset: column 0 is the image path, the remaining 176 columns are the one-hot label
    np_train_data = np.array(train_data)
    print(np_train_data.shape)
    image_path = np_train_data[:, 0]
    image_label = np_train_data[:, 1:].astype(float)
    train_set = CustomDataSet(image_path, image_label, train_transform, device)

(18353, 177)

    # Number of available GPUs
    torch.cuda.device_count()

8

    batch_size = 8
    train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True, drop_last=True)
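With the 18353 training rows printed above and `batch_size=8`, `drop_last=True` discards the final partial batch. A quick count of what one epoch looks like (plain arithmetic, not a PyTorch call):

```python
import math

num_samples = 18353
batch_size = 8

batches_per_epoch = num_samples // batch_size  # drop_last=True drops the partial batch
dropped = num_samples - batches_per_epoch * batch_size
print(batches_per_epoch, dropped)  # 2294 1

# With drop_last=False the smaller last batch would be kept instead
print(math.ceil(num_samples / batch_size))  # 2295

# The training loop logs when step % 50 == 49, i.e. this many times per epoch
print(batches_per_epoch // 50)  # 45
```

Since `shuffle=True`, the single dropped sample is a different one in each epoch.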

    alexnet = model.alexnet(num_classes=num_classes)  # untrained AlexNet with a 176-way output layer
    alexnet.train()  # switch to training mode (dropout enabled)

    AlexNet(
      (features): Sequential(
        (0): Conv2d(3, 64, kernel_size=(11, 11), stride=(4, 4), padding=(2, 2))
        (1): ReLU(inplace=True)
        (2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
        (3): Conv2d(64, 192, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
        (4): ReLU(inplace=True)
        (5): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
        (6): Conv2d(192, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (7): ReLU(inplace=True)
        (8): Conv2d(384, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (9): ReLU(inplace=True)
        (10): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (11): ReLU(inplace=True)
        (12): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (avgpool): AdaptiveAvgPool2d(output_size=(6, 6))
      (classifier): Sequential(
        (0): Dropout(p=0.5, inplace=False)
        (1): Linear(in_features=9216, out_features=4096, bias=True)
        (2): ReLU(inplace=True)
        (3): Dropout(p=0.5, inplace=False)
        (4): Linear(in_features=4096, out_features=4096, bias=True)
        (5): ReLU(inplace=True)
        (6): Linear(in_features=4096, out_features=176, bias=True)
      )
    )
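The `in_features=9216` of the first classifier layer comes from 256 channels times a 6x6 feature map. The sketch below traces the spatial size of a 224x224 input through the layers printed above with the standard formula `out = floor((in + 2*padding - kernel) / stride) + 1` (dilation 1, `ceil_mode=False`); `out_size` is a hypothetical helper.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output side length of a square conv/max-pool layer (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


s = 224
s = out_size(s, 11, 4, 2)  # conv1   -> 55
s = out_size(s, 3, 2)      # maxpool -> 27
s = out_size(s, 5, 1, 2)   # conv2   -> 27
s = out_size(s, 3, 2)      # maxpool -> 13
s = out_size(s, 3, 1, 1)   # conv3   -> 13
s = out_size(s, 3, 1, 1)   # conv4   -> 13
s = out_size(s, 3, 1, 1)   # conv5   -> 13
s = out_size(s, 3, 2)      # maxpool -> 6
print(s, 256 * s * s)      # 6 9216
```

For 224x224 inputs the `AdaptiveAvgPool2d((6, 6))` leaves the 6x6 map unchanged; for other input sizes it is what forces the map back to 6x6.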

    # Initialize the weights of every fully connected layer from N(0, 0.01^2)
    def init_weights(m):
        if isinstance(m, nn.Linear):
            torch.nn.init.normal_(m.weight, std=0.01)

    alexnet.apply(init_weights)


    # Move the model to the selected device (cuda:0 when a GPU is available)
    alexnet.to(device=device)


    # Hyperparameters
    epochs = 5
    learning_rate = 0.02

    # Loss function and optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.SGD(alexnet.parameters(), lr=learning_rate)
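`nn.CrossEntropyLoss` takes raw logits and an integer class index and computes `-log softmax(logits)[target]`, which is why the model has no softmax layer and the dataset returns an index rather than a one-hot vector. A dependency-free sketch of that formula and of a plain SGD update (no momentum or weight decay, matching `optim.SGD(params, lr=0.02)`); `cross_entropy` and `sgd_step` are hypothetical helpers:

```python
import math


def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# Equal logits over 176 classes give ln(176), a rough expectation for the very
# first logged losses when the output layer starts with small weights
print(f"{cross_entropy([0.0] * 176, 0):.2f}")  # 5.17


def sgd_step(weights, grads, lr=0.02):
    """One vanilla SGD update: w <- w - lr * grad."""
    return [w - lr * g for w, g in zip(weights, grads)]


print(sgd_step([1.0, -0.5], [0.5, -1.0]))
```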

    # Sanity check: inspect the shapes and labels of one batch
    for step, data in enumerate(train_loader):
        print(data[0].shape, data[1])
        break

torch.Size([8, 3, 224, 224]) tensor([173, 58, 153, 101, 45, 125, 54, 150], device='cuda:0')

    if first_train:
        with open('log.txt', 'w') as f:
            for epoch in range(epochs):
                running_loss = 0.0
                for step, (X, y) in enumerate(train_loader):
                    # Reset the gradients
                    optimizer.zero_grad()
                    # forward + backward + optimize
                    loss = criterion(alexnet(X), y)
                    loss.backward()
                    optimizer.step()

                    # Log the average loss over the last 50 steps
                    running_loss += loss.item()
                    if step % 50 == 49:
                        msg = '[%d, %5d] loss: %.3f' % (epoch + 1, step + 1, running_loss / 50)
                        print(msg)
                        f.write(msg + "\n")
                        running_loss = 0.0
        # Save the trained parameters
        torch.save(alexnet.state_dict(), "alexNet.params")
    else:
        alexnet.load_state_dict(torch.load('alexNet.params', map_location=device))

    # Evaluation mode turns dropout off; needed after training as well as after loading
    alexnet.eval()
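The loss logging in the training loop accumulates `loss.item()` and, every 50 steps, reports the mean over that window and resets. The divisor has to equal the window size, otherwise the printed value is off by a constant factor (dividing by 500, for instance, shows a loss 10x too small). A stand-alone sketch of the pattern; `windowed_means` and the loss values are made up for illustration:

```python
def windowed_means(losses, log_every=50):
    """Mean loss over each complete window of `log_every` steps."""
    means = []
    running = 0.0
    for step, loss in enumerate(losses):
        running += loss
        if step % log_every == log_every - 1:
            means.append(running / log_every)
            running = 0.0
    return means


fake_losses = [5.0] * 50 + [4.0] * 50  # made-up values
print(windowed_means(fake_losses))     # [5.0, 4.0]
```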

    # Generate the prediction file
    test_data = pd.read_csv("test.csv")
    test_data_img_path = test_data['image'].values
    print(type(test_data_img_path))
    print(test_data_img_path)
    # The test set has no labels, so dummy zeros are passed as placeholders
    test_data_set = CustomDataSet(test_data_img_path, np.zeros_like(test_data_img_path),
                                  transform=test_transform, device=device)

    <class 'numpy.ndarray'>
    ['images/18353.jpg' 'images/18354.jpg' 'images/18355.jpg' ...
     'images/27150.jpg' 'images/27151.jpg' 'images/27152.jpg']

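    test_data_loader = DataLoader(test_data_set, batch_size=batch_size)

    pred = []
    with torch.no_grad():  # no gradients are needed for inference
        for X, _ in test_data_loader:
            y_hat = alexnet(X.to(device))
            # Index of the highest-scoring class for each image in the batch
            pred.extend(y_hat.argmax(dim=1).type(torch.int32).cpu().numpy())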
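Per batch, the prediction step picks the highest-scoring class in each row of logits (`y_hat.argmax(dim=1)`), and the indices are later looked up in the sorted class-name list (`labels[pred]`). A PyTorch-free sketch with made-up scores and a hypothetical three-class subset of the names:

```python
# Made-up logits for a batch of 2 images and 3 classes
logits_batch = [
    [0.1, 2.3, -1.0],
    [1.5, 0.2, 0.9],
]
label_names = ["acer_rubrum", "ginkgo_biloba", "quercus_alba"]  # hypothetical subset

pred = [max(range(len(row)), key=row.__getitem__) for row in logits_batch]
print(pred)                            # [1, 0]
print([label_names[i] for i in pred])  # ['ginkgo_biloba', 'acer_rubrum']
```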

    # np_pred = np.array(pred)
    # np_pred.shape

    # Class names in one-hot column order, so labels[i] is the name of class index i
    labels = train_data.columns.values[1:]
    labels

    array(['abies_concolor', 'abies_nordmanniana', 'acer_campestre',
           'acer_ginnala', 'acer_griseum', 'acer_negundo', 'acer_palmatum',
           'acer_pensylvanicum', 'acer_platanoides', 'acer_pseudoplatanus',
           'acer_rubrum', 'acer_saccharinum', 'acer_saccharum',
           'aesculus_flava', 'aesculus_glabra', 'aesculus_hippocastamon',
           'aesculus_pavi', 'ailanthus_altissima', 'albizia_julibrissin',
           'amelanchier_arborea', 'amelanchier_canadensis',
           'amelanchier_laevis', 'asimina_triloba', 'betula_alleghaniensis',
           'betula_jacqemontii', 'betula_lenta', 'betula_nigra',
           'betula_populifolia', 'broussonettia_papyrifera',
           'carpinus_betulus', 'carpinus_caroliniana', 'carya_cordiformis',
           'carya_glabra', 'carya_ovata', 'carya_tomentosa',
           'castanea_dentata', 'catalpa_bignonioides', 'catalpa_speciosa',
           'cedrus_atlantica', 'cedrus_deodara', 'cedrus_libani',
           'celtis_occidentalis', 'celtis_tenuifolia',
           'cercidiphyllum_japonicum', 'cercis_canadensis',
           'chamaecyparis_pisifera', 'chamaecyparis_thyoides',
           'chionanthus_retusus', 'chionanthus_virginicus',
           'cladrastis_lutea', 'cornus_florida', 'cornus_kousa', 'cornus_mas',
           'crataegus_crus-galli', 'crataegus_laevigata',
           'crataegus_phaenopyrum', 'crataegus_pruinosa', 'crataegus_viridis',
           'cryptomeria_japonica', 'diospyros_virginiana',
           'eucommia_ulmoides', 'evodia_daniellii', 'fagus_grandifolia',
           'ficus_carica', 'fraxinus_nigra', 'fraxinus_pennsylvanica',
           'ginkgo_biloba', 'gleditsia_triacanthos', 'gymnocladus_dioicus',
           'halesia_tetraptera', 'ilex_opaca', 'juglans_cinerea',
           'juglans_nigra', 'juniperus_virginiana', 'koelreuteria_paniculata',
           'larix_decidua', 'liquidambar_styraciflua',
           'liriodendron_tulipifera', 'maclura_pomifera',
           'magnolia_acuminata', 'magnolia_denudata', 'magnolia_grandiflora',
           'magnolia_macrophylla', 'magnolia_stellata', 'magnolia_tripetala',
           'magnolia_virginiana', 'malus_baccata', 'malus_coronaria',
           'malus_floribunda', 'malus_hupehensis', 'malus_pumila',
           'metasequoia_glyptostroboides', 'morus_alba', 'morus_rubra',
           'nyssa_sylvatica', 'ostrya_virginiana', 'oxydendrum_arboreum',
           'paulownia_tomentosa', 'phellodendron_amurense', 'picea_abies',
           'picea_orientalis', 'picea_pungens', 'pinus_bungeana',
           'pinus_cembra', 'pinus_densiflora', 'pinus_echinata',
           'pinus_flexilis', 'pinus_koraiensis', 'pinus_nigra',
           'pinus_parviflora', 'pinus_peucea', 'pinus_pungens',
           'pinus_resinosa', 'pinus_rigida', 'pinus_strobus',
           'pinus_sylvestris', 'pinus_taeda', 'pinus_thunbergii',
           'pinus_virginiana', 'pinus_wallichiana', 'platanus_acerifolia',
           'platanus_occidentalis', 'populus_deltoides',
           'populus_grandidentata', 'populus_tremuloides',
           'prunus_pensylvanica', 'prunus_sargentii', 'prunus_serotina',
           'prunus_serrulata', 'prunus_subhirtella', 'prunus_virginiana',
           'prunus_yedoensis', 'pseudolarix_amabilis', 'ptelea_trifoliata',
           'pyrus_calleryana', 'quercus_acutissima', 'quercus_alba',
           'quercus_bicolor', 'quercus_cerris', 'quercus_coccinea',
           'quercus_imbricaria', 'quercus_macrocarpa', 'quercus_marilandica',
           'quercus_michauxii', 'quercus_montana', 'quercus_muehlenbergii',
           'quercus_nigra', 'quercus_palustris', 'quercus_phellos',
           'quercus_robur', 'quercus_shumardii', 'quercus_stellata',
           'quercus_velutina', 'quercus_virginiana', 'robinia_pseudo-acacia',
           'salix_babylonica', 'salix_caroliniana', 'salix_matsudana',
           'salix_nigra', 'sassafras_albidum', 'staphylea_trifolia',
           'stewartia_pseudocamellia', 'styrax_japonica',
           'taxodium_distichum', 'tilia_americana', 'tilia_cordata',
           'tilia_europaea', 'tilia_tomentosa', 'tsuga_canadensis',
           'ulmus_americana', 'ulmus_glabra', 'ulmus_parvifolia',
           'ulmus_procera', 'ulmus_pumila', 'ulmus_rubra', 'zelkova_serrata'],
          dtype=object)

    # Map the predicted class indices back to names and build the submission table
    df = pd.DataFrame({'image': test_data_img_path, 'label': labels[pred]})
    df

|  | image | label |
|---|---|---|
| 0 | images/18353.jpg | prunus_virginiana |
| 1 | images/18354.jpg | eucommia_ulmoides |
| 2 | images/18355.jpg | acer_pseudoplatanus |
| 3 | images/18356.jpg | abies_concolor |
| 4 | images/18357.jpg | platanus_occidentalis |
| ... | ... | ... |
| 8795 | images/27148.jpg | abies_concolor |
| 8796 | images/27149.jpg | amelanchier_canadensis |
| 8797 | images/27150.jpg | abies_concolor |
| 8798 | images/27151.jpg | juniperus_virginiana |
| 8799 | images/27152.jpg | prunus_virginiana |

8800 rows × 2 columns

    df.to_csv('submission.csv', index=False)
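The submission file is just a header row `image,label` followed by one row per test image, with no index column (that is what `index=False` does). For reference, the same layout written with the standard `csv` module, using two rows from the table above; writing to an in-memory buffer is only for illustration:

```python
import csv
import io

rows = [("images/18353.jpg", "prunus_virginiana"),
        ("images/18354.jpg", "eucommia_ulmoides")]

buf = io.StringIO()  # use open("submission.csv", "w", newline="") to write a real file
writer = csv.writer(buf, lineterminator="\n")
writer.writerow(["image", "label"])
writer.writerows(rows)
print(buf.getvalue())
```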
