Introduction

Core ML is a machine learning framework that Apple introduced at WWDC 2017.

It lets iOS developers add real-time, personalized experiences to their apps with on-device machine learning models, which can take advantage of the Neural Engine.

A11 Bionic Chip Overview

Number of transistors: 4.3 billion

Number of cores: 6 ARM cores (64-bit): 2 fast (2.4 GHz) and 4 low-energy

Graphics processing: a custom 3-core GPU

Neural Engine: up to 600 billion operations per second

Apple introduced the A11 Bionic chip with its Neural Engine on September 12, 2017. This neural network hardware can perform up to 600 billion operations per second and is used for Face ID, Animoji, and other machine learning tasks. Developers do not program the Neural Engine directly; they go through the Core ML API, which chooses the compute units a model runs on.

Core ML optimizes on-device performance by leveraging the CPU, GPU, and Neural Engine while minimizing its memory footprint and power consumption.

Running a model strictly on the user’s device removes any need for a network connection, which helps keep the user’s data private and your app responsive.

Core ML is the foundation for domain-specific frameworks and functionality. Core ML supports Vision for analyzing images, Natural Language for processing text, Speech for converting audio to text, and Sound Analysis for identifying sounds in audio.

We can automate the work of building a machine learning model, including training and testing, in an Xcode playground, and then integrate the resulting model file into our iOS project.

Starter Tip 📝: In machine learning, classification problems have discrete labels.

Well, what are we going to build?

In this tutorial, we will build an image classifier model that can tell images of oranges from images of strawberries, and then add the model to our iOS application with Core ML.

Starter Tip 📝: Image classification is a supervised machine learning task, in which we use labeled data (in our case, the label is the name of the folder that contains the image).

Prerequisites:

- Proficiency in Swift 💖

- iOS development basics

- Object-oriented programming concepts

Software:

- Xcode 10 or later

- iOS 11.0+ SDK

- macOS 10.14 or later (Create ML requires macOS Mojave)

Gathering the Data Set

When gathering a data set for image classification, follow the guidelines below, which Apple recommends.

- Aim for a minimum of 10 images per category; the more, the better.

- Avoid highly unbalanced data sets by preparing a roughly equal number of images for each category.

- Make your model more robust by enabling the Create ML UI's Augmentation options: Crop, Rotate, Blur, Expose, Noise, and Flip.

- Include redundancy in your training set: take lots of images at different angles, on different backgrounds, and in different lighting conditions. Simulate real-world camera capture, including noise and motion blur.

- Photograph sample objects in your hand to simulate real-world users who try to classify objects in their hands.

- Remove other objects from view, especially ones that you would like to classify differently.

Once you have gathered your data set, split it into a training data set and a testing data set, and place each in its own directory 📁

IMPORTANT NOTE ⚠: Inside both the training and the testing directory, place each image in the subdirectory for its category, because the folder name acts as the label for the images it contains.
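Because the folder name is the label, a quick check of the directory layout can catch mistakes before training. The following is a minimal sketch that uses only Foundation; the function name `labelCounts(in:)` is an illustrative assumption and not part of the original tutorial.

```swift
import Foundation

/// Returns a dictionary that maps each class label (subfolder name)
/// to the number of visible files found directly inside that subfolder.
/// Hypothetical helper, shown only to illustrate the folder-as-label layout.
func labelCounts(in datasetURL: URL) throws -> [String: Int] {
    let fileManager = FileManager.default
    var counts: [String: Int] = [:]

    let entries = try fileManager.contentsOfDirectory(
        at: datasetURL,
        includingPropertiesForKeys: [.isDirectoryKey],
        options: [.skipsHiddenFiles]
    )

    for entry in entries {
        // Only subdirectories count as labels; loose files are ignored.
        let isDirectory = try entry.resourceValues(forKeys: [.isDirectoryKey]).isDirectory == true
        guard isDirectory else { continue }

        let files = try fileManager.contentsOfDirectory(
            at: entry,
            includingPropertiesForKeys: nil,
            options: [.skipsHiddenFiles]
        )
        counts[entry.lastPathComponent] = files.count
    }
    return counts
}
```

Calling it on the training directory should list one entry per category, for example `Orange` and `Strawberry`, with roughly equal counts if the data set is balanced.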

Building a Model 🔨⚒

Don't panic! Apple has made this task much simpler by automating the major steps.

With Core ML you can use an already trained machine learning model or build your own model to classify input data. The Vision framework works with Core ML to apply classification models to images, and to pre-process those images to make machine learning tasks easier and more reliable.

Just follow the steps below.

Step 1: Open Xcode 🛠

Step 2: Create a blank Swift playground. Choose the macOS platform, because the model builder runs on the Mac.

Step 3: Clear the default code, add the following program, and run the playground.

Description:

Here we open the default model builder interface provided by Xcode.
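The program for Step 3 is not reproduced in this text. A minimal sketch of what such a playground usually contains is shown below, assuming a macOS playground in Xcode 10 on macOS 10.14, where the CreateMLUI framework is available; later Xcode releases moved this workflow into the standalone Create ML app.

```swift
import CreateMLUI

// Create the drag-and-drop image classifier builder
// and show it in the playground's live view (Assistant Editor).
let builder = MLImageClassifierBuilder()
builder.showInLiveView()
```

After running the playground, open the Assistant Editor to see the builder's training area.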

Step 4: Drag the training directory into the training area.

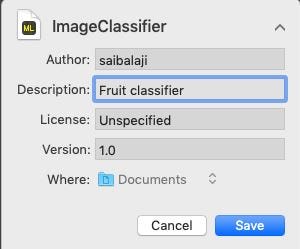

Starter Tip 📝: We can also give our model a custom name by clicking the down arrow in the training area.

Step 5: Xcode automatically processes the images and starts training. By default, training runs for 10 iterations; the time it takes depends on your Mac's specs and the size of the data set. You can follow the training progress in the playground's console.

Step 6: Once training is complete, you can test your model by dragging the testing directory into the testing area. Xcode automatically tests your model and displays the result.

Step 7: Save 💾 your model.
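The same train, test, and save sequence can also be scripted instead of using drag and drop. The sketch below assumes the CreateML framework on macOS 10.14 or later; the directory paths and the `FruitClassifier.mlmodel` file name are placeholders chosen for illustration, not values from the original tutorial.

```swift
import CreateML
import Foundation

// Placeholder paths: point these at your own training and testing directories.
let trainingDir = URL(fileURLWithPath: "/path/to/Train")
let testingDir = URL(fileURLWithPath: "/path/to/Test")
let outputURL = URL(fileURLWithPath: "/path/to/FruitClassifier.mlmodel")

do {
    // Train on images labeled by the name of their containing folder.
    let classifier = try MLImageClassifier(
        trainingData: .labeledDirectories(at: trainingDir)
    )

    // Evaluate on the held-out testing directory.
    let evaluation = classifier.evaluation(on: .labeledDirectories(at: testingDir))
    print("Test classification error: \(evaluation.classificationError)")

    // Save the trained model so it can be dragged into the iOS project.
    try classifier.write(to: outputURL)
} catch {
    print("Training failed: \(error)")
}
```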

iOS App Integration:

Step 1: Open Xcode 🛠.

Step 2: Create a Single View App iOS project 📱.

Step 3: Open the project navigator 🧭.

Step 4: Drag and drop the trained model into the project navigator.

Step 5: Open Main.storyboard, create a simple interface as shown below, and add the IBOutlets and IBActions for the corresponding views.

Step 6: Open the ViewController.swift file and add the following code as an extension.

Description: Here we create an extension for our ViewController class and conform to UINavigationControllerDelegate and UIImagePickerControllerDelegate so that a UIImagePickerController is presented when the user taps the PickImage UIButton. Make sure you set the picker's delegate to the view controller (self).
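The extension itself is not reproduced in this text, so the following is only a sketch of one way to write it, assuming Swift 4.2 or later. The outlet name `imageView` and the action name `pickImage(_:)` are assumptions; the class stub is included only so the snippet is complete, and in a real project the extension would be added to the existing ViewController.

```swift
import UIKit

class ViewController: UIViewController {
    // Assumed outlet and action names; connect them in Main.storyboard.
    @IBOutlet weak var imageView: UIImageView!

    @IBAction func pickImage(_ sender: UIButton) {
        presentImagePicker()
    }
}

extension ViewController: UIImagePickerControllerDelegate, UINavigationControllerDelegate {

    /// Presents the system photo library picker.
    func presentImagePicker() {
        let picker = UIImagePickerController()
        picker.delegate = self            // the view controller receives the callbacks
        picker.sourceType = .photoLibrary
        present(picker, animated: true)
    }

    /// Called when the user has chosen a photo.
    func imagePickerController(
        _ picker: UIImagePickerController,
        didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey: Any]
    ) {
        picker.dismiss(animated: true)
        guard let image = info[.originalImage] as? UIImage else { return }
        imageView.image = image
        // The picked image can now be handed to the classification code.
    }
}
```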

Steps Involved in Accessing the Core ML Model in the iOS App

Step 1: Make sure you import the following libraries.

Step 2: Create an instance of our Core ML model class.

Step 3: To make Core ML perform classification, we first create a request of type VNCoreMLRequest (VN stands for Vision 👁).

Step 4: Make sure the image is cropped and scaled so that it is compatible with the Core ML model.

Step 5: Place the above code inside a user-defined function that returns the request object.
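Steps 1 to 5 can be sketched as a single function. The original code is not shown in this text, so the function name `makeClassificationRequest(for:completion:)` and the choice of `.centerCrop` are assumptions; the function takes an `MLModel` so that it does not depend on the name of the class Xcode generates from the model file.

```swift
import CoreML
import Vision

/// Wraps a Core ML model in a Vision request.
/// Hypothetical helper; `completion` runs when classification finishes.
func makeClassificationRequest(
    for mlModel: MLModel,
    completion: @escaping (VNRequest, Error?) -> Void
) throws -> VNCoreMLRequest {
    // Vision needs its own wrapper around the Core ML model.
    let visionModel = try VNCoreMLModel(for: mlModel)

    let request = VNCoreMLRequest(model: visionModel, completionHandler: completion)

    // Crop and scale the input so it matches the size the model expects.
    request.imageCropAndScaleOption = .centerCrop
    return request
}
```

If the model file were named `FruitClassifier.mlmodel` (a hypothetical name), the generated class would expose the underlying model as `FruitClassifier().model`, which is the value to pass as `mlModel`.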

Step 6: Now we convert our UIImage to a CIImage (CI: Core Image) so that it can be used as input for our Core ML model. This is done by passing the UIImage to the CIImage initializer.

Step 7: Now we can handle our VNCoreMLRequest by creating a request handler and passing it the ciImage.

Step 8: The request is executed by calling the handler's perform() method and passing the VNCoreMLRequest in its array of requests.

Description: DispatchQueue is an object that manages the execution of tasks serially or concurrently on your app's main thread or on a background thread.

Step 10: Place the above code in a user-defined function as shown below.
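A sketch of Steps 6 to 10 follows, under the assumption that the request was created beforehand; the function name `classify(_:with:)` is illustrative. Image orientation is ignored here for brevity, which may affect results for photos taken in portrait.

```swift
import UIKit
import Vision

/// Runs a Vision request on the given image off the main thread.
/// Hypothetical helper; results arrive in the request's completion handler.
func classify(_ image: UIImage, with request: VNCoreMLRequest) {
    // CIImage(image:) is failable, so bail out if conversion is not possible.
    guard let ciImage = CIImage(image: image) else {
        print("Could not create a CIImage from the UIImage")
        return
    }

    // Classification can be slow, so keep it off the main thread.
    DispatchQueue.global(qos: .userInitiated).async {
        let handler = VNImageRequestHandler(ciImage: ciImage, options: [:])
        do {
            try handler.perform([request])
        } catch {
            print("Failed to perform classification: \(error)")
        }
    }
}
```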

Step 11: Create a user-defined function called handleResult() that takes a VNRequest object and an error object as parameters. This function is called when the VNCoreMLRequest completes.

Note 📓: DispatchQueue.main.async is used to update UIKit objects (in our case, a UILabel) on the main (UI) thread, because the classification work is done on a background thread.
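One possible shape for handleResult() is sketched below. The extra `label` parameter and the `resultText(identifier:confidence:)` helper are assumptions made so the snippet stands alone; in the view controller the label would more likely be an outlet.

```swift
import UIKit
import Vision

/// Formats a label and its confidence as a short display string.
func resultText(identifier: String, confidence: Float) -> String {
    let percent = Int((confidence * 100).rounded())
    return "\(identifier) (\(percent)%)"
}

/// Reads the best classification from a finished request and shows it in a label.
func handleResult(_ request: VNRequest, error: Error?, updating label: UILabel) {
    let text: String
    // Classification observations are expected to be ordered by confidence,
    // so the first one is treated as the best guess.
    if let best = (request.results as? [VNClassificationObservation])?.first {
        text = resultText(identifier: best.identifier, confidence: best.confidence)
    } else {
        text = "Unable to classify image: \(error?.localizedDescription ?? "no results")"
    }

    // UIKit must only be touched on the main thread.
    DispatchQueue.main.async {
        label.text = text
    }
}
```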

Entire ViewController.swift code

All set!

Now fire up 🔥 your Simulator and test the app.

Note 📝: Make sure you have some pictures of oranges 🍊 and strawberries 🍓 in the photo library of your Simulator.

Hats off 🎉🙌🥳:

You have built your first iOS app using Core ML.
