Nested Cross-Validation and Hyperparameter Output

Cross-validation, also referred to as out-of-sample testing, is an essential element of a data science project. It is a resampling procedure used to evaluate machine learning models and assess how a model will perform on an independent test dataset.
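As a minimal sketch of the resampling idea, the snippet below runs 5-fold cross-validation with scikit-learn. The dataset (Iris), the classifier (`SVC`), and the fold count are assumptions chosen for illustration, not settings prescribed by this article.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import KFold, cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# 5-fold CV: each fold is held out once while the model trains on the other four.
cv = KFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(SVC(kernel="rbf"), X, y, cv=cv)

print("per-fold accuracy:", scores)
print("mean accuracy:", scores.mean())
```

The mean of the per-fold scores serves as the estimate of how the model would perform on data it has not seen.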

Hyperparameter optimization, or tuning, is the process of choosing the set of hyperparameters with which a machine learning algorithm performs best on a particular dataset.
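One common way to do this is an exhaustive grid search. The sketch below uses scikit-learn's `GridSearchCV`; the search space in `param_grid` is hypothetical and picked only to keep the example small.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# Hypothetical search space, chosen only for illustration.
param_grid = {"C": [1, 10, 100], "gamma": [0.01, 0.1]}

# Every combination in the grid is scored with 5-fold cross-validation.
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=5)
search.fit(X, y)

print("best hyperparameters:", search.best_params_)
print("best mean CV accuracy:", search.best_score_)
```

Note that `best_score_` is computed on the same folds that were used to pick the winning combination.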

Both cross-validation and hyperparameter optimization are important aspects of a data science project. Cross-validation is used to evaluate the performance of a machine learning algorithm, and hyperparameter tuning is used to find the best set of hyperparameters for that algorithm.

Model selection without nested cross-validation uses the same data to tune model hyperparameters and to evaluate model performance, which may lead to an optimistically biased evaluation of the model. Because information leaks from the evaluation data into the tuning step, the resulting error estimate is a poor guide to performance on unseen data. Nested cross-validation addresses this problem: an inner loop tunes the hyperparameters, and an outer loop evaluates the tuned model on data the inner loop never saw.
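A minimal sketch of nested cross-validation that also reports the hyperparameters chosen in each outer fold could look like the following. It assumes scikit-learn, the Iris dataset, an `SVC`, and an illustrative grid; the fold counts and random seeds are likewise arbitrary choices.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, KFold, cross_validate
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
param_grid = {"C": [1, 10, 100], "gamma": [0.01, 0.1]}  # illustrative grid

inner_cv = KFold(n_splits=4, shuffle=True, random_state=0)  # tunes hyperparameters
outer_cv = KFold(n_splits=4, shuffle=True, random_state=1)  # estimates performance

search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=inner_cv)

# Each outer fold refits the whole search on its own training split,
# so the outer test split never influences the choice of hyperparameters.
result = cross_validate(search, X, y, cv=outer_cv, return_estimator=True)

for fold, (est, score) in enumerate(zip(result["estimator"], result["test_score"])):
    print(f"outer fold {fold}: best_params={est.best_params_}, test accuracy={score:.3f}")
```

The outer folds may select different hyperparameters. The outer scores estimate the performance of the whole tuning procedure rather than of one fixed configuration.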

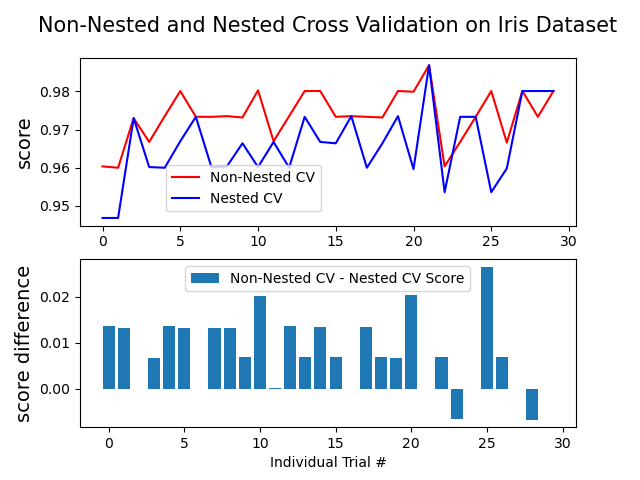

The performance plot below, from this article, compares non-nested and nested CV strategies on the Iris dataset using a Support Vector Classifier.
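A comparison of this kind can be approximated with the sketch below. It is not the code behind the plot: the grid, the number of trials, and the fold counts are assumptions chosen to keep the run short.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, KFold, cross_val_score
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
param_grid = {"C": [1, 10, 100], "gamma": [0.01, 0.1]}  # illustrative grid
n_trials = 5  # assumed number of repetitions, kept small for speed

non_nested = np.zeros(n_trials)
nested = np.zeros(n_trials)

for i in range(n_trials):
    inner_cv = KFold(n_splits=4, shuffle=True, random_state=i)
    outer_cv = KFold(n_splits=4, shuffle=True, random_state=i)

    search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=inner_cv)

    # Non-nested: the score comes from the same folds used for tuning.
    search.fit(X, y)
    non_nested[i] = search.best_score_

    # Nested: the search is re-run inside every outer training split.
    nested[i] = cross_val_score(search, X, y, cv=outer_cv).mean()

print("non-nested mean accuracy:", non_nested.mean())
print("nested mean accuracy:    ", nested.mean())
print("mean difference:         ", (non_nested - nested).mean())
```

Repeating the experiment over several shuffles shows how the two estimates vary from trial to trial, not only how they differ on one split.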
