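LearnOpenGL Study Notes, Advanced OpenGL 09: Geometry Shader

[Project repository: click here]

This section corresponds to the official LearnOpenGL chapter: Geometry Shader

1 Geometry shader basics

Between the vertex and fragment shaders there is an optional stage called the geometry shader. Its input is the set of vertices that form a single primitive (for example a point or a triangle).

The geometry shader can transform these vertices freely before they are sent to the next shader stage.

What makes it most interesting, though, is that it can convert the original primitive (this set of vertices) into a completely different primitive, and can even generate more vertices than it was given.

Without further ado, let's look at an example of a geometry shader right away: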

#version 330 core

layout (points) in;

layout (line_strip, max_vertices = 2) out;

void main() {

gl_Position = gl_in[0].gl_Position + vec4(-0.1, 0.0, 0.0, 0.0);

EmitVertex();

gl_Position = gl_in[0].gl_Position + vec4( 0.1, 0.0, 0.0, 0.0);

EmitVertex();

EndPrimitive();

}

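At the top of a geometry shader we need to declare the type of primitive input we receive from the vertex shader. We do this by placing a layout qualifier in front of the in keyword.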

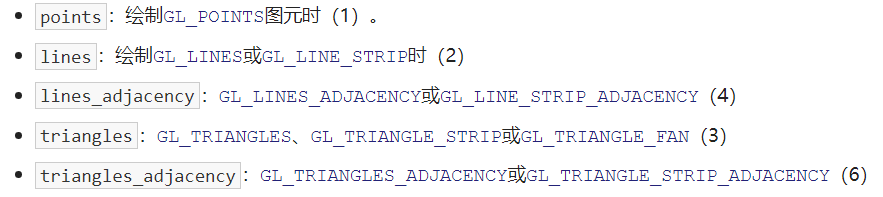

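This input layout qualifier can take any of the following primitive values:

- points: when drawing GL_POINTS primitives (1).
- lines: when drawing GL_LINES or GL_LINE_STRIP (2).
- lines_adjacency: GL_LINES_ADJACENCY or GL_LINE_STRIP_ADJACENCY (4).
- triangles: GL_TRIANGLES, GL_TRIANGLE_STRIP or GL_TRIANGLE_FAN (3).
- triangles_adjacency: GL_TRIANGLES_ADJACENCY or GL_TRIANGLE_STRIP_ADJACENCY (6).

These are almost all of the primitives we can pass to rendering functions such as glDrawArrays.

If we want to draw vertices as GL_TRIANGLES, we set the input qualifier to triangles.

The number in parentheses is the minimum number of vertices a single primitive contains.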

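We also need to specify the primitive type the geometry shader will output, by placing a layout qualifier in front of the out keyword. Like the input layout qualifier, the output layout qualifier can take several primitive values: points, line_strip and triangle_strip.

With just these 3 output qualifiers we can create almost any shape from the input primitives.

To generate a single triangle, for example, we specify triangle_strip as the output and emit 3 vertices.

The geometry shader also expects us to set the maximum number of vertices it outputs (if you exceed this number, OpenGL won't draw the extra vertices), which we can also do inside the layout qualifier of the out keyword.

In the example above we output a line_strip with a maximum of 2 vertices, so the shader can emit only a single line segment.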

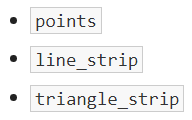

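- Line strip: a line strip binds together a set of points to form one continuous line between them, and it needs at least 2 points.

Each extra point given to the rendering call results in a new line between that point and the previous one. In the figure we have 5 vertices:

To generate more meaningful results, we need some way to retrieve the output of the previous shader stage. GLSL gives us a built-in variable that (probably) looks like this internally: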

in gl_Vertex

{

vec4 gl_Position;

float gl_PointSize;

float gl_ClipDistance[];

} gl_in[];

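Here it is declared as an interface block (discussed in the previous section) that contains a few interesting variables. The most interesting one is gl_Position, which holds a vector similar to the one we set as the vertex shader's output.

Note that it is declared as an array, because most render primitives contain more than 1 vertex and the geometry shader receives all vertices of a primitive as its input.

Using the vertex data from the vertex shader stage, we can generate new data with 2 geometry shader functions: EmitVertex and EndPrimitive.

The geometry shader expects you to generate and output at least one primitive of the type you specified as output.

In our case we want to generate at least one line strip primitive.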

void main() {

gl_Position = gl_in[0].gl_Position + vec4(-0.1, 0.0, 0.0, 0.0);

EmitVertex();

gl_Position = gl_in[0].gl_Position + vec4( 0.1, 0.0, 0.0, 0.0);

EmitVertex();

EndPrimitive();

}

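Each time we call EmitVertex, the vector currently set in gl_Position is added to the output primitive.

When EndPrimitive is called, all vertices emitted for this primitive are combined into the specified output render primitive.

By repeatedly calling EndPrimitive after one or more EmitVertex calls, multiple primitives can be generated.

In this example we emit two vertices that are translated by a small offset from the original vertex position and then call EndPrimitive, combining the two vertices into a single line strip of 2 vertices.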

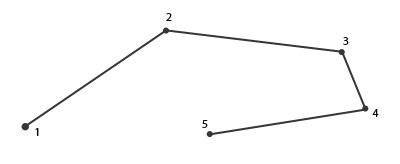

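Now that we (sort of) know how geometry shaders work, we can probably guess what this geometry shader does.

It takes a point primitive as its input and creates a horizontal line primitive with the input point at its center.

If we were to render this, it would look something like this:

Not very impressive yet, but it is interesting to consider that this output was generated using just the following render call: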

glDrawArrays(GL_POINTS, 0, 4);

While this is a relatively simple example, it does show how we can use geometry shaders to (dynamically) generate new shapes on the fly.

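Later on we will use geometry shaders to create more interesting effects, but for now we start by creating a simple one.

2 Implementation: using a geometry shader

To demonstrate the use of a geometry shader, we will render a really simple scene in which we just draw 4 points on the z = 0 plane in normalized device coordinates.

The code below is simple and should be easy to follow: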

#include <cstdio>

#include <iostream>

#define GLEW_STATIC

#include <GL/glew.h>

#include <GLFW/glfw3.h>

float points[] = {

-0.5f, 0.5f, // top-left

0.5f, 0.5f, // top-right

0.5f, -0.5f, // bottom-right

-0.5f, -0.5f // bottom-left

};

const char* vertexShaderSource =

"#version 330 core \n"

"layout(location = 0) in vec2 aPos; // position attribute at location 0\n"

"void main(){ \n"

" gl_Position = vec4(aPos.x, aPos.y, 0.0, 1.0); \n"

"} \n";

const char* fragmentShaderSource =

"#version 330 core \n"

"out vec4 FragColor; \n"

"void main(){ \n"

" FragColor = vec4(0.0, 1.0, 0.0, 1.0);} \n";

void processInput(GLFWwindow* window) {

if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)

{

glfwSetWindowShouldClose(window, true);

}

}

int main() {

glfwInit();

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

//Open GLFW Window

GLFWwindow* window = glfwCreateWindow(800, 600, "My OpenGL Game", NULL, NULL);

if (window == NULL)

{

printf("Open window failed.");

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

//Init GLEW

glewExperimental = true;

if (glewInit() != GLEW_OK)

{

printf("Init GLEW failed.");

glfwTerminate();

return -1;

}

glViewport(0, 0, 800, 600);

unsigned int VAO;

glGenVertexArrays(1, &VAO);

glBindVertexArray(VAO);

unsigned int VBO;

glGenBuffers(1, &VBO);

glBindBuffer(GL_ARRAY_BUFFER, VBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(points),points, GL_STATIC_DRAW);

unsigned int vertexShader;

vertexShader = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertexShader, 1, &vertexShaderSource, NULL);

glCompileShader(vertexShader);

unsigned int fragmentShader;

fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);

glCompileShader(fragmentShader);

unsigned int shaderProgram;

shaderProgram = glCreateProgram();

glAttachShader(shaderProgram, vertexShader);

glAttachShader(shaderProgram, fragmentShader);

glLinkProgram(shaderProgram);

// position attribute

glVertexAttribPointer(0, 2, GL_FLOAT, GL_FALSE, 2 * sizeof(float), (void*)0);

glEnableVertexAttribArray(0);

while (!glfwWindowShouldClose(window))

{

processInput(window);

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);

glUseProgram(shaderProgram);

glBindVertexArray(VAO);

glDrawArrays(GL_POINTS, 0, 4);

glfwSwapBuffers(window);

glfwPollEvents();

}

glfwTerminate();

return 0;

}

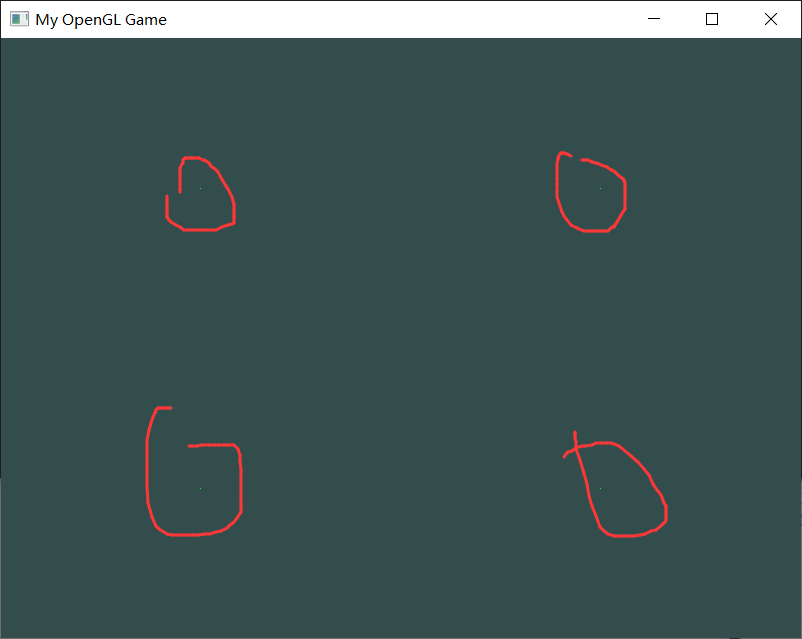

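We can see the four points in the circled areas.

We already learned these operations in the getting-started chapters, but now we are going to spice the scene up by adding a geometry shader.

For learning purposes we will create a pass-through geometry shader that takes a point primitive as its input and passes it on to the next shader unmodified.

It simply emits the vertex position it received without any change, generating a point primitive.

When creating the shader we use GL_GEOMETRY_SHADER as the shader type.

Here is the code: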

#include <cstdio>

#include <iostream>

#define GLEW_STATIC

#include <GL/glew.h>

#include <GLFW/glfw3.h>

float points[] = {

-0.5f, 0.5f, // top-left

0.5f, 0.5f, // top-right

0.5f, -0.5f, // bottom-right

-0.5f, -0.5f // bottom-left

};

const char* vertexShaderSource =

"#version 330 core \n"

"layout(location = 0) in vec2 aPos; // position attribute at location 0\n"

"void main(){ \n"

" gl_Position = vec4(aPos.x, aPos.y, 0.0, 1.0); \n"

"} \n";

const char* fragmentShaderSource =

"#version 330 core \n"

"out vec4 FragColor; \n"

"void main(){ \n"

" FragColor = vec4(0.0, 1.0, 0.0, 1.0);} \n";

const char* geometryShaderSource =

"#version 330 core \n"

"layout (points) in; \n"

"layout (points, max_vertices = 1) out; \n"

"void main(){ \n"

"gl_Position = gl_in[0].gl_Position; \n"

"EmitVertex(); \n"

"EndPrimitive();} \n";

void processInput(GLFWwindow* window) {

if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)

{

glfwSetWindowShouldClose(window, true);

}

}

int main() {

glfwInit();

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

//Open GLFW Window

GLFWwindow* window = glfwCreateWindow(800, 600, "My OpenGL Game", NULL, NULL);

if (window == NULL)

{

printf("Open window failed.");

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

//Init GLEW

glewExperimental = true;

if (glewInit() != GLEW_OK)

{

printf("Init GLEW failed.");

glfwTerminate();

return -1;

}

glViewport(0, 0, 800, 600);

unsigned int VAO;

glGenVertexArrays(1, &VAO);

glBindVertexArray(VAO);

unsigned int VBO;

glGenBuffers(1, &VBO);

glBindBuffer(GL_ARRAY_BUFFER, VBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(points),points, GL_STATIC_DRAW);

unsigned int vertexShader;

vertexShader = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertexShader, 1, &vertexShaderSource, NULL);

glCompileShader(vertexShader);

unsigned int fragmentShader;

fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);

glCompileShader(fragmentShader);

unsigned int geometryShader;

geometryShader = glCreateShader(GL_GEOMETRY_SHADER);

glShaderSource(geometryShader, 1, &geometryShaderSource, NULL);

glCompileShader(geometryShader);

unsigned int shaderProgram;

shaderProgram = glCreateProgram();

glAttachShader(shaderProgram, vertexShader);

glAttachShader(shaderProgram, fragmentShader);

glAttachShader(shaderProgram, geometryShader);

glLinkProgram(shaderProgram);

// position attribute

glVertexAttribPointer(0, 2, GL_FLOAT, GL_FALSE, 2 * sizeof(float), (void*)0);

glEnableVertexAttribArray(0);

while (!glfwWindowShouldClose(window))

{

processInput(window);

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);

glUseProgram(shaderProgram);

glBindVertexArray(VAO);

glDrawArrays(GL_POINTS, 0, 4);

glfwSwapBuffers(window);

glfwPollEvents();

}

glfwTerminate();

return 0;

}

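If there are no compile errors and you run the program, you should see the same result as in the previous image.

It's a bit dull, but the fact that we can still draw the points means the geometry shader works, so now it's time for something more interesting!

3 Implementation: let's build some houses

Drawing points and lines isn't that interesting, so we'll get a little creative and use the geometry shader to draw a house at the location of each point.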

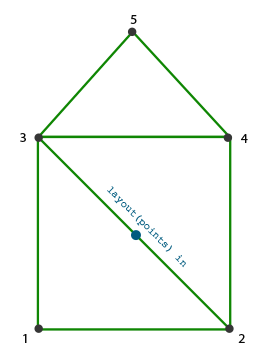

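To accomplish this, we set the output of the geometry shader to triangle_strip and draw a total of three triangles: two for the square body of the house and one for the roof.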

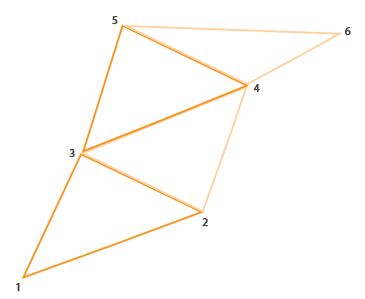

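In OpenGL, a triangle strip is a more efficient way to draw triangles because it uses fewer vertices.

After the first triangle is drawn, each subsequent vertex generates another triangle next to the previous one: every 3 adjacent vertices form a triangle.

If we have a total of 6 vertices that form a triangle strip, we get these triangles: (1, 2, 3), (2, 3, 4), (3, 4, 5) and (4, 5, 6), for a total of 4 triangles.

A triangle strip needs at least 3 vertices, and N vertices generate N-2 triangles.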

With 6 vertices we created 6 - 2 = 4 triangles, as the figure illustrates.

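Using a triangle strip as the output of the geometry shader, we can easily create the house shape we're after by generating 3 adjacent triangles in the correct order.

The figure shows the order in which we need to emit the vertices, where the blue dot is the input point:

In the geometry shader we write this: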

const char* geometryShaderSource =

"#version 330 core \n"

"layout(points) in; \n"

"layout(triangle_strip, max_vertices = 5) out; \n"

"void build_house(vec4 position) \n"

"{ \n"

" gl_Position = position + vec4(-0.2, -0.2, 0.0, 0.0); // 1: bottom-left \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.2, -0.2, 0.0, 0.0); // 2: bottom-right \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(-0.2, 0.2, 0.0, 0.0); // 3: top-left \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.2, 0.2, 0.0, 0.0); // 4: top-right \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.0, 0.4, 0.0, 0.0); // 5: roof top \n"

" EmitVertex(); \n"

" EndPrimitive(); \n"

"} \n"

"void main() { \n"

" build_house(gl_in[0].gl_Position); \n"

"} \n";

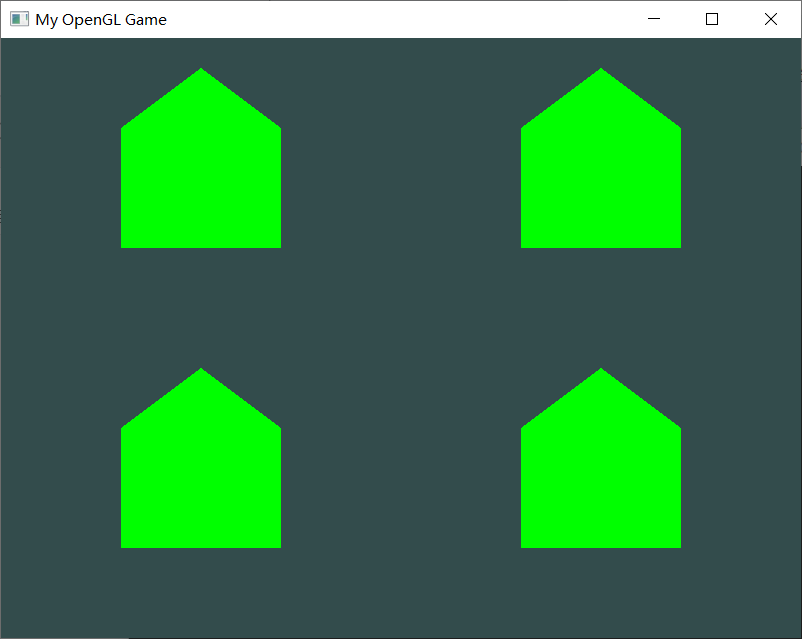

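This geometry shader generates 5 vertices, each being the position of the input point plus an offset, to form one large triangle strip. The resulting primitive is then rasterized and the fragment shader runs over the entire triangle strip, producing a green house at each point we draw:

As you can see, each house indeed consists of 3 triangles, all drawn from a single point in space.

The green houses do look a bit boring, so let's liven them up by giving each house its own color.

To do this we add an extra vertex attribute with color information to the vertex data, pass it through the vertex shader to the geometry shader, and forward it again to the fragment shader.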

float points[] = {

-0.5f, 0.5f, 1.0f, 0.0f, 0.0f, // top-left

0.5f, 0.5f, 0.0f, 1.0f, 0.0f, // top-right

0.5f, -0.5f, 0.0f, 0.0f, 1.0f, // bottom-right

-0.5f, -0.5f, 1.0f, 1.0f, 0.0f // bottom-left

};

Then we update the vertex shader to forward the color attribute to the geometry shader using an interface block:

const char* vertexShaderSource =

"#version 330 core \n"

"layout(location = 0) in vec2 aPos; \n"

"layout(location = 1) in vec3 aColor; \n"

" \n"

"out VS_OUT{ \n"

" vec3 color; \n"

"} vs_out; \n"

" \n"

"void main() \n"

"{ \n"

" gl_Position = vec4(aPos.x, aPos.y, 0.0, 1.0); \n"

" vs_out.color = aColor; \n"

"} \n";

Next we also need to declare the same interface block in the geometry shader (with a different instance name):

in VS_OUT {

vec3 color;

} gs_in[];

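Because the geometry shader acts on a set of vertices as its input, its input data from the vertex shader is always represented as arrays, even though we only have a single vertex right now.

Next we declare an output color vector for the fragment shader stage: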

out vec3 fColor;

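Because the fragment shader expects only a single (interpolated) color, it makes no sense to forward multiple colors.

The fColor vector is therefore not an array but a single vector.

When a vertex is emitted, it stores the value currently held in fColor as its output for the fragment shader.

For the houses, we only need to fill fColor once with the color from the vertex shader before the first vertex is emitted.

All emitted vertices then carry the last value stored in fColor, which is the vertex's color attribute, so every house gets its own color.

Just for fun, we can also pretend it's winter and give the roofs a little snow by setting the last vertex's color to white.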

const char* geometryShaderSource =

"#version 330 core \n"

"layout(points) in; \n"

"layout(triangle_strip, max_vertices = 5) out; \n"

"in VS_OUT{ \n"

" vec3 color; \n"

"} gs_in[]; \n"

"out vec3 fColor; \n"

"void build_house(vec4 position) \n"

"{ \n"

" fColor = gs_in[0].color; \n"

" gl_Position = position + vec4(-0.2, -0.2, 0.0, 0.0); // 1: bottom-left \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.2, -0.2, 0.0, 0.0); // 2: bottom-right \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(-0.2, 0.2, 0.0, 0.0); // 3: top-left \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.2, 0.2, 0.0, 0.0); // 4: top-right \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.0, 0.4, 0.0, 0.0); // 5: roof top \n"

" fColor = vec3(1.0, 1.0, 1.0); \n"

" EmitVertex(); \n"

" EndPrimitive(); \n"

"} \n"

"void main() { \n"

" build_house(gl_in[0].gl_Position); \n"

"} \n";

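As you can see, with geometry shaders you can get pretty creative, even with the simplest primitives.

Because the shapes are generated dynamically on the GPU hardware, this can be more efficient than defining the same shapes by hand in vertex buffers.

Geometry shaders are therefore a useful tool for simple, frequently repeated shapes, such as the cubes of a voxel world or the blades of grass in an outdoor field.

Here is the complete code for the houses: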

#include <cstdio>

#include <iostream>

#define GLEW_STATIC

#include <GL/glew.h>

#include <GLFW/glfw3.h>

float points[] = {

-0.5f, 0.5f, 1.0f, 0.0f, 0.0f, // top-left

0.5f, 0.5f, 0.0f, 1.0f, 0.0f, // top-right

0.5f, -0.5f, 0.0f, 0.0f, 1.0f, // bottom-right

-0.5f, -0.5f, 1.0f, 1.0f, 0.0f // bottom-left

};

const char* vertexShaderSource =

"#version 330 core \n"

"layout(location = 0) in vec2 aPos; \n"

"layout(location = 1) in vec3 aColor; \n"

" \n"

"out VS_OUT{ \n"

" vec3 color; \n"

"} vs_out; \n"

" \n"

"void main() \n"

"{ \n"

" gl_Position = vec4(aPos.x, aPos.y, 0.0, 1.0); \n"

" vs_out.color = aColor; \n"

"} \n";

const char* fragmentShaderSource =

"#version 330 core \n"

"out vec4 FragColor; \n"

"in vec3 fColor; \n"

"void main(){ \n"

" FragColor = vec4(fColor, 1.0);} \n";

const char* geometryShaderSource =

"#version 330 core \n"

"layout(points) in; \n"

"layout(triangle_strip, max_vertices = 5) out; \n"

"in VS_OUT{ \n"

" vec3 color; \n"

"} gs_in[]; \n"

"out vec3 fColor; \n"

"void build_house(vec4 position) \n"

"{ \n"

" fColor = gs_in[0].color; \n"

" gl_Position = position + vec4(-0.2, -0.2, 0.0, 0.0); // 1: bottom-left \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.2, -0.2, 0.0, 0.0); // 2: bottom-right \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(-0.2, 0.2, 0.0, 0.0); // 3: top-left \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.2, 0.2, 0.0, 0.0); // 4: top-right \n"

" EmitVertex(); \n"

" gl_Position = position + vec4(0.0, 0.4, 0.0, 0.0); // 5: roof top \n"

" fColor = vec3(1.0, 1.0, 1.0); \n"

" EmitVertex(); \n"

" EndPrimitive(); \n"

"} \n"

"void main() { \n"

" build_house(gl_in[0].gl_Position); \n"

"} \n";

void processInput(GLFWwindow* window) {

if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS)

{

glfwSetWindowShouldClose(window, true);

}

}

int main() {

glfwInit();

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR, 3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

//Open GLFW Window

GLFWwindow* window = glfwCreateWindow(800, 600, "My OpenGL Game", NULL, NULL);

if (window == NULL)

{

printf("Open window failed.");

glfwTerminate();

return -1;

}

glfwMakeContextCurrent(window);

//Init GLEW

glewExperimental = true;

if (glewInit() != GLEW_OK)

{

printf("Init GLEW failed.");

glfwTerminate();

return -1;

}

glViewport(0, 0, 800, 600);

unsigned int VAO;

glGenVertexArrays(1, &VAO);

glBindVertexArray(VAO);

unsigned int VBO;

glGenBuffers(1, &VBO);

glBindBuffer(GL_ARRAY_BUFFER, VBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(points), points, GL_STATIC_DRAW);

unsigned int vertexShader;

vertexShader = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertexShader, 1, &vertexShaderSource, NULL);

glCompileShader(vertexShader);

unsigned int fragmentShader;

fragmentShader = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragmentShader, 1, &fragmentShaderSource, NULL);

glCompileShader(fragmentShader);

unsigned int geometryShader;

geometryShader = glCreateShader(GL_GEOMETRY_SHADER);

glShaderSource(geometryShader, 1, &geometryShaderSource, NULL);

glCompileShader(geometryShader);

unsigned int shaderProgram;

shaderProgram = glCreateProgram();

glAttachShader(shaderProgram, vertexShader);

glAttachShader(shaderProgram, fragmentShader);

glAttachShader(shaderProgram, geometryShader);

glLinkProgram(shaderProgram);

// position attribute

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 2, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)0);

// color attribute

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)(2 * sizeof(float)));

while (!glfwWindowShouldClose(window))

{

processInput(window);

glClearColor(0.2f, 0.3f, 0.3f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glUseProgram(shaderProgram);

glBindVertexArray(VAO);

glDrawArrays(GL_POINTS, 0, 4);

glfwSwapBuffers(window);

glfwPollEvents();

}

glfwTerminate();

return 0;

}

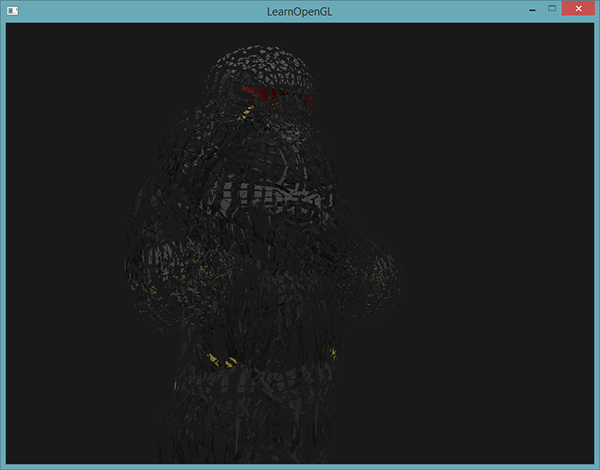

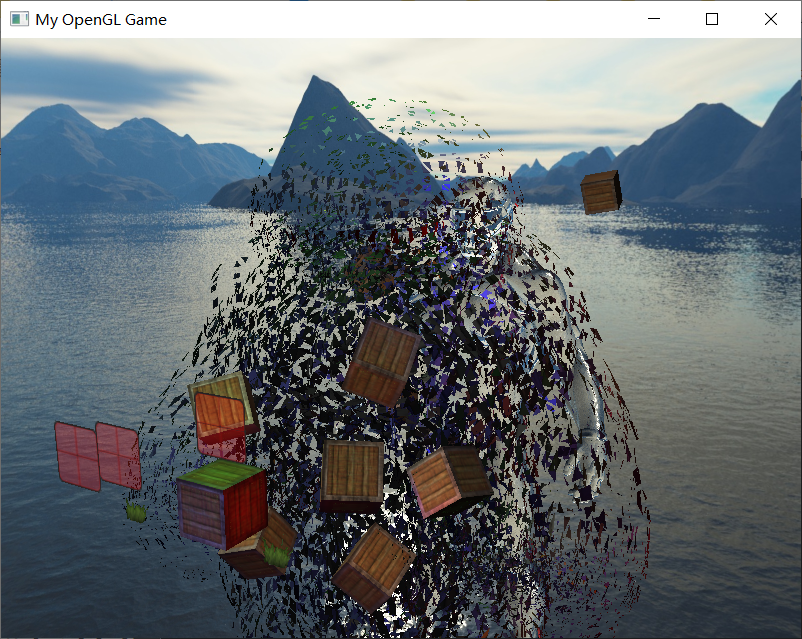

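4 Exploding objects

While drawing houses is fun, it's not something we're going to do very often.

That's why we now take it one step further and explode objects!

That is something we won't use often either, but it does show off some of the power of geometry shaders.

When we say exploding an object, we don't mean blowing up our precious bundles of vertices; we mean moving each triangle along the direction of its normal vector over a short period of time.

The effect is that the entire object appears to explode along the direction of each triangle's normal vector. On the nanosuit model the effect looks like this (image from the official site):

The great thing about such a geometry shader effect is that it works on all objects, regardless of their complexity.

Because we want to translate each vertex along the triangle's normal vector, we first need to calculate this normal vector.

What we need to do is calculate a vector that is perpendicular to the surface of the triangle, using just the 3 vertices we have access to.

You may remember from the transformations chapter that we can retrieve a vector perpendicular to two other vectors using the cross product.

If we can retrieve two vectors a and b that are parallel to the surface of the triangle, we can obtain the normal vector by taking the cross product of those two vectors.

The following geometry shader function does exactly this, retrieving the normal vector from the 3 input vertex coordinates: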

vec3 GetNormal()

{

vec3 a = vec3(gl_in[0].gl_Position) - vec3(gl_in[1].gl_Position);

vec3 b = vec3(gl_in[2].gl_Position) - vec3(gl_in[1].gl_Position);

return normalize(cross(a, b));

}

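Here we retrieve two vectors a and b that are parallel to the surface of the triangle using subtraction.

Subtracting two position vectors yields the vector between those two points, and since all 3 points lie on the triangle's plane, subtracting any two of them gives a vector parallel to that plane.

Note that if we switched a and b in the cross function we'd get a normal that points in the opposite direction: order is important here!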

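Now that we know how to calculate a normal vector, we can create an explode function that takes this normal vector along with a vertex position vector.

The function returns a new vector that translates the position vector along the direction of the normal vector: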

vec4 explode(vec4 position, vec3 normal)

{

float magnitude = 6.0;

vec3 direction = normal * ((sin(time1/1.5) + 1.0) / 2.0) * magnitude;

return position + vec4(direction.xy,0.0, 0.0);

}

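The function itself shouldn't be too complicated. The sin function takes the time uniform (time1 in the code) as its argument and, based on the time, returns a value between -1.0 and 1.0.

Because we don't want to implode the object, we transform the sin value to the [0, 1] range.

The resulting value is then multiplied with the normal vector, and the resulting direction vector is added to the position vector.

Note that this differs from the tutorial: by default the Z component of direction would break the depth test result (the coordinates already carry a Z value), so we zero it out and only keep xy.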

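4.1 Check: bug fix

Later on (at the time of writing I had just reached the shadows part of Advanced Lighting) I found a bug, corrected as follows:

The official tutorial computes gl_Position directly in the vertex shader and then applies the explosion displacement, which is not quite right.

We need to move the step that computes gl_Position with the MVP matrices (and FragPos, for the lighting I added myself) to after the explosion.

The reason is that we manipulate gl_Position in the geometry shader, but a gl_Position that has already gone through the MVP transform in the vertex shader (the perspective projection in particular) is no longer in a linear space. (Also, if FragPos is computed too early, the shading calculation has no effect.)

The correct order is to explode before any MVP transform, then compute FragPos with M only (the shading point must not be affected by V and P), and finally apply VP to obtain gl_Position.

The shader code below has already been corrected and is safe to use. The screenshots have not been updated, so keep that in mind (many other features were added later, so it's hard to get back to this step).

In a correct screenshot the exploded fragments are fairly bright and respond properly to lighting (the screenshots in this post are wrong: the fragments are black because FragPos was wrong, so the lighting is not reflected).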

4.2 Setting up the explosion effect

To implement this effect, we extend the constructors of the Shader class so that a geometry shader can be added.

Shader.h

#pragma once

#include <string>

#include <glm/glm.hpp>

#include <glm/gtc/matrix_transform.hpp>

#include <glm/gtc/type_ptr.hpp>

class Shader

{

public:

Shader(const char* vertexPath, const char* fragmentPath);

Shader(const char* vertexPath, const char* fragmentPath, const char* geometryPath);

std::string vertexString;

std::string fragmentString;

std::string geometryString;

const char* vertexSource;

const char* fragmentSource;

const char* geometrySource;

unsigned int ID; //Shader Program ID

void use();

void SetUniform3f(const char* paraNameString,glm::vec3 param);

void SetUniform1f(const char* paraNameString, float param);

void SetUniform1i(const char* paraNameString, int slot);

//~Shader();

private:

void checkCompileErrors(unsigned int ID, std::string type);

};

Shader.cpp

#include "Shader.h"

#include <iostream>

#include <fstream>

#include <sstream>

#define GLEW_STATIC

#include <GL/glew.h>

#include <GLFW/glfw3.h>

using namespace std;

Shader::Shader(const char* vertexPath, const char* fragmentPath)

{

//Load the vertex/fragment shaders from the file paths

ifstream vertexFile;

ifstream fragmentFile;

stringstream vertexSStream;

stringstream fragmentSStream;

//Make sure the ifstream objects can throw exceptions (flags are combined with bitwise OR)

vertexFile.exceptions(ifstream::failbit | ifstream::badbit);

fragmentFile.exceptions(ifstream::failbit | ifstream::badbit);

try

{

//Open the files inside the try block; a failed open now throws ios_base::failure

vertexFile.open(vertexPath);

fragmentFile.open(fragmentPath);

if (!vertexFile.is_open() || !fragmentFile.is_open())

{

throw ios_base::failure("open file error");

}

//Read the file buffer contents into the string streams

vertexSStream << vertexFile.rdbuf();

fragmentSStream << fragmentFile.rdbuf();

//Convert the streams to strings

vertexString = vertexSStream.str();

fragmentString = fragmentSStream.str();

vertexSource = vertexString.c_str();

fragmentSource = fragmentString.c_str();

// compile the shaders

unsigned int vertex, fragment;

// vertex shader

vertex = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertex, 1, &vertexSource, NULL);

glCompileShader(vertex);

checkCompileErrors(vertex, "VERTEX");

// fragment shader

fragment = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragment, 1, &fragmentSource, NULL);

glCompileShader(fragment);

checkCompileErrors(fragment, "FRAGMENT");

// shader program

ID = glCreateProgram();

glAttachShader(ID, vertex);

glAttachShader(ID, fragment);

glLinkProgram(ID);

checkCompileErrors(ID, "PROGRAM");

// delete the shaders; they are linked into our program and no longer needed

glDeleteShader(vertex);

glDeleteShader(fragment);

}

catch (const std::exception& ex)

{

cout << ex.what() << endl;

}

}

Shader::Shader(const char* vertexPath, const char* fragmentPath, const char* geometryPath)

{

//Load the vertex/fragment/geometry shaders from the file paths

ifstream vertexFile;

ifstream fragmentFile;

ifstream geometryFile;

stringstream vertexSStream;

stringstream fragmentSStream;

stringstream geometrySStream;

//Make sure the ifstream objects can throw exceptions (flags are combined with bitwise OR)

vertexFile.exceptions(ifstream::failbit | ifstream::badbit);

fragmentFile.exceptions(ifstream::failbit | ifstream::badbit);

geometryFile.exceptions(ifstream::failbit | ifstream::badbit);

try

{

//Open the files inside the try block; a failed open now throws ios_base::failure

vertexFile.open(vertexPath);

fragmentFile.open(fragmentPath);

geometryFile.open(geometryPath);

if (!vertexFile.is_open() || !fragmentFile.is_open() || !geometryFile.is_open())

{

throw ios_base::failure("open file error");

}

//Read the file buffer contents into the string streams

vertexSStream << vertexFile.rdbuf();

fragmentSStream << fragmentFile.rdbuf();

geometrySStream << geometryFile.rdbuf();

//Convert the streams to strings

vertexString = vertexSStream.str();

fragmentString = fragmentSStream.str();

geometryString = geometrySStream.str();

vertexSource = vertexString.c_str();

fragmentSource = fragmentString.c_str();

geometrySource = geometryString.c_str();

// compile the shaders

unsigned int vertex, fragment, geometry;

// vertex shader

vertex = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vertex, 1, &vertexSource, NULL);

glCompileShader(vertex);

checkCompileErrors(vertex, "VERTEX");

// fragment shader

fragment = glCreateShader(GL_FRAGMENT_SHADER);

glShaderSource(fragment, 1, &fragmentSource, NULL);

glCompileShader(fragment);

checkCompileErrors(fragment, "FRAGMENT");

// geometry shader

geometry = glCreateShader(GL_GEOMETRY_SHADER);

glShaderSource(geometry, 1, &geometrySource, NULL);

glCompileShader(geometry);

checkCompileErrors(geometry, "GEOMETRY");

// shader program

ID = glCreateProgram();

glAttachShader(ID, vertex);

glAttachShader(ID, fragment);

glAttachShader(ID, geometry);

glLinkProgram(ID);

checkCompileErrors(ID, "PROGRAM");

// delete the shaders; they are linked into our program and no longer needed

glDeleteShader(vertex);

glDeleteShader(fragment);

glDeleteShader(geometry);

}

catch (const std::exception& ex)

{

cout << ex.what() << endl;

}

}

void Shader::use()

{

glUseProgram(ID);

}

void Shader::SetUniform3f(const char * paraNameString, glm::vec3 param)

{

glUniform3f(glGetUniformLocation(ID, paraNameString), param.x, param.y, param.z);

}

void Shader::SetUniform1f(const char * paraNameString, float param)

{

glUniform1f(glGetUniformLocation(ID, paraNameString), param);

}

void Shader::SetUniform1i(const char * paraNameString, int slot)

{

glUniform1i(glGetUniformLocation(ID, paraNameString), slot);

}

void Shader::checkCompileErrors(unsigned int ID, std::string type) {

int sucess;

char infolog[512];

if (type != "PROGRAM")

{

glGetShaderiv(ID, GL_COMPILE_STATUS, &sucess);

if (!sucess)

{

glGetShaderInfoLog(ID, 512, NULL, infolog);

cout << "shader compile error:" << infolog << endl;

}

}

else

{

glGetProgramiv(ID, GL_LINK_STATUS, &sucess);

if (!sucess)

{

glGetProgramInfoLog(ID, 512, NULL, infolog);

cout << "program linking error:" << infolog << endl;

}

}

}

Create a Shader for the robot (nanosuit) model:

Shader* myShaderObj = new Shader("vertexSource.vert", "fragmentSource.frag", "geometrySource.geom");

We set up the corrected vertex shader for the exploding robot, vertexSourceObjExplode.vert

(Later note: here we do not use MVP to compute FragPos or gl_Position.)

#version 330 core

layout(location = 0) in vec3 aPos; // position attribute at location 0

layout(location = 1) in vec3 aNormal; // normal attribute at location 1

layout(location = 2) in vec2 aTexCoord; // uv attribute at location 2

out VS_OUT {

vec2 texCoords;

} vs_out;

//shading point and normal

//out vec3 FragPos;

out mat4 modelMat1;

uniform mat4 modelMat;

layout (std140) uniform Matrices

{

mat4 viewMat;

mat4 projMat;

};

void main()

{

modelMat1 = modelMat;

vs_out.texCoords = aTexCoord;

gl_Position = vec4(aPos.xyz,1.0);

// FragPos=(modelMat * vec4(aPos.xyz,1.0)).xyz;

}

The fragment shader (still the same as before), fragmentSource.frag

#version 330 core

//shading point and normal

in vec3 FragPos;

in vec3 Normal;

in vec2 TexCoord;

struct Material {

vec3 ambient;

sampler2D diffuse;

sampler2D specular;

sampler2D emission;

float shininess;

};

uniform Material material;

struct LightDirectional{

vec3 dirToLight;

vec3 color;

};

uniform LightDirectional lightD;

struct LightPoint{

vec3 pos;

vec3 color;

float constant;

float linear;

float quadratic;

};

uniform LightPoint lightP0;

uniform LightPoint lightP1;

uniform LightPoint lightP2;

uniform LightPoint lightP3;

struct LightSpot{

vec3 pos;

vec3 color;

float constant;

float linear;

float quadratic;

vec3 dirToLight;

float cosPhyInner;

float cosPhyOuter;

};

uniform LightSpot lightS;

out vec4 FragColor;

uniform vec3 objColor;

uniform vec3 ambientColor;

uniform vec3 cameraPos;

uniform float time;

uniform float What;

vec3 CalcLightDirectional(LightDirectional light, vec3 uNormal, vec3 dirToCamera){

//diffuse

float diffIntensity = max(dot(uNormal, light.dirToLight), 0.0);

vec3 diffuseColor = diffIntensity * texture(material.diffuse,TexCoord).rgb * light.color;

//Blinn-Phong specular

vec3 halfwarDir = normalize(light.dirToLight + dirToCamera);

float specularAmount = pow(max(dot(uNormal, halfwarDir), 0.0),material.shininess);

vec3 specularColor = texture(material.specular,TexCoord).rgb * specularAmount * light.color;

vec3 result = diffuseColor+specularColor;

return result;

}

vec3 CalcLightPoint(LightPoint light, vec3 uNormal, vec3 dirToCamera){

//attenuation

vec3 dirToLight=light.pos-FragPos;

float dist =length(dirToLight);

float attenuation= 1.0 / (light.constant + light.linear * dist + light.quadratic * (dist * dist));

//diffuse

float diffIntensity = max(dot(uNormal, normalize(dirToLight)), 0.0);

vec3 diffuseColor = diffIntensity * texture(material.diffuse,TexCoord).rgb * light.color;

//Blinn-Phong specular

vec3 halfwarDir = normalize(dirToLight + dirToCamera);

float specularAmount = pow(max(dot(uNormal, halfwarDir), 0.0),material.shininess);

vec3 specularColor = texture(material.specular,TexCoord).rgb * specularAmount * light.color;

vec3 result = (diffuseColor+specularColor)*attenuation;

return result;

}

vec3 CalcLightSpot(LightSpot light, vec3 uNormal, vec3 dirToCamera){

//attenuation

vec3 dirToLight=light.pos-FragPos;

float dist =length(dirToLight);

float attenuation= 1.0 / (light.constant + light.linear * dist + light.quadratic * (dist * dist));

//diffuse

float diffIntensity = max(dot(uNormal, normalize(dirToLight)), 0.0);

vec3 diffuseColor = diffIntensity * texture(material.diffuse,TexCoord).rgb * light.color;

//Blinn-Phong specular

vec3 halfwarDir = normalize(dirToLight + dirToCamera);

float specularAmount = pow(max(dot(uNormal, halfwarDir), 0.0),material.shininess);

vec3 specularColor = texture(material.specular,TexCoord).rgb * specularAmount * light.color;

//spot

float spotRatio;

float cosTheta = dot( normalize(-1*dirToLight) , -1*light.dirToLight );

if(cosTheta > light.cosPhyInner){

//inside

spotRatio=1;

}else if(cosTheta > light.cosPhyOuter){

//middle

spotRatio=(cosTheta-light.cosPhyOuter)/(light.cosPhyInner-light.cosPhyOuter);

}else{

//outside

spotRatio=0;

}

vec3 result=(diffuseColor+specularColor)*attenuation*spotRatio;

return result;

}

void main(){

vec3 finalResult = vec3(0,0,0);

vec3 uNormal = normalize(Normal);

vec3 dirToCamera = normalize(cameraPos - FragPos);

//emission 32 distance

float eDistance = length(cameraPos-FragPos);

float eCoefficient= 1.0 / (1.0f + 0.14f * eDistance + 0.07f * (eDistance * eDistance));

vec3 emission;

if( texture(material.specular,TexCoord).rgb== vec3(0,0,0) ){

emission= texture(material.emission,TexCoord).rgb;

//fun

emission = texture(material.emission,TexCoord + vec2(0.0,time/2)).rgb;//moving

emission = emission * (sin(time) * 0.5 + 0.5) * 2.0 * eCoefficient;//fading

}

//ambient

vec3 ambient= material.ambient * ambientColor * texture(material.diffuse,TexCoord).rgb;

finalResult += CalcLightDirectional(lightD, uNormal, dirToCamera);

finalResult += CalcLightPoint(lightP0, uNormal, dirToCamera);

finalResult += CalcLightPoint(lightP1, uNormal, dirToCamera);

finalResult += CalcLightPoint(lightP2, uNormal, dirToCamera);

finalResult += CalcLightPoint(lightP3, uNormal, dirToCamera);

finalResult += CalcLightSpot(lightS, uNormal, dirToCamera);

if(What==1){

finalResult += ambient;

}else{

finalResult += emission+ambient;

}

FragColor=vec4(finalResult,1.0);

//FragColor=vec4(1.0,1.0,1.0,1.0);

}

And the geometry shader

(Later note: FragPos and gl_Position are computed after the explosion.)

#version 330 core

layout (triangles) in;

layout (triangle_strip, max_vertices = 3) out;

in VS_OUT {

vec2 texCoords;

} gs_in[];

out vec2 TexCoord;

out vec3 FragPos;

uniform float time1;

out vec3 Normal;

uniform mat4 modelMat;

vec4 explodePosition;

layout (std140) uniform Matrices

{

mat4 viewMat;

mat4 projMat;

};

vec4 explode(vec4 position, vec3 normal)

{

float magnitude = 2.0;

vec3 direction = normal * (( sin(time1/1.5) + 1.0) / 2.0) * magnitude;

return position + vec4(direction.xy,0.0, 0.0);

}

vec3 GetNormal()

{

vec3 a = vec3(gl_in[0].gl_Position) - vec3(gl_in[1].gl_Position);

vec3 b = vec3(gl_in[2].gl_Position) - vec3(gl_in[1].gl_Position);

return normalize(cross(b,a));

}

void main() {

//vec3 normal = normalize(mat3(transpose(inverse(modelMat1)))*GetNormal());

vec3 normal = GetNormal();

Normal = normal;

explodePosition=modelMat *explode(gl_in[0].gl_Position, normal);

FragPos=explodePosition.xyz;

gl_Position = projMat * viewMat * explodePosition;

TexCoord = gs_in[0].texCoords;

EmitVertex();

explodePosition=modelMat *explode(gl_in[1].gl_Position, normal);

FragPos=explodePosition.xyz;

gl_Position = projMat * viewMat *explodePosition;

TexCoord = gs_in[1].texCoords;

EmitVertex();

explodePosition=modelMat *explode(gl_in[2].gl_Position, normal);

FragPos=explodePosition.xyz;

gl_Position = projMat * viewMat *explodePosition;

TexCoord = gs_in[2].texCoords;

EmitVertex();

EndPrimitive();

}

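The end result is a 3D model that appears to continually explode its vertices over time, after which it returns to normal again.

Although not exactly super useful, it does show a more advanced use of the geometry shader.

With the official tutorial's explode method, the occlusion (depth ordering) comes out wrong.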

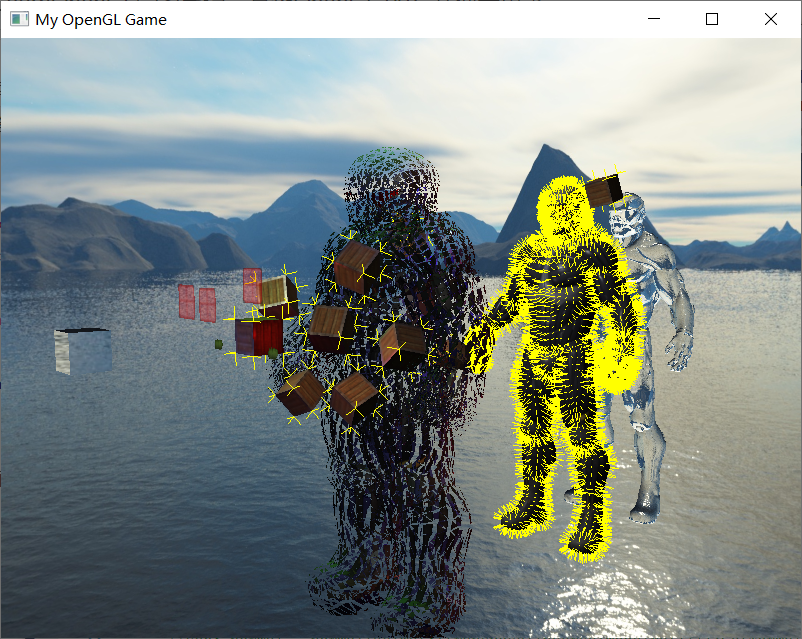

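5 Visualizing normal vectors

In this part we use the geometry shader for a genuinely useful example: displaying the normal vectors of any object.

When programming lighting shaders, we may eventually run into strange visual output whose cause is hard to determine.

A common cause of lighting errors is incorrect normal vectors, caused by loading vertex data incorrectly, declaring them improperly as vertex attributes, or managing them incorrectly in the shaders.

What we want is some way to check whether the normal vectors we supplied are correct.

A great way to check whether normal vectors are correct is to visualize them, and the geometry shader happens to be an extremely useful tool for this purpose.

The idea is as follows: we first draw the scene as normal without a geometry shader. Then we draw the scene a second time, this time displaying only the normal vectors generated by a geometry shader. The geometry shader takes a triangle primitive as input and generates 3 lines from it along the normals, one normal vector per vertex.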

In pseudocode it looks something like this:

shader.use();

DrawScene();

normalDisplayShader.use();

DrawScene();

Vertex shader:

#version 330 core

layout(location = 0) in vec3 aPos; // position attribute at location 0

layout(location = 1) in vec3 aNormal; // normal attribute at location 1

layout(location = 2) in vec2 aTexCoord; // uv attribute at location 2

out VS_OUT {

vec3 normal;

} vs_out;

uniform mat4 modelMat;

layout (std140) uniform Matrices

{

mat4 viewMat;

mat4 projMat;

};

void main()

{

gl_Position = vec4(aPos, 1.0);

mat3 normalMatrix = mat3(transpose(inverse(viewMat * modelMat)));

vs_out.normal = aNormal;

}

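Next, the geometry shader takes each vertex (with a position and a normal vector) and draws a normal vector from each position vector:

Note that, to get correct occlusion, we only want the XY coordinates of the normal vector and not the Z value, which differs from the official tutorial.

The official tutorial's approach to normal visualization (transforming in the vertex shader) is also flawed: the projection matrix distorts x/y/z, so the result is not in a linear space. We therefore cannot compute the normal lines the way the official tutorial does, and instead apply the offset in the geometry shader.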

#version 330 core

layout (triangles) in;

layout (line_strip, max_vertices = 6) out;

in VS_OUT {

vec3 normal;

} gs_in[];

const float MAGNITUDE = 0.4;

uniform mat4 modelMat;

layout (std140) uniform Matrices

{

mat4 viewMat;

mat4 projMat;

};

void GenerateLine(mat3 normalMatrix,int index)

{

gl_Position = projMat*viewMat*modelMat*gl_in[index].gl_Position;

EmitVertex();

vec4 ptOnNormal = viewMat*modelMat*gl_in[index].gl_Position + vec4( normalize(normalMatrix*gs_in[index].normal)*MAGNITUDE,0.0f);

gl_Position = projMat*ptOnNormal;

EmitVertex();

EndPrimitive();

}

void main()

{

mat3 nMat = mat3(transpose(inverse(viewMat*modelMat)));

GenerateLine(nMat,0); // normal of the first vertex

GenerateLine(nMat,1); // normal of the second vertex

GenerateLine(nMat,2); // normal of the third vertex

}

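A geometry shader like this should be easy to understand by now.

Note that we multiply the normal vector by a MAGNITUDE factor to limit the size of the displayed normal vectors (they would otherwise be a bit too large).

Since visualizing normals is mostly done for debugging purposes, we can display them as mono-colored lines (or fancier lines if you feel like it) with the help of a fragment shader: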

#version 330 core

out vec4 FragColor;

void main()

{

FragColor = vec4(1.0, 1.0, 0.0, 1.0);

}

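Now, rendering the model with the normal shaders first and then with the special normal-visualizing shader, you'll see something like this (we drew normals for the cube and the robot):

6 Code files

The shader setup and the other adjustments are all described in the text above.

Next is main.cpp, which contains the main drawing function: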

#include <cstdio>

#include <iostream>

#include <string>

#include <vector>

#define GLEW_STATIC

#include <GL/glew.h>

#include <GLFW/glfw3.h>

#include "Shader.h"

#include "Camera.h"

#include "Material.h"

#include "LightDirectional.h"

#include "LightPoint.h"

#include "LightSpot.h"

#include "Mesh.h"

#include "Model.h"

#define STB_IMAGE_IMPLEMENTATION

#include "stb_image.h"

#include <map>

#include <glm/glm.hpp>

#include <glm/gtc/matrix_transform.hpp>

#include <glm/gtc/type_ptr.hpp>

// Vertex data for the light-emitting cube

#pragma region Model Data

float vertices[] = {

//position //normal //TexCoord

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 0.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 1.0f, 1.0f,

0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 1.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 1.0f, 1.0f,

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 0.0f, 1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 1.0f, 0.0f,

0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 1.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 1.0f, 1.0f,

-0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, 0.5f, -1.0f, 0.0f, 0.0f, 1.0f, 0.0f,

-0.5f, 0.5f, -0.5f, -1.0f, 0.0f, 0.0f, 1.0f, 1.0f,

-0.5f, -0.5f, -0.5f, -1.0f, 0.0f, 0.0f, 0.0f, 1.0f,

-0.5f, -0.5f, -0.5f, -1.0f, 0.0f, 0.0f, 0.0f, 1.0f,

-0.5f, -0.5f, 0.5f, -1.0f, 0.0f, 0.0f, 0.0f, 0.0f,

-0.5f, 0.5f, 0.5f, -1.0f, 0.0f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f,

0.5f, -0.5f, -0.5f, 1.0f, 0.0f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, -0.5f, 1.0f, 0.0f, 0.0f, 1.0f, 1.0f,

0.5f, -0.5f, -0.5f, 1.0f, 0.0f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f, 1.0f, 0.0f,

0.5f, -0.5f, 0.5f, 1.0f, 0.0f, 0.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f, 0.0f, 1.0f,

0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f, 1.0f, 1.0f,

0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f, 1.0f, 0.0f,

0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f, 1.0f, 0.0f,

-0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f, 0.0f, 1.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f, 1.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f, 1.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 1.0f,

-0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f, 0.0f, 0.0f,

};

glm::vec3 cubePositions[] = {

glm::vec3(0.0f, 0.0f, 0.0f),

glm::vec3(2.0f, 5.0f, -15.0f),

glm::vec3(-1.5f, -2.2f, -2.5f),

glm::vec3(-3.8f, -2.0f, -12.3f),

glm::vec3(2.4f, -0.4f, -3.5f),

glm::vec3(-1.7f, 3.0f, -7.5f),

glm::vec3(1.3f, -2.0f, -2.5f),

glm::vec3(1.5f, 2.0f, -2.5f),

glm::vec3(1.5f, 0.2f, -1.5f),

glm::vec3(-1.3f, 1.0f, -1.5f)

};

float grassVertices[] = {

//position //normal //TexCoord

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 0.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 1.0f, 1.0f,

0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 1.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 1.0f, 1.0f,

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f, 0.0f, 1.0f,

};

glm::vec3 grassPosition[] = {

glm::vec3(-1.5f, 0.0f, 1.0f),

glm::vec3(1.5f, 0.0f, 1.0f),

};

glm::vec3 glassPosition[] = {

glm::vec3(-1.5f, 1.0f, 2.0f),

glm::vec3(-1.2f, 0.9f, 1.5f),

glm::vec3(0.5f, 1.5f, 1.0f),

};

float quadVertices[] = {

// positions // texCoords

-1.0f, 1.0f, 0.0f, 1.0f,

-1.0f, -1.0f, 0.0f, 0.0f,

1.0f, -1.0f, 1.0f, 0.0f,

-1.0f, 1.0f, 0.0f, 1.0f,

1.0f, -1.0f, 1.0f, 0.0f,

1.0f, 1.0f, 1.0f, 1.0f

};

float skyboxVertices[] = {

// positions

-1.0f, 1.0f, -1.0f,

-1.0f, -1.0f, -1.0f,

1.0f, -1.0f, -1.0f,

1.0f, -1.0f, -1.0f,

1.0f, 1.0f, -1.0f,

-1.0f, 1.0f, -1.0f,

-1.0f, -1.0f, 1.0f,

-1.0f, -1.0f, -1.0f,

-1.0f, 1.0f, -1.0f,

-1.0f, 1.0f, -1.0f,

-1.0f, 1.0f, 1.0f,

-1.0f, -1.0f, 1.0f,

1.0f, -1.0f, -1.0f,

1.0f, -1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

1.0f, 1.0f, -1.0f,

1.0f, -1.0f, -1.0f,

-1.0f, -1.0f, 1.0f,

-1.0f, 1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

1.0f, -1.0f, 1.0f,

-1.0f, -1.0f, 1.0f,

-1.0f, 1.0f, -1.0f,

1.0f, 1.0f, -1.0f,

1.0f, 1.0f, 1.0f,

1.0f, 1.0f, 1.0f,

-1.0f, 1.0f, 1.0f,

-1.0f, 1.0f, -1.0f,

-1.0f, -1.0f, -1.0f,

-1.0f, -1.0f, 1.0f,

1.0f, -1.0f, -1.0f,

1.0f, -1.0f, -1.0f,

-1.0f, -1.0f, 1.0f,

1.0f, -1.0f, 1.0f

};

//skyBoxReflection

float skyBoxReflectionVertices[] = {

//position //normal

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

-0.5f, -0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 0.0f, -1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

-0.5f, 0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

-0.5f, -0.5f, 0.5f, 0.0f, 0.0f, 1.0f,

-0.5f, 0.5f, 0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, -0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, 0.5f, -1.0f, 0.0f, 0.0f,

-0.5f, 0.5f, 0.5f, -1.0f, 0.0f, 0.0f,

0.5f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, -0.5f, 1.0f, 0.0f, 0.0f,

0.5f, 0.5f, -0.5f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, -0.5f, 1.0f, 0.0f, 0.0f,

0.5f, 0.5f, 0.5f, 1.0f, 0.0f, 0.0f,

0.5f, -0.5f, 0.5f, 1.0f, 0.0f, 0.0f,

-0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f,

0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f,

0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f,

0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f,

-0.5f, -0.5f, 0.5f, 0.0f, -1.0f, 0.0f,

-0.5f, -0.5f, -0.5f, 0.0f, -1.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f,

0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f,

-0.5f, 0.5f, -0.5f, 0.0f, 1.0f, 0.0f,

-0.5f, 0.5f, 0.5f, 0.0f, 1.0f, 0.0f,

};

#pragma endregion

#pragma region Camera Declare

// Set up the camera

glm::vec3 cameraPos = glm::vec3(0.0f, 0.0f, 3.0f);

glm::vec3 cameraTarget = glm::vec3(0.0f, 0.0f, -1.0f);

glm::vec3 cameraUp = glm::vec3(0.0f, 1.0f, 0.0f);

Camera camera(cameraPos, cameraTarget, cameraUp);

#pragma endregion

#pragma region Light Declare

//position angle color

LightDirectional lightD (

glm::vec3(135.0f, 0,0),

glm::vec3(0.9f, 0.9f, 0.9f));

LightPoint lightP0 (glm::vec3(1.0f, 0,0),

glm::vec3(1.0f, 0, 0));

LightPoint lightP1 (glm::vec3(0, 1.0f, 0),

glm::vec3(0, 1.0f, 0));

LightPoint lightP2 (glm::vec3(0, 0, 1.0f),

glm::vec3(0, 0, 1.0f));

LightPoint lightP3 (glm::vec3(0.0f, 0.0f, -7.0f),

glm::vec3(1.0f, 1.0f, 1.0f));

LightSpot lightS (glm::vec3(0, 8.0f,0.0f),

glm::vec3(135.0f, 0, 0),

glm::vec3(0, 0,1.5f));

#pragma endregion

#pragma region Input Declare

// Used for movement

float deltaTime = 0.0f; // time difference between the current frame and the previous frame

float lastFrame = 0.0f; // time of the previous frame

void processInput(GLFWwindow* window) {

// Exit when the Esc key is pressed

if (glfwGetKey(window, GLFW_KEY_ESCAPE) == GLFW_PRESS) {

glfwSetWindowShouldClose(window, true);

}

// A smoother camera system: scale the movement speed by the frame time

if (deltaTime != 0) {

camera.cameraPosSpeed = 5 * deltaTime;

}

// Move the camera forward, backward, left and right relative to the view direction

if (glfwGetKey(window, GLFW_KEY_W) == GLFW_PRESS)

camera.PosUpdateForward();

if (glfwGetKey(window, GLFW_KEY_S) == GLFW_PRESS)

camera.PosUpdateBackward();

if (glfwGetKey(window, GLFW_KEY_A) == GLFW_PRESS)

camera.PosUpdateLeft();

if (glfwGetKey(window, GLFW_KEY_D) == GLFW_PRESS)

camera.PosUpdateRight();

if (glfwGetKey(window, GLFW_KEY_Q) == GLFW_PRESS)

camera.PosUpdateUp();

if (glfwGetKey(window, GLFW_KEY_E) == GLFW_PRESS)

camera.PosUpdateDown();

}

float lastX;

float lastY;

bool firstMouse = true;

// The mouse controls the view direction

void mouse_callback(GLFWwindow* window, double xpos, double ypos) {

if (firstMouse == true)

{

lastX = xpos;

lastY = ypos;

firstMouse = false;

}

float deltaX, deltaY;

float sensitivity = 0.05f;

deltaX = (xpos - lastX)*sensitivity;

deltaY = (ypos - lastY)*sensitivity;

lastX = xpos;

lastY = ypos;

camera.ProcessMouseMovement(deltaX, deltaY);

};

// Zoom

float fov = 45.0f;

void scroll_callback(GLFWwindow* window, double xoffset, double yoffset)

{

if (fov >= 1.0f && fov <= 45.0f)

fov -= yoffset;

if (fov <= 1.0f)

fov = 1.0f;

if (fov >= 45.0f)

fov = 45.0f;

}

#pragma endregion

// Load an ordinary 2D image as a texture

unsigned int LoadImageToGPU(const char* filename, GLint internalFormat, GLenum format) {

unsigned int TexBuffer;

glGenTextures(1, &TexBuffer);

glBindTexture(GL_TEXTURE_2D, TexBuffer);

// Set the wrapping and filtering options on the currently bound texture object

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);

// Load the image and generate the texture

int width, height, nrChannel;

unsigned char *data = stbi_load(filename, &width, &height, &nrChannel, 0);

if (data) {

glTexImage2D(GL_TEXTURE_2D, 0, internalFormat, width, height, 0, format, GL_UNSIGNED_BYTE, data);

glGenerateMipmap(GL_TEXTURE_2D);

}

else

{

printf("Failed to load texture");

}

stbi_image_free(data);

return TexBuffer;

}

// Load the skybox (cubemap) images

unsigned int loadCubemap(std::vector<std::string> faces)

{

unsigned int textureID;

glGenTextures(1, &textureID);

glBindTexture(GL_TEXTURE_CUBE_MAP, textureID);

int width, height, nrChannels;

for (unsigned int i = 0; i < faces.size(); i++)

{

unsigned char *data = stbi_load(faces[i].c_str(), &width, &height, &nrChannels, 0);

if (data)

{

glTexImage2D(GL_TEXTURE_CUBE_MAP_POSITIVE_X + i,

0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, data

);

stbi_image_free(data);

}

else

{

std::cout << "Cubemap texture failed to load at path: " << faces[i] << std::endl;

stbi_image_free(data);

}

}

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_CUBE_MAP, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

return textureID;

}

int main(int argc, char* argv[]) {

std::string exePath = argv[0];

#pragma region Open a Window

glfwInit();

glfwWindowHint(GLFW_CONTEXT_VERSION_MAJOR,3);

glfwWindowHint(GLFW_CONTEXT_VERSION_MINOR, 3);

glfwWindowHint(GLFW_OPENGL_PROFILE, GLFW_OPENGL_CORE_PROFILE);

//Open GLFW Window

GLFWwindow* window = glfwCreateWindow(800,600,"My OpenGL Game",NULL,NULL);

if(window == NULL)

{

printf("Open window failed.");

glfwTerminate();

return - 1;

}

glfwMakeContextCurrent(window);

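// Hide the mouse cursor

glfwSetInputMode(window, GLFW_CURSOR, GLFW_CURSOR_DISABLED);

// Register the callback that listens for mouse movement

glfwSetCursorPosCallback(window, mouse_callback);

// Register the callback that listens for the scroll wheel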

glfwSetScrollCallback(window, scroll_callback);

//Init GLEW

glewExperimental = true;

if (glewInit() != GLEW_OK)

{

printf("Init GLEW failed.");

glfwTerminate();

return -1;

}

glViewport(0, 0, 800, 600);

//glEnable(GL_DEPTH_TEST);

//glDepthFunc(GL_LESS);

//glEnable(GL_STENCIL_TEST);

//glStencilOp(GL_KEEP, GL_KEEP, GL_REPLACE);

//glEnable(GL_BLEND);

//glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

//

//glEnable(GL_CULL_FACE);

#pragma endregion

#pragma region Init Shader Program

Shader* myShader = new Shader("vertexSource.vert", "fragmentSource.frag");

Shader* myShaderObj = new Shader("vertexSourceObjExplode.vert", "fragmentSource.frag","geometrySource.geom");

Shader* ShaderNormal = new Shader("vertexSourceNormal.vert", "fragmentSourceNormal.frag", "geometrySourceNormal.geom");

Shader* border = new Shader("vertexSource.vert", "Border.frag");

Shader* grass = new Shader("vertexSource.vert", "grass.frag");

Shader* glass = new Shader("vertexSource.vert", "glass.frag");

Shader* screenShader = new Shader("screen.vert", "screen.frag");

Shader* skyBox = new Shader("skyBox.vert", "skyBox.frag");

Shader* skyBoxReflection = new Shader("skyBoxReflection.vert", "skyBoxReflection.frag");

#pragma endregion

#pragma region FBO / RBO

unsigned int fbo;

glGenFramebuffers(1, &fbo);

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

// Create the texture attachment for the fbo

unsigned int texColorBuffer;

glGenTextures(1, &texColorBuffer);

glBindTexture(GL_TEXTURE_2D, texColorBuffer);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, 800, 600, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glBindTexture(GL_TEXTURE_2D, 0);

// Attach it to the currently bound framebuffer object

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, texColorBuffer, 0);

// Create the renderbuffer (rbo) attachment for the fbo

unsigned int rbo;

glGenRenderbuffers(1, &rbo);

glBindRenderbuffer(GL_RENDERBUFFER, rbo);

glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH24_STENCIL8, 800, 600);

glBindRenderbuffer(GL_RENDERBUFFER, 0);

// Attach it to the currently bound framebuffer object

glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_DEPTH_STENCIL_ATTACHMENT, GL_RENDERBUFFER, rbo);

if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE)

std::cout << "ERROR::FRAMEBUFFER:: Framebuffer is not complete!" << std::endl;

glBindFramebuffer(GL_FRAMEBUFFER, 0);

#pragma endregion

#pragma region Init Material

Material * myMaterial = new Material(myShader,

LoadImageToGPU("container2.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("container2_specular.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("matrix.jpg", GL_RGB, GL_RGB),

glm::vec3 (1.0f, 1.0f, 1.0f),

150.0f);

Material * myMaterialNormal = new Material(ShaderNormal,

LoadImageToGPU("container2.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("container2_specular.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("matrix.jpg", GL_RGB, GL_RGB),

glm::vec3(1.0f, 1.0f, 1.0f),

150.0f);

Material * Grass = new Material(grass,

LoadImageToGPU("grass.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("grass.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("grass.png", GL_RGBA, GL_RGBA),

glm::vec3(1.0f, 1.0f, 1.0f),

150.0f);

Material * Glass = new Material(glass,

LoadImageToGPU("blending_transparent_window.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("blending_transparent_window.png", GL_RGBA, GL_RGBA),

LoadImageToGPU("blending_transparent_window.png", GL_RGBA, GL_RGBA),

glm::vec3(1.0f, 1.0f, 1.0f),

150.0f);

std::vector<std::string> faces

{

"right.jpg",

"left.jpg",

"top.jpg",

"bottom.jpg",

"front.jpg",

"back.jpg"

};

unsigned int cubemapTexture = loadCubemap(faces);

#pragma endregion

#pragma region Init and Load Models to VAO,VBO

Mesh cube(vertices);

Mesh grassMesh(grassVertices);

Mesh glassMesh(grassVertices);

Model model(exePath.substr(0, exePath.find_last_of('\\')) + "\\model\\nanosuit.obj");

// screen quad VAO

unsigned int quadVAO, quadVBO;

glGenVertexArrays(1, &quadVAO);

glGenBuffers(1, &quadVBO);

glBindVertexArray(quadVAO);

glBindBuffer(GL_ARRAY_BUFFER, quadVBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(quadVertices), &quadVertices, GL_STATIC_DRAW);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 2, GL_FLOAT, GL_FALSE, 4 * sizeof(float), (void*)0);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 4 * sizeof(float), (void*)(2 * sizeof(float)));

// skyBox VAO

unsigned int skyboxVAO, skyboxVBO;

glGenVertexArrays(1, &skyboxVAO);

glGenBuffers(1, &skyboxVBO);

glBindVertexArray(skyboxVAO);

glBindBuffer(GL_ARRAY_BUFFER, skyboxVBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(skyboxVertices), &skyboxVertices, GL_STATIC_DRAW);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 3 * sizeof(float), (void*)0);

//skyBoxReflection VAO

unsigned int skyBoxReflectionVAO, skyBoxReflectionVBO;

glGenVertexArrays(1, &skyBoxReflectionVAO);

glGenBuffers(1, &skyBoxReflectionVBO);

glBindVertexArray(skyBoxReflectionVAO);

glBindBuffer(GL_ARRAY_BUFFER, skyBoxReflectionVBO);

glBufferData(GL_ARRAY_BUFFER, sizeof(skyBoxReflectionVertices), &skyBoxReflectionVertices, GL_STATIC_DRAW);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)0);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, 6 * sizeof(float), (void*)(3 * sizeof(float)));

#pragma endregion

#pragma region Init and Load Textures

// Flip image coordinates vertically on load

stbi_set_flip_vertically_on_load(true);

#pragma endregion

#pragma region Prepare MVP matrices

//model

glm::mat4 modelMat;

//view

glm::mat4 viewMat;

//projection

glm::mat4 projMat;

// Set up the uniform buffer for the view and projection matrices

unsigned int uniformBlockIndexmyShader = glGetUniformBlockIndex(myShader->ID, "Matrices");

unsigned int uniformBlockIndexShaderNormal = glGetUniformBlockIndex(ShaderNormal->ID, "Matrices");

unsigned int uniformBlockIndexmyShaderObj = glGetUniformBlockIndex(myShaderObj->ID, "Matrices");

unsigned int uniformBlockIndexborder = glGetUniformBlockIndex(border->ID, "Matrices");

unsigned int uniformBlockIndexgrass = glGetUniformBlockIndex(grass->ID, "Matrices");

unsigned int uniformBlockIndexglass = glGetUniformBlockIndex(glass->ID, "Matrices");

unsigned int uniformBlockIndexskyBoxReflection = glGetUniformBlockIndex(skyBoxReflection->ID, "Matrices");

glUniformBlockBinding(myShader->ID, uniformBlockIndexmyShader, 0);

glUniformBlockBinding(ShaderNormal->ID, uniformBlockIndexShaderNormal, 0);

glUniformBlockBinding(myShaderObj->ID, uniformBlockIndexmyShaderObj, 0);

glUniformBlockBinding(border->ID, uniformBlockIndexborder, 0);

glUniformBlockBinding(grass->ID, uniformBlockIndexgrass, 0);

glUniformBlockBinding(glass->ID, uniformBlockIndexglass, 0);

glUniformBlockBinding(skyBoxReflection->ID, uniformBlockIndexskyBoxReflection, 0);

// Create the uniform buffer object itself and bind it to binding point 0

unsigned int uboMatrices;

glGenBuffers(1, &uboMatrices);

glBindBuffer(GL_UNIFORM_BUFFER, uboMatrices);

glBufferData(GL_UNIFORM_BUFFER, 2 * sizeof(glm::mat4), NULL, GL_STATIC_DRAW);

glBindBuffer(GL_UNIFORM_BUFFER, 0);

glBindBufferRange(GL_UNIFORM_BUFFER, 0, uboMatrices, 0, 2 * sizeof(glm::mat4));

// Set the perspective matrix once up front (which means the scroll-wheel zoom no longer works)

projMat = glm::perspective(glm::radians(45.0f), 800.0f / 600.0f, 0.1f, 100.0f);

glBindBuffer(GL_UNIFORM_BUFFER, uboMatrices);

glBufferSubData(GL_UNIFORM_BUFFER, sizeof(glm::mat4), sizeof(glm::mat4), glm::value_ptr(projMat));

glBindBuffer(GL_UNIFORM_BUFFER, 0);

#pragma endregion

while (!glfwWindowShouldClose(window))

{

//Process Input

processInput(window);

// First pass: render into the framebuffer object (fbo) we created

glBindFramebuffer(GL_FRAMEBUFFER, fbo);

glEnable(GL_DEPTH_TEST);

glDepthFunc(GL_LESS);

glEnable(GL_STENCIL_TEST);

glStencilOp(GL_KEEP, GL_KEEP, GL_REPLACE);

glEnable(GL_BLEND);

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

glEnable(GL_CULL_FACE);

//Clear Screen

glClearColor(0.0f, 0.0f, 0.0f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT | GL_STENCIL_BUFFER_BIT);

// Update the view matrix in the uniform buffer

viewMat = camera.GetViewMatrix();

glBindBuffer(GL_UNIFORM_BUFFER, uboMatrices);

glBufferSubData(GL_UNIFORM_BUFFER, 0, sizeof(glm::mat4), glm::value_ptr(viewMat));

glBindBuffer(GL_UNIFORM_BUFFER, 0);

// Cubes; index 0 stands for the model

for (unsigned int i = 0; i < 11; i++)

{

if(i==0)

{

modelMat = glm::translate(glm::mat4(1.0f), { 0,-10,-5 });

myShaderObj->use();

// Uniforms for the robot

glUniformMatrix4fv(glGetUniformLocation(myShaderObj->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(myShaderObj->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(myShaderObj->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

glUniform3f(glGetUniformLocation(myShaderObj->ID, "cameraPos"), camera.Position.x, camera.Position.y, camera.Position.z);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "time"), glfwGetTime());

glUniform1f(glGetUniformLocation(myShaderObj->ID, "time1"), glfwGetTime());

glUniform3f(glGetUniformLocation(myShaderObj->ID, "objColor"), 1.0f, 1.0f, 1.0f);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "ambientColor"), 0.1f, 0.1f, 0.1f);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightD.color"), lightD.color.x, lightD.color.y, lightD.color.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightD.dirToLight"), lightD.direction.x, lightD.direction.y, lightD.direction.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP0.pos"), lightP0.position.x, lightP0.position.y, lightP0.position.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP0.color"), lightP0.color.x, lightP0.color.y, lightP0.color.z);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP0.constant"), lightP0.constant);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP0.linear"), lightP0.linear);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP0.quadratic"), lightP0.quadratic);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP1.pos"), lightP1.position.x, lightP1.position.y, lightP1.position.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP1.color"), lightP1.color.x, lightP1.color.y, lightP1.color.z);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP1.constant"), lightP1.constant);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP1.linear"), lightP1.linear);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP1.quadratic"), lightP1.quadratic);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP2.pos"), lightP2.position.x, lightP2.position.y, lightP2.position.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP2.color"), lightP2.color.x, lightP2.color.y, lightP2.color.z);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP2.constant"), lightP2.constant);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP2.linear"), lightP2.linear);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP2.quadratic"), lightP2.quadratic);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP3.pos"), lightP3.position.x, lightP3.position.y, lightP3.position.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightP3.color"), lightP3.color.x, lightP3.color.y, lightP3.color.z);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP3.constant"), lightP3.constant);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP3.linear"), lightP3.linear);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightP3.quadratic"), lightP3.quadratic);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightS.pos"), lightS.position.x, lightS.position.y, lightS.position.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightS.color"), lightS.color.x, lightS.color.y, lightS.color.z);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "lightS.dirToLight"), lightS.direction.x, lightS.direction.y, lightS.direction.z);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightS.constant"), lightS.constant);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightS.linear"), lightS.linear);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightS.quadratic"), lightS.quadratic);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightS.cosPhyInner"), lightS.cosPhyInner);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "lightS.cosPhyOuter"), lightS.cosPhyOuter);

glUniform3f(glGetUniformLocation(myShaderObj->ID, "material.ambient"), 1.0f, 1.0f, 1.0f);

glUniform1f(glGetUniformLocation(myShaderObj->ID, "material.shininess"), 150.0f);

glStencilMask(0x00); // make sure the stencil buffer is not updated while drawing the robot

glUniform1f(glGetUniformLocation(myShaderObj->ID, "What"), 1);

// The commented-out lines below are for the reflective robot

//skyBoxReflection->use();

//model.Draw(skyBoxReflection);

glDisable(GL_CULL_FACE);

model.Draw(myShaderObj);

myShader->use();

modelMat = glm::translate(glm::mat4(1.0f), { 0,-10,-15 });

// Look up the uniform in the program that is currently in use (myShader)

glUniformMatrix4fv(glGetUniformLocation(myShader->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

model.Draw(myShader);

glEnable(GL_CULL_FACE);

// Refractive robot

skyBoxReflection->use();

modelMat = glm::translate(glm::mat4(1.0f), { 0,-10,-20 });

//Set view matrix

//viewMat = camera.GetViewMatrix();

//Set projection matrix

//projMat = glm::perspective(glm::radians(fov), 800.0f / 600.0f, 0.1f, 100.0f);

glUniformMatrix4fv(glGetUniformLocation(skyBoxReflection->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(skyBoxReflection->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(skyBoxReflection->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

model.Draw(skyBoxReflection);

// Robot with visualized normals

ShaderNormal->use();

modelMat = glm::translate(glm::mat4(1.0f), { 0,-10,-15 });

glUniformMatrix4fv(glGetUniformLocation(ShaderNormal->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

model.Draw(ShaderNormal);

glDisable(GL_CULL_FACE);

// Draw the grass

for (unsigned int i = 0; i < 2; i++)

{

//Set Model matrix

modelMat = glm::translate(glm::mat4(1.0f), grassPosition[i]);

modelMat = glm::rotate(modelMat, glm::radians(180.0f), glm::vec3(0.0f, 0.0f, 1.0f));

//Set view matrix

//viewMat = camera.GetViewMatrix();

//Set projection matrix

//projMat = glm::perspective(glm::radians(fov), 800.0f / 600.0f, 0.1f, 100.0f);

//Set Material -> Shader Program

grass->use();

glUniformMatrix4fv(glGetUniformLocation(grass->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(grass->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(grass->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

grassMesh.DrawSliceArray(Grass->shader, Grass->diffuse, Grass->specular, Grass->emission);

}

glEnable(GL_CULL_FACE);

}

else {

int k = i - 1;

//Set Model matrix

modelMat = glm::translate(glm::mat4(1.0f), cubePositions[k]);

float angle = 20.0f * (k);

//float angle = 20.0f * i + 50*glfwGetTime();

modelMat = glm::rotate(modelMat, glm::radians(angle), glm::vec3(1.0f, 0.3f, 0.5f));

//Set view matrix

//viewMat = camera.GetViewMatrix();

//Set projection matrix

//projMat = glm::perspective(glm::radians(fov), 800.0f / 600.0f, 0.1f, 100.0f);

//Set Material -> Shader Program

myShader->use();

//Set Material -> Uniforms

glUniform1f(glGetUniformLocation(myShader->ID, "What"), 0);

// Uniforms for the boxes

glUniformMatrix4fv(glGetUniformLocation(myShader->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(myShader->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(myShader->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

glUniform3f(glGetUniformLocation(myShader->ID, "cameraPos"), camera.Position.x, camera.Position.y, camera.Position.z);

glUniform1f(glGetUniformLocation(myShader->ID, "time"), glfwGetTime());

glUniform3f(glGetUniformLocation(myShader->ID, "objColor"), 1.0f, 1.0f, 1.0f);

glUniform3f(glGetUniformLocation(myShader->ID, "ambientColor"), 0.1f, 0.1f, 0.1f);

glUniform3f(glGetUniformLocation(myShader->ID, "lightD.color"), lightD.color.x, lightD.color.y, lightD.color.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightD.dirToLight"), lightD.direction.x, lightD.direction.y, lightD.direction.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP0.pos"), lightP0.position.x, lightP0.position.y, lightP0.position.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP0.color"), lightP0.color.x, lightP0.color.y, lightP0.color.z);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP0.constant"), lightP0.constant);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP0.linear"), lightP0.linear);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP0.quadratic"), lightP0.quadratic);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP1.pos"), lightP1.position.x, lightP1.position.y, lightP1.position.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP1.color"), lightP1.color.x, lightP1.color.y, lightP1.color.z);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP1.constant"), lightP1.constant);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP1.linear"), lightP1.linear);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP1.quadratic"), lightP1.quadratic);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP2.pos"), lightP2.position.x, lightP2.position.y, lightP2.position.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP2.color"), lightP2.color.x, lightP2.color.y, lightP2.color.z);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP2.constant"), lightP2.constant);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP2.linear"), lightP2.linear);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP2.quadratic"), lightP2.quadratic);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP3.pos"), lightP3.position.x, lightP3.position.y, lightP3.position.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightP3.color"), lightP3.color.x, lightP3.color.y, lightP3.color.z);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP3.constant"), lightP3.constant);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP3.linear"), lightP3.linear);

glUniform1f(glGetUniformLocation(myShader->ID, "lightP3.quadratic"), lightP3.quadratic);

glUniform3f(glGetUniformLocation(myShader->ID, "lightS.pos"), lightS.position.x, lightS.position.y, lightS.position.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightS.color"), lightS.color.x, lightS.color.y, lightS.color.z);

glUniform3f(glGetUniformLocation(myShader->ID, "lightS.dirToLight"), lightS.direction.x, lightS.direction.y, lightS.direction.z);

glUniform1f(glGetUniformLocation(myShader->ID, "lightS.constant"), lightS.constant);

glUniform1f(glGetUniformLocation(myShader->ID, "lightS.linear"), lightS.linear);

glUniform1f(glGetUniformLocation(myShader->ID, "lightS.quadratic"), lightS.quadratic);

glUniform1f(glGetUniformLocation(myShader->ID, "lightS.cosPhyInner"), lightS.cosPhyInner);

glUniform1f(glGetUniformLocation(myShader->ID, "lightS.cosPhyOuter"), lightS.cosPhyOuter);

myMaterial->shader->SetUniform3f("material.ambient", myMaterial->ambient);

myMaterial->shader->SetUniform1f("material.shininess", myMaterial->shininess);

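// Stencil test for drawing outlines

//// Cubes and their outlines

//glStencilFunc(GL_ALWAYS, 1, 0xFF); // update the stencil function: every fragment writes to the stencil buffer

//glStencilMask(0xFF); // enable writing to the stencil buffer

//// Draw the ten cubes normally and record the stencil values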

glUniform1f(glGetUniformLocation(myShader->ID, "What"), 0);

cube.DrawArray(myMaterial->shader, myMaterial->diffuse, myMaterial->specular, myMaterial->emission);

ShaderNormal->use();

glUniformMatrix4fv(glGetUniformLocation(ShaderNormal->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

cube.DrawArray(myMaterialNormal->shader, myMaterialNormal->diffuse, myMaterialNormal->specular, myMaterialNormal->emission);

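//// The stencil buffer is now 1 wherever a box was drawn; next we would draw the scaled-up boxes, i.e. the outlines

//glStencilFunc(GL_NOTEQUAL, 1, 0xFF);

//glStencilMask(0x00); // disable writing to the stencil buffer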

//

//

//

////glDisable(GL_DEPTH_TEST);

//border->use();

//

////Set Model matrix

//modelMat = glm::translate(glm::mat4(1.0f), cubePositions[i-1]);

//float angle = 20.0f * (i-1);

//modelMat = glm::rotate(modelMat, glm::radians(angle), glm::vec3(1.0f, 0.3f, 0.5f));

//modelMat = glm::scale(modelMat, glm::vec3(1.2, 1.2, 1.2));

//glUniformMatrix4fv(glGetUniformLocation(border->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(border->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(border->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

//

//// Because GL_NOTEQUAL was set earlier, only the parts of the box whose stencil value is not 1 get drawn

//cube.DrawArray(border,1,1,1);

//glStencilMask(0xFF);

// //glEnable(GL_DEPTH_TEST);

}

}

// Draw the reflective box

skyBoxReflection->use();

modelMat = glm::translate(glm::mat4(1.0f), glm::vec3(0, 0, 5.0f));

//Set view matrix

//viewMat = camera.GetViewMatrix();

//Set projection matrix

//projMat = glm::perspective(glm::radians(fov), 800.0f / 600.0f, 0.1f, 100.0f);

glUniformMatrix4fv(glGetUniformLocation(skyBoxReflection->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(skyBoxReflection->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(skyBoxReflection->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

glUniform3f(glGetUniformLocation(skyBoxReflection->ID, "cameraPos"), camera.Position.x, camera.Position.y, camera.Position.z);

glBindVertexArray(skyBoxReflectionVAO);

glBindTexture(GL_TEXTURE_CUBE_MAP, cubemapTexture);

glDrawArrays(GL_TRIANGLES, 0, 36);

// Draw the skybox last (but before the transparent objects)

glDisable(GL_CULL_FACE);

glDepthMask(GL_FALSE);

glEnable(GL_DEPTH_TEST);

glDepthFunc(GL_LEQUAL);

skyBox->use();

//Set view matrix

viewMat = glm::mat4(glm::mat3(camera.GetViewMatrix())); // keep only the rotation part so the skybox does not move with the camera

//Set projection matrix

projMat = glm::perspective(glm::radians(fov), 800.0f / 600.0f, 0.1f, 100.0f);

glUniformMatrix4fv(glGetUniformLocation(skyBox->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

glUniformMatrix4fv(glGetUniformLocation(skyBox->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

glBindVertexArray(skyboxVAO);

glBindTexture(GL_TEXTURE_CUBE_MAP, cubemapTexture);

glDrawArrays(GL_TRIANGLES, 0, 36);

glDepthMask(GL_TRUE);

glDepthFunc(GL_LESS);

// Draw the glass panes

// Sort them by distance from the camera using a std::map

std::map<float, glm::vec3 > sorted;

int length = sizeof(glassPosition) / sizeof(glassPosition[0]);

for (int i = 0; i < length; i++) // int index, matching the type of length (avoids a signed/unsigned comparison)

{

float distance = glm::length(camera.Position - glassPosition[i]);

sorted[distance] = glassPosition[i];

}

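// When rendering, read the values back from the map in reverse order (farthest to nearest)

// A reverse iterator walks the map entries backwards; it->second is the stored value (the position), which is used to translate each window quad to its place.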

for (std::map<float, glm::vec3>::reverse_iterator it = sorted.rbegin(); it != sorted.rend(); ++it)

{

//Set Model matrix

modelMat = glm::translate(glm::mat4(1.0f), it->second);

modelMat = glm::rotate(modelMat, glm::radians(180.0f), glm::vec3(0.0f, 0.0f, 1.0f));

//Set view matrix

//viewMat = camera.GetViewMatrix();

//Set projection matrix

//projMat = glm::perspective(glm::radians(fov), 800.0f / 600.0f, 0.1f, 100.0f);

//Set Material -> Shader Program

glass->use();

glUniformMatrix4fv(glGetUniformLocation(glass->ID, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

//glUniformMatrix4fv(glGetUniformLocation(glass->ID, "viewMat"), 1, GL_FALSE, glm::value_ptr(viewMat));

//glUniformMatrix4fv(glGetUniformLocation(glass->ID, "projMat"), 1, GL_FALSE, glm::value_ptr(projMat));

grassMesh.DrawSliceArray(Glass->shader, Glass->diffuse, Glass->specular, Glass->emission);

}

glEnable(GL_CULL_FACE);

// Second pass: render to the default framebuffer and draw the screen quad

glBindFramebuffer(GL_FRAMEBUFFER, 0); // back to the default framebuffer

// Wireframe mode

//glPolygonMode(GL_FRONT_AND_BACK, GL_LINE);

glClearColor(1.0f, 1.0f, 0.0f, 0.0f);

glClear(GL_COLOR_BUFFER_BIT);

screenShader->use();

glBindVertexArray(quadVAO);

glBindTexture(GL_TEXTURE_2D, texColorBuffer); // use the color attachment texture as the texture of the quad plane

glDisable(GL_DEPTH_TEST);

glUniform1i(glGetUniformLocation(screenShader->ID, "screenTexture"), 0);

glDrawArrays(GL_TRIANGLES, 0, 6);

//Clean up prepare for next render loop

glfwSwapBuffers(window);

glfwPollEvents();

//Recording the time

float currentFrame = static_cast<float>(glfwGetTime()); // glfwGetTime() returns a double

deltaTime = currentFrame - lastFrame;

lastFrame = currentFrame;

}

//Exit program

glfwTerminate();

return 0;

}
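One detail of the glass-sorting step in the listing above is worth a closer look: `sorted[distance] = glassPosition[i]` uses `std::map::operator[]`, so two panes at exactly the same distance from the camera would share a key and the second would overwrite the first. Below is a minimal sketch of the same far-to-near ordering using `std::multimap`, which keeps duplicate keys. The `Vec3` struct and the helper names `distanceBetween` and `sortFarToNear` are hypothetical stand-ins introduced only so the example compiles without GLM; they are not part of the project code.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <vector>

// Hypothetical stand-in for glm::vec3 so the sketch compiles without GLM.
struct Vec3 { float x, y, z; };

// Euclidean distance between two points (what glm::length(a - b) computes).
float distanceBetween(const Vec3& a, const Vec3& b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Returns the positions ordered from farthest to nearest relative to the camera.
// std::multimap keeps entries with equal keys, whereas std::map::operator[]
// would silently replace an earlier pane at the same distance.
std::vector<Vec3> sortFarToNear(const std::vector<Vec3>& positions, const Vec3& camera) {
    std::multimap<float, Vec3> sorted;
    for (const Vec3& p : positions) {
        sorted.emplace(distanceBetween(camera, p), p);
    }

    std::vector<Vec3> ordered;
    ordered.reserve(positions.size());
    // Reverse iteration visits the largest distance first.
    for (auto it = sorted.rbegin(); it != sorted.rend(); ++it) {
        ordered.push_back(it->second);
    }
    return ordered;
}
```

In the render loop, each returned position would be passed to `glm::translate` in the same way `it->second` is used above. Sorting by distance only approximates correct blending order; it is enough for small, non-intersecting quads like these windows.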
