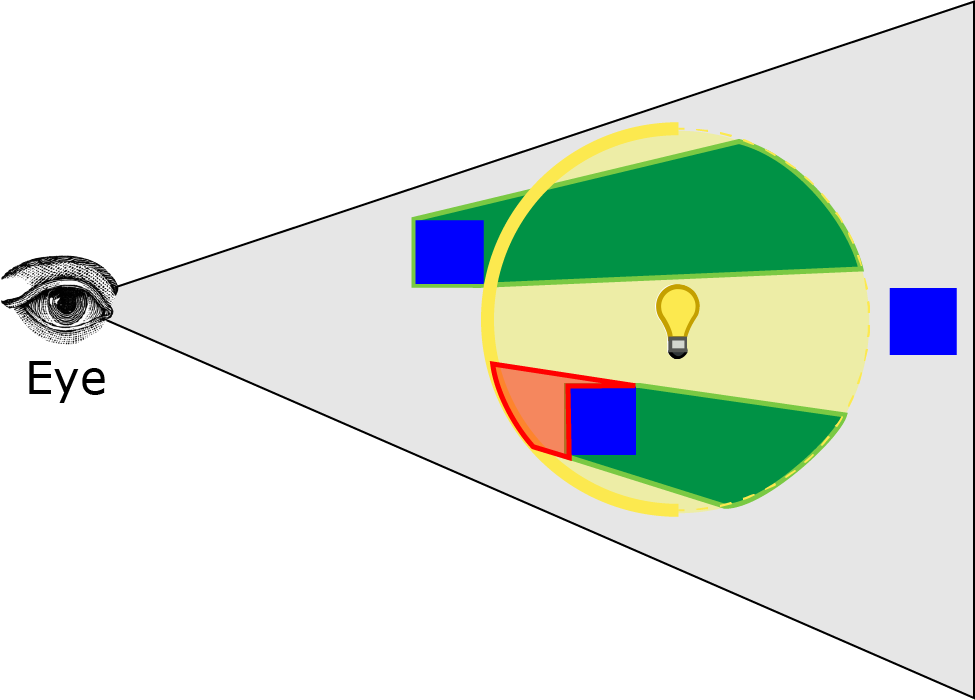

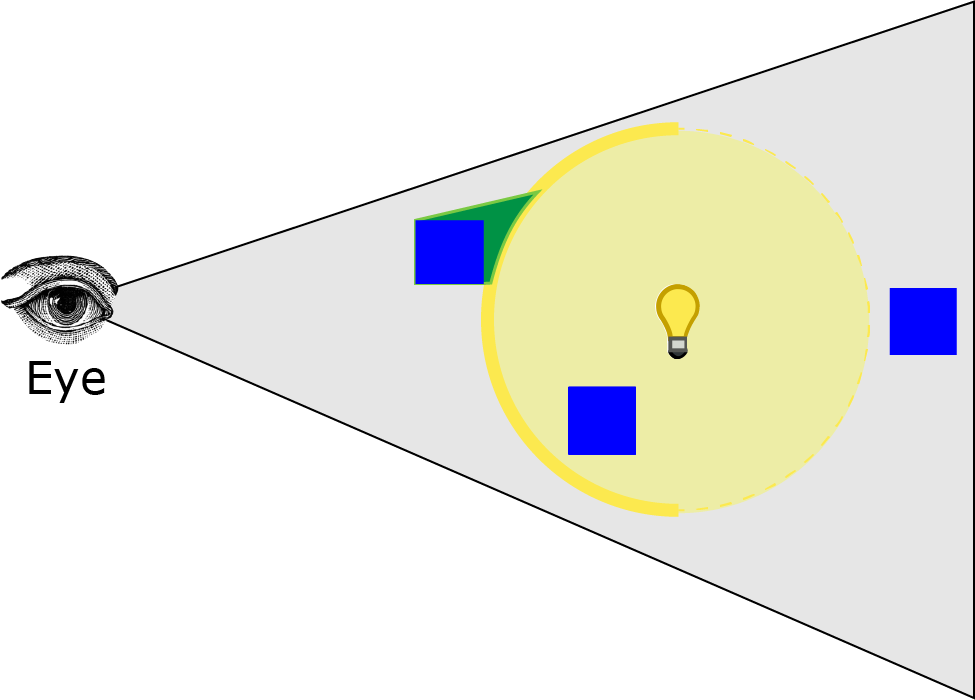

If we zoom in to the top-left tile (highlighted green in the video above) we can inspect the top, left, bottom, and right frustum planes of the tile. If you play the video you will see that the sphere is partially contained in all four of the tile's frustum planes and thus the light cannot be culled.
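The reason the light survives culling follows from how a sphere is tested against planes. The sketch below is a minimal C++ illustration rather than the shader code used in the demo: the `Plane` and `Sphere` types, the inward-facing normal convention, and the function names are all assumptions made for the example. A light is rejected only when its sphere lies completely behind at least one plane, so a sphere that straddles every plane is always kept, even when it does not touch the tile volume.

```cpp
#include <array>

struct Vec3 { float x, y, z; };

inline float Dot(const Vec3& a, const Vec3& b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Plane in the form dot(n, p) = d.
// Assumption: n is unit length and points towards the inside of the tile frustum.
struct Plane { Vec3 n; float d; };

struct Sphere { Vec3 c; float r; };

// True when the sphere lies entirely in the negative half-space of the plane.
inline bool SphereBehindPlane(const Sphere& s, const Plane& p)
{
    return Dot(p.n, s.c) - p.d < -s.r;
}

// Conservative test: the sphere is culled only if it is fully behind
// at least one of the four side planes of the tile.
inline bool SphereCulledByTile(const Sphere& s, const std::array<Plane, 4>& planes)
{
    for (const Plane& p : planes)
    {
        if (SphereBehindPlane(s, p)) return true;
    }
    return false;
}
```

A sphere placed diagonally off a corner of the tile is the typical false positive: it is not fully behind the left plane or the bottom plane, so the test keeps it although it never overlaps the tile.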

In a GDC 2015 presentation, Gareth Thomas [21] presents several methods to improve the performance of tile-based compute rendering. He suggests using parallel reduction instead of atomic min/max functions in the light culling compute shader. His performance analysis shows that he was able to achieve an 11 - 14 percent performance increase by using parallel reduction instead of atomic min/max.
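To make the idea of a parallel reduction concrete, here is a small CPU-side sketch in C++. It is not taken from the presentation or from the demo's compute shader; the function names are hypothetical. Each step combines element `i` with element `i + half`, which halves the active range. On a GPU, each iteration of the inner loop would be executed by a separate thread, with a group barrier between steps.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Tree-style reduction over a copy of the input values.
template <typename Op>
float Reduce(std::vector<float> values, Op op)
{
    assert(!values.empty());
    for (std::size_t n = values.size(); n > 1; )
    {
        const std::size_t half = (n + 1) / 2;
        // On the GPU, every i below would be handled by its own thread.
        for (std::size_t i = 0; i + half < n; ++i)
        {
            values[i] = op(values[i], values[i + half]);
        }
        n = half; // A barrier would separate the steps in a compute shader.
    }
    return values[0];
}

inline float ReduceMin(const std::vector<float>& depths)
{
    return Reduce(depths, [](float a, float b) { return std::min(a, b); });
}

inline float ReduceMax(const std::vector<float>& depths)
{
    return Reduce(depths, [](float a, float b) { return std::max(a, b); });
}
```

The number of steps grows with the logarithm of the tile's pixel count, whereas atomic min/max serializes every thread that writes to the same shared value.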

In order to improve the accuracy of the light culling, Gareth suggests using an axis-aligned bounding box (AABB) to approximate the tile frustum. Using AABBs to approximate the size of the tile frustum proves to be a successful method for reducing the number of false positives without incurring an expensive intersection test. To perform the sphere-AABB intersection test, Gareth suggests using a very simple algorithm described by James Arvo in the first edition of the Graphics Gems series [22].
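The sphere-AABB test is short enough to sketch in full. The C++ version below follows the idea of Arvo's method: accumulate the squared distance from the sphere center to the box along each axis and compare it with the squared radius. The type and function names are assumptions for this example and do not come from the demo source.

```cpp
struct Vec3 { float x, y, z; };

struct AABB { Vec3 minPt, maxPt; };

struct Sphere { Vec3 c; float r; };

// Squared distance from v to the interval [lo, hi] along a single axis.
inline float AxisDistanceSq(float v, float lo, float hi)
{
    float d = 0.0f;
    if (v < lo) d = lo - v;
    else if (v > hi) d = v - hi;
    return d * d;
}

// The sphere intersects the box when the squared distance from its center
// to the closest point on the box does not exceed the squared radius.
inline bool SphereIntersectsAABB(const Sphere& s, const AABB& b)
{
    const float distSq = AxisDistanceSq(s.c.x, b.minPt.x, b.maxPt.x)
                       + AxisDistanceSq(s.c.y, b.minPt.y, b.maxPt.y)
                       + AxisDistanceSq(s.c.z, b.minPt.z, b.maxPt.z);
    return distSq <= s.r * s.r;
}
```

Unlike the plane-based frustum test, this test rejects a sphere that sits diagonally off a corner of the box, which is where most of the false positives come from.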

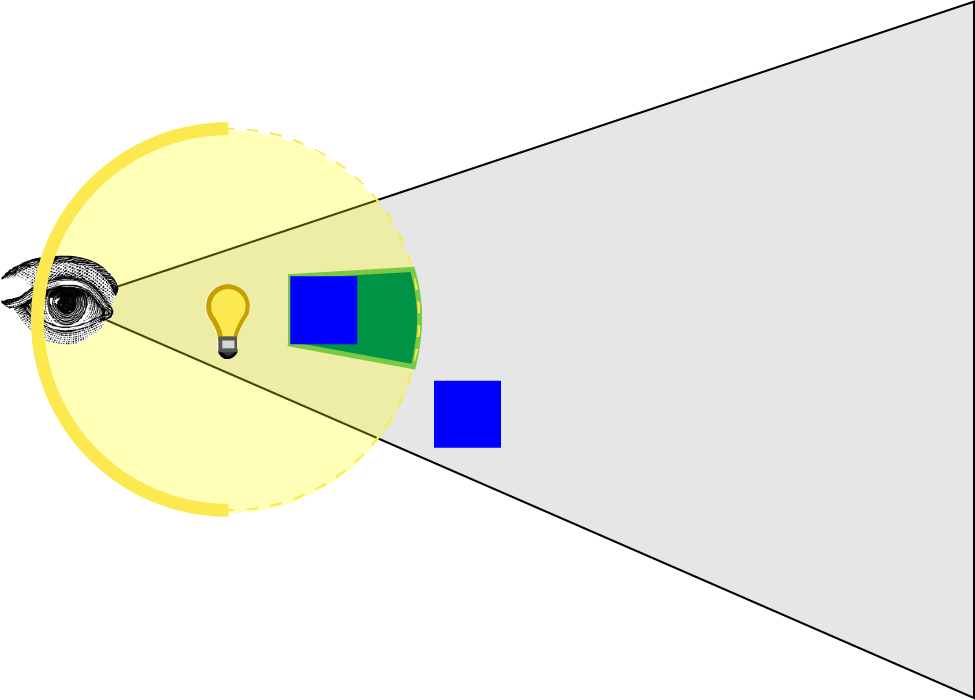

Another issue with tile-based light culling using the min/max depth bounds occurs in tiles with large depth discontinuities, for example when foreground geometry only partially overlaps a tile.

The blue and green tiles contain very few lights. In this case the minimum and maximum depth values are in close proximity. The red tiles indicate that the tile contains many lights due to a large depth disparity. In Gareth Thomas's presentation [21] he suggests splitting the frustum in two halves and computing minimum and maximum depth values for each half of the split frustum. This implies that the light culling algorithm must perform twice as much work per tile but his performance analysis shows that total frame time is reduced by about 10 - 12 percent using this technique.
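The effect of splitting the depth range can be sketched on the CPU. The C++ example below is only an illustration under stated assumptions: it splits at the midpoint between the tile's minimum and maximum depth, it works on a plain list of view-space depth values, and all type and function names are hypothetical.

```cpp
#include <algorithm>
#include <limits>
#include <vector>

struct DepthRange
{
    float minZ = std::numeric_limits<float>::max();
    float maxZ = std::numeric_limits<float>::lowest();

    bool Empty() const { return minZ > maxZ; }
    void Add(float z) { minZ = std::min(minZ, z); maxZ = std::max(maxZ, z); }
};

struct SplitDepthBounds { DepthRange nearHalf, farHalf; };

// Assumption: depths holds the view-space depth of every pixel in one tile.
inline SplitDepthBounds ComputeSplitDepthBounds(const std::vector<float>& depths)
{
    DepthRange whole;
    for (float z : depths) whole.Add(z);

    SplitDepthBounds out;
    if (whole.Empty()) return out;

    // Assumption: the frustum is split halfway between min and max depth.
    const float mid = 0.5f * (whole.minZ + whole.maxZ);
    for (float z : depths)
    {
        (z <= mid ? out.nearHalf : out.farHalf).Add(z);
    }
    return out;
}

// A light covering [lightMinZ, lightMaxZ] in depth overlaps a non-empty range
// when the two intervals intersect.
inline bool Overlaps(const DepthRange& r, float lightMinZ, float lightMaxZ)
{
    return !r.Empty() && lightMinZ <= r.maxZ && lightMaxZ >= r.minZ;
}
```

For a tile whose depths cluster around 1 and 9, a light sitting between depth 4 and 6 overlaps the single min/max range but overlaps neither of the two halves, so it can be culled.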

A more interesting performance optimization is a method called Clustered Shading presented by Ola Olsson, Markus Billeter, and Ulf Assarsson in their paper titled "Clustered Deferred and Forward Shading" [23]. Their method groups view samples with similar properties (3D position and normals) into clusters. Lights in the scene are assigned to clusters and the per-cluster light lists are used in final shading. In their paper, they claim to be able to handle one million light sources while maintaining real-time frame-rates.

Other space partitioning algorithms may also prove successful at improving the performance of tile-based compute shaders. For example, Binary Space Partitioning (BSP) trees could be used to split lights into the leaves of a binary tree. When performing final shading, only the lights in the leaf node of the BSP tree that contains the fragment need to be considered for lighting.

Another possible data structure that could be used to reduce redundant lighting calculations is a sparse voxel octree as described by Cyril Crassin and Simon Green in OpenGL Insights [24]. Instead of using the octree to store material information, the data structure is used to store the light index lists of lights contained in each node. During final shading, the light index lists are queried from the octree depending on the 3D position of the fragment.

Conclusion

In this article I described the implementation of three rendering techniques:

- Forward Rendering

- Deferred Rendering

- Tiled Forward (Forward+) Rendering

I have shown that traditional forward rendering is well suited for scenarios which require support for multiple shading models and semi-transparent objects. Forward rendering is also well suited for scenes that have only a few dynamic lights. The analysis shows that scenes that contain fewer than 100 dynamic scene lights still perform reasonably well on commercial hardware. Forward rendering also has a low memory footprint when multiple shadow maps are not required. When GPU memory is scarce and support for many dynamic lights is not a requirement (for example on mobile or embedded devices) traditional forward rendering may be the best choice.

Deferred rendering is best suited for scenarios that don't have a requirement for multiple shading models or semi-transparent objects but do have a requirement of many dynamic scene lights. Deferred rendering is well suited for many shadow casting lights because a single shadow map can be shared between successive lights in the lighting pass. Deferred rendering is not well suited for devices with limited GPU memory. Amongst the three rendering techniques, deferred rendering has the largest memory footprint requiring an additional 4 bytes per pixel per G-buffer texture (~3.7 MB per texture at a screen resolution of 1280x720).

Tiled forward rendering has a small initial overhead required to dispatch the light culling compute shader but the performance of tiled forward rendering with many dynamic lights quickly surpasses the performance of both forward and deferred rendering. Tiled forward rendering requires a small amount of additional memory. Approximately 5.7 MB of additional storage is required to store the light index list and light grid using 16x16 tiles at a screen resolution of 1280x720. Tiled forward rendering requires that the target platform has support for compute shaders. It is possible to perform the light culling on the CPU and pass the light index list and light grid to the pixel shader in the case that compute shaders are not available but the performance trade-off might negate the benefit of performing light culling in the first place.

Tiled forward shading supports both multi-material and semi-transparent materials natively (using two light index lists) and both opaque and semi-transparent materials can benefit from the performance gains offered by tiled forward shading.

Although tiled forward shading may seem like the answer to life, the universe and everything (actually, 42 is), there are improvements that can be made to this technique. Clustered deferred rendering [23] should be able to perform even better at the expense of additional memory requirements. Perhaps the memory requirements of clustered deferred rendering could be mitigated by the use of sparse volume textures [20] but that has yet to be seen.

Download the Demo

The source code (including pre-built executables) can be downloaded using the link below. The zip file is almost 1GB in size and contains all of the pre-built 3rd party libraries and the Crytek Sponza scene [11].

References

[1] T. Saito and T. Takahashi, 'Comprehensible rendering of 3-D shapes', ACM SIGGRAPH Computer Graphics, vol. 24, no. 4, pp. 197-206, 1990.

[2] T. Harada, J. McKee and J. Yang, 'Forward+: Bringing Deferred Lighting to the Next Level', Computer Graphics Forum, vol. 0, no. 0, pp. 1-4, 2012.

[3] T. Harada, J. McKee and J. Yang, 'Forward+: A Step Toward Film-Style Shading in Real Time', in GPU Pro 4, 1st ed., W. Engel, Ed. Boca Raton, Florida, USA: CRC Press, 2013, pp. 115-135.

[4] M. Billeter, O. Olsson and U. Assarsson, 'Tiled Forward Shading', in GPU Pro 4, 1st ed., W. Engel, Ed. Boca Raton, Florida, USA: CRC Press, 2013, pp. 99-114.

[5] O. Olsson and U. Assarsson, 'Tiled Shading', Journal of Graphics, GPU, and Game Tools, vol. 15, no. 4, pp. 235-251, 2011.

[6] Unity Technologies, 'Unity - Manual: Light Probes', Docs.unity3d.com, 2015. [Online]. Available: http://docs.unity3d.com/Manual/LightProbes.html. [Accessed: 04- Aug- 2015].

[7] Assimp.sourceforge.net, 'Open Asset Import Library', 2015. [Online]. Available: http://assimp.sourceforge.net/. [Accessed: 10- Aug- 2015].

[8] Msdn.microsoft.com, 'Semantics (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/bb509647(v=vs.85).aspx. [Accessed: 10- Aug- 2015].

[9] Msdn.microsoft.com, 'Variable Syntax (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/bb509706(v=vs.85).aspx. [Accessed: 10- Aug- 2015].

[10] Msdn.microsoft.com, 'earlydepthstencil (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/ff471439(v=vs.85).aspx. [Accessed: 11- Aug- 2015].

[11] Crytek.com, 'Crytek3 Downloads', 2015. [Online]. Available: http://www.crytek.com/cryengine/cryengine3/downloads. [Accessed: 12- Aug- 2015].

[12] Graphics.cs.williams.edu, 'Computer Graphics Data - Meshes', 2015. [Online]. Available: http://graphics.cs.williams.edu/data/meshes.xml. [Accessed: 12- Aug- 2015].

[13] M. van der Leeuw, 'Deferred Rendering in Killzone 2', SCE Graphics Seminar, Palo Alto, California, 2007.

[14] Msdn.microsoft.com, 'D3D11_DEPTH_STENCIL_DESC structure (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/ff476110(v=vs.85).aspx. [Accessed: 13- Aug- 2015].

[15] Electron9.phys.utk.edu, 'Coherence', 2015. [Online]. Available: http://electron9.phys.utk.edu/optics421/modules/m5/Coherence.htm. [Accessed: 14- Aug- 2015].

[16] Msdn.microsoft.com, 'Load (DirectX HLSL Texture Object) (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/bb509694(v=vs.85).aspx. [Accessed: 14- Aug- 2015].

[17] Msdn.microsoft.com, 'Compute Shader Overview (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/ff476331(v=vs.85).aspx. [Accessed: 04- Sep- 2015].

[18] C. Ericson, Real-time collision detection. Amsterdam: Elsevier, 2005.

[19] J. Andersson, 'DirectX 11 Rendering in Battlefield 3', 2011.

[20] Msdn.microsoft.com, 'Volume Tiled Resources (Windows)', 2015. [Online]. Available: https://msdn.microsoft.com/en-us/library/windows/desktop/dn903951(v=vs.85).aspx. [Accessed: 29- Sep- 2015].

[21] G. Thomas, 'Advancements in Tiled-Based Compute Rendering', San Francisco, California, USA, 2015.

[22] J. Arvo, 'A Simple Method for Box-Sphere Intersection Testing', in Graphics Gems, 1st ed., A. Glassner, Ed. Academic Press, 1990.

[23] O. Olsson, M. Billeter and U. Assarsson, 'Clustered Deferred and Forward Shading', High Performance Graphics, 2012.

[24] C. Crassin and S. Green, 'Octree-Based Sparse Voxelization Using the GPU Hardware Rasterizer', in OpenGL Insights, 1st ed., P. Cozzi and C. Riccio, Ed. CRC Press, 2012, p. Chapter 22.

[25] Mediacollege.com, 'Three Point Lighting', 2015. [Online]. Available: http://www.mediacollege.com/lighting/three-point/. [Accessed: 02- Oct- 2015].

Original article: http://www.3dgep.com/forward-plus/

Introduction

Forward rendering works by rasterizing each geometric object in the scene. During shading, a list of lights in the scene is iterated to determine how the geometric object should be lit. This means that every geometric object has to consider every light in the scene. Of course, we can optimize this by discarding geometric objects that are occluded or do not appear in the view frustum of the camera. We can further optimize this technique by discarding lights that are not within the view frustum of the camera. If the range of the lights is known, then we can perform frustum culling on the light volumes before rendering the scene geometry. Object culling and light volume culling provide limited optimizations for this technique and light culling is often not practiced when using a forward rendering pipeline. It is more common to simply limit the number of lights that can affect a scene object. For example, some graphics engines will perform per-pixel lighting with the closest two or three lights and per-vertex lighting on three or four of the next closest lights. In traditional fixed-function rendering pipelines provided by OpenGL and DirectX the number of dynamic lights active in the scene at any time was limited to about eight. Even with modern graphics hardware, forward rendering pipelines are limited to about 100 dynamic scene lights before noticeable frame-rate issues start appearing.

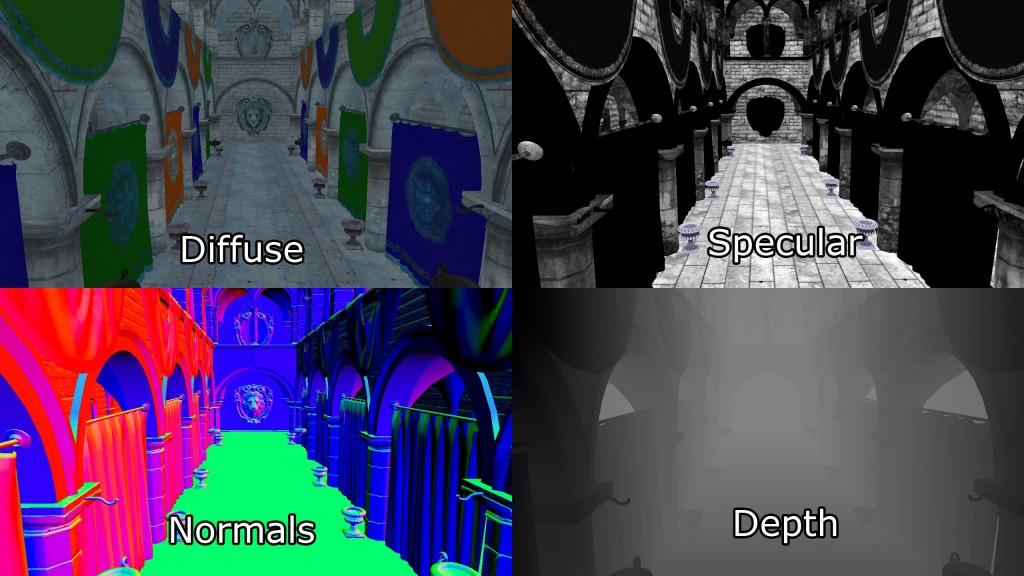

Deferred shading, on the other hand, works by rasterizing all of the scene objects (without lighting) into a series of 2D image buffers that store the geometric information that is required to perform the lighting calculations in a later pass. The information that is stored in the 2D image buffers is:

- screen space depth

- surface normals

- diffuse color

- specular color and specular power

The textures that compose the G-Buffer. Diffuse (top-left), Specular (top-right), Normals (bottom-left), and Depth (bottom-right). The specular power is stored in the alpha channel of the specular texture (top-right).

The combination of these 2D image buffers is referred to as the Geometric Buffer (or G-buffer) [1].

Other information could also be stored into the image buffers if it is required for the lighting calculations that will be performed later but each G-buffer texture requires at least 8.29 MB of texture memory at full HD (1080p) and 32-bits per pixel.
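The memory figure is simple arithmetic: width times height times bytes per pixel. The short C++ sketch below reproduces it; the helper names are hypothetical and a megabyte is taken to be 10^6 bytes, which is the convention that matches the number quoted above.

```cpp
#include <cstdint>

// Bytes required for one texture of the given size and bit depth.
constexpr std::uint64_t TextureBytes(std::uint32_t width,
                                     std::uint32_t height,
                                     std::uint32_t bitsPerPixel)
{
    return static_cast<std::uint64_t>(width) * height * (bitsPerPixel / 8);
}

// Converts bytes to megabytes, assuming 1 MB = 1,000,000 bytes.
constexpr double ToMegabytes(std::uint64_t bytes)
{
    return static_cast<double>(bytes) / 1.0e6;
}

// 1920 x 1080 x 4 bytes = 8,294,400 bytes, or about 8.29 MB per texture.
static_assert(TextureBytes(1920, 1080, 32) == 8294400, "1080p, 32 bits per pixel");
```

The same calculation at 1280x720 gives 3,686,400 bytes per texture, and the cost scales linearly with the number of G-buffer textures.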

After the G-buffer has been generated, the geometric information can then be used to compute the lighting information in the lighting pass. The lighting pass is performed by rendering each light source as a geometric object in the scene. Each pixel that is touched by the light’s geometric representation is shaded using the desired lighting equation.

The obvious advantage with the deferred shading technique compared to forward rendering is that the expensive lighting calculations are only computed once per light per covered pixel. With modern hardware, the deferred shading technique can handle about 2,500 dynamic scene lights at full HD resolutions (1080p) before frame-rate issues start appearing when rendering only opaque scene objects.

One of the disadvantages of using deferred shading is that only opaque objects can be rasterized into the G-buffers. The reason for this is that multiple transparent objects may cover the same screen pixels but it is only possible to store a single value per pixel in the G-buffers. In the lighting pass the depth value, surface normal, diffuse and specular colors are sampled for the current screen pixel that is being lit. Since only a single value from each G-buffer is sampled, transparent objects cannot be supported in the lighting pass. To circumvent this issue, transparent geometry must be rendered using the standard forward rendering technique which limits either the amount of transparent geometry in the scene or the number of dynamic lights in the scene. A scene which consists of only opaque objects can handle about 2000 dynamic lights before frame-rate issues start appearing.

Another disadvantage of deferred shading is that only a single lighting model can be simulated in the lighting pass. This is due to the fact that it is only possible to bind a single pixel shader when rendering the light geometry. This is usually not an issue for pipelines that make use of übershaders as rendering with a single pixel shader is the norm, however if your rendering pipeline takes advantage of several different lighting models implemented in various pixel shaders then it will be problematic to switch your rendering pipeline to use deferred shading.

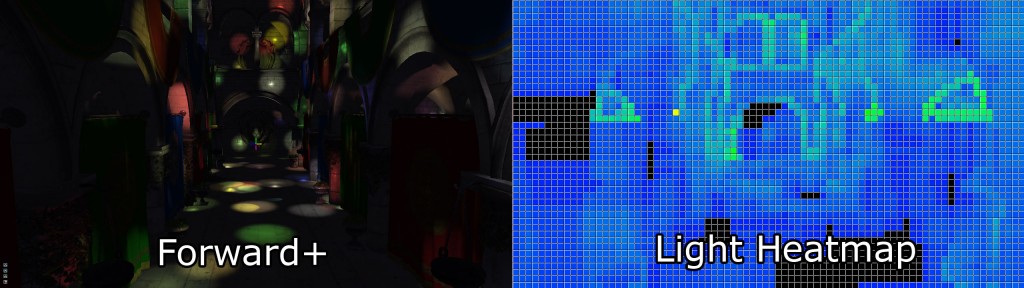

Forward+ [2][3] (also known as tiled forward shading) [4][5] is a rendering technique that combines forward rendering with tiled light culling to reduce the number of lights that must be considered during shading. Forward+ primarily consists of two stages:

- Light culling

- Forward rendering

Forward+ Lighting. Default Lighting (left), Light heatmap (right). The colors in the heatmap indicate how many lights are affecting the tile. Black tiles contain no lights while blue tiles contain between 1-10 lights. The green tiles contain 20-30 lights.

The first pass of the Forward+ rendering technique uses a uniform grid of tiles in screen space to partition the lights into per-tile lists.

The second pass uses a standard forward rendering pass to shade the objects in the scene but instead of looping over every dynamic light in the scene, the current pixel’s screen-space position is used to look-up the list of lights in the grid that was computed in the previous pass. The light culling provides a significant performance improvement over the standard forward rendering technique as it greatly reduces the number of redundant lights that must be iterated to correctly light the pixel. Both opaque and transparent geometry can be handled in a similar manner without a significant loss of performance and handling multiple materials and lighting models is natively supported with Forward+.
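The per-pixel lookup can be sketched as follows. This C++ example only models the data flow; it is not the demo's shader code. It assumes 16x16 pixel tiles, a light grid that stores an offset and a count per tile, and a flat light index list. All names are hypothetical.

```cpp
#include <cstdint>
#include <vector>

constexpr std::uint32_t kTileSize = 16; // Assumption: 16x16 pixel tiles.

// Assumption: each grid cell stores where the tile's lights start in the
// light index list and how many lights the tile contains.
struct LightGridCell { std::uint32_t offset; std::uint32_t count; };

struct TiledLights
{
    std::uint32_t tilesX = 0;                  // Number of tiles per row.
    std::vector<LightGridCell> grid;           // One cell per tile, row by row.
    std::vector<std::uint32_t> lightIndexList; // Indices into the global light list.
};

// Number of tiles needed to cover the given number of pixels.
inline std::uint32_t TileCount(std::uint32_t pixels)
{
    return (pixels + kTileSize - 1) / kTileSize;
}

// Calls shade(lightIndex) for each light assigned to the tile that
// contains the pixel (px, py).
template <typename F>
void ForEachLightAtPixel(const TiledLights& t, std::uint32_t px, std::uint32_t py, F&& shade)
{
    const std::uint32_t tile = (py / kTileSize) * t.tilesX + (px / kTileSize);
    const LightGridCell& cell = t.grid[tile];
    for (std::uint32_t i = 0; i < cell.count; ++i)
    {
        shade(t.lightIndexList[cell.offset + i]);
    }
}
```

The loop length depends on the number of lights in the tile rather than the number of lights in the scene, which is where the performance gain comes from.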

Since Forward+ incorporates the standard forward rendering pipeline into its technique, Forward+ can be integrated into existing graphics engines that were initially built using forward rendering. Forward+ does not make use of G-buffers and does not suffer the limitations of deferred shading. Both opaque and transparent geometry can be rendered using Forward+. Using modern graphics hardware, a scene consisting of 5,000 – 6,000 dynamic lights can be rendered in real-time at full HD resolutions (1080p).

In the remainder of this article, I will describe the implementation of these three techniques:

- Forward Rendering

- Deferred Shading

- Forward+ (Tiled Forward Rendering)

I will also show performance statistics under various circumstances to try to determine under which conditions one technique performs better than the others.

Definitions

In the context of this article, it is important to define a few terms so that the rest of the article is easier to understand. If you are familiar with the basic terminology used in graphics programming, you may skip this section.

The scene refers to a nested hierarchy of objects that can be rendered. For example, all of the static objects that can be rendered will be grouped into a scene. Each individual renderable object is referenced in the scene using a scene node. Each scene node references a single renderable object (such as a mesh) and the entire scene can be referenced using the scene’s top-level node called the root node. The connection of scene nodes within the scene is also called a scene graph. Since the root node is also a scene node, scenes can be nested to create more complex scene graphs with both static and dynamic objects.

A pass refers to a single operation that performs one step of a rendering technique. For example, the opaque pass is a pass that iterates over all of the objects in the scene and renders only the opaque objects. The transparent pass will also iterate over all of the objects in the scene but renders only the transparent objects. A pass could also be used for more general operations such as copying GPU resources or dispatching a compute shader.

A technique is the combination of several passes that must be executed in a particular order to implement a rendering algorithm.

A pipeline state refers to the configuration of the rendering pipeline before an object is rendered. A pipeline state object encapsulates the following render state:

- Shaders (vertex, tessellation, geometry, and pixel)

- Rasterizer state (polygon fill mode, culling mode, scissor culling, viewports)

- Blend state

- Depth/Stencil state

- Render target

DirectX 12 introduces a pipeline state object but my definition of the pipeline state varies slightly from the DirectX 12 definition.

Forward rendering refers to a rendering technique that traditionally has only two passes:

- Opaque Pass

- Transparent Pass

The opaque pass will render all opaque objects in the scene ideally sorted front to back (relative to the camera) in order to minimize overdraw. During the opaque pass, no blending needs to be performed.

The transparent pass will render all transparent objects in the scene ideally sorted back to front (relative to the camera) in order to support correct blending. During the transparent pass, alpha blending needs to be enabled to allow for semi-transparent materials to be blended correctly with pixels already rendered to the render target’s color buffer.
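The two sort orders can be sketched in a few lines of C++. The `DrawItem` type and its fields are hypothetical, and the rule that an opacity below one marks an object as transparent is borrowed from the material description later in this article.

```cpp
#include <algorithm>
#include <vector>

struct DrawItem
{
    int   id;        // Hypothetical handle to a renderable object.
    float viewDepth; // Distance from the camera along the view direction.
    float opacity;   // 1 = fully opaque, less than 1 = semi-transparent.
};

struct RenderQueues { std::vector<DrawItem> opaque, transparent; };

inline RenderQueues BuildQueues(const std::vector<DrawItem>& items)
{
    RenderQueues q;
    for (const DrawItem& d : items)
    {
        (d.opacity < 1.0f ? q.transparent : q.opaque).push_back(d);
    }

    // Opaque pass: front to back, to minimize overdraw.
    std::sort(q.opaque.begin(), q.opaque.end(),
              [](const DrawItem& a, const DrawItem& b) { return a.viewDepth < b.viewDepth; });

    // Transparent pass: back to front, so that blending is applied in the right order.
    std::sort(q.transparent.begin(), q.transparent.end(),
              [](const DrawItem& a, const DrawItem& b) { return a.viewDepth > b.viewDepth; });

    return q;
}
```

Sorting whole objects by a single depth value is an approximation; intersecting or very large transparent objects can still blend in the wrong order.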

During forward rendering, all lighting is performed in the pixel shader together with all other material shading instructions.

Deferred shading refers to a rendering technique that consists of three primary passes:

- Geometry Pass

- Lighting Pass

- Transparent Pass

The first pass is the geometry pass which is similar to the opaque pass of the forward rendering technique because only opaque objects are rendered in this pass. The difference is that the geometry pass does not perform any lighting calculations but only outputs the geometric and material data to the G-buffer that was described in the introduction.

In the lighting pass, the geometric volumes that represent the lights are rendered into the scene and the material information stored in the G-buffer is used to compute the lighting for the rasterized pixels.

The final pass is the transparent pass. This pass is identical to the transparent pass of the forward rendering technique. Since deferred shading has no native support for transparent materials, transparent objects have to be rendered in a separate pass that performs lighting using the standard forward rendering method.

Forward+ (also referred to as tiled forward rendering) is a rendering technique that consists of three primary passes:

- Light Culling Pass

- Opaque Pass

- Transparent Pass

As mentioned in the introduction, the light culling pass is responsible for sorting the dynamic lights in the scene into screen space tiles. A light index list is used to indicate which light indices (from the global light list) are overlapping each screen tile. In the light culling pass, two sets of light index lists will be generated:

- Opaque light index list

- Transparent light index list

The opaque light index list is used when rendering opaque geometry and the transparent light index list is used when rendering transparent geometry.

The opaque and transparent passes of the Forward+ rendering technique are identical to those of the standard forward rendering technique but instead of looping over all of the dynamic lights in the scene, only the lights in the current fragment’s screen space tile need to be considered.

A light refers to one of the following types of lights:

- Point light

- Spot light

- Directional light

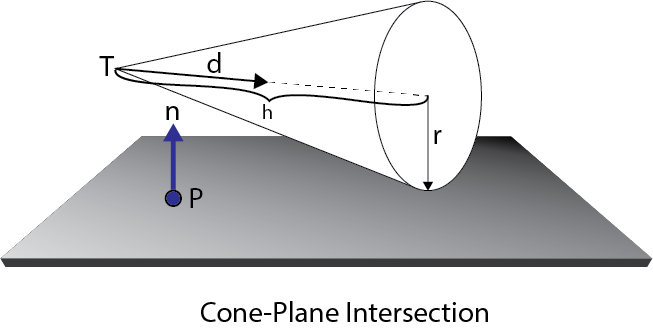

All rendering techniques described in this article have support for these three light types. Area lights are not supported. The point light and the spot light are simulated as emanating from a single point of origin while the directional light is considered to emanate from a point infinitely far away emitting light everywhere in the same direction. Point lights and spot lights have a limited range after which their intensity falls off to zero. The fall-off of the intensity of the light is called attenuation. Point lights are geometrically represented as spheres, spot lights as cones, and directional lights as full-screen quads.

Let’s first take a more detailed look at the standard forward rendering technique.

Forward Rendering

Forward rendering is the simplest of the three lighting techniques and the most common technique used to render graphics in games. It is also the most computationally expensive technique for computing lighting and for this reason, it does not allow for a large number of dynamic lights to be used in the scene.

Most graphics engines that use forward rendering will utilize various techniques to simulate many lights in the scene. For example, lightmapping and light probes are methods used to pre-compute the lighting contributions from static lights placed in the scene and store these lighting contributions in textures that are loaded at runtime. Unfortunately, lightmapping and light probes cannot be used to simulate dynamic lights in the scene because the lights that were used to produce the lightmaps are often discarded at runtime.

For this experiment, forward rendering is used as the ground truth to compare the other two rendering techniques. The forward rendering technique is also used to establish a performance baseline that can be used to compare the performance of the other rendering techniques.

Many functions of the forward rendering technique are reused in the deferred and forward+ rendering techniques. For example, the vertex shader used in forward rendering is also used for both deferred shading and forward+ rendering. Also the methods to compute the final lighting and material shading are reused in all rendering techniques.

In the next section, I will describe the implementation of the forward rendering technique.

Vertex Shader

The vertex shader is common to all rendering techniques. In this experiment, only static geometry is supported and there is no skeletal animation or terrain that would require a different vertex shader. The vertex shader is as simple as it can be while supporting the required functionality in the pixel shader such as normal mapping.

Before I show the vertex shader code, I will describe the data structures used by the vertex shader.

```hlsl
struct AppData
{
    float3 position : POSITION;
    float3 tangent  : TANGENT;
    float3 binormal : BINORMAL;
    float3 normal   : NORMAL;
    float2 texCoord : TEXCOORD0;
};
```

The AppData structure defines the data that is expected to be sent by the application code (for a tutorial on how to pass data from the application to a vertex shader, please refer to my previous article titled Introduction to DirectX 11). For normal mapping, in addition to the normal vector, we also need to send the tangent vector, and optionally the binormal (or bitangent) vector. The tangent and binormal vectors can either be created by the 3D artist when the model is created, or they can be generated by the model importer. In my case, I rely on the Open Asset Import Library [7] to generate the tangents and bitangents if they were not already created by the 3D artist.

In the vertex shader, we also need to know how to transform the object space vectors that are sent by the application into view space which are required by the pixel shader. To do this, we need to send the world, view, and projection matrices to the vertex shader (for a review of the various spaces used in this article, please refer to my previous article titled Coordinate Systems). To store these matrices, I will create a constant buffer that will store the per-object variables needed by the vertex shader.

```hlsl
cbuffer PerObject : register( b0 )
{
    float4x4 ModelViewProjection;
    float4x4 ModelView;
}
```

Since I don’t need the world matrix on its own in the shader, I precompute the combined model-view matrix and the combined model-view-projection matrix in the application and send both matrices to the vertex shader in a single constant buffer.
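On the application side, precomputing the two matrices amounts to two matrix products per object. The C++ sketch below uses a tiny hand-written matrix type so that it is self-contained; a real application would use its math library instead. The column-vector convention (a point is transformed as M * v, so the combined matrix is projection * view * model) and all helper names are assumptions for this example.

```cpp
#include <array>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>; // Indexed as m[row][col].

inline Mat4 Identity()
{
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

inline Mat4 Translation(float x, float y, float z)
{
    Mat4 m = Identity();
    m[0][3] = x; m[1][3] = y; m[2][3] = z;
    return m;
}

inline Mat4 Mul(const Mat4& a, const Mat4& b)
{
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Transforms a column vector: r = m * v.
inline Vec4 Mul(const Mat4& m, const Vec4& v)
{
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// Mirrors the two members of the PerObject constant buffer.
struct PerObject { Mat4 ModelViewProjection; Mat4 ModelView; };

inline PerObject MakePerObject(const Mat4& model, const Mat4& view, const Mat4& projection)
{
    PerObject out;
    out.ModelView = Mul(view, model);                          // object space to view space
    out.ModelViewProjection = Mul(projection, out.ModelView);  // object space to clip space
    return out;
}
```

Doing the products once per object on the CPU saves two matrix multiplications per vertex in the shader.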

The output from the vertex shader (and consequently, the input to the pixel shader) looks like this:

```hlsl
struct VertexShaderOutput
{
    float3 positionVS   : TEXCOORD0;    // View space position.
    float2 texCoord     : TEXCOORD1;    // Texture coordinate
    float3 tangentVS    : TANGENT;      // View space tangent.
    float3 binormalVS   : BINORMAL;     // View space binormal.
    float3 normalVS     : NORMAL;       // View space normal.
    float4 position     : SV_POSITION;  // Clip space position.
};
```

The VertexShaderOutput structure is used to pass the transformed vertex attributes to the pixel shader. The members that are named with a VS postfix indicate that the vector is expressed in view space. I chose to do all of the lighting in view space, as opposed to world space, because it is easier to work in view space coordinates when implementing the deferred shading and forward+ rendering techniques.

The vertex shader is fairly straightforward and minimal. Its only purpose is to transform the object space vectors passed by the application into view space to be used by the pixel shader.

The vertex shader must also compute the clip space position that is consumed by the rasterizer. The SV_POSITION semantic is applied to the output value from the vertex shader to specify that the value is used as the clip space position but this semantic can also be applied to an input variable of a pixel shader. When SV_POSITION is used as an input semantic to a pixel shader, the value is the position of the pixel in screen space [8]. In both the deferred shading and the forward+ shaders, I will use this semantic to get the screen space position of the current pixel.

```hlsl
VertexShaderOutput VS_main( AppData IN )
{
    VertexShaderOutput OUT;

    OUT.position = mul( ModelViewProjection, float4( IN.position, 1.0f ) );

    OUT.positionVS = mul( ModelView, float4( IN.position, 1.0f ) ).xyz;
    OUT.tangentVS = mul( ( float3x3 )ModelView, IN.tangent );
    OUT.binormalVS = mul( ( float3x3 )ModelView, IN.binormal );
    OUT.normalVS = mul( ( float3x3 )ModelView, IN.normal );

    OUT.texCoord = IN.texCoord;

    return OUT;
}
```

You will notice that I am pre-multiplying the input vectors by the matrices. This indicates that the matrices are stored in column-major order by default. Prior to DirectX 10, matrices in HLSL were loaded in row-major order and input vectors were post-multiplied by the matrices. Since DirectX 10, matrices are loaded in column-major order by default. You can change the default order by specifying the row_major type modifier on the matrix variable declarations [9].
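The relationship between the two multiplication orders can be checked numerically. In HLSL, `mul(M, v)` treats `v` as a column vector and `mul(v, M)` treats it as a row vector. The C++ sketch below, with hypothetical helper names, shows that the two forms give the same result only when the matrix is transposed, which is why mixing conventions between the application and the shader usually shows up as a transposed transformation.

```cpp
#include <array>

using Vec3 = std::array<float, 3>;
using Mat3 = std::array<std::array<float, 3>, 3>; // Indexed as m[row][col].

// M * v, with v treated as a column vector (HLSL: mul(M, v)).
inline Vec3 MulColumn(const Mat3& m, const Vec3& v)
{
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int k = 0; k < 3; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// v * M, with v treated as a row vector (HLSL: mul(v, M)).
inline Vec3 MulRow(const Vec3& v, const Mat3& m)
{
    Vec3 r{};
    for (int j = 0; j < 3; ++j)
        for (int k = 0; k < 3; ++k)
            r[j] += v[k] * m[k][j];
    return r;
}

inline Mat3 Transpose(const Mat3& m)
{
    Mat3 t{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            t[j][i] = m[i][j];
    return t;
}
```

For any matrix `M` and vector `v`, `MulColumn(M, v)` equals `MulRow(v, Transpose(M))`, while `MulRow(v, M)` generally gives a different vector.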

Pixel Shader

The pixel shader computes all of the lighting and shading that determines the final color of a single screen pixel. The lighting equations used in this pixel shader are described in a previous article titled Texturing and Lighting in DirectX 11. If you are not familiar with lighting models, you should read that article before continuing.

The pixel shader uses several structures to do its work. The Material struct stores all of the information that describes the surface material of the object being shaded and the Light struct contains all of the parameters that are necessary to describe a light that is placed in the scene.

Material

The Material struct defines all of the properties that are necessary to describe the surface of the object currently being shaded. Since some material properties can also have an associated texture (for example, diffuse textures, specular textures, or normal texture), we will also use the material to indicate if those textures are present on the object.

```hlsl
struct Material
{
    float4  GlobalAmbient;
    //-------------------------- ( 16 bytes )
    float4  AmbientColor;
    //-------------------------- ( 16 bytes )
    float4  EmissiveColor;
    //-------------------------- ( 16 bytes )
    float4  DiffuseColor;
    //-------------------------- ( 16 bytes )
    float4  SpecularColor;
    //-------------------------- ( 16 bytes )
    // Reflective value.
    float4  Reflectance;
    //-------------------------- ( 16 bytes )
    float   Opacity;
    float   SpecularPower;
    // For transparent materials, IOR > 0.
    float   IndexOfRefraction;
    bool    HasAmbientTexture;
    //-------------------------- ( 16 bytes )
    bool    HasEmissiveTexture;
    bool    HasDiffuseTexture;
    bool    HasSpecularTexture;
    bool    HasSpecularPowerTexture;
    //-------------------------- ( 16 bytes )
    bool    HasNormalTexture;
    bool    HasBumpTexture;
    bool    HasOpacityTexture;
    float   BumpIntensity;
    //-------------------------- ( 16 bytes )
    float   SpecularScale;
    float   AlphaThreshold;
    float2  Padding;
    //--------------------------- ( 16 bytes )
};  //--------------------------- ( 16 * 10 = 160 bytes )
```

The GlobalAmbient term is used to describe the ambient contribution applied to all objects in the scene globally. Technically, this variable should be a global variable (not specific to a single object) but since there is only a single material at a time in the pixel shader, I figured it was a fine place to put it.

The ambient, emissive, diffuse, and specular color values have the same meaning as in my previous article titled Texturing and Lighting in DirectX 11 so I will not explain them in detail here.

The Reflectance component could be used to indicate the amount of reflected color that should be blended with the diffuse color. This would require environment mapping to be implemented which I am not doing in this experiment so this value is not used here.

The Opacity value is used to determine the total opacity of an object. This value can be used to make objects appear transparent. This property is used to render semi-transparent objects in the transparent pass. If the opacity value is less than one (1 being fully opaque and 0 being fully transparent), the object will be considered transparent and will be rendered in the transparent pass instead of the opaque pass.

The SpecularPower variable is used to determine how shiny the object appears. Specular power was described in my previous article titled Texturing and Lighting in DirectX 11 so I won’t repeat it here.

The IndexOfRefraction variable can be applied on objects that should refract light through them. Since refraction requires environment mapping techniques that are not implemented in this experiment, this variable will not be used here.

The HasTexture variables (HasAmbientTexture through HasOpacityTexture) indicate whether the object being rendered has an associated texture for those properties. If the parameter is true then the corresponding texture will be sampled and the texel will be blended with the corresponding material color value.

The BumpIntensity variable is used to scale the height values from a bump map (not to be confused with normal mapping which does not need to be scaled) in order to soften or accentuate the apparent bumpiness of an object’s surface. In most cases models will use normal maps to add detail to the surface of an object without high tessellation but it is also possible to use a heightmap to do the same thing. If a model has a bump map, the material’s HasBumpTexture property will be set to true and in this case the model will be bump mapped instead of normal mapped.

The SpecularScale variable is used to scale the specular power value that is read from a specular power texture. Since textures usually store values as unsigned normalized values, when sampling from the texture the value is read as a floating-point value in the range of [0..1]. A specular power of 1.0 does not make much sense (as was explained in my previous article titled Texturing and Lighting in DirectX 11) so the specular power value read from the texture will be scaled by SpecularScale before being used for the final lighting computation.

The AlphaThreshold variable can be used to discard pixels whose opacity is below a certain value using the “discard” command in the pixel shader. This can be used with “cut-out” materials where the object does not need to be alpha blended but it should have holes in the object (for example, a chain-link fence).

The Padding variable is used to explicitly add eight bytes of padding to the material struct. Although HLSL will implicitly add this padding to make sure the size of the struct is a multiple of 16 bytes, explicitly adding the padding makes it clear that the size and alignment of this struct are identical to those of its C++ counterpart.
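The article does not show the C++ counterpart of this struct, so the following is only a sketch of what such a mirror could look like. The names `Float4` and `MaterialCPU` are hypothetical. The one detail worth highlighting is that an HLSL `bool` occupies 4 bytes, whereas a C++ `bool` is typically 1 byte, so the flags are declared as 32-bit integers here to keep the two layouts in step.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical CPU-side types; not taken from the article's source code.
struct Float4 { float x, y, z, w; };

struct alignas(16) MaterialCPU
{
    Float4   GlobalAmbient;
    Float4   AmbientColor;
    Float4   EmissiveColor;
    Float4   DiffuseColor;
    Float4   SpecularColor;
    Float4   Reflectance;
    float    Opacity;
    float    SpecularPower;
    float    IndexOfRefraction;
    uint32_t HasAmbientTexture;        // HLSL bool is 4 bytes wide.
    uint32_t HasEmissiveTexture;
    uint32_t HasDiffuseTexture;
    uint32_t HasSpecularTexture;
    uint32_t HasSpecularPowerTexture;
    uint32_t HasNormalTexture;
    uint32_t HasBumpTexture;
    uint32_t HasOpacityTexture;
    float    BumpIntensity;
    float    SpecularScale;
    float    AlphaThreshold;
    float    Padding[2];               // Explicit 8 bytes of padding.
};

// Fail the build if the CPU layout drifts away from the 160-byte HLSL layout.
static_assert( sizeof( MaterialCPU ) == 160, "MaterialCPU must be 160 bytes" );
static_assert( offsetof( MaterialCPU, Opacity ) == 96, "Opacity starts the 7th 16-byte row" );
static_assert( offsetof( MaterialCPU, SpecularScale ) == 144, "SpecularScale starts the last row" );
```

Compile-time checks like these catch layout drift when a field is added on one side only, which is otherwise a silent source of wrong shading values.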

The material properties are passed to the pixel shader using a constant buffer.

```hlsl
cbuffer Material : register( b2 )
{
    Material Mat;
};
```

This constant buffer and buffer register slot assignment is used for all pixel shaders described in this article.

Textures

The materials have support for eight different textures.

- Ambient

- Emissive

- Diffuse

- Specular

- SpecularPower

- Normals

- Bump

- Opacity

Not all scene objects will use all of the texture slots (normal and bump maps are mutually exclusive so they can probably reuse the same texture slot assignment). It is up to the 3D artist to determine which textures will be used by the models in the scene. The application will load the textures that are associated with a material. A texture parameter and an associated texture slot assignment are declared for each of these material properties.

```hlsl
Texture2D AmbientTexture        : register( t0 );
Texture2D EmissiveTexture       : register( t1 );
Texture2D DiffuseTexture        : register( t2 );
Texture2D SpecularTexture       : register( t3 );
Texture2D SpecularPowerTexture  : register( t4 );
Texture2D NormalTexture         : register( t5 );
Texture2D BumpTexture           : register( t6 );
Texture2D OpacityTexture        : register( t7 );
```

In every pixel shader described in this article, texture slots 0-7 will be reserved for these textures.

Lights

The Light struct stores all the information necessary to define a light in the scene. Spot lights, point lights and directional lights are not separated into different structs and all of the properties necessary to define any of those light types are stored in a single struct.

```hlsl
struct Light
{
    /**
    * Position for point and spot lights (World space).
    */
    float4  PositionWS;
    //--------------------------------------------------------------( 16 bytes )
    /**
    * Direction for spot and directional lights (World space).
    */
    float4  DirectionWS;
    //--------------------------------------------------------------( 16 bytes )
    /**
    * Position for point and spot lights (View space).
    */
    float4  PositionVS;
    //--------------------------------------------------------------( 16 bytes )
    /**
    * Direction for spot and directional lights (View space).
    */
    float4  DirectionVS;
    //--------------------------------------------------------------( 16 bytes )
    /**
    * Color of the light. Diffuse and specular colors are not separated.
    */
    float4  Color;
    //--------------------------------------------------------------( 16 bytes )
    /**
    * The half angle of the spotlight cone.
    */
    float   SpotlightAngle;
    /**
    * The range of the light.
    */
    float   Range;

    /**
    * The intensity of the light.
    */
    float   Intensity;

    /**
    * Disable or enable the light.
    */
    bool    Enabled;
    //--------------------------------------------------------------( 16 bytes )

    /**
    * Is the light selected in the editor?
    */
    bool    Selected;

    /**
    * The type of the light.
    */
    uint    Type;
    float2  Padding;
    //--------------------------------------------------------------( 16 bytes )
    //--------------------------------------------------------------( 16 * 7 = 112 bytes )
};
```

The Position and Direction properties are stored in both world space (with the WS postfix) and in view space (with the VS postfix). Of course the Position variable only applies to point and spot lights while the Direction variable only applies to spot and directional lights. I store both world space and view space position and direction vectors because I find it easier to work in world space in the application and then convert the world space vectors to view space before uploading the lights array to the GPU. This way I do not need to maintain multiple light lists, at the cost of additional space on the GPU. Even 10,000 lights only require 1.12 MB on the GPU, so I figured this was a reasonable sacrifice. On the other hand, minimizing the size of the light structs could have a positive impact on caching on the GPU and improve rendering performance. This is further discussed in the Future Considerations section at the end of this article.
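As a rough illustration of that conversion step, the sketch below transforms a light's world space vectors by a view matrix on the CPU. The types `Float4`, `Matrix4`, and `LightVectors` and the function names are hypothetical, and the row-vector convention (vector times matrix, with the translation in the last row) is an assumption rather than something the article states. Positions carry w = 1 so they pick up the translation, while directions carry w = 0 so they are only rotated.

```cpp
// Hypothetical minimal math types; the demo's real math library is not shown in the article.
struct Float4  { float x, y, z, w; };
struct Matrix4 { float m[4][4]; };   // Row-major, row-vector convention (assumed).

// Computes v * M.
Float4 Transform( const Float4& v, const Matrix4& M )
{
    const float in[4] = { v.x, v.y, v.z, v.w };
    float out[4] = { 0, 0, 0, 0 };
    for ( int col = 0; col < 4; ++col )
    {
        for ( int row = 0; row < 4; ++row )
        {
            out[col] += in[row] * M.m[row][col];
        }
    }
    return { out[0], out[1], out[2], out[3] };
}

struct LightVectors
{
    Float4 PositionWS;   // w = 1
    Float4 DirectionWS;  // w = 0
    Float4 PositionVS;
    Float4 DirectionVS;
};

// Refresh the view space copies before the lights array is uploaded to the GPU.
void UpdateViewSpace( LightVectors& light, const Matrix4& view )
{
    light.PositionVS  = Transform( light.PositionWS,  view );
    light.DirectionVS = Transform( light.DirectionWS, view );
}
```

Because the view matrix changes whenever the camera moves, a step like this would have to run every frame for every light that is uploaded.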

In some lighting models the diffuse and specular lighting contributions are separated. I chose not to separate the diffuse and specular color contributions because it is rare that these values differ. Instead I chose to store both the diffuse and specular lighting contributions in a single variable called Color.

The SpotlightAngle is the half-angle of the spotlight cone expressed in degrees. Working in degrees seems to be more intuitive than working in radians. Of course, the spotlight angle is converted to radians in the shader when we need its cosine to compare against the angle between the spotlight direction and the light vector.

The Range variable determines how far away the light will reach and still contribute light to a surface. Although not entirely physically correct (real lights have an attenuation that never actually reaches 0), lights are required to have a finite range to implement the deferred shading and forward+ rendering techniques. The units of this range are scene specific but generally I try to adhere to the convention that 1 unit is 1 meter. For point lights, the range is the radius of the sphere that represents the light and for spotlights, the range is the length of the cone that represents the light. Directional lights don’t use range because they are considered to be infinitely far away, pointing in the same direction everywhere.

The Intensity variable is used to modulate the computed light contribution. By default, this value is 1 but it can be used to make some lights brighter or more subtle than other lights.

Lights in the scene can be toggled on and off with the Enabled flag. Lights whose Enabled flag is false will be skipped in the shader.

Lights are editable in this demo. A light can be selected by clicking on it in the demo application and its properties can be modified. To indicate that a light is currently selected, the Selected flag will be set to true. When a light is selected in the scene, its visual representation will appear darker (less transparent) to indicate that it is currently selected.

The Type variable is used to indicate which type of light this is. It can have one of the following values:

```hlsl
#define POINT_LIGHT 0
#define SPOT_LIGHT 1
#define DIRECTIONAL_LIGHT 2
```

Once again the Light struct is explicitly padded with 8 bytes to match the struct layout in C++ and to make the struct explicitly aligned to 16 bytes which is required in HLSL.

The lights array is accessed through a StructuredBuffer. Most lighting shader implementations will use a constant buffer to store the lights array, but constant buffers are limited to 64 KB in size, which means that the array would be limited to about 585 lights (65,536 bytes / 112 bytes per light) before running out of constant memory on the GPU. Structured buffers are stored in texture memory, which is limited only by the amount of texture memory available on the GPU (usually in the GB range on desktop GPUs). Texture memory is also very fast on most GPUs so storing the lights in a structured buffer did not reduce performance. In fact, on my particular GPU (NVIDIA GeForce GTX 680) I noticed a considerable performance improvement when I moved the lights array to a structured buffer.

```hlsl
StructuredBuffer<Light> Lights : register( t8 );
```
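The size arithmetic behind these figures can be checked with a small CPU-side sketch. The `LightCPU` struct below is a hypothetical mirror of the HLSL Light struct (the article does not show its C++ counterpart), with the HLSL `bool` fields declared as 32-bit integers because an HLSL `bool` is 4 bytes wide.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical CPU-side mirror of the HLSL Light struct.
struct Float4 { float x, y, z, w; };

struct alignas(16) LightCPU
{
    Float4   PositionWS;
    Float4   DirectionWS;
    Float4   PositionVS;
    Float4   DirectionVS;
    Float4   Color;
    float    SpotlightAngle;
    float    Range;
    float    Intensity;
    uint32_t Enabled;      // HLSL bool is 4 bytes wide.
    uint32_t Selected;
    uint32_t Type;
    float    Padding[2];   // Explicit 8 bytes of padding.
};

static_assert( sizeof( LightCPU ) == 112, "LightCPU must match the 112-byte HLSL layout" );

// A 64 KB constant buffer holds this many whole lights.
constexpr std::size_t kConstantBufferBytes       = 64 * 1024;
constexpr std::size_t kMaxLightsInConstantBuffer = kConstantBufferBytes / sizeof( LightCPU );

// Bytes needed to store a given number of lights in a structured buffer.
constexpr std::size_t LightBufferBytes( std::size_t numLights )
{
    return numLights * sizeof( LightCPU );
}
```

With these definitions, `kMaxLightsInConstantBuffer` evaluates to 585 and `LightBufferBytes( 10000 )` to 1,120,000 bytes, which matches the 1.12 MB figure quoted earlier.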

Pixel Shader Continued

The pixel shader for the forward rendering technique is slightly more complicated than the vertex shader. If you have read my previous article titled Texturing and Lighting in DirectX 11 then you should already be familiar with most of the implementation of this shader, but I will explain it in detail here as it is the basis of all of the rendering algorithms shown in this article.

Materials

First, we need to gather the material's properties. If the material has textures associated with its various components, the textures will be sampled before the lighting is computed. After the material properties have been initialized, all of the lights in the scene will be iterated and the lighting contributions will be accumulated and modulated with the material properties to produce the final pixel color.

```hlsl
[earlydepthstencil]
float4 PS_main( VertexShaderOutput IN ) : SV_TARGET
{
    // Everything is in view space.
    float4 eyePos = { 0, 0, 0, 1 };
    Material mat = Mat;
```

The [earlydepthstencil] attribute before the function indicates that the GPU should take advantage of early depth and stencil culling [10]. This causes the depth/stencil tests to be performed before the pixel shader is executed. This attribute cannot be used on shaders that modify the pixel’s depth value by outputting a value using the SV_Depth semantic. Since this pixel shader only outputs a color value using the SV_TARGET semantic, it can take advantage of early depth/stencil testing to provide a performance improvement when a pixel is rejected. Most GPUs will perform early depth/stencil tests anyway, even without this attribute, and adding this attribute to the pixel shader did not have a noticeable impact on performance, but I decided to keep the attribute anyway.

Since all of the lighting computations will be performed in view space, the eye position (the position of the camera) is always (0, 0, 0). This is a nice side effect of working in view space: the camera’s eye position does not need to be passed as an additional parameter to the shader.

The last statement, `Material mat = Mat;`, creates a temporary copy of the material because its properties will be modified in the shader if there is an associated texture for the material property. Since the material properties are stored in a constant buffer, it is not possible to directly update the material’s properties through the constant buffer uniform variable, so a local temporary must be used.

Diffuse

The first material property we will read is the diffuse color.

```hlsl
    float4 diffuse = mat.DiffuseColor;
    if ( mat.HasDiffuseTexture )
    {
        float4 diffuseTex = DiffuseTexture.Sample( LinearRepeatSampler, IN.texCoord );
        if ( any( diffuse.rgb ) )
        {
            diffuse *= diffuseTex;
        }
        else
        {
            diffuse = diffuseTex;
        }
    }
```

The default diffuse color is the diffuse color assigned to the material’s DiffuseColor variable. If the material also has a diffuse texture associated with it then the color from the diffuse texture will be blended with the material’s diffuse color. If the material’s diffuse color is black (all of its RGB components are 0; the alpha component is not tested), then the material’s diffuse color will simply be replaced by the color in the diffuse texture. The any HLSL intrinsic function can be used to find out if any of the color components is not zero.
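The same combine rule is applied to the ambient, emissive, and specular colors later in the shader, so it may help to see it in isolation. The following is a CPU-side sketch of that rule only; the `Color` type and the `CombineWithTexture` name are hypothetical and do not appear in the article's source.

```cpp
// Hypothetical color type for illustration.
struct Color { float r, g, b, a; };

// Mirrors the shader's rule: modulate the texel by the material color,
// unless the material color's RGB is all zero, in which case the texel is used as-is.
Color CombineWithTexture( Color material, Color texel )
{
    // Equivalent of the HLSL intrinsic any( material.rgb ).
    const bool hasColor = material.r != 0.0f || material.g != 0.0f || material.b != 0.0f;
    if ( !hasColor )
    {
        return texel;
    }
    return { material.r * texel.r, material.g * texel.g,
             material.b * texel.b, material.a * texel.a };
}
```

One consequence of this rule is that a material cannot use a black color to deliberately darken a texture to zero; black is treated as "no color assigned".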

Opacity

The pixel’s alpha value is determined next.

```hlsl
    float alpha = diffuse.a;
    if ( mat.HasOpacityTexture )
    {
        // If the material has an opacity texture, use that to override the diffuse alpha.
        alpha = OpacityTexture.Sample( LinearRepeatSampler, IN.texCoord ).r;
    }
```

By default, the fragment’s transparency value is determined by the alpha component of the diffuse color. If the material has an opacity texture associated with it, the red component of the opacity texture is used as the alpha value, overriding the alpha value in the diffuse texture. In most cases, opacity textures store only a single channel in the first component of the color that is returned from the Sample method. In order to read from a single-channel texture, we must read from the red channel, not the alpha channel. The alpha channel of a single channel texture will always be 1 so reading the alpha channel from the opacity map (which is most likely a single channel texture) would not provide the value we require.

Ambient and Emissive

The ambient and emissive colors are read in a similar fashion as the diffuse color. The ambient color is also combined with the value of the material’s GlobalAmbient variable.

```hlsl
    float4 ambient = mat.AmbientColor;
    if ( mat.HasAmbientTexture )
    {
        float4 ambientTex = AmbientTexture.Sample( LinearRepeatSampler, IN.texCoord );
        if ( any( ambient.rgb ) )
        {
            ambient *= ambientTex;
        }
        else
        {
            ambient = ambientTex;
        }
    }
    // Combine the global ambient term.
    ambient *= mat.GlobalAmbient;

    float4 emissive = mat.EmissiveColor;
    if ( mat.HasEmissiveTexture )
    {
        float4 emissiveTex = EmissiveTexture.Sample( LinearRepeatSampler, IN.texCoord );
        if ( any( emissive.rgb ) )
        {
            emissive *= emissiveTex;
        }
        else
        {
            emissive = emissiveTex;
        }
    }
```

Specular Power

Next the specular power is computed.

```hlsl
    if ( mat.HasSpecularPowerTexture )
    {
        mat.SpecularPower = SpecularPowerTexture.Sample( LinearRepeatSampler, IN.texCoord ).r
            * mat.SpecularScale;
    }
```

If the material has an associated specular power texture, the red component of the texture is sampled and scaled by the value of the material’s SpecularScale variable. In this case, the value of the SpecularPower variable in the material is replaced with the scaled value from the texture.

Normals

If the material has either an associated normal map or a bump map, normal mapping or bump mapping will be performed to compute the normal vector. If neither a normal map nor a bump map texture is associated with the material, the input normal is used as-is.

```hlsl
    // Normal mapping
    if ( mat.HasNormalTexture )
    {
        // For scenes with normal mapping, I don't have to invert the binormal.
        float3x3 TBN = float3x3( normalize( IN.tangentVS ),
                                 normalize( IN.binormalVS ),
                                 normalize( IN.normalVS ) );

        N = DoNormalMapping( TBN, NormalTexture, LinearRepeatSampler, IN.texCoord );
    }
    // Bump mapping
    else if ( mat.HasBumpTexture )
    {
        // For most scenes using bump mapping, I have to invert the binormal.
        float3x3 TBN = float3x3( normalize( IN.tangentVS ),
                                 normalize( -IN.binormalVS ),
                                 normalize( IN.normalVS ) );

        N = DoBumpMapping( TBN, BumpTexture, LinearRepeatSampler, IN.texCoord, mat.BumpIntensity );
    }
    // Just use the normal from the model.
    else
    {
        N = normalize( float4( IN.normalVS, 0 ) );
    }
```

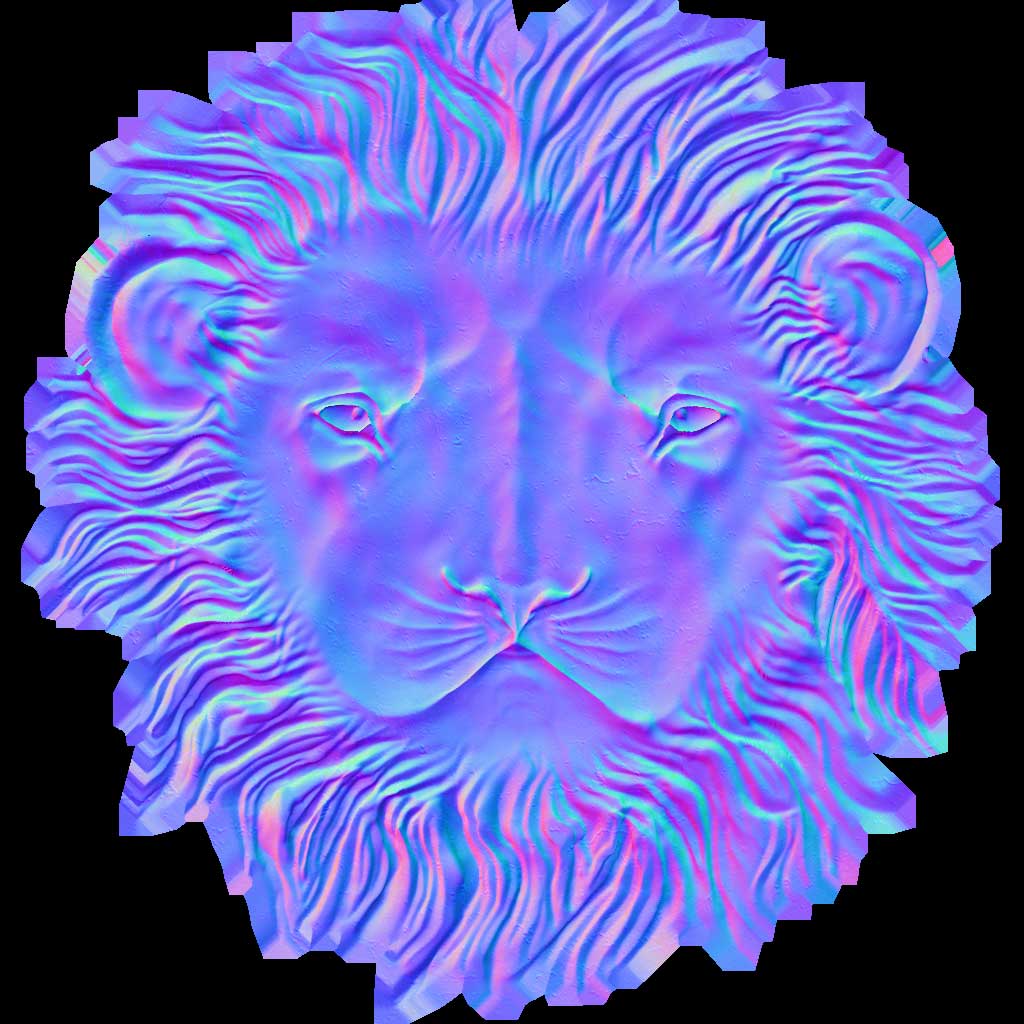

Normal Mapping

The DoNormalMapping function will perform normal mapping from the TBN (tangent, bitangent/binormal, normal) matrix and the normal map.

An example normal map texture of the lion head in the Crytek Sponza scene. [11]

```hlsl
float3 ExpandNormal( float3 n )
{
    return n * 2.0f - 1.0f;
}

float4 DoNormalMapping( float3x3 TBN, Texture2D tex, sampler s, float2 uv )
{
    float3 normal = tex.Sample( s, uv ).xyz;
    normal = ExpandNormal( normal );

    // Transform normal from tangent space to view space.
    normal = mul( normal, TBN );
    return normalize( float4( normal, 0 ) );
}
```

Normal mapping is pretty straightforward and is explained in more detail in a previous article titled Normal Mapping so I won’t explain it in detail here. Basically we just need to sample the normal from the normal map, expand the normal into the range [-1..1] and transform it from tangent space into view space by post-multiplying it by the TBN matrix.
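To make the expand-and-transform step concrete, here is a CPU-side sketch of the same arithmetic. The `Float3` type and the `NormalFromTexel` function are hypothetical names used only for illustration. Since the rows of the TBN matrix are the tangent, bitangent, and normal, multiplying a row vector by it amounts to a weighted sum of those three vectors.

```cpp
#include <cmath>

// Hypothetical vector type for illustration.
struct Float3 { float x, y, z; };

// Expand a texel from the [0..1] range into the [-1..1] range.
Float3 ExpandNormal( Float3 n )
{
    return { n.x * 2.0f - 1.0f, n.y * 2.0f - 1.0f, n.z * 2.0f - 1.0f };
}

// T, B and N are the rows of the TBN matrix, expressed in view space.
Float3 NormalFromTexel( Float3 texel, Float3 T, Float3 B, Float3 N )
{
    const Float3 n = ExpandNormal( texel );

    // Row vector times matrix: n.x * T + n.y * B + n.z * N.
    Float3 r = { n.x * T.x + n.y * B.x + n.z * N.x,
                 n.x * T.y + n.y * B.y + n.z * N.y,
                 n.x * T.z + n.y * B.z + n.z * N.z };

    const float len = std::sqrt( r.x * r.x + r.y * r.y + r.z * r.z );
    return { r.x / len, r.y / len, r.z / len };
}
```

A texel of (0.5, 0.5, 1.0), the typical flat color of a tangent space normal map, expands to (0, 0, 1) and therefore reproduces the interpolated surface normal unchanged.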

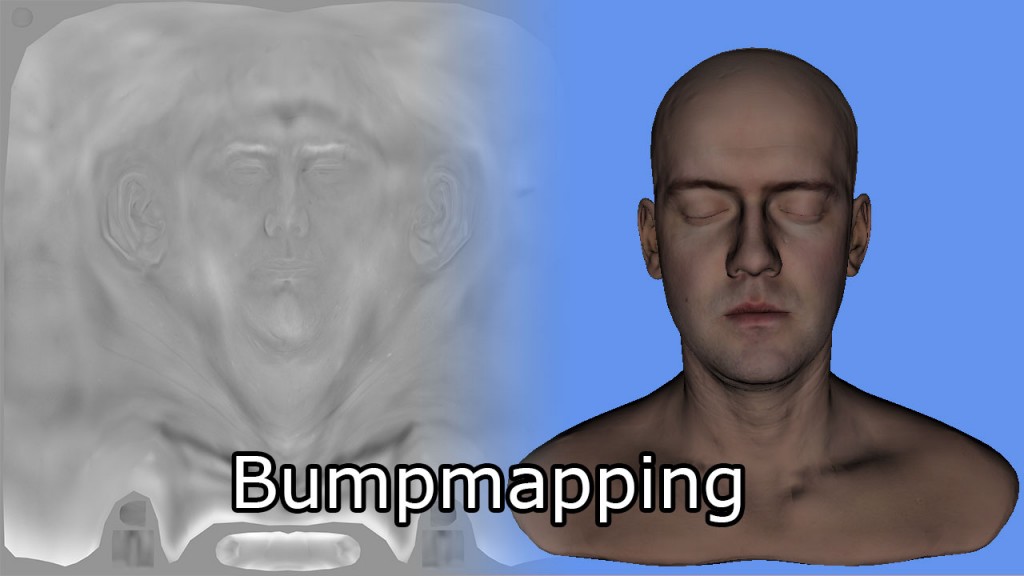

Bump Mapping

Bump mapping works in a similar way, except instead of storing the normals directly in the texture, the bumpmap texture stores height values in the range [0..1]. The normal can be generated from the height map by computing the gradient of the height values in both the U and V texture coordinate directions. Taking the cross product of the gradients in each direction gives the normal in texture space. Post-multiplying the resulting normal by the TBN matrix will give the normal in view space. The height values read from the bump map can be scaled to produce more (or less) accentuated bumpiness.

Bumpmap texture (left) and the corresponding head model (right). [12]

```hlsl
float4 DoBumpMapping( float3x3 TBN, Texture2D tex, sampler s, float2 uv, float bumpScale )
{
    // Sample the heightmap at the current texture coordinate.
    float height = tex.Sample( s, uv ).r * bumpScale;
    // Sample the heightmap in the U texture coordinate direction.
    float heightU = tex.Sample( s, uv, int2( 1, 0 ) ).r * bumpScale;
    // Sample the heightmap in the V texture coordinate direction.
    float heightV = tex.Sample( s, uv, int2( 0, 1 ) ).r * bumpScale;

    float3 p = { 0, 0, height };
    float3 pU = { 1, 0, heightU };
    float3 pV = { 0, 1, heightV };

    // normal = tangent x bitangent
    float3 normal = cross( normalize( pU - p ), normalize( pV - p ) );

    // Transform normal from tangent space to view space.
    normal = mul( normal, TBN );

    // Normalize the result: the cross product of two unit vectors
    // that are not perpendicular is not unit length.
    return normalize( float4( normal, 0 ) );
}
```
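The gradient-to-normal step can be tried out on the CPU with three height samples. The sketch below is only an illustration under the same construction as the shader (one step along U, one step along V); the `Float3` type and the `NormalFromHeights` name are hypothetical, and the result is left in tangent space rather than being transformed by a TBN matrix.

```cpp
#include <cmath>

// Hypothetical vector type for illustration.
struct Float3 { float x, y, z; };

Float3 Normalize( Float3 v )
{
    const float len = std::sqrt( v.x * v.x + v.y * v.y + v.z * v.z );
    return { v.x / len, v.y / len, v.z / len };
}

Float3 Cross( Float3 a, Float3 b )
{
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

// height, heightU and heightV are the (already scaled) heights at the current texel
// and at its neighbours in the U and V directions.
Float3 NormalFromHeights( float height, float heightU, float heightV )
{
    const Float3 tangent   = Normalize( { 1.0f, 0.0f, heightU - height } );
    const Float3 bitangent = Normalize( { 0.0f, 1.0f, heightV - height } );

    // normal = tangent x bitangent, renormalized to unit length.
    return Normalize( Cross( tangent, bitangent ) );
}
```

For a flat height field the result is (0, 0, 1). When the height rises in the U direction, the x component becomes negative, so the normal leans away from the rising slope as expected.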

If the material does not have an associated normal map or a bump map, the normal vector from the vertex shader output is used directly.

Now we have all of the data that is required to compute the lighting.

Lighting

The lighting calculations for the forward rendering technique are performed in the DoLighting function. This function accepts the following arguments:

- lights: The lights array (as a structured buffer)

- mat: The material properties that were just computed

- eyePos: The position of the camera in view space (which is always (0, 0, 0))

- P: The position of the point being shaded in view space

- N: The normal of the point being shaded in view space.

The DoLighting function returns a LightingResult structure that contains the diffuse and specular lighting contributions from all of the lights in the scene.

```hlsl
// This lighting result is returned by the
// lighting functions for each light type.
struct LightingResult
{
    float4 Diffuse;
    float4 Specular;
};

LightingResult DoLighting( StructuredBuffer<Light> lights, Material mat, float4 eyePos, float4 P, float4 N )
{
    float4 V = normalize( eyePos - P );

    LightingResult totalResult = (LightingResult)0;

    for ( int i = 0; i < NUM_LIGHTS; ++i )
    {
        LightingResult result = (LightingResult)0;

        // Skip lights that are not enabled.
        if ( !lights[i].Enabled ) continue;
        // Skip point and spot lights that are out of range of the point being shaded.
        if ( lights[i].Type != DIRECTIONAL_LIGHT &&
             length( lights[i].PositionVS - P ) > lights[i].Range ) continue;

        switch ( lights[i].Type )
        {
        case DIRECTIONAL_LIGHT:
        {
            result = DoDirectionalLight( lights[i], mat, V, P, N );
        }
        break;
        case POINT_LIGHT:
        {
            result = DoPointLight( lights[i], mat, V, P, N );
        }
        break;
        case SPOT_LIGHT:
        {
            result = DoSpotLight( lights[i], mat, V, P, N );
        }
        break;
        }
        totalResult.Diffuse += result.Diffuse;
        totalResult.Specular += result.Specular;
    }

    return totalResult;
}
```

The view vector (V) is computed from the eye position and the position of the shaded pixel in view space.

The light buffer is iterated in the for loop. Since we know that disabled lights and lights that are not within range of the point being shaded won’t contribute any lighting, we can skip those lights. Otherwise, the appropriate lighting function is invoked depending on the type of light.

Each of the various light types will compute their diffuse and specular lighting contributions. Since diffuse and specular lighting is computed in the same way for every light type, I will define functions to compute the diffuse and specular lighting contributions independent of the light type.

Diffuse Lighting

The DoDiffuse function is very simple and only needs to know about the light vector (L) and the surface normal (N).

```hlsl
float4 DoDiffuse( Light light, float4 L, float4 N )
{
    float NdotL = max( dot( N, L ), 0 );
    return light.Color * NdotL;
}
```

The diffuse lighting is computed by taking the dot product between the light vector (L) and the surface normal (N). The DoDiffuse function expects both of these vectors to be normalized.

The resulting dot product is then multiplied by the color of the light to compute the diffuse contribution of the light.

Next, we’ll compute the specular contribution of the light.

Specular Lighting

The DoSpecular function is used to compute the specular contribution of the light. In addition to the light vector (L) and the surface normal (N), this function also needs the view vector (V) to compute the specular contribution of the light.

```hlsl
float4 DoSpecular( Light light, Material material, float4 V, float4 L, float4 N )
{
    float4 R = normalize( reflect( -L, N ) );
    float RdotV = max( dot( R, V ), 0 );

    return light.Color * pow( RdotV, material.SpecularPower );
}
```

Since the light vector L is the vector pointing from the point being shaded to the light source, it needs to be negated so that it points from the light source to the point being shaded before we compute the reflection vector. The resulting dot product of the reflection vector (R) and the view vector (V) is raised to the power of the value of the material’s specular power variable and modulated by the color of the light. It’s important to remember that a specular power value in the range (0…1) is not a meaningful specular power value. For a detailed explanation of specular lighting, please refer to my previous article titled Texturing and Lighting in DirectX 11.
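The two lighting terms can be checked numerically with a CPU-side sketch. The functions below return only the scalar factors (the light color is left out), assume that all input vectors are unit length, and use hypothetical names (`Float3`, `DiffuseFactor`, `SpecularFactor`). The reflection is written out by hand: reflecting -L about N gives 2 (N · L) N - L.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical vector type for illustration.
struct Float3 { float x, y, z; };

float Dot( Float3 a, Float3 b )
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Lambert term: max( N . L, 0 ).
float DiffuseFactor( Float3 L, Float3 N )
{
    return std::max( Dot( N, L ), 0.0f );
}

// Phong term: max( R . V, 0 ) raised to the specular power,
// where R is the incoming light direction -L reflected about N.
float SpecularFactor( Float3 V, Float3 L, Float3 N, float specularPower )
{
    const float k = 2.0f * Dot( N, L );
    const Float3 R = { k * N.x - L.x, k * N.y - L.y, k * N.z - L.z };
    const float RdotV = std::max( Dot( R, V ), 0.0f );
    return std::pow( RdotV, specularPower );
}
```

When the light, the viewer, and the normal all line up, both factors are 1. When the light grazes the surface (L perpendicular to N), the diffuse factor drops to 0.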

Attenuation

Attenuation is the fall-off of the intensity of the light as the distance between the light and the point being shaded increases. In traditional lighting models the attenuation is computed as the reciprocal of the sum of three terms: a constant factor, a linear factor multiplied by the distance to the light, and a quadratic factor multiplied by the square of the distance (as explained in Attenuation):

- Constant attenuation

- Linear attenuation

- Quadratic attenuation

However this method of computing attenuation assumes that the fall-off of the light never reaches zero (lights have an infinite range). For deferred shading and forward+ we must be able to represent the lights in the scene as volumes with finite range so we need to use a different method to compute the attenuation of the light.

One possible method to compute the attenuation of the light is to perform a linear blend from 1.0 at the position of the light to 0.0 at distances equal to or greater than the range of the light. However, a linear fall-off does not look very realistic as attenuation in reality is more similar to the reciprocal of a quadratic function.

I decided to use the smoothstep HLSL intrinsic function, which returns a smooth interpolation between a minimum and maximum value.

```hlsl
// Compute the attenuation based on the range of the light.
float DoAttenuation( Light light, float d )
{
    return 1.0f - smoothstep( light.Range * 0.75f, light.Range, d );
}
```

The smoothstep function will return 0 when the distance to the light (d) is less than ¾ of the range of the light and 1 when the distance to the light is more than the range. Of course we want to reverse this interpolation so we just subtract this value from 1 to get the attenuation we need.

Optionally, we could adjust the smoothness of the attenuation of the light by parameterization of the 0.75f in the equation above. A smoothness factor of 0.0 should result in the intensity of the light remaining 1.0 all the way to the maximum range of the light while a smoothness of 1.0 should result in the intensity of the light being interpolated through the entire range of the light.

Now let’s combine the diffuse, specular, and attenuation factors to compute the lighting contribution for each light type.

Point Lights

Point lights combine the attenuation, diffuse, and specular values to determine the final contribution of the light.

```hlsl
LightingResult DoPointLight( Light light, Material mat, float4 V, float4 P, float4 N )
{
    LightingResult result;

    float4 L = light.PositionVS - P;
    float distance = length( L );
    L = L / distance;

    float attenuation = DoAttenuation( light, distance );

    result.Diffuse = DoDiffuse( light, L, N ) *
                     attenuation * light.Intensity;
    result.Specular = DoSpecular( light, mat, V, L, N ) *
                      attenuation * light.Intensity;

    return result;
}
```

The diffuse and specular contributions are scaled by the attenuation and the light intensity factors before being returned from the function.

Spot Lights

In addition to the attenuation factor, spot lights also have a cone angle. In this case, the intensity of the light is scaled by the dot product between the light vector (L) and the direction of the spotlight. If the angle between light vector and the direction of the spotlight is less than the spotlight cone angle, then the point should be lit by the spotlight. Otherwise the spotlight should not contribute any light to the point being shaded. The DoSpotCone function will compute the intensity of the light based on the spotlight cone angle.

```hlsl
float DoSpotCone( Light light, float4 L )
{
    // If the cosine angle of the light's direction
    // vector and the vector from the light source to the point being
    // shaded is less than minCos, then the spotlight contribution will be 0.
    float minCos = cos( radians( light.SpotlightAngle ) );
    // If the cosine angle of the light's direction vector
    // and the vector from the light source to the point being shaded
    // is greater than maxCos, then the spotlight contribution will be 1.
    float maxCos = lerp( minCos, 1, 0.5f );
    float cosAngle = dot( light.DirectionVS, -L );
    // Blend between the minimum and maximum cosine angles.
    return smoothstep( minCos, maxCos, cosAngle );
}
```

First, the cosine angle of the spotlight cone is computed. If the dot product between the direction of the spotlight and the light vector (L) is less than the min cosine angle then the contribution of the light will be 0. If the dot product is greater than max cosine angle then the contribution of the spotlight will be 1.

It may seem counter-intuitive that the max cosine angle is a smaller angle than the min cosine angle but don’t forget that the cosine of 0° is 1 and the cosine of 90° is 0.
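The cone falloff is easy to verify with a few numbers on the CPU. The sketch below follows the same steps as the shader function but takes the cosine of the angle directly instead of the two vectors; the `SpotCone` and `SmoothStep` names are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Hermite interpolation between edge0 and edge1 (same formula as the HLSL smoothstep intrinsic).
float SmoothStep( float edge0, float edge1, float x )
{
    const float t = std::min( std::max( ( x - edge0 ) / ( edge1 - edge0 ), 0.0f ), 1.0f );
    return t * t * ( 3.0f - 2.0f * t );
}

// spotlightAngleDegrees: half-angle of the spotlight cone, in degrees.
// cosAngle:              cosine of the angle between the spotlight direction and the
//                        vector from the light to the point being shaded.
float SpotCone( float spotlightAngleDegrees, float cosAngle )
{
    const float pi = 3.14159265f;
    const float minCos = std::cos( spotlightAngleDegrees * pi / 180.0f );
    // Halfway between the edge of the cone and its axis: lerp( minCos, 1, 0.5 ).
    const float maxCos = minCos + ( 1.0f - minCos ) * 0.5f;
    return SmoothStep( minCos, maxCos, cosAngle );
}
```

For a 45° half-angle, minCos is roughly 0.707 and maxCos roughly 0.854. A point on the axis of the cone (cosAngle = 1) receives the full contribution, while a point 60° off the axis (cosAngle = 0.5) receives none.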

The DoSpotLight function will compute the spotlight contribution similar to that of the point light with the addition of the spotlight cone angle.

```hlsl
LightingResult DoSpotLight( Light light, Material mat, float4 V, float4 P, float4 N )
{
    LightingResult result;

    float4 L = light.PositionVS - P;
    float distance = length( L );
    L = L / distance;

    float attenuation = DoAttenuation( light, distance );
    float spotIntensity = DoSpotCone( light, L );

    result.Diffuse = DoDiffuse( light, L, N ) *
                     attenuation * spotIntensity * light.Intensity;
    result.Specular = DoSpecular( light, mat, V, L, N ) *
                      attenuation * spotIntensity * light.Intensity;

    return result;
}
```

Directional Lights

Directional lights are the simplest light type because they do not attenuate over the distance to the point being shaded.

```hlsl
LightingResult DoDirectionalLight( Light light, Material mat, float4 V, float4 P, float4 N )
{
    LightingResult result;

    float4 L = normalize( -light.DirectionVS );

    result.Diffuse = DoDiffuse( light, L, N ) * light.Intensity;
    result.Specular = DoSpecular( light, mat, V, L, N ) * light.Intensity;

    return result;
}
```

Final Shading

Now we have the material properties and the summed lighting contributions of all of the lights in the scene we can combine them to perform final shading.

```hlsl
// Excerpt from the pixel shader (lines 111-139 of the listing).
    float4 P = float4( IN.positionVS, 1 );

    LightingResult lit = DoLighting( Lights, mat, eyePos, P, N );

    diffuse *= float4( lit.Diffuse.rgb, 1.0f ); // Discard the alpha value from the lighting calculations.

    float4 specular = 0;
    if ( mat.SpecularPower > 1.0f ) // If specular power is too low, don't use it.
    {
        specular = mat.SpecularColor;
        if ( mat.HasSpecularTexture )
        {
            float4 specularTex = SpecularTexture.Sample( LinearRepeatSampler, IN.texCoord );
            if ( any( specular.rgb ) )
            {
                specular *= specularTex;
            }
            else
            {
                specular = specularTex;
            }
        }
        specular *= lit.Specular;
    }

    return float4( ( ambient + emissive + diffuse + specular ).rgb,
                   alpha * mat.Opacity );

}
```

On line 113 (the call to DoLighting), the lighting contributions are computed using the DoLighting function that was just described.

On line 115, the material’s diffuse color is modulated by the light’s diffuse contribution. The alpha value of the lighting result is discarded.

If the material’s specular power is not greater than 1.0, it will not be considered for final shading. Some artists will assign a specular power of 1 or less if a material does not have a specular shine. In this case we just ignore the specular contribution and the material is considered diffuse only (Lambert reflectance only). Otherwise, if the material has a specular color texture associated with it, the texture is sampled and combined with the material’s specular color: if the specular color is non-zero the two are multiplied, otherwise the texture sample is used on its own. The result is then modulated with the light’s specular contribution.

The final pixel color is the sum of the ambient, emissive, diffuse and specular components. The opacity of the pixel is the alpha value that was computed earlier in the pixel shader multiplied by the material’s opacity.

Deferred Shading

The deferred shading technique consists of three passes:

- G-buffer pass

- Lighting pass

- Transparent pass

The G-buffer pass will fill the G-buffer textures that were described in the introduction. The lighting pass will render each light source as a geometric object and compute the lighting for covered pixels. The transparent pass will render transparent scene objects using the standard forward rendering technique.
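As a rough sketch of how the scene is divided between these passes, the following C++ fragment sorts objects into an opaque queue (for the G-buffer pass) and a transparent queue (for the forward transparent pass). The `SceneObject`, `PassQueues` and `SplitByPass` names are hypothetical and are not part of the demo's code; only the split itself follows from the description above.

```cpp
#include <vector>

// Hypothetical scene object; only the opacity flag matters for this sketch.
struct SceneObject
{
    int  id;
    bool isTransparent;
};

struct PassQueues
{
    std::vector<SceneObject> opaque;       // Rendered in the G-buffer pass.
    std::vector<SceneObject> transparent;  // Rendered in the transparent pass.
};

// Opaque objects go to the G-buffer pass; transparent objects are deferred
// to the forward-rendered transparent pass that runs after the lighting pass.
PassQueues SplitByPass(const std::vector<SceneObject>& scene)
{
    PassQueues queues;
    for (const SceneObject& object : scene)
    {
        if (object.isTransparent)
        {
            queues.transparent.push_back(object);
        }
        else
        {
            queues.opaque.push_back(object);
        }
    }
    return queues;
}
```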

G-Buffer Pass

The first pass of the deferred shading technique will generate the G-buffer textures. I will first describe the layout of the G-buffers.

G-Buffer Layout

The layout of the G-buffer could be the subject of an entire article on this website. The layout I chose for this demonstration is based on simplicity and necessity. It is not the most efficient G-buffer layout, as some data could be packed into smaller buffers. There has been some discussion on packing attributes in the G-buffers, but I did not perform any analysis regarding the effects of using various packing methods.

The attributes that need to be stored in the G-buffers are:

- Depth/Stencil

- Light Accumulation

- Diffuse

- Specular

- Normals

Depth/Stencil Buffer

The Depth/Stencil texture is stored as 32 bits per pixel with 24 bits for the depth value as an unsigned normalized value (UNORM) and 8 bits for the stencil value as an unsigned integer (UINT). The texture resource for the depth buffer is created using the R24G8_TYPELESS texture format and the depth/stencil view is created with the D24_UNORM_S8_UINT texture format. When accessing the depth buffer in the pixel shader, the shader resource view is created using the R24_UNORM_X8_TYPELESS texture format since the stencil value is unused.
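To illustrate what a 24-bit UNORM depth value and an 8-bit stencil value look like when they share 32 bits, here is a CPU-side C++ sketch. The helper names are hypothetical, and the bit layout (depth in the low 24 bits, stencil in the high 8 bits) is an assumption made for illustration; in practice the GPU handles this packing and the shader simply reads the depth as a normalized float.

```cpp
#include <cstdint>

struct DepthStencil
{
    float        depth;    // 24-bit UNORM value mapped to [0, 1].
    std::uint8_t stencil;  // 8-bit unsigned integer.
};

// Assumption: depth occupies bits 0-23 and stencil occupies bits 24-31.
std::uint32_t PackD24S8(float depth, std::uint8_t stencil)
{
    const double maxDepth = 16777215.0; // 2^24 - 1
    double clamped = depth < 0.0f ? 0.0 : (depth > 1.0f ? 1.0 : depth);
    // Round to the nearest representable 24-bit step. Double precision is
    // used so that 1.0 does not round up past the 24-bit maximum.
    std::uint32_t depthBits = static_cast<std::uint32_t>(clamped * maxDepth + 0.5);
    return (static_cast<std::uint32_t>(stencil) << 24) | depthBits;
}

DepthStencil UnpackD24S8(std::uint32_t packed)
{
    DepthStencil result;
    result.depth = static_cast<float>((packed & 0xFFFFFFu) / 16777215.0);
    result.stencil = static_cast<std::uint8_t>(packed >> 24);
    return result;
}
```

With 24 bits, the depth range [0…1] is divided into 16,777,215 equal steps.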

The Depth/Stencil buffer will be attached to the output merger stage and will not be directly computed in the G-buffer pixel shader. The results of the vertex shader are written directly to the depth/stencil buffer.

Light Accumulation Buffer

The light accumulation buffer is used to store the final result of the lighting pass. This is the same buffer as the back buffer of the screen. If your G-buffer textures have the same dimensions as your screen, there is no need to allocate an additional buffer for the light accumulation buffer and the back buffer of the screen can be used directly.

The light accumulation buffer is stored as a 32-bit 4-component unsigned normalized texture using the R8G8B8A8_UNORM texture format for both the texture resource and the shader resource view.

After the G-buffer pass, the light accumulation buffer initially only stores the ambient and emissive terms of the lighting equation. This image was brightened considerably to make the scene more visible.

You may also notice that only the fully opaque objects in the scene are rendered. Deferred shading does not support transparent objects so only the opaque objects are rendered in the G-buffer pass.

As an optimization, you may also want to accumulate directional lights in the G-buffer pass and skip directional lights in the lighting pass. Since directional lights are rendered as full-screen quads in the lighting pass, accumulating them in the G-buffer pass may save some shader cycles if fill-rate is an issue. I’m not taking advantage of this optimization in this experiment because that would require storing directional lights in a separate buffer, which is inconsistent with the way the forward and forward+ pixel shaders handle lighting.

Diffuse Buffer

The diffuse buffer is stored as a 32-bit 4-component unsigned normalized (UNORM) texture. Since only opaque objects are rendered in deferred shading, there is no need for the alpha channel in this buffer and it remains unused in this experiment. Both the texture resource and the shader resource view use the R8G8B8A8_UNORM texture format.

The above image shows the result of the diffuse buffer after the G-buffer pass.

Specular Buffer

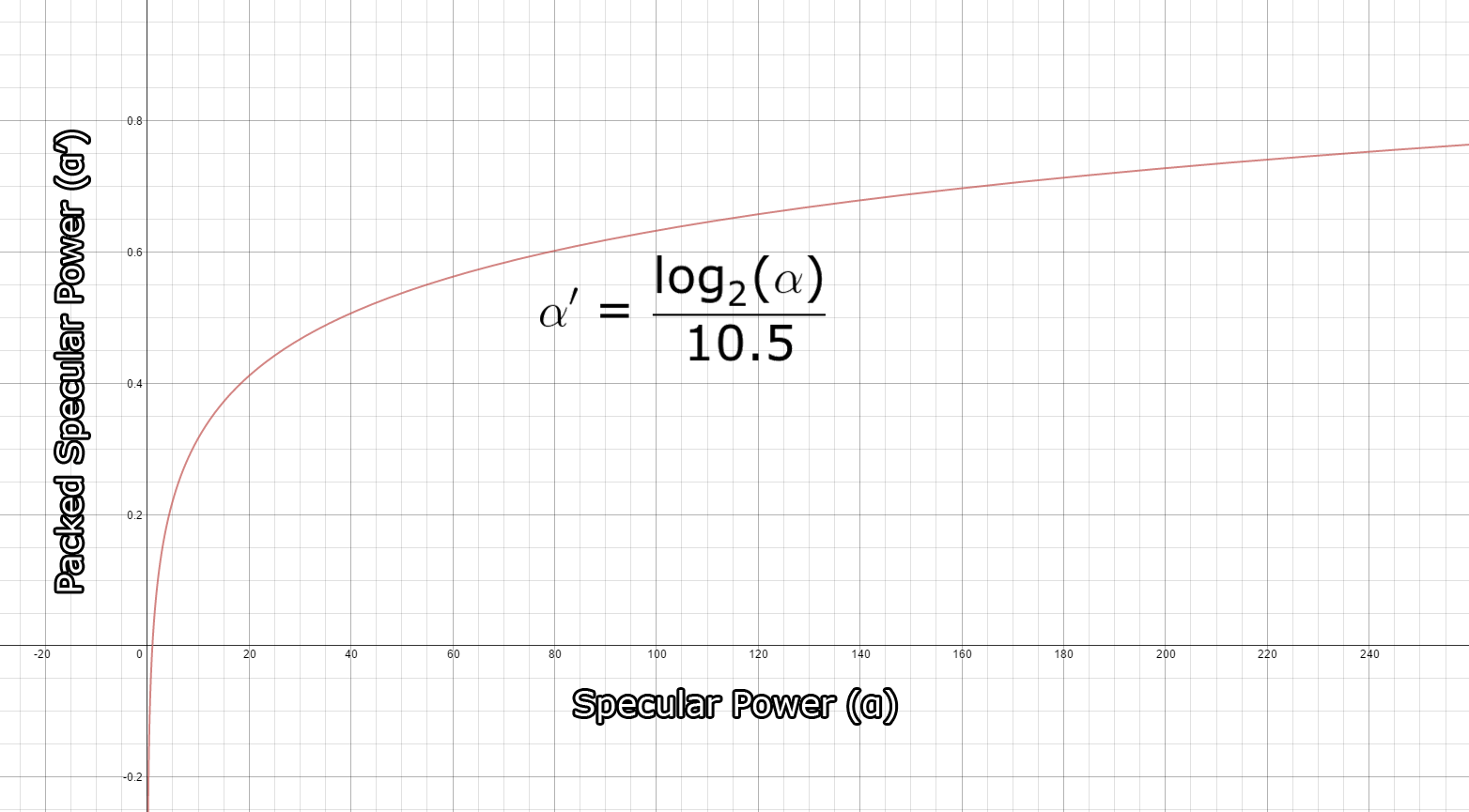

Similar to the light accumulation and the diffuse buffers, the specular color buffer is stored as a 32-bit 4-component unsigned normalized texture using the R8G8B8A8_UNORM format. The red, green, and blue channels are used to store the specular color while the alpha channel is used to store the specular power. The specular power value is usually expressed in the range (1…256] (or higher) but it needs to be packed into the range [0…1] to be stored in the texture. To pack the specular power into the texture, I use the method described in a presentation given by Michiel van der Leeuw titled “Deferred Rendering in Killzone 2” [13]. In that presentation he uses the following equation to pack the specular power value:

$$\alpha' = \frac{\log_2(\alpha)}{10.5}$$

where $\alpha$ is the original specular power and $\alpha'$ is the packed value.

This function allows for packing of specular power values in the range [1…1448.15] (the upper bound being $2^{10.5}$) and provides good precision for values in the normal specular range (1…256]. The graph below shows the progression of the packed specular value.
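The packing and unpacking can be sketched on the CPU in C++ as follows. This is a minimal illustration, assuming the packing function is $\log_2(\alpha) / 10.5$, which is consistent with the stated upper bound of $2^{10.5} \approx 1448.15$. The function names are hypothetical, and the 8-bit quantization helpers only mimic what storing the value in the alpha channel of an R8G8B8A8_UNORM texture would do.

```cpp
#include <cmath>
#include <cstdint>

// Assumption: packed = log2(power) / 10.5, so a power of 1 maps to 0
// and a power of 2^10.5 (about 1448.15) maps to 1.
float PackSpecularPower(float power)
{
    return std::log2(power) / 10.5f;
}

// Inverse of the packing function: power = 2^(packed * 10.5).
float UnpackSpecularPower(float packed)
{
    return std::exp2(packed * 10.5f);
}

// Quantize a [0, 1] value to 8 bits, as an 8-bit UNORM channel would.
std::uint8_t ToUnorm8(float value)
{
    float clamped = std::fmin(std::fmax(value, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(clamped * 255.0f + 0.5f);
}

float FromUnorm8(std::uint8_t value)
{
    return value / 255.0f;
}
```

Because the packing is logarithmic, each of the 255 steps of the 8-bit channel corresponds to a constant ratio of $2^{10.5/255} \approx 1.029$ in specular power, so the relative error after a round trip through the texture stays below roughly 1.5% across the whole range.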

The result of packing specular power. The horizontal axis shows the original specular power and the vertical axis shows the packed specular power.

And the result of the specular buffer after the G-buffer pass looks like this.

Normal Buffer

The view space normals are stored in a 128-bit 4-component floating point buffer using the R32G32B32A32_FLOAT texture format. A normal buffer of this size is not really necessary and I could probably have packed the X and Y components of the normal into a 32-bit 2-component half-precision floating point buffer and recomputed the z-component in the lighting pass. For this experiment, I favored precision and simplicity over efficiency and since my GPU is not constrained by texture memory I used the largest possible buffer with the highest precision.

It would be worthwhile to investigate other texture formats for the normal buffer and analyze the quality versus performance tradeoffs. My hypothesis is that using a smaller texture format (for example R16G16_FLOAT) for the normal buffer would produce similar quality results while providing improved performance.
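As a sketch of what the smaller layout would involve, the following C++ fragment reconstructs the Z component of a unit-length normal from its X and Y components and compares the memory footprint of the two formats. The names are hypothetical, and the sign of Z is passed in explicitly because it is not recoverable from X and Y alone; a real implementation would need a convention (or an extra bit) for it, which I have not verified for this demo.

```cpp
#include <cmath>
#include <cstddef>

struct Float3
{
    float x, y, z;
};

// Rebuild a unit-length normal from its stored X and Y components.
// zSign (+1 or -1) is an assumption supplied by the caller.
Float3 ReconstructNormal(float x, float y, float zSign)
{
    // Clamp to zero so that rounding errors cannot produce a negative
    // value under the square root.
    float zSquared = std::fmax(0.0f, 1.0f - x * x - y * y);
    return Float3{ x, y, zSign * std::sqrt(zSquared) };
}

// Size in bytes of a full-screen buffer with the given bits per pixel.
std::size_t BufferSizeInBytes(std::size_t width, std::size_t height,
                              std::size_t bitsPerPixel)
{
    return width * height * (bitsPerPixel / 8);
}
```

At a resolution of 1280x720, the 128-bit R32G32B32A32_FLOAT buffer occupies 14,745,600 bytes, while a 32-bit R16G16_FLOAT buffer would occupy 3,686,400 bytes, a quarter of the size.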