目录

1. PromptTuningInit.RANDOM for generate prompts

2. PromptTuningInit.TEXT for detect hate in sentences

这篇prompt微调主要聚焦的是:两种虚拟 token 的初始化方式(Random or Text)

1. PromptTuningInit.RANDOM for generate prompts

1.1 codes

代码主体与上一篇(PEFT 之 Prompt-Tuning 1-CSDN博客)中的Prompt-Tuning1一致,主要区别在于数据处理的不同。

(1)data prepare

def concatenate_columns_prompt(dataset):

def concatenate(example):

example['prompt'] = "Act as a {}. Prompt: {}".format(example['act'], example['prompt'])

return example

dataset = dataset.map(concatenate)

return dataset

#Create the Dataset to create prompts.

data_prompt = load_dataset(dataset_prompt)

data_prompt['train'] = concatenate_columns_prompt(data_prompt['train'])

data_prompt = data_prompt.map(lambda samples: tokenizer(samples["prompt"]), batched=True)

train_sample_prompt = data_prompt["train"].remove_columns('act')lambda samples: tokenizer(samples["prompt"])

lambda是匿名函数(无需显式定义函数名的轻量级函数,lambda 参数: 表达式),相当于:

def tokenize_batch(samples):

return tokenizer(samples["prompt"]) 输入参数(samples)是一个批次的字典格式的样本:

samples = {"prompt": ["prompt1", "prompt2", ...], "act": ["act1", "act2", ...]} tokenizer(samples["prompt"]) 会对该批次中所有prompt字段进行分词,返回包含input_ids、attention_mask等字段的字典。 最终数据集的结构变为类似:

{"input_ids": [[...], ...], "attention_mask": [[...], ...], "act": [...]} (2)prompt 微调配置

generation_config_prompt = PromptTuningConfig(

task_type=TaskType.CAUSAL_LM,

prompt_tuning_init=PromptTuningInit.RANDOM, #The added virtual tokens are initializad with random numbers

num_virtual_tokens=NUM_VIRTUAL_TOKENS,

tokenizer_name_or_path=model_name

)1.2 results

(1)input1 = "Act as a fitness Trainer. Prompt: "

pretrained_model:

['Act as a fitness Trainer. Prompt: Follow up with your trainer']

560M Trained for 50 Epochs & 8 virtual tokens:

'train_loss': 2.69047605074369

['Act as a fitness Trainer. Prompt: I want you to act like an expert in the field of exercise and nutrition, but also have some fun with your own life! You will be teaching me how my body works when i do not eat enough or if it is too hot for what we']

560M Trained for 50 Epochs & 20 virtual tokens:

'train_loss': 2.5929998485858623

['Act as a fitness Trainer. Prompt: 输入你的问题,并给出答案。我是一对夫妻的健身教练和瑜伽老师、有小孩两岁半的孩子以及一个5岁的孩子在家里做运动训练需要用到专业的指导及建议!请用你最擅长的内容来']

最后一条输出的prompt为中文,这让主包有点意外了。但是 ai 说这种情况是正常的,不过可能的原因有:

根本原因: Bloomz-560M 是一个多语言模型。微调过程未明确限制输出语言,导致模型自由切换语言。

次要原因:训练参数的副作用。高学习率(3e-2)可能导致模型过度拟合训练数据中的噪声。训练轮次(50 epochs)过长可能放大噪声影响,使模型偏离预期目标。

稍微对参数进行了一些修改,几次输出都是英文的,机制很黑盒了:

参数:epoch = 30, learning_rate = 3e-3

output1:

['Act as a fitness Trainer. Prompt: I want you to act like an expert in your field and help others improve their health by doing exercises. You can be anything from running, swimming or cycling for example. My first request is "What\'s the best way of improving my body?" If']

output2:

['Act as a fitness Trainer. Prompt: I want you to act like an expert in your field and provide recommendations for the best products, services or training methods available on this market. You will also be responsible for: 1) providing advice about what is right at home; 2) helping customers make']

output3:

['Act as a fitness Trainer. Prompt: I want you to act like an expert in your field and be able to: Answer questions about the subject of this exercise, such as: "what is my favorite sport?" or "the best way for me [to] get fitter ... what are'](2)input2 = "Act as a Motivational Coach. Prompt: "

由于上一篇中输入“I want you to act as a motivational coach”得到的输出非常莫名其妙,于是主包选择重新尝试一下。

pretrained_model:

['Act as a Motivational Coach. Prompt: Ask for feedback']

560M Trained for 50 Epochs & 8 virtual tokens:

'train_loss': 2.6983046018160306

['Act as a Motivational Coach. Prompt: 输入问题,并给出建议。写出答案后写下动机和目标。 使用正向激励法来达到最佳效果:使用积极、正面的语气鼓励自己实现自己的目标和愿望;用消极的言语贬低自身或他人时要避免']

560M Trained for 50 Epochs & 20 virtual tokens:

'train_loss': 2.6259666560246395

['Act as a Motivational Coach. Prompt: + Addictive, motivating and inspiring; + I would like to help people feel more confident in themselves by helping them achieve their goals through actionable strategies that are practical for the real world; i want you always have something new or interesting happening with']

从上述结果能明显感觉到,经过当前数据处理的input,微调后输出的效果要好很多。

结构化输入(Act as {act}, Prompt:{prompt} )

通过显式标注任务角色 act 和指令内容 prompt,增强了模型对任务结构的感知。这种格式更贴近原始数据集(每条样本包含 act 和 prompt),这种对齐可能让模型更快学习到“角色-任务”的映射关系,从而在微调中更高效地捕捉任务模式。

显式的结构可能更适合需要严格遵循指令的场景(如客服对话、模板化回复),但可能限制模型的创造性。原始输入(“I want you to act as a motivational coach”)

依赖模型自行推断任务目标,可能导致生成结果的多样性强,但对指令的遵循性较弱。 直接使用 prompt 字段,省略了 act 信息,可能导致模型忽略角色定义对生成结果的影响。例如,模型可能更关注“生成激励性内容”,而非“作为教练”这一身份约束。

隐式指令则更适合开放域生成(如创意写作),模型能更灵活地结合上下文续写内容,但可能因指令模糊导致输出不稳定。

2. PromptTuningInit.TEXT for detect hate in sentences

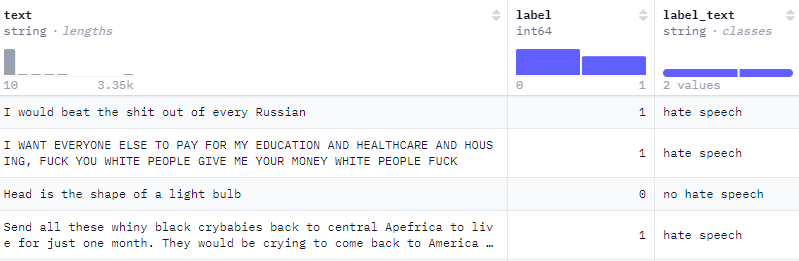

通过 Prompt Tuning ,将生成式语言模型(BLOOMZ-560M)改造成一个 二分类的仇恨言论检测器,使用的数据集是 SetFit/ethos_binary(包含仇恨言论和非仇恨言论的文本标注)。

2.1 codes

(1)data prepare

SetFit/ethos_binary 数据集:

concatenate_columns_classifier 将原始数据转换为指令式提示,为了训练模型根据输入句子生成对应的标签(hate or no_hate)。

def concatenate_columns_classifier(dataset):

def concatenate(example):

example['text'] = "Sentence : {} Label : {}".format(example['text'], example['label_text'])

return example

dataset = dataset.map(concatenate)

return dataset

data_classifier = load_dataset(dataset_classifier)

data_classifier['train'] = concatenate_columns_classifier(data_classifier['train'])

data_classifier = data_classifier.map(lambda samples: tokenizer(samples["text"]), batched=True)

train_sample_classifier = data_classifier["train"].remove_columns(['label', 'label_text', 'text'])(2)prompt 微调配置

PromptTuningInit.TEXT :表示用一段自然语言文本的 嵌入向量(Embedding) 来初始化虚拟令牌的嵌入空间。实现过程:

文本分词:将 prompt_tuning_init_text 通过分词器转换为 token_ids

截断或填充:根据 num_virtual_tokens 调整长度:

若文本分词后长度 >8 → 截断前8个词。

若长度 <8 → 填充 <pad> token至8个。嵌入映射:取这8个token对应的预训练模型的词嵌入矩阵(Embedding Layer)中的向量,作为虚拟令牌的初始值。

假设输入的"Indicates whether the sentence contains hate speech or not"分词后得到5个词,那么得到的嵌入向量为:[Indicates_emb, whether_emb, ..., <pad>_emb, <pad>_emb, <pad>_emb]

(3)load model for test samples(存疑)

loaded_model_peft = PeftModel.from_pretrained(foundational_model, output_directory_classifier, is_trainable=False)

loaded_model_peft.load_adapter(output_directory_classifier, adapter_name="classifier")

loaded_model_peft.set_adapter("classifier")

loaded_model_sentences_outputs = get_outputs(loaded_model_peft, input_classifier.to(device), max_new_tokens=3)

print(tokenizer.batch_decode(loaded_model_sentences_outputs, skip_special_tokens=True))这块比其他load_model的部分多了load_adapter和set_adapter,主包不太理解这么做的原因是什么,感觉是有点多此一举。我们进行了测试,两次的输出结果是一样的。from_pretrained已经从output_directory_classifier中加载了微调后的 adapter,而后续的load_adapter又从同一路径加载了adapter,只不过是将其重命名为了classifier。不太懂原作者在编写这块代码时的目的是什么,个人认为是冗余了。期待大家的解答(☆▽☆)

loaded_model_peft = PeftModel.from_pretrained(foundational_model, output_directory_classifier, is_trainable=False)

loaded_model_sentences_outputs1 = get_outputs(loaded_model_peft, input_classifier.to(device), max_new_tokens=3)

print("before classifier:", tokenizer.batch_decode(loaded_model_sentences_outputs1, skip_special_tokens=True))

## before classifier: ['Sentence : Head is the shape of a light bulb. Label : no hate speech']

loaded_model_peft.load_adapter(output_directory_classifier, adapter_name="classifier")

loaded_model_peft.set_adapter("classifier")

loaded_model_sentences_outputs2 = get_outputs(loaded_model_peft, input_classifier.to(device), max_new_tokens=3)

print("after classifier:", tokenizer.batch_decode(loaded_model_sentences_outputs2, skip_special_tokens=True))

## after classifier: ['Sentence : Head is the shape of a light bulb. Label : no hate speech']2.2 results

pretrained_model:

['Sentence : Head is the shape of a light bulb. Label : head']

["Sentence : I don't liky short people, no idea why they exist. Label : No"]

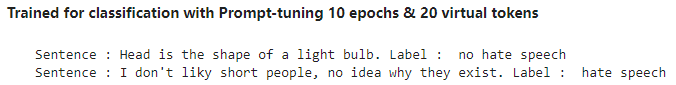

50 epochs & 8 virtual tokens:

['Sentence : Head is the shape of a light bulb. Label : no hate speech']

["Sentence : I don't liky short people, no idea why they exist. Label : hate speech ."]

10 epochs & 20 virtual tokens:

['Sentence : Head is the shape of a light bulb. Label : hate speech no']

["Sentence : I don't liky short people, no idea why they exist. Label : hate speech"]

第一个sentence和原文档中的输出不同,而且输出的顺序比较怪,尝试对学习率和token数进行修改,发现输出就恢复正常顺序了

token = 20, lr = 3e-3

['Sentence : Head is the shape of a light bulb. Label : no hate speech']

token = 10, lr = 3e-2

['Sentence : Head is the shape of a light bulb. Label : no hate speech']

2462

2462

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?