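👨🎓Personal homepage: 研学社的博客 (Yanxueshe's blog)

💥💥💞💞Welcome to this blog❤️❤️💥💥

🏆Blogger's strengths:🌞🌞🌞 The posts aim to be rigorous in reasoning and clear in logic, for the reader's convenience.

⛳️Motto: On a journey of a hundred li, ninety li is only halfway.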

💥1 Overview

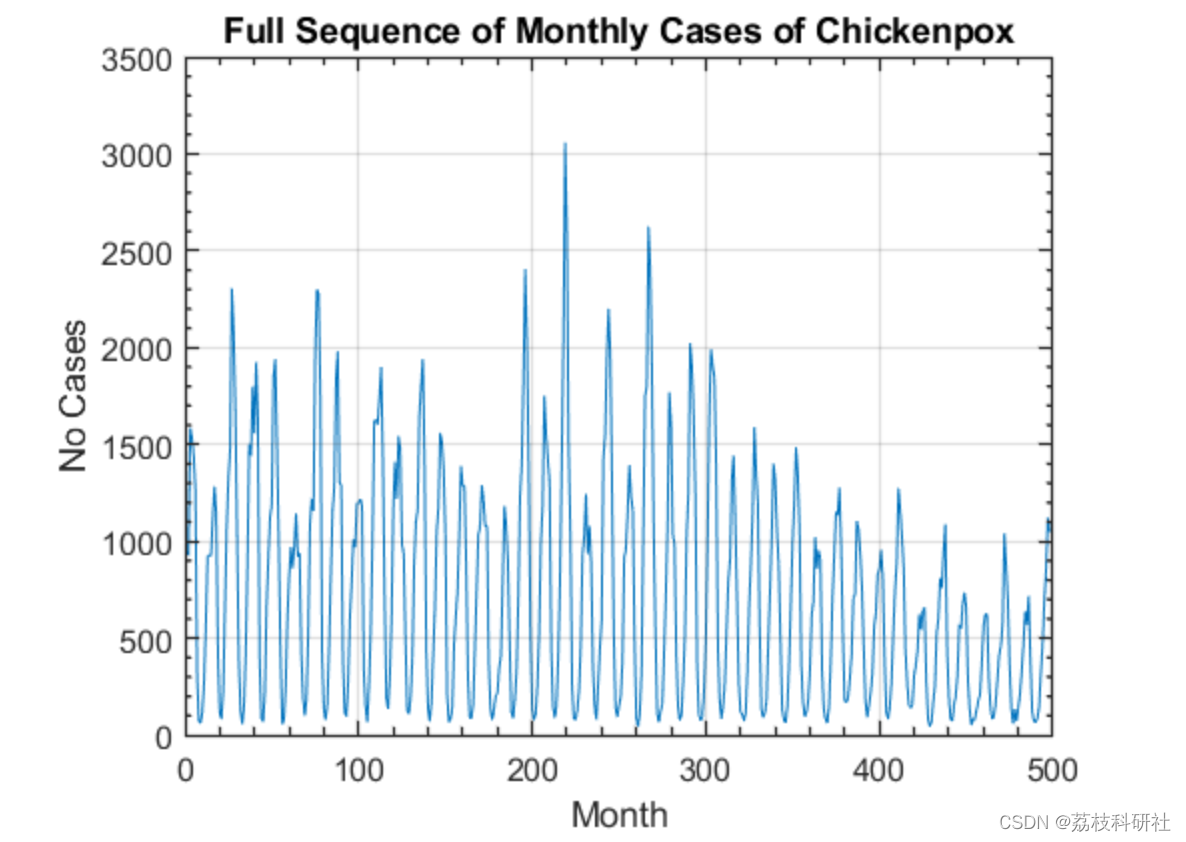

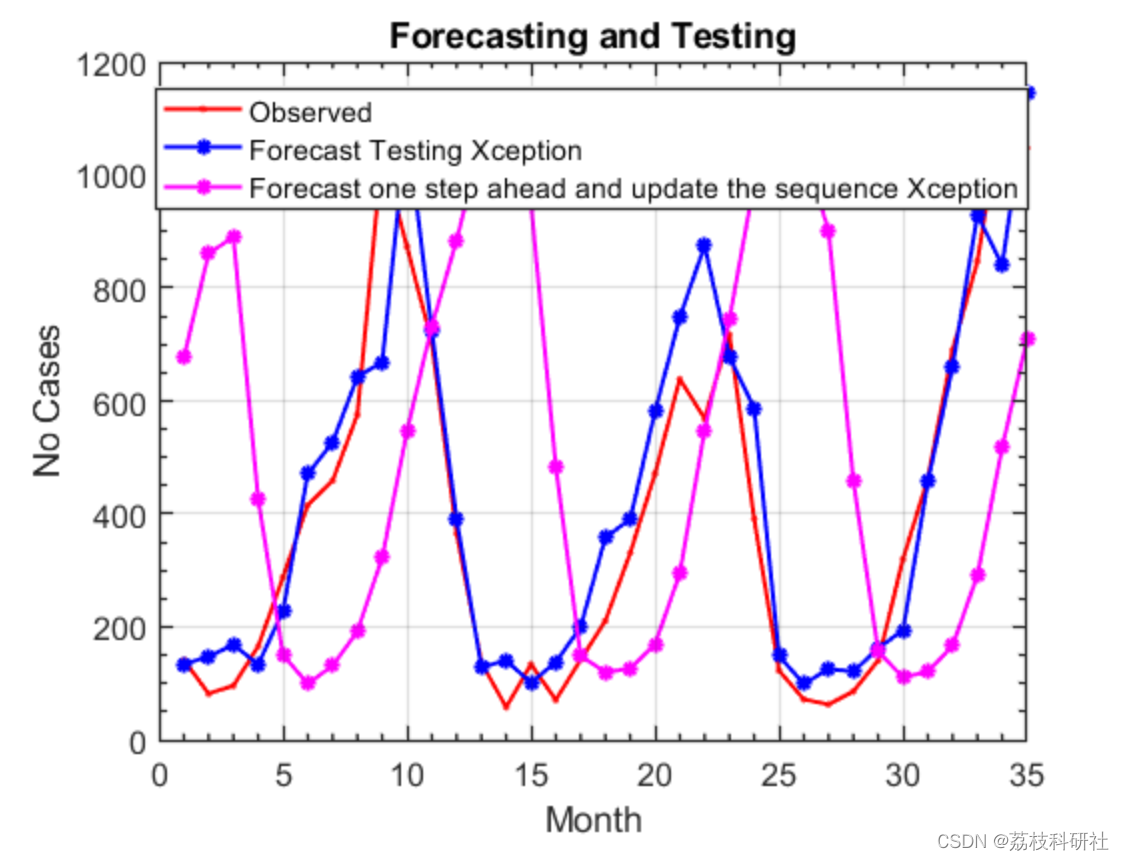

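This example presents the idea of combining a convolutional neural network (CNN) with a recurrent neural network (RNN) to predict the number of chickenpox cases from the preceding months.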
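
Framing "predict next month from the preceding months" as supervised learning means cutting the series into fixed-length input windows, each paired with the value that follows it. The snippet below is a minimal sketch in plain MATLAB; the toy series `y = 1:10` stands in for the real monthly case counts, and the window length `w` is a hypothetical choice, not a value taken from the original example.

```matlab
% Build (input window, next value) pairs from a monthly series.
% y and w are hypothetical stand-ins, not values from the original example.
y = 1:10;                 % toy monthly counts
w = 3;                    % number of past months used as input

n = numel(y) - w;         % number of complete windows
X = zeros(w, n);          % one input window per column
T = zeros(1, n);          % target: the month right after each window
for i = 1:n
    X(:, i) = y(i:i+w-1).';
    T(i)    = y(i+w);
end
```

With a series of length 10 and `w = 3` this yields 7 pairs: the first column of `X` holds months 1 to 3, and `T(1)` is month 4.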

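CNNs are excellent feature extractors, and RNNs have proven able to perform sequence-to-sequence prediction. At each time step, the CNN extracts the main features of the sequence, and the RNN learns to predict the value at the next time step.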
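
In MATLAB, the "CNN at every time step, RNN across time steps" layout described above is commonly built with a sequence folding/unfolding pair: folding moves the time dimension into the batch so the 2-D convolutions process each time step independently, and unfolding restores the sequence before the LSTM. The following is a minimal sketch assuming Deep Learning Toolbox; `inputSize` and all layer sizes are hypothetical, and this is not the author's full network.

```matlab
% Minimal hybrid CNN-LSTM layer graph (requires Deep Learning Toolbox).
% inputSize and all layer sizes are hypothetical.
inputSize = 12;    % values per time step, treated as an inputSize-by-1 image

layers = [
    sequenceInputLayer([inputSize 1 1],"Name","sequence")
    sequenceFoldingLayer("Name","seqfold")          % time steps -> batch: the CNN sees one step at a time
    convolution2dLayer([3 1],16,"Name","conv","Padding","same")
    batchNormalizationLayer("Name","bn")
    reluLayer("Name","relu")
    sequenceUnfoldingLayer("Name","sequnfold")      % batch -> time steps again
    flattenLayer("Name","flatten")                  % inputSize-by-1-by-16 features -> one vector per step
    lstmLayer(64,"Name","lstm","OutputMode","sequence")
    fullyConnectedLayer(1,"Name","fc")              % one predicted value per time step
    regressionLayer("Name","output")];

lgraph = layerGraph(layers);
% The unfolding layer needs the mini-batch size recorded by the folding layer.
lgraph = connectLayers(lgraph,"seqfold/miniBatchSize","sequnfold/miniBatchSize");
```

Running `analyzeNetwork(lgraph)` before calling `trainNetwork` is a convenient way to check that the activation sizes line up.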

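Time series forecasting with a hybrid of a convolutional neural network (CNN) and a recurrent neural network (RNN) combines the strengths of both models to handle sequence data with temporal dependencies. The approach has been applied in fields such as finance, weather forecasting, and energy demand forecasting, where it is often reported to outperform either model on its own. The following explains how it works, its advantages, and some example applications.

How it works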

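- Convolutional neural network (CNN): CNNs were originally designed for image recognition, but their feature-extraction ability carries over to time series. In forecasting, a CNN slides its kernels along the sequence and automatically learns feature patterns within short local segments, which helps capture periodicity and trends in the data.

- Recurrent neural network (RNN): RNNs suit sequence data because their recurrent internal state carries information from earlier time steps into the computation at the current step. Long short-term memory (LSTM) and gated recurrent unit (GRU) networks are RNN variants whose gating mechanisms mitigate the vanishing-gradient problem that makes long-term dependencies hard to learn, so they capture long-range relationships in a time series more effectively.

- Hybrid CNN-RNN architecture: the most common arrangement uses the CNN as a preprocessing stage that extracts local features and feeds the resulting feature sequence into the RNN, which models the dependencies across time steps. More elaborate designs are also possible, for example stacking CNN layers on the RNN output to increase the model's expressiveness.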
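
To make this data flow concrete, the snippet below runs one forward pass of a toy hybrid model in plain MATLAB: a single 1-D convolution with ReLU extracts local features from an input window, a scalar LSTM cell reads those features in order, and a linear readout produces the forecast. All weights are hypothetical fixed numbers chosen for illustration; in a real model they are learned and the layers are much wider.

```matlab
% Toy forward pass of a hybrid CNN-LSTM for one input window.
% Every weight below is a hypothetical fixed value; a trained model learns them.
x = [1 2 3 4 5 6];                % one input window (toy values with a steady rise)
k = [-0.5 0 0.5];                 % 1-D kernel computing 0.5*(x(t+2) - x(t)): a local-slope detector

% CNN stage: deep-learning "convolution" is cross-correlation, so flip the
% kernel before calling conv; 'valid' means no padding.
f = conv(x, fliplr(k), 'valid');  % length numel(x) - numel(k) + 1 = 4
f = max(f, 0);                    % ReLU

% RNN stage: a scalar LSTM cell reads the feature sequence in order.
sigm = @(z) 1 ./ (1 + exp(-z));
W = [0.5 0.5 0.5 0.8];            % input weights for input gate, forget gate, output gate, candidate
U = [0.1 0.1 0.1 0.5];            % recurrent weights (same order)
b = [0 1 0 0];                    % forget-gate bias of 1, a common initialization
h = 0; c = 0;                     % initial hidden and cell state
for t = 1:numel(f)
    z  = W * f(t) + U * h + b;    % pre-activations of the four gates
    ig = sigm(z(1)); fg = sigm(z(2)); og = sigm(z(3));
    g  = tanh(z(4));
    c  = fg * c + ig * g;         % gated update: keep part of the old memory, add new content
    h  = og * tanh(c);
end

% Readout: a linear layer maps the last hidden state to the forecast
Wo = 2; bo = 0;
yhat = Wo * h + bo;
```

Because the input rises steadily, every convolution output equals 1, so the LSTM sees a constant "upward trend" feature. The additive cell update `c = fg*c + ig*g` is the mechanism that lets LSTMs carry information across many steps.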

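Advantages

- Complementary strengths: the hybrid combines the CNN's ability to extract local (spatial) features with the RNN's ability to model temporal dependencies, giving a more complete view of the time series.

- Accuracy: compared with a CNN or an RNN alone, a hybrid model often achieves higher forecasting accuracy, especially when both short-range patterns and long-term dependencies matter.

- Flexibility: the architecture can be tuned to the task, for example by changing the CNN kernel size to target features at different time scales, or the number of RNN layers to capture dependencies of different lengths.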
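
The kernel-size point can be quantified with the receptive field: the number of consecutive input time steps that influence one output of a stack of convolutions. It equals 1 plus, for each layer, (kernel size - 1) times the product of the strides of the layers before it. The sketch below computes it for two hypothetical three-layer configurations; the settings are illustrative only.

```matlab
% Receptive field (in time steps) of stacked 1-D convolutions.
% The configurations are hypothetical examples.
configs = {[3 3 3], [5 5 5]};        % kernel sizes of three stacked layers
rf = zeros(1, numel(configs));
for c = 1:numel(configs)
    kernels = configs{c};
    strides = ones(size(kernels));   % stride 1 in every layer (assumption)
    r = 1;                           % a single output sees at least one input step
    jump = 1;                        % spacing between adjacent outputs, in input steps
    for l = 1:numel(kernels)
        r = r + (kernels(l) - 1) * jump;
        jump = jump * strides(l);
    end
    rf(c) = r;
end
```

Three 3-tap layers see 7 consecutive time steps, while 5-tap kernels widen this to 13. Strides greater than 1 enlarge the receptive field further, because `jump` is multiplied after each strided layer.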

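Example applications

- Financial market forecasting: predicting stock prices, exchange-rate movements, and similar series, with the CNN capturing cyclical patterns in market fluctuations and the RNN handling long-term trend changes.

- Energy demand forecasting: predicting future electricity demand in smart grids; the CNN can capture seasonal variation, while the RNN forecasts demand from past consumption patterns.

- Weather forecasting: a hybrid model trained on historical meteorological data can improve forecasts of temperature, precipitation, and similar quantities; the CNN responds to location-specific meteorological features, while the RNN handles climate patterns that evolve over time.

In short, hybrid CNN-RNN time series forecasting is an active research area that shows the potential of deep learning for analyzing complex sequence data. As algorithms mature and new architectures appear, the approach is likely to find wider use in forecasting.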

📚2 Results

Partial code:

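lgraph = layerGraph();   % start from an empty layer graph

% Input: each time step is an inputSize-by-1 "image" with one channel; folding
% moves the time dimension into the batch so the 2-D layers see one step at a time.

tempLayers = [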

sequenceInputLayer([inputSize 1 1],"Name","sequence")

sequenceFoldingLayer("Name","seqfold")];

lgraph = addLayers(lgraph,tempLayers);

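% Block 1: two standard 3x3 convolutions; the first has stride 2 along the first
% (time-window) dimension and stride 1 along the width of 1. BiasLearnRateFactor 0
% freezes each bias at its zero initial value, since batch normalization follows.

tempLayers = [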

convolution2dLayer([3 3],32,"Name","block1_conv1","BiasLearnRateFactor",0,"Padding","same","Stride",[2 1])

batchNormalizationLayer("Name","block1_conv1_bn","Epsilon",0.001)

reluLayer("Name","block1_conv1_act")

convolution2dLayer([3 3],64,"Name","block1_conv2","BiasLearnRateFactor",0,"Padding","same")

batchNormalizationLayer("Name","block1_conv2_bn","Epsilon",0.001)

reluLayer("Name","block1_conv2_act")];

lgraph = addLayers(lgraph,tempLayers);

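% Block 2 main path: two depthwise-separable convolutions (a channel-wise grouped
% convolution with one filter per channel, then a 1x1 point-wise convolution),
% followed by stride-2 max pooling.

tempLayers = [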

groupedConvolution2dLayer([3 3],1,64,"Name","block2_sepconv1_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],128,"Name","block2_sepconv1_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block2_sepconv1_bn","Epsilon",0.001)

reluLayer("Name","block2_sepconv2_act")

groupedConvolution2dLayer([3 3],1,128,"Name","block2_sepconv2_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],128,"Name","block2_sepconv2_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block2_sepconv2_bn","Epsilon",0.001)

maxPooling2dLayer([3 3],"Name","block2_pool","Padding","same","Stride",[2 2])];

lgraph = addLayers(lgraph,tempLayers);

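% Block 2 shortcut: 1x1 convolution with stride 2, so its output has the same
% height and channel count (128) as the pooled main path it is added to.

tempLayers = [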

convolution2dLayer([1 1],128,"Name","conv2d_1","BiasLearnRateFactor",0,"Padding","same","Stride",[2 2])

batchNormalizationLayer("Name","batch_normalization_1","Epsilon",0.001)];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = additionLayer(2,"Name","add_1");

lgraph = addLayers(lgraph,tempLayers);

% Block 3 main path: ReLU, two separable convolutions (256 channels), stride-2 max pooling.

tempLayers = [

reluLayer("Name","block3_sepconv1_act")

groupedConvolution2dLayer([3 3],1,128,"Name","block3_sepconv1_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],256,"Name","block3_sepconv1_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block3_sepconv1_bn","Epsilon",0.001)

reluLayer("Name","block3_sepconv2_act")

groupedConvolution2dLayer([3 3],1,256,"Name","block3_sepconv2_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],256,"Name","block3_sepconv2_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block3_sepconv2_bn","Epsilon",0.001)

maxPooling2dLayer([3 3],"Name","block3_pool","Padding","same","Stride",[2 2])];

lgraph = addLayers(lgraph,tempLayers);

% Block 3 shortcut: 1x1 stride-2 convolution to 256 channels.

tempLayers = [

convolution2dLayer([1 1],256,"Name","conv2d_2","BiasLearnRateFactor",0,"Padding","same","Stride",[2 2])

batchNormalizationLayer("Name","batch_normalization_2","Epsilon",0.001)];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = additionLayer(2,"Name","add_2");

lgraph = addLayers(lgraph,tempLayers);

% Block 4 shortcut: 1x1 stride-2 convolution to 728 channels.

tempLayers = [

convolution2dLayer([1 1],728,"Name","conv2d_3","BiasLearnRateFactor",0,"Padding","same","Stride",[2 2])

batchNormalizationLayer("Name","batch_normalization_3","Epsilon",0.001)];

lgraph = addLayers(lgraph,tempLayers);

% Block 4 main path: ReLU, two separable convolutions (728 channels), stride-2 max pooling.

tempLayers = [

reluLayer("Name","block4_sepconv1_act")

groupedConvolution2dLayer([3 3],1,256,"Name","block4_sepconv1_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block4_sepconv1_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block4_sepconv1_bn","Epsilon",0.001)

reluLayer("Name","block4_sepconv2_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block4_sepconv2_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block4_sepconv2_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block4_sepconv2_bn","Epsilon",0.001)

maxPooling2dLayer([3 3],"Name","block4_pool","Padding","same","Stride",[2 2])];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = additionLayer(2,"Name","add_3");

lgraph = addLayers(lgraph,tempLayers);

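% Blocks 5-7: three ReLU + separable-convolution units each, at 728 channels and
% with no downsampling; the input already has 728 channels, so the shortcut
% added by add_4 to add_6 can be the identity.

tempLayers = [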

reluLayer("Name","block5_sepconv1_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block5_sepconv1_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block5_sepconv1_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block5_sepconv1_bn","Epsilon",0.001)

reluLayer("Name","block5_sepconv2_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block5_sepconv2_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block5_sepconv2_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block5_sepconv2_bn","Epsilon",0.001)

reluLayer("Name","block5_sepconv3_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block5_sepconv3_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block5_sepconv3_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block5_sepconv3_bn","Epsilon",0.001)];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = additionLayer(2,"Name","add_4");

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

reluLayer("Name","block6_sepconv1_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block6_sepconv1_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block6_sepconv1_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block6_sepconv1_bn","Epsilon",0.001)

reluLayer("Name","block6_sepconv2_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block6_sepconv2_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block6_sepconv2_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block6_sepconv2_bn","Epsilon",0.001)

reluLayer("Name","block6_sepconv3_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block6_sepconv3_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block6_sepconv3_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block6_sepconv3_bn","Epsilon",0.001)];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = additionLayer(2,"Name","add_5");

lgraph = addLayers(lgraph,tempLayers);

tempLayers = [

reluLayer("Name","block7_sepconv1_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block7_sepconv1_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block7_sepconv1_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block7_sepconv1_bn","Epsilon",0.001)

reluLayer("Name","block7_sepconv2_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block7_sepconv2_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block7_sepconv2_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block7_sepconv2_bn","Epsilon",0.001)

reluLayer("Name","block7_sepconv3_act")

groupedConvolution2dLayer([3 3],1,728,"Name","block7_sepconv3_channel-wise","BiasLearnRateFactor",0,"Padding","same")

convolution2dLayer([1 1],728,"Name","block7_sepconv3_point-wise","BiasLearnRateFactor",0)

batchNormalizationLayer("Name","block7_sepconv3_bn","Epsilon",0.001)];

lgraph = addLayers(lgraph,tempLayers);

tempLayers = additionLayer(2,"Name","add_6");

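lgraph = addLayers(lgraph,tempLayers);

% The connectLayers calls that wire these branches together, and the layers after add_6, are not part of this excerpt.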
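
Two quick checks help when reading the layer definitions above. The layer names appear to follow the Xception architecture, so the sketch assumes Xception-style wiring (for example, `conv2d_1` takes its input from `block1_conv2_act`, and `add_1` sums `block2_pool` with `batch_normalization_1`); that wiring is an assumption, since the excerpt does not show it. First, with `"Padding","same"` a stride-2 layer outputs `ceil(n/2)` rows, so each 1x1 stride-2 shortcut produces the same height as the max-pooled main path it is added to. Second, a channel-wise grouped convolution followed by a 1x1 point-wise convolution (a depthwise-separable convolution) needs far fewer weights than a standard 3x3 convolution. The value `inputSize = 64` is hypothetical.

```matlab
% Sanity checks for the layer definitions above, in plain MATLAB arithmetic.
% inputSize is hypothetical; Xception-style shortcut wiring is assumed.
inputSize = 64;

% 1) Height of the feature maps. With "Padding","same", output = ceil(input/stride).
h0 = inputSize;
h1 = ceil(h0 / 2);   % block1_conv1 (stride 2 along the first dimension)
h2 = ceil(h1 / 2);   % block2_pool, and shortcut conv2d_1 applied to the h1 maps -> add_1
h3 = ceil(h2 / 2);   % block3_pool, and shortcut conv2d_2 -> add_2
h4 = ceil(h3 / 2);   % block4_pool, and shortcut conv2d_3 -> add_3
                     % blocks 5-7 have no stride, so add_4 to add_6 keep height h4
heights = [h0 h1 h2 h3 h4];

% 2) Weights of one separable unit versus a standard 3x3 convolution,
%    e.g. block3_sepconv1: 128 input channels -> 256 output channels (biases ignored).
cin = 128; cout = 256; k = 3;
standardWeights  = k * k * cin * cout;        % as if convolution2dLayer([3 3],256)
separableWeights = k * k * cin + cin * cout;  % channel-wise 3x3 + point-wise 1x1
ratio = standardWeights / separableWeights;
```

With these numbers the heights run 64, 32, 16, 8, 4, and the separable unit uses 33,920 weights instead of 294,912, roughly 8.7 times fewer.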

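🎉3 References

Parts of the theoretical material come from the internet; in case of infringement, please contact us for removal.

[1] H. Sanchez (2023). Time Series Forecasting Using Hybrid CNN - RNN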
