Light Pre-Pass Renderer

In June last year I had an idea for a new rendering design. I call it the Light Pre-Pass Renderer.

The idea is to fill up a Z buffer first and also store normals in a render target. This is like a G-Buffer with normals and Z values ... so compared to a deferred renderer there is no diffuse color, specular color, material index or position data stored in this stage.

Next the light buffer is filled up with light properties. So the idea is to differentiate between light and material properties. If you look at a simplified lighting equation for one point light, it looks like this:

Color = Ambient + Shadow * Att * (N.L * DiffColor * DiffIntensity * LightColor + R.V^n * SpecColor * SpecIntensity * LightColor)

The light properties are:

- N.L

- LightColor

- R.V^n

- Attenuation
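
To make the split between light and material properties concrete, here is a minimal CPU-side sketch in Python (not shader code) that evaluates the simplified equation above per RGB channel. The function name and the argument layout are assumptions made for illustration only; N.L and R.V are passed in already clamped.

```python
def point_light_color(ambient, shadow, att, n_dot_l, r_dot_v, n,
                      diff_color, diff_intensity,
                      spec_color, spec_intensity, light_color):
    """Evaluate the simplified one-point-light equation per RGB channel.

    Light properties: n_dot_l, r_dot_v ** n, att, light_color.
    Material properties: diff_color, diff_intensity, spec_color, spec_intensity.
    """
    spec_term = r_dot_v ** n  # R.V^n
    return tuple(
        amb + shadow * att * (n_dot_l * dc * diff_intensity * lc
                              + spec_term * sc * spec_intensity * lc)
        for amb, dc, sc, lc in zip(ambient, diff_color, spec_color, light_color)
    )


color = point_light_color(
    ambient=(0.125, 0.125, 0.125), shadow=1.0, att=0.5,
    n_dot_l=0.5, r_dot_v=0.5, n=2,
    diff_color=(1.0, 0.5, 0.0), diff_intensity=1.0,
    spec_color=(1.0, 1.0, 1.0), spec_intensity=0.5,
    light_color=(1.0, 1.0, 1.0),
)
print(color)
```

Everything that depends only on the light and the surface geometry can be evaluated without knowing the material, which is what the light buffer exploits.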

So what you can do is, instead of rendering a whole lighting equation for each light into a render target, render only the light properties into an 8:8:8:8 render target. You have four channels, so you can render:

LightColor.r * N.L * Att

LightColor.g * N.L * Att

LightColor.b * N.L * Att

R.V^n * N.L * Att

That means in this setup there is no dedicated specular color ... which is on purpose (you can extend it easily).
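
The four channels can be sketched on the CPU as follows. This is an illustrative Python emulation, not the shader itself: the helper names are hypothetical, each light is assumed to arrive with a precomputed unit direction and attenuation, and the clamp to 1.0 stands in for the saturation of an 8-bit render target.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(i, n):
    # Same convention as the HLSL reflect() intrinsic: i - 2 * dot(i, n) * n
    d = dot(i, n)
    return tuple(x - 2.0 * d * y for x, y in zip(i, n))


def light_contribution(normal, to_light, to_viewer, color, att, spec_power):
    """Return (LightColor.rgb * N.L * Att, R.V^n * N.L * Att) for one light."""
    n_dot_l = max(dot(normal, to_light), 0.0)
    # Phong: reflect the incoming light direction about the normal.
    r = reflect(tuple(-x for x in to_light), normal)
    r_dot_v = max(dot(r, to_viewer), 0.0)
    scale = n_dot_l * att
    return (color[0] * scale, color[1] * scale, color[2] * scale,
            (r_dot_v ** spec_power) * scale)


def accumulate(contributions):
    """Additively blend per-light texels, saturating at 1.0 per channel."""
    total = [0.0, 0.0, 0.0, 0.0]
    for c in contributions:
        for i in range(4):
            total[i] = min(total[i] + c[i], 1.0)
    return tuple(total)


one_light = light_contribution(
    normal=(0.0, 0.0, 1.0), to_light=(0.0, 0.0, 1.0), to_viewer=(0.0, 0.0, 1.0),
    color=(1.0, 0.5, 0.25), att=0.5, spec_power=8)
print(one_light)
print(accumulate([one_light, one_light]))
```

Because the specular term shares one channel across all lights, the light colour is not carried into the highlight, which matches the "no dedicated specular color" trade-off.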

Here is the source code for what I store in the light buffer.

half4 ps_main( PS_INPUT Input ) : COLOR

{

// Fetch the packed normal (xy) and depth (zw) from the G-Buffer
half4 GBufferSample = tex2D( G_Buffer, Input.texCoord );

// Compute pixel position

half Depth = UnpackFloat16( GBufferSample.zw );

float3 PixelPos = normalize(Input.EyeScreenRay.xyz) * Depth;

// Compute normal

half3 Normal;

Normal.xy = GBufferSample.xy*2-1;

Normal.z = -sqrt(1-dot(Normal.xy,Normal.xy));

// Computes light attenuation and direction

float3 LightDir = (Input.LightPos - PixelPos)*InvSqrLightRange;

half Attenuation = saturate(1-dot(LightDir / LightAttenuation_0, LightDir / LightAttenuation_0));

LightDir = normalize(LightDir);

// R.V == Phong

float specular = pow(saturate(dot(reflect(normalize(-float3(0.0, 1.0, 0.0)), Normal), LightDir)), SpecularPower_0);

float NL = dot(LightDir, Normal)*Attenuation;

return float4(DiffuseLightColor_0.x*NL, DiffuseLightColor_0.y*NL, DiffuseLightColor_0.z*NL, specular * NL);

}
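
The two reconstruction steps in this shader, decoding the normal from two channels and rebuilding the pixel position from a depth value and a screen ray, can be checked on the CPU with a small Python sketch. The function names are hypothetical. It assumes view-space normals that face the camera with a negative z (as the `-sqrt` in the listing implies) and a depth value that measures distance along the eye ray.

```python
import math


def encode_normal_xy(normal):
    """Map the x and y components from [-1, 1] to [0, 1] for storage."""
    return (normal[0] * 0.5 + 0.5, normal[1] * 0.5 + 0.5)


def decode_normal(encoded_xy):
    """Undo the [0, 1] mapping and rebuild z from the unit-length constraint."""
    x = encoded_xy[0] * 2.0 - 1.0
    y = encoded_xy[1] * 2.0 - 1.0
    # max() guards against small negative values caused by quantization.
    z = -math.sqrt(max(0.0, 1.0 - (x * x + y * y)))
    return (x, y, z)


def reconstruct_position(eye_ray, depth):
    """Scale the normalized eye ray by the stored distance."""
    length = math.sqrt(sum(c * c for c in eye_ray))
    return tuple(c / length * depth for c in eye_ray)


normal = (0.6, 0.0, -0.8)
decoded = decode_normal(encode_normal_xy(normal))
position = reconstruct_position((0.0, 3.0, 4.0), 10.0)
print(decoded, position)
```

Note that the sign of z is not stored, so a normal with a positive z component cannot be represented with this two-channel encoding.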

After all lights are alpha-blended into the light buffer, you switch to forward rendering and reconstruct the lighting equation. In its simplest form this might look like this:

float4 ps_main( PS_INPUT Input ) : COLOR0

{

float4 Light = tex2D( Light_Buffer, Input.texCoord );

float3 NLATTColor = float3(Light.x, Light.y, Light.z);

float3 Lighting = NLATTColor + Light.www;

return float4(Lighting, 1.0f);

}
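
As a rough illustration of how material properties could re-enter in this forward pass, here is a CPU-side Python sketch. The `albedo`, `spec_color`, and `ambient` parameters are hypothetical material inputs that do not appear in the shader above; with white albedo and white specular colour it reduces to the same sum as the listing.

```python
def shade(light_texel, albedo, spec_color, ambient=(0.0, 0.0, 0.0)):
    """Combine one light-buffer texel with per-material colours.

    light_texel is (LightColor.rgb * N.L * Att summed over lights,
                    R.V^n * N.L * Att summed over lights).
    """
    r, g, b, spec = light_texel
    diffuse = (r, g, b)
    return tuple(
        min(1.0, amb + alb * d + sc * spec)
        for amb, alb, d, sc in zip(ambient, albedo, diffuse, spec_color)
    )


texel = (0.5, 0.25, 0.125, 0.5)
# Equivalent to the simplest form: Light.xyz + Light.www
plain = shade(texel, albedo=(1.0, 1.0, 1.0), spec_color=(1.0, 1.0, 1.0))
# A grey, purely diffuse material
matte = shade(texel, albedo=(0.5, 0.5, 0.5), spec_color=(0.0, 0.0, 0.0))
print(plain, matte)
```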

This is a direct competitor to the light-indexed renderer idea described by Damian Trebilco in his paper.

I have a small example program that compares this approach to a deferred renderer, but I have not compared it to Damian's approach. I believe his approach might be more flexible regarding a material system than mine, but the Light Pre-Pass Renderer does not need to do the indexing. It should even run on a seven-year-old ATI RADEON 8500 because you only have to do a Z pre-pass and store the normals up front.

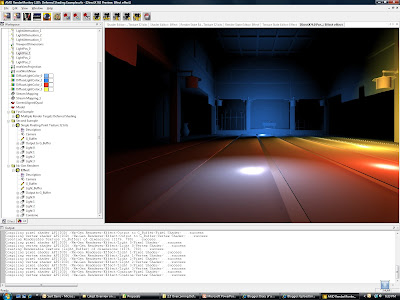

The following screenshot shows four point lights. There is no restriction on the number of light sources:

The following screenshot shows the same scene running with a deferred renderer. There should not be any visual differences from the Light Pre-Pass Renderer:

The design here is very flexible and scalable, so I expect people to start from here and end up with quite different results. One of the challenges with this approach is to set up a good material system. You can store different values in the light buffer or use the values above and construct interesting materials. For example, a per-object specular highlight could be done by taking the value stored in the alpha channel and applying a power function to it, or by storing the power value in a different channel.

Obviously my initial approach is only scratching the surface of the possibilities.

P.S.: To implement a material system for this you can do two things. You can handle it like in a deferred renderer by storing a material ID with the normal map, maybe in the alpha channel, or you can reconstruct the diffuse and specular terms in the forward rendering pass. The only thing you have to store to do this is N.L * Att in a separate channel. This way you can get back R.V^n by taking the specular channel and dividing it by N.L * Att. So what you do is:

(R.V^n * N.L * Att) / (N.L * Att)

Those are actually values that represent all light sources.
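
The division can be sketched in Python as below. This is only an illustration under stated assumptions: the function names and the epsilon guard against dividing by zero in unlit pixels are not from the original, and the extra per-material exponent follows the per-object highlight idea mentioned earlier.

```python
def recover_r_dot_v_pow(spec_channel, ndl_att_channel, eps=1e-5):
    """Return (R.V^n * N.L * Att) / (N.L * Att), or 0 where no light arrives."""
    if ndl_att_channel <= eps:
        return 0.0
    return spec_channel / ndl_att_channel


def per_material_specular(recovered, extra_power):
    """Tighten the highlight per material by raising the recovered term."""
    return recovered ** extra_power


# One light: R.V^n = 0.25, N.L * Att = 0.5
single = recover_r_dot_v_pow(0.25 * 0.5, 0.5)

# Two lights blended into the same channels:
# light A has R.V^n = 0.25, N.L * Att = 0.5; light B has 1.0 and 0.25
spec_sum = 0.25 * 0.5 + 1.0 * 0.25
ndl_att_sum = 0.5 + 0.25
combined = recover_r_dot_v_pow(spec_sum, ndl_att_sum)

print(single, combined, per_material_specular(single, 2))
```

With a single light the division returns that light's R.V^n exactly. With several lights the channels hold sums, so the result is an average of the per-light terms weighted by N.L * Att rather than any one light's value.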

Here is a link to the slides of my UCSD Renderer Design presentation. They provide more detail.
