
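In this tutorial, we use example code to show how to build a DCGAN: how to define the networks and optimizers, how to compute the loss function, and how to initialize the model weights. The anime avatar dataset used here contains 70,171 images, each 96×96 pixels.

GAN Fundamentals

For the underlying principles, see the GAN image generation tutorial.

How DCGAN Works

DCGAN (Deep Convolutional Generative Adversarial Networks) is a direct extension of GAN. The difference is that DCGAN uses convolution layers in the discriminator and transposed convolution layers in the generator.

It was first described by Radford et al. in the paper Unsupervised Representation Learning With Deep Convolutional Generative Adversarial Networks. The discriminator is made up of strided convolution layers, BatchNorm layers, and LeakyReLU activation layers. Its input is a 3x64x64 image, and its output is the probability that the image is real. The generator is made up of transposed convolution layers, BatchNorm layers, and ReLU activation layers. Its input is a latent vector $z$ drawn from a standard normal distribution, and its output is a 3x64x64 RGB image.

This tutorial trains a generative adversarial network on the anime avatar dataset and then uses the network to generate anime avatar images.

Data Preparation and Processing

First, download the dataset to the target directory and extract it. The example code is as follows: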

%%capture captured_output
# The environment ships with mindspore==2.3.0. To use another version, change the MINDSPORE_VERSION variable below.
!pip uninstall mindspore -y
%env MINDSPORE_VERSION=2.3.0
!pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/${MINDSPORE_VERSION}/MindSpore/unified/aarch64/mindspore-${MINDSPORE_VERSION}-cp39-cp39-linux_aarch64.whl --trusted-host ms-release.obs.cn-north-4.myhuaweicloud.com -i https://pypi.mirrors.ustc.edu.cn/simple

# Check the installed mindspore version
!pip show mindspore

Name: mindspore
Version: 2.3.0
Summary: MindSpore is a new open source deep learning training/inference framework that could be used for mobile, edge and cloud scenarios.
Home-page: https://www.mindspore.cn
Author: The MindSpore Authors
Author-email: contact@mindspore.cn
License: Apache 2.0
Location: /home/mindspore/miniconda/envs/jupyter/lib/python3.9/site-packages
Requires: asttokens, astunparse, numpy, packaging, pillow, protobuf, psutil, scipy
Required-by:

from download import download

url = "https://download.mindspore.cn/dataset/Faces/faces.zip"
path = download(url, "./faces", kind="zip", replace=True)

Creating data folder...
Downloading data from https://download-mindspore.osinfra.cn/dataset/Faces/faces.zip (274.6 MB)
file_sizes: 100%|█████████████████████████████| 288M/288M [00:01<00:00, 207MB/s]
Extracting zip file...
Successfully downloaded / unzipped to ./faces

The downloaded dataset has the following directory structure:

./faces/faces
├── 0.jpg
├── 1.jpg
├── 2.jpg
├── 3.jpg
├── 4.jpg
...
├── 70169.jpg
└── 70170.jpg

Data Processing

First, define some inputs for the run:

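batch_size = 128   # batch size
image_size = 64    # spatial size of the training images
nc = 3             # number of color channels in the images
nz = 100           # length of the latent vector
ngf = 64           # size of the feature maps in the generator
ndf = 64           # size of the feature maps in the discriminator
num_epochs = 100   # number of training epochs
lr = 0.0002        # learning rate
beta1 = 0.5        # beta1 hyperparameter of the Adam optimizer

Define the create_dataset_imagenet function to process and augment the data.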

import numpy as np
import mindspore.dataset as ds
import mindspore.dataset.vision as vision

def create_dataset_imagenet(dataset_path):
    """Load the dataset."""
    dataset = ds.ImageFolderDataset(dataset_path,
                                    num_parallel_workers=4,
                                    shuffle=True,
                                    decode=True)

    # Data augmentation operations
    transforms = [
        vision.Resize(image_size),
        vision.CenterCrop(image_size),
        vision.HWC2CHW(),
        lambda x: ((x / 255).astype("float32"))
    ]

    # Data mapping operations
    dataset = dataset.project('image')
    dataset = dataset.map(transforms, 'image')

    # Batching
    dataset = dataset.batch(batch_size)
    return dataset

dataset = create_dataset_imagenet('./faces')

Use the create_tuple_iterator function to obtain an iterator over the data, then visualize part of the training data with the matplotlib module.

import matplotlib.pyplot as plt

def plot_data(data):
    # Visualize part of the training data
    plt.figure(figsize=(10, 3), dpi=140)
    for i, image in enumerate(data[0][:30], 1):
        plt.subplot(3, 10, i)
        plt.axis("off")
        plt.imshow(image.transpose(1, 2, 0))
    plt.show()

sample_data = next(dataset.create_tuple_iterator(output_numpy=True))
plot_data(sample_data)

Building the Network

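Once the data has been processed, we can build the networks. As described in the DCGAN paper, all model weights should be randomly initialized from a normal distribution with mean 0 and sigma 0.02.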
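To make the initialization rule concrete, the following is a minimal standard-library sketch (not MindSpore's initializer) that draws values from a normal distribution with mean 0 and sigma 0.02 and checks the sample statistics. The helper name `sample_normal_weights`, the sample count, and the seed are illustrative assumptions.

```python
import random
import statistics

def sample_normal_weights(n, mean=0.0, sigma=0.02, seed=0):
    """Draw n values from N(mean, sigma^2); the seed is fixed for repeatability."""
    rng = random.Random(seed)
    return [rng.gauss(mean, sigma) for _ in range(n)]

# Hypothetical example: 10,000 weights, as if filling one small kernel tensor
weights = sample_normal_weights(10_000)
w_mean = statistics.fmean(weights)
w_std = statistics.pstdev(weights)
print(f"mean={w_mean:+.5f}, std={w_std:.5f}")
```

With this many samples, the empirical mean should sit close to 0 and the empirical standard deviation close to 0.02, which is the scale the tutorial's `Normal(mean=0, sigma=0.02)` initializer targets.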

生成器

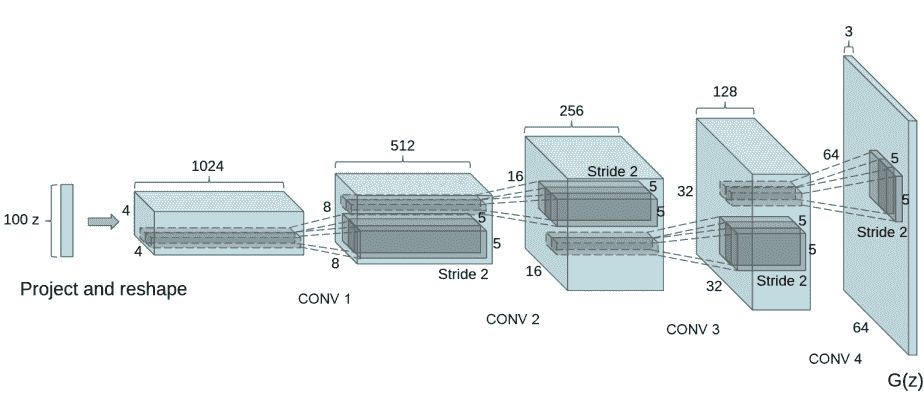

生成器G的功能是将隐向量z映射到数据空间。由于数据是图像,这一过程也会创建与真实图像大小相同的 RGB 图像。在实践场景中,该功能是通过一系列Conv2dTranspose转置卷积层来完成的,每个层都与BatchNorm2d层和ReLu激活层配对,输出数据会经过tanh函数,使其返回[-1,1]的数据范围内。

DCGAN论文生成图像如下所示:

图片来源:Unsupervised Representation Learning With Deep Convolutional Generative Adversarial Networks.

我们通过输入部分中设置的nz、ngf和nc来影响代码中的生成器结构。nz是隐向量z的长度,ngf与通过生成器传播的特征图的大小有关,nc是输出图像中的通道数。
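As a rough check of how a 1x1 latent input grows into a 64x64 image, the sketch below applies the usual transposed-convolution size formula to the kernel, stride, and padding settings used by this tutorial's generator. It assumes no output padding and dilation 1, and treats the 'valid' mode as zero padding; the helper name `conv_transpose_out` is illustrative, not a MindSpore API.

```python
def conv_transpose_out(size, kernel, stride, pad):
    """Spatial output size of a transposed convolution (no output padding, dilation 1)."""
    return (size - 1) * stride - 2 * pad + kernel

# (kernel, stride, pad) for the five Conv2dTranspose layers; 'valid' is taken as pad=0
generator_layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1                      # the latent vector has spatial size 1x1
generator_sizes = [size]
for kernel, stride, pad in generator_layers:
    size = conv_transpose_out(size, kernel, stride, pad)
    generator_sizes.append(size)

print(generator_sizes)        # [1, 4, 8, 16, 32, 64]
```

Each stride-2 layer doubles the spatial size, so four of them after the initial 4x4 projection reach the 64x64 resolution set by image_size.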

The generator is implemented as follows:

import mindspore as ms
from mindspore import nn, ops
from mindspore.common.initializer import Normal

weight_init = Normal(mean=0, sigma=0.02)
gamma_init = Normal(mean=1, sigma=0.02)

class Generator(nn.Cell):
    """DCGAN generator network"""

    def __init__(self):
        super(Generator, self).__init__()
        self.generator = nn.SequentialCell(
            nn.Conv2dTranspose(nz, ngf * 8, 4, 1, 'valid', weight_init=weight_init),
            nn.BatchNorm2d(ngf * 8, gamma_init=gamma_init),
            nn.ReLU(),
            nn.Conv2dTranspose(ngf * 8, ngf * 4, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.BatchNorm2d(ngf * 4, gamma_init=gamma_init),
            nn.ReLU(),
            nn.Conv2dTranspose(ngf * 4, ngf * 2, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.BatchNorm2d(ngf * 2, gamma_init=gamma_init),
            nn.ReLU(),
            nn.Conv2dTranspose(ngf * 2, ngf, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.BatchNorm2d(ngf, gamma_init=gamma_init),
            nn.ReLU(),
            nn.Conv2dTranspose(ngf, nc, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.Tanh()
        )

    def construct(self, x):
        return self.generator(x)

generator = Generator()

Discriminator

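As mentioned earlier, the discriminator D is a binary classification network that outputs the probability that the input image is real. The image is processed by a series of Conv2d, BatchNorm2d, and LeakyReLU layers, and a final Sigmoid activation produces the probability.

The DCGAN paper notes that downsampling with strided convolutions rather than pooling is good practice, because it lets the network learn its own pooling function.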
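To illustrate the strided-convolution downsampling, the sketch below applies the standard convolution output-size formula to the kernel, stride, and padding settings used by this tutorial's discriminator. It assumes dilation 1 and treats the 'valid' mode as zero padding; the helper name `conv_out` is illustrative, not a MindSpore API.

```python
def conv_out(size, kernel, stride, pad):
    """Spatial output size of a convolution with dilation 1."""
    return (size + 2 * pad - kernel) // stride + 1

# (kernel, stride, pad) for the five Conv2d layers; 'valid' is taken as pad=0
discriminator_layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

size = 64                     # input images are 64x64
discriminator_sizes = [size]
for kernel, stride, pad in discriminator_layers:
    size = conv_out(size, kernel, stride, pad)
    discriminator_sizes.append(size)

print(discriminator_sizes)    # [64, 32, 16, 8, 4, 1]
```

Each stride-2 convolution halves the feature map, and the final 4x4 'valid' convolution collapses it to a single value per image, which the Sigmoid turns into a probability.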

The discriminator is implemented as follows:

class Discriminator(nn.Cell):
    """DCGAN discriminator network"""

    def __init__(self):
        super(Discriminator, self).__init__()
        self.discriminator = nn.SequentialCell(
            nn.Conv2d(nc, ndf, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.LeakyReLU(0.2),
            nn.Conv2d(ndf, ndf * 2, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.BatchNorm2d(ndf * 2, gamma_init=gamma_init),
            nn.LeakyReLU(0.2),
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.BatchNorm2d(ndf * 4, gamma_init=gamma_init),
            nn.LeakyReLU(0.2),
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 'pad', 1, weight_init=weight_init),
            nn.BatchNorm2d(ndf * 8, gamma_init=gamma_init),
            nn.LeakyReLU(0.2),
            nn.Conv2d(ndf * 8, 1, 4, 1, 'valid', weight_init=weight_init),
        )
        self.adv_layer = nn.Sigmoid()

    def construct(self, x):
        out = self.discriminator(x)
        out = out.reshape(out.shape[0], -1)
        return self.adv_layer(out)

discriminator = Discriminator()

Model Training

Loss Function

With D and G defined, we next use BCELoss, the binary cross-entropy loss function provided by MindSpore.
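For reference, binary cross-entropy with mean reduction is usually written as below. This is the standard textbook definition rather than a quotation from the MindSpore documentation; the symbols $p_i$ (predicted probability), $y_i$ (label, 0 or 1), and $N$ (number of predictions) are notation introduced here.

```latex
\ell(p, y) = -\frac{1}{N} \sum_{i=1}^{N} \Big[\, y_i \log p_i + (1 - y_i) \log (1 - p_i) \,\Big]
```

With $y_i = 1$ only the $\log p_i$ term remains, and with $y_i = 0$ only the $\log(1 - p_i)$ term remains, which is why the choice of label selects which part of the GAN objective is being optimized.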

# Define the loss function
adversarial_loss = nn.BCELoss(reduction='mean')

Optimizers

Two separate optimizers are set up here, one for D and one for G. Both are Adam optimizers with lr = 0.0002 and beta1 = 0.5.
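To show where lr and beta1 enter the update, here is a sketch of one textbook Adam step for a single scalar parameter. It follows the commonly published form of the algorithm and is not MindSpore's implementation, whose internal arrangement of the bias correction may differ. The values beta2 = 0.999 and eps = 1e-8 are assumptions, since the tutorial only sets lr and beta1.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.0002, beta1=0.5, beta2=0.999, eps=1e-8):
    """One textbook Adam update for a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction for step t
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# Hypothetical parameter and gradient values, first step (t=1)
p, m, v = adam_step(param=1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(p, m, v)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly lr. A beta1 of 0.5 makes the running mean of the gradients react faster than the more common 0.9.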

# Set up the optimizers for the generator and the discriminator
optimizer_D = nn.Adam(discriminator.trainable_params(), learning_rate=lr, beta1=beta1)
optimizer_G = nn.Adam(generator.trainable_params(), learning_rate=lr, beta1=beta1)
optimizer_G.update_parameters_name('optim_g.')
optimizer_D.update_parameters_name('optim_d.')

Training the Model

Training has two main parts: training the discriminator and training the generator.

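- Training the discriminator

  The goal of training the discriminator is to maximize the probability of correctly classifying images as real or fake. Following Goodfellow's method, we update the discriminator by ascending its stochastic gradient, that is, we maximize $\log D(x) + \log(1 - D(G(z)))$.

- Training the generator

  As stated in the DCGAN paper, we want to train the generator by minimizing $\log(1 - D(G(z)))$ so that it produces better fake images. In this tutorial's code, the generator loss is the binary cross-entropy between $D(G(z))$ and the "real" labels, which amounts to maximizing $\log D(G(z))$.

In both parts, the losses are collected during training and summarized at the end of each epoch, and a batch of fixed_noise is fed to the generator to visually track G's training progress.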
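The link between these objectives and a binary cross-entropy loss can be sketched in plain Python. The discriminator outputs below are made-up numbers for illustration, and `bce` is a small stand-in helper rather than MindSpore's BCELoss; the `valid` and `fake` names mirror the all-ones and all-zeros labels used in the tutorial's training step.

```python
import math

def bce(preds, targets):
    """Mean binary cross-entropy for probabilities strictly between 0 and 1."""
    terms = [-(t * math.log(p) + (1 - t) * math.log(1 - p))
             for p, t in zip(preds, targets)]
    return sum(terms) / len(terms)

d_real = [0.9, 0.8]   # hypothetical D(x) on two real images
d_fake = [0.3, 0.1]   # hypothetical D(G(z)) on two generated images
valid = [1.0, 1.0]    # labels meaning "real"
fake = [0.0, 0.0]     # labels meaning "generated"

# Discriminator: minimizing this maximizes log D(x) + log(1 - D(G(z)))
d_loss = (bce(d_real, valid) + bce(d_fake, fake)) / 2

# Generator: BCE against "real" labels equals -mean(log D(G(z)))
g_loss = bce(d_fake, valid)

print(f"d_loss={d_loss:.4f}, g_loss={g_loss:.4f}")
```

In this toy case the discriminator is doing well, so its loss is small while the generator loss is large; the generator lowers its loss only by pushing D(G(z)) toward 1.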

The forward logic for training is implemented below:

def generator_forward(real_imgs, valid):
    # Sample noise as the generator input
    z = ops.standard_normal((real_imgs.shape[0], nz, 1, 1))

    # Generate a batch of images
    gen_imgs = generator(z)

    # The loss measures the generator's ability to fool the discriminator
    g_loss = adversarial_loss(discriminator(gen_imgs), valid)

    return g_loss, gen_imgs

def discriminator_forward(real_imgs, gen_imgs, valid, fake):
    # Measure the discriminator's ability to tell real samples from generated ones
    real_loss = adversarial_loss(discriminator(real_imgs), valid)
    fake_loss = adversarial_loss(discriminator(gen_imgs), fake)
    d_loss = (real_loss + fake_loss) / 2
    return d_loss

grad_generator_fn = ms.value_and_grad(generator_forward, None,
                                      optimizer_G.parameters,
                                      has_aux=True)
grad_discriminator_fn = ms.value_and_grad(discriminator_forward, None,
                                          optimizer_D.parameters)

@ms.jit
def train_step(imgs):
    valid = ops.ones((imgs.shape[0], 1), ms.float32)
    fake = ops.zeros((imgs.shape[0], 1), ms.float32)

    (g_loss, gen_imgs), g_grads = grad_generator_fn(imgs, valid)
    optimizer_G(g_grads)
    d_loss, d_grads = grad_discriminator_fn(imgs, gen_imgs, valid, fake)
    optimizer_D(d_grads)

    return g_loss, d_loss, gen_imgs

Train the network in a loop. The generator and discriminator losses are recorded so that the loss curves can be plotted later, and a log line is printed every 100 iterations and at the end of each epoch.
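The training logs in this tutorial report 549 iterations per epoch. The short calculation below shows that this is consistent with 70,171 images and a batch size of 128, assuming the final partial batch is kept rather than dropped. It also lists which iterations would be printed by an `i % 100 == 0 or i == total - 1` check.

```python
import math

num_images = 70_171   # dataset size stated in the tutorial
batch_size = 128

# Assumption: the last, smaller batch is kept rather than dropped
batches_per_epoch = math.ceil(num_images / batch_size)
last_batch_size = num_images - (batches_per_epoch - 1) * batch_size

# 1-based iteration numbers that pass the logging condition
logged = [i + 1 for i in range(batches_per_epoch)
          if i % 100 == 0 or i == batches_per_epoch - 1]

print(batches_per_epoch, last_batch_size, logged)
```

Under that assumption the last batch holds only 27 images, which is why the label tensors in train_step are sized from imgs.shape[0] rather than from batch_size.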

%%time
import mindspore

G_losses = []
D_losses = []
image_list = []

total = dataset.get_dataset_size()
for epoch in range(num_epochs):
    generator.set_train()
    discriminator.set_train()
    # Read in the data for each epoch
    for i, (imgs, ) in enumerate(dataset.create_tuple_iterator()):
        g_loss, d_loss, gen_imgs = train_step(imgs)
        if i % 100 == 0 or i == total - 1:
            # Print the training record
            print('[%2d/%d][%3d/%d] Loss_D:%7.4f Loss_G:%7.4f' % (
                epoch + 1, num_epochs, i + 1, total, d_loss.asnumpy(), g_loss.asnumpy()))
        D_losses.append(d_loss.asnumpy())
        G_losses.append(g_loss.asnumpy())

    # At the end of each epoch, generate a batch of images with the generator
    generator.set_train(False)
    fixed_noise = ops.standard_normal((batch_size, nz, 1, 1))
    img = generator(fixed_noise)
    image_list.append(img.transpose(0, 2, 3, 1).asnumpy())

    # Save the network parameters as ckpt files
    mindspore.save_checkpoint(generator, "./generator.ckpt")
    mindspore.save_checkpoint(discriminator, "./discriminator.ckpt")

To reduce the amount of printed output, print once per epoch instead:

%%time
import mindspore

G_losses = []
D_losses = []
image_list = []

total = dataset.get_dataset_size()
for epoch in range(num_epochs):
    generator.set_train()
    discriminator.set_train()
    # Read in the data for each epoch
    for i, (imgs, ) in enumerate(dataset.create_tuple_iterator()):
        g_loss, d_loss, gen_imgs = train_step(imgs)
        if i == total - 1:
            # Print the training record
            print('[%2d/%d][%3d/%d] Loss_D:%7.4f Loss_G:%7.4f' % (
                epoch + 1, num_epochs, i + 1, total, d_loss.asnumpy(), g_loss.asnumpy()))
        D_losses.append(d_loss.asnumpy())
        G_losses.append(g_loss.asnumpy())

    # At the end of each epoch, generate a batch of images with the generator
    generator.set_train(False)
    fixed_noise = ops.standard_normal((batch_size, nz, 1, 1))
    img = generator(fixed_noise)
    image_list.append(img.transpose(0, 2, 3, 1).asnumpy())

    # Save the network parameters as ckpt files
    mindspore.save_checkpoint(generator, "./generator.ckpt")
    mindspore.save_checkpoint(discriminator, "./discriminator.ckpt")

[ 1/100][549/549] Loss_D: 0.6106 Loss_G: 0.6764 [ 2/100][549/549] Loss_D: 0.3092 Loss_G: 4.2549 [ 3/100][549/549] Loss_D: 0.3885 Loss_G: 1.6692 [ 4/100][549/549] Loss_D: 0.1445 Loss_G: 2.1410 [ 5/100][549/549] Loss_D: 0.2237 Loss_G: 2.4766 [ 6/100][549/549] Loss_D: 0.1699 Loss_G: 2.8469 [ 7/100][549/549] Loss_D: 0.4511 Loss_G: 8.5175 [ 8/100][549/549] Loss_D: 0.0959 Loss_G: 2.6130 [ 9/100][549/549] Loss_D: 0.1494 Loss_G: 2.6417 [10/100][549/549] Loss_D: 0.0880 Loss_G: 2.5666 [11/100][549/549] Loss_D: 0.0870 Loss_G: 2.1918 [12/100][549/549] Loss_D: 0.2240 Loss_G: 3.6016 [13/100][549/549] Loss_D: 0.1102 Loss_G: 2.8687 [14/100][549/549] Loss_D: 0.1859 Loss_G: 2.7287 [15/100][549/549] Loss_D: 0.1399 Loss_G: 1.9229 [16/100][549/549] Loss_D: 0.1043 Loss_G: 2.7204 [17/100][549/549] Loss_D: 0.2446 Loss_G: 1.7036 [18/100][549/549] Loss_D: 0.1583 Loss_G: 2.6729 [19/100][549/549] Loss_D: 0.2957 Loss_G: 6.4399 [20/100][549/549] Loss_D: 0.1509 Loss_G: 3.9361 [21/100][549/549] Loss_D: 0.0795 Loss_G: 3.8061 [22/100][549/549] Loss_D: 1.5071 Loss_G: 9.9033 [23/100][549/549] Loss_D: 0.0741 Loss_G: 2.7125 [24/100][549/549] Loss_D: 0.0451 Loss_G: 3.4519 [25/100][549/549] Loss_D: 0.1035 Loss_G: 3.0197 [26/100][549/549] Loss_D: 0.1196 Loss_G: 2.3379 [27/100][549/549] Loss_D: 0.1113 Loss_G: 2.3422 [28/100][549/549] Loss_D: 0.1827 Loss_G: 2.5274 [29/100][549/549] Loss_D: 0.0607 Loss_G: 2.9610 [30/100][549/549] Loss_D: 1.6338 Loss_G: 8.6766 [31/100][549/549] Loss_D: 0.0839 Loss_G: 3.9443 [32/100][549/549] Loss_D: 0.1627 Loss_G: 2.3885 [33/100][549/549] Loss_D: 0.1081 Loss_G: 3.0734 [34/100][549/549] Loss_D: 0.3051 Loss_G: 1.8697 [35/100][549/549] Loss_D: 0.1608 Loss_G: 5.1093 [36/100][549/549] Loss_D: 1.5805 Loss_G: 8.1907 [37/100][549/549] Loss_D: 0.1765 Loss_G: 2.0164 [38/100][549/549] Loss_D: 0.0220 Loss_G: 4.0469 [39/100][549/549] Loss_D: 0.1006 Loss_G: 2.4763 [40/100][549/549] Loss_D: 0.0794 Loss_G: 2.4766
[41/100][549/549] Loss_D: 0.0986 Loss_G: 4.9125 [42/100][549/549] Loss_D: 0.0607 Loss_G: 3.5990 [43/100][549/549] Loss_D: 0.0304 Loss_G: 3.5067 [44/100][549/549] Loss_D: 0.0579 Loss_G: 5.1174 [45/100][549/549] Loss_D: 0.2503 Loss_G: 3.6695 [46/100][549/549] Loss_D: 0.0344 Loss_G: 4.8349 [47/100][549/549] Loss_D: 0.1953 Loss_G: 2.7192 [48/100][549/549] Loss_D: 0.0469 Loss_G: 5.5249 [49/100][549/549] Loss_D: 0.0960 Loss_G: 3.0558 [50/100][549/549] Loss_D: 0.0581 Loss_G: 4.1038 [51/100][549/549] Loss_D: 0.0299 Loss_G: 3.8405 [52/100][549/549] Loss_D: 0.0990 Loss_G: 2.7754 [53/100][549/549] Loss_D: 0.0939 Loss_G: 3.8828 [54/100][549/549] Loss_D: 0.0618 Loss_G: 3.1732 [55/100][549/549] Loss_D: 0.3889 Loss_G: 1.2231 [56/100][549/549] Loss_D: 0.0990 Loss_G: 4.5803 [57/100][549/549] Loss_D: 0.0286 Loss_G: 5.2037 [58/100][549/549] Loss_D: 0.0617 Loss_G: 3.4745 [59/100][549/549] Loss_D: 0.2001 Loss_G: 2.2047 [60/100][549/549] Loss_D: 0.0353 Loss_G: 4.7155 [61/100][549/549] Loss_D: 0.0253 Loss_G: 4.6451 [62/100][549/549] Loss_D: 0.3381 Loss_G: 1.2426 [63/100][549/549] Loss_D: 1.6174 Loss_G: 6.0268 [64/100][549/549] Loss_D: 0.0446 Loss_G: 3.1168 [65/100][549/549] Loss_D: 0.0776 Loss_G: 3.7572 [66/100][549/549] Loss_D: 0.0178 Loss_G: 5.3017 [67/100][549/549] Loss_D: 0.0550 Loss_G: 5.0807 [68/100][549/549] Loss_D: 0.0359 Loss_G: 3.9622 [69/100][549/549] Loss_D: 0.0046 Loss_G: 6.1130 [70/100][549/549] Loss_D: 0.1212 Loss_G: 2.8980 [71/100][549/549] Loss_D: 0.1356 Loss_G: 3.5367 [72/100][549/549] Loss_D: 0.0879 Loss_G: 4.8944 [73/100][549/549] Loss_D: 0.0243 Loss_G: 4.2409 [74/100][549/549] Loss_D: 0.0418 Loss_G: 4.0617 [75/100][549/549] Loss_D: 0.1328 Loss_G: 5.4291 [76/100][549/549] Loss_D: 0.0150 Loss_G: 5.0857 [77/100][549/549] Loss_D: 0.5582 Loss_G: 0.9203 [78/100][549/549] Loss_D: 0.0579 Loss_G: 5.5855 [79/100][549/549] Loss_D: 0.3879 Loss_G: 3.7747 [80/100][549/549] Loss_D: 0.1436 Loss_G: 2.8071 [81/100][549/549] Loss_D: 0.3205 Loss_G: 7.8155 [82/100][549/549] Loss_D: 
0.0180 Loss_G: 4.8216 [83/100][549/549] Loss_D: 0.4509 Loss_G: 1.6024 [84/100][549/549] Loss_D: 0.0254 Loss_G: 5.2787 [85/100][549/549] Loss_D: 0.0109 Loss_G: 5.8957 [86/100][549/549] Loss_D: 0.2196 Loss_G: 1.6766 [87/100][549/549] Loss_D: 0.0948 Loss_G: 2.7880 [88/100][549/549] Loss_D: 0.1002 Loss_G: 3.3182 [89/100][549/549] Loss_D: 0.0323 Loss_G: 5.7229 [90/100][549/549] Loss_D: 0.0565 Loss_G: 4.1676 [91/100][549/549] Loss_D: 0.0265 Loss_G: 4.7548 [92/100][549/549] Loss_D: 0.0741 Loss_G: 6.0792 [93/100][549/549] Loss_D: 0.0253 Loss_G: 5.2812 [94/100][549/549] Loss_D: 0.0388 Loss_G: 4.5826 [95/100][549/549] Loss_D: 0.0229 Loss_G: 4.4684 [96/100][549/549] Loss_D: 0.3439 Loss_G: 5.0983 [97/100][549/549] Loss_D: 0.2110 Loss_G: 6.5986 [98/100][549/549] Loss_D: 0.0588 Loss_G: 3.2943 [99/100][549/549] Loss_D: 0.0432 Loss_G: 3.6790 [100/100][549/549] Loss_D: 0.2591 Loss_G: 1.5543 CPU times: user 3h 10min 44s, sys: 1h 4s, total: 4h 10min 49s Wall time: 25min 21s

Results

Run the code below to plot the D and G losses against training iterations:

plt.figure(figsize=(10, 5))
plt.title("Generator and Discriminator Loss During Training")
plt.plot(G_losses, label="G", color='blue')
plt.plot(D_losses, label="D", color='orange')
plt.xlabel("iterations")
plt.ylabel("Loss")
plt.legend()
plt.show()

Visualize the images generated from the latent vector fixed_noise during training.

import matplotlib.pyplot as plt
import matplotlib.animation as animation

def showGif(image_list):
    show_list = []
    fig = plt.figure(figsize=(8, 3), dpi=120)
    for epoch in range(len(image_list)):
        images = []
        for i in range(3):
            row = np.concatenate((image_list[epoch][i * 8:(i + 1) * 8]), axis=1)
            images.append(row)
        img = np.clip(np.concatenate((images[:]), axis=0), 0, 1)
        plt.axis("off")
        show_list.append([plt.imshow(img)])

    ani = animation.ArtistAnimation(fig, show_list, interval=1000, repeat_delay=1000, blit=True)
    ani.save('./dcgan.gif', writer='pillow', fps=1)

showGif(image_list)

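As the images above show, image quality improves as training proceeds. With more training epochs, once num_epochs reaches 50 or more, the generated anime avatars look fairly similar to those in the dataset. Next, we load the generator's checkpoint file and generate images with the following code: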

# Read the model parameters from the file and load them into the network
mindspore.load_checkpoint("./generator.ckpt", generator)

fixed_noise = ops.standard_normal((batch_size, nz, 1, 1))
img64 = generator(fixed_noise).transpose(0, 2, 3, 1).asnumpy()

fig = plt.figure(figsize=(8, 3), dpi=120)
images = []
for i in range(3):
    images.append(np.concatenate((img64[i * 8:(i + 1) * 8]), axis=1))
img = np.clip(np.concatenate((images[:]), axis=0), 0, 1)
plt.axis("off")
plt.imshow(img)
plt.show()

With num_epochs = 200, mode collapse appears to occur (the generator starts producing very similar or repeated samples).

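batch_size = 128   # batch size
image_size = 64    # spatial size of the training images
nc = 3             # number of color channels in the images
nz = 100           # length of the latent vector
ngf = 64           # size of the feature maps in the generator
ndf = 64           # size of the feature maps in the discriminator
num_epochs = 200   # number of training epochs
lr = 0.0002        # learning rate
beta1 = 0.5        # beta1 hyperparameter of the Adam optimizer

%%time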

import mindspore

G_losses = []
D_losses = []
image_list = []

total = dataset.get_dataset_size()
for epoch in range(num_epochs):
    generator.set_train()
    discriminator.set_train()
    # Read in the data for each epoch
    for i, (imgs, ) in enumerate(dataset.create_tuple_iterator()):
        g_loss, d_loss, gen_imgs = train_step(imgs)
        if i % 500 == 0 or i == total - 1:
            # Print the training record
            print('[%2d/%d][%3d/%d] Loss_D:%7.4f Loss_G:%7.4f' % (
                epoch + 1, num_epochs, i + 1, total, d_loss.asnumpy(), g_loss.asnumpy()))
        D_losses.append(d_loss.asnumpy())
        G_losses.append(g_loss.asnumpy())

    # At the end of each epoch, generate a batch of images with the generator
    generator.set_train(False)
    fixed_noise = ops.standard_normal((batch_size, nz, 1, 1))
    img = generator(fixed_noise)
    image_list.append(img.transpose(0, 2, 3, 1).asnumpy())

    # Save the network parameters as ckpt files
    mindspore.save_checkpoint(generator, "./generator.ckpt")
    mindspore.save_checkpoint(discriminator, "./discriminator.ckpt")

[ 1/200][ 1/549] Loss_D: 0.8324 Loss_G: 1.2639 [ 1/200][501/549] Loss_D: 0.2243 Loss_G: 1.4972 [ 1/200][549/549] Loss_D: 0.1225 Loss_G: 3.7119 [ 2/200][ 1/549] Loss_D: 0.2634 Loss_G: 1.7945 [ 2/200][501/549] Loss_D: 0.3243 Loss_G: 5.4640 [ 2/200][549/549] Loss_D: 0.2435 Loss_G: 2.2054 [ 3/200][ 1/549] Loss_D: 0.2918 Loss_G: 1.3228 [ 3/200][501/549] Loss_D: 0.2894 Loss_G: 3.4179 [ 3/200][549/549] Loss_D: 0.6185 Loss_G: 0.5866 [ 4/200][ 1/549] Loss_D: 1.2035 Loss_G:10.6546 [ 4/200][501/549] Loss_D: 0.3380 Loss_G: 3.2789 [ 4/200][549/549] Loss_D: 0.3168 Loss_G: 3.2505 [ 5/200][ 1/549] Loss_D: 0.3357 Loss_G: 1.2956 [ 5/200][501/549] Loss_D: 0.2061 Loss_G: 4.2091 [ 5/200][549/549] Loss_D: 0.1292 Loss_G: 2.4829 [ 6/200][ 1/549] Loss_D: 0.1827 Loss_G: 2.1405 [ 6/200][501/549] Loss_D: 0.2145 Loss_G: 6.3399 [ 6/200][549/549] Loss_D: 0.1928 Loss_G: 1.6432 [ 7/200][ 1/549] Loss_D: 0.0830 Loss_G: 3.3874 [ 7/200][501/549] Loss_D: 0.4541 Loss_G: 0.7287 [ 7/200][549/549] Loss_D: 0.1416 Loss_G: 2.0121 [ 8/200][ 1/549] Loss_D: 0.1891 Loss_G: 2.9087 [ 8/200][501/549] Loss_D: 0.1970 Loss_G: 4.0175 [ 8/200][549/549] Loss_D: 0.1693 Loss_G: 2.0178 [ 9/200][ 1/549] Loss_D: 0.0937 Loss_G: 3.4365 [ 9/200][501/549] Loss_D: 0.1757 Loss_G: 2.1768 [ 9/200][549/549] Loss_D: 0.1696 Loss_G: 1.9579 [10/200][ 1/549] Loss_D: 0.2211 Loss_G: 2.2690 [10/200][501/549] Loss_D: 0.1164 Loss_G: 2.1523 [10/200][549/549] Loss_D: 0.2187 Loss_G: 2.0313 [11/200][ 1/549] Loss_D: 0.1367 Loss_G: 2.5421 [11/200][501/549] Loss_D: 0.1174 Loss_G: 2.4074 [11/200][549/549] Loss_D: 1.4441 Loss_G: 0.1132 [12/200][ 1/549] Loss_D: 0.8690 Loss_G: 7.9187 [12/200][501/549] Loss_D: 0.1522 Loss_G: 2.2471 [12/200][549/549] Loss_D: 0.1498 Loss_G: 2.2950 [13/200][ 1/549] Loss_D: 0.2269 Loss_G: 2.5328 [13/200][501/549] Loss_D: 0.1723 Loss_G: 2.6353 [13/200][549/549] Loss_D: 0.1196 Loss_G: 2.7592 [14/200][ 1/549] Loss_D: 0.1173 Loss_G: 2.7692 [14/200][501/549] Loss_D: 
0.1994 Loss_G: 1.8361 [14/200][549/549] Loss_D: 0.2566 Loss_G: 1.4707 [15/200][ 1/549] Loss_D: 0.1332 Loss_G: 2.0971 [15/200][501/549] Loss_D: 0.2121 Loss_G: 1.6003 [15/200][549/549] Loss_D: 2.2433 Loss_G: 9.1780 [16/200][ 1/549] Loss_D: 0.1690 Loss_G: 2.0324 [16/200][501/549] Loss_D: 0.2154 Loss_G: 3.4251 [16/200][549/549] Loss_D: 0.6030 Loss_G: 6.0030 [17/200][ 1/549] Loss_D: 0.2687 Loss_G: 1.1912 [17/200][501/549] Loss_D: 0.1808 Loss_G: 1.7610 [17/200][549/549] Loss_D: 0.3762 Loss_G: 0.8627 [18/200][ 1/549] Loss_D: 0.4494 Loss_G: 5.9094 [18/200][501/549] Loss_D: 0.4202 Loss_G: 0.9349 [18/200][549/549] Loss_D: 0.2420 Loss_G: 1.4175 [19/200][ 1/549] Loss_D: 0.1227 Loss_G: 2.6680 [19/200][501/549] Loss_D: 0.2651 Loss_G: 1.1539 [19/200][549/549] Loss_D: 0.2152 Loss_G: 4.3104 [20/200][ 1/549] Loss_D: 0.3126 Loss_G: 1.0723 [20/200][501/549] Loss_D: 0.1923 Loss_G: 1.8047 [20/200][549/549] Loss_D: 0.1228 Loss_G: 2.9775 [21/200][ 1/549] Loss_D: 0.1811 Loss_G: 1.9004 [21/200][501/549] Loss_D: 0.0985 Loss_G: 2.7778 [21/200][549/549] Loss_D: 0.7840 Loss_G: 6.9031 [22/200][ 1/549] Loss_D: 0.1365 Loss_G: 2.0484 [22/200][501/549] Loss_D: 0.2438 Loss_G: 2.9704 [22/200][549/549] Loss_D: 0.5911 Loss_G: 5.4517 [23/200][ 1/549] Loss_D: 0.9774 Loss_G: 0.2537 [23/200][501/549] Loss_D: 0.3037 Loss_G: 1.1571 [23/200][549/549] Loss_D: 0.1643 Loss_G: 3.9519 [24/200][ 1/549] Loss_D: 0.2573 Loss_G: 1.2705 [24/200][501/549] Loss_D: 0.1461 Loss_G: 4.0229 [24/200][549/549] Loss_D: 0.1226 Loss_G: 2.3870 [25/200][ 1/549] Loss_D: 0.1045 Loss_G: 3.9232 [25/200][501/549] Loss_D: 0.1356 Loss_G: 2.3146 [25/200][549/549] Loss_D: 0.2543 Loss_G: 3.6102 [26/200][ 1/549] Loss_D: 0.2078 Loss_G: 1.4601 [26/200][501/549] Loss_D: 0.1547 Loss_G: 2.7833 [26/200][549/549] Loss_D: 0.1853 Loss_G: 1.9727 [27/200][ 1/549] Loss_D: 0.1648 Loss_G: 2.2708 [27/200][501/549] Loss_D: 0.1452 Loss_G: 2.4902 [27/200][549/549] Loss_D: 0.2875 Loss_G: 3.2696 [28/200][ 1/549] Loss_D: 0.4400 Loss_G: 0.7629 [28/200][501/549] 
Loss_D: 0.0707 Loss_G: 3.8629 [28/200][549/549] Loss_D: 0.1430 Loss_G: 1.8769 [29/200][ 1/549] Loss_D: 0.7581 Loss_G: 5.9448 [29/200][501/549] Loss_D: 0.2469 Loss_G: 5.8975 [29/200][549/549] Loss_D: 0.0966 Loss_G: 3.0256 [30/200][ 1/549] Loss_D: 0.0998 Loss_G: 2.3803 [30/200][501/549] Loss_D: 0.1621 Loss_G: 4.5309 [30/200][549/549] Loss_D: 0.1170 Loss_G: 2.2813 [31/200][ 1/549] Loss_D: 0.2743 Loss_G: 1.2051 [31/200][501/549] Loss_D: 0.1116 Loss_G: 2.5379 [31/200][549/549] Loss_D: 0.2577 Loss_G: 2.2138 [32/200][ 1/549] Loss_D: 0.1581 Loss_G: 2.1937 [32/200][501/549] Loss_D: 1.1011 Loss_G: 0.2635 [32/200][549/549] Loss_D: 0.1970 Loss_G: 1.7803 [33/200][ 1/549] Loss_D: 0.4104 Loss_G: 4.4137 [33/200][501/549] Loss_D: 0.1607 Loss_G: 1.8982 [33/200][549/549] Loss_D: 0.0772 Loss_G: 3.9291 [34/200][ 1/549] Loss_D: 0.1640 Loss_G: 1.7486 [34/200][501/549] Loss_D: 0.1159 Loss_G: 2.3928 [34/200][549/549] Loss_D: 0.1051 Loss_G: 3.2938 [35/200][ 1/549] Loss_D: 0.2062 Loss_G: 1.5448 [35/200][501/549] Loss_D: 0.0764 Loss_G: 3.4685 [35/200][549/549] Loss_D: 0.0783 Loss_G: 4.0028 [36/200][ 1/549] Loss_D: 0.0691 Loss_G: 3.1478 [36/200][501/549] Loss_D: 0.1945 Loss_G: 1.8608 [36/200][549/549] Loss_D: 0.0664 Loss_G: 3.2432 [37/200][ 1/549] Loss_D: 0.1332 Loss_G: 2.8768 [37/200][501/549] Loss_D: 0.1357 Loss_G: 2.0631 [37/200][549/549] Loss_D: 0.1194 Loss_G: 3.4386 [38/200][ 1/549] Loss_D: 0.1071 Loss_G: 2.6619 [38/200][501/549] Loss_D: 0.0323 Loss_G: 3.8000 [38/200][549/549] Loss_D: 0.2826 Loss_G: 5.0917 [39/200][ 1/549] Loss_D: 0.8349 Loss_G: 0.3916 [39/200][501/549] Loss_D: 0.0603 Loss_G: 3.2233 [39/200][549/549] Loss_D: 0.0758 Loss_G: 2.6212 [40/200][ 1/549] Loss_D: 0.0472 Loss_G: 4.0676 [40/200][501/549] Loss_D: 0.1662 Loss_G: 4.0471 [40/200][549/549] Loss_D: 0.0901 Loss_G: 3.0110 [41/200][ 1/549] Loss_D: 0.1109 Loss_G: 2.3747 [41/200][501/549] Loss_D: 0.0864 Loss_G: 3.5582 [41/200][549/549] Loss_D: 0.1556 Loss_G: 4.0981 [42/200][ 1/549] Loss_D: 0.1805 Loss_G: 1.7918 
[42/200][501/549] Loss_D: 0.1553 Loss_G: 2.5847 [42/200][549/549] Loss_D: 0.2758 Loss_G: 3.6331 [43/200][ 1/549] Loss_D: 1.6624 Loss_G: 0.1618 [43/200][501/549] Loss_D: 0.0344 Loss_G: 3.7180 [43/200][549/549] Loss_D: 0.0191 Loss_G: 4.4786 [44/200][ 1/549] Loss_D: 0.0239 Loss_G: 4.5359 [44/200][501/549] Loss_D: 0.0648 Loss_G: 3.8651 [44/200][549/549] Loss_D: 0.1347 Loss_G: 2.8805 [45/200][ 1/549] Loss_D: 0.2039 Loss_G: 1.7305 [45/200][501/549] Loss_D: 0.0523 Loss_G: 3.4660 [45/200][549/549] Loss_D: 0.0300 Loss_G: 4.3123 [46/200][ 1/549] Loss_D: 0.0935 Loss_G: 4.7765 [46/200][501/549] Loss_D: 0.1090 Loss_G: 2.7217 [46/200][549/549] Loss_D: 0.0705 Loss_G: 4.1516 [47/200][ 1/549] Loss_D: 0.0726 Loss_G: 3.0272 [47/200][501/549] Loss_D: 0.0537 Loss_G: 3.3243 [47/200][549/549] Loss_D: 0.0693 Loss_G: 2.8473 [48/200][ 1/549] Loss_D: 0.1066 Loss_G: 2.2994 [48/200][501/549] Loss_D: 0.0318 Loss_G: 4.9260 [48/200][549/549] Loss_D: 0.0633 Loss_G: 4.0919 [49/200][ 1/549] Loss_D: 0.1076 Loss_G: 3.9757 [49/200][501/549] Loss_D: 0.4428 Loss_G: 3.9113 [49/200][549/549] Loss_D: 0.0768 Loss_G: 3.8784 [50/200][ 1/549] Loss_D: 0.1388 Loss_G: 2.6904 [50/200][501/549] Loss_D: 0.5701 Loss_G: 7.2282 [50/200][549/549] Loss_D: 0.1603 Loss_G: 2.0840 [51/200][ 1/549] Loss_D: 0.1041 Loss_G: 2.8508 [51/200][501/549] Loss_D: 1.2263 Loss_G: 8.3074 [51/200][549/549] Loss_D: 0.1378 Loss_G: 2.7209 [52/200][ 1/549] Loss_D: 0.1355 Loss_G: 3.6068 [52/200][501/549] Loss_D: 0.0744 Loss_G: 3.1665 [52/200][549/549] Loss_D: 0.1439 Loss_G: 3.1384 [53/200][ 1/549] Loss_D: 0.1215 Loss_G: 2.4754 [53/200][501/549] Loss_D: 0.2279 Loss_G: 2.0314 [53/200][549/549] Loss_D: 0.0733 Loss_G: 2.7644 [54/200][ 1/549] Loss_D: 0.0536 Loss_G: 3.3702 [54/200][501/549] Loss_D: 0.0475 Loss_G: 4.4517 [54/200][549/549] Loss_D: 0.0437 Loss_G: 3.8798 [55/200][ 1/549] Loss_D: 0.0290 Loss_G: 3.6527 [55/200][501/549] Loss_D: 0.0985 Loss_G: 3.1062 [55/200][549/549] Loss_D: 0.0468 Loss_G: 3.9260 [56/200][ 1/549] Loss_D: 0.0751 Loss_G: 
2.8475 [56/200][501/549] Loss_D: 0.0685 Loss_G: 3.6570 [56/200][549/549] Loss_D: 0.0366 Loss_G: 4.6187 [57/200][ 1/549] Loss_D: 0.0788 Loss_G: 2.9598 [57/200][501/549] Loss_D: 0.1491 Loss_G: 2.2459 [57/200][549/549] Loss_D: 0.1917 Loss_G: 1.8331 [58/200][ 1/549] Loss_D: 0.1782 Loss_G: 2.2357 [58/200][501/549] Loss_D: 0.1041 Loss_G: 2.5734 [58/200][549/549] Loss_D: 0.1353 Loss_G: 3.2996 [59/200][ 1/549] Loss_D: 0.2526 Loss_G: 1.3601 [59/200][501/549] Loss_D: 0.0873 Loss_G: 2.9913 [59/200][549/549] Loss_D: 0.0579 Loss_G: 5.3517 [60/200][ 1/549] Loss_D: 0.0932 Loss_G: 2.3693 [60/200][501/549] Loss_D: 0.1138 Loss_G: 2.6479 [60/200][549/549] Loss_D: 0.0952 Loss_G: 2.6705 [61/200][ 1/549] Loss_D: 0.1107 Loss_G: 2.7407 [61/200][501/549] Loss_D: 0.0529 Loss_G: 3.3084 [61/200][549/549] Loss_D: 0.2721 Loss_G: 4.1151 [62/200][ 1/549] Loss_D: 0.2245 Loss_G: 1.5675 [62/200][501/549] Loss_D: 0.0878 Loss_G: 2.9496 [62/200][549/549] Loss_D: 0.4308 Loss_G: 6.5642 [63/200][ 1/549] Loss_D: 2.2300 Loss_G: 0.0525 [63/200][501/549] Loss_D: 0.1626 Loss_G: 2.4035 [63/200][549/549] Loss_D: 0.1175 Loss_G: 2.5961 [64/200][ 1/549] Loss_D: 0.1353 Loss_G: 3.4148 [64/200][501/549] Loss_D: 0.0238 Loss_G: 4.8436 [64/200][549/549] Loss_D: 0.0207 Loss_G: 4.8270 [65/200][ 1/549] Loss_D: 0.0280 Loss_G: 3.7038 [65/200][501/549] Loss_D: 0.0916 Loss_G: 3.3575 [65/200][549/549] Loss_D: 0.0687 Loss_G: 4.0699 [66/200][ 1/549] Loss_D: 0.0923 Loss_G: 3.2198 [66/200][501/549] Loss_D: 0.0238 Loss_G: 4.5188 [66/200][549/549] Loss_D: 0.0719 Loss_G: 2.5030 [67/200][ 1/549] Loss_D: 0.1975 Loss_G: 1.6979 [67/200][501/549] Loss_D: 0.1652 Loss_G: 2.2843 [67/200][549/549] Loss_D: 1.0853 Loss_G: 8.0768 [68/200][ 1/549] Loss_D: 1.5934 Loss_G: 0.1117 [68/200][501/549] Loss_D: 0.1611 Loss_G: 2.2162 [68/200][549/549] Loss_D: 0.0672 Loss_G: 4.4790 [69/200][ 1/549] Loss_D: 0.1048 Loss_G: 2.4682 [69/200][501/549] Loss_D: 0.1481 Loss_G: 2.5849 [69/200][549/549] Loss_D: 0.0604 Loss_G: 2.8652 [70/200][ 1/549] Loss_D: 0.0333 
Loss_G: 4.2357 [70/200][501/549] Loss_D: 0.0769 Loss_G: 3.6993 [70/200][549/549] Loss_D: 0.0793 Loss_G: 4.3074 [71/200][ 1/549] Loss_D: 0.0839 Loss_G: 2.7068 [71/200][501/549] Loss_D: 0.0468 Loss_G: 3.5372 [71/200][549/549] Loss_D: 0.1252 Loss_G: 2.2772 [72/200][ 1/549] Loss_D: 0.1966 Loss_G: 3.0858 [72/200][501/549] Loss_D: 1.5845 Loss_G: 0.0953 [72/200][549/549] Loss_D: 0.1905 Loss_G: 4.1469 [73/200][ 1/549] Loss_D: 0.2518 Loss_G: 1.4223 [73/200][501/549] Loss_D: 0.3203 Loss_G: 1.7023 [73/200][549/549] Loss_D: 0.1659 Loss_G: 2.8936 [74/200][ 1/549] Loss_D: 0.1719 Loss_G: 2.0328 [74/200][501/549] Loss_D: 0.0169 Loss_G: 4.6490 [74/200][549/549] Loss_D: 0.0710 Loss_G: 3.2644 [75/200][ 1/549] Loss_D: 0.0653 Loss_G: 3.5217 [75/200][501/549] Loss_D: 0.0319 Loss_G: 5.4261 [75/200][549/549] Loss_D: 0.0227 Loss_G: 6.9689 [76/200][ 1/549] Loss_D: 0.0286 Loss_G: 4.0718 [76/200][501/549] Loss_D: 0.1246 Loss_G: 2.3175 [76/200][549/549] Loss_D: 0.1094 Loss_G: 2.7421 [77/200][ 1/549] Loss_D: 0.0917 Loss_G: 2.7597 [77/200][501/549] Loss_D: 0.1620 Loss_G: 5.8381 [77/200][549/549] Loss_D: 0.9196 Loss_G: 0.3436 [78/200][ 1/549] Loss_D: 0.4835 Loss_G: 5.1983 [78/200][501/549] Loss_D: 0.1648 Loss_G: 1.8297 [78/200][549/549] Loss_D: 2.2909 Loss_G: 8.9165 [79/200][ 1/549] Loss_D: 0.2514 Loss_G: 2.0934 [79/200][501/549] Loss_D: 0.0312 Loss_G: 4.1159 [79/200][549/549] Loss_D: 0.1320 Loss_G: 3.1260 [80/200][ 1/549] Loss_D: 0.2042 Loss_G: 2.3162 [80/200][501/549] Loss_D: 0.2032 Loss_G: 2.1090 [80/200][549/549] Loss_D: 0.0824 Loss_G: 2.9415 [81/200][ 1/549] Loss_D: 0.1062 Loss_G: 3.5188 [81/200][501/549] Loss_D: 0.3138 Loss_G: 4.9336 [81/200][549/549] Loss_D: 0.0459 Loss_G: 3.6886 [82/200][ 1/549] Loss_D: 0.0373 Loss_G: 3.7398 [82/200][501/549] Loss_D: 0.0676 Loss_G: 4.7946 [82/200][549/549] Loss_D: 0.0519 Loss_G: 3.7365 [83/200][ 1/549] Loss_D: 0.0460 Loss_G: 5.1354 [83/200][501/549] Loss_D: 0.1640 Loss_G: 2.4931 [83/200][549/549] Loss_D: 0.0270 Loss_G: 4.5542 [84/200][ 1/549] Loss_D: 
0.0279 Loss_G: 3.9441 [84/200][501/549] Loss_D: 0.0163 Loss_G: 4.4843 [84/200][549/549] Loss_D: 0.0237 Loss_G: 5.2358 [85/200][ 1/549] Loss_D: 0.0419 Loss_G: 3.4550 [85/200][501/549] Loss_D: 0.0413 Loss_G: 4.0424 [85/200][549/549] Loss_D: 0.0626 Loss_G: 5.2893 [86/200][ 1/549] Loss_D: 0.0702 Loss_G: 2.7308 [86/200][501/549] Loss_D: 0.0741 Loss_G: 2.8321 [86/200][549/549] Loss_D: 0.0316 Loss_G: 4.7986 [87/200][ 1/549] Loss_D: 0.0360 Loss_G: 3.9111 [87/200][501/549] Loss_D: 0.0237 Loss_G: 4.3538 [87/200][549/549] Loss_D: 0.1407 Loss_G: 2.9554 [88/200][ 1/549] Loss_D: 0.0863 Loss_G: 2.8311 [88/200][501/549] Loss_D: 0.0218 Loss_G: 5.3543 [88/200][549/549] Loss_D: 0.0587 Loss_G: 6.2412 [89/200][ 1/549] Loss_D: 0.0476 Loss_G: 5.0605 [89/200][501/549] Loss_D: 0.0050 Loss_G: 6.1558 [89/200][549/549] Loss_D: 0.0051 Loss_G: 6.1796 [90/200][ 1/549] Loss_D: 0.0025 Loss_G: 6.7527 [90/200][501/549] Loss_D: 0.0471 Loss_G: 3.5542 [90/200][549/549] Loss_D: 0.1369 Loss_G: 3.1567 [91/200][ 1/549] Loss_D: 0.1530 Loss_G: 2.9616 [91/200][501/549] Loss_D: 0.0785 Loss_G: 3.0815 [91/200][549/549] Loss_D: 0.0555 Loss_G: 3.9266 [92/200][ 1/549] Loss_D: 0.0650 Loss_G: 3.2796 [92/200][501/549] Loss_D: 0.0272 Loss_G: 4.1397 [92/200][549/549] Loss_D: 0.1264 Loss_G: 2.5896 [93/200][ 1/549] Loss_D: 0.0603 Loss_G: 3.6887 [93/200][501/549] Loss_D: 0.1040 Loss_G: 5.9669 [93/200][549/549] Loss_D: 0.1973 Loss_G: 3.0978 [94/200][ 1/549] Loss_D: 0.2311 Loss_G: 1.7136 [94/200][501/549] Loss_D: 0.0374 Loss_G: 4.1025 [94/200][549/549] Loss_D: 0.0258 Loss_G: 4.1492 [95/200][ 1/549] Loss_D: 0.0140 Loss_G: 4.7239 [95/200][501/549] Loss_D: 0.0559 Loss_G: 4.3814 [95/200][549/549] Loss_D: 0.0883 Loss_G: 5.0761 [96/200][ 1/549] Loss_D: 0.1652 Loss_G: 1.9615 [96/200][501/549] Loss_D: 0.0282 Loss_G: 5.0828 [96/200][549/549] Loss_D: 0.1106 Loss_G: 2.4472 [97/200][ 1/549] Loss_D: 0.1388 Loss_G: 4.0282 [97/200][501/549] Loss_D: 0.5366 Loss_G: 5.0007 [97/200][549/549] Loss_D: 0.0202 Loss_G: 4.8210 [98/200][ 1/549] 
Loss_D: 0.0234 Loss_G: 4.4471 [98/200][501/549] Loss_D: 0.1178 Loss_G: 3.1939 [98/200][549/549] Loss_D: 0.1322 Loss_G: 4.2913 [99/200][ 1/549] Loss_D: 0.2034 Loss_G: 1.7403 [99/200][501/549] Loss_D: 0.0475 Loss_G: 3.6451 [99/200][549/549] Loss_D: 0.2296 Loss_G: 1.9866 [100/200][ 1/549] Loss_D: 0.2815 Loss_G: 1.5961 [100/200][501/549] Loss_D: 0.0442 Loss_G: 4.8189 [100/200][549/549] Loss_D: 0.1277 Loss_G: 4.8222 [101/200][ 1/549] Loss_D: 0.6803 Loss_G: 0.6855 [101/200][501/549] Loss_D: 0.0402 Loss_G: 5.0521 [101/200][549/549] Loss_D: 0.0686 Loss_G: 6.2515 [102/200][ 1/549] Loss_D: 0.0939 Loss_G: 2.7984 [102/200][501/549] Loss_D: 0.1204 Loss_G: 2.6007 [102/200][549/549] Loss_D: 0.1181 Loss_G: 5.6810 [103/200][ 1/549] Loss_D: 0.2361 Loss_G: 1.7472 [103/200][501/549] Loss_D: 0.0427 Loss_G: 4.1614 [103/200][549/549] Loss_D: 0.0504 Loss_G: 5.8122 [104/200][ 1/549] Loss_D: 0.0538 Loss_G: 3.1531 [104/200][501/549] Loss_D: 2.0043 Loss_G: 0.1088 [104/200][549/549] Loss_D: 0.0687 Loss_G: 4.7651 [105/200][ 1/549] Loss_D: 0.0604 Loss_G: 3.5815 [105/200][501/549] Loss_D: 0.1369 Loss_G: 4.1490 [105/200][549/549] Loss_D: 0.0890 Loss_G: 2.7475 [106/200][ 1/549] Loss_D: 0.1495 Loss_G: 2.4499 [106/200][501/549] Loss_D: 0.0154 Loss_G: 5.3612 [106/200][549/549] Loss_D: 0.0784 Loss_G: 3.2332 [107/200][ 1/549] Loss_D: 0.1104 Loss_G: 2.6433 [107/200][501/549] Loss_D: 1.5659 Loss_G: 0.1345 [107/200][549/549] Loss_D: 0.1050 Loss_G: 2.6811 [108/200][ 1/549] Loss_D: 0.0709 Loss_G: 3.9519 [108/200][501/549] Loss_D: 0.0201 Loss_G: 4.5984 [108/200][549/549] Loss_D: 0.0284 Loss_G: 7.5778 [109/200][ 1/549] Loss_D: 0.0402 Loss_G: 3.6348 [109/200][501/549] Loss_D: 0.2203 Loss_G: 6.5892 [109/200][549/549] Loss_D: 0.0646 Loss_G: 4.8339 [110/200][ 1/549] Loss_D: 0.1203 Loss_G: 2.1755 [110/200][501/549] Loss_D: 0.2087 Loss_G: 1.8776 [110/200][549/549] Loss_D: 0.0963 Loss_G: 4.9759 [111/200][ 1/549] Loss_D: 1.2461 Loss_G: 0.2730 [111/200][501/549] Loss_D: 0.0719 Loss_G: 5.2483 [111/200][549/549] Loss_D: 
0.0291 Loss_G: 5.4161 [112/200][ 1/549] Loss_D: 0.0276 Loss_G: 4.1824 [112/200][501/549] Loss_D: 0.0478 Loss_G: 4.4935 [112/200][549/549] Loss_D: 0.0399 Loss_G: 4.4753 [113/200][ 1/549] Loss_D: 0.0474 Loss_G: 5.7254 [113/200][501/549] Loss_D: 0.2062 Loss_G: 3.2870 [113/200][549/549] Loss_D: 0.0296 Loss_G: 3.9117 [114/200][ 1/549] Loss_D: 0.0317 Loss_G: 4.0872 [114/200][501/549] Loss_D: 0.0141 Loss_G: 5.2961 [114/200][549/549] Loss_D: 0.1965 Loss_G: 2.2977 [115/200][ 1/549] Loss_D: 0.2078 Loss_G: 2.9888 [115/200][501/549] Loss_D: 0.0288 Loss_G: 4.0424 [115/200][549/549] Loss_D: 0.0101 Loss_G: 5.6805 [116/200][ 1/549] Loss_D: 0.0227 Loss_G: 5.2681 [116/200][501/549] Loss_D: 0.0855 Loss_G: 3.3251 [116/200][549/549] Loss_D: 0.0549 Loss_G: 4.5450 [117/200][ 1/549] Loss_D: 0.0361 Loss_G: 4.0229 [117/200][501/549] Loss_D: 0.0300 Loss_G: 4.6825 [117/200][549/549] Loss_D: 0.0535 Loss_G: 3.4068 [118/200][ 1/549] Loss_D: 0.0677 Loss_G: 3.5772 [118/200][501/549] Loss_D: 0.0059 Loss_G: 6.3412 [118/200][549/549] Loss_D: 0.0963 Loss_G: 4.4405 [119/200][ 1/549] Loss_D: 0.0630 Loss_G: 5.0424 [119/200][501/549] Loss_D: 0.0352 Loss_G: 5.1691 [119/200][549/549] Loss_D: 0.0080 Loss_G: 6.2851 [120/200][ 1/549] Loss_D: 0.0108 Loss_G: 5.3682 [120/200][501/549] Loss_D: 0.0292 Loss_G: 5.7229 [120/200][549/549] Loss_D: 0.0031 Loss_G: 7.5459 [121/200][ 1/549] Loss_D: 0.0018 Loss_G: 7.1893 [121/200][501/549] Loss_D: 0.0211 Loss_G: 4.6909 [121/200][549/549] Loss_D: 0.0255 Loss_G: 4.5894 [122/200][ 1/549] Loss_D: 0.1269 Loss_G: 2.4639 [122/200][501/549] Loss_D: 0.0168 Loss_G: 5.4434 [122/200][549/549] Loss_D: 0.0961 Loss_G: 3.4046 [123/200][ 1/549] Loss_D: 0.1574 Loss_G: 3.3845 [123/200][501/549] Loss_D: 0.0390 Loss_G: 4.3531 [123/200][549/549] Loss_D: 0.0439 Loss_G: 3.7912 [124/200][ 1/549] Loss_D: 0.0812 Loss_G: 3.1290 [124/200][501/549] Loss_D: 0.0792 Loss_G: 3.6031 [124/200][549/549] Loss_D: 0.2097 Loss_G: 2.7773 [125/200][ 1/549] Loss_D: 0.1206 Loss_G: 2.6864 [125/200][501/549] Loss_D: 
0.0994 Loss_G: 4.2767 [125/200][549/549] Loss_D: 0.0862 Loss_G: 4.2990 [126/200][ 1/549] Loss_D: 0.1475 Loss_G: 4.4362 [126/200][501/549] Loss_D: 0.0956 Loss_G: 2.9088 [126/200][549/549] Loss_D: 0.0784 Loss_G: 3.2672 [127/200][ 1/549] Loss_D: 0.0667 Loss_G: 3.1276 [127/200][501/549] Loss_D: 0.0593 Loss_G: 5.1181 [127/200][549/549] Loss_D: 0.0231 Loss_G: 5.1231 [128/200][ 1/549] Loss_D: 0.0099 Loss_G: 5.8862 [128/200][501/549] Loss_D: 0.0993 Loss_G: 3.1930 [128/200][549/549] Loss_D: 0.0120 Loss_G: 4.6784 [129/200][ 1/549] Loss_D: 0.0294 Loss_G: 4.4950 [129/200][501/549] Loss_D: 0.0961 Loss_G: 2.7850 [129/200][549/549] Loss_D: 0.0488 Loss_G: 4.2303 [130/200][ 1/549] Loss_D: 0.2489 Loss_G: 1.8707 [130/200][501/549] Loss_D: 0.0497 Loss_G: 4.6631 [130/200][549/549] Loss_D: 0.0122 Loss_G: 5.4436 [131/200][ 1/549] Loss_D: 0.0222 Loss_G: 5.9528 [131/200][501/549] Loss_D: 0.0081 Loss_G: 6.6881 [131/200][549/549] Loss_D: 0.0430 Loss_G: 3.7047 [132/200][ 1/549] Loss_D: 0.3198 Loss_G: 1.5745 [132/200][501/549] Loss_D: 0.0327 Loss_G: 4.7865 [132/200][549/549] Loss_D: 0.0316 Loss_G: 4.7662 [133/200][ 1/549] Loss_D: 0.0672 Loss_G: 5.4749 [133/200][501/549] Loss_D: 0.0124 Loss_G: 7.7918 [133/200][549/549] Loss_D: 0.0541 Loss_G: 4.4108 [134/200][ 1/549] Loss_D: 0.1942 Loss_G: 1.9203 [134/200][501/549] Loss_D: 0.3104 Loss_G: 8.1072 [134/200][549/549] Loss_D: 0.0261 Loss_G: 7.3222 [135/200][ 1/549] Loss_D: 0.0065 Loss_G: 5.6847 [135/200][501/549] Loss_D: 0.0219 Loss_G: 5.1363 [135/200][549/549] Loss_D: 0.0120 Loss_G: 5.1785 [136/200][ 1/549] Loss_D: 0.0166 Loss_G: 4.6034 [136/200][501/549] Loss_D: 0.0266 Loss_G: 4.3288 [136/200][549/549] Loss_D: 0.0225 Loss_G: 5.6862 [137/200][ 1/549] Loss_D: 0.0261 Loss_G: 4.1803 [137/200][501/549] Loss_D: 0.0441 Loss_G: 6.0091 [137/200][549/549] Loss_D: 0.2349 Loss_G: 3.2408 [138/200][ 1/549] Loss_D: 0.2589 Loss_G: 1.7933 [138/200][501/549] Loss_D: 0.1926 Loss_G: 2.3397 [138/200][549/549] Loss_D: 0.1333 Loss_G: 2.9139 [139/200][ 1/549] Loss_D: 
0.1251 Loss_G: 3.5228 [139/200][501/549] Loss_D: 0.0115 Loss_G: 6.0259 [139/200][549/549] Loss_D: 0.0036 Loss_G: 7.2037 [140/200][ 1/549] Loss_D: 0.0148 Loss_G: 6.5842 [140/200][501/549] Loss_D: 0.0096 Loss_G: 5.4448 [140/200][549/549] Loss_D: 0.0633 Loss_G: 3.3144 [141/200][ 1/549] Loss_D: 0.1099 Loss_G: 5.2266 [141/200][501/549] Loss_D: 0.0495 Loss_G: 4.0772 [141/200][549/549] Loss_D: 0.0496 Loss_G: 5.8312 [142/200][ 1/549] Loss_D: 0.0587 Loss_G: 3.2091 [142/200][501/549] Loss_D: 0.0041 Loss_G: 7.1615 [142/200][549/549] Loss_D: 0.0009 Loss_G: 7.3294 [143/200][ 1/549] Loss_D: 0.0044 Loss_G: 5.7795 [143/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [143/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [144/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [144/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [144/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [145/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [145/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [145/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [146/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [146/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [146/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [147/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [147/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [147/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [148/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [148/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [148/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [149/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [149/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [149/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [150/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [150/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [150/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [151/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [151/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [151/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [152/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [152/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [152/200][549/549] Loss_D: 
0.0000 Loss_G:27.6310 [153/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [153/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [153/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [154/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [154/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [154/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [155/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [155/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [155/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [156/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [156/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [156/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [157/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [157/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [157/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [158/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [158/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [158/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [159/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [159/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [159/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [160/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [160/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [160/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [161/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [161/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [161/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [162/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [162/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [162/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [163/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [163/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [163/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [164/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [164/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [164/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [165/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [165/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [165/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [166/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [166/200][501/549] Loss_D: 
0.0000 Loss_G:27.6310 [166/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [167/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [167/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [167/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [168/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [168/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [168/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [169/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [169/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [169/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [170/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [170/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [170/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [171/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [171/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [171/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [172/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [172/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [172/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [173/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [173/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [173/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [174/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [174/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [174/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [175/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [175/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [175/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [176/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [176/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [176/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [177/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [177/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [177/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [178/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [178/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [178/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [179/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [179/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [179/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [180/200][ 1/549] Loss_D: 
0.0000 Loss_G:27.6310 [180/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [180/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [181/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [181/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [181/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [182/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [182/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [182/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [183/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [183/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [183/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [184/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [184/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [184/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [185/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [185/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [185/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [186/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [186/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [186/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [187/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [187/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [187/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [188/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [188/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [188/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [189/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [189/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [189/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [190/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [190/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [190/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [191/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [191/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [191/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [192/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [192/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [192/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [193/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [193/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [193/200][549/549] Loss_D: 
0.0000 Loss_G:27.6310 [194/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [194/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [194/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [195/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [195/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [195/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [196/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [196/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [196/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [197/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [197/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [197/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [198/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [198/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [198/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [199/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [199/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [199/200][549/549] Loss_D: 0.0000 Loss_G:27.6310 [200/200][ 1/549] Loss_D: 0.0000 Loss_G:27.6310 [200/200][501/549] Loss_D: 0.0000 Loss_G:27.6310 [200/200][549/549] Loss_D: 0.0000 Loss_G:27.6310

CPU times: user 6h 18min 32s, sys: 1h 56min 9s, total: 8h 14min 41s
Wall time: 49min 9s
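From epoch 143 onward, the log above stays fixed at Loss_D = 0.0000 and Loss_G = 27.6310. That constant matches -ln(1e-12) to four decimal places, which is what a binary cross-entropy loss would report if the discriminator assigned generated images a probability of essentially 0 and the logarithm were clamped at 1e-12. The clamp value `eps` below is an assumption inferred from the number itself; the tutorial does not state it. A minimal arithmetic check:

```python
import math

# Assumed clamp on the probability inside the log (inferred from the log value,
# not taken from the tutorial or from any library documentation).
eps = 1e-12

# Generator loss when the discriminator outputs ~0 for every generated image:
# BCE against the "real" label 1 reduces to -log(D(G(z))), here -log(eps).
saturated_loss_g = -math.log(eps)

print(f"{saturated_loss_g:.4f}")  # 27.6310
```

If this reading is right, the flat tail of the log suggests that the discriminator saturated and the generator stopped receiving a useful training signal, so images from the later epochs are worth inspecting before the final checkpoint is trusted.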

Results

Run the following code to plot the discriminator (D) and generator (G) losses against the training iterations:

plt.figure(figsize=(10, 5))
plt.title("Generator and Discriminator Loss During Training")
plt.plot(G_losses, label="G", color='blue')
plt.plot(D_losses, label="D", color='orange')
plt.xlabel("iterations")
plt.ylabel("Loss")
plt.legend()
plt.show()

Visualize the images generated from the latent vector fixed_noise during training.

import matplotlib.pyplot as plt
import matplotlib.animation as animation
import numpy as np

def showGif(image_list):
    show_list = []
    fig = plt.figure(figsize=(8, 3), dpi=120)
    for epoch in range(len(image_list)):
        # Tile the first 24 images of this epoch into a grid of 3 rows by 8 columns
        images = []
        for i in range(3):
            row = np.concatenate((image_list[epoch][i * 8:(i + 1) * 8]), axis=1)
            images.append(row)
        img = np.clip(np.concatenate((images[:]), axis=0), 0, 1)
        plt.axis("off")
        show_list.append([plt.imshow(img)])

    # One frame per epoch, saved as an animated GIF
    ani = animation.ArtistAnimation(fig, show_list, interval=1000, repeat_delay=1000, blit=True)
    ani.save('./dcgan.gif', writer='pillow', fps=1)

showGif(image_list)

# Load the model parameters from the checkpoint file into the network

mindspore.load_checkpoint("./generator.ckpt", generator)

fixed_noise = ops.standard_normal((batch_size, nz, 1, 1))
img64 = generator(fixed_noise).transpose(0, 2, 3, 1).asnumpy()

fig = plt.figure(figsize=(8, 3), dpi=120)
images = []
for i in range(3):
    images.append(np.concatenate((img64[i * 8:(i + 1) * 8]), axis=1))
img = np.clip(np.concatenate((images[:]), axis=0), 0, 1)
plt.axis("off")
plt.imshow(img)
plt.show()
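Both visualization cells above build the preview the same way: they take the first 24 images of a batch in (N, H, W, C) layout, join each group of 8 side by side along the width axis, and then stack the 3 resulting strips along the height axis. The sketch below isolates that tiling step with random data so the shapes can be checked without a trained generator. The helper name `tile_images` and the dummy batch are illustrative assumptions, not part of the tutorial.

```python
import numpy as np

def tile_images(batch, rows=3, cols=8):
    """Tile the first rows*cols images of an (N, H, W, C) batch into one grid image.

    Hypothetical helper that mirrors the concatenate logic used in the tutorial cells.
    """
    needed = rows * cols
    if batch.shape[0] < needed:
        raise ValueError(f"need at least {needed} images, got {batch.shape[0]}")
    # Joining `cols` images along axis=1 (width) gives one strip of shape (H, cols*W, C).
    strips = [np.concatenate(batch[r * cols:(r + 1) * cols], axis=1) for r in range(rows)]
    # Stacking the strips along axis=0 (height) gives the (rows*H, cols*W, C) grid.
    grid = np.concatenate(strips, axis=0)
    # Clip to [0, 1] so the result is valid float input for plt.imshow.
    return np.clip(grid, 0, 1)

# Stand-in for generator output: 24 random 64x64 RGB images in [0, 1).
dummy = np.random.rand(24, 64, 64, 3).astype(np.float32)
grid = tile_images(dummy)
print(grid.shape)  # (192, 512, 3)
```

Image k of the batch ends up in grid row k // 8 and grid column k % 8, which is why the batch must contain at least 24 images for the 3 by 8 layout.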
