【Lesson 6】: 【Building Agent Applications with Lagent & AgentLego】

1. Agent theory and an introduction to the open-source Lagent & AgentLego projects

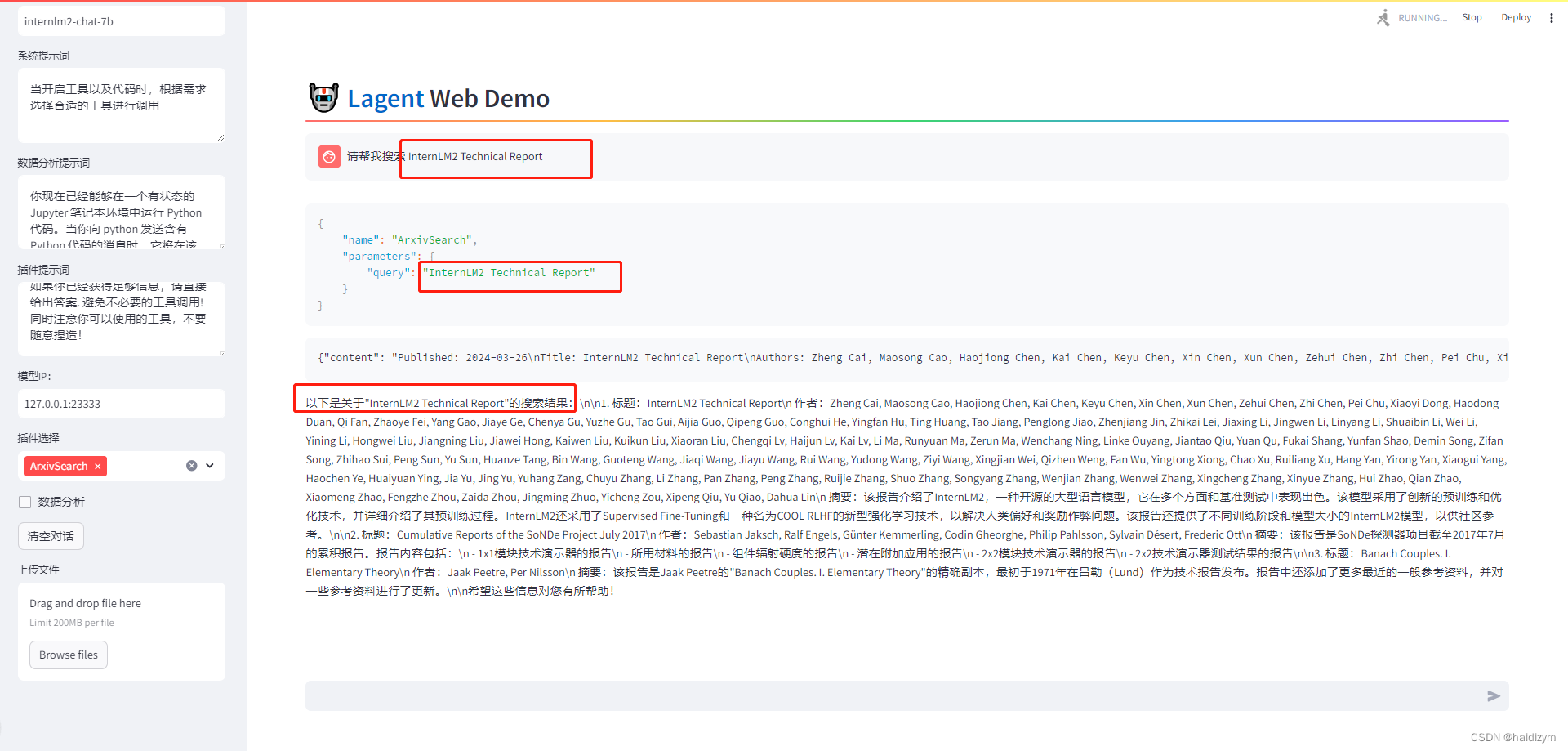

2. Hands-on: calling the existing Arxiv paper-search tool from Lagent

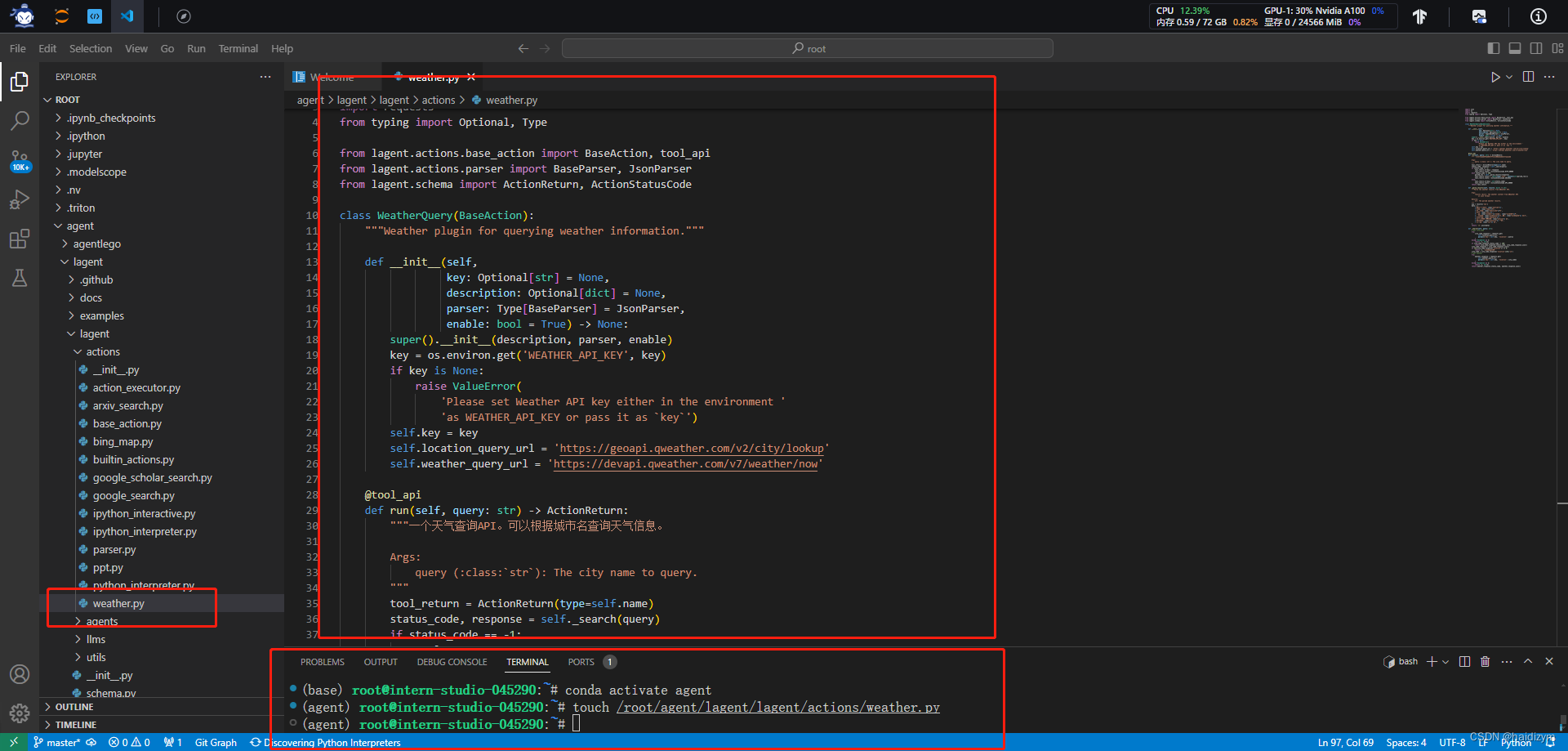

3. Hands-on: adding a custom tool to Lagent (a weather-query tool as the example)

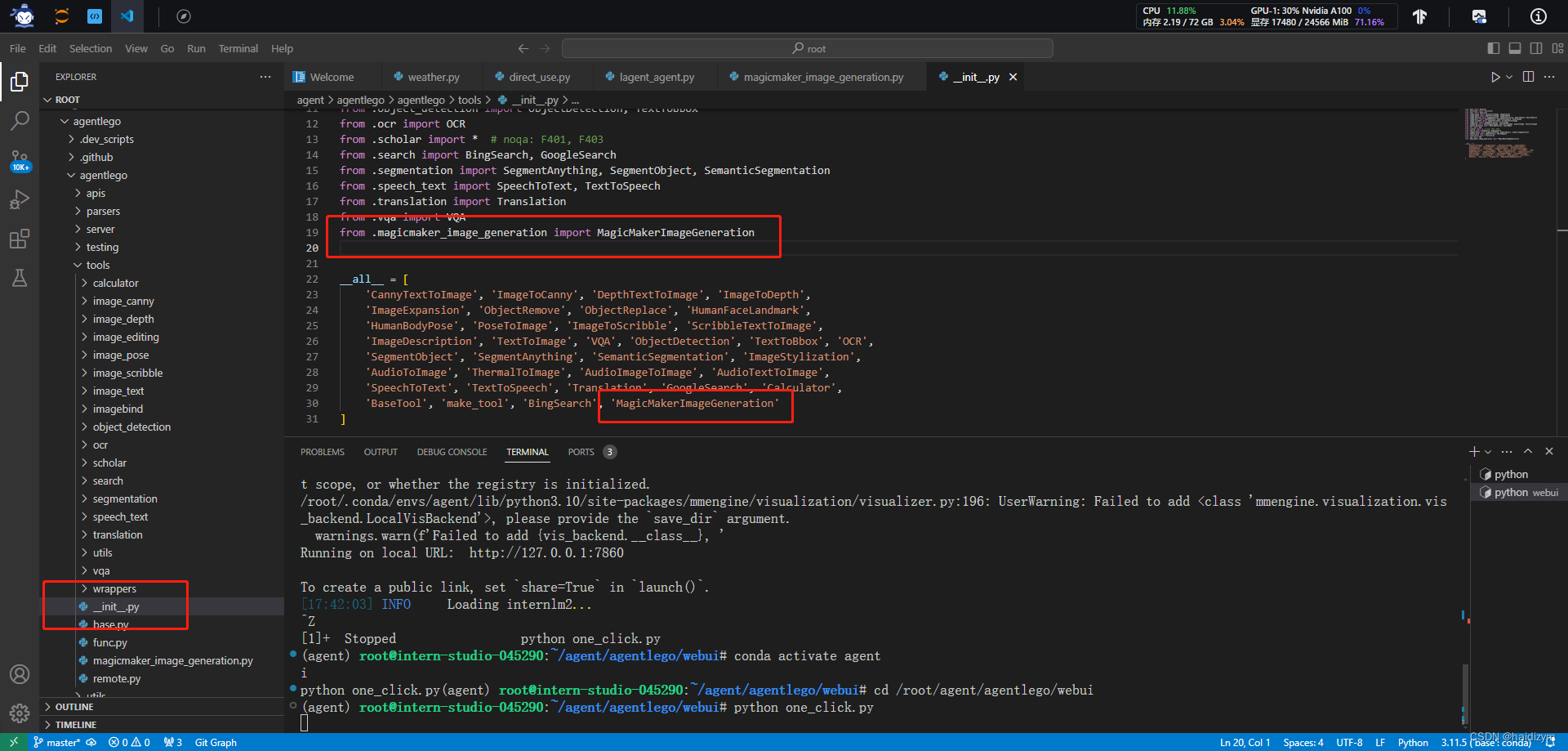

4. Hands-on: adding the MagicMaker text-to-image tool to AgentLego

【Video】: https://www.bilibili.com/video/BV1Xt4217728/

【Course docs】: https://github.com/InternLM/Tutorial/tree/camp2/agent

【Homework】: https://github.com/InternLM/Tutorial/blob/camp2/agent/homework.md

【Platform】: https://studio.intern-ai.org.cn/console/instance/

【Lagent docs】: https://github.com/InternLM/Tutorial/blob/camp2/agent/lagent.md

【AgentLego docs】: https://github.com/InternLM/Tutorial/blob/camp2/agent/agentlego.md

【Lagent custom tools】: https://lagent.readthedocs.io/zh-cn/latest/tutorials/action.html

【AgentLego custom tools】: https://agentlego.readthedocs.io/zh-cn/latest/modules/tool.html

Basic homework

Lagent + ArxivSearch (using the tool directly)

Complete the Lagent Web Demo and upload screenshots with your homework. See the Lagent Web Demo document.

Environment setup

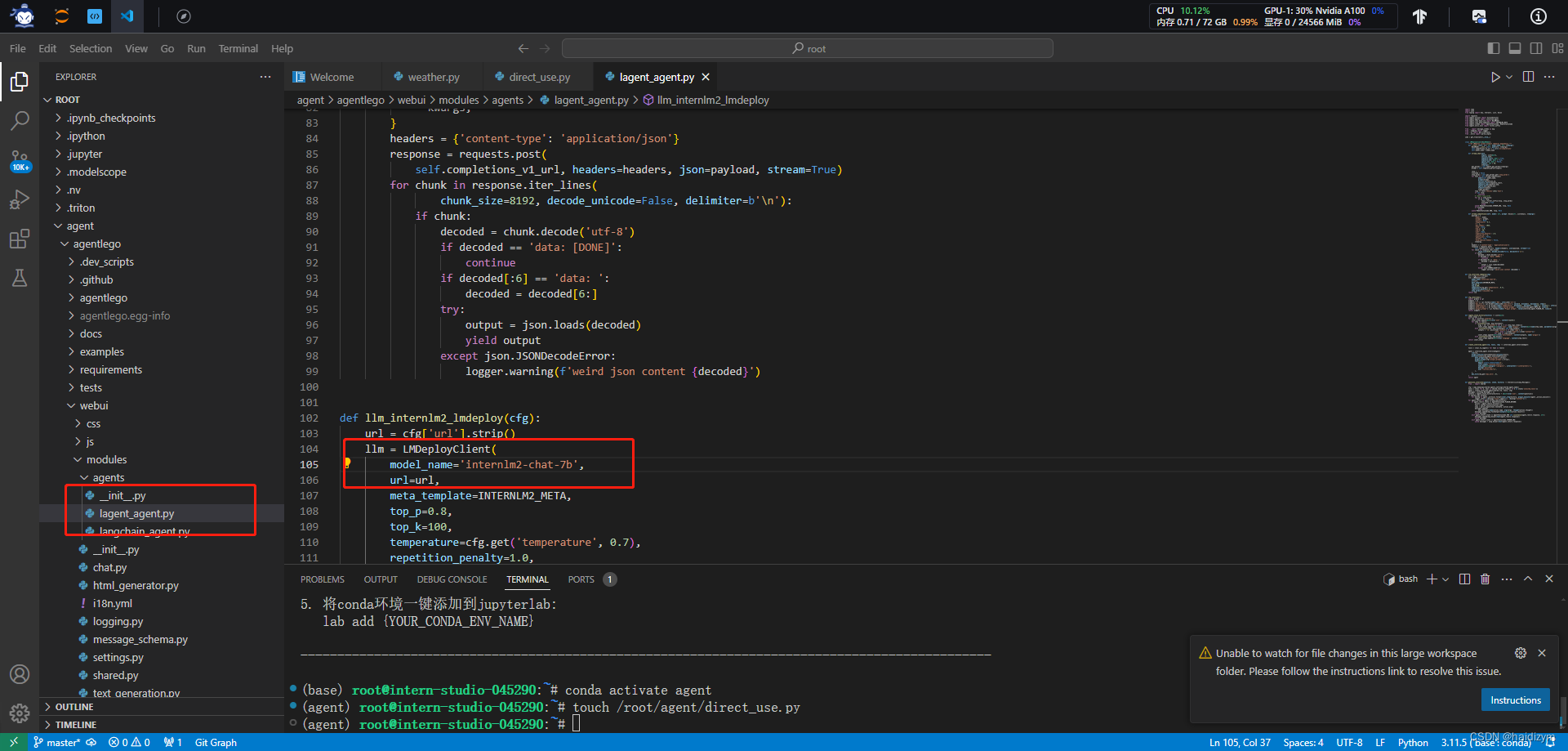

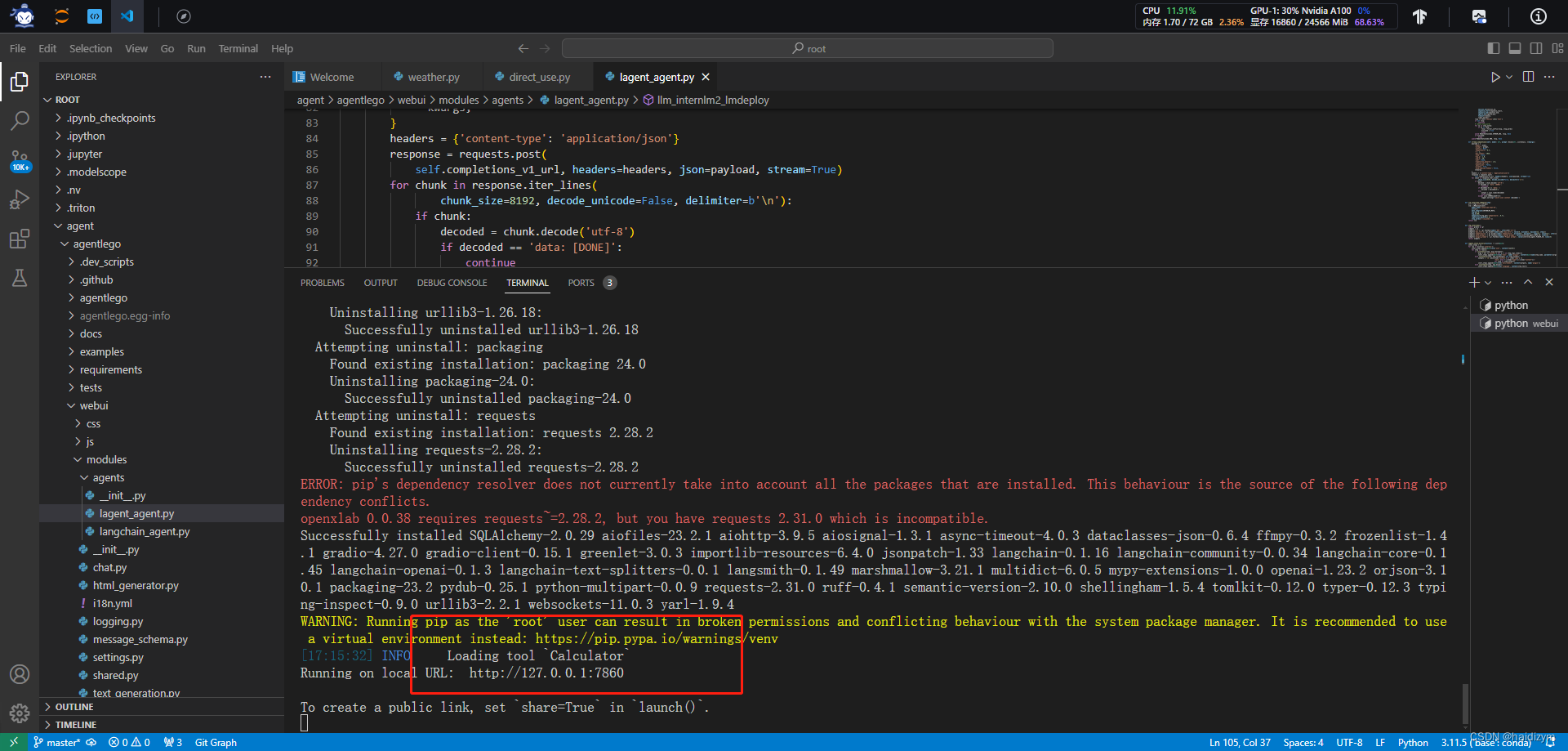

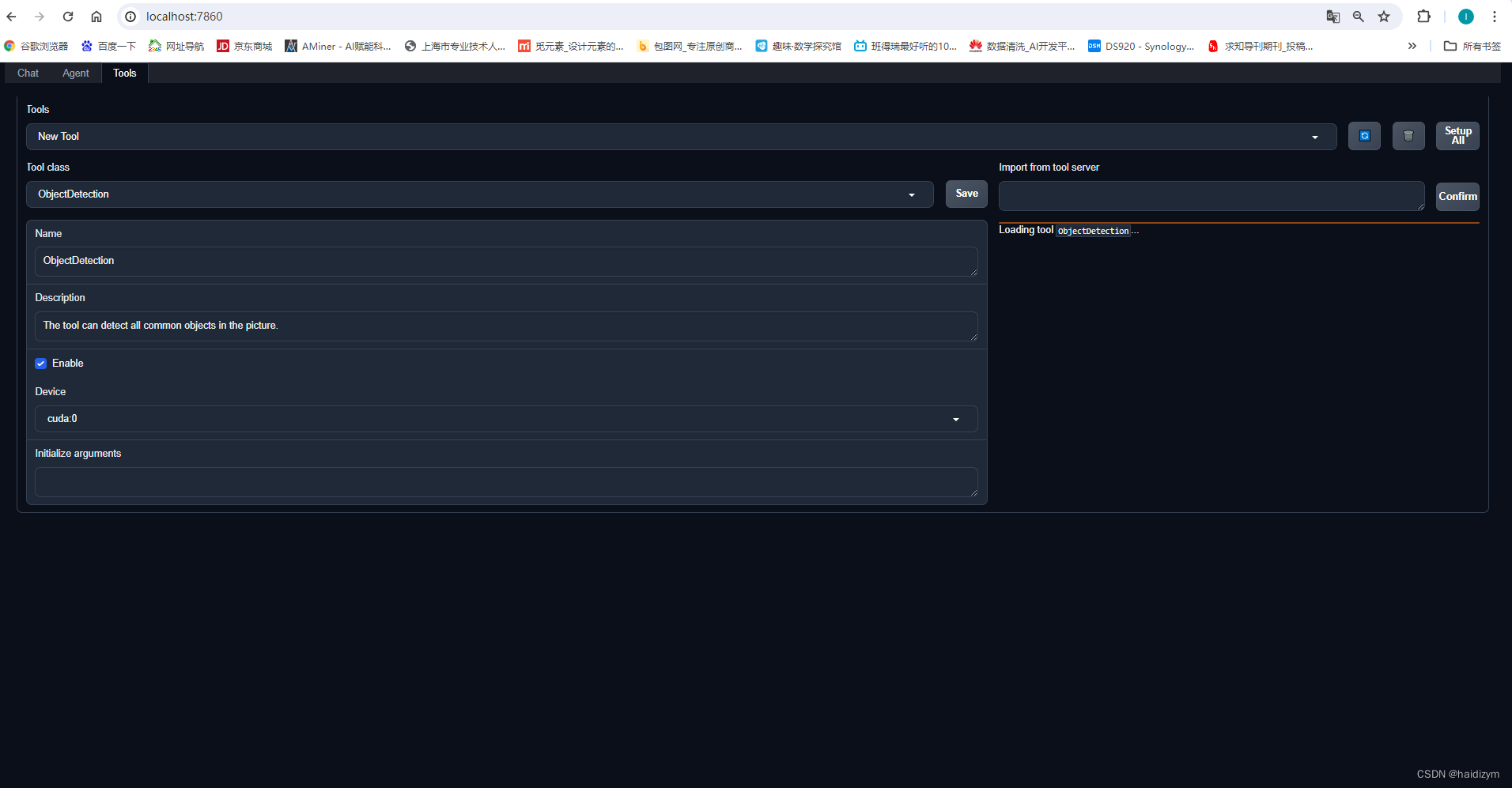

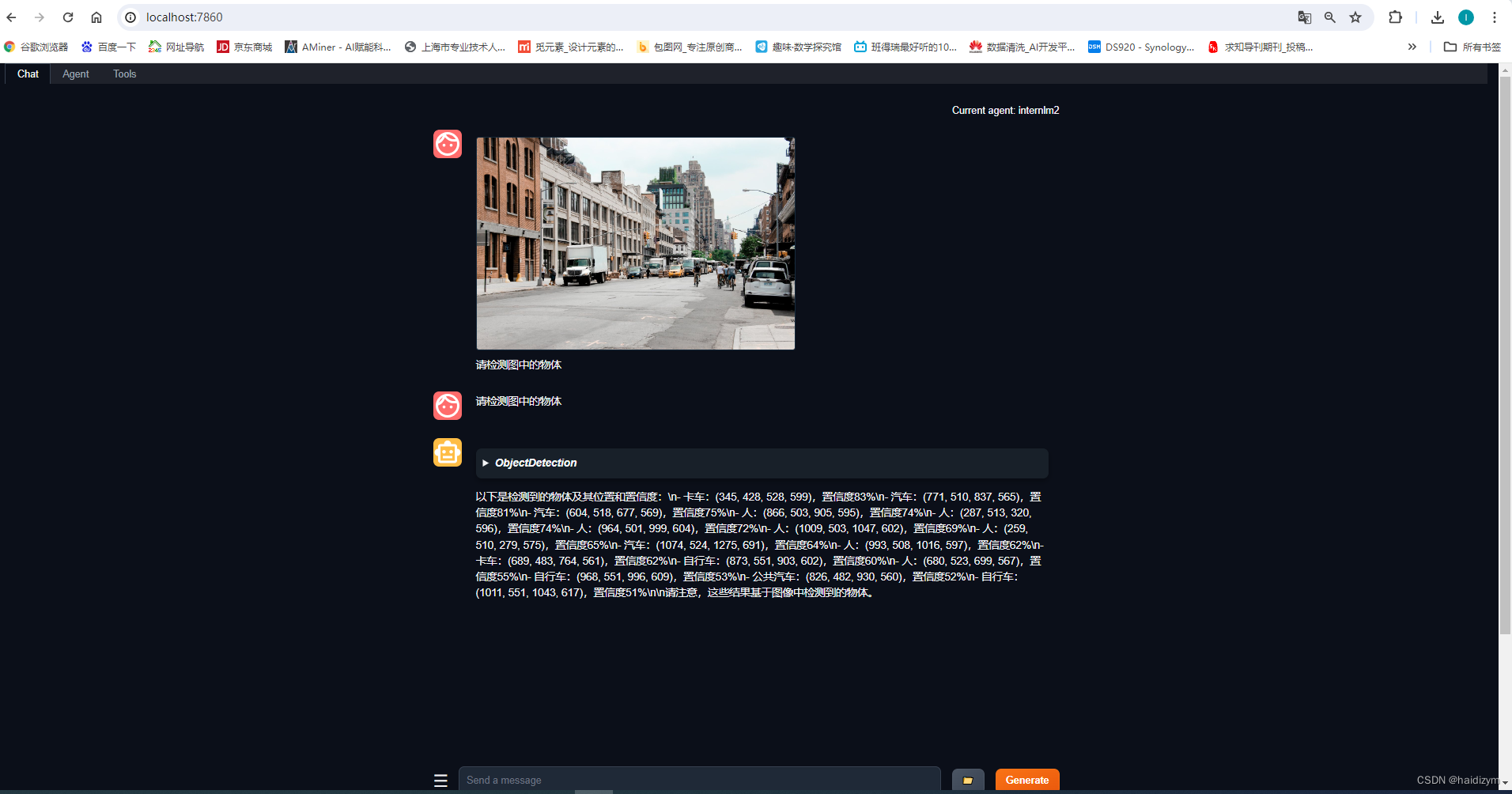

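Lagent + AgentLego + ObjectDetection (using the tool directly)

Complete the direct-use part of AgentLego and upload screenshots with your homework. See the Using AgentLego Directly document.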

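Advanced homework

Complete the AgentLego WebUI part and upload screenshots with your homework. See the AgentLego WebUI document.

Implement a custom tool with Lagent or AgentLego, call it, and upload screenshots with your homework. See:

Custom tools with Lagent

Custom tools with AgentLego

Lagent + weatherQuery (custom tool)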

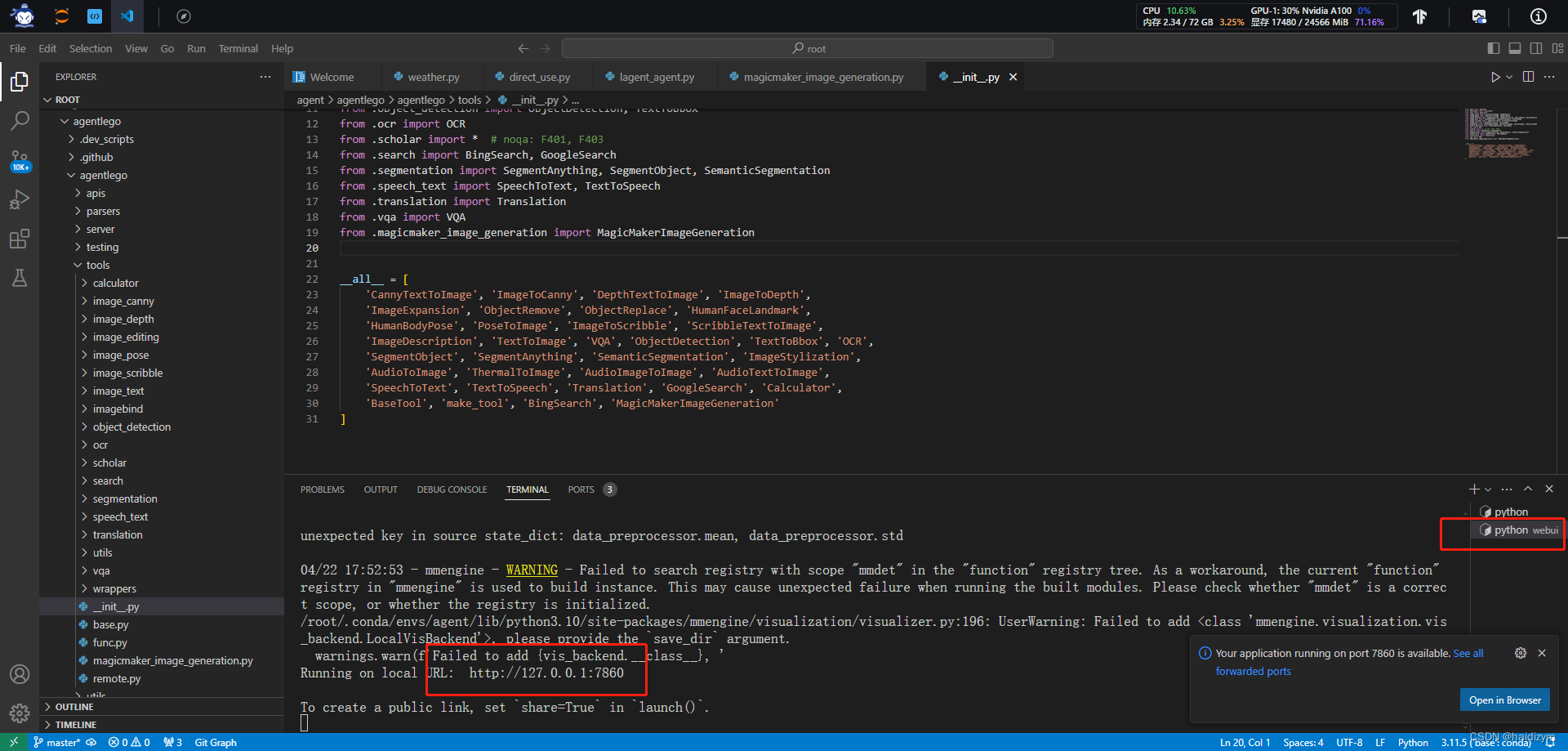

Lagent + AgentLego + MagicMakerImageGeneration (custom tool)

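(Note: at first I left out `from .magicmaker_image_generation import MagicMakerImageGeneration`, so MagicMakerImageGeneration did not appear in the tool list and I had to redo everything from scratch. Note that two places need to be changed here.)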

(Note: generation only works correctly when exactly one tool is selected.)
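Assuming, as in the course's AgentLego tutorial, that the two edits are the import line and adding the class name to `__all__` in `agentlego/tools/__init__.py`, a quick check like the one below can catch a missing registration before launching the WebUI. This is a sketch; `is_tool_registered` is a hypothetical helper, not part of AgentLego.

```python
import types


def is_tool_registered(package: types.ModuleType, tool_name: str) -> bool:
    """Return True if `tool_name` is both an attribute of `package`
    (i.e. the `from .module import Tool` line ran) and listed in
    `package.__all__` (the second edit)."""
    exported = getattr(package, "__all__", [])
    return hasattr(package, tool_name) and tool_name in exported
```

With AgentLego installed, `import agentlego.tools as tools` followed by `is_tool_registered(tools, 'MagicMakerImageGeneration')` should return `True` once both edits are in place.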

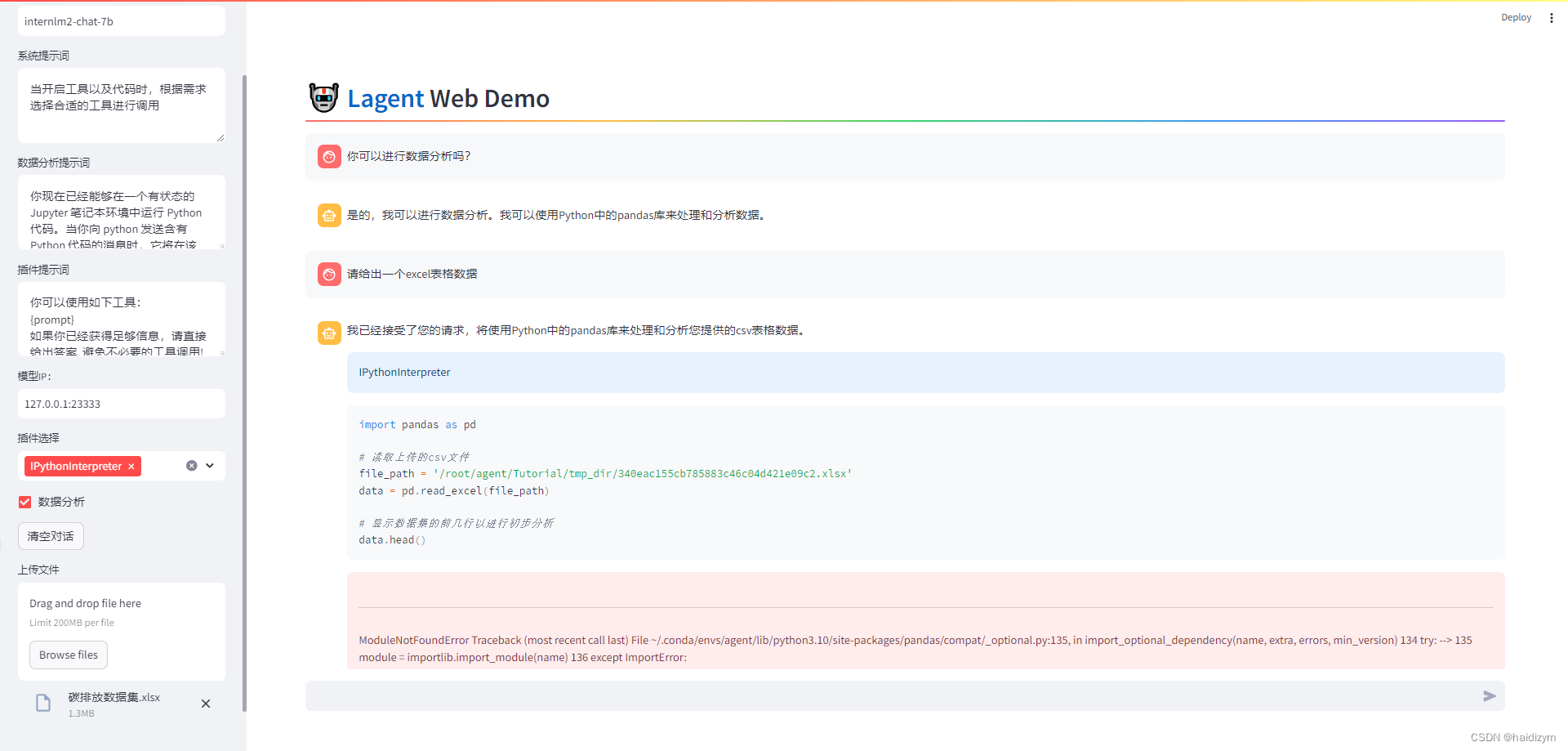

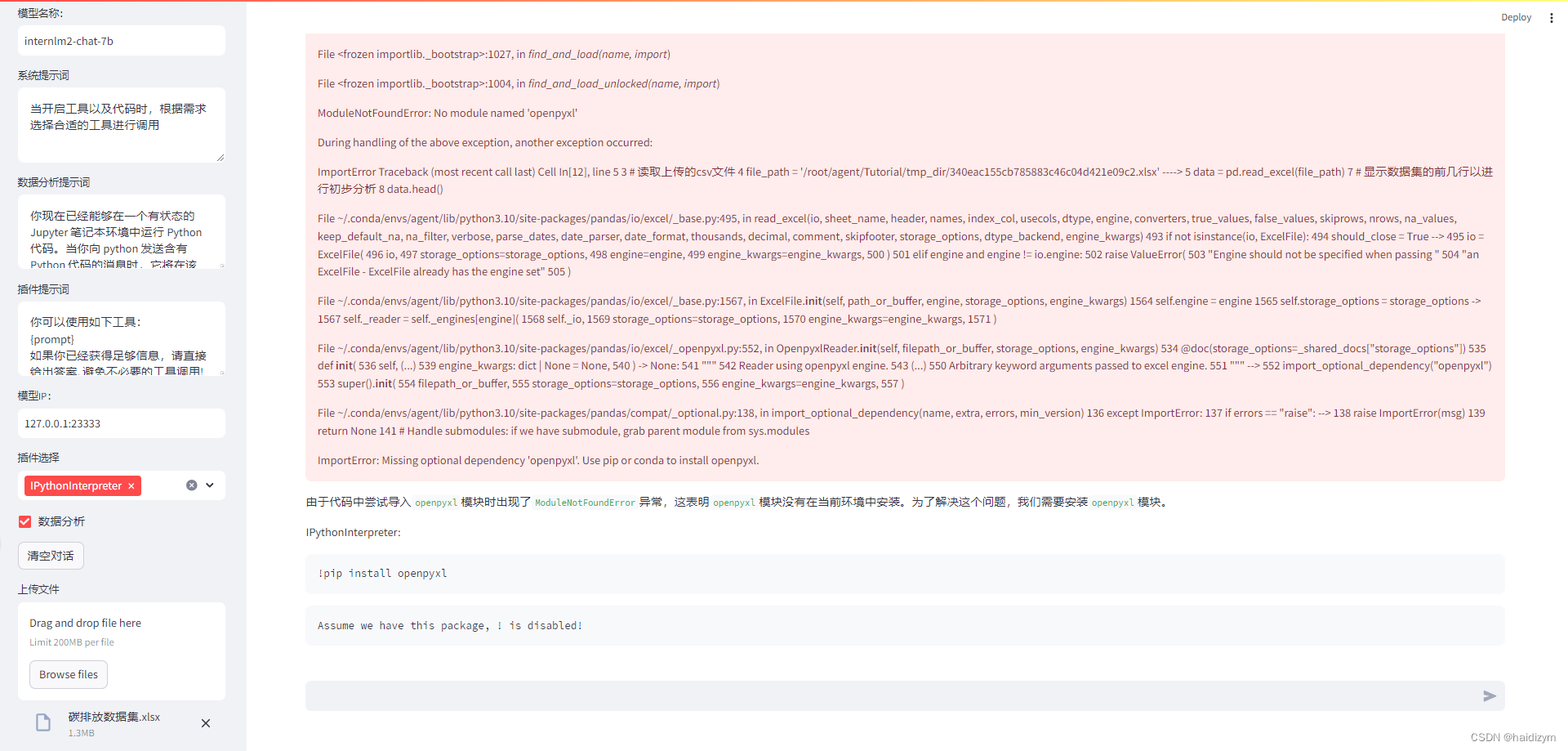

Lagent + IPythonInterpreter

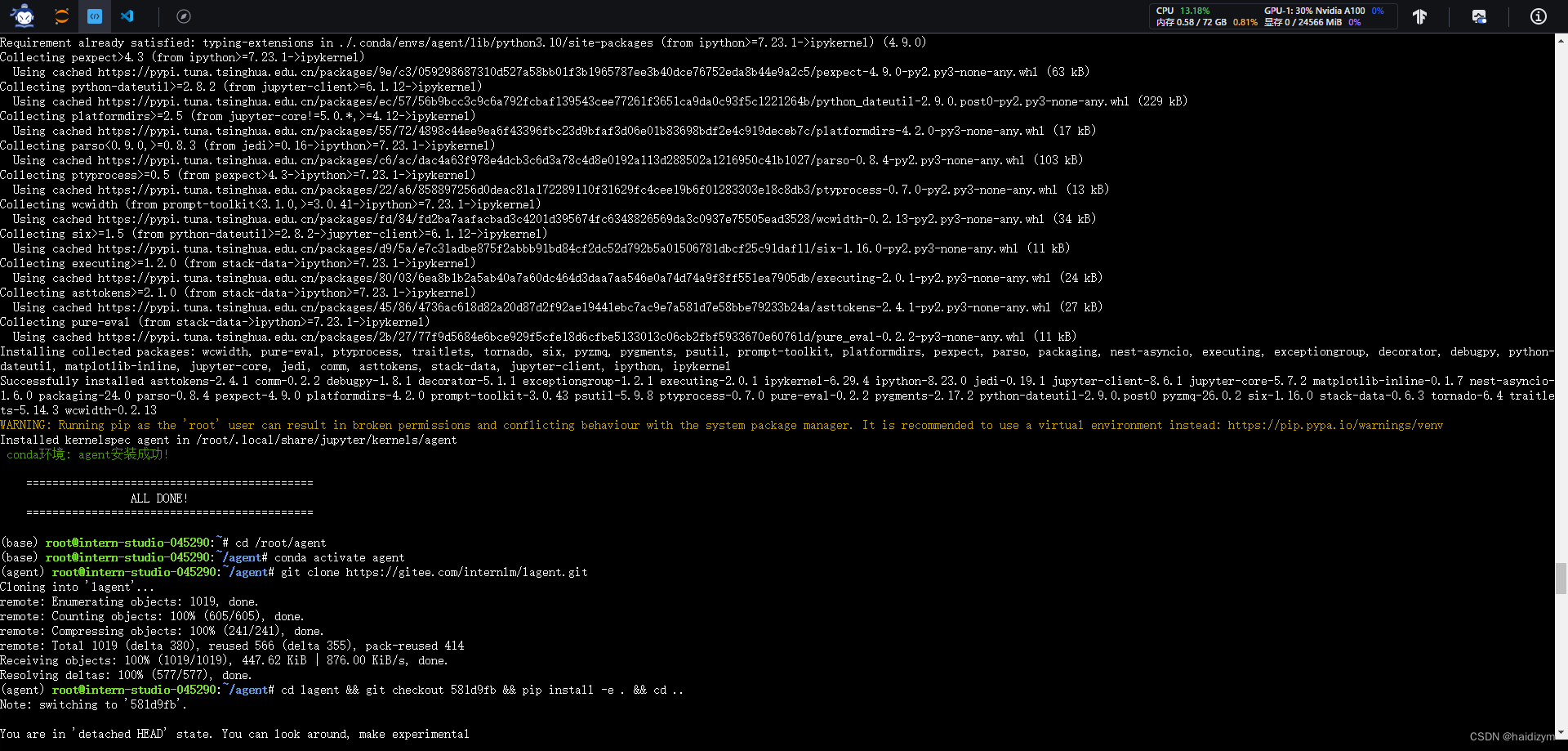

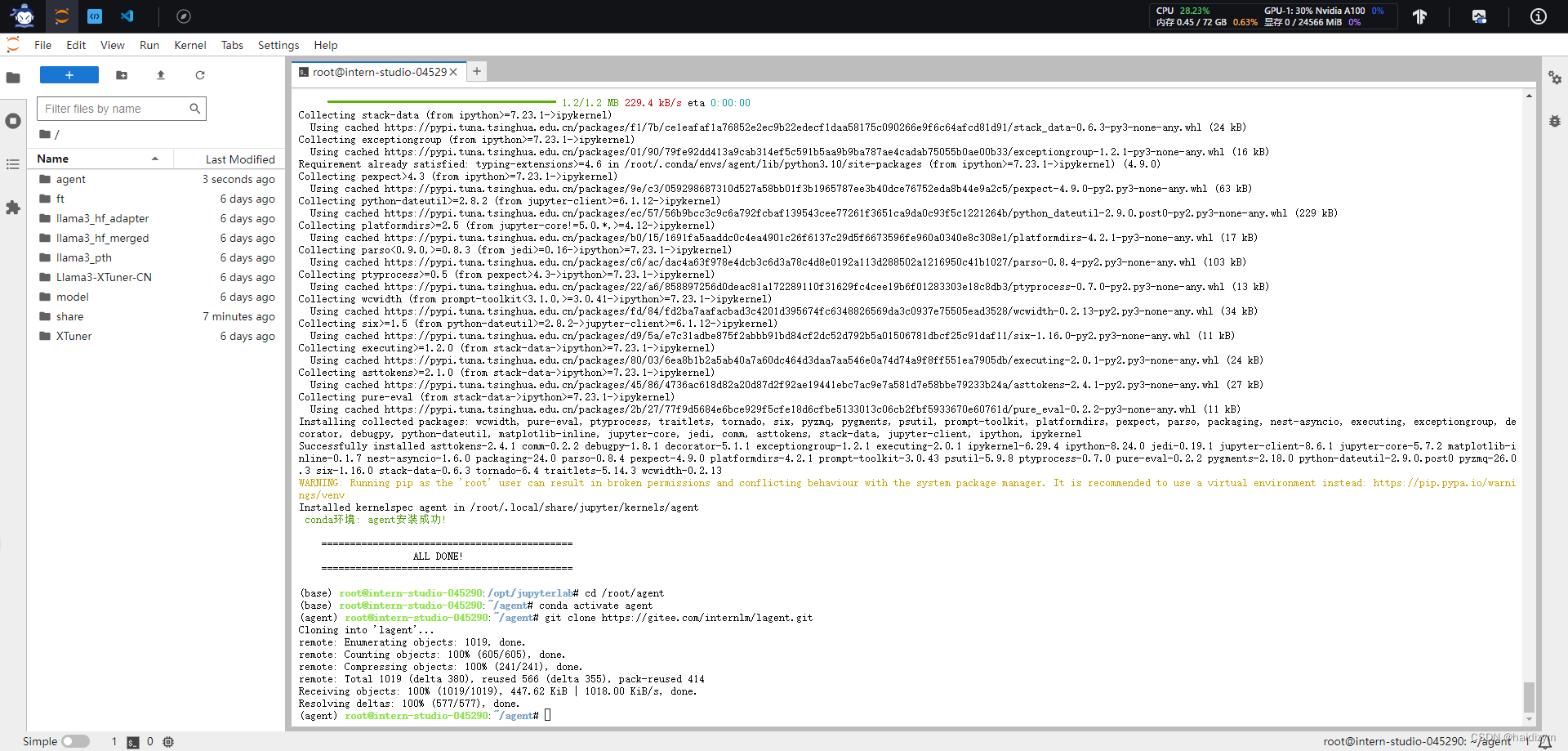

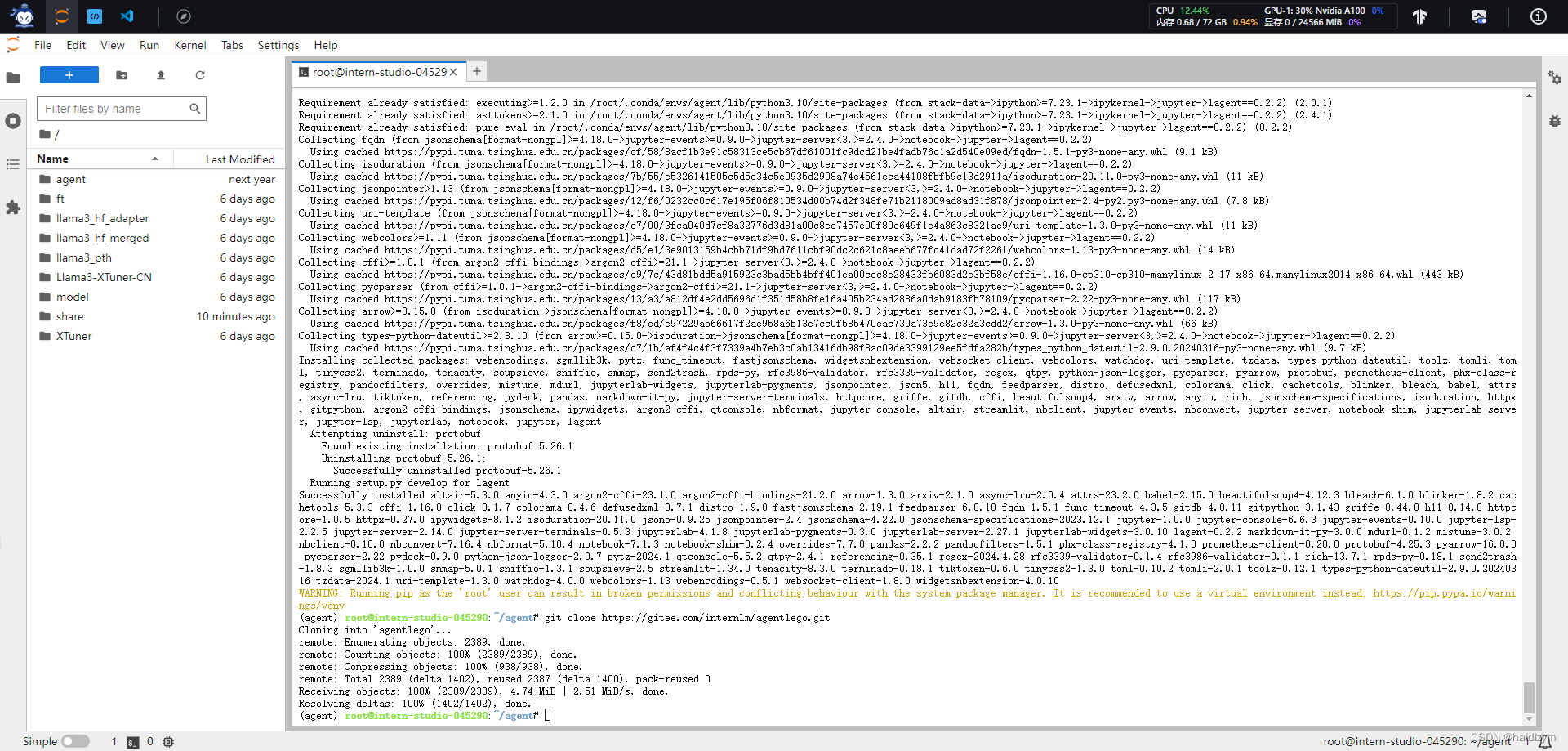

Overall environment setup:

```bash
# Delete the environment and start over
bash
conda remove --name agent --all
```

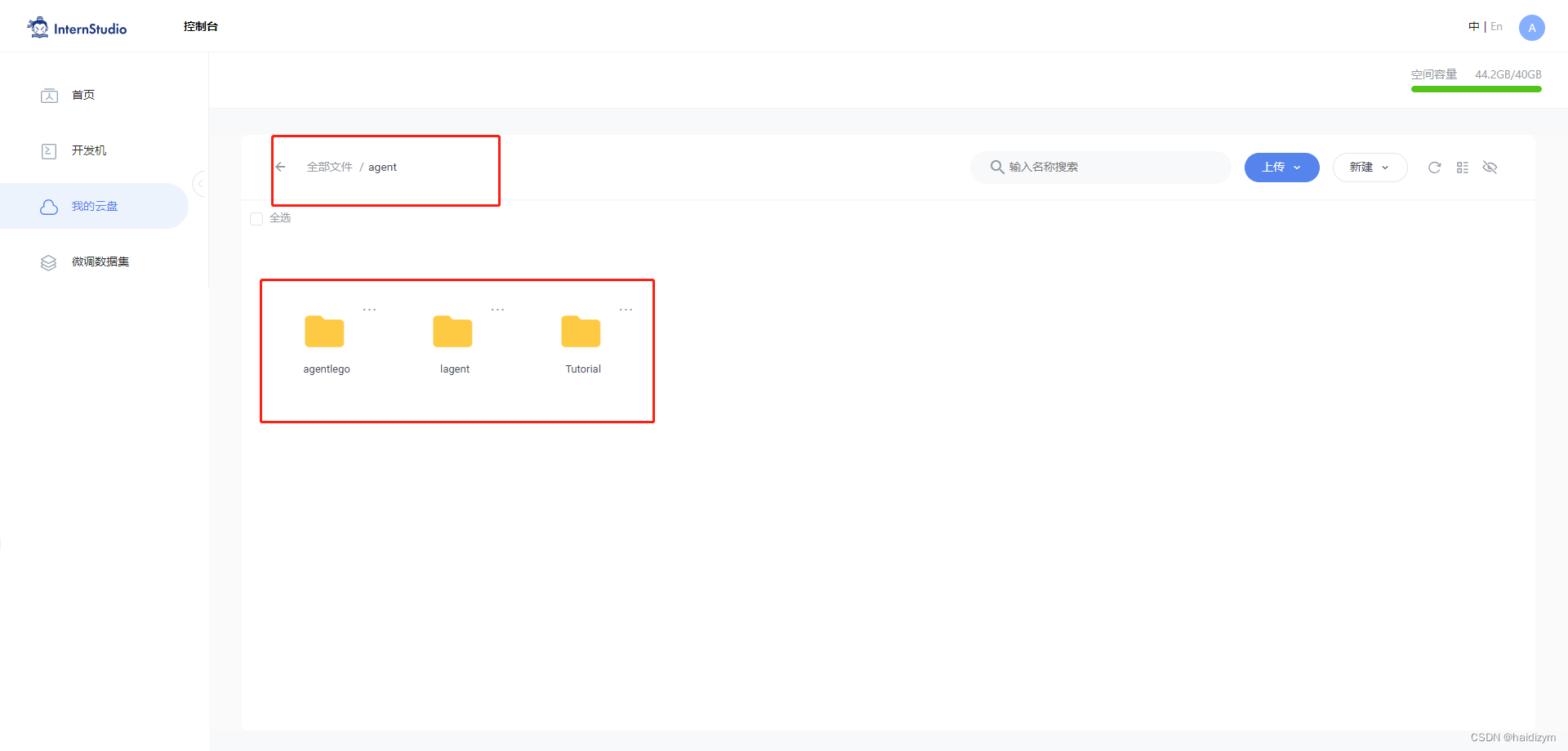

```bash
# Delete the agent folder on the cloud disk, then recreate it
mkdir -p /root/agent
studio-conda -t agent -o pytorch-2.1.2
cd /root/agent
conda activate agent
```

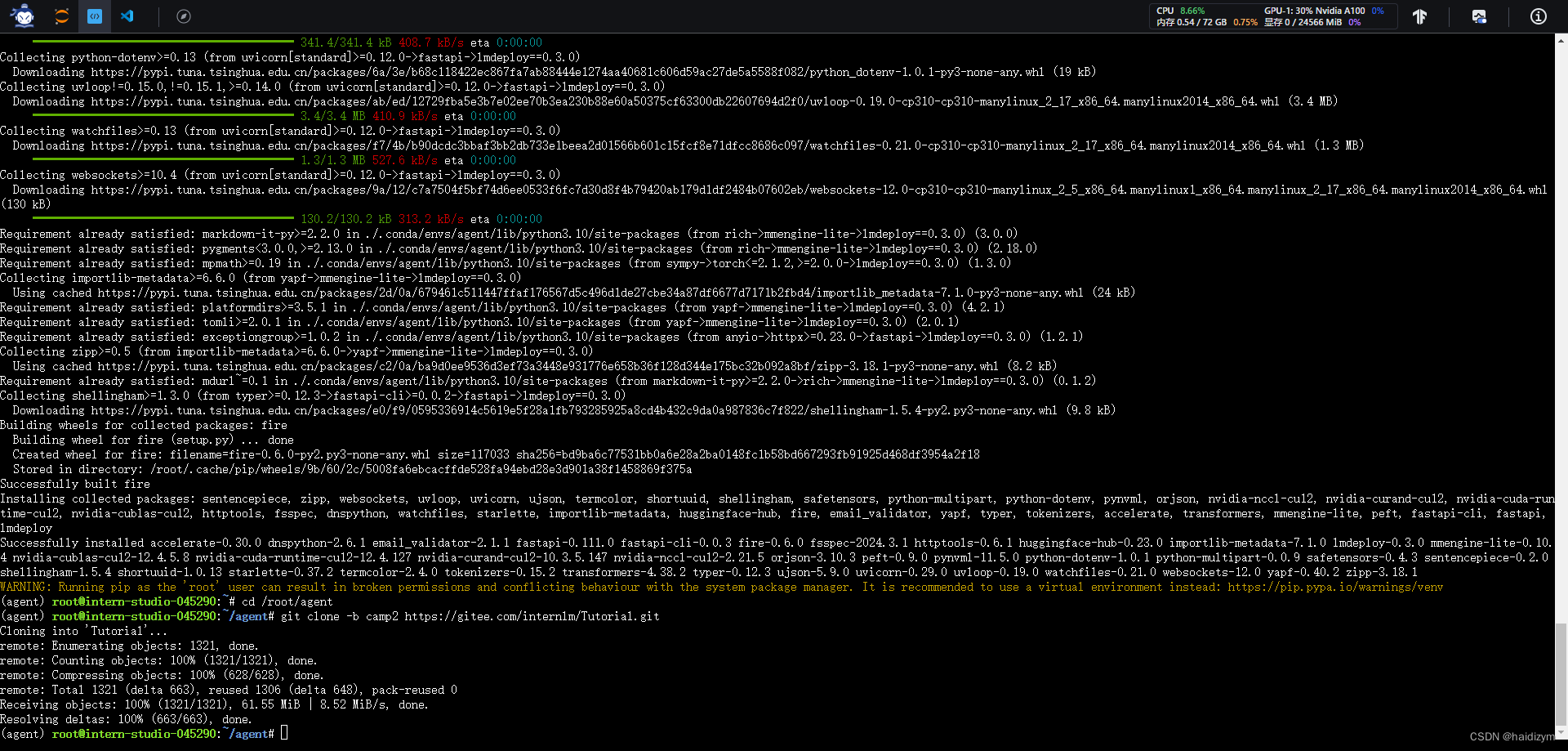

```bash
git clone https://gitee.com/internlm/lagent.git
```

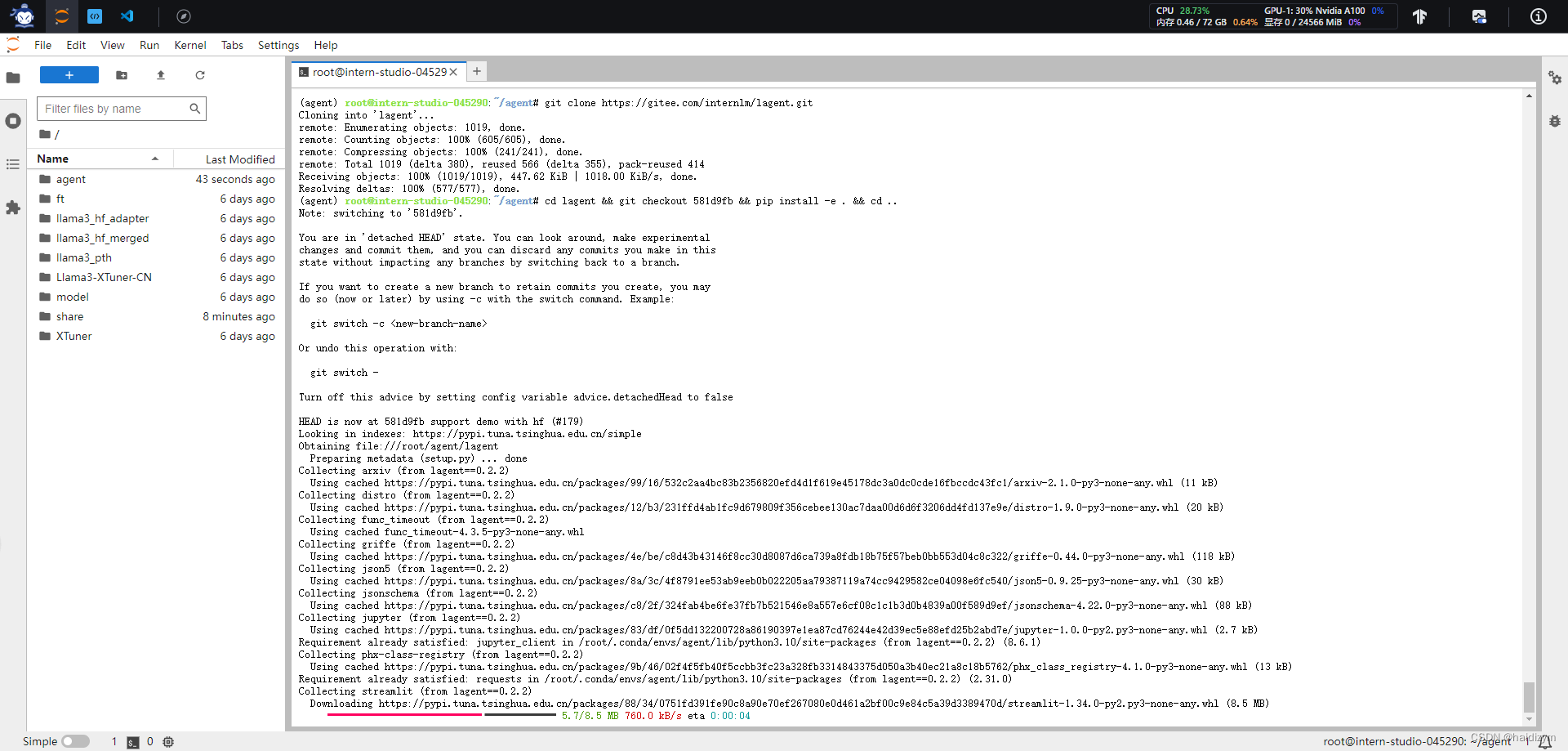

```bash
cd lagent && git checkout 581d9fb && pip install -e . && cd ..
```

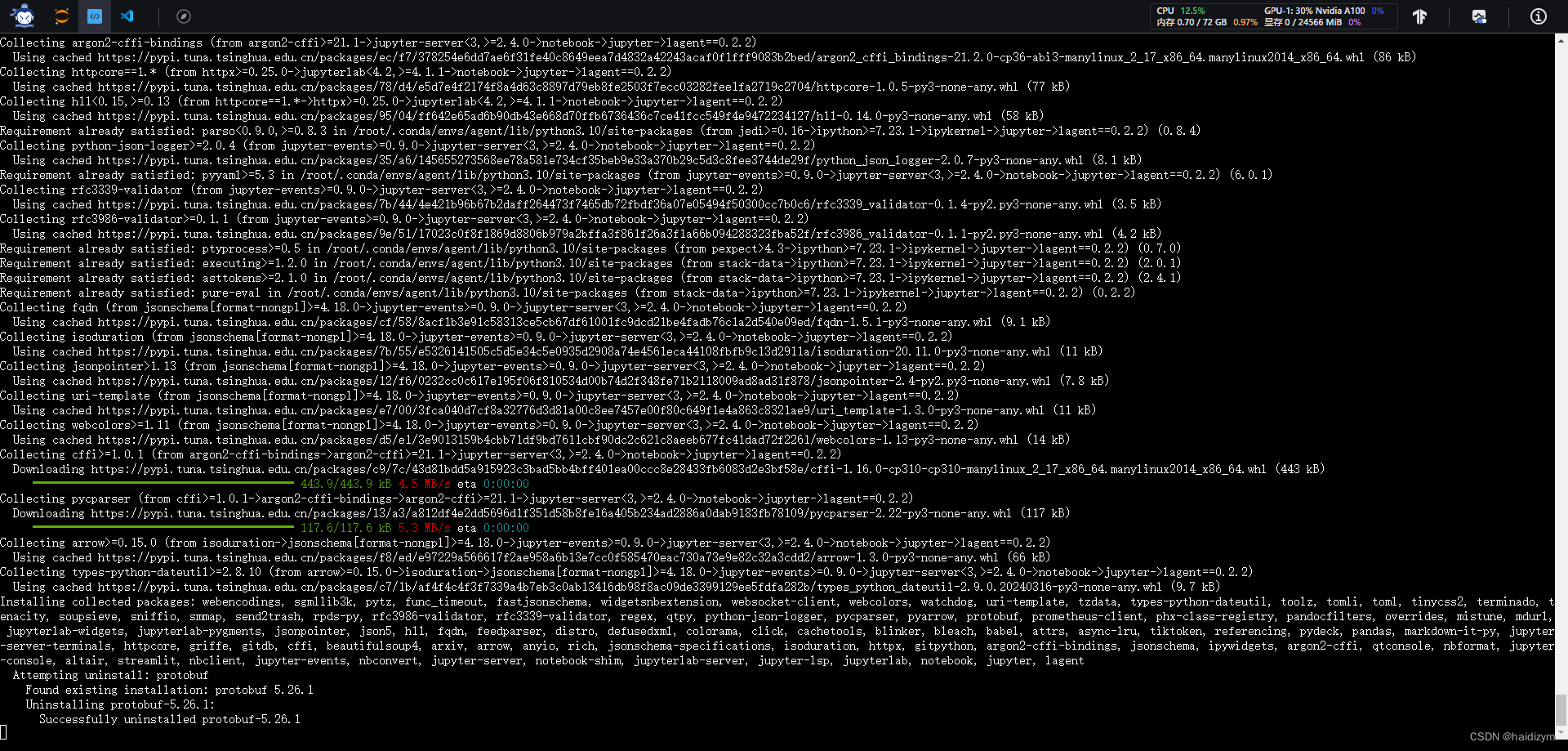

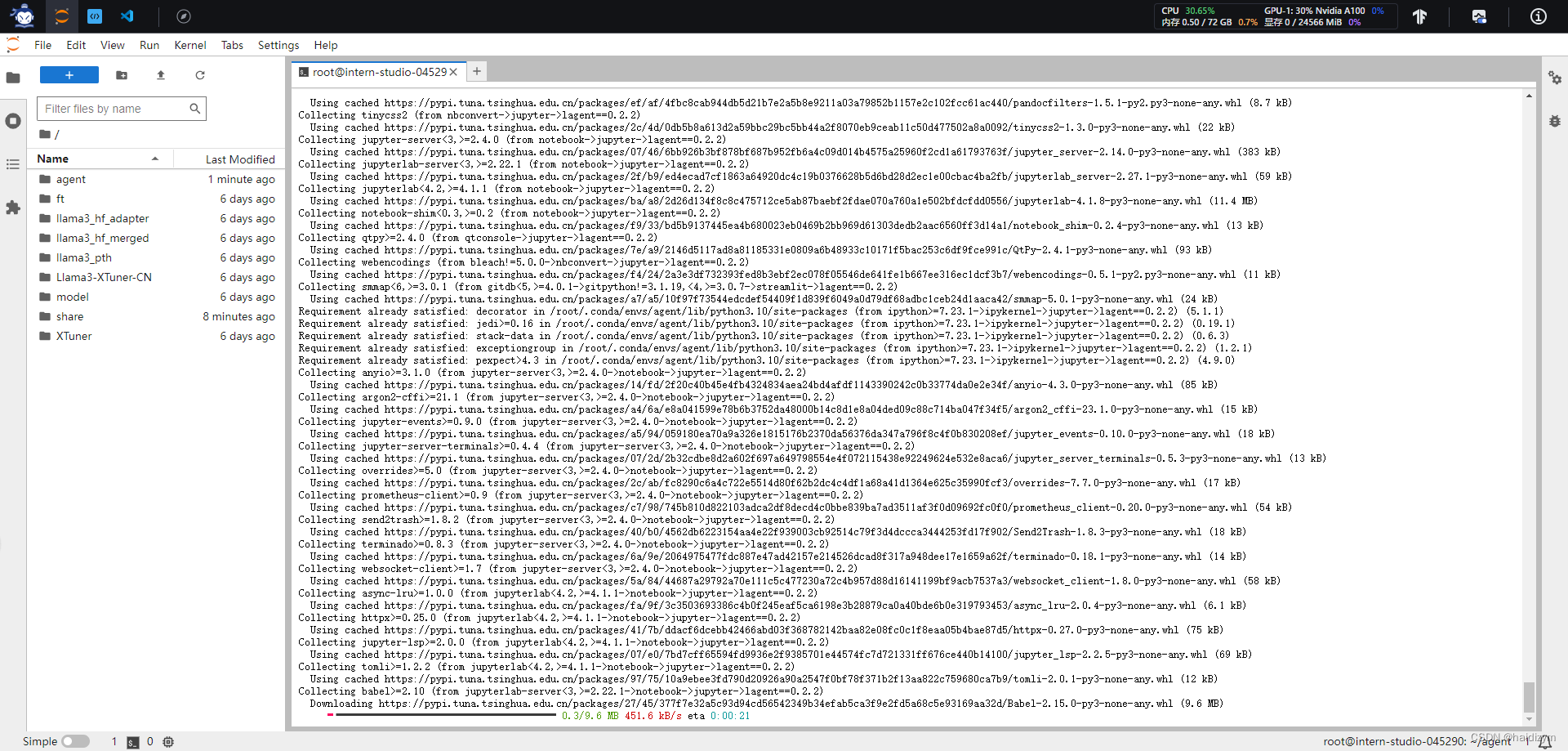

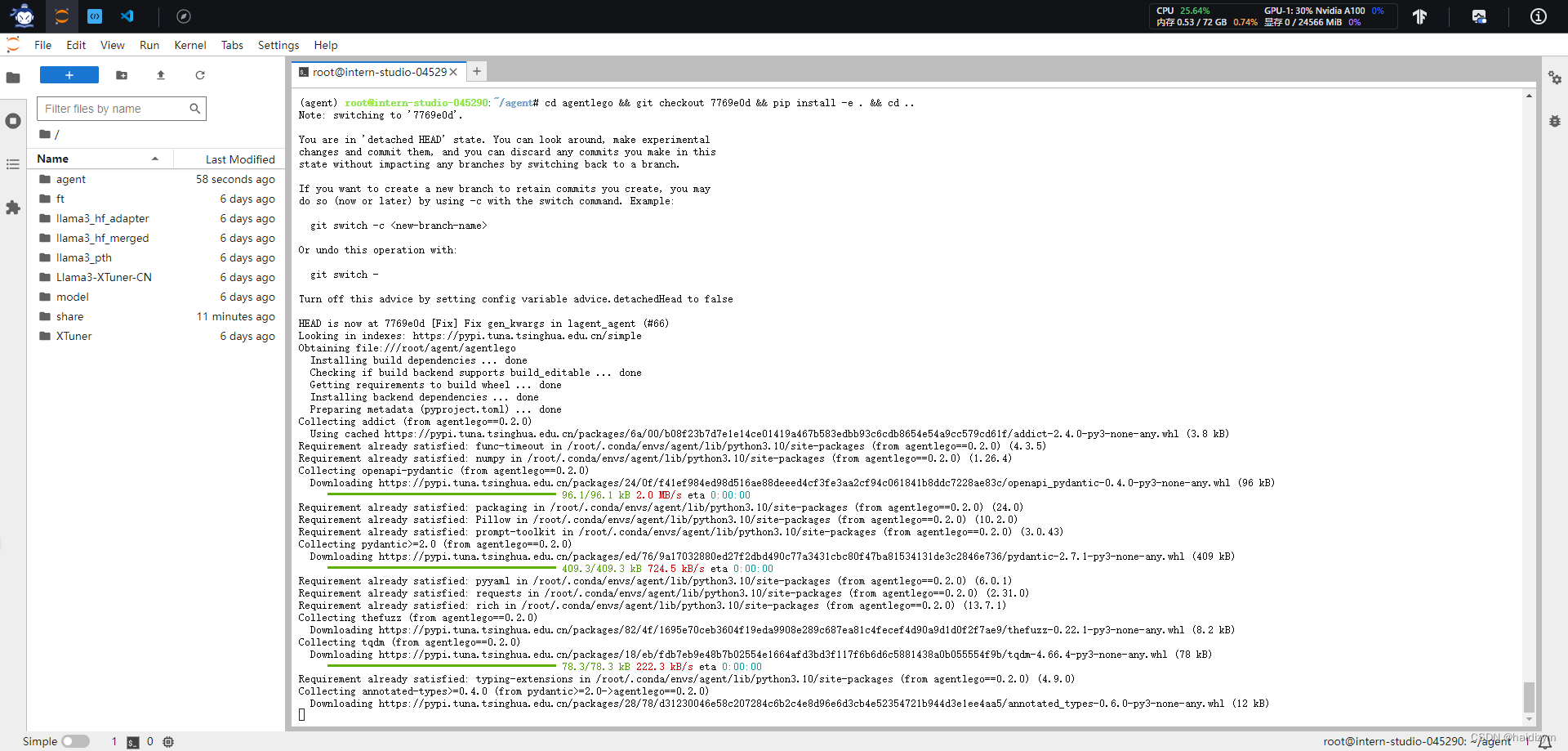

```bash
git clone https://gitee.com/internlm/agentlego.git
cd agentlego && git checkout 7769e0d && pip install -e . && cd ..
```

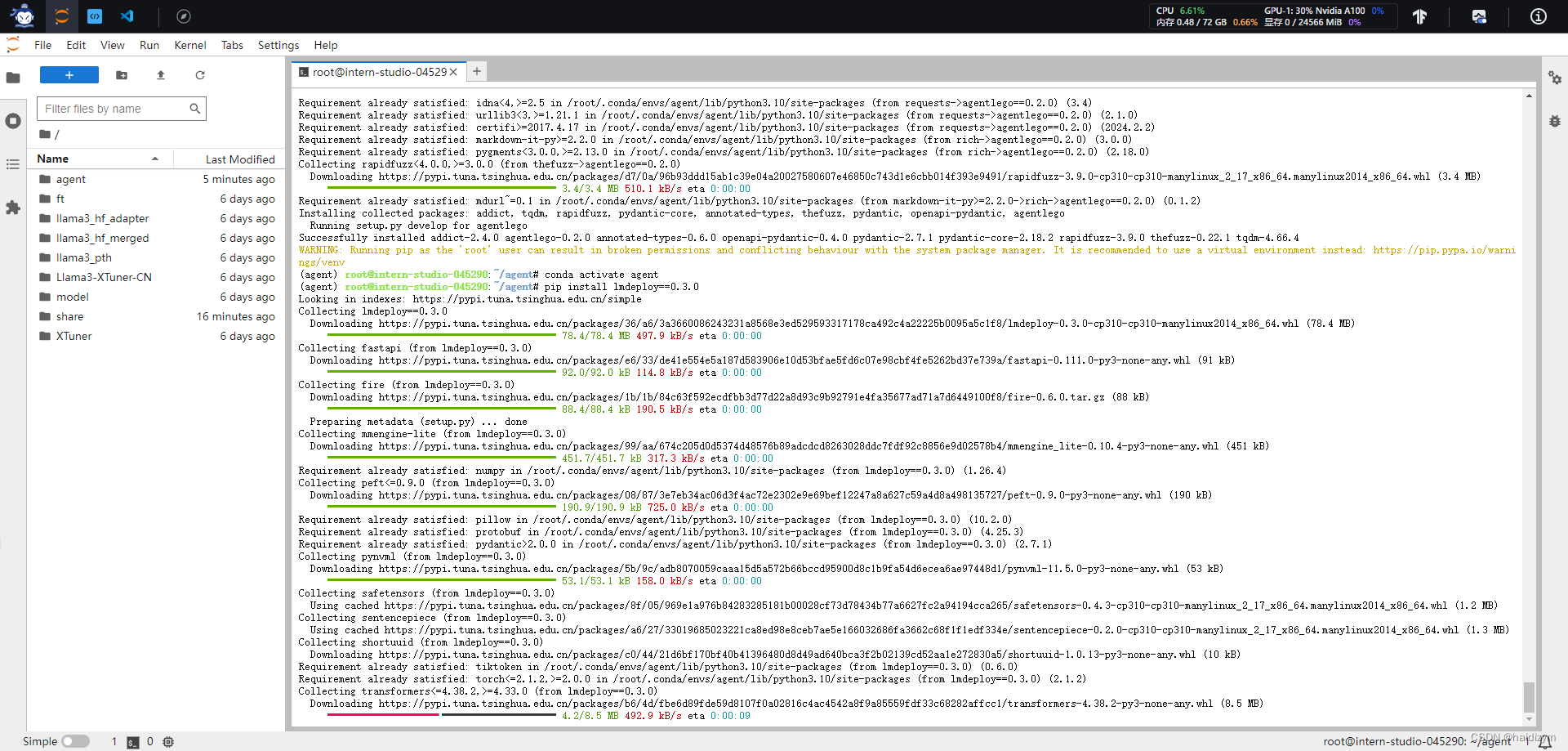

```bash
conda activate agent
```

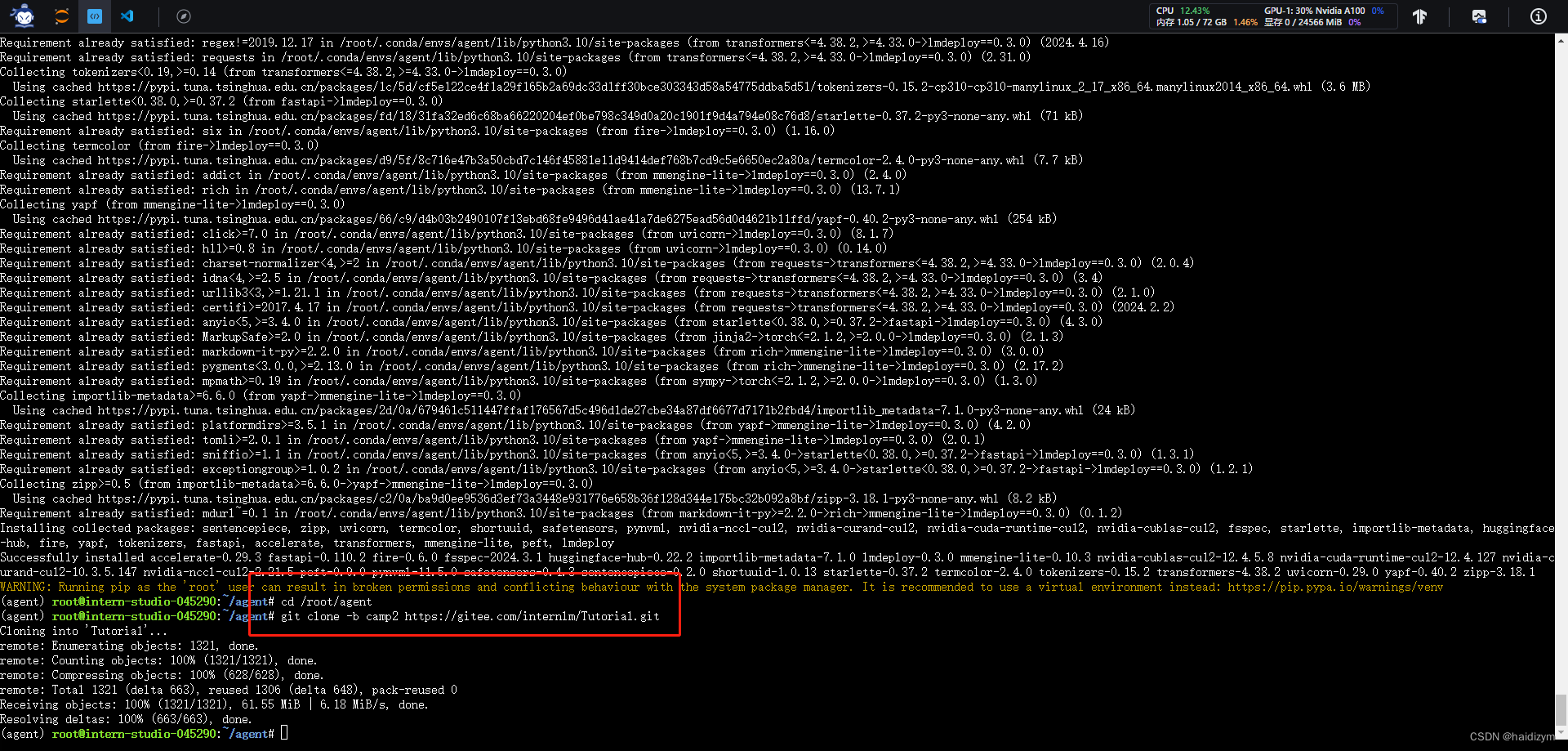

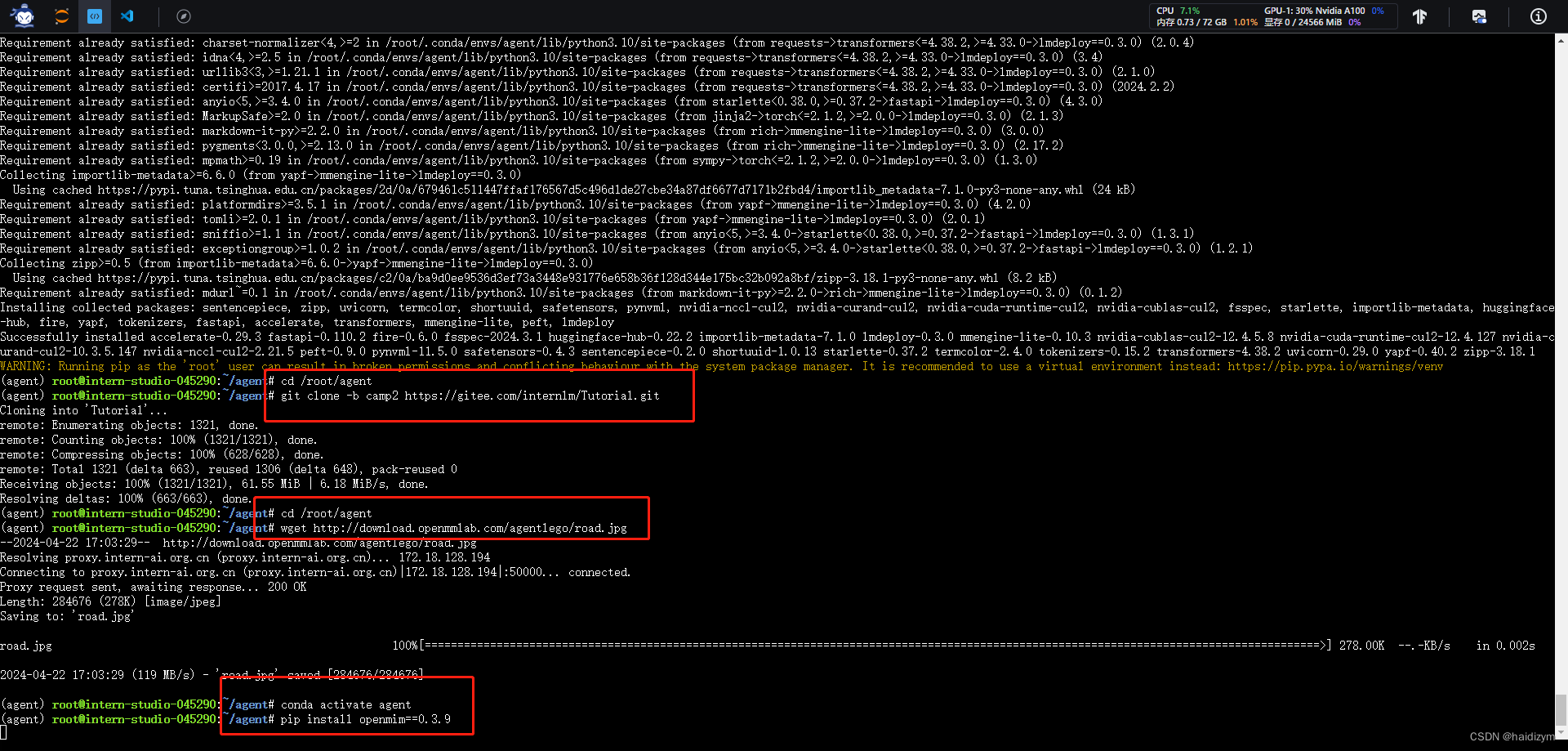

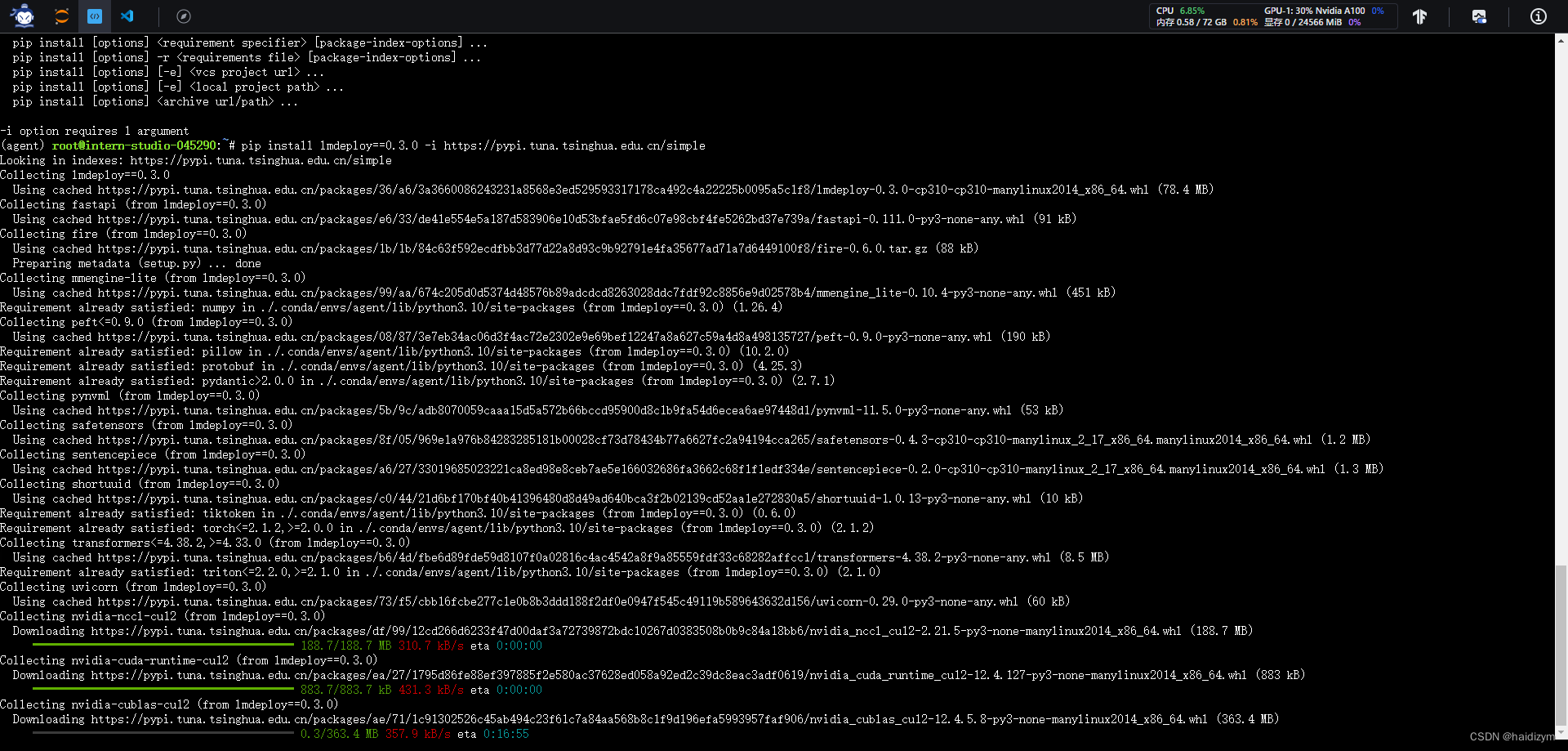

```bash
pip install lmdeploy==0.3.0
cd /root/agent
git clone -b camp2 https://gitee.com/internlm/Tutorial.git
```

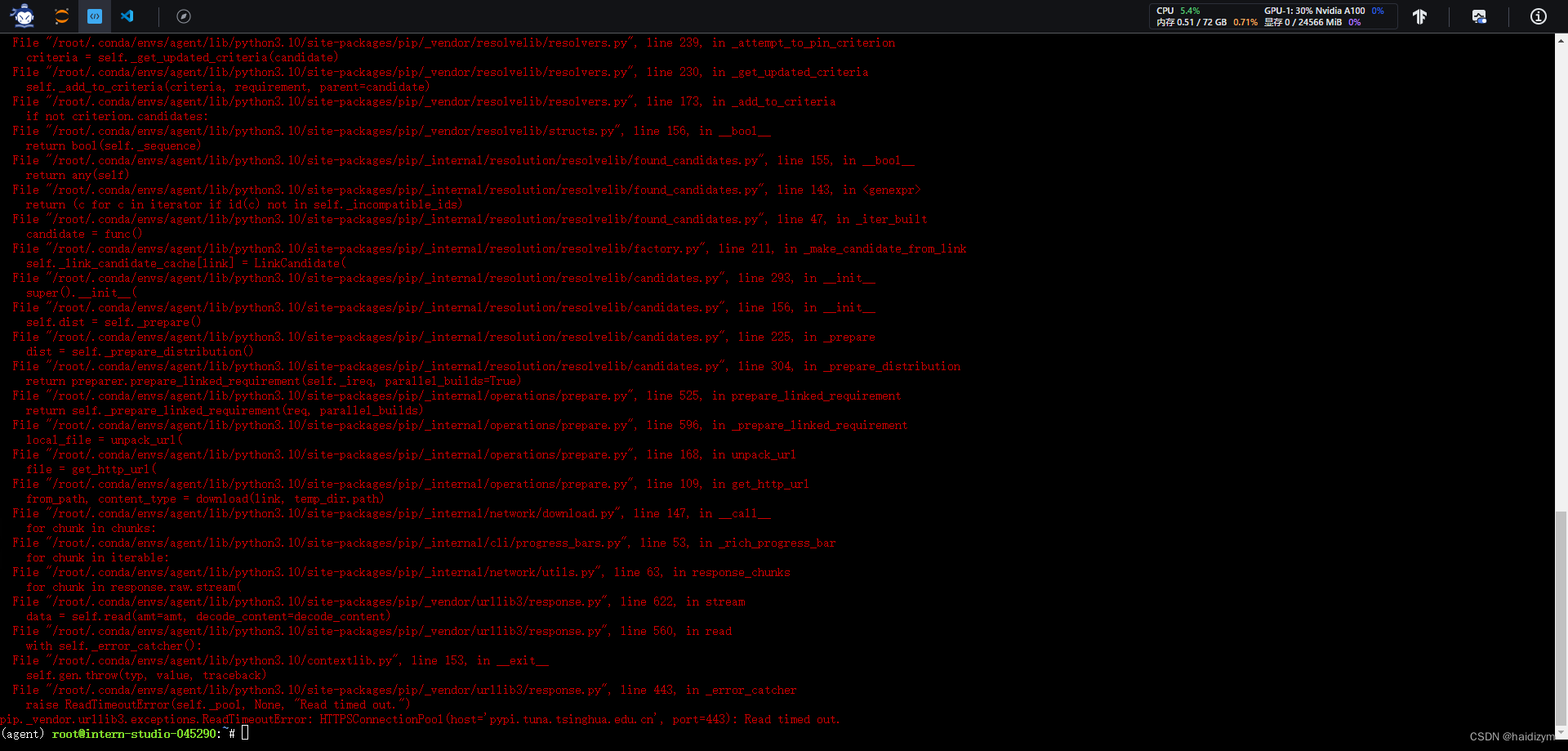

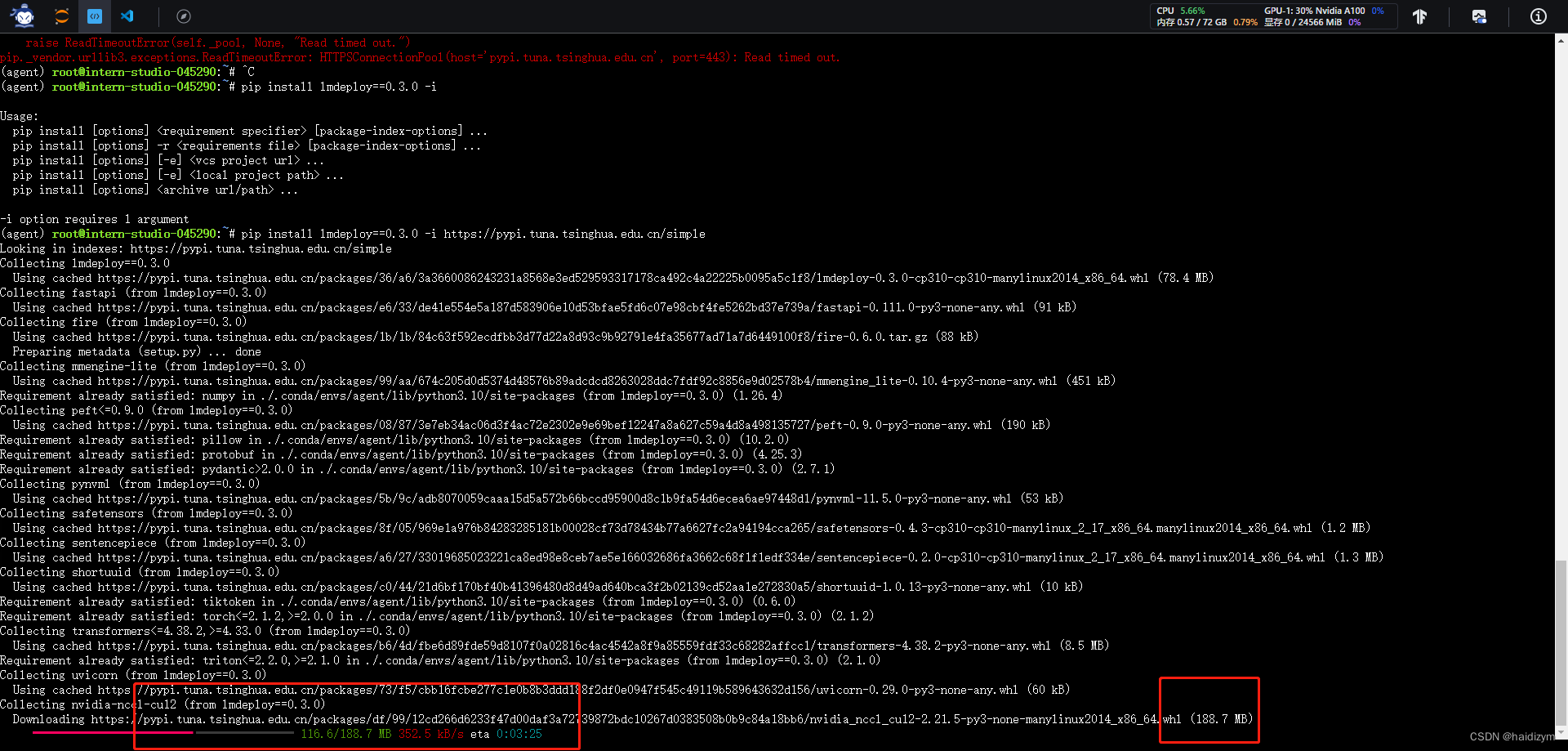

`pip install lmdeploy==0.3.0` failed in all three terminals.

Error message:

```
Traceback (most recent call last): File "/root/.conda/envs/agent/lib/python3.10/site-packages/pip/
```

So I changed the command to:

```bash
# Use the Tsinghua (TUNA) PyPI mirror: the package is very large, and the mirror helps when the network is unstable
pip install lmdeploy==0.3.0 -i https://pypi.tuna.tsinghua.edu.cn/simple
```

That got it through!
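A mirror makes the download faster and more reliable, but pip does not resume a half-finished download, so on a flaky connection re-running the command is still the fallback. Below is a small sketch that retries the mirror install a few times. `pip_install_with_retries` is a hypothetical helper; `-i`, `--retries` and `--timeout` are standard pip options, and `sys.executable -m pip` targets whichever Python (here, the `agent` conda env) runs the script.

```python
import subprocess
import sys
import time
from typing import Callable, List

TUNA = "https://pypi.tuna.tsinghua.edu.cn/simple"


def pip_install_with_retries(spec: str,
                             index_url: str = TUNA,
                             attempts: int = 3,
                             delay: float = 5.0,
                             run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> bool:
    """Run `pip install <spec> -i <index_url>` up to `attempts` times.

    Returns True as soon as one attempt exits with code 0, else False.
    `run` is injectable so the retry logic can be tested without pip.
    """
    cmd: List[str] = [sys.executable, "-m", "pip", "install", spec,
                      "-i", index_url, "--retries", "10", "--timeout", "60"]
    for attempt in range(1, attempts + 1):
        if run(cmd).returncode == 0:
            return True
        print(f"attempt {attempt}/{attempts} failed")
        if attempt < attempts:
            time.sleep(delay)  # back off before trying again
    return False
```

Usage: `pip_install_with_retries("lmdeploy==0.3.0")`.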

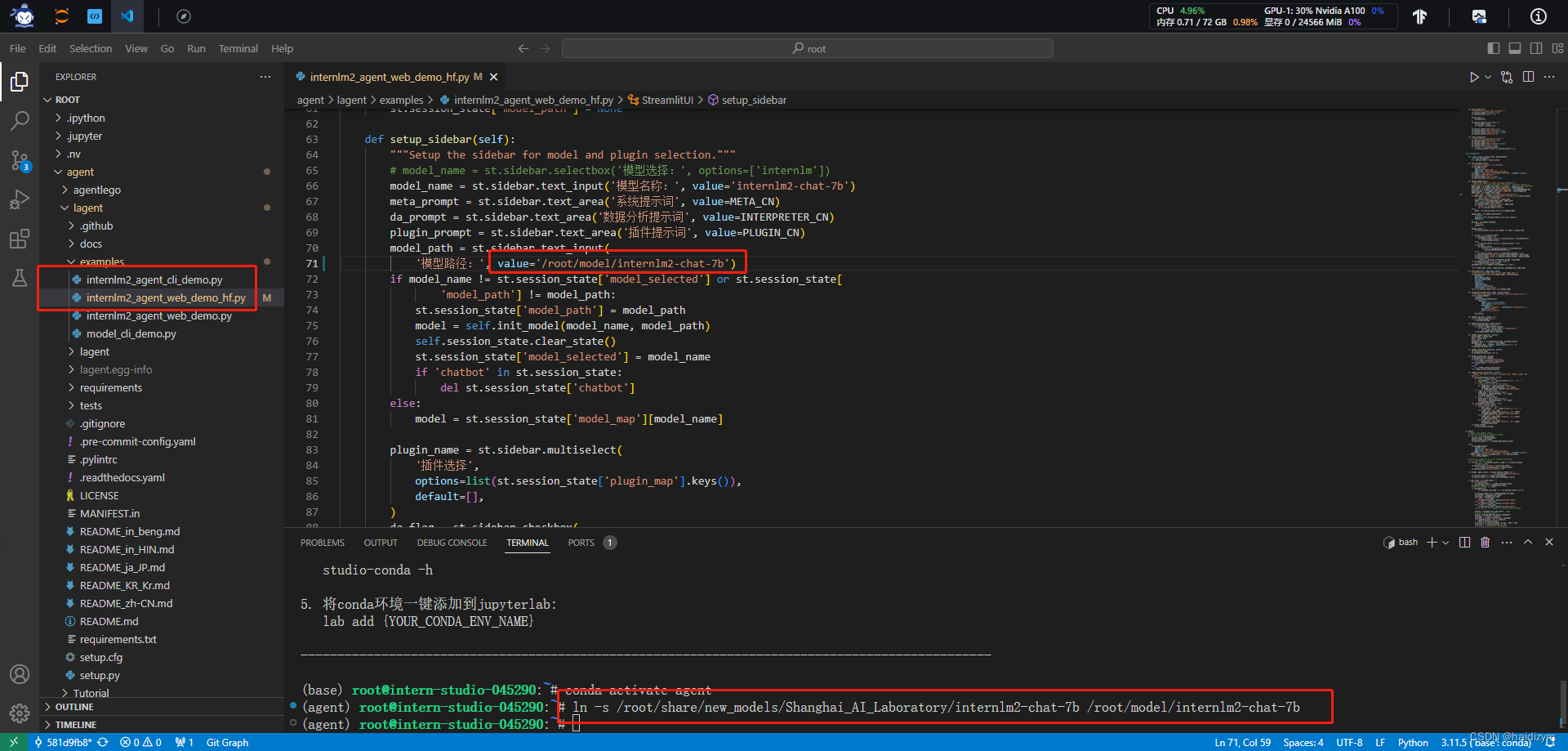

```bash
# Create a symlink for quick access to the model
ln -s /root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-7b /root/model/internlm2-chat-7b
# Then, in /root/agent/lagent/examples/internlm2_agent_web_demo_hf.py, change the model path
# at around line 71 to the local one:
# value='/root/model/internlm2-chat-7b'
```
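`ln -s` fails with "No such file or directory" if `/root/model` does not exist yet, and re-running it when the link already points at a directory may fail or create a nested link inside the target. A minimal Python sketch that makes this step idempotent; `ensure_symlink` is my own helper name, not part of the course code.

```python
import os
from pathlib import Path


def ensure_symlink(target: str, link: str) -> Path:
    """Create `link` -> `target`, creating the parent directory if needed.

    If `link` already points to `target`, nothing is changed; any other
    existing file or link at `link` raises FileExistsError.
    """
    link_path = Path(link)
    link_path.parent.mkdir(parents=True, exist_ok=True)
    if link_path.is_symlink():
        current = os.readlink(link_path)
        if current == target:
            return link_path  # already set up correctly
        raise FileExistsError(f"{link} points to {current}, not {target}")
    if link_path.exists():
        raise FileExistsError(f"{link} exists and is not a symlink")
    link_path.symlink_to(target, target_is_directory=True)
    return link_path
```

For this post that would be `ensure_symlink('/root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-7b', '/root/model/internlm2-chat-7b')`. In the shell, running `mkdir -p /root/model` before `ln -s` covers the first failure mode.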

```bash
pip install huggingface-hub==0.17.3
pip install transformers==4.34
pip install psutil==5.9.8
pip install accelerate==0.24.1
pip install streamlit==1.32.2
pip install matplotlib==3.8.3
pip install modelscope==1.9.5
pip install sentencepiece==0.1.99
```

Problem

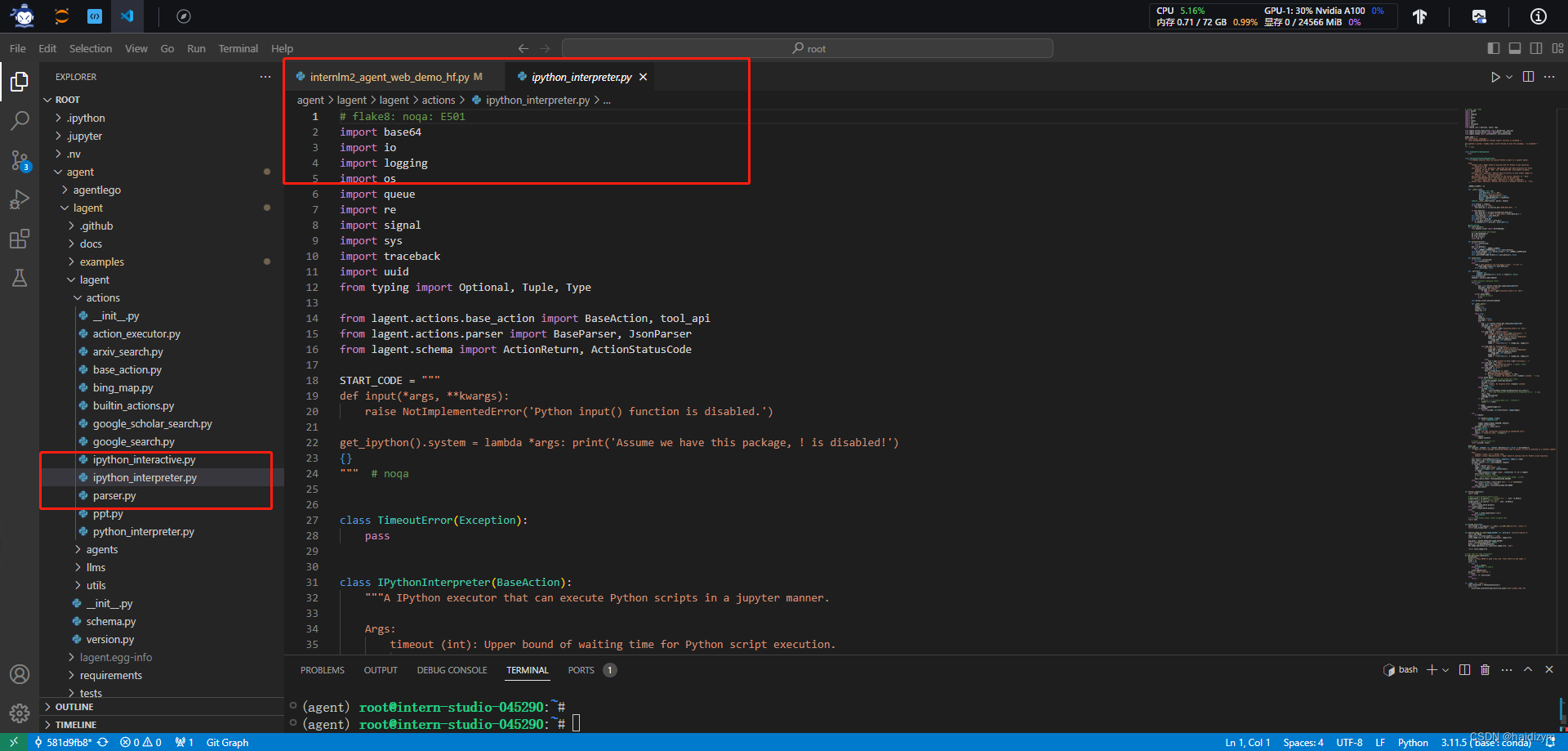

IPythonInterpreter (an IPython executor that runs Python scripts the way a Jupyter notebook does)

Resources

```python
# touch /root/agent/lagent/lagent/actions/ipython_interpreter.py
# flake8: noqa: E501
import base64
import io
import logging
import os
import queue
import re
import signal
import sys
import traceback
import uuid
from concurrent.futures import ThreadPoolExecutor, wait
from typing import Any, Dict, List, Optional, Tuple, Type, Union

from lagent.actions.base_action import BaseAction, tool_api
from lagent.actions.parser import BaseParser, JsonParser
from lagent.schema import ActionReturn, ActionStatusCode

START_CODE = """
def input(*args, **kwargs):
    raise NotImplementedError('Python input() function is disabled.')

get_ipython().system = lambda *args: print('Assume we have this package, ! is disabled!')
{}
"""  # noqa


class TimeoutError(Exception):
    pass


class IPythonInterpreter(BaseAction):
    """A IPython executor that can execute Python scripts in a jupyter manner.

    Args:
        timeout (int): Upper bound of waiting time for Python script execution.
            Defaults to 20.
        user_data_dir (str, optional): Specified the user data directory for files
            loading. If set to `ENV`, use `USER_DATA_DIR` environment variable.
            Defaults to `ENV`.
        work_dir (str, optional): Specify which directory to save output images to.
            Defaults to ``'./work_dir/tmp_dir'``.
        description (dict): The description of the action. Defaults to ``None``.
        parser (Type[BaseParser]): The parser class to process the
            action's inputs and outputs. Defaults to :class:`JsonParser`.
        enable (bool, optional): Whether the action is enabled. Defaults to ``True``.
    """

    _KERNEL_CLIENTS = {}

    def __init__(self,
                 timeout: int = 20,
                 user_data_dir: str = 'ENV',
                 work_dir='./work_dir/tmp_dir',
                 description: Optional[dict] = None,
                 parser: Type[BaseParser] = JsonParser,
                 enable: bool = True):
        super().__init__(description, parser, enable)

        self.timeout = timeout
        if user_data_dir == 'ENV':
            user_data_dir = os.environ.get('USER_DATA_DIR', '')

        if user_data_dir:
            user_data_dir = os.path.dirname(user_data_dir)
            user_data_dir = f"import os\nos.chdir('{user_data_dir}')"
        self.user_data_dir = user_data_dir
        self._initialized = False
        self.work_dir = work_dir
        if not os.path.exists(self.work_dir):
            os.makedirs(self.work_dir, exist_ok=True)

    @staticmethod
    def start_kernel():
        from jupyter_client import KernelManager

        # start the kernel and manager
        km = KernelManager()
        km.start_kernel()
        kc = km.client()
        return km, kc

    def initialize(self):
        if self._initialized:
            return
        pid = os.getpid()
        if pid not in self._KERNEL_CLIENTS:
            self._KERNEL_CLIENTS[pid] = self.start_kernel()
        self.kernel_manager, self.kernel_client = self._KERNEL_CLIENTS[pid]
        self._initialized = True
        self._call(START_CODE.format(self.user_data_dir), None)

    def reset(self):
        if not self._initialized:
            self.initialize()
        else:
            code = "get_ipython().run_line_magic('reset', '-f')\n" + \
                START_CODE.format(self.user_data_dir)
            self._call(code, None)

    def _call(self,
              command: str,
              timeout: Optional[int] = None) -> Tuple[str, bool]:
        self.initialize()
        command = extract_code(command)

        # check previous remaining result
        while True:
            try:
                msg = self.kernel_client.get_iopub_msg(timeout=5)
                msg_type = msg['msg_type']
                if msg_type == 'status':
                    if msg['content'].get('execution_state') == 'idle':
                        break
            except queue.Empty:
                # assume no result
                break

        self.kernel_client.execute(command)

        def _inner_call():
            result = ''
            images = []
            succeed = True
            image_idx = 0

            while True:
                text = ''
                image = ''
                finished = False
                msg_type = 'error'
                try:
                    msg = self.kernel_client.get_iopub_msg(timeout=20)
                    msg_type = msg['msg_type']
                    if msg_type == 'status':
                        if msg['content'].get('execution_state') == 'idle':
                            finished = True
                    elif msg_type == 'execute_result':
                        text = msg['content']['data'].get('text/plain', '')
                        if 'image/png' in msg['content']['data']:
                            image_b64 = msg['content']['data']['image/png']
                            image_url = publish_image_to_local(
                                image_b64, self.work_dir)
                            image_idx += 1
                            # Markdown image link for the rendered figure
                            image = '![fig-%03d](%s)' % (image_idx, image_url)

                    elif msg_type == 'display_data':
                        if 'image/png' in msg['content']['data']:
                            image_b64 = msg['content']['data']['image/png']
                            image_url = publish_image_to_local(
                                image_b64, self.work_dir)
                            image_idx += 1
                            image = '![fig-%03d](%s)' % (image_idx, image_url)

                        else:
                            text = msg['content']['data'].get('text/plain', '')
                    elif msg_type == 'stream':
                        msg_type = msg['content']['name']  # stdout, stderr
                        text = msg['content']['text']
                    elif msg_type == 'error':
                        succeed = False
                        text = escape_ansi('\n'.join(
                            msg['content']['traceback']))
                        if 'M6_CODE_INTERPRETER_TIMEOUT' in text:
                            text = f'Timeout. No response after {timeout} seconds.'  # noqa
                except queue.Empty:
                    # stop current task in case break next input.
                    self.kernel_manager.interrupt_kernel()
                    succeed = False
                    text = f'Timeout. No response after {timeout} seconds.'
                    finished = True
                except Exception:
                    succeed = False
                    msg = ''.join(traceback.format_exception(*sys.exc_info()))
                    # text = 'The code interpreter encountered an unexpected error.'  # noqa
                    text = msg
                    logging.warning(msg)
                    finished = True
                if text:
                    # result += f'\n\n{msg_type}:\n\n```\n{text}\n```'
                    result += f'{text}'

                if image:
                    images.append(image_url)
                if finished:
                    return succeed, dict(text=result, image=images)

        try:
            if timeout:

                def handler(signum, frame):
                    raise TimeoutError()

                signal.signal(signal.SIGALRM, handler)
                signal.alarm(timeout)
            succeed, result = _inner_call()
        except TimeoutError:
            succeed = False
            text = 'The code interpreter encountered an unexpected error.'
            result = f'\n\nerror:\n\n```\n{text}\n```'
        finally:
            if timeout:
                signal.alarm(0)

        # result = result.strip('\n')
        return succeed, result

    @tool_api
    def run(self, command: str, timeout: Optional[int] = None) -> ActionReturn:
        r"""When you send a message containing Python code to python, it will be executed in a stateful Jupyter notebook environment. python will respond with the output of the execution or time out after 60.0 seconds. The drive at '/mnt/data' can be used to save and persist user files. Internet access for this session is disabled. Do not make external web requests or API calls as they will fail.

        Args:
            command (:class:`str`): Python code
            timeout (:class:`Optional[int]`): Upper bound of waiting time for Python script execution.
        """
        tool_return = ActionReturn(url=None, args=None, type=self.name)
        tool_return.args = dict(text=command)
        succeed, result = self._call(command, timeout)
        if succeed:
            text = result['text']
            image = result.get('image', [])
            resp = [dict(type='text', content=text)]
            if image:
                resp.extend([dict(type='image', content=im) for im in image])
            tool_return.result = resp
            # tool_return.result = dict(
            #     text=result['text'], image=result.get('image', [])[0])
            tool_return.state = ActionStatusCode.SUCCESS
        else:
            tool_return.errmsg = result.get('text', '') if isinstance(
                result, dict) else result
            tool_return.state = ActionStatusCode.API_ERROR
        return tool_return


def extract_code(text):
    import json5

    # Match triple backtick blocks first
    triple_match = re.search(r'```[^\n]*\n(.+?)```', text, re.DOTALL)
    # Match single backtick blocks second
    single_match = re.search(r'`([^`]*)`', text, re.DOTALL)
    if triple_match:
        text = triple_match.group(1)
    elif single_match:
        text = single_match.group(1)
    else:
        try:
            text = json5.loads(text)['code']
        except Exception:
            pass
    # If no code blocks found, return original text
    return text


def escape_ansi(line):
    ansi_escape = re.compile(r'(?:\x1B[@-_]|[\x80-\x9F])[0-?]*[ -/]*[@-~]')
    return ansi_escape.sub('', line)


def publish_image_to_local(image_base64: str, work_dir='./work_dir/tmp_dir'):
    import PIL.Image
    image_file = str(uuid.uuid4()) + '.png'
    local_image_file = os.path.join(work_dir, image_file)

    png_bytes = base64.b64decode(image_base64)
    assert isinstance(png_bytes, bytes)
    bytes_io = io.BytesIO(png_bytes)
    PIL.Image.open(bytes_io).save(local_image_file, 'png')

    return local_image_file


# local test for code interpreter
def get_multiline_input(hint):
    print(hint)
    print('// Press ENTER to make a new line. Press CTRL-D to end input.')
    lines = []
    while True:
        try:
            line = input()
        except EOFError:  # CTRL-D
            break
        lines.append(line)
    print('// Input received.')
    if lines:
        return '\n'.join(lines)
    else:
        return ''


class BatchIPythonInterpreter(BaseAction):
    """A IPython executor that can execute Python scripts in batches in a jupyter manner."""

    def __init__(
        self,
        python_interpreter: Dict[str, Any],
        description: Optional[dict] = None,
        parser: Type[BaseParser] = JsonParser,
        enable: bool = True,
    ):
        self.python_interpreter_init_args = python_interpreter
        self.index2python_interpreter = {}
        super().__init__(description, parser, enable)

    def __call__(self,
                 commands: Union[str, List[str]],
                 indexes: Union[int, List[int]] = None) -> ActionReturn:
        if isinstance(commands, list):
            batch_size = len(commands)
            is_batch = True
        else:
            batch_size = 1
            commands = [commands]
            is_batch = False
        if indexes is None:
            indexes = range(batch_size)
        elif isinstance(indexes, int):
            indexes = [indexes]
        if len(indexes) != batch_size or len(indexes) != len(set(indexes)):
            raise ValueError(
                'the size of `indexes` must equal that of `commands`')
        tasks = []
        with ThreadPoolExecutor(max_workers=batch_size) as pool:
            for idx, command in zip(indexes, commands):
                interpreter = self.index2python_interpreter.setdefault(
                    idx,
                    IPythonInterpreter(**self.python_interpreter_init_args))
                tasks.append(pool.submit(interpreter.run, command))
            wait(tasks)
        results = [task.result() for task in tasks]
        if not is_batch:
            return results[0]
        return results

    def reset(self):
        self.index2python_interpreter.clear()


if __name__ == '__main__':
    code_interpreter = IPythonInterpreter()
    while True:
        print(code_interpreter(get_multiline_input('Enter python code:')))
```
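Before the model's reply reaches the kernel, `extract_code` pulls the code out of a triple-backtick block, then an inline backtick span, then a JSON `{"code": ...}` payload, in that order. The stand-alone copy below swaps `json5` for the standard-library `json` (so it only accepts strict JSON) to make that matching order easy to check without lagent or a running Jupyter kernel. It is an illustration, not a drop-in replacement.

```python
import json
import re


def extract_code(text: str) -> str:
    """Same matching order as the lagent helper, using json instead of json5."""
    # 1) fenced block: three backticks, optional language tag, newline, body
    triple_match = re.search(r'```[^\n]*\n(.+?)```', text, re.DOTALL)
    # 2) inline code between single backticks
    single_match = re.search(r'`([^`]*)`', text, re.DOTALL)
    if triple_match:
        return triple_match.group(1)
    if single_match:
        return single_match.group(1)
    # 3) JSON payload such as {"code": "..."}; otherwise return text unchanged
    try:
        return json.loads(text)['code']
    except Exception:
        return text
```

Note that a fenced block keeps its trailing newline, and anything that is neither quoted code nor JSON is sent to the kernel verbatim.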

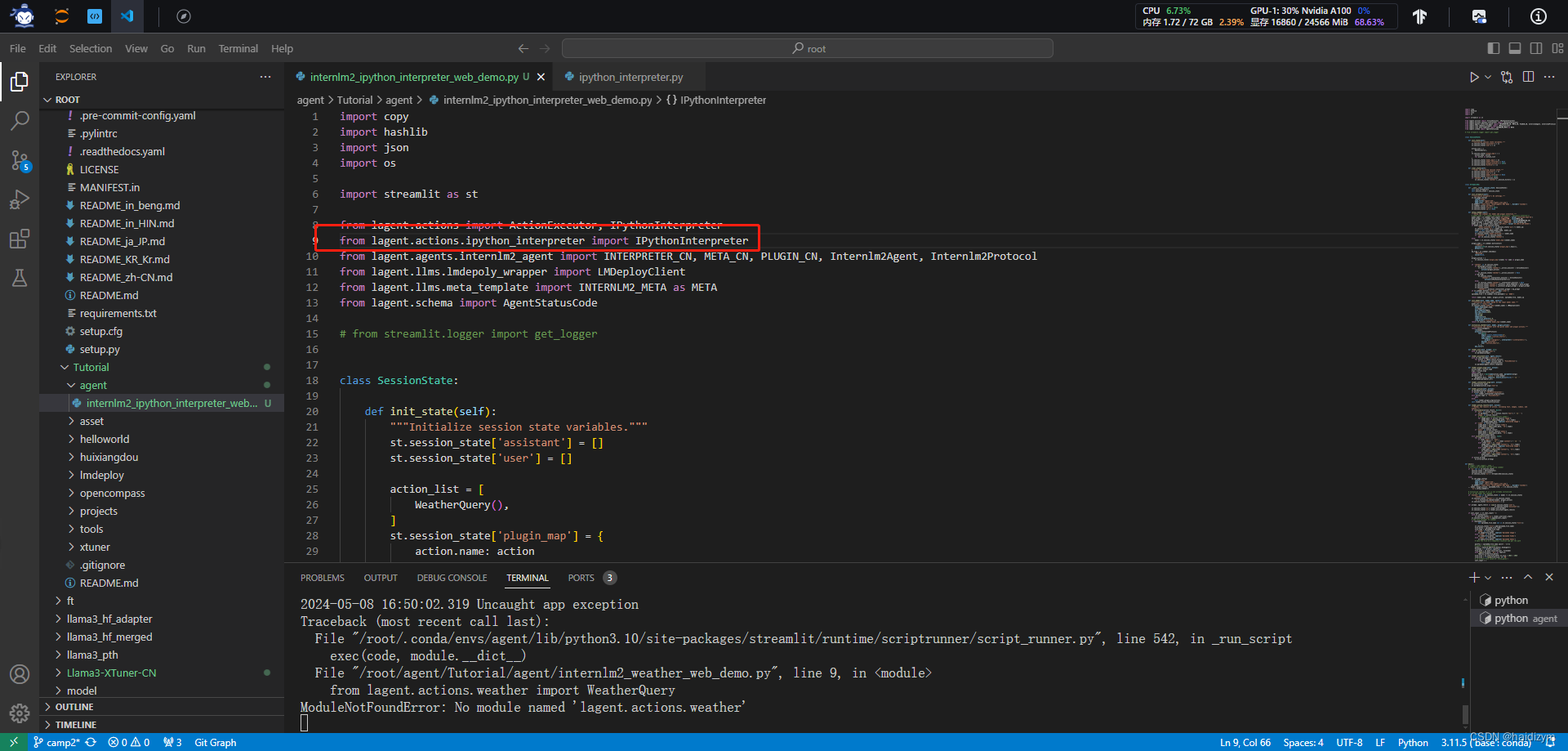

Open the two files /root/agent/Tutorial/agent/internlm2_weather_web_demo.py and /root/agent/lagent/lagent/actions/ipython_interpreter.py.

Rename /root/agent/Tutorial/agent/internlm2_weather_web_demo.py to /root/agent/Tutorial/agent/internlm2_ipython_interpreter_web_demo.py.

Modified contents of /root/agent/Tutorial/agent/internlm2_ipython_interpreter_web_demo.py:

```python
import copy
import hashlib
import json
import os

import streamlit as st

from lagent.actions import ActionExecutor
from lagent.actions.ipython_interpreter import IPythonInterpreter
from lagent.agents.internlm2_agent import INTERPRETER_CN, META_CN, PLUGIN_CN, Internlm2Agent, Internlm2Protocol
from lagent.llms.lmdepoly_wrapper import LMDeployClient  # (sic) lagent's module name is spelled "lmdepoly"
from lagent.llms.meta_template import INTERNLM2_META as META
from lagent.schema import AgentStatusCode

# from streamlit.logger import get_logger


class SessionState:

    def init_state(self):
        """Initialize session state variables."""
        st.session_state['assistant'] = []
        st.session_state['user'] = []

        # Only the IPython interpreter is offered as a plugin in this demo
        action_list = [
            IPythonInterpreter(),
        ]
        st.session_state['plugin_map'] = {
            action.name: action
            for action in action_list
        }
        st.session_state['model_map'] = {}
        st.session_state['model_selected'] = None
        st.session_state['plugin_actions'] = set()
        st.session_state['history'] = []

    def clear_state(self):
        """Clear the existing session state."""
        st.session_state['assistant'] = []
        st.session_state['user'] = []
        st.session_state['model_selected'] = None
        st.session_state['file'] = set()
        if 'chatbot' in st.session_state:
            st.session_state['chatbot']._session_history = []


class StreamlitUI:

    def __init__(self, session_state: SessionState):
        self.init_streamlit()
        self.session_state = session_state

    def init_streamlit(self):
        """Initialize Streamlit's UI settings."""
        st.set_page_config(
            layout='wide',
            page_title='lagent-web',
            page_icon='./docs/imgs/lagent_icon.png')
        st.header(':robot_face: :blue[Lagent] Web Demo ', divider='rainbow')
        st.sidebar.title('模型控制')  # "Model control"
        st.session_state['file'] = set()
        st.session_state['ip'] = None

    def setup_sidebar(self):
        """Setup the sidebar for model and plugin selection."""
        # model_name = st.sidebar.selectbox('模型选择:', options=['internlm'])
        model_name = st.sidebar.text_input('模型名称:', value='internlm2-chat-7b')  # "Model name"
        meta_prompt = st.sidebar.text_area('系统提示词', value=META_CN)  # "System prompt"
        da_prompt = st.sidebar.text_area('数据分析提示词', value=INTERPRETER_CN)  # "Data-analysis prompt"
        plugin_prompt = st.sidebar.text_area('插件提示词', value=PLUGIN_CN)  # "Plugin prompt"
        # "Model IP"; for the deployment in this post, set it to 127.0.0.1:23333 in the UI
        model_ip = st.sidebar.text_input('模型IP:', value='10.140.0.220:23333')
        if model_name != st.session_state[
                'model_selected'] or st.session_state['ip'] != model_ip:
            st.session_state['ip'] = model_ip
            model = self.init_model(model_name, model_ip)
            self.session_state.clear_state()
            st.session_state['model_selected'] = model_name
            if 'chatbot' in st.session_state:
                del st.session_state['chatbot']
        else:
            model = st.session_state['model_map'][model_name]

        plugin_name = st.sidebar.multiselect(
            '插件选择',  # "Plugin selection"
            options=list(st.session_state['plugin_map'].keys()),
            default=[],
        )
        da_flag = st.sidebar.checkbox(
            '数据分析',  # "Data analysis"
            value=False,
        )
        plugin_action = [
            st.session_state['plugin_map'][name] for name in plugin_name
        ]

        if 'chatbot' in st.session_state:
            if len(plugin_action) > 0:
                st.session_state['chatbot']._action_executor = ActionExecutor(
                    actions=plugin_action)
            else:
                st.session_state['chatbot']._action_executor = None
            # "Data analysis" simply attaches an IPythonInterpreter executor
            if da_flag:
                st.session_state[
                    'chatbot']._interpreter_executor = ActionExecutor(
                        actions=[IPythonInterpreter()])
            else:
                st.session_state['chatbot']._interpreter_executor = None
            st.session_state['chatbot']._protocol._meta_template = meta_prompt
            st.session_state['chatbot']._protocol.plugin_prompt = plugin_prompt
            st.session_state[
                'chatbot']._protocol.interpreter_prompt = da_prompt
        if st.sidebar.button('清空对话', key='clear'):  # "Clear conversation"
            self.session_state.clear_state()
        uploaded_file = st.sidebar.file_uploader('上传文件')  # "Upload file"

        return model_name, model, plugin_action, uploaded_file, model_ip

    def init_model(self, model_name, ip=None):
        """Initialize the model based on the input model name."""
        model_url = f'http://{ip}'
        st.session_state['model_map'][model_name] = LMDeployClient(
            model_name=model_name,
            url=model_url,
            meta_template=META,
            max_new_tokens=1024,
            top_p=0.8,
            top_k=100,
            temperature=0,
            repetition_penalty=1.0,
            stop_words=['<|im_end|>'])
        return st.session_state['model_map'][model_name]

    def initialize_chatbot(self, model, plugin_action):
        """Initialize the chatbot with the given model and plugin actions."""
        return Internlm2Agent(
            llm=model,
            protocol=Internlm2Protocol(
                tool=dict(
                    begin='{start_token}{name}\n',
                    start_token='<|action_start|>',
                    name_map=dict(
                        plugin='<|plugin|>', interpreter='<|interpreter|>'),
                    belong='assistant',
                    end='<|action_end|>\n',
                ), ),
            max_turn=7)

    def render_user(self, prompt: str):
        with st.chat_message('user'):
            st.markdown(prompt)

    def render_assistant(self, agent_return):
        with st.chat_message('assistant'):
            for action in agent_return.actions:
                if (action) and (action.type != 'FinishAction'):
                    self.render_action(action)
            st.markdown(agent_return.response)

    def render_plugin_args(self, action):
        action_name = action.type
        args = action.args
        parameter_dict = dict(name=action_name, parameters=args)
        parameter_str = '```json\n' + json.dumps(
            parameter_dict, indent=4, ensure_ascii=False) + '\n```'
        st.markdown(parameter_str)

    def render_interpreter_args(self, action):
        st.info(action.type)
        st.markdown(action.args['text'])

    def render_action(self, action):
        st.markdown(action.thought)
        if action.type == 'IPythonInterpreter':
            self.render_interpreter_args(action)
        elif action.type == 'FinishAction':
            pass
        else:
            self.render_plugin_args(action)
        self.render_action_results(action)

    def render_action_results(self, action):
        """Render the results of action, including text, images, videos, and
        audios."""
        if (isinstance(action.result, dict)):
            if 'text' in action.result:
                st.markdown('```\n' + action.result['text'] + '\n```')
            if 'image' in action.result:
                # image_path = action.result['image']
                for image_path in action.result['image']:
                    image_data = open(image_path, 'rb').read()
                    st.image(image_data, caption='Generated Image')
            if 'video' in action.result:
                video_data = action.result['video']
                video_data = open(video_data, 'rb').read()
                st.video(video_data)
            if 'audio' in action.result:
                audio_data = action.result['audio']
                audio_data = open(audio_data, 'rb').read()
                st.audio(audio_data)
        elif isinstance(action.result, list):
            for item in action.result:
                if item['type'] == 'text':
                    st.markdown('```\n' + item['content'] + '\n```')
                elif item['type'] == 'image':
                    image_data = open(item['content'], 'rb').read()
                    st.image(image_data, caption='Generated Image')
                elif item['type'] == 'video':
                    video_data = open(item['content'], 'rb').read()
                    st.video(video_data)
                elif item['type'] == 'audio':
                    audio_data = open(item['content'], 'rb').read()
                    st.audio(audio_data)
        if action.errmsg:
            st.error(action.errmsg)


def main():
    # logger = get_logger(__name__)
    # Initialize Streamlit UI and setup sidebar
    if 'ui' not in st.session_state:
        session_state = SessionState()
        session_state.init_state()
        st.session_state['ui'] = StreamlitUI(session_state)
    else:
        st.set_page_config(
            layout='wide',
            page_title='lagent-web',
            page_icon='./docs/imgs/lagent_icon.png')
        st.header(':robot_face: :blue[Lagent] Web Demo ', divider='rainbow')
    _, model, plugin_action, uploaded_file, _ = st.session_state[
        'ui'].setup_sidebar()

    # Initialize chatbot if it is not already initialized
    # or if the model has changed
    if 'chatbot' not in st.session_state or model != st.session_state[
            'chatbot']._llm:
        st.session_state['chatbot'] = st.session_state[
            'ui'].initialize_chatbot(model, plugin_action)
        st.session_state['session_history'] = []

    for prompt, agent_return in zip(st.session_state['user'],
                                    st.session_state['assistant']):
        st.session_state['ui'].render_user(prompt)
        st.session_state['ui'].render_assistant(agent_return)

    if user_input := st.chat_input(''):
        with st.container():
            st.session_state['ui'].render_user(user_input)
        st.session_state['user'].append(user_input)
        # Add file uploader to sidebar
        if (uploaded_file
                and uploaded_file.name not in st.session_state['file']):
            st.session_state['file'].add(uploaded_file.name)
            file_bytes = uploaded_file.read()
            file_type = uploaded_file.type
            if 'image' in file_type:
                st.image(file_bytes, caption='Uploaded Image')
            elif 'video' in file_type:
                st.video(file_bytes)  # st.video has no `caption` argument
            elif 'audio' in file_type:
                st.audio(file_bytes)  # st.audio has no `caption` argument
            # Save the file to a temporary location and get the path
            postfix = uploaded_file.name.split('.')[-1]
            # prefix = str(uuid.uuid4())
            prefix = hashlib.md5(file_bytes).hexdigest()
            filename = f'{prefix}.{postfix}'
            file_path = os.path.join(root_dir, filename)
            with open(file_path, 'wb') as tmpfile:
                tmpfile.write(file_bytes)
            file_size = os.stat(file_path).st_size / 1024 / 1024
            file_size = f'{round(file_size, 2)} MB'
            # st.write(f'File saved at: {file_path}')
            user_input = [
                dict(role='user', content=user_input),
                dict(
                    role='user',
                    content=json.dumps(dict(path=file_path, size=file_size)),
                    name='file')
            ]
        if isinstance(user_input, str):
            user_input = [dict(role='user', content=user_input)]
        st.session_state['last_status'] = AgentStatusCode.SESSION_READY
        for agent_return in st.session_state['chatbot'].stream_chat(
                st.session_state['session_history'] + user_input):
            if agent_return.state == AgentStatusCode.PLUGIN_RETURN:
                with st.container():
                    st.session_state['ui'].render_plugin_args(
                        agent_return.actions[-1])
                    st.session_state['ui'].render_action_results(
                        agent_return.actions[-1])
            elif agent_return.state == AgentStatusCode.CODE_RETURN:
                with st.container():
                    st.session_state['ui'].render_action_results(
                        agent_return.actions[-1])
            elif (agent_return.state == AgentStatusCode.STREAM_ING
                  or agent_return.state == AgentStatusCode.CODING):
                # st.markdown(agent_return.response)
                # Clear the placeholder's current content and show the new content
                with st.container():
                    if agent_return.state != st.session_state['last_status']:
                        st.session_state['temp'] = ''
                        placeholder = st.empty()
                        st.session_state['placeholder'] = placeholder
                    if isinstance(agent_return.response, dict):
                        action = f"\n\n {agent_return.response['name']}: \n\n"
                        action_input = agent_return.response['parameters']
                        if agent_return.response[
                                'name'] == 'IPythonInterpreter':
                            action_input = action_input['command']
                        response = action + action_input
                    else:
                        response = agent_return.response
                    st.session_state['temp'] = response
                    st.session_state['placeholder'].markdown(
                        st.session_state['temp'])
            elif agent_return.state == AgentStatusCode.END:
                st.session_state['session_history'] += (
                    user_input + agent_return.inner_steps)
                agent_return = copy.deepcopy(agent_return)
                agent_return.response = st.session_state['temp']
                st.session_state['assistant'].append(
                    copy.deepcopy(agent_return))
            st.session_state['last_status'] = agent_return.state


if __name__ == '__main__':
    # Uploaded files are saved under <parent of this script's folder>/tmp_dir
    root_dir = os.path.dirname(os.path.dirname(os.path.abspath(__file__)))
    root_dir = os.path.join(root_dir, 'tmp_dir')
    os.makedirs(root_dir, exist_ok=True)
    main()
```

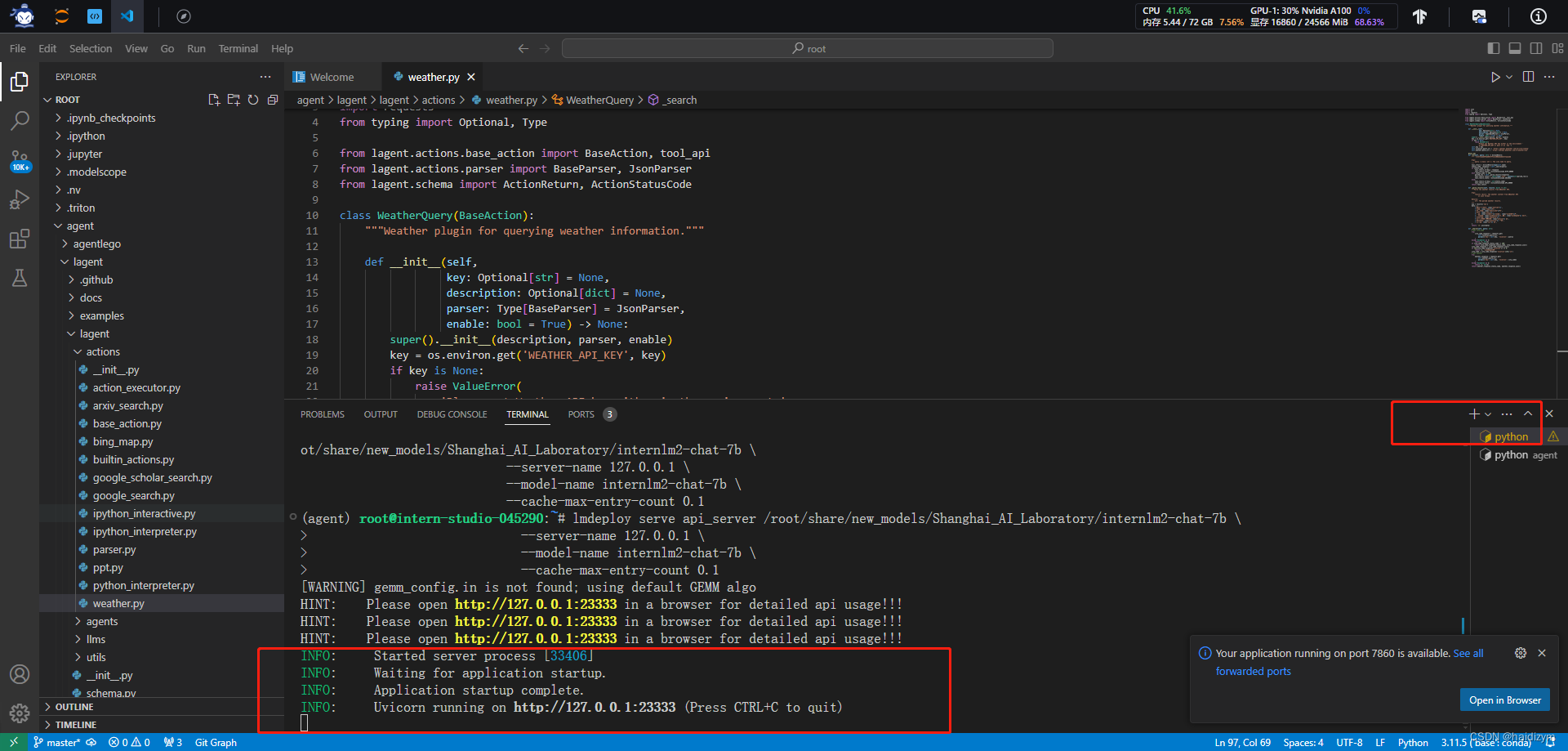

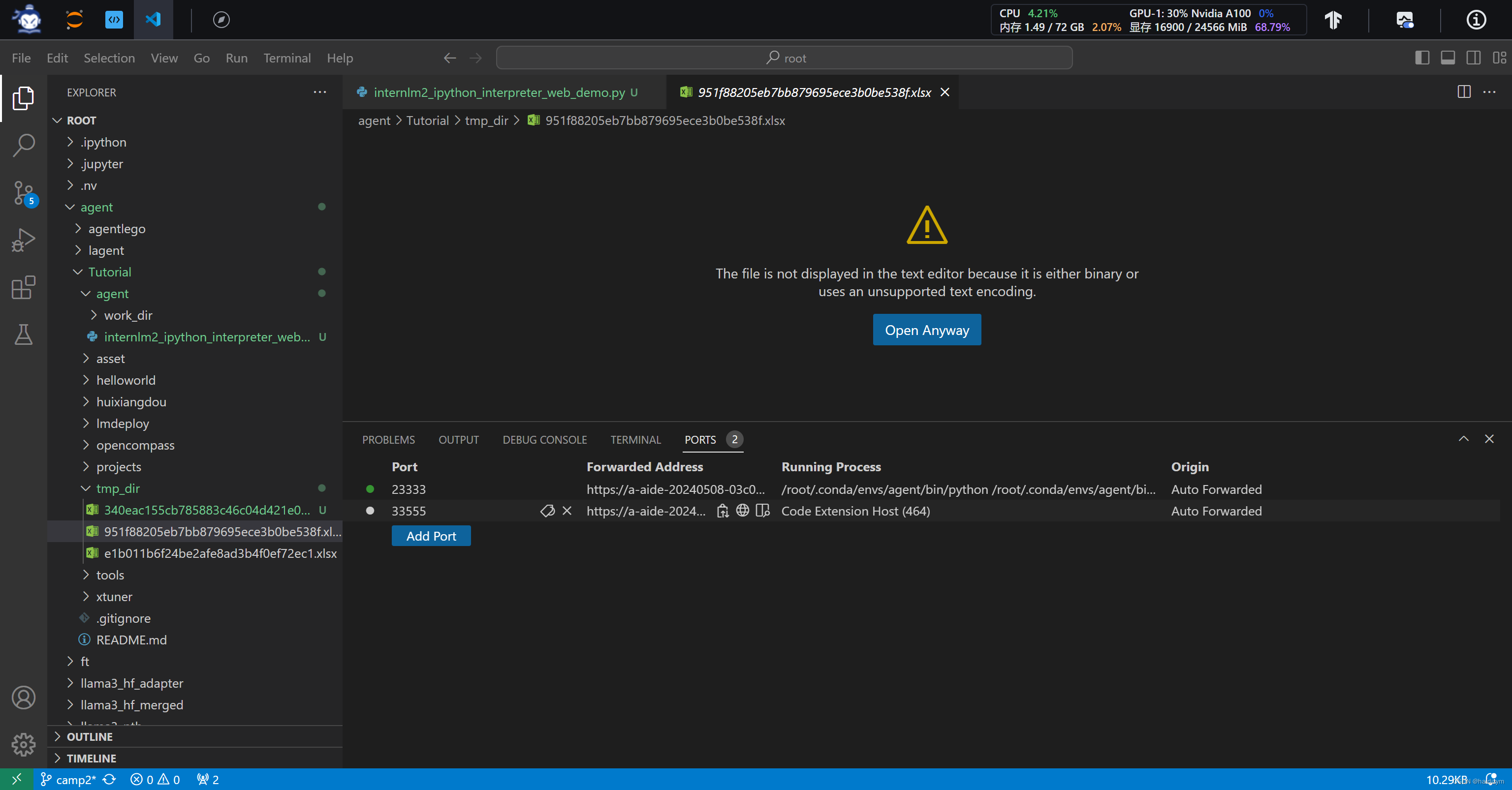

Deployment

```bash
# VS Code terminal: start the LMDeploy api_server
conda activate agent
lmdeploy serve api_server /root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-7b \
    --server-name 127.0.0.1 \
    --model-name internlm2-chat-7b \
    --cache-max-entry-count 0.1
```
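Before opening the web demo it can save time to confirm the api_server is actually answering. The sketch below assumes the server exposes the OpenAI-style `GET /v1/models` route returning `{"data": [{"id": ...}]}` on the default port 23333 (worth double-checking against the LMDeploy 0.3.0 docs) and uses only the standard library. `list_served_models` is a hypothetical helper.

```python
import json
import urllib.request
from typing import List


def list_served_models(base_url: str = "http://127.0.0.1:23333",
                       timeout: float = 5.0) -> List[str]:
    """Return the model ids reported by an OpenAI-compatible /v1/models route.

    Raises urllib.error.URLError (e.g. connection refused) if nothing is
    listening at base_url.
    """
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
        payload = json.load(resp)
    return [entry["id"] for entry in payload.get("data", [])]
```

If the server started correctly, the returned list should contain the name passed to `--model-name`, which is also what the web demo's model-name field must match.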

```bash
# In a second terminal: start the Streamlit web demo
conda activate agent
cd /root/agent/Tutorial/agent
streamlit run internlm2_ipython_interpreter_web_demo.py --server.address 127.0.0.1 --server.port 7860
```

```powershell
# Port forwarding, run in a local PowerShell window
ssh -CNg -L 7860:127.0.0.1:7860 -L 23333:127.0.0.1:23333 root@ssh.intern-ai.org.cn -p 47866
```

Usage

Browser: http://localhost:7860

Web page settings:

Model IP (模型IP): 127.0.0.1:23333

Plugin selection (插件选择): IPythonInterpreter

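It threw an error; I'll continue the experiment tomorrow.

Evening experiment

(Note: this is the model hallucinating; my data does not look like this.)

The file I uploaded is an xlsx spreadsheet. Give me a brief overview of the data.

Using the uploaded xlsx data, draw a bar chart of carbon emissions for all regions.

Using the uploaded xlsx data, find the factors that affect carbon emissions and compute their weights.

All the data I dragged in is here.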

Connection error

Connection failed with status 500, and response “connect ECONNREFUSED 0.0.0.0:80”.

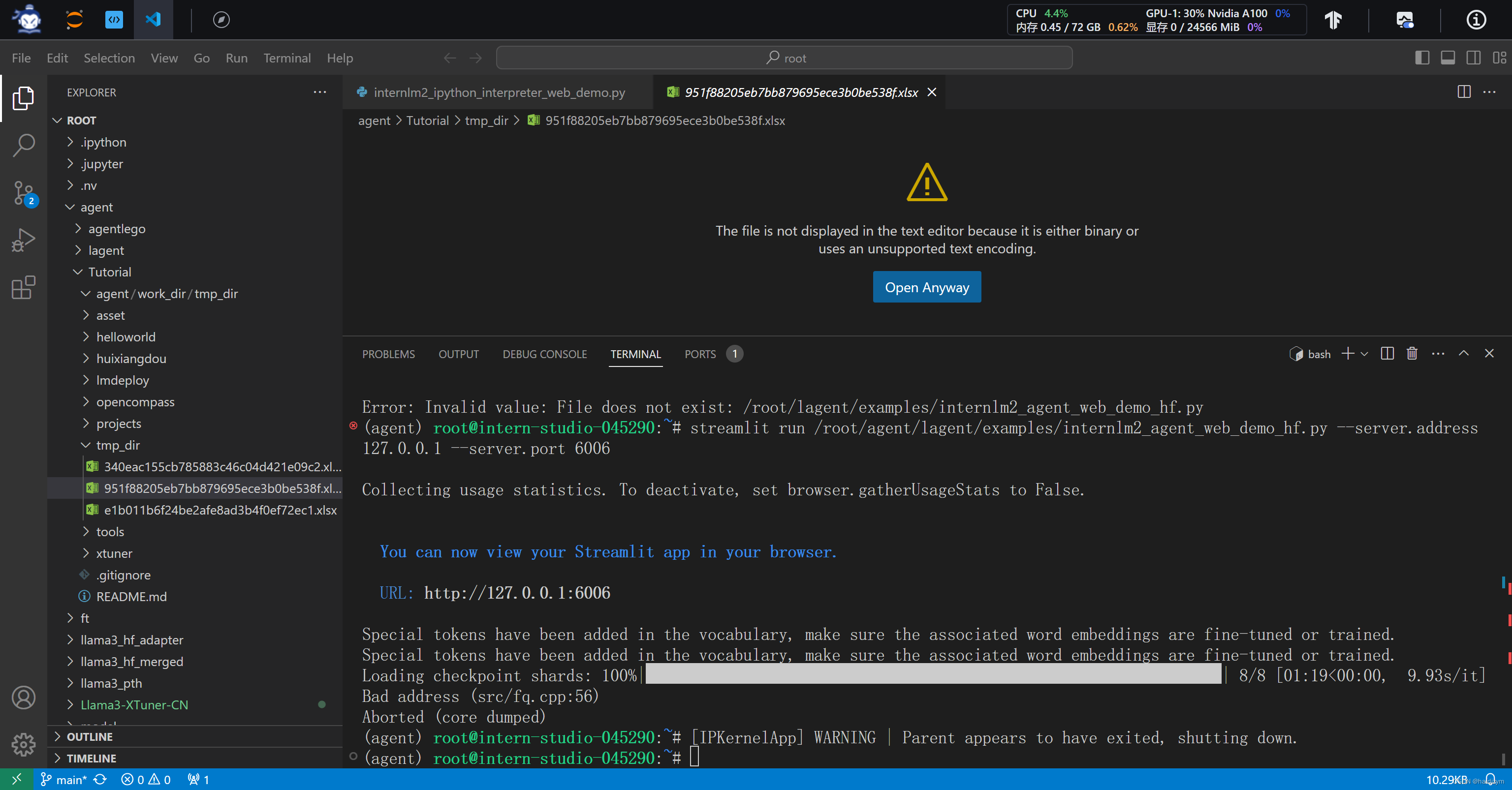

I found that the cause of the error was that port 7860 had silently disappeared.
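When the page suddenly shows `ECONNREFUSED`, checking which local ports are still accepting connections tells you whether the Streamlit app (7860), the api_server (23333), or the SSH tunnel went away. A sketch with the standard `socket` module; `port_is_open` is a hypothetical helper. Run it on the dev machine to check the services, or on your local PC to check the tunnel.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if something is accepting TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False
```

Usage: `for port in (7860, 23333): print(port, port_is_open("127.0.0.1", port))`.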

In short, every attempt hit some bizarre error; the run below took a very long time and still never finished loading.

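Summary

I think I made this more complicated than it needed to be. All you actually need to do is: deploy the model, start the web demo, turn on data analysis (数据分析), say hello, then upload the file and ask for an analysis.

"Data analysis" is really just IPythonInterpreter switched on.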

internlm2-chat-7b

```bash
# Delete the environment and start over
bash
conda remove --name agent --all
# Delete the agent folder on the cloud disk, then recreate it
mkdir -p /root/agent
studio-conda -t agent -o pytorch-2.1.2
cd /root/agent
conda activate agent
git clone https://gitee.com/internlm/lagent.git
cd lagent && git checkout 581d9fb && pip install -e . && cd ..
git clone https://gitee.com/internlm/agentlego.git
cd agentlego && git checkout 7769e0d && pip install -e . && cd ..
conda activate agent
pip install lmdeploy==0.3.0
cd /root/agent
git clone -b camp2 https://gitee.com/internlm/Tutorial.git
ln -s /root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-7b /root/model/internlm2-chat-7b
# Symlink for quick access; then, in /root/agent/lagent/examples/internlm2_agent_web_demo_hf.py,
# change the model path at around line 71 to the local one:
# value='/root/model/internlm2-chat-7b'
pip install huggingface-hub==0.17.3
pip install transformers==4.34
pip install psutil==5.9.8
pip install accelerate==0.24.1
pip install streamlit==1.32.2
pip install matplotlib==3.8.3
pip install modelscope==1.9.5
pip install sentencepiece==0.1.99
streamlit run /root/agent/lagent/examples/internlm2_agent_web_demo_hf.py --server.address 127.0.0.1 --server.port 6006
```

Reading the data clearly works, but the analysis steps keep failing so far.

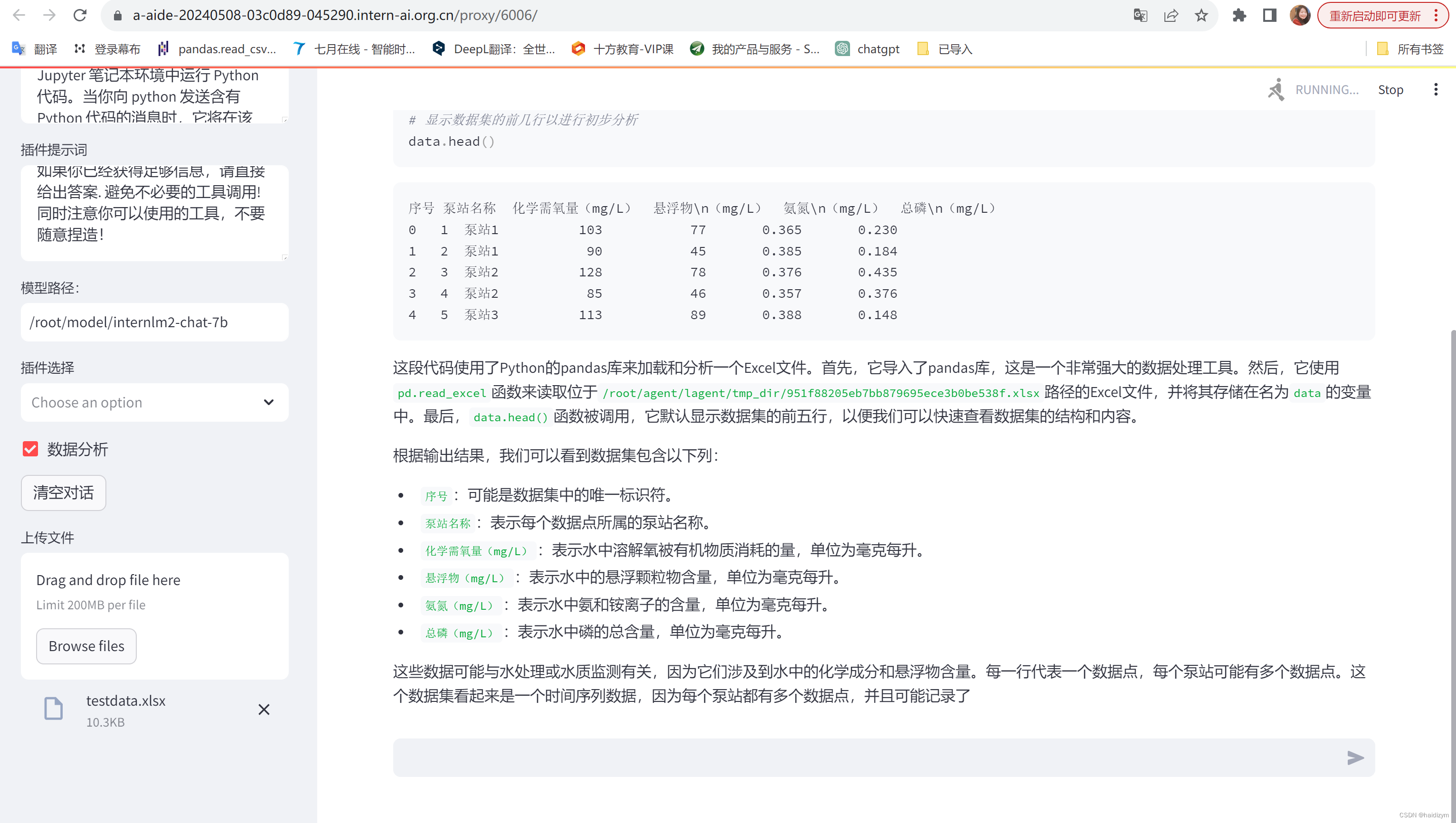

The file I uploaded is an xlsx spreadsheet. Give me a brief overview of the data. (Got a result.)

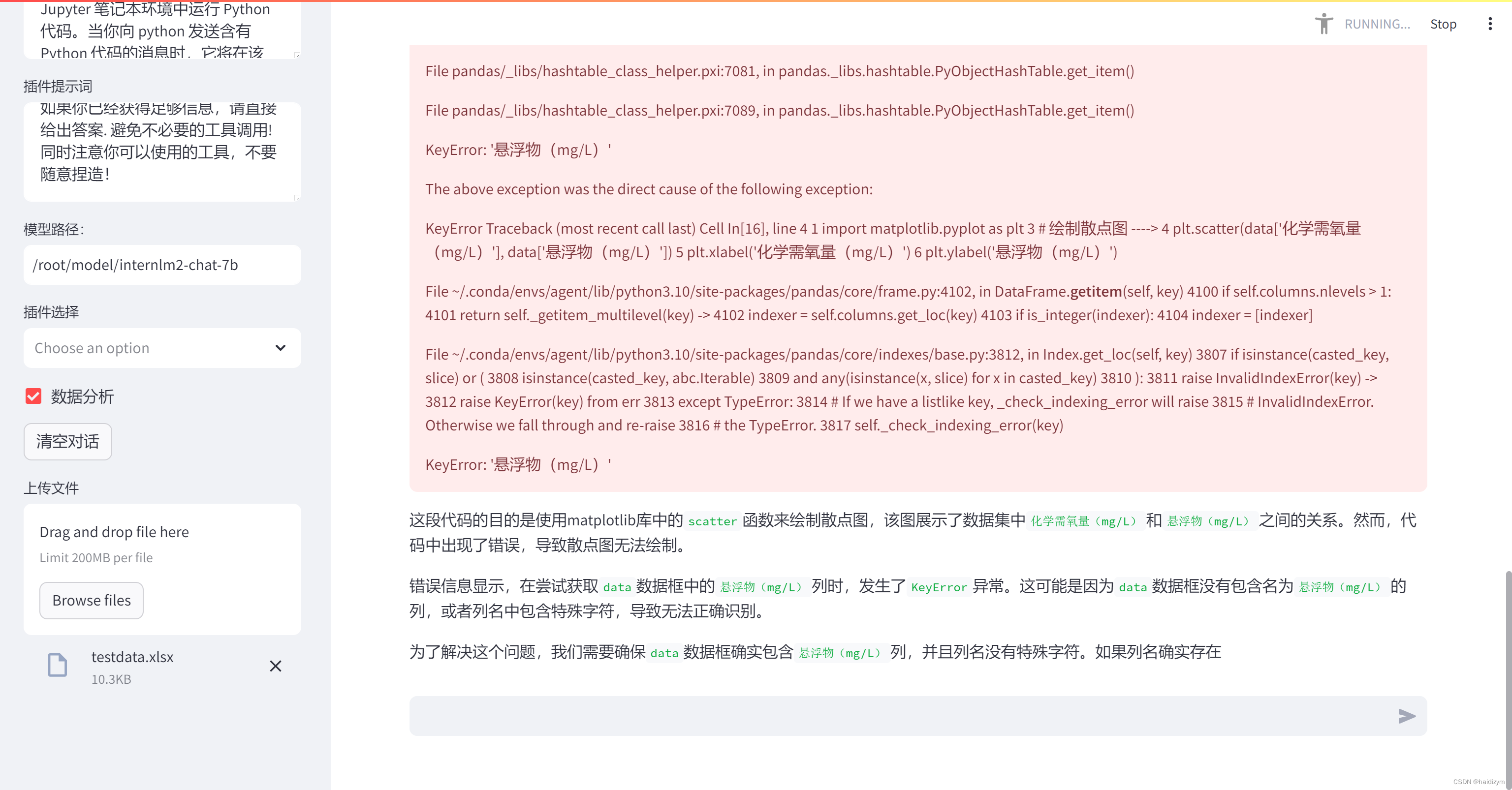

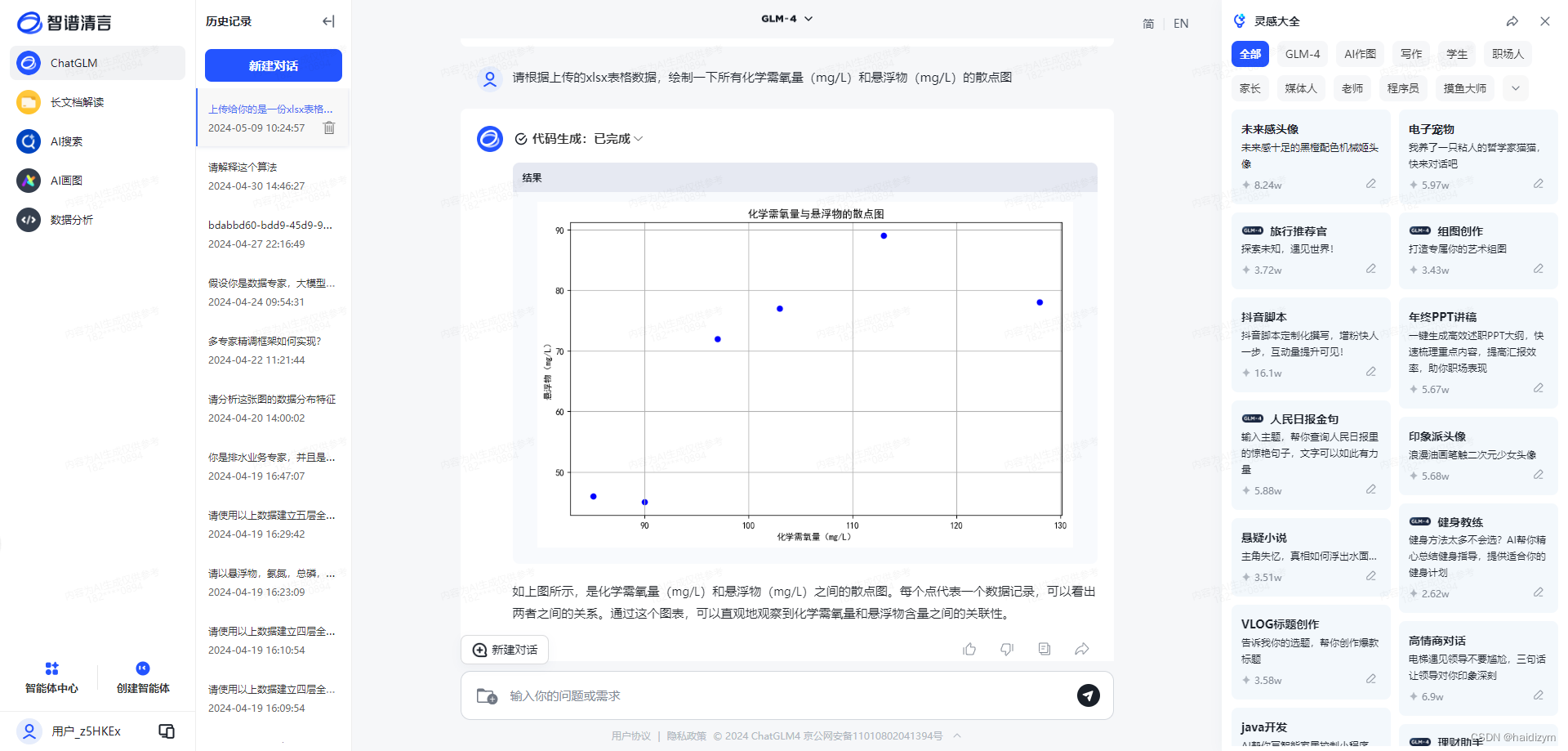

Using the uploaded xlsx data, draw a scatter plot of all chemical oxygen demand (mg/L) and suspended solids (mg/L) values. (Error.)

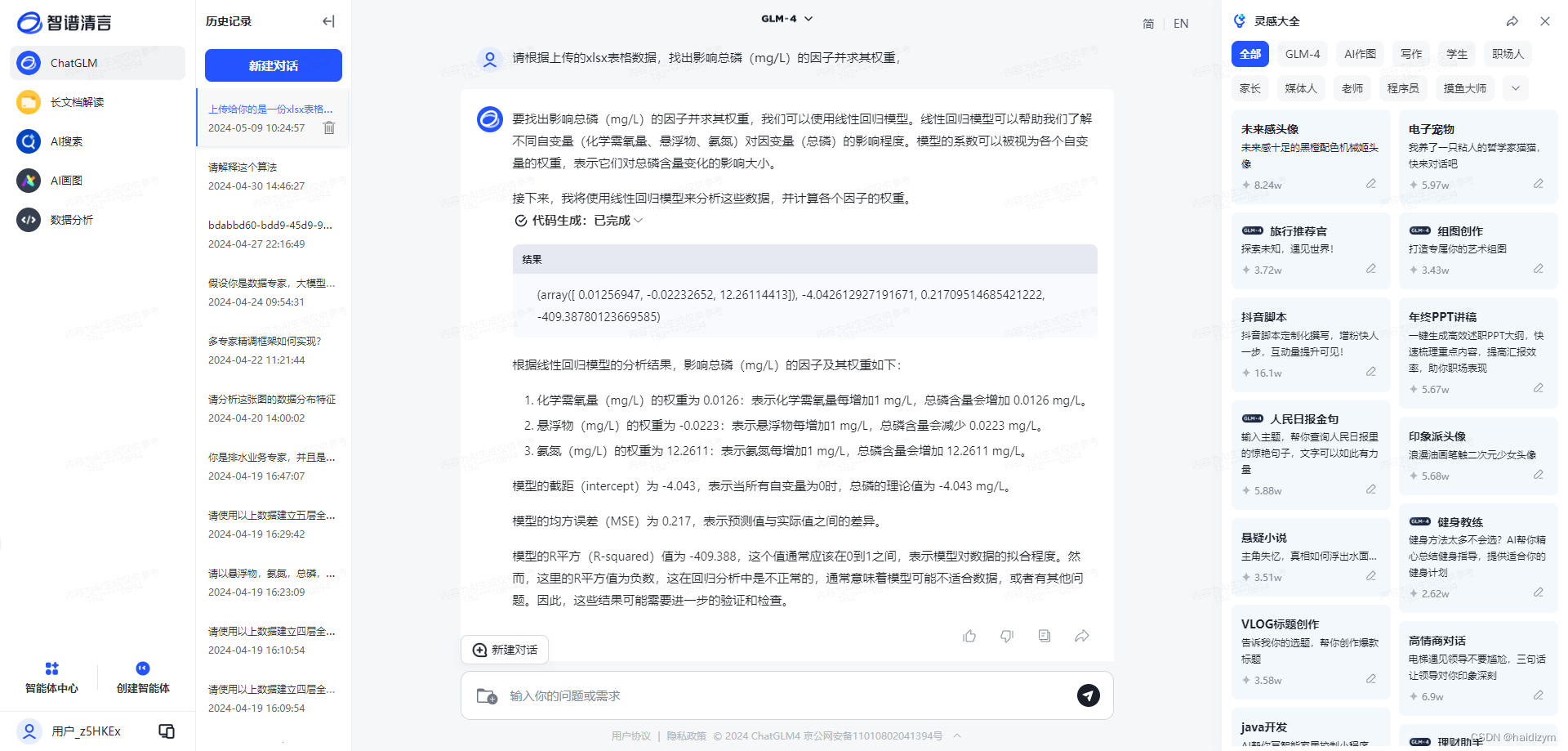

Using the uploaded xlsx data, find the factors that affect total phosphorus (mg/L) and compute their weights. (Error.)
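For the "factors and weights" question, one simple interpretation (my assumption; neither the model nor the course defines it) is to score each numeric column by the absolute Pearson correlation with the target and normalise the scores to sum to 1. This is a stdlib-only sketch; inside the interpreter the model would more likely use pandas (`pd.read_excel(...).corr()`). Correlation ignores interactions and says nothing about causation, so regression coefficients or feature importances are reasonable alternatives. `correlation_weights` is a hypothetical helper.

```python
import math
from typing import Dict, List


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = math.fsum(x) / len(x), math.fsum(y) / len(y)
    cov = math.fsum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(math.fsum((a - mx) ** 2 for a in x))
    sy = math.sqrt(math.fsum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant column")
    return cov / (sx * sy)


def correlation_weights(columns: Dict[str, List[float]], target: str) -> Dict[str, float]:
    """Weight each feature by |r| with `target`, normalised to sum to 1,
    ordered from most to least influential."""
    y = columns[target]
    scores = {name: abs(pearson(values, y))
              for name, values in columns.items() if name != target}
    total = math.fsum(scores.values())
    if total == 0:
        return {name: 0.0 for name in scores}
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return {name: score / total for name, score in ranked}
```

Usage: pass a dict mapping column names (e.g. the total-phosphorus column and the candidate factors) to lists of numbers, with the total-phosphorus column name as `target`.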

internlm2-chat-20b

```bash
# Delete the environment and start over
bash
conda remove --name agent --all
# Delete the agent folder on the cloud disk, then recreate it
mkdir -p /root/agent
studio-conda -t agent -o pytorch-2.1.2
cd /root/agent
conda activate agent
git clone https://gitee.com/internlm/lagent.git
cd lagent && git checkout 581d9fb && pip install -e . && cd ..
git clone https://gitee.com/internlm/agentlego.git
cd agentlego && git checkout 7769e0d && pip install -e . && cd ..
conda activate agent
pip install lmdeploy==0.3.0
cd /root/agent
git clone -b camp2 https://gitee.com/internlm/Tutorial.git
ln -s /root/share/new_models/Shanghai_AI_Laboratory/internlm2-chat-20b /root/model/internlm2-chat-20b
# Symlink for quick access; then, in /root/agent/lagent/examples/internlm2_agent_web_demo_hf.py,
# change the model path at around line 71 to the local one:
# value='/root/model/internlm2-chat-20b'
pip install huggingface-hub==0.17.3
pip install transformers==4.34
pip install psutil==5.9.8
pip install accelerate==0.24.1
pip install streamlit==1.32.2
pip install matplotlib==3.8.3
pip install modelscope==1.9.5
pip install sentencepiece==0.1.99
streamlit run /root/agent/lagent/examples/internlm2_agent_web_demo_hf.py --server.address 127.0.0.1 --server.port 6006
```

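Again, reading the data clearly works, but the analysis steps keep failing so far.

The file I uploaded is an xlsx spreadsheet. Give me a brief overview of the data. (Got a result.)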

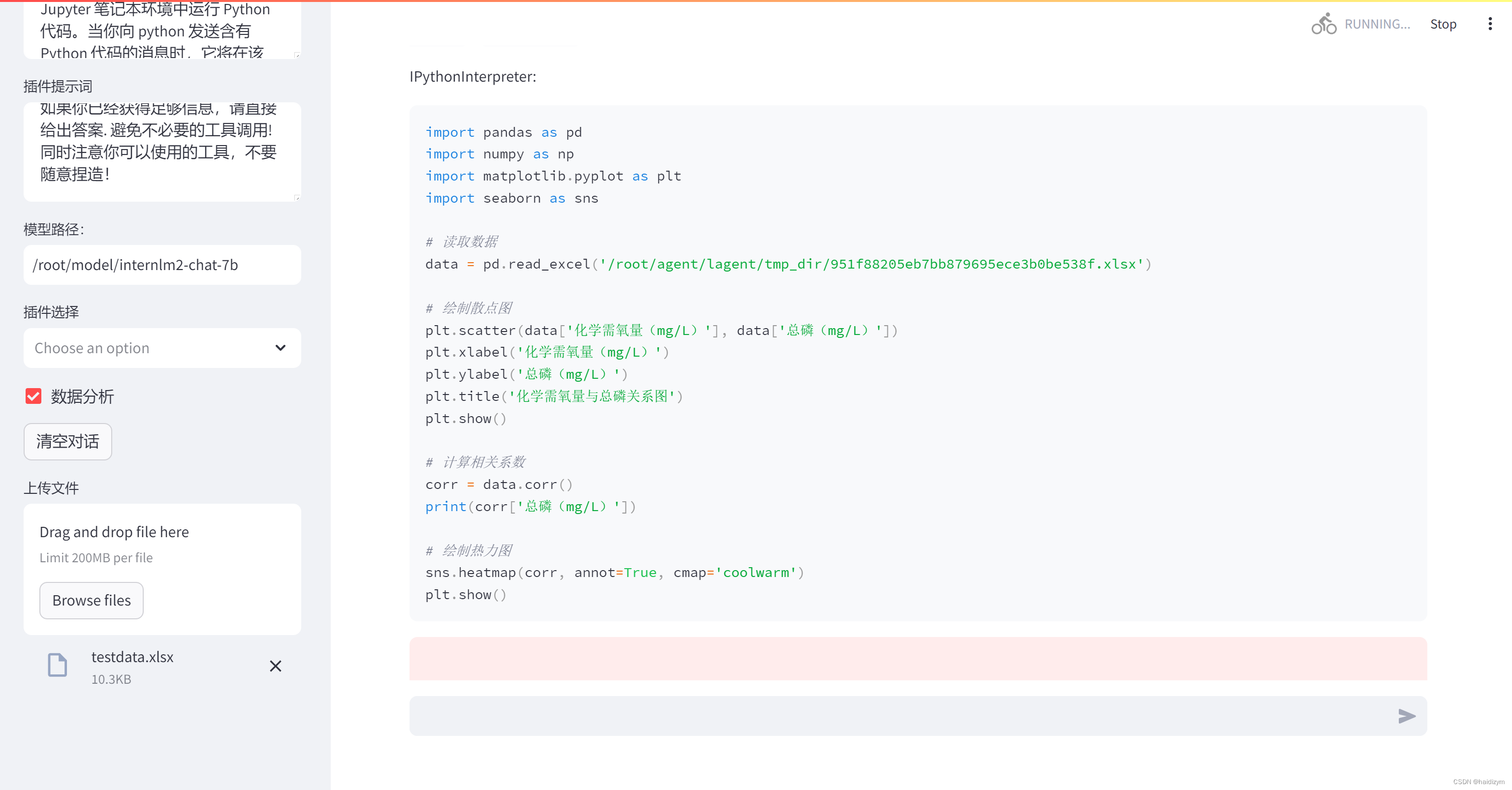

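Using the uploaded xlsx data, draw a scatter plot of all chemical oxygen demand (mg/L) and suspended solids (mg/L) values. (Still an error.)

Using the uploaded xlsx data, find the factors that affect total phosphorus (mg/L) and compute their weights. (Still an error.)

I have to grumble: compare this with how robust Zhipu Qingyan (智谱清言) is.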
