在17.12.29,VINS更新了代码加入了新的特征,包括map merge( 地图合并), pose-graph reuse(位姿图重利用), online temporal calibration function(在线时间校准函数), and support rolling shutter camera(支持卷帘快门相机)。

论文见https://arxiv.org/abs/1803.01549

1 map merge( 地图合并)

map merge 最初是MapLab中出现的特征,VINS 将这个特征功能合并过来,并使用CLA数据集来测试。除了介绍页给出的ETH CLA 数据集下载外,MapLab的数据介绍详见Github网址。

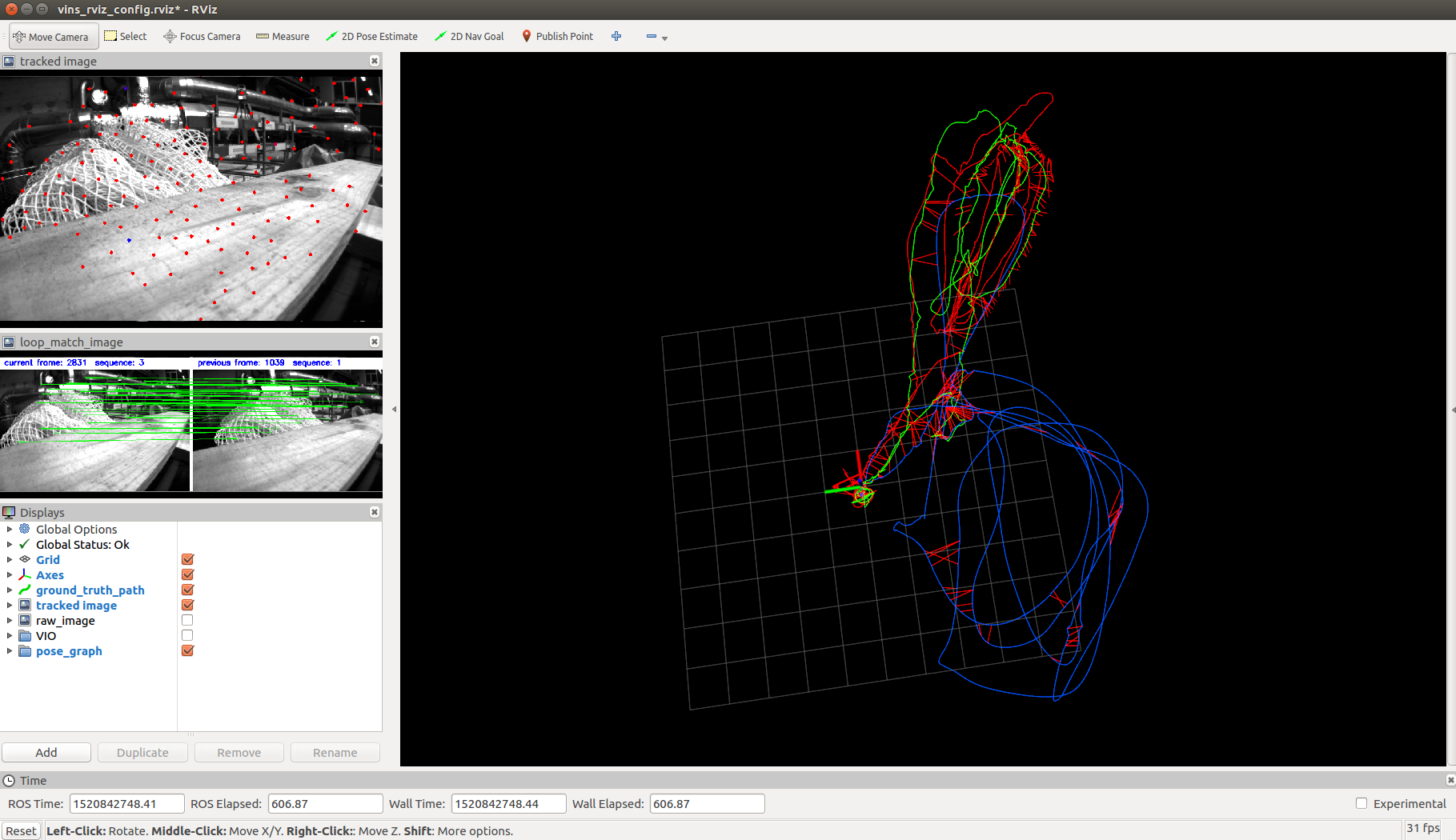

在播放完MH_01_easy.bag后,继续播放MH_02_easy.bag和MH_03_medium.bag, RVIZ可以得到如下合并的地图。左侧中间添加了回环检测匹配的图像。

图中有三个颜色不同的轨迹线绿色的是MH_01, 红色轨迹是MH_02, 蓝色轨迹是MH_03。MH_02和MH_01轨迹位置相近,因此回环检测比较多。这些地图序列拼接,初始点的拼接输出

[ WARN] [1520921503.762849522]: restart the estimator!

[ WARN] [1520921503.777266208]: image discontinue! detect a new sequence!

new sequence

sequence cnt 2 // detect unstable camera stream

if (img_msg->header.stamp.toSec() - last_image_time > 1.0 || img_msg->header.stamp.toSec() < last_image_time)

{

ROS_WARN("image discontinue! reset the feature tracker!");

first_image_flag = true;

last_image_time = 0;

pub_count = 1;

std_msgs::Bool restart_flag;

restart_flag.data = true;

pub_restart.publish(restart_flag);

return;

}void image_callback(const sensor_msgs::ImageConstPtr &image_msg)

{

//ROS_INFO("image_callback!");

if(!LOOP_CLOSURE)

return;

m_buf.lock();

image_buf.push(image_msg);

m_buf.unlock();

//printf(" image time %f \n", image_msg->header.stamp.toSec());

// detect unstable camera stream

if (last_image_time == -1)

last_image_time = image_msg->header.stamp.toSec();

else if (image_msg->header.stamp.toSec() - last_image_time > 1.0 || image_msg->header.stamp.toSec() < last_image_time)

{

ROS_WARN("image discontinue! detect a new sequence!");

new_sequence();

}

last_image_time = image_msg->header.stamp.toSec();

}从代码上看,新的序列就是检测新到达的图像时间是否已经超过上一帧图像时间1s,如果时间太长则重新初始化,重新构建地图。

2 pose-graph reuse(位姿图重利用)

首先修改对应的config.yaml,设置路径 pose_graph_save_path 后,播放需要建图的bag文件,播放完成在vins_estimator控制台键入‘s’,当前包的所有位姿图会存储下来。

代码

void PoseGraph::savePoseGraph()

{

m_keyframelist.lock();

TicToc tmp_t;

FILE *pFile;

printf("pose graph path: %s\n",POSE_GRAPH_SAVE_PATH.c_str());

printf("pose graph saving... \n");

string file_path = POSE_GRAPH_SAVE_PATH + "pose_graph.txt";

pFile = fopen (file_path.c_str(),"w");

//fprintf(pFile, "index time_stamp Tx Ty Tz Qw Qx Qy Qz loop_index loop_info\n");

list<KeyFrame*>::iterator it;

for (it = keyframelist.begin(); it != keyframelist.end(); it++)

{

std::string image_path, descriptor_path, brief_path, keypoints_path;

if (DEBUG_IMAGE)

{

image_path = POSE_GRAPH_SAVE_PATH + to_string((*it)->index) + "_image.png";

imwrite(image_path.c_str(), (*it)->image);

}

Quaterniond VIO_tmp_Q{(*it)->vio_R_w_i};

Quaterniond PG_tmp_Q{(*it)->R_w_i};

Vector3d VIO_tmp_T = (*it)->vio_T_w_i;

Vector3d PG_tmp_T = (*it)->T_w_i;

fprintf (pFile, " %d %f %f %f %f %f %f %f %f %f %f %f %f %f %f %f %d %f %f %f %f %f %f %f %f %d\n",(*it)->index, (*it)->time_stamp,

VIO_tmp_T.x(), VIO_tmp_T.y(), VIO_tmp_T.z(),

PG_tmp_T.x(), PG_tmp_T.y(), PG_tmp_T.z(),

VIO_tmp_Q.w(), VIO_tmp_Q.x(), VIO_tmp_Q.y(), VIO_tmp_Q.z(),

PG_tmp_Q.w(), PG_tmp_Q.x(), PG_tmp_Q.y(), PG_tmp_Q.z(),

(*it)->loop_index,

(*it)->loop_info(0), (*it)->loop_info(1), (*it)->loop_info(2), (*it)->loop_info(3),

(*it)->loop_info(4), (*it)->loop_info(5), (*it)->loop_info(6), (*it)->loop_info(7),

(int)(*it)->keypoints.size());

// write keypoints, brief_descriptors vector<cv::KeyPoint> keypoints vector<BRIEF::bitset> brief_descriptors;

assert((*it)->keypoints.size() == (*it)->brief_descriptors.size());

brief_path = POSE_GRAPH_SAVE_PATH + to_string((*it)->index) + "_briefdes.dat";

std::ofstream brief_file(brief_path, std::ios::binary);

keypoints_path = POSE_GRAPH_SAVE_PATH + to_string((*it)->index) + "_keypoints.txt";

FILE *keypoints_file;

keypoints_file = fopen(keypoints_path.c_str(), "w");

for (int i = 0; i < (int)(*it)->keypoints.size(); i++)

{

brief_file << (*it)->brief_descriptors[i] << endl;

fprintf(keypoints_file, "%f %f %f %f\n", (*it)->keypoints[i].pt.x, (*it)->keypoints[i].pt.y,

(*it)->keypoints_norm[i].pt.x, (*it)->keypoints_norm[i].pt.y);

}

brief_file.close();

fclose(keypoints_file);

}

fclose(pFile);

printf("save pose graph time: %f s\n", tmp_t.toc() / 1000);

m_keyframelist.unlock();

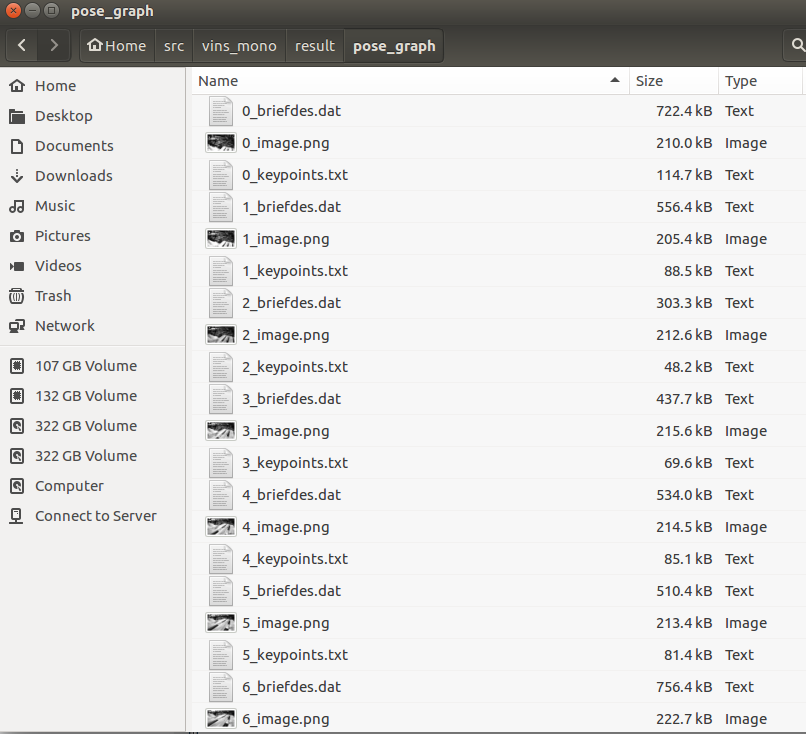

}从代码中可见,存储的是关键帧、关键帧上的特征点,特征点的brief描述子(二进制dat文件)。

将config.yaml中的load_previous_pose_graph修改为1,然后重新运行vins_estimator,系统则会加载 result/pose_graph下的位姿图, 新的序列会和之前的位姿图相重合。

Terminal输出的加载信息

load pose graph

lode pose graph from: /home/.../src/vins_mono/result/pose_graph/pose_graph.txt

pose graph loading...

load pose graph time: 33.301555 s

load pose graph finishpose_graph.txt 每一行读取

int index;

double time_stamp;

double VIO_Tx, VIO_Ty, VIO_Tz;

double PG_Tx, PG_Ty, PG_Tz;

double VIO_Qw, VIO_Qx, VIO_Qy, VIO_Qz;

double PG_Qw, PG_Qx, PG_Qy, PG_Qz;

double loop_info_0, loop_info_1, loop_info_2, loop_info_3;

double loop_info_4, loop_info_5, loop_info_6, loop_info_7;

int loop_index;

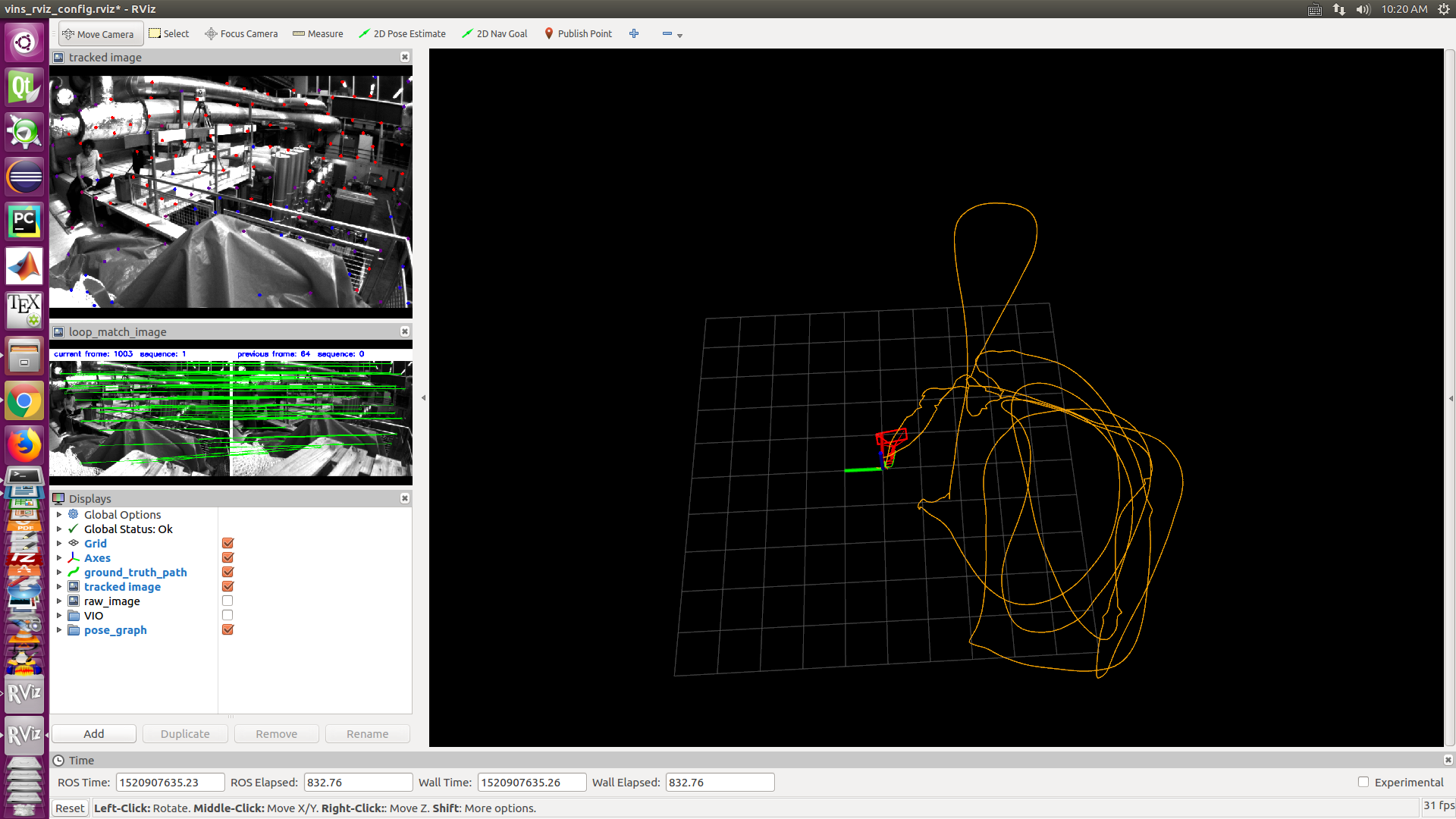

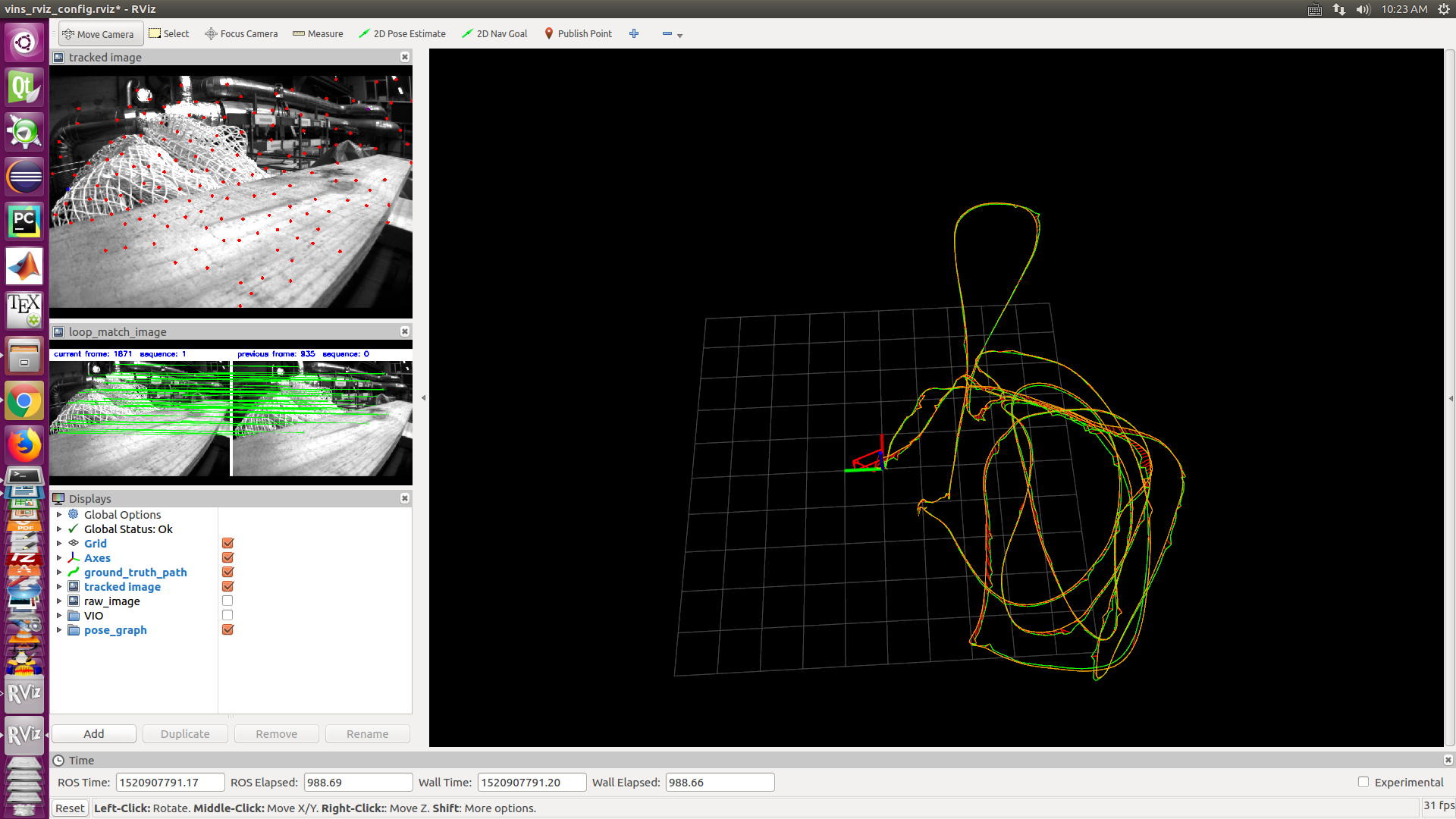

int keypoints_num;加载的位姿图是黄色轨迹线

在重新播放数据包之后与地图对准的结果如下图

save_image选项设置为0最好,节省内存。

3 online temporal calibration function(在线时间校准)

这项参数td在Estimator::optimization()里放在图像残差函数中进行非线性优化。

if (ESTIMATE_TD)

{

ProjectionTdFactor *f_td = new ProjectionTdFactor(pts_i, pts_j, it_per_id.feature_per_frame[0].velocity, it_per_frame.velocity, it_per_id.feature_per_frame[0].cur_td, it_per_frame.cur_td, it_per_id.feature_per_frame[0].uv.y(), it_per_frame.uv.y());

problem.AddResidualBlock(f_td, loss_function, para_Pose[imu_i], para_Pose[imu_j], para_Ex_Pose[0], para_Feature[feature_index], para_Td[0]);

/*

double **para = new double *[5];

para[0] = para_Pose[imu_i];

para[1] = para_Pose[imu_j];

para[2] = para_Ex_Pose[0];

para[3] = para_Feature[feature_index];

para[4] = para_Td[0];

f_td->check(para);

*/

}

else

{

ProjectionFactor *f = new ProjectionFactor(pts_i, pts_j);

problem.AddResidualBlock(f, loss_function, para_Pose[imu_i], para_Pose[imu_j], para_Ex_Pose[0], para_Feature[feature_index]);

}将特征点的速度和这个时间偏差考虑到视觉残差函数即重投影误差中

pts_i_td = pts_i - (td - td_i + TR / ROW * row_i) * velocity_i;

pts_j_td = pts_j - (td - td_j + TR / ROW * row_j) * velocity_j;

本文介绍了VINS在17.12.29更新的重要功能:地图合并(mapmerge)、位姿图重利用(pose-graphreuse)、在线时间校准(onlinetemporalcalibrationfunction)及支持卷帘快门相机(supportrollingshuttercamera)。通过这些更新,VINS提升了视觉惯性里程计的性能和灵活性。

本文介绍了VINS在17.12.29更新的重要功能:地图合并(mapmerge)、位姿图重利用(pose-graphreuse)、在线时间校准(onlinetemporalcalibrationfunction)及支持卷帘快门相机(supportrollingshuttercamera)。通过这些更新,VINS提升了视觉惯性里程计的性能和灵活性。

910

910

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?