TensorRT的安装可见我的上一篇博客

Ubuntu配置TensorRT及验证_jiugeshao的专栏-CSDN博客博主的一些基本环境配置可见之前博客非虚拟机环境下Ubuntu配置_jiugeshao的专栏-CSDN博客第一步: 准备安装AnacondaAnaconda3-5.2.0-Linux-x86_64.shhttps://repo.anaconda.com/archive/Anaconda3-5.2.0-Linux-x86_64.shhttps://blog.csdn.net/jiugeshao/article/details/123119995?spm=1001.2014.3001.5501 前面有一篇博客是用pytorch的自带模型预测了一张图片,并统计了推断时间,此篇会尝试使用tensorRT加速其推断时间。

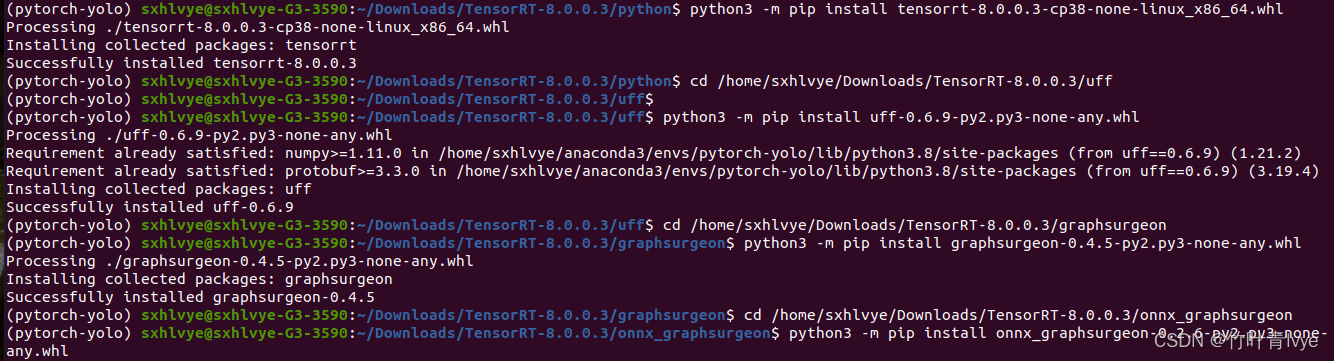

按照官网安装tensorRT的python库,这里接着前面博客中Tar方式安装tensorRT继续配置(给虚拟环境pytorch-yolo中的python版本配置,此pytorch-yolo在前面博客中所创建的),分别进入如下的文件夹,通过whl来安装库

主要还是参考官网,博主这里用的是tensorRT8.0版本,所以文档注意下对应版本,刚开始按照8.4版本上的语句实现的,发现实现的函数并没有。不得不说官网上资料真的很详尽,很多都是step-by-step的讲解,博主这里只能暂时快餐式的先过下,感受下其魅力吧,从一个加速小功能开始。

Developer Guide :: NVIDIA Deep Learning TensorRT Documentation![]() https://docs.nvidia.com/deeplearning/tensorrt/archives/tensorrt-800-ea/developer-guide/index.html主要实现过程可参考如下sample例子

https://docs.nvidia.com/deeplearning/tensorrt/archives/tensorrt-800-ea/developer-guide/index.html主要实现过程可参考如下sample例子

官网上也说了,常用的方法就是从ONNX模型去转化成tensorrt,当然你可以用tensorrt api自定义一个网络结构,这需要花费更多的经历。

完毕后,可以看到此python3.8环境下的外部库tensorrt已经安装

先介绍第一种加速方法(使用trtexec函数直接将onnx模型转化为tensorrt)

1. 将pytorch一个自带模型转化为onnx模型

import torchvision

import torch

from torch.autograd import Variable

import onnx

print(torch.__version__)

input_name = ['input']

output_name = ['output']

input = Variable(torch.randn(1, 3, 224, 224)).cuda()

model = torchvision.models.vgg16(pretrained=True).cuda()

torch.onnx.export(model, input, 'vgg16.onnx', input_names=input_name, output_names=output_name, verbose=True)

执行时,会默认下载resnet50模型,并进行转化,默认下载路径如下:

转化后获得onnx模型

2.使用TensorRT安装目录里的trtexec可执行文件来转换,将onnx模型转化为tensorRT,如下命令行语句即可执行转换

./trtexec --onnx=/home/sxhlvye/Trial/yolov3-9.5.0/vgg16.onnx --saveEngine=/home/sxhlvye/Trial/yolov3-9.5.0/vgg16.trt

过程信息如下:

&&&& RUNNING TensorRT.trtexec [TensorRT v8000] # ./trtexec --onnx=/home/sxhlvye/Trial/yolov3-9.5.0/vgg16.onnx --saveEngine=/home/sxhlvye/Trial/yolov3-9.5.0/vgg16.trt

[03/11/2022-20:50:45] [I] === Model Options ===

[03/11/2022-20:50:45] [I] Format: ONNX

[03/11/2022-20:50:45] [I] Model: /home/sxhlvye/Trial/yolov3-9.5.0/vgg16.onnx

[03/11/2022-20:50:45] [I] Output:

[03/11/2022-20:50:45] [I] === Build Options ===

[03/11/2022-20:50:45] [I] Max batch: explicit

[03/11/2022-20:50:45] [I] Workspace: 16 MiB

[03/11/2022-20:50:45] [I] minTiming: 1

[03/11/2022-20:50:45] [I] avgTiming: 8

[03/11/2022-20:50:45] [I] Precision: FP32

[03/11/2022-20:50:45] [I] Calibration:

[03/11/2022-20:50:45] [I] Refit: Disabled

[03/11/2022-20:50:45] [I] Sparsity: Disabled

[03/11/2022-20:50:45] [I] Safe mode: Disabled

[03/11/2022-20:50:45] [I] Enable serialization: Disabled

[03/11/2022-20:50:45] [I] Save engine: /home/sxhlvye/Trial/yolov3-9.5.0/vgg16.trt

[03/11/2022-20:50:45] [I] Load engine:

[03/11/2022-20:50:45] [I] NVTX verbosity: 0

[03/11/2022-20:50:45] [I] Tactic sources: Using default tactic sources

[03/11/2022-20:50:45] [I] timingCacheMode: local

[03/11/2022-20:50:45] [I] timingCacheFile:

[03/11/2022-20:50:45] [I] Input(s)s format: fp32:CHW

[03/11/2022-20:50:45] [I] Output(s)s format: fp32:CHW

[03/11/2022-20:50:45] [I] Input build shapes: model

[03/11/2022-20:50:45] [I] Input calibration shapes: model

[03/11/2022-20:50:45] [I] === System Options ===

[03/11/2022-20:50:45] [I] Device: 0

[03/11/2022-20:50:45] [I] DLACore:

[03/11/2022-20:50:45] [I] Plugins:

[03/11/2022-20:50:45] [I] === Inference Options ===

[03/11/2022-20:50:45] [I] Batch: Explicit

[03/11/2022-20:50:45] [I] Input inference shapes: model

[03/11/2022-20:50:45] [I] Iterations: 10

[03/11/2022-20:50:45] [I] Duration: 3s (+ 200ms warm up)

[03/11/2022-20:50:45] [I] Sleep time: 0ms

[03/11/2022-20:50:45] [I] Streams: 1

[03/11/2022-20:50:45] [I] ExposeDMA: Disabled

[03/11/2022-20:50:45] [I] Data transfers: Enabled

[03/11/2022-20:50:45] [I] Spin-wait: Disabled

[03/11/2022-20:50:45] [I] Multithreading: Disabled

[03/11/2022-20:50:45] [I] CUDA Graph: Disabled

[03/11/2022-20:50:45] [I] Separate profiling: Disabled

[03/11/2022-20:50:45] [I] Time Deserialize: Disabled

[03/11/2022-20:50:45] [I] Time Refit: Disabled

[03/11/2022-20:50:45] [I] Skip inference: Disabled

[03/11/2022-20:50:45] [I] Inputs:

[03/11/2022-20:50:45] [I] === Reporting Options ===

[03/11/2022-20:50:45] [I] Verbose: Disabled

[03/11/2022-20:50:45] [I] Averages: 10 inferences

[03/11/2022-20:50:45] [I] Percentile: 99

[03/11/2022-20:50:45] [I] Dump refittable layers:Disabled

[03/11/2022-20:50:45] [I] Dump output: Disabled

[03/11/2022-20:50:45] [I] Profile: Disabled

[03/11/2022-20:50:45] [I] Export timing to JSON file:

[03/11/2022-20:50:45] [I] Export output to JSON file:

[03/11/2022-20:50:45] [I] Export profile to JSON file:

[03/11/2022-20:50:45] [I]

[03/11/2022-20:50:45] [I] === Device Information ===

[03/11/2022-20:50:45] [I] Selected Device: NVIDIA GeForce GTX 1660 Ti with Max-Q Design

[03/11/2022-20:50:45] [I] Compute Capability: 7.5

[03/11/2022-20:50:45] [I] SMs: 24

[03/11/2022-20:50:45] [I] Compute Clock Rate: 1.335 GHz

[03/11/2022-20:50:45] [I] Device Global Memory: 5944 MiB

[03/11/2022-20:50:45] [I] Shared Memory per SM: 64 KiB

[03/11/2022-20:50:45] [I] Memory Bus Width: 192 bits (ECC disabled)

[03/11/2022-20:50:45] [I] Memory Clock Rate: 6.001 GHz

[03/11/2022-20:50:45] [I]

[03/11/2022-20:50:45] [I] TensorRT version: 8000

[03/11/2022-20:50:45] [I] [TRT] [MemUsageChange] Init CUDA: CPU +279, GPU +0, now: CPU 284, GPU 836 (MiB)

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:604] Reading dangerously large protocol message. If the message turns out to be larger than 2147483647 bytes, parsing will be halted for security reasons. To increase the limit (or to disable these warnings), see CodedInputStream::SetTotalBytesLimit() in google/protobuf/io/coded_stream.h.

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:81] The total number of bytes read was 553434728

[03/11/2022-20:50:45] [I] [TRT] ----------------------------------------------------------------

[03/11/2022-20:50:45] [I] [TRT] Input filename: /home/sxhlvye/Trial/yolov3-9.5.0/vgg16.onnx

[03/11/2022-20:50:45] [I] [TRT] ONNX IR version: 0.0.6

[03/11/2022-20:50:45] [I] [TRT] Opset version: 9

[03/11/2022-20:50:45] [I] [TRT] Producer name: pytorch

[03/11/2022-20:50:45] [I] [TRT] Producer version: 1.7

[03/11/2022-20:50:45] [I] [TRT] Domain:

[03/11/2022-20:50:45] [I] [TRT] Model version: 0

[03/11/2022-20:50:45] [I] [TRT] Doc string:

[03/11/2022-20:50:45] [I] [TRT] ----------------------------------------------------------------

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:604] Reading dangerously large protocol message. If the message turns out to be larger than 2147483647 bytes, parsing will be halted for security reasons. To increase the limit (or to disable these warnings), see CodedInputStream::SetTotalBytesLimit() in google/protobuf/io/coded_stream.h.

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:81] The total number of bytes read was 553434728

[03/11/2022-20:50:46] [I] [TRT] [MemUsageChange] Init CUDA: CPU +0, GPU +0, now: CPU 356, GPU 836 (MiB)

[03/11/2022-20:50:46] [I] [TRT] [MemUsageSnapshot] Builder begin: CPU 356 MiB, GPU 836 MiB

[03/11/2022-20:50:46] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.2.0 but loaded cuBLAS/cuBLAS LT 11.1.0

[03/11/2022-20:50:46] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +355, GPU +148, now: CPU 711, GPU 984 (MiB)

[03/11/2022-20:50:47] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +433, GPU +172, now: CPU 1144, GPU 1156 (MiB)

[03/11/2022-20:50:47] [W] [TRT] Detected invalid timing cache, setup a local cache instead

[03/11/2022-20:50:48] [I] [TRT] Some tactics do not have sufficient workspace memory to run. Increasing workspace size may increase performance, please check verbose output.

[03/11/2022-20:51:34] [I] [TRT] Detected 1 inputs and 1 output network tensors.

[03/11/2022-20:51:36] [I] [TRT] Total Host Persistent Memory: 14816

[03/11/2022-20:51:36] [I] [TRT] Total Device Persistent Memory: 101502976

[03/11/2022-20:51:36] [I] [TRT] Total Scratch Memory: 114688

[03/11/2022-20:51:36] [I] [TRT] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 392 MiB, GPU 1536 MiB

[03/11/2022-20:51:36] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.2.0 but loaded cuBLAS/cuBLAS LT 11.1.0

[03/11/2022-20:51:36] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +8, now: CPU 1538, GPU 2350 (MiB)

[03/11/2022-20:51:36] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +8, now: CPU 1538, GPU 2358 (MiB)

[03/11/2022-20:51:36] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 1538, GPU 2342 (MiB)

[03/11/2022-20:51:36] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 1538, GPU 2324 (MiB)

[03/11/2022-20:51:36] [I] [TRT] [MemUsageSnapshot] Builder end: CPU 1538 MiB, GPU 2324 MiB

[03/11/2022-20:51:38] [I] Engine built in 53.4659 sec.

[03/11/2022-20:51:38] [W] [TRT] TensorRT was linked against cuBLAS/cuBLAS LT 11.2.0 but loaded cuBLAS/cuBLAS LT 11.1.0

[03/11/2022-20:51:38] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +10, now: CPU 1466, GPU 2334 (MiB)

[03/11/2022-20:51:38] [I] [TRT] [MemUsageChange] Init cuDNN: CPU +0, GPU +8, now: CPU 1466, GPU 2342 (MiB)

[03/11/2022-20:51:38] [I] Created input binding for input with dimensions 1x3x224x224

[03/11/2022-20:51:38] [I] Created output binding for output with dimensions 1x1000

[03/11/2022-20:51:38] [I] Starting inference

[03/11/2022-20:51:41] [I] Warmup completed 21 queries over 200 ms

[03/11/2022-20:51:41] [I] Timing trace has 347 queries over 3.03118 s

[03/11/2022-20:51:41] [I]

[03/11/2022-20:51:41] [I] === Trace details ===

[03/11/2022-20:51:41] [I] Trace averages of 10 runs:

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.7275 ms - Host latency: 8.85547 ms (end to end 16.9159 ms, enqueue 0.456384 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.59198 ms - Host latency: 8.71927 ms (end to end 16.6184 ms, enqueue 0.445374 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.69814 ms - Host latency: 8.83329 ms (end to end 16.8985 ms, enqueue 0.476266 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.56097 ms - Host latency: 8.68812 ms (end to end 16.5703 ms, enqueue 0.466962 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.5494 ms - Host latency: 8.67427 ms (end to end 15.9973 ms, enqueue 0.437012 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.57999 ms - Host latency: 8.70994 ms (end to end 16.5995 ms, enqueue 0.471051 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.61454 ms - Host latency: 8.74319 ms (end to end 16.733 ms, enqueue 0.469867 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.59292 ms - Host latency: 8.72351 ms (end to end 16.5897 ms, enqueue 0.46236 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.84926 ms - Host latency: 8.98046 ms (end to end 17.1466 ms, enqueue 0.469708 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 9.2775 ms - Host latency: 9.39707 ms (end to end 18.1751 ms, enqueue 0.305542 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.76965 ms - Host latency: 8.90027 ms (end to end 17.0212 ms, enqueue 0.466687 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.6661 ms - Host latency: 8.79405 ms (end to end 16.7926 ms, enqueue 0.479028 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.60695 ms - Host latency: 8.73726 ms (end to end 16.6516 ms, enqueue 0.476416 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.87834 ms - Host latency: 9.01311 ms (end to end 17.3084 ms, enqueue 0.490845 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.56219 ms - Host latency: 8.69171 ms (end to end 16.6172 ms, enqueue 0.467834 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.6796 ms - Host latency: 8.81218 ms (end to end 16.8454 ms, enqueue 0.477478 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.62471 ms - Host latency: 8.75303 ms (end to end 16.6646 ms, enqueue 0.472693 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.57371 ms - Host latency: 8.70239 ms (end to end 16.6045 ms, enqueue 0.472583 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.61357 ms - Host latency: 8.74264 ms (end to end 16.6567 ms, enqueue 0.463147 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.78726 ms - Host latency: 8.91915 ms (end to end 17.0023 ms, enqueue 0.467883 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.89293 ms - Host latency: 9.02435 ms (end to end 17.2289 ms, enqueue 0.465051 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.80696 ms - Host latency: 8.92379 ms (end to end 17.1941 ms, enqueue 0.263074 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.74055 ms - Host latency: 8.86516 ms (end to end 17.0857 ms, enqueue 0.419067 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.73726 ms - Host latency: 8.86758 ms (end to end 17.3054 ms, enqueue 0.160474 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.60771 ms - Host latency: 8.72803 ms (end to end 16.7055 ms, enqueue 0.307349 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.57827 ms - Host latency: 8.6999 ms (end to end 17.0064 ms, enqueue 0.225293 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.62034 ms - Host latency: 8.74253 ms (end to end 16.7999 ms, enqueue 0.293311 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.57913 ms - Host latency: 8.70735 ms (end to end 16.6135 ms, enqueue 0.478906 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 9.07957 ms - Host latency: 9.21045 ms (end to end 17.7097 ms, enqueue 0.467505 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.5864 ms - Host latency: 8.71738 ms (end to end 16.5833 ms, enqueue 0.495044 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.70837 ms - Host latency: 8.83975 ms (end to end 16.8291 ms, enqueue 0.488721 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.80723 ms - Host latency: 8.92363 ms (end to end 17.2935 ms, enqueue 0.282153 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.78691 ms - Host latency: 8.9157 ms (end to end 17.0759 ms, enqueue 0.496094 ms)

[03/11/2022-20:51:41] [I] Average on 10 runs - GPU latency: 8.75461 ms - Host latency: 8.89763 ms (end to end 17.2238 ms, enqueue 0.359912 ms)

[03/11/2022-20:51:41] [I]

[03/11/2022-20:51:41] [I] === Performance summary ===

[03/11/2022-20:51:41] [I] Throughput: 114.477 qps

[03/11/2022-20:51:41] [I] Latency: min = 8.57364 ms, max = 11.3413 ms, mean = 8.83752 ms, median = 8.76416 ms, percentile(99%) = 10.0254 ms

[03/11/2022-20:51:41] [I] End-to-End Host Latency: min = 11.3068 ms, max = 20.374 ms, mean = 16.9197 ms, median = 16.8577 ms, percentile(99%) = 19.2314 ms

[03/11/2022-20:51:41] [I] Enqueue Time: min = 0.081665 ms, max = 0.604248 ms, mean = 0.418154 ms, median = 0.458374 ms, percentile(99%) = 0.567139 ms

[03/11/2022-20:51:41] [I] H2D Latency: min = 0.0983887 ms, max = 0.166016 ms, mean = 0.120533 ms, median = 0.120361 ms, percentile(99%) = 0.153076 ms

[03/11/2022-20:51:41] [I] GPU Compute Time: min = 8.43506 ms, max = 11.2148 ms, mean = 8.70926 ms, median = 8.64478 ms, percentile(99%) = 9.89709 ms

[03/11/2022-20:51:41] [I] D2H Latency: min = 0.00415039 ms, max = 0.0299072 ms, mean = 0.00772904 ms, median = 0.00665283 ms, percentile(99%) = 0.0236816 ms

[03/11/2022-20:51:41] [I] Total Host Walltime: 3.03118 s

[03/11/2022-20:51:41] [I] Total GPU Compute Time: 3.02211 s

[03/11/2022-20:51:41] [I] Explanations of the performance metrics are printed in the verbose logs.

[03/11/2022-20:51:41] [I]

&&&& PASSED TensorRT.trtexec [TensorRT v8000] # ./trtexec --onnx=/home/sxhlvye/Trial/yolov3-9.5.0/vgg16.onnx --saveEngine=/home/sxhlvye/Trial/yolov3-9.5.0/vgg16.trt

[03/11/2022-20:51:41] [I] [TRT] [MemUsageChange] Init cuBLAS/cuBLASLt: CPU +0, GPU +0, now: CPU 1466, GPU 2352 (MiB)

sxhlvye@sxhlvye-G3-3590:~/Downloads/TensorRT-8.0.0.3/bin$

转换后的trt文件如下:

完毕后可以运行如下python文件,博主会拿这个转化后的模型去预测同一张图片,统计了预测时间,代码里面有详细的注释,可供参考

import torch

import torchvision

from PIL import Image

from torchvision import transforms

import torchvision.models as models

import matplotlib.pyplot as plt

import time

import tensorrt as trt

import pycuda.driver as cuda

import pycuda.autoinit

import pdb

import os

import numpy as np

import cv2

# This logger is required to build an engine

TRT_LOGGER = trt.Logger()

filename = "2008_002682.jpg"

engine_file_path = "vgg16.trt"

class HostDeviceMem(object):

def __init__(self, host_mem, device_mem):

"""Within this context, host_mom means the cpu memory and device means the GPU memory

"""

self.host = host_mem

self.device = device_mem

def __str__(self):

return "Host:\n" + str(self.host) + "\nDevice:\n" + str(self.device)

def __repr__(self):

return self.__str__()

def allocate_buffers(engine):

inputs = []

outputs = []

bindings = []

stream = cuda.Stream()

for binding in engine:

size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size

dtype = trt.nptype(engine.get_binding_dtype(binding))

# Allocate host and device buffers

host_mem = cuda.pagelocked_empty(size, dtype)

device_mem = cuda.mem_alloc(host_mem.nbytes)

# Append the device buffer to device bindings.

bindings.append(int(device_mem))

# Append to the appropriate list.

if engine.binding_is_input(binding):

inputs.append(HostDeviceMem(host_mem, device_mem))

else:

outputs.append(HostDeviceMem(host_mem, device_mem))

return inputs, outputs, bindings, stream

def do_inference(context, bindings, inputs, outputs, stream, batch_size=1):

# Transfer data from CPU to the GPU.

[cuda.memcpy_htod_async(inp.device, inp.host, stream) for inp in inputs]

# Run inference.

t_model = time.perf_counter()

context.execute_async(batch_size=batch_size, bindings=bindings, stream_handle=stream.handle)

print(f'only one line cost:{time.perf_counter() - t_model:.8f}s')

# Transfer predictions back from the GPU.

[cuda.memcpy_dtoh_async(out.host, out.device, stream) for out in outputs]

# Synchronize the stream

stream.synchronize()

# Return only the host outputs.

return [out.host for out in outputs]

print("Reading engine from file {}".format(engine_file_path))

with open(engine_file_path, "rb") as f, trt.Runtime(TRT_LOGGER) as runtime:

engine = runtime.deserialize_cuda_engine(f.read())

#create the context for this engine

context = engine.create_execution_context()

#allocate buffers for input and output

inputs, outputs, bindings, stream = allocate_buffers(engine) # input, output: host # bindings

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

transform = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(), normalize]

)

#读图

img = Image.open("2008_002682.jpg")

print(img.size)

# 对图像进行归一化

img_p = transform(img)

print(img_p.shape)

# 增加一个维度

img_normalize = torch.unsqueeze(img_p, 0)

print(img_normalize.shape)

#output

shape_of_output = (1, 1000)

#covert to numpy

img_normalize_np = img_normalize.cpu().data.numpy()

# Load data to the buffer

inputs[0].host = img_normalize_np

print(inputs[0].host.shape)

#Do Inference

t_model = time.perf_counter()

trt_outputs = do_inference(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream) # numpy data

print(f'do inference cost:{time.perf_counter() - t_model:.8f}s')

print(len(trt_outputs))

#转化为torch tensor

out = torch.from_numpy(trt_outputs[0])

print(out.shape)

#拓展一个纬度

out = torch.unsqueeze(out, 0)

print(out.shape)

with open('imagenet_classes.txt') as f:

classes = [line.strip() for line in f.readlines()]

_, indices = torch.sort(out, descending=True)

percentage = torch.nn.functional.softmax(out, dim=1)[0] * 100

prediction = [[classes[idx], percentage[idx].item()] for idx in indices[0][:5]]

print(prediction)

score = []

label = []

for i in prediction:

print('Prediciton-> {:<25} Accuracy-> ({:.2f}%)'.format(i[0][:], i[1]))

score.append(i[1])

label.append(i[0])

# 把结果show出来,一些用法和matlab很相似

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 8))

fig.sca(ax1)

ax1.imshow(img)

plt.xticks([])

plt.yticks([])

barlist = ax2.bar(range(5), [i for i in score])

barlist[0].set_color('g')

plt.sca(ax2)

plt.ylim([0, 20])

plt.xticks(range(5),

# [idx2labels[str(i)][1] for i in pred_label_idx],

[i for i in label],

rotation='45')

# fig.subplots_adjust(bottom=0.2)

plt.rcParams['font.size'] = '16' # 设置字体大小

plt.rcParams['axes.unicode_minus'] = False # 解决坐标轴负数的负号显示问题

plt.show()

运行结果如下:

/home/sxhlvye/anaconda3/envs/pytorch-yolo/bin/python /home/sxhlvye/Trial/yolov3-9.5.0/tensorrt_pytorch1.py

Reading engine from file vgg16.trt

[TensorRT] WARNING: TensorRT was linked against cuBLAS/cuBLAS LT 11.2.0 but loaded cuBLAS/cuBLAS LT 11.1.0

[TensorRT] WARNING: TensorRT was linked against cuBLAS/cuBLAS LT 11.2.0 but loaded cuBLAS/cuBLAS LT 11.1.0

(375, 500)

torch.Size([3, 224, 224])

torch.Size([1, 3, 224, 224])

(1, 3, 224, 224)

only one line cost:0.00034539s

do inference cost:0.00914562s

1

torch.Size([1000])

torch.Size([1, 1000])

[['tiger cat', 15.441015243530273], ['tabby', 11.573622703552246], ['Egyptian cat', 9.12546443939209], ['Eskimo dog', 7.142945289611816], ['wallaby', 4.557239532470703]]

Prediciton-> tiger cat Accuracy-> (15.44%)

Prediciton-> tabby Accuracy-> (11.57%)

Prediciton-> Egyptian cat Accuracy-> (9.13%)

Prediciton-> Eskimo dog Accuracy-> (7.14%)

Prediciton-> wallaby Accuracy-> (4.56%)

free(): invalid pointer

Process finished with exit code 134 (interrupted by signal 6: SIGABRT)

对比我之前博客中的预测结果

对比看到,将pytorch模型转化为onnx模型,再转化为tensorRT模型,再去预测相同的图片,精度并没有什么损失,但速度大大提升了。

接下来讲第二种加速方法(通过tensorrt API来实现)

在前面提到的onnx_resnet50.py文件基础上做更改,这里需要用到一个common.py文件,其路径可在tensorrt安装目录下找到,将common.py放到和下面实验的py文件同一个目录下

ONNX转换为tensorrt的API实现过程见如下build_engine_onnx函数

import os

# This sample uses an ONNX ResNet50 Model to create a TensorRT Inference Engine

import random

import sys

import numpy as np

# This import causes pycuda to automatically manage CUDA context creation and cleanup.

import pycuda.autoinit

import tensorrt as trt

from PIL import Image

sys.path.insert(1, os.path.join(sys.path[0], ".."))

import common

import torch

import torchvision

from torchvision import transforms

model_file = "vgg16.onnx"

import time

import matplotlib.pyplot as plt

# You can set the logger severity higher to suppress messages (or lower to display more messages).

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

# The Onnx path is used for Onnx models.

def build_engine_onnx(model_file):

builder = trt.Builder(TRT_LOGGER)

network = builder.create_network(common.EXPLICIT_BATCH)

config = builder.create_builder_config()

parser = trt.OnnxParser(network, TRT_LOGGER)

config.max_workspace_size = common.GiB(1)

# Load the Onnx model and parse it in order to populate the TensorRT network.

with open(model_file, 'rb') as model:

if not parser.parse(model.read()):

print ('ERROR: Failed to parse the ONNX file.')

for error in range(parser.num_errors):

print (parser.get_error(error))

return None

return builder.build_engine(network, config)

def main():

# Build a TensorRT engine.

engine = build_engine_onnx(model_file)

# Inference is the same regardless of which parser is used to build the engine, since the model architecture is the same.

# Allocate buffers and create a CUDA stream.

inputs, outputs, bindings, stream = common.allocate_buffers(engine)

# Contexts are used to perform inference.

context = engine.create_execution_context()

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

transform = transforms.Compose([

transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(), normalize]

)

# 读图

img = Image.open("2008_002682.jpg")

print(img.size)

# 对图像进行归一化

img_p = transform(img)

print(img_p.shape)

# 增加一个维度

img_normalize = torch.unsqueeze(img_p, 0)

print(img_normalize.shape)

# output

shape_of_output = (1, 1000)

# covert to numpy

img_normalize_np = img_normalize.cpu().data.numpy()

# Load data to the buffer

inputs[0].host = img_normalize_np

print(inputs[0].host.shape)

t_model = time.perf_counter()

trt_outputs = common.do_inference_v2(context, bindings=bindings, inputs=inputs, outputs=outputs, stream=stream)

print(f'do inference cost:{time.perf_counter() - t_model:.8f}s')

print(len(trt_outputs))

# 转化为torch tensor

out = torch.from_numpy(trt_outputs[0])

print(out.shape)

# 拓展一个纬度

out = torch.unsqueeze(out, 0)

print(out.shape)

with open('imagenet_classes.txt') as f:

classes = [line.strip() for line in f.readlines()]

_, indices = torch.sort(out, descending=True)

percentage = torch.nn.functional.softmax(out, dim=1)[0] * 100

prediction = [[classes[idx], percentage[idx].item()] for idx in indices[0][:5]]

print(prediction)

score = []

label = []

for i in prediction:

print('Prediciton-> {:<25} Accuracy-> ({:.2f}%)'.format(i[0][:], i[1]))

score.append(i[1])

label.append(i[0])

# 把结果show出来,一些用法和matlab很相似

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 8))

fig.sca(ax1)

ax1.imshow(img)

plt.xticks([])

plt.yticks([])

barlist = ax2.bar(range(5), [i for i in score])

barlist[0].set_color('g')

plt.sca(ax2)

plt.ylim([0, 20])

plt.xticks(range(5),

# [idx2labels[str(i)][1] for i in pred_label_idx],

[i for i in label],

rotation='45')

# fig.subplots_adjust(bottom=0.2)

plt.rcParams['font.size'] = '16' # 设置字体大小

plt.rcParams['axes.unicode_minus'] = False # 解决坐标轴负数的负号显示问题

plt.show()

if __name__ == '__main__':

main()运行后结果如下:

/home/sxhlvye/anaconda3/envs/pytorch-yolo/bin/python /home/sxhlvye/Trial/yolov3-9.5.0/tensorrt_pytorch2.py

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:604] Reading dangerously large protocol message. If the message turns out to be larger than 2147483647 bytes, parsing will be halted for security reasons. To increase the limit (or to disable these warnings), see CodedInputStream::SetTotalBytesLimit() in google/protobuf/io/coded_stream.h.

[libprotobuf WARNING google/protobuf/io/coded_stream.cc:81] The total number of bytes read was 553434728

/home/sxhlvye/Trial/yolov3-9.5.0/tensorrt_pytorch2.py:88: DeprecationWarning: Use build_serialized_network instead.

return builder.build_engine(network, config)

[TensorRT] WARNING: Detected invalid timing cache, setup a local cache instead

(375, 500)

torch.Size([3, 224, 224])

torch.Size([1, 3, 224, 224])

(1, 3, 224, 224)

do inference cost:0.00797156s

1

torch.Size([1000])

torch.Size([1, 1000])

[['tiger cat', 15.441014289855957], ['tabby', 11.573627471923828], ['Egyptian cat', 9.125463485717773], ['Eskimo dog', 7.142951488494873], ['wallaby', 4.557241439819336]]

Prediciton-> tiger cat Accuracy-> (15.44%)

Prediciton-> tabby Accuracy-> (11.57%)

Prediciton-> Egyptian cat Accuracy-> (9.13%)

Prediciton-> Eskimo dog Accuracy-> (7.14%)

Prediciton-> wallaby Accuracy-> (4.56%)

Process finished with exit code 0

速度和效果和第一种方法基本一致。

c++ 加速pytorch模型方法可见我的博客(可以提前把pytorch转换到的ONNX模型准备好)

题外话:

很多博客都是围绕官网介绍开展,所以官网的介绍应该是主看的,同时再去参考一些博客

Quick Start Guide :: NVIDIA Deep Learning TensorRT Documentation![]() https://docs.nvidia.com/deeplearning/tensorrt/quick-start-guide/index.html#export-from-tf官网对不同深度学习框架的加速度做了描述及方法介绍,可参考

https://docs.nvidia.com/deeplearning/tensorrt/quick-start-guide/index.html#export-from-tf官网对不同深度学习框架的加速度做了描述及方法介绍,可参考

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?