I. Introduction

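- I'm currently working on visualizing object detection results and want to write this down before I forget, so I'll skip the Anaconda, CUDA and cuDNN setup for now and cover it later.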

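- My goal was to visualize PointPillars results, but its built-in local web viewer is hard to modify or port. I read online that PointRCNN's visualization approach could serve as a reference, so I put in extra hours setting up the PointRCNN environment and finally got the visualization working. Next, I plan to study the PointRCNN visualization code and adapt it.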

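- This post first covers the kittiviewer visualization that ships with PointPillars and then walks through the PointRCNN visualization method step by step. (Of course I'm standing on the shoulders of giants: other bloggers have already worked this out, but in practice I found their explanations unclear, so I wanted to write a tutorial for complete beginners (▽).)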

- Finally, if anything is unclear, leave a comment or send me a message. This took ages to write up ┭┮﹏┭┮

II. PointPillars visualization (the kittiviewer method)

2.1 Set up the PointPillars environment

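There are two versions of the code: one by the PointPillars authors (link 1) and one by the SECOND author (link 2). The visualization part of link 1 is incomplete and will break at runtime; how to deal with that is covered later.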

- Machine: Ubuntu 18.04 + GTX 1660 Ti + CUDA 10.2 + cuDNN 8.0.5 + Python 3.7 + PyTorch 1.9.0

- PointPillars authors' GitHub repository (link 1): https://github.com/nutonomy/second.pytorch

- SECOND author's GitHub repository (link 2): https://github.com/traveller59/second.pytorch

2.1.1 Download the source code

This post uses the PointPillars authors' code:

git clone https://github.com/nutonomy/second.pytorch.git

2.1.2 Create the Anaconda environment and install PyTorch

Name the environment as you like. I skip the step of installing Anaconda on Ubuntu here.

conda create -n pointpillars python=3.7

conda activate pointpillars

pip3 install torch torchvision torchaudio -i http://pypi.douban.com/simple --trusted-host pypi.douban.com

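This downloads PyTorch through a mirror to speed things up. I prefer not to change pip's global source, so I pass the mirror only for the command that needs it.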

You can of course pin a specific PyTorch version; the install command for each version is on the official PyTorch website.

2.1.3 Set up PointPillars following the README on GitHub

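Most blog posts are just translations of the GitHub README, so it is more reliable to follow the authors' README directly, from setting up the environment to running the code. Only consult other people's posts when you hit an error.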

The process boils down to the following steps:

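·Download the dataset (tens of GB as I recall; check online whether someone shares it on Baidu Netdisk)

·Install the dependencies (only a few pip commands; whenever you run anything, make sure the right virtual environment is activated)

·Prepare the data directory structure (this part is tedious, but you just run the given commands and they generate some files)

·Edit the config file: mostly file paths, chiefly the dataset paths; the GitHub README explains this clearly

·Train the network: the code usually also provides pretrained weights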

Below I focus on the problems I ran into while configuring and running the code, which is the most important part.

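(1) Downloading SparseConvNet

Instead of the git clone command from the official instructions, use the one below. Alternatively, fork the repository first and import it into Gitee; downloading from there is much faster (a handy trick).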

git clone https://github.com/facebookresearch/SparseConvNet.git

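Note: the installation failed for me at first, and the cause was the CUDA path set in ~/.bashrc at the time. Add the following line to ~/.bashrc (then run source ~/.bashrc or open a new terminal):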

export CUDA_HOME=/usr/local/cuda-10.2

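(2) Add the repository to PYTHONPATH in ~/.bashrc (adjust the path to where you cloned it); otherwise Python reports that some packages cannot be found: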

export PYTHONPATH=$PYTHONPATH:/home/lixushi/pointpillars/second.pytorch
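Both of the ~/.bashrc lines above only take effect in shells started after the change. A quick way to confirm what the current environment actually sees is a small check like the one below. It is a minimal sketch: `check_env` is a hypothetical helper, and treating "CUDA_HOME contains bin/nvcc" as the sign of a usable CUDA install is my own assumption.

```python
import os
from pathlib import Path


def check_env(repo_root):
    """Return a list of problems with CUDA_HOME / PYTHONPATH (empty list means OK)."""
    problems = []

    cuda_home = os.environ.get("CUDA_HOME")
    if not cuda_home:
        problems.append("CUDA_HOME is not set")
    elif not (Path(cuda_home) / "bin" / "nvcc").exists():
        problems.append(f"no bin/nvcc under CUDA_HOME={cuda_home}")

    # PYTHONPATH holds directories separated by os.pathsep (':' on Linux);
    # `$PYTHONPATH:/path` with an empty PYTHONPATH leaves an empty first entry.
    entries = [os.path.normpath(p)
               for p in os.environ.get("PYTHONPATH", "").split(os.pathsep) if p]
    if os.path.normpath(repo_root) not in entries:
        problems.append(f"{repo_root} is not on PYTHONPATH")
    return problems


if __name__ == "__main__":
    # Path taken from this post; replace it with your own clone location.
    for msg in check_env("/home/lixushi/pointpillars/second.pytorch"):
        print("WARNING:", msg)
```

Run it inside the activated environment; an empty output means both variables look fine.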

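(3) When running the training code I got the error below. It is about loading the saved checkpoint weights (state_dict), and it depends on the PyTorch version: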

File "/home/lixushi/pointpillars/second.pytorch/torchplus/train/checkpoint.py", line 146, in try_restore_latest_checkpoints
    restore(latest_ckpt, model)
File "/home/lixushi/pointpillars/second.pytorch/torchplus/train/checkpoint.py", line 117, in restore
    model.load_state_dict(torch.load(ckpt_path))
File "/home/lixushi/.conda/envs/pytorch/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1407, in load_state_dict
    self.__class__.__name__, "\n\t".join(error_msgs)))
RuntimeError: Error(s) in loading state_dict for VoxelNet

Around line 117 of ~/pointpillars/second.pytorch/torchplus/train/checkpoint.py, change

model.load_state_dict(torch.load(ckpt_path))

to:

model.load_state_dict(torch.load(ckpt_path), strict=False)
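The second argument of load_state_dict is `strict`. With `strict=False`, PyTorch silently skips checkpoint entries whose names do not match the model (a shape mismatch is still reported as an error), so any skipped layer keeps its initial weights. It is worth checking what was skipped. In recent PyTorch versions, the call itself returns an object with `missing_keys` and `unexpected_keys`; the plain-Python sketch below shows the same comparison. `diff_state_dict_keys` is a hypothetical helper, not part of the SECOND code.

```python
def diff_state_dict_keys(model_keys, ckpt_keys):
    """Report what strict=False would silently skip.

    model_keys: parameter names the model expects (model.state_dict().keys())
    ckpt_keys:  names stored in the checkpoint (torch.load(ckpt_path).keys())
    """
    model_keys, ckpt_keys = set(model_keys), set(ckpt_keys)
    missing = sorted(model_keys - ckpt_keys)     # keep their initial values
    unexpected = sorted(ckpt_keys - model_keys)  # ignored entirely
    return missing, unexpected


# With PyTorch itself, the same information comes back from the call:
#   result = model.load_state_dict(torch.load(ckpt_path), strict=False)
#   print(result.missing_keys, result.unexpected_keys)
```

If both lists are empty, `strict=False` changed nothing and the original error lies elsewhere; long lists mean the checkpoint does not really fit this model.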

(4) The following error says the result must be a bool tensor, but the code produces a byte (uint8) tensor:

File "/home/lixushi/pointpillars/second.pytorch/second/pytorch/models/voxelnet.py", line 911, in predict
    opp_labels = (box_preds[..., -1] > 0) ^ dir_labels.byte()
RuntimeError: result type Byte can't be cast to the desired output type Bool

Around line 911 of ~/pointpillars/second.pytorch/second/pytorch/models/voxelnet.py, change

opp_labels = (box_preds[..., -1] > 0) ^ dir_labels.byte()

to:

opp_labels = (box_preds[..., -1] > 0) ^ dir_labels.bool()
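What this line computes: `opp_labels` is True for every box where the sign of the predicted rotation angle disagrees with the direction classifier. As far as I can tell, SECOND's predict() then adds π to the angle of those boxes (via torch.where). With `.bool()`, both sides of `^` are boolean, so the result is boolean too. The plain-Python sketch below reproduces that logic for lists instead of tensors; `fix_heading` is a hypothetical name.

```python
import math


def fix_heading(yaws, dir_labels):
    """Plain-Python version of the direction fix applied after the XOR line.

    yaws:       predicted rotation angles (radians), one per box
    dir_labels: direction-classifier outputs (0 or 1), one per box
    """
    fixed = []
    for yaw, d in zip(yaws, dir_labels):
        # Same test as `(box_preds[..., -1] > 0) ^ dir_labels.bool()`:
        # True when the sign of the angle disagrees with the classifier.
        opposite = (yaw > 0) ^ bool(d)
        fixed.append(yaw + math.pi if opposite else yaw)
    return fixed
```

For example, an angle of 0.5 with direction label 0 is flagged and becomes 0.5 + π, while 0.5 with label 1 is left alone.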

(5) The following error means the installed NumPy version is too new:

'numpy.float64' object cannot be interpreted as an integer

The fix is to downgrade NumPy:

pip install -U numpy==1.17.0 -i https://pypi.tuna.tsinghua.edu.cn/simple
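Background on the error: newer NumPy releases (from around 1.18) stopped accepting float values where an integer count is required, and `np.linspace`'s `num` argument is a well-known example. Code that computes such a count with float arithmetic therefore breaks after an upgrade. The same class of error can be reproduced in plain Python with `range`. Instead of downgrading, you can wrap the offending value in `int()` at the line named in the traceback. The function below is a hypothetical illustration, not code from the repository.

```python
def bin_count(start, stop, step):
    """Hypothetical example of a count computed with float arithmetic."""
    count = (stop - start) / step + 1  # a float such as 101.0, not an int
    # list(range(count)) would raise:
    #   TypeError: 'float' object cannot be interpreted as an integer
    # An explicit cast makes the value a valid integer argument again.
    return int(round(count))


edges = [i * 0.01 for i in range(bin_count(0.0, 1.0, 0.01))]
```

Downgrading NumPy as shown above is the quicker fix when the offending line sits deep inside third-party code.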

2.2 Run PointPillars

2.2.1 Run training

cd ~/pointpillars/second.pytorch/second

python ./pytorch/train.py train --config_path=./configs/pointpillars/car/xyres_16.proto --model_dir=/path/to/model_dir

==/path/to/model_dir can be any directory you like==, though it is best placed under the same path as the dataset.

2.2.2 Run evaluation

# If you only want a single .pkl result file, this command is enough

python pytorch/train.py evaluate --config_path=configs/pointpillars/car/xyres_16.proto --model_dir=/path/to/model_dir

# To get one .txt file per frame instead, add one more argument

python pytorch/train.py evaluate --config_path=configs/pointpillars/car/xyres_16.proto --model_dir=/path/to/model_dir --pickle_result=False

The results are written to model_dir/eval_results/step_xxx/, where /path/to/model_dir is the same path you used for training.
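After several evaluations, eval_results holds one step_xxx folder per checkpoint, and the newest one is usually what you want for detPath in section 2.3. A minimal sketch for finding it is below. `latest_eval_dir` is a hypothetical helper, and the `step_<number>` naming is taken from the folder name above. The step numbers are compared as integers, so step_100 correctly beats step_99.

```python
import re
from pathlib import Path


def latest_eval_dir(model_dir):
    """Return the eval_results/step_<N> folder with the largest N, or None."""
    eval_root = Path(model_dir) / "eval_results"
    if not eval_root.is_dir():
        return None
    best_step, best_dir = -1, None
    for d in eval_root.iterdir():
        m = re.fullmatch(r"step_(\d+)", d.name)
        if d.is_dir() and m and int(m.group(1)) > best_step:
            best_step, best_dir = int(m.group(1)), d
    return best_dir
```

For example, `latest_eval_dir("/path/to/model_dir")` returns the Path of the most recent step folder.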

2.3 kittiviewer web visualization (local web page)

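This part is based on another blogger's CSDN post. I broke the process down in more detail and added fixes for the problems I ran into when actually running it.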

(1) Replace the contents of index.html in ~/pointpillars/second.pytorch/second/kittiviewer/frontend with the code below:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<meta name="viewport" content="width=device-width, initial-scale=1, shrink-to-fit=no" />

<!-- Bootstrap CSS -->

<link rel="stylesheet" href="https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0/css/bootstrap.min.css" integrity="sha384-Gn5384xqQ1aoWXA+058RXPxPg6fy4IWvTNh0E263XmFcJlSAwiGgFAW/dAiS6JXm"

crossorigin="anonymous" />

<link rel="stylesheet" type="text/css" href="css/main.css" media="screen" />

<title>SECOND Kitti Viewer</title>

<script src="https://code.jquery.com/jquery-3.3.1.min.js" integrity="sha384-tsQFqpEReu7ZLhBV2VZlAu7zcOV+rXbYlF2cqB8txI/8aZajjp4Bqd+V6D5IgvKT"

crossorigin="anonymous"></script>

<script>window.jQuery || document.write('<script src="js/libs/jquery-3.3.1.min.js">\x3C/script>');</script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/popper.js/1.12.9/umd/popper.min.js" integrity="sha384-ApNbgh9B+Y1QKtv3Rn7W3mgPxhU9K/ScQsAP7hUibX39j7fakFPskvXusvfa0b4Q"

crossorigin="anonymous"></script>

<script src="https://maxcdn.bootstrapcdn.com/bootstrap/4.0.0/js/bootstrap.min.js" integrity="sha384-JZR6Spejh4U02d8jOt6vLEHfe/JQGiRRSQQxSfFWpi1MquVdAyjUar5+76PVCmYl"

crossorigin="anonymous"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/three.js/98/three.js" integrity="sha384-BMOR44t8p+yL7NVevEC9pO2y26JB6lv1mKFhit2zvzWq5jZo6RpIcTdg6MUxKQRP"

crossorigin="anonymous"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/dat-gui/0.7.3/dat.gui.js" integrity="sha384-S7m8CpjFEEXwHzEDZ8XdeFSO0rLzdK8x1e7pLuc2hx5Xr23XnaWvb0p/kIez3mxy"

crossorigin="anonymous"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/mathjs/5.3.0/math.js" integrity="sha384-YILGCrKtrx9ucVIp2iNy85HZcWysS6pXa+tAW+Jbgxoi3TJJSCrg0fJG5C0AJzJO"

crossorigin="anonymous"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/stats.js/r16/Stats.min.js" integrity="sha384-JIMCcCVEupQBEb9e6o4OAccqY004Vm2uYnOzOlJCyyy/Tl3fVxU/nq2gUimNdloP"

crossorigin="anonymous"></script>

<link href="https://cdn.jsdelivr.net/npm/jspanel4@4.3.0/dist/jspanel.css" rel="stylesheet" />

<!-- jsPanel JavaScript -->

<script src="https://cdn.jsdelivr.net/npm/jspanel4@4.3.0/dist/jspanel.js" integrity="sha384-2F3fGv9PeamJMmqDMSollVdfQqFsLLru6E0ed+AOHOq3tB2IyUDSyllqrQJqx2vp"

crossorigin="anonymous"></script>

<script src="https://cdn.jsdelivr.net/npm/js-cookie@2/src/js.cookie.min.js" integrity="sha384-R4v5onSW2o3yhiPYUPN9ssGd9OmZdGRIdLmZgGst3fp0NhJDxYSSErv0YzWdeC/l" crossorigin="anonymous"></script>

<script src="https://cdn.jsdelivr.net/npm/js-cookie@2/src/js.cookie.min.js" integrity="sha384-8kKqrPvADL3/ZYJGtcqeG+fveXaJxTaI7LF1/a9QGZpkRVZSurP858KKE6rtnLLs"

crossorigin="anonymous"></script>

<script>window.Cookies || document.write('<script src="js/libs/js.cookie.min.js">\x3C/script>');</script>

<script src="js/MapControls.js"></script>

<script src="js/SimplePlot.js"></script>

<script src="js/Toast.js"></script>

<script src="js/KittiViewer.js"></script>

<script src="js/renderers/CSS2DRenderer.js"></script>

<script src="js/shaders/ConvolutionShader.js"></script>

<script src="js/shaders/CopyShader.js"></script>

<script src="js/shaders/FilmShader.js"></script>

<script src="js/shaders/FocusShader.js"></script>

<script src="js/postprocessing/EffectComposer.js"></script>

<script src="js/postprocessing/MaskPass.js"></script>

<script src="js/postprocessing/RenderPass.js"></script>

<script src="js/postprocessing/BloomPass.js"></script>

<script src="js/postprocessing/ShaderPass.js"></script>

<script src="js/postprocessing/FilmPass.js"></script>

<script src="js/shaders/LuminosityHighPassShader.js"></script>

<script src="js/postprocessing/UnrealBloomPass.js"></script>

</head>

<body>

<div class="row" id="bottompanel">

<div class="btn prev"><</div>

<input class="btn imgidx" type="text" pattern="[0-9]" placeholder="Image Index" value='1' />

<div class="btn next">></div>

</div>

<ul class="toasts"></ul>

<script type="text/javascript">

var CookiesKitti = Cookies.noConflict();

var scene2 = new THREE.Scene();

var toasts = $(".toasts")[0];

var logger = new Toast(toasts);

var scene = new THREE.Scene();

scene.fog = new THREE.FogExp2(0x000000, 0.01);

var camera = new THREE.PerspectiveCamera(

75,

window.innerWidth / window.innerHeight,

0.1,

300

);

camera.position.set(-10, 0, 5);

camera.lookAt(new THREE.Vector3(0, 0, 0));

camera.up.set(0, 0, 1);

var camhelper = new THREE.CameraHelper(camera);

scene.add(camhelper);

var renderer = new THREE.WebGLRenderer({

antialias: true

});

renderer.setPixelRatio(window.devicePixelRatio);

renderer.setSize(window.innerWidth, window.innerHeight);

var axesHelper = new THREE.AxesHelper(2);

scene.add(axesHelper);

// var stats = new Stats();

// stats.showPanel( 1 ); // 0: fps, 1: ms, 2: mb, 3+: custom

var panelCanvas = document.createElement("canvas");

var camerabev = new THREE.OrthographicCamera(80, -80, -50, 50, 1, 500);

camerabev.up.set(-1, 0, 1);

const wBev = 400;

const hBev = 700;

var panelBev = jsPanel.create({

id: "panelBev",

theme: "primary",

contentSize: {

width: function () {

return Math.min(wBev, window.innerWidth * 0.5);

},

height: function () {

return Math.min(hBev, window.innerHeight * 0.8);

}

},

position: "left-bottom 0 0",

animateIn: "jsPanelFadeIn",

headerTitle: "Bird's eye view",

content: function (panel) {

let container = $(this.content)[0];

panelCanvas.width = wBev;

panelCanvas.height = hBev;

camerabev.position.set(0, 0, 50);

camerabev.lookAt(0, 0, 0);

container.appendChild(panelCanvas);

},

onwindowresize: true

});

const wImage = 1242 / 3;

const hImage = 375 / 3;

var panelImageCanvas = document.createElement("canvas");

var panelImage = jsPanel.create({

id: "panelImage",

theme: "primary",

contentSize: {

width: function () {

return Math.min(wImage, window.innerWidth * 0.8);

},

height: function () {

return Math.min(hImage, window.innerHeight * 0.8);

}

},

position: "center-top 0 0",

animateIn: "jsPanelFadeIn",

headerTitle: "Image 2",

content: function (panel) {

let container = $(this.content)[0];

panelImageCanvas.width = wImage;

panelImageCanvas.height = hImage;

container.appendChild(panelImageCanvas);

},

onwindowresize: true

});

var rendererBev = new THREE.WebGLRenderer({

antialias: true

});

// renderer.setClearColor(0xeeeeee);

rendererBev.setPixelRatio(window.devicePixelRatio);

rendererBev.setSize(wBev, hBev);

var MAX_POINTS = 150000;

var vertices = new Float32Array(MAX_POINTS * 3);

var pointParicle = scatter(

vertices,

3.5,

0x9099ba,

"textures/sprites/disc.png"

);

pointParicle.geometry.setDrawRange(0, 0);

scene.add(pointParicle);

var viewer = new KittiViewer(pointParicle, logger, panelImageCanvas);

viewer.readCookies();

var panelResize = function (event) {

if (event.detail === 'panelBev') {

let container = $(panelBev.content)[0];

let w = container.clientWidth;

let h = container.clientHeight;

panelCanvas.width = w;

panelCanvas.height = h;

rendererBev.setSize(w, h);

let aspect = w / h;

camerabev.left = 0.5 * aspect * 100;

camerabev.right = -0.5 * aspect * 100;

camerabev.top = -0.5 * 100;

camerabev.bottom = 0.5 * 100;

camerabev.updateProjectionMatrix();

} else if (event.detail === 'panelImage') {

let container = $(panelImage.content)[0];

let w = container.clientWidth;

let h = container.clientHeight;

panelImageCanvas.width = w;

panelImageCanvas.height = h;

viewer.drawImage();

}

}

document.addEventListener("jspanelresize", function (event) {

return panelResize(event);

});

panelResize({

detail: 'panelBev'

});

labelRenderer = new THREE.CSS2DRenderer();

labelRenderer.setSize(window.innerWidth, window.innerHeight);

labelRenderer.domElement.style.position = "absolute";

labelRenderer.domElement.style.top = 0;

document.body.appendChild(labelRenderer.domElement);

document.body.appendChild(renderer.domElement);

// document.addEventListener('mouseup', onDocumentMouseUp, false);

window.addEventListener("resize", onWindowResize, false);

var controls = new THREE.MapControls(camera, labelRenderer.domElement);

// controls = new THREE.MapControls(camera);

//controls.addEventListener( 'change', render ); // call this only in static scenes (i.e., if there is no animation loop)

controls.enableDamping = true; // an animation loop is required when either damping or auto-rotation are enabled

controls.dampingFactor = 0.25;

controls.screenSpacePanning = false;

controls.minDistance = 1;

controls.maxDistance = 30;

controls.maxPolarAngle = Math.PI / 2;

controls.target = new THREE.Vector3(0, 0, 1);

var controlsBev = new THREE.MapControls(camerabev, panelCanvas);

controlsBev.enableDamping = true; // an animation loop is required when either damping or auto-rotation are enabled

controlsBev.dampingFactor = 0.25;

controlsBev.screenSpacePanning = false;

controlsBev.minDistance = 1;

controlsBev.maxDistance = 30;

controlsBev.maxPolarAngle = Math.PI / 2;

// controlsBev.target = new THREE.Vector3(0, 0, 1);

controlsBev.enableRotate = false;

controlsBev.panSpeed = 0.5;

var renderModel = new THREE.RenderPass(scene, camera);

var effectBloom = new THREE.BloomPass(0.75);

var effectFilm = new THREE.FilmPass(0.5, 0.5, 1448, false);

var bloomPass = new THREE.UnrealBloomPass(

new THREE.Vector2(window.innerWidth, window.innerHeight),

1.5,

0.4,

0.85

);

var postParams = {

exposure: 1,

bloomStrength: 1.5,

bloomThreshold: 0,

bloomRadius: 0

};

bloomPass.renderToScreen = true;

bloomPass.threshold = postParams.bloomThreshold;

bloomPass.strength = postParams.bloomStrength;

bloomPass.radius = postParams.bloomRadius;

effectFocus = new THREE.ShaderPass(THREE.FocusShader);

effectFocus.uniforms["screenWidth"].value = window.innerWidth;

effectFocus.uniforms["screenHeight"].value = window.innerHeight;

effectFocus.renderToScreen = true;

composer = new THREE.EffectComposer(renderer);

composer.addPass(renderModel);

// composer.addPass(effectBloom);

// composer.addPass(effectFilm);

// composer.addPass(effectFocus);

composer.addPass(bloomPass);

/*

var renderBevModel = new THREE.RenderPass(scene, camerabev);

composerbev = new THREE.EffectComposer(rendererBev);

composerbev.addPass(renderBevModel);

// composer.addPass(effectBloom);

// composerbev.addPass(effectFilm);

// composerbev.addPass(effectFocus);

composerbev.addPass(bloomPass);*/

// pointParicle.position.needsUpdate = true; // required after the first render

var gui = new dat.GUI();

var coreParams = {

backgroundcolor: "#000000"

};

var cameraGui = gui.addFolder("core");

cameraGui.add(camera, "fov");

cameraGui

.addColor(coreParams, "backgroundcolor")

.onChange(function (value) {

renderer.setClearColor(value, 1);

});

cameraGui.open();

var kittiGui = gui.addFolder("kitti controllers");

kittiGui.add(viewer, "backend").onChange(function (value) {

viewer.backend = value;

CookiesKitti.set('kittiviewer_backend', value);

});

kittiGui.add(viewer, "rootPath").onChange(function (value) {

viewer.rootPath = value;

CookiesKitti.set('kittiviewer_rootPath', value);

});

kittiGui.add(viewer, "infoPath").onChange(function (value) {

viewer.infoPath = value;

CookiesKitti.set('kittiviewer_infoPath', value);

});

kittiGui.add(viewer, "load");

kittiGui.add(viewer, "detPath").onChange(function (value) {

viewer.detPath = value;

CookiesKitti.set('kittiviewer_detPath', value);

});

kittiGui.add(viewer, "loadDet");

kittiGui.add(viewer, "drawDet");

kittiGui.add(viewer, "checkpointPath").onChange(function (value) {

viewer.checkpointPath = value;

CookiesKitti.set('kittiviewer_checkpointPath', value);

});

kittiGui.add(viewer, "configPath").onChange(function (value) {

viewer.configPath = value;

CookiesKitti.set('kittiviewer_configPath', value);

});

kittiGui.add(viewer, "buildNet");

/*

kittiGui.add(viewer, "imageIndex").listen();

var fixbugParam = {

changeImageIndex: viewer.imageIndex

};

kittiGui.add(fixbugParam, "changeImageIndex").onChange(function(val) {

viewer.imageIndex = val;

});

kittiGui.add(viewer, "plot");

kittiGui.add(viewer, "next");

kittiGui.add(viewer, "prev");

*/

kittiGui.add(viewer, "inference");

kittiGui.open();

var postGui = gui.addFolder("effect");

postGui.add(postParams, "exposure", 0.1, 2).onChange(function (value) {

renderer.toneMappingExposure = Math.pow(value, 4.0);

});

postGui

.add(postParams, "bloomThreshold", 0.0, 1.0)

.onChange(function (value) {

bloomPass.threshold = Number(value);

});

postGui

.add(postParams, "bloomStrength", 0.0, 3.0)

.onChange(function (value) {

bloomPass.strength = Number(value);

});

postGui

.add(postParams, "bloomRadius", 0.0, 1.0)

.step(0.01)

.onChange(function (value) {

bloomPass.radius = Number(value);

});

// postGui.open();

var param = {

color: pointParicle.material.color.getHex()

};

var pointGui = gui.addFolder("points");

pointGui.add(pointParicle.material, "size", 1, 10);

pointGui.addColor(param, "color").onChange(function (val) {

pointParicle.material.color.setHex(val);

});

pointGui.open();

var boxesGui = gui.addFolder("boxes");

boxesGui.addColor(viewer, "gtBoxColor");

boxesGui.addColor(viewer, "dtBoxColor");

boxesGui.addColor(viewer, "gtLabelColor");

boxesGui.addColor(viewer, "dtLabelColor");

boxesGui.open();

// renderer.autoClear = false;

var animate = function () {

requestAnimationFrame(animate);

// renderer.clear();

renderer.render(scene, camera);

labelRenderer.render(scene, camera);

// renderer.render( scene, camera );

composer.render(0.01);

rendererBev.render(scene, camerabev);

// composerbev.render(0.01);

let context = panelCanvas.getContext("2d");

context.drawImage(rendererBev.domElement, 0, 0);

// stats.update();

};

animate();

function onDocumentMouseUp(event) {

event.preventDefault();

console.log(camera.position);

}

function onWindowResize() {

camera.aspect = window.innerWidth / window.innerHeight;

camera.updateProjectionMatrix();

camhelper.update();

renderer.setSize(window.innerWidth, window.innerHeight);

labelRenderer.setSize(window.innerWidth, window.innerHeight);

}

$(".imgidx")[0].onkeypress = function (e) {

if (!e) e = window.event;

var keyCode = e.keyCode || e.which;

if (keyCode == '13') {

// Enter pressed

viewer.imageIndex = parseInt($(".imgidx")[0].value, 10);

viewer.plot();

return true;

}

}

$(".prev")[0].onclick = function (e) {

viewer.prev();

$(".imgidx")[0].value = viewer.imageIndex.toString()

return true;

}

$(".next")[0].onclick = function (e) {

viewer.next();

$(".imgidx")[0].value = viewer.imageIndex.toString()

return true;

}

</script>

</body>

</html>

(2) Check whether ~/pointpillars/second.pytorch/second/kittiviewer/frontend/js contains a libs folder. If it doesn't, copy one from the SECOND author's GitHub repository (mine didn't have one... apparently the PointPillars authors left it out).

(3) In ~/pointpillars/second.pytorch/second/kittiviewer, run

python backend.py main --port=16666

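This starts the backend. The port parameter defaults to 16666 anyway (you can check in backend.py). Note down the IP address printed in the terminal (mine was http://192.168.3.251:16666/); I'll call it the backend IP.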
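Before filling in the backend field in step (5), you can confirm from Python that the backend is really listening. Below is a minimal sketch using the standard socket module. `backend_reachable` is a hypothetical helper, and 16666 is the default port mentioned above.

```python
import socket


def backend_reachable(host, port=16666, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False
```

For example, `backend_reachable("192.168.3.251")` should return True while backend.py is running in the other terminal.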

(4) Open a second terminal in ~/pointpillars/second.pytorch/second/kittiviewer/frontend and run

python -m http.server

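This serves the frontend. Copy the address printed in the terminal (mine was http://0.0.0.0:8000/) into your browser, and you should see the result shown in the figure below. If that address does not open, http://localhost:8000/ points to the same server.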
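`python -m http.server` just serves the current directory over HTTP, by default on port 8000 and on all network interfaces. If you would rather start the frontend from a script, for example together with other setup steps, the standard library can do the same. This is a sketch: `start_frontend` is a hypothetical helper, and `directory` should be the frontend folder.

```python
import functools
import threading
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


def start_frontend(directory, port=8000, host=""):
    """Serve `directory` like `python -m http.server` run inside it; return the server."""
    handler = functools.partial(SimpleHTTPRequestHandler, directory=directory)
    server = ThreadingHTTPServer((host, port), handler)
    # Serve from a background thread so the calling script can keep running.
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Call `server.shutdown()` and then `server.server_close()` to stop it. The `directory` argument requires Python 3.7 or newer, which matches the environment used here.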

(5) Fill in the parameters in the menu on the right

backend: http://192.168.3.251:16666/ (just enter the backend IP from step (3))

rootPath: /media/lixushi/二宝/KITTI_DATASET_ROOT (dataset path)

infoPath: /media/lixushi/二宝/KITTI_DATASET_ROOT/kitti_infos_val.pkl (location of kitti_infos_val.pkl; the file name kitti_infos_val.pkl itself must be included, so follow my path format and only change the /media/lixushi/二宝/ part)

detPath: /media/lixushi/二宝/KITTI_DATASET_ROOT/my_model/pointpillars_original/eval_results/step_296960 (path of the evaluation output, i.e. the step_xxx folder from 2.2.2 that holds the result .pkl)

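(6) Leave everything else as it is. First click load; a message pops up in the lower-right corner of the page.

Then click loadDet; another message pops up in the lower-right corner.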

(7) Type an image index and press Enter to see the visualization. (I haven't explored the other options in depth (#.#))

III. PointRCNN visualization

3.1 Set up the PointRCNN environment

3.1.1 Download the source code

Repository: https://github.com/sshaoshuai/PointRCNN (reference [8])

3.1.2 Configure the environment

My environment: PyTorch 1.5 + CUDA 10.2 + cuDNN 8.0.5 + Python 3.7

(Newer versions do cause many problems, but mostly because functions were renamed; just fix each error as it comes up.)

As before, I won't go through the setup itself; just follow the steps in the GitHub README. Below are the errors I hit.

(1) Error 1:

src/roipool3d.cpp:6:29: error: ‘AT_CHECK’ was not declared in this scope

#define CHECK_CONTIGUOUS(x) AT_CHECK(x.is_contiguous(), #x, " must be contiguous ")

Replace every ‘AT_CHECK’ with ‘TORCH_CHECK’ in all the source files being compiled.

(2) Error 2:

src/ball_query.cpp:22:27: error: ‘THCState_getCurrentStream’ was not declared in this scope

cudaStream_t stream = THCState_getCurrentStream(state);

Replace every THCState_getCurrentStream(state) in the file with c10::cuda::getCurrentCUDAStream().

(3) Error 3:

src/iou3d.cpp:7:23: error: ‘AT_CHECK’ was not declared in this scope

#define CHECK_CUDA(x) AT_CHECK(x.type().is_cuda(), #x, " must be a CUDAtensor ")

Same fix as error 1: replace every ‘AT_CHECK’ with ‘TORCH_CHECK’ in all the source files being compiled.

(4) Error 4:

/home/lixushi/.conda/envs/pointrcnn/lib/python3.7/site-packages/torch/include/ATen/core/TensorBody.h:341:7: note: declared here
T * data() const {
    ^~~~
/home/lixushi/PointRCNN/pointnet2_lib/pointnet2/src/group_points.cpp:18:27: error: ‘THCState_getCurrentStream’ was not declared in this scope
cudaStream_t stream = THCState_getCurrentStream(state);
/home/lixushi/PointRCNN/pointnet2_lib/pointnet2/src/sampling.cpp:17:27: error: ‘THCState_getCurrentStream’ was not declared in this scope
cudaStream_t stream = THCState_getCurrentStream(state);
/home/lixushi/PointRCNN/pointnet2_lib/pointnet2/src/interpolate.cpp:21:27: error: ‘THCState_getCurrentStream’ was not declared in this scope
cudaStream_t stream = THCState_getCurrentStream(state);
                      ^~~~~~~~~~~~~~~~~~~~~~~~~

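In each of the files listed above, replace THCState_getCurrentStream(state) with c10::cuda::getCurrentCUDAStream(). (The "note: declared here" / T * data() lines belong to deprecation warnings for Tensor::data<T>() and are not what breaks the build.)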

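It all comes down to the PyTorch version, so just fix the errors one at a time as they appear. As you can see, the four errors above boil down to only two renamed APIs; depending on your setup, you will most likely only need to swap function names.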
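Instead of editing each file by hand, the two replacements can be scripted. Below is a minimal sketch: `patch_sources`, the list of file suffixes and the `.bak` backup scheme are my own choices, not part of PointRCNN. Run it on the source folders before building, e.g. `patch_sources("/home/lixushi/PointRCNN/lib")` and `patch_sources("/home/lixushi/PointRCNN/pointnet2_lib")`. If the compiler then complains that `c10::cuda` is not declared, adding `#include <c10/cuda/CUDAStream.h>` to that file should provide it.

```python
import re
from pathlib import Path

# The two renames from errors 1-4 above (the old names are missing in PyTorch 1.5).
REPLACEMENTS = [
    (re.compile(r"\bAT_CHECK\b"), "TORCH_CHECK"),
    (re.compile(r"THCState_getCurrentStream\(\s*state\s*\)"),
     "c10::cuda::getCurrentCUDAStream()"),
]
SOURCE_SUFFIXES = {".cpp", ".cu", ".h", ".cuh"}


def patch_sources(root):
    """Apply REPLACEMENTS to every C++/CUDA source under `root`; return changed files."""
    changed = []
    for path in sorted(Path(root).rglob("*")):
        if path.suffix not in SOURCE_SUFFIXES or not path.is_file():
            continue
        text = path.read_text(encoding="utf-8")
        new_text = text
        for pattern, repl in REPLACEMENTS:
            new_text = pattern.sub(repl, new_text)
        if new_text != text:
            # Keep a backup (e.g. ball_query.cpp.bak) before overwriting the file.
            path.with_name(path.name + ".bak").write_text(text, encoding="utf-8")
            path.write_text(new_text, encoding="utf-8")
            changed.append(path)
    return changed
```

The function is idempotent: a second run finds nothing left to replace and returns an empty list.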

3.1.3 Run the inference code

(1) Download the pretrained model and put it in the PointRCNN/tools folder

(2) Symlink the dataset used for PointPillars into /home/lixushi/PointRCNN/data/KITTI/ under the name object (if you don't know how to create a symlink, it is explained in detail below)

(3) From the PointRCNN/tools folder, run the following command

python eval_rcnn.py --cfg_file cfgs/default.yaml --ckpt ../output/rpn/ckpt/checkpoint_epoch_200.pth --batch_size 4 --eval_mode rcnn

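After about ten minutes, an output folder appears under PointRCNN/. The files we need are in the data folder under PointRCNN/output/rcnn/default/eval/epoch_no_number/val/final_result/. (If you are using the model downloaded in step (1), point --ckpt at that file instead of the training checkpoint path shown above.)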

Then copy that whole data folder into the training directory of the dataset used for PointPillars (mine is /media/lixushi/二宝/KITTI_DATASET_ROOT/training) and rename it to pred.
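Each file in pred describes one frame, one object per line, in the KITTI label format (the same as label_2) plus a trailing confidence score. Knowing the columns helps when you later adapt the visualization code. Below is a minimal parser, assuming whitespace-separated fields in the order given by the KITTI object devkit readme; `KittiObject` and `parse_kitti_line` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class KittiObject:
    obj_type: str            # e.g. Car, Pedestrian, Cyclist
    truncated: float
    occluded: int
    alpha: float             # observation angle
    bbox: List[float]        # 2D box in image_2: left, top, right, bottom (pixels)
    dimensions: List[float]  # height, width, length (m)
    location: List[float]    # x, y, z in camera coordinates (m)
    rotation_y: float        # yaw around the camera Y axis (rad)
    score: Optional[float]   # only present in prediction files


def parse_kitti_line(line):
    f = line.split()
    if len(f) not in (15, 16):
        raise ValueError(f"expected 15 or 16 fields, got {len(f)}")
    v = [float(x) for x in f[1:]]
    return KittiObject(
        obj_type=f[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        bbox=v[3:7],
        dimensions=v[7:10],
        location=v[10:13],
        rotation_y=v[13],
        score=v[14] if len(v) == 15 else None,
    )
```

Lines from label_2 have 15 fields and yield `score=None`; lines from pred have 16.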

3.2 Visualization

3.2.1 Download the visualization code kitti_object_vis

git clone https://github.com/kuixu/kitti_object_vis.git

3.2.2 Set up the dataset symlink

kitti_object_vis expects the dataset in the following layout:

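kitti
└── object
    ├── testing
    │   ├── calib
    │   │   └── 000000.txt
    │   ├── image_2
    │   │   └── 000000.png
    │   ├── label_2
    │   │   └── 000000.txt
    │   ├── velodyne
    │   │   └── 000000.bin
    │   └── pred
    │       └── 000000.txt
    └── training
        ├── calib
        │   └── 000000.txt
        ├── image_2
        │   └── 000000.png
        ├── label_2
        │   └── 000000.txt
        ├── velodyne
        │   └── 000000.bin
        └── pred
            └── 000000.txt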

The original PointPillars dataset layout, as we know, is:

└── KITTI_DATASET_ROOT
    ├── training    <-- 7481 train data
    |   ├── image_2 <-- for visualization
    |   ├── calib
    |   ├── label_2
    |   ├── velodyne
    |   └── velodyne_reduced <-- empty directory
    └── testing     <-- 7518 test data
        ├── image_2 <-- for visualization
        ├── calib
        ├── velodyne
        └── velodyne_reduced <-- empty directory

The original PointRCNN layout is:

PointRCNN
├── data
│   ├── KITTI
│   │   ├── ImageSets
│   │   ├── object
│   │   │   ├──training
│   │   │      ├──calib & velodyne & label_2 & image_2 & (optional: planes)
│   │   │   ├──testing
│   │   │      ├──calib & velodyne & image_2
├── lib
├── pointnet2_lib
├── tools

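Comparing the three layouts, the same dataset is used by PointRCNN's object folder, PointPillars' KITTI_DATASET_ROOT and kitti_object_vis's object folder. To avoid storing several copies on disk, use symbolic links: keep a single copy of the dataset on your drive and link to it from each place.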

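Create a symlink as follows (first cd to the directory where the link should live, e.g. right-click there and open a terminal in that location, then run):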

ln -s /media/lixushi/二宝/KITTI_DATASET_ROOT object

Here /media/lixushi/二宝/KITTI_DATASET_ROOT is the source path and object is the name of the link (adjust both to your needs).
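If you set up the same links on several machines, the `ln -s` step can also be done from Python, with a check that makes it safe to re-run. This is a sketch: `link_dataset` is a hypothetical helper. It refuses to proceed when a real folder already exists at the link path, because in that case `ln -s` would quietly create the link inside that folder.

```python
import os
from pathlib import Path


def link_dataset(source, link_path):
    """Python equivalent of `ln -s source link_path`, safe to re-run."""
    source, link_path = Path(source), Path(link_path)
    if not source.is_dir():
        raise FileNotFoundError(f"dataset folder not found: {source}")
    if link_path.is_symlink():
        if Path(os.readlink(link_path)) == source:
            return link_path  # already points to the right place
        raise FileExistsError(f"{link_path} already links to {os.readlink(link_path)}")
    if link_path.exists():
        # A real folder here would make `ln -s` put the link *inside* it.
        raise FileExistsError(f"{link_path} exists and is not a symlink")
    link_path.symlink_to(source, target_is_directory=True)
    return link_path
```

For example: `link_dataset("/media/lixushi/二宝/KITTI_DATASET_ROOT", "/home/lixushi/PointRCNN/data/KITTI/object")`.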

- Now create the symlink for kitti_object_vis:

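cd /home/lixushi/PointRCNN/可视化/kitti_object_vis/data

# The repository may already contain an object folder holding a few sample files; delete it first, otherwise ln -s places the link inside that folder

ln -s /media/lixushi/二宝/KITTI_DATASET_ROOT object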
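Once the link is in place, a quick check that kitti_object_vis will find the sub-folders from the layout above can save a confusing traceback later. Below is a minimal sketch: `check_layout` is a hypothetical helper, the folder names come from the tree shown earlier, and pred is only required when you use -p. Paths are resolved through the symlink.

```python
from pathlib import Path

REQUIRED = ("calib", "image_2", "label_2", "velodyne")


def check_layout(object_dir, split="training", need_pred=False):
    """Return the sub-folders kitti_object_vis would look for but cannot find."""
    base = Path(object_dir) / split
    wanted = list(REQUIRED) + (["pred"] if need_pred else [])
    return [name for name in wanted if not (base / name).is_dir()]
```

For example, `check_layout("/home/lixushi/PointRCNN/可视化/kitti_object_vis/data/object", need_pred=True)` should return an empty list once the PointRCNN results have been copied into training/pred.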

3.2.3 Install the dependencies

pip install opencv-python pillow scipy matplotlib

conda install mayavi -c conda-forge

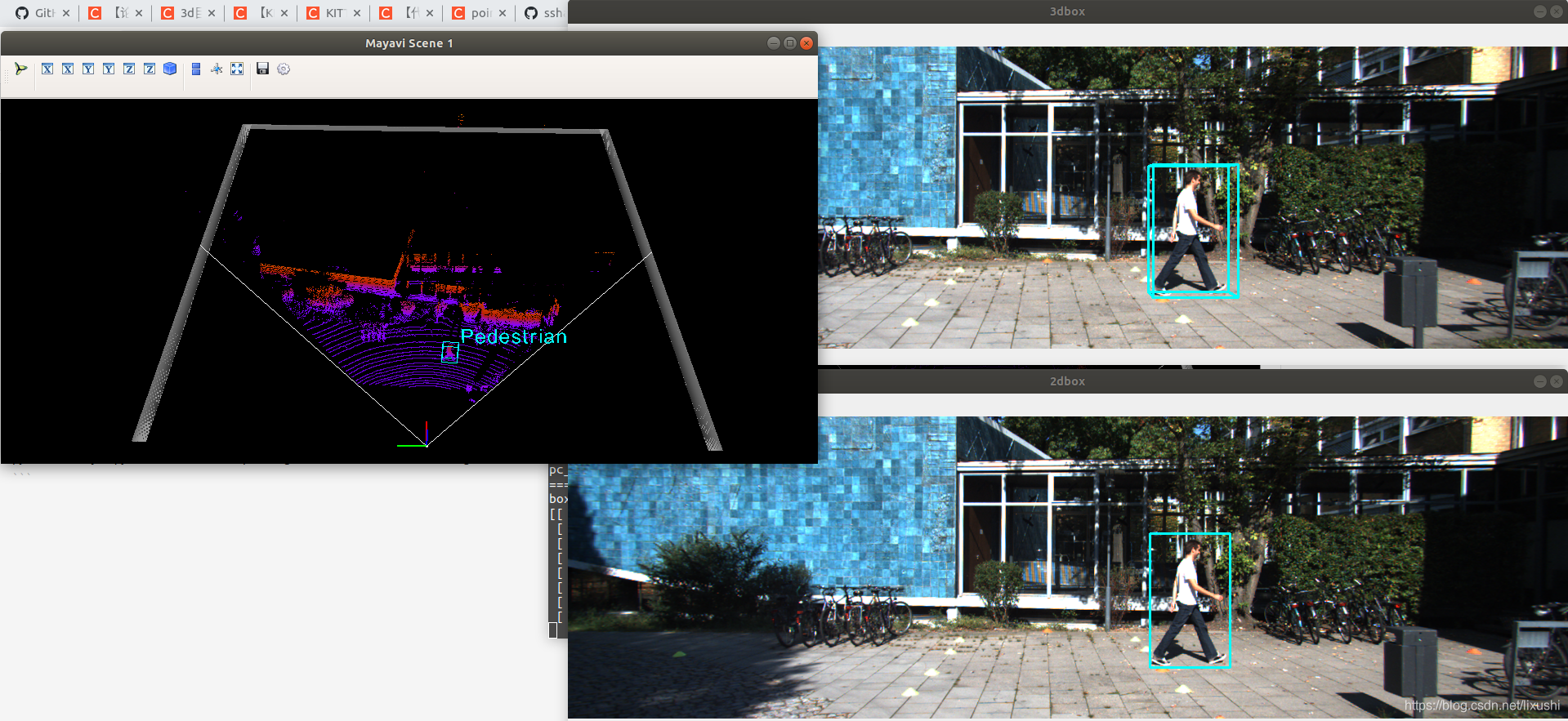
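Two of these packages are imported under a different name than the one you install (opencv-python becomes cv2, pillow becomes PIL). The sketch below checks that every module can be found in the currently activated environment. `missing_packages` is a hypothetical helper. Note that finding mayavi does not prove its GUI backend works; that only shows up when a window opens.

```python
import importlib.util

# pip/conda name -> module name used in `import`
MODULES = {"opencv-python": "cv2", "pillow": "PIL", "scipy": "scipy",
           "matplotlib": "matplotlib", "mayavi": "mayavi"}


def missing_packages(modules=MODULES):
    """Return the install names whose module cannot be found in this environment."""
    return [pkg for pkg, mod in modules.items()
            if importlib.util.find_spec(mod) is None]
```

Run `print(missing_packages())` after `conda activate pointrcnn`; an empty list means everything is installed.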

3.2.4 Visualize

I followed a blog post for this part; the same commands can also be found in the author's GitHub README.

(1) Show LiDAR ground truth only

# Remember to activate the virtual environment

conda activate pointrcnn

cd /home/lixushi/PointRCNN/可视化/kitti_object_vis/

python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis

Press Enter in the terminal to move on to the next scene.

(2) Show LiDAR and image ground truth

python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis --show_image_with_boxes

(3) Show LiDAR and image ground truth for one specific frame

python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis --show_image_with_boxes --ind 1

# --ind 1 selects sample 000001 (e.g. label_2/000001.txt)
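KITTI object files are named after a zero-padded six-digit index, so `--ind` is simply the integer form of the file name. The sketch below maps an index to the file it selects in each folder of the layout from 3.2.2; `sample_files` and the folder/extension table are my own illustration based on that tree.

```python
from pathlib import Path

# Folder -> file extension, following the kitti_object_vis layout in 3.2.2.
FOLDERS = {"calib": ".txt", "image_2": ".png", "label_2": ".txt",
           "velodyne": ".bin", "pred": ".txt"}


def sample_files(object_dir, index, split="training"):
    """Map each folder to the file that `--ind <index>` refers to."""
    base = Path(object_dir) / split
    return {name: base / name / f"{index:06d}{ext}" for name, ext in FOLDERS.items()}
```

For example, `sample_files("data/object", 1)["label_2"]` is `data/object/training/label_2/000001.txt`, which is handy for checking that a frame has a pred file before passing -p.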

(4) Compare predictions with ground truth

Append -p to any of the commands above to show predictions as well: ground truth is drawn in green and predictions in red.

Example:

python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis --show_image_with_boxes --ind 1 -p

IV. PointPillars visualization (the kitti_object_vis way)

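When running PointPillars evaluation, just add one extra argument to get result files that kitti_object_vis can work with; everything else is the same as the PointRCNN visualization above.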

python ./pytorch/train.py evaluate --config_path=./configs/pointpillars/car/xyres_16.proto --model_dir=/path/to/model_dir --pickle_result=False

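--pickle_result=False means the results are not bundled into a .pkl file but written as standard KITTI-format .txt files (in model_dir/eval_results/step_xxx/, see 2.2.2). Combined with the PointRCNN visualization method, this lets me move on to the next step: studying the visualization code and adapting it to the structure I need.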
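To feed these files to kitti_object_vis, they go into training/pred just like the PointRCNN results in 3.1.3. Below is a minimal copy sketch. It assumes the .txt files sit directly in the step_xxx folder, and `copy_predictions` is a hypothetical helper. If pred already holds the PointRCNN results, rename that folder first so the two models' outputs don't get mixed.

```python
import shutil
from pathlib import Path


def copy_predictions(eval_dir, training_dir):
    """Copy KITTI-format *.txt results into <training_dir>/pred; return the count."""
    src = Path(eval_dir)
    dst = Path(training_dir) / "pred"
    dst.mkdir(parents=True, exist_ok=True)
    count = 0
    for txt in sorted(src.glob("*.txt")):
        shutil.copy2(txt, dst / txt.name)  # keeps the 000001.txt-style names
        count += 1
    return count
```

For example: `copy_predictions("/path/to/model_dir/eval_results/step_296960", "/media/lixushi/二宝/KITTI_DATASET_ROOT/training")`.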

V. References

[1] https://blog.csdn.net/weixin_40805392/article/details/100049202

[2] https://blog.csdn.net/weixin_42341149/article/details/111589407

[3] https://blog.csdn.net/Doraemon_Zzn/article/details/107808166

[4] https://blog.csdn.net/qq_38316300/article/details/110758104

[5] https://blog.csdn.net/wqwqqwqw1231/article/details/90788500

[6] https://blog.csdn.net/qimingxia/article/details/107653236

[7] https://blog.csdn.net/tiatiatiatia/article/details/97765165

[8] https://github.com/sshaoshuai/PointRCNN

[9] https://github.com/nutonomy/second.pytorch

[10] https://github.com/kuixu/kitti_object_vis#install-locally-on-a-ubuntu-1604-pc-with-gui

[11] https://github.com/traveller59/second.pytorch/tree/master/second

[12] https://github.com/facebookresearch/SparseConvNet

Note: if these links don't open when clicked, copy and paste them into your browser.
