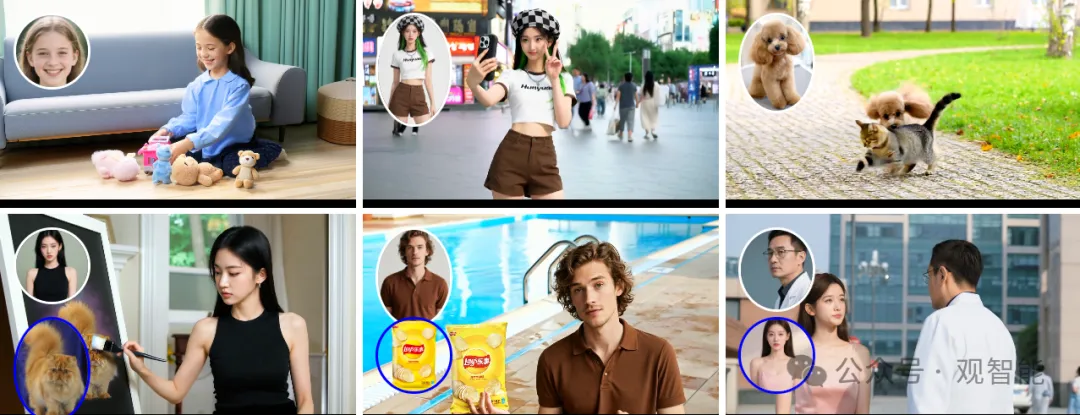

根据官方资料,混元Custom模型在单人、非人物体、多主体交互等多种场景中,都能保持身份特征在视频全程的一致性与连贯性,避免“主体漂移”、“人物变脸” 等问题。

该模型融合了文本、图像、音频、视频等多种模态输入,为视频生成提供丰富控制条件,创作者可依据需求灵活组合,实现多样化创意表达,呼应模型名称中的Custom一词。

🔗官网https://hunyuancustom.github.io/:

目前已开源单主体视频生成能力,即上传一张主体图片(比如一个人的照片),然后给出视频描述的提示词,模型就能识别图片中的身份信息,在不同动作、服饰与场景中生成连贯自然的视频内容。

目前已开源单主体视频生成能力,即上传一张主体图片(比如一个人的照片),然后给出视频描述的提示词,模型就能识别图片中的身份信息,在不同动作、服饰与场景中生成连贯自然的视频内容。

4578

4578

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?