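Ultralytics YOLO11 is a state-of-the-art (SOTA) model that builds on the success of earlier YOLO releases and introduces new features and improvements to further raise performance and flexibility. YOLO11 is designed to be fast, accurate, and easy to use, and it covers object detection and tracking, instance segmentation, image classification, and pose estimation. This post explains how to download and install YOLO11 and how to train it on a custom dataset (infrared photovoltaic panel defect detection).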

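Environment setup

Create a virtual environment with Anaconda:

conda create -n yolo python=3.10 -y

conda activate yolo

Change into your workspace and clone the official code from https://github.com/ultralytics/ultralytics:

git clone https://github.com/ultralytics/ultralytics.git

cd ultralytics

Alternatively, open the project directly in PyCharm.

The downloaded project does not contain a requirements.txt file, so install the dependencies with the following command:

pip install ultralytics

The default installation does not include the tensorboard library. To make the training logs easy to inspect, install it as well:

pip install tensorboard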
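Before moving on, it can help to confirm that both packages are importable from the activated environment. The following is a minimal sketch that uses only the Python standard library; the helper name missing_packages is made up for this post and is not part of Ultralytics.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level package names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names of the two packages installed in the steps above.
    missing = missing_packages(["ultralytics", "tensorboard"])
    if missing:
        print(f"Not installed in this environment: {', '.join(missing)}")
    else:
        print("ultralytics and tensorboard are both importable.")
```

If a package is reported as missing, the usual cause is that pip ran outside the yolo environment; activating the environment and reinstalling normally resolves it.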

准备数据集

Ultralytics provides several complete, ready-made datasets; running the corresponding script downloads them directly.

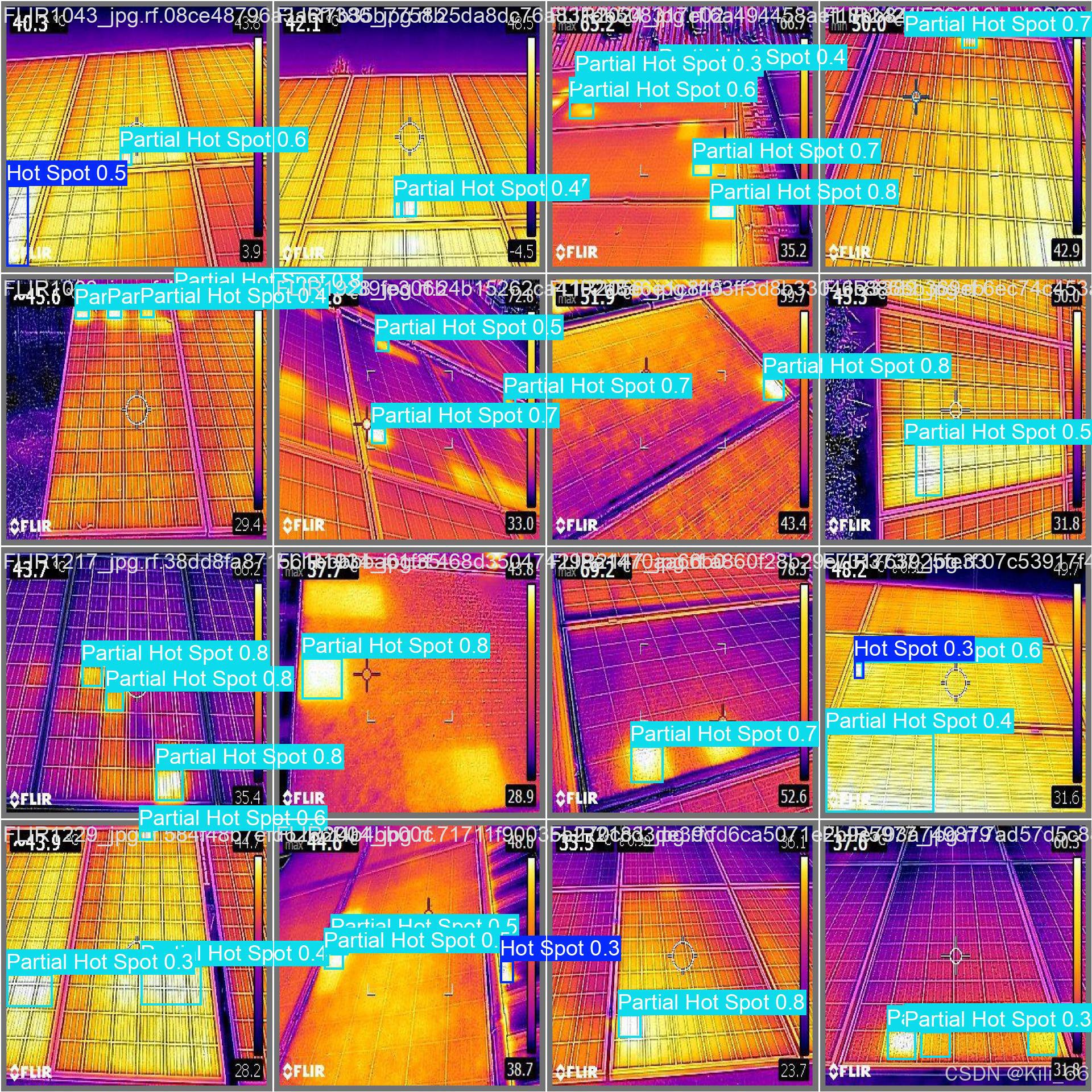

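The dataset used in this post contains 10,098+ infrared images of photovoltaic panels, labeled with two defect classes: hot spots and local hot patches.

The data is split into 9,458 training images, 97 validation images, and 543 test images. The directory structure is as follows: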

pv_defect_ir_dataset/
├── train/
│   ├── images/
│   │   ├── image_0001.jpg
│   │   ├── image_0002.jpg
│   │   └── ...
│   └── labels/
│       ├── image_0001.xml
│       ├── image_0002.xml
│       └── ...
├── valid/
│   ├── images/
│   │   ├── image_0001.jpg
│   │   ├── image_0002.jpg
│   │   └── ...
│   └── labels/
│       ├── image_0001.xml
│       ├── image_0002.xml
│       └── ...
├── test/
│   ├── images/
│   │   ├── image_0001.jpg
│   │   ├── image_0002.jpg
│   │   └── ...
│   └── labels/
│       ├── image_0001.xml
│       ├── image_0002.xml
│       └── ...
└── README.txt  # dataset description file

Modify the configuration files
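One preparatory note: the directory tree lists label files with an .xml extension, whereas the Ultralytics detection loader reads one .txt file per image, with one line per object in the form "class x_center y_center width height" (all coordinates normalized to 0..1). If the XML files follow the Pascal VOC layout, which is an assumption here because the post does not state the annotation format, a conversion along these lines may be needed. The class names in CLASS_IDS are placeholders; use the names that actually appear in the XML files.

```python
import xml.etree.ElementTree as ET

# Hypothetical class names; replace with the names used in the XML annotations.
CLASS_IDS = {"hot_spot": 0, "local_hot_patch": 1}


def voc_xml_to_yolo(xml_text, class_ids=CLASS_IDS):
    """Convert one Pascal VOC annotation (as text) into YOLO label lines."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.findtext("width"))
    img_h = float(size.findtext("height"))

    lines = []
    for obj in root.iter("object"):
        cls = class_ids[obj.findtext("name")]
        box = obj.find("bndbox")
        xmin = float(box.findtext("xmin"))
        ymin = float(box.findtext("ymin"))
        xmax = float(box.findtext("xmax"))
        ymax = float(box.findtext("ymax"))
        # YOLO stores the box center and size, normalized by the image size.
        x_center = (xmin + xmax) / 2 / img_w
        y_center = (ymin + ymax) / 2 / img_h
        width = (xmax - xmin) / img_w
        height = (ymax - ymin) / img_h
        lines.append(f"{cls} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}")
    return lines
```

Each returned list would be written to a .txt file with the same stem as the image (for example labels/image_0001.txt), so that the loader can pair it with images/image_0001.jpg.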

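Dataset configuration

Copy coco.yaml to a new configuration file named data.yaml, then set the train and val entries to the images directories of the training and validation sets. The label paths do not need to be specified: when the dataset is loaded, each label path is derived from the image path by replacing 'images' with 'labels'. Because the dataset has only two classes, update the names entry accordingly.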
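As a rough illustration of what the edited file ends up containing, the sketch below builds the text of a data.yaml for the directory layout shown earlier and mimics the images-to-labels path substitution. The class names and the helper names (build_data_yaml, label_path_for) are assumptions made for this example, and label_path_for only imitates the loader's behavior for POSIX-style paths; it is not the library's own code.

```python
def build_data_yaml(root, names):
    """Return the text of a dataset YAML for the train/valid/test layout above."""
    lines = [
        f"path: {root}",          # dataset root directory
        "train: train/images",    # training images, relative to path
        "val: valid/images",      # validation images (the folder is named valid/)
        "test: test/images",      # optional test images
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(names)]
    return "\n".join(lines) + "\n"


def label_path_for(image_path):
    """Swap the last /images/ segment for /labels/ and use a .txt extension."""
    head, sep, tail = image_path.rpartition("/images/")
    stem = tail.rsplit(".", 1)[0]
    if not sep:  # no images directory in the path; only the extension changes
        return stem + ".txt"
    return f"{head}/labels/{stem}.txt"


if __name__ == "__main__":
    # Hypothetical class names for the two defect types.
    print(build_data_yaml("pv_defect_ir_dataset", ["hot_spot", "local_hot_patch"]))
    print(label_path_for("pv_defect_ir_dataset/train/images/image_0001.jpg"))
```

The order of the entries under names defines the class indices, so it has to match the indices used in the label files.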

Number of classes

Set the class count (the nc field) in yolo11.yaml to the number of classes in the dataset, which is 2 here.
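The edit itself is a one-line change. For readers who prefer to script it, here is a small sketch that rewrites the top-level nc entry in the text of a model YAML while leaving any trailing comment in place. The function name set_num_classes is invented for this example. As far as I can tell, recent Ultralytics releases also take the class count from the dataset YAML when training starts, so treat this step as a safeguard rather than a strict requirement.

```python
import re


def set_num_classes(yaml_text, nc):
    """Return yaml_text with its top-level 'nc:' value replaced by nc."""
    new_text, count = re.subn(r"(?m)^nc:\s*\d+", f"nc: {nc}", yaml_text, count=1)
    if count == 0:
        raise ValueError("no top-level 'nc:' entry found")
    return new_text


if __name__ == "__main__":
    sample = "nc: 80 # number of classes\n"
    print(set_num_classes(sample, 2), end="")
```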

Training

Write a train.py script, set the parameters, and run python train.py to start training.

from ultralytics import YOLO

# Load a model
# model = YOLO("yolo11x.yaml")
model = YOLO("yolo11x.pt")

# Train the model
train_results = model.train(
    data="data.yaml",  # path to dataset YAML
    epochs=100,  # number of training epochs
    imgsz=640,  # training image size
    # batch=1,
)

The training arguments are listed below. For more detail, see the official documentation: Train - Ultralytics YOLO Docs

| Argument | Default | Description |
|---|---|---|
| model | None | Specifies the model file for training. Accepts a path to either a .pt pretrained model or a .yaml configuration file. Essential for defining the model structure or initializing weights. |
| data | None | Path to the dataset configuration file (e.g., coco8.yaml). This file contains dataset-specific parameters, including paths to training and validation data, class names, and number of classes. |
| epochs | 100 | Total number of training epochs. Each epoch represents a full pass over the entire dataset. Adjusting this value can affect training duration and model performance. |
| time | None | Maximum training time in hours. If set, this overrides the epochs argument, allowing training to automatically stop after the specified duration. Useful for time-constrained training scenarios. |
| patience | 100 | Number of epochs to wait without improvement in validation metrics before early stopping the training. Helps prevent overfitting by stopping training when performance plateaus. |
| batch | 16 | Batch size, with three modes: set as an integer (e.g., batch=16), auto mode for 60% GPU memory utilization (batch=-1), or auto mode with specified utilization fraction (batch=0.70). |
| imgsz | 640 | Target image size for training. All images are resized to this dimension before being fed into the model. Affects model accuracy and computational complexity. |
| save | True | Enables saving of training checkpoints and final model weights. Useful for resuming training or model deployment. |
| save_period | -1 | Frequency of saving model checkpoints, specified in epochs. A value of -1 disables this feature. Useful for saving interim models during long training sessions. |
| cache | False | Enables caching of dataset images in memory (True/ram), on disk (disk), or disables it (False). Improves training speed by reducing disk I/O at the cost of increased memory usage. |
| device | None | Specifies the computational device(s) for training: a single GPU (device=0), multiple GPUs (device=0,1), CPU (device=cpu), or MPS for Apple silicon (device=mps). |
| workers | 8 | Number of worker threads for data loading (per RANK if Multi-GPU training). Influences the speed of data preprocessing and feeding into the model, especially useful in multi-GPU setups. |
| project | None | Name of the project directory where training outputs are saved. Allows for organized storage of different experiments. |
| name | None | Name of the training run. Used for creating a subdirectory within the project folder, where training logs and outputs are stored. |
| exist_ok | False | If True, allows overwriting of an existing project/name directory. Useful for iterative experimentation without needing to manually clear previous outputs. |
| pretrained | True | Determines whether to start training from a pretrained model. Can be a boolean value or a string path to a specific model from which to load weights. Enhances training efficiency and model performance. |
| optimizer | 'auto' | Choice of optimizer for training. Options include SGD, Adam, AdamW, NAdam, RAdam, RMSProp etc., or auto for automatic selection based on model configuration. Affects convergence speed and stability. |
| seed | 0 | Sets the random seed for training, ensuring reproducibility of results across runs with the same configurations. |
| deterministic | True | Forces deterministic algorithm use, ensuring reproducibility but may affect performance and speed due to the restriction on non-deterministic algorithms. |
| single_cls | False | Treats all classes in multi-class datasets as a single class during training. Useful for binary classification tasks or when focusing on object presence rather than classification. |
| rect | False | Enables rectangular training, optimizing batch composition for minimal padding. Can improve efficiency and speed but may affect model accuracy. |
| cos_lr | False | Utilizes a cosine learning rate scheduler, adjusting the learning rate following a cosine curve over epochs. Helps in managing learning rate for better convergence. |
| close_mosaic | 10 | Disables mosaic data augmentation in the last N epochs to stabilize training before completion. Setting to 0 disables this feature. |
| resume | False | Resumes training from the last saved checkpoint. Automatically loads model weights, optimizer state, and epoch count, continuing training seamlessly. |
| amp | True | Enables Automatic Mixed Precision (AMP) training, reducing memory usage and possibly speeding up training with minimal impact on accuracy. |
| fraction | 1.0 | Specifies the fraction of the dataset to use for training. Allows for training on a subset of the full dataset, useful for experiments or when resources are limited. |
| profile | False | Enables profiling of ONNX and TensorRT speeds during training, useful for optimizing model deployment. |
| freeze | None | Freezes the first N layers of the model or specified layers by index, reducing the number of trainable parameters. Useful for fine-tuning or transfer learning. |
| lr0 | 0.01 | Initial learning rate (i.e. SGD=1E-2, Adam=1E-3). Adjusting this value is crucial for the optimization process, influencing how rapidly model weights are updated. |
| lrf | 0.01 | Final learning rate as a fraction of the initial rate = (lr0 * lrf), used in conjunction with schedulers to adjust the learning rate over time. |
| momentum | 0.937 | Momentum factor for SGD or beta1 for Adam optimizers, influencing the incorporation of past gradients in the current update. |
| weight_decay | 0.0005 | L2 regularization term, penalizing large weights to prevent overfitting. |
| warmup_epochs | 3.0 | Number of epochs for learning rate warmup, gradually increasing the learning rate from a low value to the initial learning rate to stabilize training early on. |
| warmup_momentum | 0.8 | Initial momentum for warmup phase, gradually adjusting to the set momentum over the warmup period. |
| warmup_bias_lr | 0.1 | Learning rate for bias parameters during the warmup phase, helping stabilize model training in the initial epochs. |
| box | 7.5 | Weight of the box loss component in the loss function, influencing how much emphasis is placed on accurately predicting bounding box coordinates. |
| cls | 0.5 | Weight of the classification loss in the total loss function, affecting the importance of correct class prediction relative to other components. |
| dfl | 1.5 | Weight of the distribution focal loss, used in certain YOLO versions for fine-grained classification. |
| pose | 12.0 | Weight of the pose loss in models trained for pose estimation, influencing the emphasis on accurately predicting pose keypoints. |
| kobj | 2.0 | Weight of the keypoint objectness loss in pose estimation models, balancing detection confidence with pose accuracy. |
| label_smoothing | 0.0 | Applies label smoothing, softening hard labels to a mix of the target label and a uniform distribution over labels, can improve generalization. |
| nbs | 64 | Nominal batch size for normalization of loss. |
| overlap_mask | True | Determines whether object masks should be merged into a single mask for training, or kept separate for each object. In case of overlap, the smaller mask is overlaid on top of the larger mask during merge. |
| mask_ratio | 4 | Downsample ratio for segmentation masks, affecting the resolution of masks used during training. |
| dropout | 0.0 | Dropout rate for regularization in classification tasks, preventing overfitting by randomly omitting units during training. |
| val | True | Enables validation during training, allowing for periodic evaluation of model performance on a separate dataset. |
| plots | False | Generates and saves plots of training and validation metrics, as well as prediction examples, providing visual insights into model performance and learning progression. |
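To make the lr0, lrf, and cos_lr rows more concrete, here is a small worked sketch of how the learning rate could evolve over a run. It assumes the scheduler multiplies lr0 by a factor that moves from 1 at the start to lrf at the end, linearly by default or along a half cosine when cos_lr=True, and it ignores the warmup phase entirely. This reflects my reading of the table above and is not copied from the library.

```python
import math


def lr_at_epoch(epoch, epochs=100, lr0=0.01, lrf=0.01, cos_lr=False):
    """Approximate learning rate at a given epoch, without warmup."""
    if cos_lr:
        # Half-cosine decay of the multiplier from 1 down to lrf.
        factor = (1 - math.cos(epoch * math.pi / epochs)) / 2 * (lrf - 1) + 1
    else:
        # Linear decay of the multiplier from 1 down to lrf.
        factor = max(1 - epoch / epochs, 0) * (1 - lrf) + lrf
    return lr0 * factor


if __name__ == "__main__":
    for epoch in (0, 25, 50, 75, 100):
        linear = lr_at_epoch(epoch)
        cosine = lr_at_epoch(epoch, cos_lr=True)
        print(f"epoch {epoch:3d}: linear={linear:.6f} cosine={cosine:.6f}")
```

With the defaults in the table, both variants start at lr0 = 0.01 and finish at lr0 * lrf = 0.0001; they differ only in how quickly they get there.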

During training, you can use tensorboard to follow the logs in real time and see how the run is progressing.

tensorboard --logdir runs/
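If a quick numeric summary is preferred over the dashboard, the per-epoch metrics can also be read from a CSV file. The sketch below assumes that the run directory contains a results.csv with an epoch column and a column named metrics/mAP50-95(B); both the file location and the column name are assumptions about the installed Ultralytics version, so check the header of your own file first. The helper name best_epoch is made up for this post.

```python
import csv
import io


def best_epoch(csv_text, metric="metrics/mAP50-95(B)"):
    """Return (epoch, value) for the row with the highest value of metric."""
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    # Some versions pad headers and cells with spaces, so strip both.
    rows = [{key.strip(): value.strip() for key, value in row.items()} for row in reader]
    best = max(rows, key=lambda row: float(row[metric]))
    return int(float(best["epoch"])), float(best[metric])


if __name__ == "__main__":
    # Assumed location of the log for the first detection run.
    with open("runs/detect/train/results.csv", encoding="utf-8") as handle:
        print(best_epoch(handle.read()))
```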

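Validation and inference

Validation and inference can be written as two separate scripts, valid.py and predict.py. For convenience, they are combined into a single script here.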

from ultralytics import YOLO

# Load a model
# model = YOLO("yolo11x.pt")
model = YOLO("runs/detect/train/weights/best.pt")

# Evaluate model performance on the validation set
metrics = model.val(
    data="data.yaml",
)

# Perform object detection on an image
results = model("ultralytics/assets/pvtest.jpg")
results[0].show()
results[0].save(filename="result.jpg")
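The script above hardcodes runs/detect/train, which is the directory of the first training run; later runs are saved under incremented names such as train2 and train3. A small helper along these lines could pick the most recently written best.pt instead. The function name latest_best_weights is hypothetical, and the runs/detect default assumes the detection task and default project settings.

```python
from pathlib import Path


def latest_best_weights(runs_dir="runs/detect"):
    """Return the most recently modified */weights/best.pt under runs_dir, or None."""
    candidates = list(Path(runs_dir).glob("*/weights/best.pt"))
    if not candidates:
        return None
    return max(candidates, key=lambda path: path.stat().st_mtime)


if __name__ == "__main__":
    weights = latest_best_weights()
    print(weights if weights else "No trained weights found under runs/detect")
```

The returned path can be passed to YOLO(...) in place of the fixed string, for example YOLO(str(latest_best_weights())).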

Inference results
