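VGG16/19 are classic networks from the 2014 ImageNet challenge (ILSVRC 2014), where the VGG team placed first in localization and second in classification. Studying the ideas behind them is a good way into deep learning. In addition, when our own training set is too small, a common approach is to use a network that someone else has already pretrained as a feature extractor, then append a task-specific network and fine-tune it.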

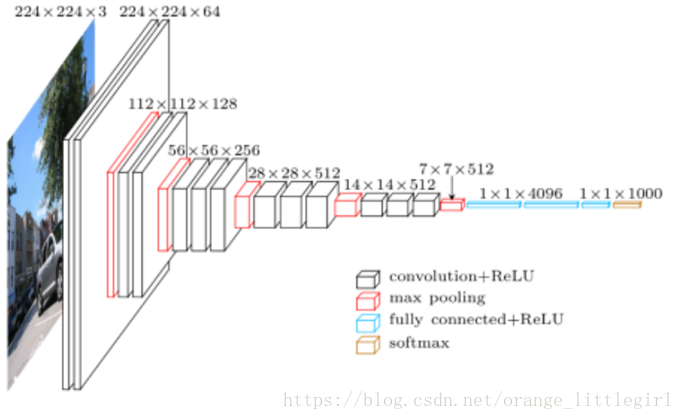

The network structure is as follows:

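VGG16 itself is already explained in detail elsewhere online, so I won't repeat that here. This post mainly walks through the VGG16 source code and shows how to use an open-source GitHub project as the starting point for your own project.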

You can download the source code from the following link: VGG16/19 source code

The network definition, vgg16.py, is shown below:

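import inspect  # used to find the path of the module that defines a class
import os
import time

import numpy as np
import tensorflow as tf

# Per-channel mean of the training images, in BGR order
VGG_MEAN = [103.939, 116.779, 123.68]

"""
NumPy can save arrays to disk in a binary format.

np.load and np.save are the two main functions for reading and writing arrays on disk.
By default, arrays are stored in an uncompressed raw binary format in files with the .npy extension.

np.save("A.npy", A)  # if the path does not end in .npy, the extension is appended automatically
B = np.load("A.npy")

When a dict is saved this way, it is wrapped in a 0-d object array; ndarray.item() unwraps
that array and returns the dict itself. This is different from dict.items(), which returns
each key/value pair of a dict as a tuple:

person = {'name': 'lizhong', 'age': '26', 'city': 'BeiJing', 'blog': 'www.jb51.net'}
for x in person.items():
    print(x)

This prints ('name', 'lizhong') and so on, one tuple per line.
"""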

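class Vgg16:
    def __init__(self, vgg16_npy_path=None):  # constructor
        if vgg16_npy_path is None:
            path = inspect.getfile(Vgg16)  # path of the file that defines the Vgg16 class
            # os.pardir is "..", so this resolves to the absolute path of the directory containing that file
            path = os.path.abspath(os.path.join(path, os.pardir))
            path = os.path.join(path, "vgg16.npy")  # join the directory and the file name "vgg16.npy"
            vgg16_npy_path = path
            print(path)  # print the path

        # The file stores a pickled dict, so recent NumPy versions need allow_pickle=True
        self.data_dict = np.load(vgg16_npy_path, allow_pickle=True, encoding='latin1').item()
        # print(type(self.data_dict))  # inspect the data type
        print("npy file loaded")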

    def build(self, rgb):
        """
        Load variables from the npy file to build the VGG network.

        :param rgb: RGB image batch [batch, height, width, 3], values scaled to [0, 1]
        """
        start_time = time.time()
        print("build model started")
        rgb_scaled = rgb * 255.0

        # Convert RGB to BGR and subtract the per-channel mean
        red, green, blue = tf.split(axis=3, num_or_size_splits=3, value=rgb_scaled)
        assert red.get_shape().as_list()[1:] == [224, 224, 1]
        assert green.get_shape().as_list()[1:] == [224, 224, 1]
        assert blue.get_shape().as_list()[1:] == [224, 224, 1]
        bgr = tf.concat(axis=3, values=[
            blue - VGG_MEAN[0],
            green - VGG_MEAN[1],
            red - VGG_MEAN[2],
        ])
        assert bgr.get_shape().as_list()[1:] == [224, 224, 3]

        # self.conv1_1 is a new instance attribute; self.conv_layer(...) calls a method of this class
        self.conv1_1 = self.conv_layer(bgr, "conv1_1")
        self.conv1_2 = self.conv_layer(self.conv1_1, "conv1_2")
        self.pool1 = self.max_pool(self.conv1_2, 'pool1')

        self.conv2_1 = self.conv_layer(self.pool1, "conv2_1")
        self.conv2_2 = self.conv_layer(self.conv2_1, "conv2_2")
        self.pool2 = self.max_pool(self.conv2_2, 'pool2')

        self.conv3_1 = self.conv_layer(self.pool2, "conv3_1")
        self.conv3_2 = self.conv_layer(self.conv3_1, "conv3_2")
        self.conv3_3 = self.conv_layer(self.conv3_2, "conv3_3")
        self.pool3 = self.max_pool(self.conv3_3, 'pool3')

        self.conv4_1 = self.conv_layer(self.pool3, "conv4_1")
        self.conv4_2 = self.conv_layer(self.conv4_1, "conv4_2")
        self.conv4_3 = self.conv_layer(self.conv4_2, "conv4_3")
        self.pool4 = self.max_pool(self.conv4_3, 'pool4')

        self.conv5_1 = self.conv_layer(self.pool4, "conv5_1")
        self.conv5_2 = self.conv_layer(self.conv5_1, "conv5_2")
        self.conv5_3 = self.conv_layer(self.conv5_2, "conv5_3")
        self.pool5 = self.max_pool(self.conv5_3, 'pool5')

        self.fc6 = self.fc_layer(self.pool5, "fc6")
        assert self.fc6.get_shape().as_list()[1:] == [4096]
        self.relu6 = tf.nn.relu(self.fc6)

        self.fc7 = self.fc_layer(self.relu6, "fc7")
        self.relu7 = tf.nn.relu(self.fc7)

        self.fc8 = self.fc_layer(self.relu7, "fc8")

        # Class probabilities; prob is an instance attribute, so callers read it as vgg.prob
        self.prob = tf.nn.softmax(self.fc8, name="prob")

        self.data_dict = None  # the weights now live in the graph, so release the dict
        print("build model finished: %ds" % (time.time() - start_time))

    def avg_pool(self, bottom, name):  # bottom: input tensor, name: name of the op
        return tf.nn.avg_pool(bottom, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

    def max_pool(self, bottom, name):
        # padding='SAME' only pads the borders so edge pixels are kept;
        # the stride of 2 still halves the height and width
        return tf.nn.max_pool(bottom, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

    def conv_layer(self, bottom, name):  # bottom: input tensor, name: layer name such as "conv1_1"
        with tf.variable_scope(name):
            # Weights W (a 4-D tensor) from vgg16.npy, i.e. data_dict["conv1_1"][0]
            filt = self.get_conv_filter(name)
            conv = tf.nn.conv2d(bottom, filt, [1, 1, 1, 1], padding='SAME')

            # Biases b from vgg16.npy, i.e. data_dict["conv1_1"][1]
            conv_biases = self.get_bias(name)
            bias = tf.nn.bias_add(conv, conv_biases)

            relu = tf.nn.relu(bias)
            return relu

    def fc_layer(self, bottom, name):
        with tf.variable_scope(name):
            # Flatten everything except the batch dimension
            shape = bottom.get_shape().as_list()
            dim = 1
            for d in shape[1:]:
                dim *= d
            x = tf.reshape(bottom, [-1, dim])

            weights = self.get_fc_weight(name)
            biases = self.get_bias(name)

            # Fully connected layer. bias_add broadcasts the biases over the batch dimension.
            fc = tf.nn.bias_add(tf.matmul(x, weights), biases)
            return fc

    def get_conv_filter(self, name):  # weights W (4-D tensor) stored under the layer name at index 0
        return tf.constant(self.data_dict[name][0], name="filter")

    def get_bias(self, name):  # biases b stored under the layer name at index 1
        return tf.constant(self.data_dict[name][1], name="biases")

    def get_fc_weight(self, name):  # fully connected weights stored under the layer name at index 0
        return tf.constant(self.data_dict[name][0], name="weights")

Characteristics of this network:

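1) VGG16 is built from 13 convolutional layers and 3 fully connected layers.

2) Every convolutional layer uses the same configuration: CONV = 3*3 filter, s=1, padding="same", which keeps the height and width of the feature map unchanged.

After each block of convolutions, MAX_POOL downsamples the feature map, reducing its size and speeding up computation.

MAX_POOL = 2*2, s=2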
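To make these two points concrete, here is a small sketch in plain Python that traces the feature-map shape through the five blocks and counts the layers and parameters. The names `VGG16_BLOCKS`, `FC_UNITS`, and `trace_vgg16` are made up for this illustration and are not part of vgg16.py; the block layout is read off the `build()` method above.

```python
# Layer plan for VGG16: (number of 3x3 conv layers, output channels) per block.
# Each block ends with one 2x2, stride-2 max pool.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]
FC_UNITS = [4096, 4096, 1000]


def trace_vgg16(size=224, in_channels=3):
    """Return (conv layer count, shape after each block, parameter count)."""
    n_conv, params, shapes = 0, 0, []
    channels = in_channels
    for n_layers, out_channels in VGG16_BLOCKS:
        for _ in range(n_layers):
            # 3x3 conv, stride 1, "same" padding: height and width are unchanged.
            params += 3 * 3 * channels * out_channels + out_channels
            channels = out_channels
            n_conv += 1
        # 2x2 max pool, stride 2, SAME padding: output size is ceil(size / 2).
        size = (size + 1) // 2
        shapes.append((size, size, channels))
    fan_in = size * size * channels  # flattened pool5 output
    for units in FC_UNITS:
        params += fan_in * units + units
        fan_in = units
    return n_conv, shapes, params


n_conv, shapes, params = trace_vgg16()
print(n_conv, len(FC_UNITS))  # 13 3
for shape in shapes:
    print(shape)  # (112, 112, 64) ... (7, 7, 512)
print(params)  # 138357544
```

The spatial size only changes at the pooling steps (224, 112, 56, 28, 14, 7), and most of the roughly 138 million parameters sit in the first fully connected layer, which maps the 7*7*512 pool5 output to 4096 units.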

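Note: the constructor of the custom class Vgg16 in this file loads the previously trained parameters, namely the weights W and the biases. Because these are the parameters for 1000-class recognition, the file is fairly large. If you want to test it, you can download the vgg16.npy file from the following link: download link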
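The loading step in the constructor can be tried out without the large file. The sketch below builds a tiny stand-in dictionary with the same assumed layout (layer name mapped to `[W, b]`, which is what the `[0]` and `[1]` indexing in vgg16.py implies), saves it with `np.save`, and reads it back. The file name `tiny_vgg.npy` and the zero-filled arrays are placeholders for illustration only.

```python
import os
import tempfile

import numpy as np

# Hypothetical miniature "weights file": each layer name maps to [W, b],
# the layout that get_conv_filter and get_bias index with [0] and [1].
params = {
    "conv1_1": [np.zeros((3, 3, 3, 64), dtype=np.float32),
                np.zeros(64, dtype=np.float32)],
}

path = os.path.join(tempfile.mkdtemp(), "tiny_vgg.npy")
np.save(path, params)  # the dict is pickled inside a 0-d object array

# Object arrays are only loaded when allow_pickle=True is passed explicitly.
loaded = np.load(path, allow_pickle=True)
data_dict = loaded.item()  # unwrap the 0-d array to get the dict back

weights, biases = data_dict["conv1_1"]
print(loaded.shape, type(data_dict).__name__)
print(weights.shape, biases.shape)
```

Only pass `allow_pickle=True` for files you trust, since unpickling can execute arbitrary code.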

Now let's look at the test file, test_vgg16.py:

import numpy as np
import tensorflow as tf

# import vgg16
from vgg16 import Vgg16
import utils
import hello

hello.hello()

img1 = utils.load_image("./test_data/tiger.jpeg")
img2 = utils.load_image("./test_data/timg.jpg")

batch1 = img1.reshape((1, 224, 224, 3))
batch2 = img2.reshape((1, 224, 224, 3))

batch = np.concatenate((batch1, batch2), 0)  # shape [2, 224, 224, 3]

'''
numpy.concatenate((a1, a2, ...), axis=0)
a1, a2, ... : the arrays to join
axis : the axis to join along; the default 0 is the first axis (here, the batch axis)
'''

print(batch.shape)

# with tf.Session(config=tf.ConfigProto(gpu_options=(tf.GPUOptions(per_process_gpu_memory_fraction=0.7)))) as sess:
with tf.device('/cpu:0'):
    with tf.Session() as sess:
        images = tf.placeholder("float", [2, 224, 224, 3])
        feed_dict = {images: batch}

        vgg = Vgg16()  # the Vgg16 class defined in vgg16.py
        with tf.name_scope("content_vgg"):
            vgg.build(images)

        # vgg.prob is the instance attribute set in build(): self.prob = tf.nn.softmax(self.fc8, name="prob")
        prob = sess.run(vgg.prob, feed_dict=feed_dict)
        # Prediction matrix of shape (2, 1000): one row of class probabilities per image,
        # similar to handwritten digit recognition, where [1, 0, 0, 0, ...] means the digit 0
        print(prob)
        utils.print_prob(prob[0], './synset.txt')
        utils.print_prob(prob[1], './synset.txt')

Test with two images:

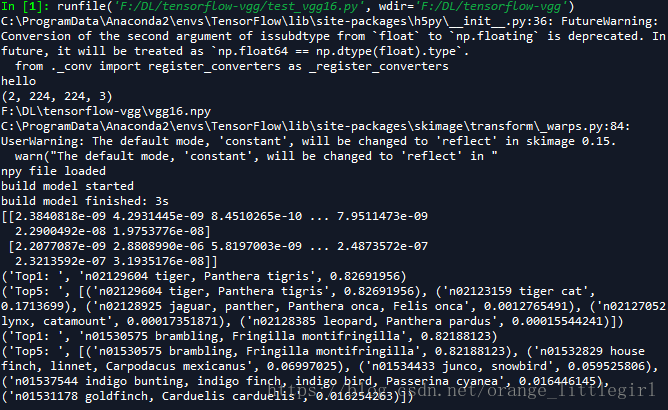

Run the test_vgg16.py file:

The output shows that both images are classified correctly: tiger and brambling (a small finch).
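Reading a class name off one row of the (2, 1000) probability matrix comes down to a softmax followed by a sort. The sketch below shows that idea in plain Python on a made-up 4-class example. It is not the actual `utils.print_prob` from the project, and the labels and logits are invented for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(prob, labels, k=5):
    """Return the k (label, probability) pairs with the highest probability."""
    order = sorted(range(len(prob)), key=lambda i: prob[i], reverse=True)
    return [(labels[i], prob[i]) for i in order[:k]]


# Hypothetical 4-class example; the real model has 1000 classes listed in synset.txt.
labels = ["tiger", "brambling", "goldfish", "tabby"]
logits = [4.0, 1.0, 0.5, 2.0]
prob = softmax(logits)
for label, p in top_k(prob, labels, k=3):
    print(f"{label}: {p:.3f}")
```

With the real network, `prob[0]` and `prob[1]` from `sess.run` would take the place of `prob`, and the labels would be the lines of synset.txt.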
