Papers for the DeepLab series of networks

Paper links:

v1: https://arxiv.org/abs/1412.7062

v2: https://ieeexplore.ieee.org/abstract/document/7913730/

v3: https://arxiv.org/abs/1706.05587

v3+: https://openaccess.thecvf.com/content_ECCV_2018/html/Liang-Chieh_Chen_Encoder-Decoder_with_Atrous_ECCV_2018_paper.html

Comparison of key structures in the DeepLab series

The main improvements are in the backbone and in the atrous convolution (which is simply a dilated convolution).

DeepLab V1: Network Structure

V1:

- Backbone: VGG-16

- Connections across layers: feature maps are taken from intermediate layers

- Dilation is used to enlarge the receptive field

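Question: The features from Layer1 to Layer4 and from FC7 have different spatial sizes, so how can they be added together?

- The first operation of each classification branch is a strided convolution; by setting its stride appropriately, all feature maps are brought to the same size.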
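A small arithmetic sketch of this answer, assuming (hypothetically) that the tapped feature maps are 256, 128, 64 and 64 pixels wide for a 512×512 input and that each branch starts with a 3×3 convolution with padding 1. The helper names are illustrative, not taken from the original code:

```python
def conv_out_size(size: int, kernel: int, stride: int, padding: int, dilation: int = 1) -> int:
    """Standard convolution output size along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def branch_stride(tap_size: int, target_size: int) -> int:
    """Stride the first conv of a branch needs so that tap_size shrinks to target_size."""
    if tap_size % target_size != 0:
        raise ValueError("tap size must be a multiple of the target size")
    return tap_size // target_size


# Hypothetical tap sizes for a 512x512 input; the deepest maps are 64x64.
tap_sizes = [256, 128, 64, 64]
target = 64
out_sizes = [
    conv_out_size(s, kernel=3, stride=branch_stride(s, target), padding=1)
    for s in tap_sizes
]
print(out_sizes)  # [64, 64, 64, 64]
```

Once every branch outputs a 64×64 map with the same number of channels, the maps can be added element-wise.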

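Extension: the dilated convolution operation

Output size formula (input size $H$, kernel size $k$, stride $s$, padding $p$, dilation $d$):

$$H_{out} = \left\lfloor \frac{H + 2p - d(k-1) - 1}{s} \right\rfloor + 1$$

Different dilation rates cover receptive fields of different scales: a $k \times k$ kernel with dilation $d$ spans $k + (k-1)(d-1)$ pixels per side.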
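The output-size formula and the effective kernel size can be checked with a few lines of plain Python. `stacked_receptive_field` is a hypothetical helper that assumes stride-1 convolutions applied one after another:

```python
def conv_out_size(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """floor((H + 2p - d(k-1) - 1) / s) + 1 along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def effective_kernel(kernel: int, dilation: int) -> int:
    """Number of input pixels spanned by a dilated kernel: k + (k - 1)(d - 1)."""
    return kernel + (kernel - 1) * (dilation - 1)


def stacked_receptive_field(kernel: int, dilations: list) -> int:
    """Receptive field of stride-1 dilated convs applied one after another."""
    rf = 1
    for d in dilations:
        rf += effective_kernel(kernel, d) - 1
    return rf


for d in (1, 2, 4):
    print(f"d={d}: effective kernel {effective_kernel(3, d)}, "
          f"64 -> {conv_out_size(64, 3, padding=d, dilation=d)}")
print(stacked_receptive_field(3, [1, 1, 1]))  # 7
print(stacked_receptive_field(3, [1, 2, 4]))  # 15
```

Three plain 3×3 convs see 7 pixels, while the same three convs with dilations 1, 2, 4 see 15, with no extra parameters.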

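A larger receptive field lets the network see farther, but larger is not always better: when it looks too far, the extra context may have nothing to do with the local neighborhood, and a larger receptive field also means that more of what is seen has to be remembered.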

DeepLab V2: Network Structure

V2:

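- Backbone: ResNet

- ASPP (Atrous Spatial Pyramid Pooling) module

The ASPP in DeepLab V2 does not fuse features from earlier layers; it simply adds up the outputs of the ASPP branches to produce the output. In V2, the output was also not upsampled; instead, the label was downsampled so that the output and the label had the same size when computing the loss. Almost no recent papers downsample the label anymore.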
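The two V2-specific details in this paragraph can be sketched on plain nested lists (a toy stand-in for tensors; the function names are hypothetical). Branch outputs are fused by element-wise summation, and the label map is downsampled by nearest-neighbour sampling, since class ids must never be interpolated or averaged:

```python
from typing import List

Grid = List[List[float]]


def sum_branches(branches: List[Grid]) -> Grid:
    """Fuse same-sized ASPP branch score maps by element-wise summation (V2 style)."""
    h, w = len(branches[0]), len(branches[0][0])
    return [[sum(b[i][j] for b in branches) for j in range(w)] for i in range(h)]


def downsample_labels_nearest(label: List[List[int]], stride: int) -> List[List[int]]:
    """Keep every `stride`-th pixel, so each output cell is still a valid class id."""
    return [row[::stride] for row in label[::stride]]


label = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 3, 3],
    [2, 2, 3, 3],
]
print(downsample_labels_nearest(label, 2))  # [[0, 1], [2, 3]]
print(sum_branches([[[1.0, 2.0]], [[0.5, 0.5]]]))  # [[1.5, 2.5]]
```

The approach used in most current work is the reverse: the logits are upsampled (typically bilinearly) to the label size, and the label keeps its full resolution.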

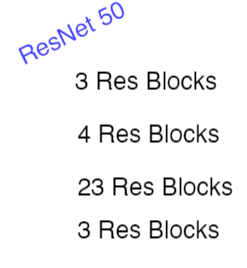

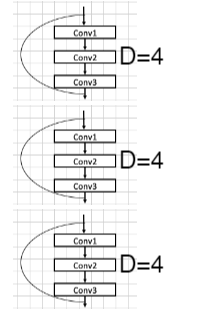

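In the figures above, Res Layers3 and Res Layers4 use dilation=2 and dilation=4 respectively. Taking ResNet50 as an example, its blocks are as follows.

Res Layers4 using dilation=4 means that in each of the three blocks of Layer4, the 3×3 convolution uses dilation=4 (dilation has no effect on the 1×1 convolutions).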
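A sketch of why dilation is used here: the helper below traces the spatial size through ResNet50 for a 512×512 input, modelling only the resolution-changing op of each stage (a simplification that ignores the 1×1 convs, which keep the size). Replacing the stride-2 convs of Res Layers3/4 with stride 1 plus dilation 2/4 keeps the map at 64×64 (output stride 8) instead of 16×16 (output stride 32):

```python
def conv_out_size(size, kernel, stride, padding, dilation=1):
    """floor((H + 2p - d(k-1) - 1) / s) + 1 along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


def trace_sizes(input_size, stages):
    """Apply each (name, kernel, stride, padding, dilation) op in turn and record the size."""
    size, sizes = input_size, {}
    for name, k, s, p, d in stages:
        size = conv_out_size(size, k, s, p, d)
        sizes[name] = size
    return sizes


# Resolution-changing op of each stage (simplified model of ResNet50).
stem = [("conv7x7", 7, 2, 3, 1), ("maxpool3x3", 3, 2, 1, 1),
        ("layer1", 3, 1, 1, 1), ("layer2", 3, 2, 1, 1)]
standard = stem + [("layer3", 3, 2, 1, 1), ("layer4", 3, 2, 1, 1)]
dilated = stem + [("layer3", 3, 1, 2, 2), ("layer4", 3, 1, 4, 4)]

for name, stages in (("standard", standard), ("dilated", dilated)):
    final = trace_sizes(512, stages)["layer4"]
    print(name, final, "output stride", 512 // final)
```

For the dilated version this prints a final size of 64 and an output stride of 8, which is why the ASPP discussion below works with 64×64 feature maps.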

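Question: Why doesn't the ASPP in DeepLab V2 fuse features from earlier layers?

- The ASPP branches have different receptive fields, so each branch can already see a much wider context.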

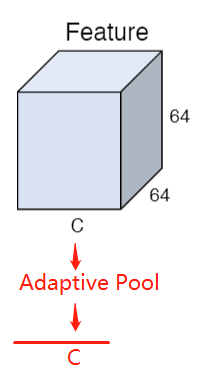

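ASPP module

Question: After a 3×3 (dilated) convolution, how do we make sure the output is still 64×64 (i.e., how does the convolution proceed)?

- Add padding: for a 3×3 kernel with stride 1, padding equal to the dilation rate keeps the spatial size unchanged.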
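A one-dimensional sketch of the padding rule, in plain Python (the real layers are 2D, but the size arithmetic is the same per axis). Like deep learning frameworks, it computes a cross-correlation; `dilated_conv1d` is an illustrative name:

```python
def dilated_conv1d(x, weights, dilation, padding):
    """Stride-1 1D dilated convolution (cross-correlation) with zero padding."""
    k = len(weights)
    padded = [0.0] * padding + list(x) + [0.0] * padding
    # The kernel spans dilation * (k - 1) + 1 input positions.
    out_len = len(padded) - dilation * (k - 1)
    return [
        sum(weights[t] * padded[i + t * dilation] for t in range(k))
        for i in range(out_len)
    ]


signal = [float(v) for v in range(1, 9)]
for d in (1, 2, 4):
    y = dilated_conv1d(signal, [1.0, 1.0, 1.0], dilation=d, padding=d)
    print(f"dilation={d}: output length {len(y)}")  # always 8
```

With padding = d, the padded length is n + 2d and the kernel span is 2d + 1, so exactly n outputs remain.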

Differences between DeepLab V2 and PSPNet

- PSPNet uses adaptive pooling

- DeepLab V2 uses dilated convolutions
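To make the contrast concrete, here is a minimal adaptive average pooling on a 2D grid in plain Python. PSPNet's pyramid pools to a fixed number of bins (1×1, 2×2, 3×3 and 6×6 in the PSPNet paper) regardless of the input size, whereas DeepLab V2 keeps the resolution and varies the dilation instead. The bin convention start = ⌊i·H/out⌋, end = ⌈(i+1)·H/out⌉ is an assumption (it is the one PyTorch uses):

```python
def adaptive_bins(in_size, out_size):
    """(start, end) of each bin: start = floor(i*in/out), end = ceil((i+1)*in/out)."""
    return [((i * in_size) // out_size, -(-((i + 1) * in_size) // out_size))
            for i in range(out_size)]


def adaptive_avg_pool2d(grid, out_h, out_w):
    """Average-pool a 2D grid (nested lists) down to exactly out_h x out_w cells."""
    h, w = len(grid), len(grid[0])
    result = []
    for r0, r1 in adaptive_bins(h, out_h):
        row = []
        for c0, c1 in adaptive_bins(w, out_w):
            cells = [grid[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        result.append(row)
    return result


grid = [[4 * r + c for c in range(4)] for r in range(4)]
print(adaptive_avg_pool2d(grid, 1, 1))  # [[7.5]]
print(adaptive_avg_pool2d(grid, 2, 2))  # [[2.5, 4.5], [10.5, 12.5]]
```

When the input size is not divisible by the number of bins, neighbouring bins overlap slightly, e.g. `adaptive_bins(5, 3)` gives `[(0, 2), (1, 4), (3, 5)]`.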

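DeepLab V3: Network Structure

Improved ASPP module

Two extra branches (two extra feature maps) are added:

- 1×1 Conv

- Adaptive pooling (image-level pooling down to 1×1, i.e., a single vector per image, which is then upsampled back)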
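A plain-Python sketch of the image-level pooling branch and of the channel count after concatenation. The tensor is modelled as C×H×W nested lists, and the helper names are hypothetical; the channel formula mirrors `num_filters * (2 + len(dilation_rates))` in the `ASPPModule` code below:

```python
def image_pool_branch(feature):
    """feature: C x H x W nested lists. Global-average each channel, then broadcast to H x W."""
    out = []
    for channel in feature:
        h, w = len(channel), len(channel[0])
        mean = sum(sum(row) for row in channel) / (h * w)
        # Upsampling a 1x1 map yields the same value at every position.
        out.append([[mean] * w for _ in range(h)])
    return out


def aspp_v3_concat_channels(branch_channels, num_dilated_branches):
    """1x1 conv + dilated 3x3 convs + image pooling, concatenated on the channel axis."""
    return branch_channels * (2 + num_dilated_branches)


feature = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 8.0]]]
print(image_pool_branch(feature))  # [[[2.5, 2.5], [2.5, 2.5]], [[2.0, 2.0], [2.0, 2.0]]]
print(aspp_v3_concat_channels(256, 3))  # 1280
```

With three dilated branches of 256 channels each, the projection 1×1 conv therefore receives 1280 channels and maps them back to 256.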

DeepLab V3: Multi-Grid

Starting from Res Layers3, different dilation rates are used, with the dilation D increasing gradually.
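The DeepLab V3 paper defines Multi-Grid as a tuple of unit rates (r1, r2, r3) for the three blocks of a stage: the final dilation of each block is its unit rate multiplied by the stage's base rate. A sketch, assuming for illustration base rates that double from one duplicated stage to the next:

```python
def multi_grid_rates(base_rate, unit_rates=(1, 2, 4)):
    """Final dilation of each block in a stage = base rate x its Multi-Grid unit rate."""
    return tuple(base_rate * r for r in unit_rates)


# Illustrative base rates that double for each duplicated copy of Res Layers4.
for stage, base in (("block4", 2), ("block5", 4), ("block6", 8), ("block7", 16)):
    print(stage, multi_grid_rates(base))
```

Here block4 gets rates (2, 4, 8) and block7 gets (16, 32, 64).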

The final DeepLab V3 framework is as follows:

DeepLab V3: Multi-Scale (Inference)

Predict at multiple scales and fuse the results: average them, then apply softmax and argmax.

This improves accuracy.
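The fusion step can be sketched per pixel in plain Python, assuming the per-scale logits have already been resized to the original image size (`fuse_multi_scale` is an illustrative name, and pixels are flattened into a list for brevity):

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_multi_scale(per_scale_logits):
    """per_scale_logits[s][p][c]: logits at scale s for pixel p and class c.
    Averages over scales, applies softmax, and returns one class id per pixel."""
    num_scales = len(per_scale_logits)
    labels = []
    for p in range(len(per_scale_logits[0])):
        num_classes = len(per_scale_logits[0][p])
        avg = [sum(per_scale_logits[s][p][c] for s in range(num_scales)) / num_scales
               for c in range(num_classes)]
        probs = softmax(avg)
        labels.append(max(range(num_classes), key=lambda c: probs[c]))
    return labels


scale_a = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
scale_b = [[0.0, 0.0, 1.0], [0.0, 3.0, 0.0]]
print(fuse_multi_scale([scale_a, scale_b]))  # [0, 1]
```

Softmax is monotonic, so it does not change the argmax of the averaged logits; it matters only when class probabilities are needed, for example when averaging probabilities instead of logits.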

Prediction strategies:

① Resize:

Assuming the model input is 512×512, resize the H×W image to 512×512, run the model, and finally resize the prediction back to H×W.
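Strategy ① can be sketched with a centre-aligned nearest-neighbour resize on nested lists. `toy_model` is a hypothetical stand-in for the network; real pipelines usually resize the image bilinearly and upsample the logits bilinearly before the argmax:

```python
def resize_nearest(grid, out_h, out_w):
    """Centre-aligned nearest-neighbour resize of a 2D grid (nested lists)."""
    h, w = len(grid), len(grid[0])
    return [[grid[((2 * i + 1) * h) // (2 * out_h)][((2 * j + 1) * w) // (2 * out_w)]
             for j in range(out_w)]
            for i in range(out_h)]


def predict_with_resize(image, model, model_size=512):
    """Resize to the model's square input, predict, then resize the prediction back."""
    h, w = len(image), len(image[0])
    pred = model(resize_nearest(image, model_size, model_size))
    return resize_nearest(pred, h, w)


def toy_model(x):
    # Hypothetical stand-in for the network: thresholds each pixel into class 0 or 1.
    return [[1 if v > 0.5 else 0 for v in row] for row in x]


image = [[0.1, 0.9, 0.2, 0.8, 0.7],
         [0.6, 0.3, 0.4, 0.9, 0.0],
         [0.2, 0.2, 0.9, 0.1, 0.5]]
mask = predict_with_resize(image, toy_model, model_size=8)
print(mask)
```

The returned mask always has the original H×W shape, whatever the model's input size.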

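② Direct: feed the image to the model at its original size

③ Sliding Window + Padding

Assume the model input is 128×128.

Crop 128×128 regions and feed each one to the model for segmentation; a dense set of (overlapping) windows all pass through the model, and the overlapping predictions are averaged. If H or W is not a multiple of 128, simply pad the image. Finally, remember to crop the result back to the original size.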
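Strategy ③ in plain Python, as a sketch under simple assumptions: zero padding on the bottom/right, a square window, `stride <= window`, and a `model` callable that maps a window to a same-sized score map (the identity lambda below is a hypothetical stand-in):

```python
def padded_size(size, window, stride):
    """Smallest padded length so that windows at 0, stride, 2*stride, ... end at the border."""
    if size <= window:
        return window
    steps = -(-(size - window) // stride)  # ceil((size - window) / stride)
    return steps * stride + window


def sliding_window_predict(image, model, window, stride):
    if not 0 < stride <= window:
        raise ValueError("need 0 < stride <= window so every pixel is covered")
    h, w = len(image), len(image[0])
    ph, pw = padded_size(h, window, stride), padded_size(w, window, stride)
    # Zero-pad on the bottom/right up to (ph, pw).
    padded = [[image[i][j] if i < h and j < w else 0.0 for j in range(pw)] for i in range(ph)]
    total = [[0.0] * pw for _ in range(ph)]
    count = [[0] * pw for _ in range(ph)]
    for top in range(0, ph - window + 1, stride):
        for left in range(0, pw - window + 1, stride):
            patch = [row[left:left + window] for row in padded[top:top + window]]
            pred = model(patch)
            for i in range(window):
                for j in range(window):
                    total[top + i][left + j] += pred[i][j]
                    count[top + i][left + j] += 1
    # Average the overlapping predictions, then crop back to the original size.
    return [[total[i][j] / count[i][j] for j in range(w)] for i in range(h)]


image = [[float(7 * i + j) for j in range(7)] for i in range(5)]
result = sliding_window_predict(image, lambda patch: patch, window=4, stride=2)
print(result == image)  # True: an identity "model" reproduces the input
```

Setting `stride = window` gives non-overlapping tiles and pads H and W up to multiples of the window, as in the 128×128 example; a smaller stride gives the denser, averaged windows described above.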

Code:

1. deeplab.py (DeepLab V3)

import numpy as np
import paddle.fluid as fluid
from paddle.fluid.dygraph import to_variable
from paddle.fluid.dygraph import Layer
from paddle.fluid.dygraph import Conv2D
from paddle.fluid.dygraph import BatchNorm
from paddle.fluid.dygraph import Dropout
from resnet_multi_grid import ResNet50, ResNet101, ResNet152

class ASPPPooling(Layer):
    # ASPPPooling: adaptive_pool + Conv1×1 + BN + ReLU + interpolate
    def __init__(self, num_channels, num_filters):
        super(ASPPPooling, self).__init__()
        self.features = fluid.dygraph.Sequential(
            Conv2D(num_channels=num_channels, num_filters=num_filters, filter_size=1),
            BatchNorm(num_channels=num_filters, act='relu')
        )

    def forward(self, inputs):
        n, c, h, w = inputs.shape
        # Image-level features: global *average* pooling to 1x1
        # (adaptive_pool2d defaults to pool_type='max', so 'avg' is set explicitly)
        x = fluid.layers.adaptive_pool2d(inputs, (1, 1), pool_type='avg')
        x = self.features(x)
        # Upsample the 1x1 map back to the input's spatial size
        x = fluid.layers.interpolate(x, (h, w), align_corners=False)
        return x

class ASPPConv(fluid.dygraph.Sequential):
    # ASPPConv (used ×3): dilated Conv3×3 + BN + ReLU
    def __init__(self, num_channels, num_filters, dilation):
        super(ASPPConv, self).__init__(
            # padding = dilation keeps the spatial size for a 3x3 kernel with stride 1
            Conv2D(num_channels=num_channels, num_filters=num_filters, filter_size=3,
                   padding=dilation, dilation=dilation),
            BatchNorm(num_filters, act='relu')
        )

class ASPPModule(Layer):
    # Conv1×1, ASPPConv ×3, ASPPPooling, concat, project
    def __init__(self, num_channels, num_filters, dilation_rates):
        super(ASPPModule, self).__init__()
        features = []
        # Conv1×1 + BN + ReLU
        features.append(
            fluid.dygraph.Sequential(
                Conv2D(num_channels=num_channels, num_filters=num_filters, filter_size=1),
                BatchNorm(num_channels=num_filters, act='relu')
            )
        )
        # ASPPConv ×3: dilated Conv3×3 + BN + ReLU
        for r in dilation_rates:
            features.append(ASPPConv(num_channels, num_filters, r))
        # ASPPPooling: adaptive_pool + Conv1×1 + BN + ReLU + interpolate
        features.append(ASPPPooling(num_channels, num_filters))
        # A plain Python list does not register sublayers; LayerList makes their
        # parameters visible to parameters(), the optimizer and state_dict()
        self.features = fluid.dygraph.LayerList(features)
        # concat is done in forward()
        # Project = Conv1×1 + BN + ReLU: the rightmost 1×1 conv (C'') of the improved ASPP,
        # whose input is the 5C' channels of all concatenated branches
        self.project = fluid.dygraph.Sequential(
            Conv2D(num_channels=num_filters * (2 + len(dilation_rates)),
                   num_filters=num_filters, filter_size=1),
            BatchNorm(num_filters, act='relu')
        )

    def forward(self, inputs):
        res = []
        for op in self.features:
            res.append(op(inputs))
        # concat along the channel axis
        x = fluid.layers.concat(res, axis=1)
        x = self.project(x)
        return x

class DeepLabHead(fluid.dygraph.Sequential):
    # ASPPModule, 3×3 Conv, BN, 1×1 Conv
    def __init__(self, num_channels, num_classes):
        super(DeepLabHead, self).__init__(
            ASPPModule(num_channels, 256, [12, 24, 36]),
            Conv2D(num_channels=256, num_filters=256, filter_size=3, padding=1),
            BatchNorm(256, act='relu'),
            Conv2D(256, num_classes, 1)
        )

class DeepLab(Layer):
    def __init__(self, num_classes=59):
        super(DeepLab, self).__init__()
        # Dilation is already applied inside resnet_multi_grid.py
        resnet = ResNet50(pretrained=False)
        self.layer0 = fluid.dygraph.Sequential(
            resnet.conv,
            resnet.pool2d_max
        )
        self.layer1 = resnet.layer1
        self.layer2 = resnet.layer2
        self.layer3 = resnet.layer3  # dilation = 2
        self.layer4 = resnet.layer4  # multi-grid dilation = 1, 2, 4
        # multi-grid: duplicated copies of Res Layers4 (rates as built in resnet_multi_grid.py)
        self.layer5 = resnet.layer5  # Res Layers4 copy 1: dilation = 1, 2, 4
        self.layer6 = resnet.layer6  # Res Layers4 copy 2: dilation = 2, 4, 8
        self.layer7 = resnet.layer7  # Res Layers4 copy 3: dilation = 4, 8, 16
        feature_dim = 2048
        self.classifier = DeepLabHead(feature_dim, num_classes)

    def forward(self, inputs):
        n, c, h, w = inputs.shape
        x = self.layer0(inputs)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.layer5(x)
        x = self.layer6(x)
        x = self.layer7(x)
        x = self.classifier(x)
        # Upsample the logits back to the input resolution
        x = fluid.layers.interpolate(x, (h, w), align_corners=False)
        return x

def main():
    with fluid.dygraph.guard():
        x_data = np.random.rand(2, 3, 512, 512).astype(np.float32)
        x = to_variable(x_data)
        model = DeepLab(num_classes=59)
        model.eval()
        pred = model(x)
        print(pred.shape)


if __name__ == '__main__':
    main()

2. resnet_multi_grid.py

import numpy as np
import paddle.fluid as fluid
from paddle.fluid.dygraph import to_variable
from paddle.fluid.dygraph import Conv2D
from paddle.fluid.dygraph import BatchNorm
from paddle.fluid.dygraph import Pool2D
from paddle.fluid.dygraph import Linear

model_path = {'ResNet18': './resnet18',
              'ResNet34': './resnet34',
              'ResNet50': './resnet50',
              'ResNet101': './resnet101',
              'ResNet152': './resnet152'}

class ConvBNLayer(fluid.dygraph.Layer):
    def __init__(self,
                 num_channels,
                 num_filters,
                 filter_size,
                 stride=1,
                 groups=1,
                 act=None,
                 dilation=1,
                 padding=None,
                 name=None):
        super(ConvBNLayer, self).__init__(name)
        if padding is None:
            # "same"-style padding for an undilated, odd-sized kernel
            padding = (filter_size - 1) // 2
        self.conv = Conv2D(num_channels=num_channels,
                           num_filters=num_filters,
                           filter_size=filter_size,
                           stride=stride,
                           padding=padding,
                           groups=groups,
                           act=None,
                           dilation=dilation,
                           bias_attr=False)
        self.bn = BatchNorm(num_filters, act=act)

    def forward(self, inputs):
        y = self.conv(inputs)
        y = self.bn(y)
        return y

class BasicBlock(fluid.dygraph.Layer):
    expansion = 1  # expand ratio for last conv output channel in each block

    def __init__(self,
                 num_channels,
                 num_filters,
                 stride=1,
                 shortcut=True,
                 dilation=1,
                 padding=None,
                 name=None):
        super(BasicBlock, self).__init__(name)
        # dilation/padding are accepted because make_layer() passes them to every block
        self.conv0 = ConvBNLayer(num_channels=num_channels,
                                 num_filters=num_filters,
                                 filter_size=3,
                                 stride=stride,
                                 act='relu',
                                 dilation=dilation,
                                 padding=padding,
                                 name=name)
        self.conv1 = ConvBNLayer(num_channels=num_filters,
                                 num_filters=num_filters,
                                 filter_size=3,
                                 act=None,
                                 dilation=dilation,
                                 padding=padding,
                                 name=name)
        if not shortcut:
            self.short = ConvBNLayer(num_channels=num_channels,
                                     num_filters=num_filters,
                                     filter_size=1,
                                     stride=stride,
                                     act=None,
                                     name=name)
        self.shortcut = shortcut

    def forward(self, inputs):
        conv0 = self.conv0(inputs)
        conv1 = self.conv1(conv0)
        if self.shortcut:
            short = inputs
        else:
            short = self.short(inputs)
        y = fluid.layers.elementwise_add(x=short, y=conv1, act='relu')
        return y

class BottleneckBlock(fluid.dygraph.Layer):
    expansion = 4

    def __init__(self,
                 num_channels,
                 num_filters,
                 stride=1,
                 shortcut=True,
                 dilation=1,
                 padding=None,
                 name=None):
        super(BottleneckBlock, self).__init__(name)
        # 1x1 conv: reduce channels
        self.conv0 = ConvBNLayer(num_channels=num_channels,
                                 num_filters=num_filters,
                                 filter_size=1,
                                 act='relu')
        # 3x3 conv: carries the stride and the (possibly dilated) receptive field
        self.conv1 = ConvBNLayer(num_channels=num_filters,
                                 num_filters=num_filters,
                                 filter_size=3,
                                 stride=stride,
                                 padding=padding,
                                 act='relu',
                                 dilation=dilation)
        # 1x1 conv: expand channels by 4
        self.conv2 = ConvBNLayer(num_channels=num_filters,
                                 num_filters=num_filters * 4,
                                 filter_size=1,
                                 stride=1)
        if not shortcut:
            # projection shortcut when the shape changes
            self.short = ConvBNLayer(num_channels=num_channels,
                                     num_filters=num_filters * 4,
                                     filter_size=1,
                                     stride=stride)
        self.shortcut = shortcut
        self.num_channel_out = num_filters * 4

    def forward(self, inputs):
        conv0 = self.conv0(inputs)
        conv1 = self.conv1(conv0)
        conv2 = self.conv2(conv1)
        if self.shortcut:
            short = inputs
        else:
            short = self.short(inputs)
        y = fluid.layers.elementwise_add(x=short, y=conv2, act='relu')
        return y

class ResNet(fluid.dygraph.Layer):
    def __init__(self, layers=50, num_classes=1000, multi_grid=[1, 2, 4], duplicate_blocks=False):
        super(ResNet, self).__init__()
        self.layers = layers
        supported_layers = [18, 34, 50, 101, 152]
        assert layers in supported_layers
        mgr = [1, 2, 4]  # multi grid rate for duplicated blocks

        if layers == 18:
            depth = [2, 2, 2, 2]
        elif layers == 34:
            depth = [3, 4, 6, 3]
        elif layers == 50:
            depth = [3, 4, 6, 3]
        elif layers == 101:
            depth = [3, 4, 23, 3]
        elif layers == 152:
            depth = [3, 8, 36, 3]

        if layers < 50:
            num_channels = [64, 64, 128, 256, 512]
        else:
            num_channels = [64, 256, 512, 1024, 2048]
        num_filters = [64, 128, 256, 512]

        self.conv = ConvBNLayer(num_channels=3,
                                num_filters=64,
                                filter_size=7,
                                stride=2,
                                act='relu')
        self.pool2d_max = Pool2D(pool_size=3,
                                 pool_stride=2,
                                 pool_padding=1,
                                 pool_type='max')

        if layers < 50:
            block = BasicBlock
            l1_shortcut = True
        else:
            block = BottleneckBlock
            l1_shortcut = False

        self.layer1 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[0], num_filters[0], depth[0],
                             stride=1, shortcut=l1_shortcut, name='layer1'))
        self.layer2 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[1], num_filters[1], depth[1],
                             stride=2, name='layer2'))
        # layer3: stride 1 with dilation 2 instead of stride 2
        self.layer3 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[2], num_filters[2], depth[2],
                             stride=1, dilation=2, name='layer3'))
        # layer4: stride 1 with multi-grid dilations [1, 2, 4]
        self.layer4 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[3], num_filters[3], depth[3],
                             stride=1, name='layer4', dilation=multi_grid))

        # Duplicated copies of layer4 for the multi-grid cascade.
        # They are built unconditionally; duplicate_blocks is not checked here.
        self.layer5 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[4], num_filters[3], depth[3],
                             stride=1, name='layer5',
                             dilation=[x * mgr[0] for x in multi_grid]))
        self.layer6 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[4], num_filters[3], depth[3],
                             stride=1, name='layer6',
                             dilation=[x * mgr[1] for x in multi_grid]))
        self.layer7 = fluid.dygraph.Sequential(
            *self.make_layer(block, num_channels[4], num_filters[3], depth[3],
                             stride=1, name='layer7',
                             dilation=[x * mgr[2] for x in multi_grid]))

        self.last_pool = Pool2D(pool_size=7,  # ignored when global_pooling is True
                                global_pooling=True,
                                pool_type='avg')
        self.fc = Linear(input_dim=num_filters[-1] * block.expansion,
                         output_dim=num_classes,
                         act=None)
        self.out_dim = num_filters[-1] * block.expansion

    def forward(self, inputs):
        # Classification forward pass: only layer1-layer4 are used here;
        # layer5-layer7 are used by DeepLab in deeplab.py
        x = self.conv(inputs)
        x = self.pool2d_max(x)
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.last_pool(x)
        x = fluid.layers.reshape(x, shape=[-1, self.out_dim])
        x = self.fc(x)
        return x

    def make_layer(self, block, num_channels, num_filters, depth, stride, dilation=1, shortcut=False, name=None):
        layers = []
        if isinstance(dilation, int):
            dilation = [dilation] * depth
        elif isinstance(dilation, (list, tuple)):
            assert len(dilation) == 3, "Wrong dilation rate for multi-grid | len should be 3"
            assert depth == 3, "multi-grid can only be applied to blocks with depth 3"
        # padding = dilation keeps the size of a 3x3 conv; None falls back to (k - 1) // 2
        padding = []
        for di in dilation:
            if di > 1:
                padding.append(di)
            else:
                padding.append(None)
        layers.append(block(num_channels,
                            num_filters,
                            stride=stride,
                            shortcut=shortcut,
                            dilation=dilation[0],
                            padding=padding[0],
                            name=f'{name}.0'))
        for i in range(1, depth):
            layers.append(block(num_filters * block.expansion,
                                num_filters,
                                stride=1,
                                dilation=dilation[i],
                                padding=padding[i],
                                name=f'{name}.{i}'))
        return layers

def ResNet18(pretrained=False):
    # Note: make_layer() asserts depth == 3 whenever a multi-grid list is passed, and
    # ResNet18's last stage has depth 2, so this constructor fails that check as written.
    model = ResNet(layers=18)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet18'])
        model.set_dict(model_state)
    return model


def ResNet34(pretrained=False):
    model = ResNet(layers=34)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet34'])
        model.set_dict(model_state)
    return model


def ResNet50(pretrained=False, duplicate_blocks=False):
    model = ResNet(layers=50, duplicate_blocks=duplicate_blocks)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet50'])
        if duplicate_blocks:
            # load only the original ResNet parts; layer5-layer7 have no pretrained weights
            set_dict_ignore_duplicates(model, model_state)
        else:
            model.set_dict(model_state)
    return model

def findParams(model_state, name):
    # Collect the entries whose key starts with `name` and strip the "name." prefix
    new_dict = dict()
    for key, val in model_state.items():
        if name == key[0:len(name)]:
            print(f'change {key} -> {key[len(name)+1:]}')
            new_dict[key[len(name)+1:]] = val
    return new_dict


def set_dict_ignore_duplicates(model, model_state):
    # Load pretrained weights only into the original ResNet parts;
    # the duplicated blocks (layer5-layer7) keep their fresh initialization
    model.conv.set_dict(findParams(model_state, 'conv'))
    model.pool2d_max.set_dict(findParams(model_state, 'pool2d_max'))
    model.layer1.set_dict(findParams(model_state, 'layer1'))
    model.layer2.set_dict(findParams(model_state, 'layer2'))
    model.layer3.set_dict(findParams(model_state, 'layer3'))
    model.layer4.set_dict(findParams(model_state, 'layer4'))
    model.fc.set_dict(findParams(model_state, 'fc'))
    return model

def ResNet101(pretrained=False, duplicate_blocks=False):
    model = ResNet(layers=101, duplicate_blocks=duplicate_blocks)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet101'])
        if duplicate_blocks:
            set_dict_ignore_duplicates(model, model_state)
        else:
            model.set_dict(model_state)
    return model


def ResNet152(pretrained=False):
    model = ResNet(layers=152)
    if pretrained:
        model_state, _ = fluid.load_dygraph(model_path['ResNet152'])
        model.set_dict(model_state)
    return model

def main():
    with fluid.dygraph.guard():
        # x_data = np.random.rand(2, 3, 512, 512).astype(np.float32)
        x_data = np.random.rand(2, 3, 224, 224).astype(np.float32)
        x = to_variable(x_data)

        # model = ResNet18()
        # model.eval()
        # pred = model(x)
        # print('resnet18: pred.shape = ', pred.shape)

        # model = ResNet34()
        # model.eval()
        # pred = model(x)
        # print('resnet34: pred.shape = ', pred.shape)

        model = ResNet101(pretrained=False)
        model.eval()
        pred = model(x)
        print('dilated resnet101: pred.shape = ', pred.shape)

        # model = ResNet152()
        # model.eval()
        # pred = model(x)
        # print('resnet152: pred.shape = ', pred.shape)


if __name__ == "__main__":
    main()
