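DL CharRNN: AI writes short English fiction, Chinese poems, Chinese song lyrics and code for you; training RNNs in TF on datasets such as Coriolanus, with complete training & testing logs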

Contents

AI writes fiction for you: training an RNN in TF on William Shakespeare's Coriolanus to write short English prose in your place, with complete training & testing logs

AI writes poems for you: using an RNN in TF to build a "machine poet", with complete training & testing logs

AI writes Jay Chou lyrics for you: using an RNN in TF to build a "machine lyricist", with complete training & testing logs

AI writes lyrics for you (for Eason Chan): using an RNN in TF to build a "machine lyricist", with complete training & testing logs

AI writes code for you: using an RNN in TF to generate programming-language code (C++), with complete training & testing logs

AI writes fiction for you: training an RNN in TF on William Shakespeare's Coriolanus to write short English prose in your place, with complete training & testing logs

Output

1. test01

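| conce alone, APRINS: LUCIANO: SIR TOBY RELLA: ALINA: | Alone, which rebel grows thin, true mercy, and on my slate taste this paper, taste him, I set your servant there. They bring him a thing to hear, but he is now where she wants to go, and her rival too. APRINS: Sender, that is he in your blood; to you, a shrine where one makes oneself a rich man seems to me more important than the court. LUCIANO: How I am, this is holding and her sorrow to your toon and as things, have will, this is my tears, some are. SIR TOBY RELLA: If her word, what is this sorrow of my man? ALINA: I should say you are an Elmus, we would get on better with him. ANTELIUS: Would you have those stalls and wildness? There, someone would say, he would bring all her ropes here, that one bosom and my band; the woman should say this wore you; seen, bid me farewell, withered and stinging, |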

2. test02

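| fort, SILVIA: POLANIA: ANDELIO: PRINCE HENRY: SIR OF ORIANIO: LAUVANICUS: PALIS: | Fort, hear my words, she has my tongue. SILVIA: So, Metty, this hand, to seal you, we will accept this idea for a while. POLANIA: I am all their minds, this is the tongue. ANDELIO: He is with my knave, dink as you, the last time you told you, you told my shame to you, my shop, to any haze and heaven as her throb, as he has to my man and me, I have all things worth collecting. PRINCE HENRY: He has something to leave behind. SIR OF ORIANIO: He has trouble here. LAUVANICUS: Thus the horse was given a fright. PALIS: Is it true what stone is sold to whom, I am a master, a tongue, a salon, I see him, may your Starange be re-elected, in you, the notes of Vis grow ever higher. What do you think honours your house, their stay and hand, strings, thyess all have this, the soul of the news is his hand. He loves you |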
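Text like the samples above is generated one character at a time. The trained network outputs a probability for every character in the vocabulary, one character is drawn, and that character is fed back in as the next input. The post does not show its sampling code. A common CharRNN choice is to keep only the `top_n` most probable characters, renormalize, and sample among them. The sketch below assumes that scheme. `pick_top_n` is a hypothetical helper written for illustration, not a TensorFlow API.

```python
import random
from typing import Optional, Sequence


def pick_top_n(probs: Sequence[float], top_n: int = 5,
               rng: Optional[random.Random] = None) -> int:
    """Sample a character id from the top_n most probable entries of probs.

    probs is the model's next-character distribution (non-negative values);
    it is renormalized over the kept entries, so it need not sum to exactly 1.
    """
    rng = rng or random.Random()
    # Character ids ordered from most to least probable.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept = ranked[:top_n]
    total = sum(probs[i] for i in kept)
    if total <= 0:
        # All kept probabilities are zero: fall back to the first ranked id.
        return ranked[0]
    # Draw one id in proportion to its renormalized probability.
    return rng.choices(kept, weights=[probs[i] / total for i in kept], k=1)[0]


# Usage with a toy 4-character distribution.
probs = [0.05, 0.6, 0.3, 0.05]
print(pick_top_n(probs, top_n=1))                         # always the argmax: 1
print(pick_top_n(probs, top_n=2, rng=random.Random(42)))  # either 1 or 2
```

A smaller `top_n` makes the output more conservative and repetitive. A larger one makes it more varied but also more error-prone.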

Model monitoring

Complete training log

2018-10-13 17:05:49.402137:

step: 10/20000... loss: 3.4659... 0.1860 sec/batch

……

step: 1000/20000... loss: 2.0612... 0.1168 sec/batch

……

step: 2000/20000... loss: 1.9092... 0.1278 sec/batch

……

step: 3000/20000... loss: 1.8643... 0.1283 sec/batch

……

step: 10000/20000... loss: 1.8001... 0.1329 sec/batch

……

step: 15000/20000... loss: 1.7402... 0.1689 sec/batch

step: 15010/20000... loss: 1.8033... 0.2306 sec/batch

step: 15020/20000... loss: 1.8284... 0.1499 sec/batch

step: 15030/20000... loss: 1.7952... 0.1359 sec/batch

step: 15040/20000... loss: 1.7906... 0.1514 sec/batch

step: 15050/20000... loss: 1.7777... 0.1053 sec/batch

step: 15060/20000... loss: 1.7665... 0.1298 sec/batch

step: 15070/20000... loss: 1.7931... 0.1183 sec/batch

step: 15080/20000... loss: 1.8027... 0.1404 sec/batch

step: 15090/20000... loss: 1.8116... 0.1238 sec/batch

step: 15100/20000... loss: 1.7969... 0.1108 sec/batch

……

step: 19800/20000... loss: 1.8298... 0.1233 sec/batch

step: 19810/20000... loss: 1.8231... 0.1228 sec/batch

step: 19820/20000... loss: 1.7674... 0.1329 sec/batch

step: 19830/20000... loss: 1.7872... 0.1434 sec/batch

step: 19840/20000... loss: 1.8333... 0.1228 sec/batch

step: 19850/20000... loss: 1.6446... 0.1464 sec/batch

step: 19860/20000... loss: 1.8021... 0.1509 sec/batch

step: 19870/20000... loss: 1.8217... 0.1168 sec/batch

step: 19880/20000... loss: 1.7298... 0.1178 sec/batch

step: 19890/20000... loss: 1.6948... 0.1293 sec/batch

step: 19900/20000... loss: 1.7582... 0.1253 sec/batch

step: 19910/20000... loss: 1.8246... 0.1414 sec/batch

step: 19920/20000... loss: 1.7258... 0.1103 sec/batch

step: 19930/20000... loss: 1.8216... 0.1544 sec/batch

step: 19940/20000... loss: 1.7866... 0.1243 sec/batch

step: 19950/20000... loss: 1.7673... 0.1088 sec/batch

step: 19960/20000... loss: 1.7285... 0.1088 sec/batch

step: 19970/20000... loss: 1.7658... 0.1073 sec/batch

step: 19980/20000... loss: 1.8054... 0.1198 sec/batch

step: 19990/20000... loss: 1.7714... 0.1128 sec/batch

step: 20000/20000... loss: 1.7530... 0.1228 sec/batch

Training dataset

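Coriolanus is a Roman tragedy that Shakespeare wrote late in his career. It tells the story of Marcius (called Coriolanus), a hero of the Roman Republic. Suspicious and hot-tempered, he offends the populace and is banished from Rome. The play is built around the relationship between the hero and the masses, and through it exposes the weaknesses of human nature.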

1. Excerpt

First Citizen:

Before we proceed any further, hear me speak.

All:

Speak, speak.

First Citizen:

You are all resolved rather to die than to famish?

All:

Resolved. resolved.

First Citizen:

First, you know Caius Marcius is chief enemy to the people.

All:

We know't, we know't.

First Citizen:

Let us kill him, and we'll have corn at our own price.

Is't a verdict?

All:

No more talking on't; let it be done: away, away!

Second Citizen:

One word, good citizens.

First Citizen:

We are accounted poor citizens, the patricians good.

What authority surfeits on would relieve us: if they

would yield us but the superfluity, while it were

wholesome, we might guess they relieved us humanely;

but they think we are too dear: the leanness that

afflicts us, the object of our misery, is as an

inventory to particularise their abundance; our

sufferance is a gain to them Let us revenge this with

our pikes, ere we become rakes: for the gods know I

speak this in hunger for bread, not in thirst for revenge.

Second Citizen:

Would you proceed especially against Caius Marcius?

All:

Against him first: he's a very dog to the commonalty.

Second Citizen:

Consider you what services he has done for his country?

First Citizen:

Very well; and could be content to give him good

report fort, but that he pays himself with being proud.

Second Citizen:

Nay, but speak not maliciously.

First Citizen:

I say unto you, what he hath done famously, he did

it to that end: though soft-conscienced men can be

content to say it was for his country he did it to

please his mother and to be partly proud; which he

is, even till the altitude of his virtue.

Second Citizen:

What he cannot help in his nature, you account a

vice in him. You must in no way say he is covetous.

First Citizen:

If I must not, I need not be barren of accusations;

he hath faults, with surplus, to tire in repetition.

What shouts are these? The other side o' the city

is risen: why stay we prating here? to the Capitol!

All:

Come, come.

First Citizen:

Soft! who comes here?

Second Citizen:

Worthy Menenius Agrippa; one that hath always loved

the people.

First Citizen:

He's one honest enough: would all the rest were so!
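A CharRNN is trained on the raw character stream of a text like this excerpt. Every character is mapped to an integer id, and each target sequence is the input sequence shifted one position to the left, so the model learns to predict the next character. The minimal sketch below uses only the standard library. `batch_size` and `num_steps` are illustrative parameter names, not values taken from the post.

```python
from typing import Dict, Iterator, List, Tuple


def build_vocab(text: str) -> Dict[str, int]:
    """Map each distinct character to an integer id (sorted for determinism)."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}


def make_batches(ids: List[int], batch_size: int, num_steps: int
                 ) -> Iterator[Tuple[List[List[int]], List[List[int]]]]:
    """Yield (inputs, targets) pairs of shape [batch_size][num_steps].

    The stream is cut into batch_size parallel rows, so consecutive batches
    continue each row where the previous batch stopped; targets are the
    inputs shifted one character to the left.
    """
    per_batch = batch_size * num_steps
    n_batches = (len(ids) - 1) // per_batch  # -1 leaves room for the shifted target
    if n_batches == 0:
        return
    row_len = n_batches * num_steps
    rows_x = [ids[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    rows_y = [ids[r * row_len + 1:(r + 1) * row_len + 1] for r in range(batch_size)]
    for b in range(n_batches):
        s = slice(b * num_steps, (b + 1) * num_steps)
        yield [row[s] for row in rows_x], [row[s] for row in rows_y]


# Usage on the first lines of the excerpt.
text = "First Citizen:\nBefore we proceed any further, hear me speak."
vocab = build_vocab(text)
ids = [vocab[ch] for ch in text]
for x, y in make_batches(ids, batch_size=2, num_steps=8):
    print(len(x), len(x[0]))  # 2 8
```

Keeping the rows contiguous across batches lets a stateful RNN carry its hidden state from one batch to the next.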

AI writes poems for you: using an RNN in TF to build a "machine poet", with complete training & testing logs

Output

1. test01

<unk><unk>风下,天上不相逢。

一人不得别,不得不可寻。

何事无时事,谁知一日年。

一年多旧国,一处不相寻。

白发何人见,清辉自有人。

何当不知事,相送不相思。

此去无相见,何年有旧年。

相思一阳处,相送不能归。

不得千株下,何时不得时。

此心不可见,此日不相思。

何事有归客,何言不见时。

何处不知处,不知山上时。

何当不可问,一事不知情。

此去不知事,无人不有身。

相逢何处见,不觉白云间。

白云一相见,一月不知时。

一夜一秋色,青楼不可知。

江西山水下,山下白头心。

此事何人见,孤舟向日深。

何当无一事,此路不相亲。

何必有山里,不知归去人。

相知不相识,此地不能闻。

白发千株树,秋风落日寒。

2. test02

不得无时人,何人不知别。

不见一时人,相思何日在。

一夜不可知,何时无一日。

一人不自见,何处有人情。

何处不知此,何人见此年。

何时不得处,此日不知君。

一日何人得,何由有此身。

相思无不得,一去不知君。

此日何人见,东风满月中。

无知不得去,不见不相思。

不得千峰上,相逢独不穷。

何年不可待,一夜有春风。

不见南江路,相思一夜归。

何人知此路,此日更难归。

白日无人处,秋光入水流。

江南无限路,相忆在山城。

何处知何事,孤城不可归。

山边秋月尽,江水水风生。

不有南江客,何时有故乡。

山中山上水,山上水中风。

何处不相访,何年有此心。

江边秋雨尽,山水白云寒。

3. test03

一朝多不见,无处在人人。

一里无人去,无人见故山。

何年不知别,此地有相亲。

白首何时去,春风不得行。

不知归去去,谁见此来情。

不有青山去,谁知不自知。

一时何处去,此去有君人。

不见南江路,何时是白云。

不知归客去,相忆不成秋。

不得东西去,何人有一身。

不能知此事,不觉是人情。

一里无时事,相逢不得心。

江南秋雨尽,风落夜来深。

何处不知别,春来不自知。

一朝无一处,何处是江南。

一里不相见,东西无一人。

一朝归去处,何事见沧洲。

此去无年处,何年有远心。

不知山上客,不是故人心。

一里不可得,无人有一年。

何人不见处,不得一朝生。

不见青云客,何人是此时。

Model monitoring

Complete training & testing logs

1. Training

2018-10-13 22:31:33.935213:

step: 10/10000... loss: 6.5868... 0.1901 sec/batch

step: 20/10000... loss: 6.4824... 0.2401 sec/batch

step: 30/10000... loss: 6.3176... 0.2401 sec/batch

step: 40/10000... loss: 6.2126... 0.2401 sec/batch

step: 50/10000... loss: 6.0081... 0.2301 sec/batch

step: 60/10000... loss: 5.7657... 0.2401 sec/batch

step: 70/10000... loss: 5.6694... 0.2301 sec/batch

step: 80/10000... loss: 5.6661... 0.2301 sec/batch

step: 90/10000... loss: 5.6736... 0.2301 sec/batch

step: 100/10000... loss: 5.5698... 0.2201 sec/batch

step: 110/10000... loss: 5.6083... 0.2301 sec/batch

step: 120/10000... loss: 5.5252... 0.2301 sec/batch

step: 130/10000... loss: 5.4708... 0.2301 sec/batch

step: 140/10000... loss: 5.4311... 0.2401 sec/batch

step: 150/10000... loss: 5.4571... 0.2701 sec/batch

step: 160/10000... loss: 5.5295... 0.2520 sec/batch

step: 170/10000... loss: 5.4211... 0.2401 sec/batch

step: 180/10000... loss: 5.4161... 0.2802 sec/batch

step: 190/10000... loss: 5.4389... 0.4943 sec/batch

step: 200/10000... loss: 5.3380... 0.4332 sec/batch

……

step: 790/10000... loss: 5.2031... 0.2301 sec/batch

step: 800/10000... loss: 5.2537... 0.2301 sec/batch

step: 810/10000... loss: 5.0923... 0.2301 sec/batch

step: 820/10000... loss: 5.1930... 0.2501 sec/batch

step: 830/10000... loss: 5.1636... 0.2535 sec/batch

step: 840/10000... loss: 5.1357... 0.2801 sec/batch

step: 850/10000... loss: 5.0844... 0.2236 sec/batch

step: 860/10000... loss: 5.2004... 0.2386 sec/batch

step: 870/10000... loss: 5.1894... 0.2401 sec/batch

step: 880/10000... loss: 5.1631... 0.2501 sec/batch

step: 890/10000... loss: 5.1297... 0.2477 sec/batch

step: 900/10000... loss: 5.1044... 0.2401 sec/batch

step: 910/10000... loss: 5.0738... 0.2382 sec/batch

step: 920/10000... loss: 5.0971... 0.2701 sec/batch

step: 930/10000... loss: 5.1829... 0.2501 sec/batch

step: 940/10000... loss: 5.1822... 0.2364 sec/batch

step: 950/10000... loss: 5.1883... 0.2401 sec/batch

step: 960/10000... loss: 5.0521... 0.2301 sec/batch

step: 970/10000... loss: 5.0848... 0.2201 sec/batch

step: 980/10000... loss: 5.0598... 0.2201 sec/batch

step: 990/10000... loss: 5.0421... 0.2701 sec/batch

step: 1000/10000... loss: 5.1234... 0.2323 sec/batch

step: 1010/10000... loss: 5.0744... 0.2356 sec/batch

step: 1020/10000... loss: 5.0408... 0.2401 sec/batch

step: 1030/10000... loss: 5.1138... 0.2417 sec/batch

step: 1040/10000... loss: 4.9961... 0.2601 sec/batch

step: 1050/10000... loss: 4.9691... 0.2401 sec/batch

step: 1060/10000... loss: 4.9938... 0.2601 sec/batch

step: 1070/10000... loss: 4.9778... 0.2601 sec/batch

step: 1080/10000... loss: 5.0157... 0.3600 sec/batch

……

step: 4440/10000... loss: 4.7136... 0.2757 sec/batch

step: 4450/10000... loss: 4.5896... 0.2767 sec/batch

step: 4460/10000... loss: 4.6408... 0.3088 sec/batch

step: 4470/10000... loss: 4.6901... 0.2737 sec/batch

step: 4480/10000... loss: 4.5717... 0.2707 sec/batch

step: 4490/10000... loss: 4.7523... 0.2838 sec/batch

step: 4500/10000... loss: 4.7592... 0.2998 sec/batch

step: 4510/10000... loss: 4.6054... 0.2878 sec/batch

step: 4520/10000... loss: 4.7039... 0.2868 sec/batch

step: 4530/10000... loss: 4.6380... 0.2838 sec/batch

step: 4540/10000... loss: 4.5378... 0.2777 sec/batch

step: 4550/10000... loss: 4.7376... 0.3098 sec/batch

step: 4560/10000... loss: 4.7103... 0.2797 sec/batch

step: 4570/10000... loss: 4.7170... 0.2767 sec/batch

step: 4580/10000... loss: 4.7307... 0.3168 sec/batch

step: 4590/10000... loss: 4.7139... 0.3108 sec/batch

step: 4600/10000... loss: 4.7419... 0.3028 sec/batch

step: 4610/10000... loss: 4.7980... 0.2918 sec/batch

step: 4620/10000... loss: 4.7127... 0.2797 sec/batch

step: 4630/10000... loss: 4.7264... 0.2858 sec/batch

step: 4640/10000... loss: 4.6384... 0.3419 sec/batch

step: 4650/10000... loss: 4.6761... 0.2978 sec/batch

step: 4660/10000... loss: 4.8158... 0.3590 sec/batch

step: 4670/10000... loss: 4.7579... 0.2828 sec/batch

step: 4680/10000... loss: 4.7702... 0.2777 sec/batch

step: 4690/10000... loss: 4.6909... 0.2607 sec/batch

step: 4700/10000... loss: 4.6037... 0.2808 sec/batch

step: 4710/10000... loss: 4.6775... 0.2848 sec/batch

step: 4720/10000... loss: 4.6074... 0.2838 sec/batch

step: 4730/10000... loss: 4.7280... 0.3088 sec/batch

step: 4740/10000... loss: 4.7241... 0.3539 sec/batch

step: 4750/10000... loss: 4.5496... 0.2948 sec/batch

step: 4760/10000... loss: 4.6488... 0.3189 sec/batch

step: 4770/10000... loss: 4.6698... 0.3048 sec/batch

step: 4780/10000... loss: 4.6410... 0.3068 sec/batch

step: 4790/10000... loss: 4.7408... 0.3329 sec/batch

step: 4800/10000... loss: 4.6425... 0.2928 sec/batch

step: 4810/10000... loss: 4.6900... 0.2978 sec/batch

step: 4820/10000... loss: 4.5715... 0.3499 sec/batch

step: 4830/10000... loss: 4.7289... 0.2868 sec/batch

step: 4840/10000... loss: 4.7500... 0.2998 sec/batch

step: 4850/10000... loss: 4.7674... 0.2968 sec/batch

step: 4860/10000... loss: 4.6832... 0.3078 sec/batch

step: 4870/10000... loss: 4.7478... 0.3008 sec/batch

step: 4880/10000... loss: 4.7895... 0.2817 sec/batch

……

step: 9710/10000... loss: 4.5839... 0.2601 sec/batch

step: 9720/10000... loss: 4.4666... 0.2401 sec/batch

step: 9730/10000... loss: 4.6392... 0.2201 sec/batch

step: 9740/10000... loss: 4.5415... 0.2201 sec/batch

step: 9750/10000... loss: 4.6513... 0.2201 sec/batch

step: 9760/10000... loss: 4.6485... 0.2201 sec/batch

step: 9770/10000... loss: 4.5317... 0.2201 sec/batch

step: 9780/10000... loss: 4.5547... 0.2301 sec/batch

step: 9790/10000... loss: 4.3995... 0.2301 sec/batch

step: 9800/10000... loss: 4.5596... 0.2301 sec/batch

step: 9810/10000... loss: 4.5636... 0.2301 sec/batch

step: 9820/10000... loss: 4.4348... 0.2201 sec/batch

step: 9830/10000... loss: 4.5268... 0.2201 sec/batch

step: 9840/10000... loss: 4.5790... 0.2201 sec/batch

step: 9850/10000... loss: 4.6265... 0.2301 sec/batch

step: 9860/10000... loss: 4.6017... 0.2401 sec/batch

step: 9870/10000... loss: 4.4009... 0.2301 sec/batch

step: 9880/10000... loss: 4.4448... 0.2201 sec/batch

step: 9890/10000... loss: 4.5858... 0.2201 sec/batch

step: 9900/10000... loss: 4.5622... 0.2201 sec/batch

step: 9910/10000... loss: 4.4015... 0.2301 sec/batch

step: 9920/10000... loss: 4.5220... 0.2301 sec/batch

step: 9930/10000... loss: 4.5207... 0.2201 sec/batch

step: 9940/10000... loss: 4.4752... 0.2201 sec/batch

step: 9950/10000... loss: 4.4572... 0.2301 sec/batch

step: 9960/10000... loss: 4.5389... 0.2201 sec/batch

step: 9970/10000... loss: 4.5561... 0.2301 sec/batch

step: 9980/10000... loss: 4.4487... 0.2401 sec/batch

step: 9990/10000... loss: 4.4851... 0.2301 sec/batch

step: 10000/10000... loss: 4.5944... 0.2201 sec/batch
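Every log line has the same shape: `step: N/M... loss: L... T sec/batch`. The per-batch loss is noisy, so convergence is easier to judge after parsing and smoothing it. The sketch below assumes the log has been saved as text, and its regular expression only matches the line format shown above.

```python
import re
from typing import List, Tuple

# Matches lines such as "step: 9990/10000... loss: 4.4851... 0.2301 sec/batch".
LOG_RE = re.compile(
    r"step:\s*(\d+)/(\d+)\.\.\.\s*loss:\s*(\d+\.\d+)\.\.\.\s*(\d+\.\d+)\s*sec/batch")


def parse_log(text: str) -> List[Tuple[int, float, float]]:
    """Return (step, loss, seconds_per_batch) for every matching line."""
    return [(int(m.group(1)), float(m.group(3)), float(m.group(4)))
            for m in LOG_RE.finditer(text)]


def moving_average(values: List[float], window: int) -> List[float]:
    """Trailing moving average; the first points average over what is available."""
    out, acc = [], 0.0
    for i, v in enumerate(values):
        acc += v
        if i >= window:
            acc -= values[i - window]  # drop the value that left the window
        out.append(acc / min(i + 1, window))
    return out


# Usage on the first three lines of the poetry log.
sample = """step: 10/10000... loss: 6.5868... 0.1901 sec/batch
step: 20/10000... loss: 6.4824... 0.2401 sec/batch
step: 30/10000... loss: 6.3176... 0.2401 sec/batch"""
rows = parse_log(sample)
print(moving_average([loss for _, loss, _ in rows], window=2))
mean_sec = sum(sec for _, _, sec in rows) / len(rows)
print(f"mean {mean_sec:.4f} sec/batch")
```

Multiplying the mean sec/batch by the total step count gives a rough wall-clock estimate. For example, about 0.23 s × 10,000 steps is roughly 38 minutes.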

2. Testing

Training dataset

1. A large collection of five-character Tang poems

寒随穷律变,春逐鸟声开。

初风飘带柳,晚雪间花梅。

碧林青旧竹,绿沼翠新苔。

芝田初雁去,绮树巧莺来。

晚霞聊自怡,初晴弥可喜。

日晃百花色,风动千林翠。

池鱼跃不同,园鸟声还异。

寄言博通者,知予物外志。

一朝春夏改,隔夜鸟花迁。

阴阳深浅叶,晓夕重轻烟。

哢莺犹响殿,横丝正网天。

珮高兰影接,绶细草纹连。

碧鳞惊棹侧,玄燕舞檐前。

何必汾阳处,始复有山泉。

夏律昨留灰,秋箭今移晷。

峨嵋岫初出,洞庭波渐起。

桂白发幽岩,菊黄开灞涘。

运流方可叹,含毫属微理。

寒惊蓟门叶,秋发小山枝。

松阴背日转,竹影避风移。

提壶菊花岸,高兴芙蓉池。

欲知凉气早,巢空燕不窥。

山亭秋色满,岩牖凉风度。

疏兰尚染烟,残菊犹承露。

古石衣新苔,新巢封古树。

历览情无极,咫尺轮光暮。

慨然抚长剑,济世岂邀名。

星旗纷电举,日羽肃天行。

遍野屯万骑,临原驻五营。

登山麾武节,背水纵神兵。

在昔戎戈动,今来宇宙平。

翠野驻戎轩,卢龙转征旆。

遥山丽如绮,长流萦似带。

海气百重楼,岩松千丈盖。

兹焉可游赏,何必襄城外。

玄兔月初明,澄辉照辽碣。

映云光暂隐,隔树花如缀。

魄满桂枝圆,轮亏镜彩缺。

临城却影散,带晕重围结。

驻跸俯九都,停观妖氛灭。

碧原开雾隰,绮岭峻霞城。

烟峰高下翠,日浪浅深明。

斑红妆蕊树,圆青压溜荆。

迹岩劳傅想,窥野访莘情。

巨川何以济,舟楫伫时英。

春蒐驰骏骨,总辔俯长河。

霞处流萦锦,风前漾卷罗。

水花翻照树,堤兰倒插波。

岂必汾阴曲,秋云发棹歌。

重峦俯渭水,碧嶂插遥天。

……
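The `<unk>` tokens at the start of the first generated poem suggest that the character vocabulary was capped. Classical Chinese poetry uses thousands of distinct characters, and rare ones are commonly collapsed into a single `<unk>` id. The post does not state any cap. The sketch below assumes a hypothetical `max_vocab` parameter and keeps only the most frequent characters.

```python
from collections import Counter
from typing import Dict, List

UNK = "<unk>"


def build_capped_vocab(text: str, max_vocab: int) -> Dict[str, int]:
    """Keep the max_vocab most frequent characters; the next id is reserved for UNK."""
    counts = Counter(text)
    # Sort by descending frequency, then by character so ties are deterministic.
    kept = sorted(counts, key=lambda ch: (-counts[ch], ch))[:max_vocab]
    vocab = {ch: i for i, ch in enumerate(kept)}
    vocab[UNK] = len(vocab)
    return vocab


def encode(text: str, vocab: Dict[str, int]) -> List[int]:
    """Map characters to ids; anything outside the vocabulary becomes UNK."""
    unk_id = vocab[UNK]
    return [vocab.get(ch, unk_id) for ch in text]


def decode(ids: List[int], vocab: Dict[str, int]) -> str:
    """Map ids back to characters (UNK is rendered as the literal "<unk>")."""
    inv = {i: ch for ch, i in vocab.items()}
    return "".join(inv[i] for i in ids)


# Usage: with a tiny cap, rare characters decode back as <unk>.
corpus = "寒随穷律变,春逐鸟声开。初风飘带柳,晚雪间花梅。"
vocab = build_capped_vocab(corpus, max_vocab=4)
print(decode(encode("春风", vocab), vocab))
```

Capping the vocabulary shrinks the softmax layer. The cost is that rare characters can only ever be generated as `<unk>`.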

AI writes Jay Chou lyrics for you: using an RNN in TF to build a "machine lyricist", with complete training & testing logs

Output

1. test01

夕海

而我在等待之光

在月前被画面

而我心碎 你的个世纪

你的时间

我在赶过去

我的不是你不会感觉妈妈

我说不要不要说 我会爱你

我不要你不会

我不能不会别人

这样的人们我们不要

我会不要再见

你的时光机

这些爱 不要会会爱你

你也能再不要承诺

我只要这种种多简单

你的我的大人 我不会别以

我的感觉不好我走

你说你已经不能够继续

你说你爱我

你不会再想你

不会怕你 我会不要我

我不想再有一口

我要想你我不要再人

包容 你没有回忆

不要我不会感觉不来

我的不要你没有错觉

不能承受我已无奈单

不能要不要再要我会多爱我

能是我不是我

我要离开

2. test02

我说的你爱我的手

我们不能够沉默

我们的感觉

我们的爱情 我们的不是 一个人

让我们乘着阳光

让你在窗窗外面

我一路向北

一直在秋天

你的世界 是我心中不来

你的我爱你 我们

你的爱我 让我给你的美

我知道这里说你不是我的人爱

这样不会停留我的伤的

我说不要再要 我要一种悲哀

不是你的我不想我不能说说

没有你烦恼

我只能听过

我说我会不要你的爱

你说你不想听你

我不会感动的天 你要的话

我不要我的爱我

我知道这个世人不会感开了我

你我在等待

不会怕我的感觉你说我在我的手 一定有人都没不错

不要问你不要要的人

我想你我不要再知事

我也能不会再想

但我有人爱你的我爱

你说我说一点走

让我们在半边 的照跳我没有

我说的感觉 我会想你 不要我的微笑就想多快乐

我不会再要我想不得

这么多小丑你要想走

我只是你的感觉

3. test03

Model monitoring

Complete training & testing logs

1. Training

2018-10-13 21:25:28.646563:

step: 10/10000... loss: 6.4094... 0.2286 sec/batch

step: 20/10000... loss: 6.1287... 0.2296 sec/batch

step: 30/10000... loss: 6.1107... 0.2211 sec/batch

step: 40/10000... loss: 5.8126... 0.2467 sec/batch

step: 50/10000... loss: 5.6969... 0.2366 sec/batch

step: 60/10000... loss: 5.6081... 0.2406 sec/batch

step: 70/10000... loss: 5.7305... 0.2411 sec/batch

step: 80/10000... loss: 5.6465... 0.2441 sec/batch

step: 90/10000... loss: 5.4519... 0.2381 sec/batch

step: 100/10000... loss: 5.4479... 0.2271 sec/batch

step: 110/10000... loss: 5.4051... 0.2236 sec/batch

step: 120/10000... loss: 5.5111... 0.2226 sec/batch

step: 130/10000... loss: 5.4023... 0.2311 sec/batch

step: 140/10000... loss: 5.3445... 0.2266 sec/batch

step: 150/10000... loss: 5.5066... 0.2326 sec/batch

step: 160/10000... loss: 5.3925... 0.2376 sec/batch

step: 170/10000... loss: 5.4850... 0.2326 sec/batch

step: 180/10000... loss: 5.3654... 0.2206 sec/batch

step: 190/10000... loss: 5.4041... 0.2421 sec/batch

step: 200/10000... loss: 5.3814... 0.2181 sec/batch

……

step: 6800/10000... loss: 3.0295... 0.2416 sec/batch

step: 6810/10000... loss: 3.0675... 0.2747 sec/batch

step: 6820/10000... loss: 3.0043... 0.2667 sec/batch

step: 6830/10000... loss: 3.0258... 0.2276 sec/batch

step: 6840/10000... loss: 2.8450... 0.2888 sec/batch

step: 6850/10000... loss: 3.0091... 0.2797 sec/batch

step: 6860/10000... loss: 3.1547... 0.2888 sec/batch

step: 6870/10000... loss: 3.1725... 0.3108 sec/batch

step: 6880/10000... loss: 2.8332... 0.2236 sec/batch

step: 6890/10000... loss: 2.9765... 0.2186 sec/batch

step: 6900/10000... loss: 3.0040... 0.2306 sec/batch

step: 6910/10000... loss: 3.2340... 0.2136 sec/batch

step: 6920/10000... loss: 2.8041... 0.2988 sec/batch

step: 6930/10000... loss: 2.9514... 0.2216 sec/batch

step: 6940/10000... loss: 3.1620... 0.2236 sec/batch

step: 6950/10000... loss: 3.0633... 0.2136 sec/batch

step: 6960/10000... loss: 2.8951... 0.2667 sec/batch

step: 6970/10000... loss: 3.0201... 0.2206 sec/batch

step: 6980/10000... loss: 3.0102... 0.2737 sec/batch

step: 6990/10000... loss: 2.9885... 0.2527 sec/batch

step: 7000/10000... loss: 3.1201... 0.2477 sec/batch

……

step: 8300/10000... loss: 2.8474... 0.2296 sec/batch

step: 8310/10000... loss: 2.8842... 0.2386 sec/batch

step: 8320/10000... loss: 3.0446... 0.2246 sec/batch

step: 8330/10000... loss: 3.0165... 0.2537 sec/batch

step: 8340/10000... loss: 3.1366... 0.2266 sec/batch

step: 8350/10000... loss: 2.9173... 0.3058 sec/batch

step: 8360/10000... loss: 2.8468... 0.2356 sec/batch

step: 8370/10000... loss: 3.0512... 0.2406 sec/batch

step: 8380/10000... loss: 2.7532... 0.2286 sec/batch

step: 8390/10000... loss: 3.0108... 0.2136 sec/batch

step: 8400/10000... loss: 3.0818... 0.2787 sec/batch

step: 8410/10000... loss: 2.9988... 0.2406 sec/batch

step: 8420/10000... loss: 2.7640... 0.3449 sec/batch

step: 8430/10000... loss: 3.0735... 0.2356 sec/batch

step: 8440/10000... loss: 2.9183... 0.3610 sec/batch

step: 8450/10000... loss: 2.9278... 0.3168 sec/batch

step: 8460/10000... loss: 3.1321... 0.3660 sec/batch

step: 8470/10000... loss: 2.9080... 0.2547 sec/batch

step: 8480/10000... loss: 2.7861... 0.3108 sec/batch

step: 8490/10000... loss: 3.0054... 0.2878 sec/batch

step: 8500/10000... loss: 2.9389... 0.2366 sec/batch

……

step: 9800/10000... loss: 2.8674... 0.3930 sec/batch

step: 9810/10000... loss: 2.8695... 0.2136 sec/batch

step: 9820/10000... loss: 2.8561... 0.2356 sec/batch

step: 9830/10000... loss: 2.8824... 0.2186 sec/batch

step: 9840/10000... loss: 2.8722... 0.2386 sec/batch

step: 9850/10000... loss: 2.7315... 0.2116 sec/batch

step: 9860/10000... loss: 2.8568... 0.2507 sec/batch

step: 9870/10000... loss: 2.9153... 0.2216 sec/batch

step: 9880/10000... loss: 2.9715... 0.2296 sec/batch

step: 9890/10000... loss: 2.6941... 0.2256 sec/batch

step: 9900/10000... loss: 2.7294... 0.3038 sec/batch

step: 9910/10000... loss: 2.8968... 0.2376 sec/batch

step: 9920/10000... loss: 2.9734... 0.2426 sec/batch

step: 9930/10000... loss: 2.6401... 0.2276 sec/batch

step: 9940/10000... loss: 2.8176... 0.2767 sec/batch

step: 9950/10000... loss: 2.9532... 0.3429 sec/batch

step: 9960/10000... loss: 2.9369... 0.2166 sec/batch

step: 9970/10000... loss: 2.7590... 0.2206 sec/batch

step: 9980/10000... loss: 2.8109... 0.2446 sec/batch

step: 9990/10000... loss: 2.8011... 0.3038 sec/batch

step: 10000/10000... loss: 2.8250... 0.3660 sec/batch
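Typical CharRNN training code logs the mean per-character softmax cross-entropy in nats. The post does not say this is what its `loss` column measures, so the following is an assumption. Under it, `exp(loss)` is the perplexity: roughly the number of characters the model is still effectively choosing between at each step. A related sanity check is that a model predicting uniformly over V characters has loss ln V. For example, a 65-character vocabulary would give about 4.17. Losses from different datasets are not directly comparable, because their vocabularies differ in size.

```python
import math
from typing import Dict


def perplexity(loss_nats: float) -> float:
    """Perplexity of a mean cross-entropy measured in nats."""
    return math.exp(loss_nats)


def uniform_loss(vocab_size: int) -> float:
    """Cross-entropy (nats) of a model that assigns 1/vocab_size to every character."""
    return math.log(vocab_size)


# Final logged losses from the training runs in this post.
final_losses: Dict[str, float] = {
    "Coriolanus (English)": 1.7530,
    "Tang poems": 4.5944,
    "Jay Chou lyrics": 2.8250,
    "Eason Chan lyrics": 2.3973,
}

for name, loss in final_losses.items():
    print(f"{name}: loss={loss:.4f}  perplexity~{perplexity(loss):.1f}")
```

Under this reading, the final perplexities are roughly 6, 99, 17 and 11 respectively.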

Training dataset

1. The training dataset is a large collection of song lyrics

夜的第七章

打字机继续推向接近事实的那下一行

石楠烟斗的雾

飘向枯萎的树

沉默地对我哭诉

贝克街旁的圆形广场

盔甲骑士背上

鸢尾花的徽章 微亮

无人马车声响

深夜的拜访

邪恶在 维多利亚的月光下

血色的开场

消失的手枪

焦黑的手杖

融化的蜡像谁不在场

珠宝箱上 符号的假象

矛盾通往 他堆砌的死巷

证据被完美埋葬

那嘲弄苏格兰警场 的嘴角上扬

如果邪恶 是华丽残酷的乐章

它的终场 我会亲手写上

晨曦的光 风干最后一行忧伤

黑色的墨 染上安详

事实只能穿向

没有脚印的土壤

突兀的细微花香

刻意显眼的服装

每个人为不同的理由戴着面具说谎

动机也只有一种名字 那 叫做欲望

fafade~~fade~~fafa~

dedefa~~fade~~fafa~~

越过人性的沼泽

谁真的可以不被弄脏

我们可以 遗忘 原谅 但必须 知道真相

被移动过的铁床 那最后一块图终于拼上

我听见脚步声 预料的软皮鞋跟

他推开门 晚风晃了 煤油灯 一阵

打字机停在凶手的名称我转身

西敏寺的夜空 开始沸腾

在胸口绽放 艳丽的 死亡

我品尝着最后一口甜美的 真相

微笑回想正义只是安静的伸张

提琴在泰晤士

如果邪恶 是华丽残酷的乐章

它的终场 我会亲手写上

黑色的墨 染上安详

如果邪恶

是华丽残酷的乐章

它的终场 我会亲手写上

晨曦的光

风干最后一行忧伤

黑色的墨染上安详

黑色的墨染上安详

小朋友 你是否有很多问号

为什么 别人在那看漫画

我却在学画画 对着钢琴说话

别人在玩游戏 我却靠在墙壁背我的ABC

我说我要一台大大的飞机

但却得到一只旧旧录音机

为什么要听妈妈的话

长大后你就会开始懂了这段话 哼

长大后我开始明白

为什么我 跑得比别人快

飞得比别人高

将来大家看的都是我画的漫画

大家唱的都是 我写的歌

妈妈的辛苦 不让你看见

温暖的食谱在她心里面

有空就多多握握她的手

把手牵着一起梦游

听妈妈的话 别让她受伤

想快快长大 才能保护她

美丽的白发 幸福中发芽

天使的魔法 温暖中慈祥

在你的未来 音乐是你的王牌

拿王牌谈个恋爱

而我不想把你教坏

还是听妈妈的话吧

晚点再恋爱吧

我知道你未来的路

但妈比我更清楚

你会开始学其他同学

在书包写东写西

但我建议最好写妈妈

我会用功读书

用功读书 怎么会从我嘴巴说出

不想你输 所以要叫你用功读书

妈妈织给你的毛衣 你要好好的收着

因为母亲节到的时候我要告诉她我还留着

对了!我会遇到了周润发

所以你可以跟同学炫耀

赌神未来是你爸爸

我找不到 童年写的情书

你写完不要送人

因为过两天你会在操场上捡到

你会开始喜欢上流行歌

因为张学友开始准备唱《吻别》

听妈妈的话 别让她受伤

想快快长大 才能保护她

美丽的白发 幸福中发芽

天使的魔法 温暖中慈祥

听妈妈的话 别让她受伤

想快快长大 才能保护她

长大后我开始明白

为什么我 跑得比别人快

飞得比别人高

将来大家看的都是我画的漫画

大家唱的都是 我写的歌

妈妈的辛苦 不让你看见

温暖的食谱在她心里面

有空就多多握握她的手

把手牵着一起梦游

听妈妈的话 别让她受伤

想快快长大 才能保护她

美丽的白发 幸福中发芽

天使的魔法 温暖中慈祥

AI writes lyrics for you (for Eason Chan): using an RNN in TF to build a "machine lyricist", with complete training & testing logs

Output

1. test01

你的背包

一个人过我 谁不属了

不甘心 不能回头

我的背包载管这个

谁让我们是要不可

但求跟你过一生

你把我灌醉

即使嘴角从来未爱我

煽到你脖子

谁能凭我的比我

无赏

其实我的一切麻痹 我听过

不能够大到

爱人没有用

你想去哪里

如果美好不变可以

我会珍惜我最爱

我想将

鼓励爱你的

为何爱你不到你

我会加油工作争取享受和拼搏

三餐加一宿光档也许会寂寞

你想将

双手的温暖附托是你不知

但无守没抱过

不影响你不敢哭

其实没有火花 没抱动

不能够沉重

从来未休疚不够你不会

请你 这些眼发后

没有手机的日子

2. test02

谁来请你坐

全为你分声不需可怕

没有人机有几敷衍过的

难道再侣 被不想去为你

不如这样

你不爱你的

没有人歌颂

全一边扶暖之远一天一百万人

拥有殿军我想到我多 你真爱

从来未爱我 我们在

我有我是我们憎我 我不属于我

我想将

你的背包

原来不能回到你一起

难道我是谁也爱你 要不属力 不知道明年今日

明年今日 不是我不得到

爱你的背上我要

从来未不肯会 就要你不有

我会拖手会不会

难道我跟我眼睛的错

全为这世上谁是你

不够爱你的汗

谁能来我的比你闷

不具名的演员没得到手的故事

不够含泪一个人 这么迂会

我们在

3. test03

谁来请你坐

全为你分声不需可怕

没有人机有几敷衍过的

难道再侣 被不想去为你

不如这样

你不爱你的

没有人歌颂

全一边扶暖之远一天一百万人

拥有殿军我想到我多 你真爱

从来未爱我 我们在

我有我是我们憎我 我不属于我

我想将

你的背包

原来不能回到你一起

难道我是谁也爱你 要不属力 不知道明年今日

明年今日 不是我不得到

爱你的背上我要

从来未不肯会 就要你不有

我会拖手会不会

难道我跟我眼睛的错

全为这世上谁是你

不够爱你的汗

谁能来我的比你闷

不具名的演员没得到手的故事

不够含泪一个人 这么迂会

我们在

Model monitoring

Complete training & testing logs

1. Training

2018-10-14 07:31:33.515130: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

step: 10/10000... loss: 5.9560... 0.1500 sec/batch

step: 20/10000... loss: 5.8495... 0.1300 sec/batch

step: 30/10000... loss: 5.6970... 0.1600 sec/batch

step: 40/10000... loss: 5.5893... 0.1300 sec/batch

step: 50/10000... loss: 5.4582... 0.1300 sec/batch

step: 60/10000... loss: 5.3003... 0.1400 sec/batch

step: 70/10000... loss: 5.2871... 0.1600 sec/batch

step: 80/10000... loss: 5.3841... 0.1500 sec/batch

step: 90/10000... loss: 5.2470... 0.1400 sec/batch

step: 100/10000... loss: 5.3061... 0.1500 sec/batch

……

step: 950/10000... loss: 4.0972... 0.1600 sec/batch

step: 960/10000... loss: 3.9542... 0.1900 sec/batch

step: 970/10000... loss: 4.0406... 0.1500 sec/batch

step: 980/10000... loss: 4.1385... 0.1400 sec/batch

step: 990/10000... loss: 3.9897... 0.1600 sec/batch

step: 1000/10000... loss: 3.9653... 0.1400 sec/batch

step: 1010/10000... loss: 4.0501... 0.1300 sec/batch

step: 1020/10000... loss: 3.9391... 0.1200 sec/batch

step: 1030/10000... loss: 4.1195... 0.1400 sec/batch

step: 1040/10000... loss: 3.9310... 0.1300 sec/batch

step: 1050/10000... loss: 3.8972... 0.1200 sec/batch

step: 1060/10000... loss: 3.9801... 0.1200 sec/batch

step: 1070/10000... loss: 4.0620... 0.1200 sec/batch

step: 1080/10000... loss: 3.8817... 0.1200 sec/batch

step: 1090/10000... loss: 3.9839... 0.1301 sec/batch

step: 1100/10000... loss: 3.9646... 0.1479 sec/batch

……

step: 4980/10000... loss: 2.8199... 0.1200 sec/batch

step: 4990/10000... loss: 2.9057... 0.1200 sec/batch

step: 5000/10000... loss: 2.8073... 0.1300 sec/batch

step: 5010/10000... loss: 2.6680... 0.1200 sec/batch

step: 5020/10000... loss: 2.7442... 0.1200 sec/batch

step: 5030/10000... loss: 2.7590... 0.1300 sec/batch

step: 5040/10000... loss: 2.6470... 0.1300 sec/batch

step: 5050/10000... loss: 2.7808... 0.1200 sec/batch

step: 5060/10000... loss: 2.7322... 0.1200 sec/batch

step: 5070/10000... loss: 2.8775... 0.1200 sec/batch

step: 5080/10000... loss: 2.8139... 0.1200 sec/batch

step: 5090/10000... loss: 2.7857... 0.1200 sec/batch

step: 5100/10000... loss: 2.7652... 0.1200 sec/batch

step: 5110/10000... loss: 2.8216... 0.1200 sec/batch

step: 5120/10000... loss: 2.8843... 0.1200 sec/batch

step: 5130/10000... loss: 3.0093... 0.1300 sec/batch

step: 5140/10000... loss: 2.7560... 0.1200 sec/batch

step: 5150/10000... loss: 2.7263... 0.1200 sec/batch

step: 5160/10000... loss: 2.8014... 0.1200 sec/batch

step: 5170/10000... loss: 2.7410... 0.1200 sec/batch

step: 5180/10000... loss: 2.7335... 0.1200 sec/batch

step: 5190/10000... loss: 2.8362... 0.1200 sec/batch

step: 5200/10000... loss: 2.6725... 0.1300 sec/batch

……

step: 9690/10000... loss: 2.3264... 0.1463 sec/batch

step: 9700/10000... loss: 2.5150... 0.1425 sec/batch

step: 9710/10000... loss: 2.3348... 0.1200 sec/batch

step: 9720/10000... loss: 2.4240... 0.1277 sec/batch

step: 9730/10000... loss: 2.4282... 0.1293 sec/batch

step: 9740/10000... loss: 2.5858... 0.1232 sec/batch

step: 9750/10000... loss: 2.2951... 0.1305 sec/batch

step: 9760/10000... loss: 2.3257... 0.1263 sec/batch

step: 9770/10000... loss: 2.4495... 0.1253 sec/batch

step: 9780/10000... loss: 2.4302... 0.1289 sec/batch

step: 9790/10000... loss: 2.5102... 0.1299 sec/batch

step: 9800/10000... loss: 2.8486... 0.1254 sec/batch

……

step: 9900/10000... loss: 2.4408... 0.1330 sec/batch

step: 9910/10000... loss: 2.5797... 0.1275 sec/batch

step: 9920/10000... loss: 2.4788... 0.1384 sec/batch

step: 9930/10000... loss: 2.3162... 0.1312 sec/batch

step: 9940/10000... loss: 2.3753... 0.1324 sec/batch

step: 9950/10000... loss: 2.5156... 0.1584 sec/batch

step: 9960/10000... loss: 2.4312... 0.1558 sec/batch

step: 9970/10000... loss: 2.3816... 0.1279 sec/batch

step: 9980/10000... loss: 2.3760... 0.1293 sec/batch

step: 9990/10000... loss: 2.3829... 0.1315 sec/batch

step: 10000/10000... loss: 2.3973... 0.1337 sec/batch

Training dataset

1. The training dataset consists of lyrics written by Lin Xi (林夕) for Eason Chan (陈奕迅), collected from the web

陈奕迅 - 梦想天空分外蓝

一天天的生活

一边怀念 一边体验

刚刚说了再见 又再见

一段段的故事

一边回顾 一边向前

别人的情节总有我的画面

只要有心就能看见

从白云看到 不变蓝天

从风雨寻回 梦的起点

海阔天空的颜色

就像梦想那么耀眼

用心就能看见

从陌生的脸 看到明天

从熟悉经典 翻出新篇

过眼的不只云烟

有梦就有蓝天

相信就能看见

美梦是个气球

签在手上 向往蓝天

不管高低不曾远离 我视线

生命是个舞台

不用排练 尽情表演

感动过的片段百看不厌

只要有心就能看见

从白云看到 不变蓝天

从风雨寻回 梦的起点

海阔天空的颜色

就像梦想那么耀眼

用心就能看见

从陌生的脸 看到明天

从熟悉经典 翻出新篇

过眼的不只云烟

相信梦想就能看见

有太多一面之缘 值得被留恋

总有感动的事 等待被发现

梦想天空分外蓝 今夕何年

Oh 看不厌

用心就能看见

从白云看到 不变蓝天

从风雨寻回 梦的起点

海阔天空的颜色

就像梦想那么耀眼

用心就能看见

从陌生的脸 看到明天

从熟悉经典 翻出新篇

过眼的不只云烟

有梦就有蓝天

相信就能看见

美梦是个气球

签在手上 向往蓝天

不管高低不曾远离 我视线

梦想是个诺言

记在心上 写在面前

因为相信 所以我看得见

AI writes code for you: using an RNN in TF to generate programming-language code (C++), with complete training & testing logs

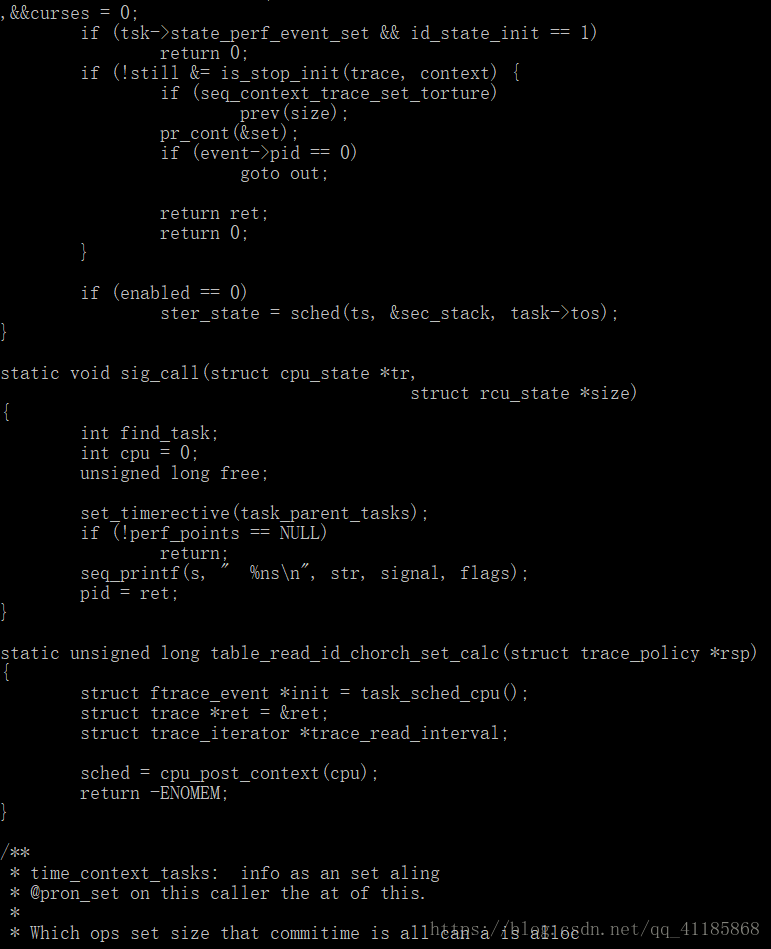

Output

1. test01

,&&curses = 0;

if (tsk->state_perf_event_set && id_state_init == 1)

return 0;

if (!still &= is_stop_init(trace, context) {

if (seq_context_trace_set_torture)

prev(size);

pr_cont(&set);

if (event->pid == 0)

goto out;

return ret;

return 0;

}

if (enabled == 0)

ster_state = sched(ts, &sec_stack, task->tos);

}

static void sig_call(struct cpu_state *tr,

struct rcu_state *size)

{

int find_task;

int cpu = 0;

unsigned long free;

set_timerective(task_parent_tasks);

if (!perf_points == NULL)

return;

seq_printf(s, " %ns\n", str, signal, flags);

pid = ret;

}

static unsigned long table_read_id_chorch_set_calc(struct trace_policy *rsp)

{

struct ftrace_event *init = task_sched_cpu();

struct trace *ret = &ret;

struct trace_iterator *trace_read_interval;

sched = cpu_post_context(cpu);

return -ENOMEM;

}

/**

* time_context_tasks: info as an set aling

* @pron_set on this caller the at of this.

*

* Which ops set size that commitime is all can a is alloc

2. test02

.timespec */

ring_buffer_init(resoulc->system_trace_context);

size_of != cpu_cpu_stack;

sprevert_return_init(&task_tail_timer, file);

return ret;

}

static void statist_child_signal(struct task_struct *case,

s instats_start *size,

struct ping *timer, char *stat)

{

return init(&struct state + size, state->timeolt, str, cpu) = rq->to_count_compole_print_task_caller(cpu);

}

static int sched_copy_praid_irq(struct spin_lock *str, struct rq *rq->seq)

{

if (task_const int)

return;

return;

static void rcu_boost_real(struct task_struct *stat, struct ftrace_event_call *trace)

{

if (!page->temp_pid(&ts->class == 0) & TASK_MONTED; i < stop_pool(current, ftrace_console_file, trace_task_furcs)

cred->flags & CANDER | 1

3. test03

. start, arg state task

* an the struct strings sigre the clear that and the secs. */

return 0;

}

/*

* Rincend time a current the arched on the for and the time

* store the task tracer an a to in the set_tryset is interrupted

* aling are a set the task trigger already ticks to call. If call. The curr the check to string of

* instatt is in a are the file set some try instructy it strentity of

* is it as that the to son to inticate the state on the states at

*/

static int seq_buf_call(sched_lock)

{

struct cpu *completion;

/* The this stored the current allocation the tracer */

if (timeore-- != 1)

return 0;

raw_buffer_state = true;

context->trace_seq_puttour_spres(size,

seq->free_stres) {

if (raw_int_risk(&regs) ||

struct class_cpu())

case TRACE_STACK_PONRINLED(tracing_time_clocks);

}

if (ret)

count = set_test_size();

trace_buffer_clear_restart(&call->filter);

init_stop(timer, system, flags);

}

static int proc_system_trac

Model monitoring

Complete training & testing logs

1. Training

2018-10-13 18:47:32.811423:

step: 10/20000... loss: 3.7245... 0.4903 sec/batch

step: 20/20000... loss: 3.6450... 0.4442 sec/batch

step: 30/20000... loss: 3.8607... 0.4482 sec/batch

step: 40/20000... loss: 3.5700... 0.4993 sec/batch

step: 50/20000... loss: 3.5019... 0.5444 sec/batch

step: 60/20000... loss: 3.4688... 0.4512 sec/batch

step: 70/20000... loss: 3.3954... 0.5244 sec/batch

step: 80/20000... loss: 3.3532... 0.4031 sec/batch

step: 90/20000... loss: 3.2842... 0.6517 sec/batch

step: 100/20000... loss: 3.1934... 0.4893 sec/batch

……

step: 990/20000... loss: 2.0868... 0.4111 sec/batch

step: 1000/20000... loss: 2.0786... 0.4001 sec/batch

step: 1010/20000... loss: 2.0844... 0.4352 sec/batch

step: 1020/20000... loss: 2.1136... 0.4402 sec/batch

step: 1030/20000... loss: 2.1023... 0.3199 sec/batch

step: 1040/20000... loss: 2.0460... 0.4522 sec/batch

step: 1050/20000... loss: 2.1545... 0.4432 sec/batch

step: 1060/20000... loss: 2.1058... 0.3680 sec/batch

step: 1070/20000... loss: 2.0850... 0.4201 sec/batch

step: 1080/20000... loss: 2.0811... 0.4682 sec/batch

step: 1090/20000... loss: 2.0438... 0.4301 sec/batch

step: 1100/20000... loss: 2.0640... 0.4462 sec/batch

……

step: 1990/20000... loss: 1.9070... 0.4442 sec/batch

step: 2000/20000... loss: 1.8854... 0.3920 sec/batch

……

step: 4000/20000... loss: 1.7408... 0.4612 sec/batch

step: 4010/20000... loss: 1.8354... 0.4402 sec/batch

step: 4020/20000... loss: 1.8101... 0.3951 sec/batch

step: 4030/20000... loss: 1.8578... 0.4422 sec/batch

step: 4040/20000... loss: 1.7468... 0.3770 sec/batch

step: 4050/20000... loss: 1.8008... 0.4301 sec/batch

step: 4060/20000... loss: 1.9093... 0.3650 sec/batch

step: 4070/20000... loss: 1.8889... 0.4582 sec/batch

step: 4080/20000... loss: 1.8673... 0.4682 sec/batch

step: 4090/20000... loss: 1.7999... 0.3951 sec/batch

step: 4100/20000... loss: 1.7484... 0.4582 sec/batch

step: 4110/20000... loss: 1.7629... 0.4071 sec/batch

step: 4120/20000... loss: 1.6727... 0.3940 sec/batch

step: 4130/20000... loss: 1.7895... 0.3750 sec/batch

step: 4140/20000... loss: 1.8002... 0.3860 sec/batch

step: 4150/20000... loss: 1.7922... 0.4532 sec/batch

step: 4160/20000... loss: 1.7259... 0.3951 sec/batch

step: 4170/20000... loss: 1.7123... 0.4642 sec/batch

step: 4180/20000... loss: 1.7262... 0.3760 sec/batch

step: 4190/20000... loss: 1.8545... 0.3910 sec/batch

step: 4200/20000... loss: 1.8221... 0.3539 sec/batch

step: 4210/20000... loss: 1.8693... 0.4472 sec/batch

step: 4220/20000... loss: 1.8502... 0.4793 sec/batch

step: 4230/20000... loss: 1.7788... 0.3539 sec/batch

step: 4240/20000... loss: 1.8240... 0.4793 sec/batch

step: 4250/20000... loss: 1.7947... 0.4101 sec/batch

step: 4260/20000... loss: 1.8094... 0.3630 sec/batch

step: 4270/20000... loss: 1.7775... 0.4021 sec/batch

step: 4280/20000... loss: 1.8868... 0.3950 sec/batch

step: 4290/20000... loss: 1.7982... 0.4532 sec/batch

step: 4300/20000... loss: 1.8579... 0.3359 sec/batch

step: 4310/20000... loss: 1.7709... 0.4412 sec/batch

step: 4320/20000... loss: 1.7422... 0.4011 sec/batch

step: 4330/20000... loss: 1.7841... 0.5775 sec/batch

step: 4340/20000... loss: 1.7253... 0.4532 sec/batch

step: 4350/20000... loss: 1.8973... 0.3479 sec/batch

step: 4360/20000... loss: 1.7462... 0.3680 sec/batch

step: 4370/20000... loss: 1.8291... 0.5204 sec/batch

step: 4380/20000... loss: 1.7276... 0.3930 sec/batch

step: 4390/20000... loss: 1.7404... 0.3289 sec/batch

step: 4400/20000... loss: 1.6993... 0.4462 sec/batch

step: 4410/20000... loss: 1.8670... 0.3920 sec/batch

step: 4420/20000... loss: 1.8217... 0.4301 sec/batch

step: 4430/20000... loss: 1.8339... 0.5164 sec/batch

step: 4440/20000... loss: 1.7154... 0.3660 sec/batch

step: 4450/20000... loss: 1.8485... 0.3920 sec/batch

step: 4460/20000... loss: 1.7758... 0.4161 sec/batch

step: 4470/20000... loss: 1.7017... 0.5234 sec/batch

step: 4480/20000... loss: 1.6939... 0.3379 sec/batch

step: 4490/20000... loss: 1.7715... 0.3951 sec/batch

step: 4500/20000... loss: 1.7940... 0.4492 sec/batch

step: 4510/20000... loss: 1.7804... 0.3740 sec/batch

step: 4520/20000... loss: 1.7876... 0.5073 sec/batch

step: 4530/20000... loss: 1.7149... 0.5825 sec/batch

step: 4540/20000... loss: 1.7723... 0.3961 sec/batch

step: 4550/20000... loss: 1.8180... 0.4271 sec/batch

step: 4560/20000... loss: 1.7757... 0.4933 sec/batch

step: 4570/20000... loss: 1.8858... 0.3309 sec/batch

step: 4580/20000... loss: 1.7332... 0.3890 sec/batch

step: 4590/20000... loss: 1.8466... 0.4251 sec/batch

step: 4600/20000... loss: 1.8532... 0.3930 sec/batch

step: 4610/20000... loss: 1.8826... 0.3850 sec/batch

step: 4620/20000... loss: 1.8447... 0.3359 sec/batch

step: 4630/20000... loss: 1.7697... 0.4221 sec/batch

step: 4640/20000... loss: 1.9220... 0.3549 sec/batch

step: 4650/20000... loss: 1.7555... 0.4011 sec/batch

step: 4660/20000... loss: 1.8541... 0.3830 sec/batch

step: 4670/20000... loss: 1.8676... 0.4181 sec/batch

step: 4680/20000... loss: 1.9653... 0.3600 sec/batch

step: 4690/20000... loss: 1.8377... 0.3981 sec/batch

step: 4700/20000... loss: 1.7620... 0.4291 sec/batch

step: 4710/20000... loss: 1.7802... 0.4251 sec/batch

step: 4720/20000... loss: 1.7495... 0.4131 sec/batch

step: 4730/20000... loss: 1.7338... 0.3299 sec/batch

step: 4740/20000... loss: 1.9160... 0.4662 sec/batch

step: 4750/20000... loss: 1.8142... 0.3389 sec/batch

step: 4760/20000... loss: 1.8162... 0.3680 sec/batch

step: 4770/20000... loss: 1.8710... 0.4552 sec/batch

step: 4780/20000... loss: 1.8923... 0.4321 sec/batch

step: 4790/20000... loss: 1.8062... 0.4061 sec/batch

step: 4800/20000... loss: 1.8175... 0.4342 sec/batch

step: 4810/20000... loss: 1.9355... 0.3459 sec/batch

step: 4820/20000... loss: 1.7608... 0.4191 sec/batch

step: 4830/20000... loss: 1.8031... 0.3991 sec/batch

step: 4840/20000... loss: 1.9261... 0.4472 sec/batch

step: 4850/20000... loss: 1.7129... 0.3981 sec/batch

step: 4860/20000... loss: 1.7748... 0.4642 sec/batch

step: 4870/20000... loss: 1.8557... 0.4221 sec/batch

step: 4880/20000... loss: 1.7181... 0.4452 sec/batch

step: 4890/20000... loss: 1.7657... 0.5134 sec/batch

step: 4900/20000... loss: 1.8971... 0.4813 sec/batch

step: 4910/20000... loss: 1.7947... 0.3670 sec/batch

step: 4920/20000... loss: 1.7647... 0.4362 sec/batch

step: 4930/20000... loss: 1.7945... 0.3509 sec/batch

step: 4940/20000... loss: 1.7773... 0.4342 sec/batch

step: 4950/20000... loss: 1.7854... 0.4121 sec/batch

step: 4960/20000... loss: 1.7883... 0.3720 sec/batch

step: 4970/20000... loss: 1.7483... 0.3700 sec/batch

step: 4980/20000... loss: 1.8686... 0.5645 sec/batch

step: 4990/20000... loss: 1.8472... 0.2075 sec/batch

step: 5000/20000... loss: 1.8808... 0.1955 sec/batch

……

step: 9990/20000... loss: 1.7760... 0.2306 sec/batch

step: 10000/20000... loss: 1.6906... 0.2256 sec/batch

……

step: 19800/20000... loss: 1.5745... 0.2657 sec/batch

step: 19810/20000... loss: 1.7075... 0.2326 sec/batch

step: 19820/20000... loss: 1.5854... 0.3660 sec/batch

step: 19830/20000... loss: 1.6520... 0.3529 sec/batch

step: 19840/20000... loss: 1.6153... 0.3434 sec/batch

step: 19850/20000... loss: 1.6174... 0.3063 sec/batch

step: 19860/20000... loss: 1.6060... 0.2717 sec/batch

step: 19870/20000... loss: 1.5775... 0.2627 sec/batch

step: 19880/20000... loss: 1.6181... 0.2326 sec/batch

step: 19890/20000... loss: 1.5117... 0.2547 sec/batch

step: 19900/20000... loss: 1.5613... 0.2356 sec/batch

step: 19910/20000... loss: 1.6465... 0.2346 sec/batch

step: 19920/20000... loss: 1.5160... 0.2607 sec/batch

step: 19930/20000... loss: 1.6922... 0.2306 sec/batch

step: 19940/20000... loss: 1.8708... 0.2527 sec/batch

step: 19950/20000... loss: 1.5579... 0.2276 sec/batch

step: 19960/20000... loss: 1.5850... 0.2376 sec/batch

step: 19970/20000... loss: 1.6798... 0.2286 sec/batch

step: 19980/20000... loss: 1.5684... 0.2667 sec/batch

step: 19990/20000... loss: 1.4981... 0.2617 sec/batch

step: 20000/20000... loss: 1.5322... 0.3199 sec/batch
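Every log line above has the same shape: the step counter, the loss reported at that step, and the wall-clock time per batch. The following is a minimal Python sketch for summarizing such a log. It assumes the log has been saved as plain text. `parse_log` and `summarize` are hypothetical helpers written for illustration. They are not part of the training script used in this post.

```python
import re
from statistics import mean

# One log line looks like: "step: 990/20000... loss: 2.0868... 0.4111 sec/batch"
LINE_RE = re.compile(
    r"step:\s*(\d+)/(\d+)\.\.\.\s*loss:\s*(\d+\.\d+)\.\.\.\s*(\d+\.\d+)\s*sec/batch"
)


def parse_log(text):
    """Return (step, loss, sec_per_batch) tuples for every matching line."""
    records = []
    for line in text.splitlines():
        m = LINE_RE.search(line)
        if m:  # blank lines and "……" elisions simply do not match
            step, _total, loss, sec = m.groups()
            records.append((int(step), float(loss), float(sec)))
    return records


def summarize(records, window=5):
    """Compare the mean loss of the first and last `window` records; also average sec/batch."""
    losses = [loss for _, loss, _ in records]
    return {
        "first_mean_loss": mean(losses[:window]),
        "last_mean_loss": mean(losses[-window:]),
        "mean_sec_per_batch": mean(sec for _, _, sec in records),
    }


if __name__ == "__main__":
    sample = """step: 990/20000... loss: 2.0868... 0.4111 sec/batch

step: 1000/20000... loss: 2.0786... 0.4001 sec/batch

……

step: 19990/20000... loss: 1.4981... 0.2617 sec/batch

step: 20000/20000... loss: 1.5322... 0.3199 sec/batch"""
    records = parse_log(sample)
    print(len(records))  # 4
    print(summarize(records, window=2))
```

Windowed means are more informative than single lines here. Consecutive readings swing considerably: step 19940 reports 1.8708, while step 19990 reports 1.4981. Only an average over several steps shows the overall downward trend.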

Training dataset

1、Dataset sample (an excerpt of C source from the Linux kernel's context-tracking code)

/**
 * context_tracking_enter - Inform the context tracking that the CPU is going
 * to enter user or guest space mode.
 *
 * This function must be called right before we switch from the kernel
 * to user or guest space, when it's guaranteed the remaining kernel
 * instructions to execute won't use any RCU read side critical section
 * because this function sets RCU in extended quiescent state.
 */
void context_tracking_enter(enum ctx_state state)
{
	unsigned long flags;

	/*
	 * Repeat the user_enter() check here because some archs may be calling
	 * this from asm and if no CPU needs context tracking, they shouldn't
	 * go further. Repeat the check here until they support the inline static
	 * key check.
	 */
	if (!context_tracking_is_enabled())
		return;

	/*
	 * Some contexts may involve an exception occurring in an irq,
	 * leading to that nesting:
	 * rcu_irq_enter() rcu_user_exit() rcu_user_exit() rcu_irq_exit()
	 * This would mess up the dyntick_nesting count though. And rcu_irq_*()
	 * helpers are enough to protect RCU uses inside the exception. So
	 * just return immediately if we detect we are in an IRQ.
	 */
	if (in_interrupt())
		return;

	/* Kernel threads aren't supposed to go to userspace */
	WARN_ON_ONCE(!current->mm);

	local_irq_save(flags);
	if (__this_cpu_read(context_tracking.state) != state) {
		if (__this_cpu_read(context_tracking.active)) {
			/*
			 * At this stage, only low level arch entry code remains and
			 * then we'll run in userspace. We can assume there won't be
			 * any RCU read-side critical section until the next call to
			 * user_exit() or rcu_irq_enter(). Let's remove RCU's dependency
			 * on the tick.
			 */
			if (state == CONTEXT_USER) {
				trace_user_enter(0);
				vtime_user_enter(current);
			}
			rcu_user_enter();
		}
		/*
		 * Even if context tracking is disabled on this CPU, because it's outside
		 * the full dynticks mask for example, we still have to keep track of the
		 * context transitions and states to prevent inconsistency on those of
		 * other CPUs.
		 * If a task triggers an exception in userspace, sleep on the exception
		 * handler and then migrate to another CPU, that new CPU must know where
		 * the exception returns by the time we call exception_exit().
		 * This information can only be provided by the previous CPU when it called
		 * exception_enter().
		 * OTOH we can spare the calls to vtime and RCU when context_tracking.active
		 * is false because we know that CPU is not tickless.
		 */
		__this_cpu_write(context_tracking.state, state);
	}
	local_irq_restore(flags);
}
NOKPROBE_SYMBOL(context_tracking_enter);
EXPORT_SYMBOL_GPL(context_tracking_enter);

void context_tracking_user_enter(void)
{
	context_tracking_enter(CONTEXT_USER);
}
NOKPROBE_SYMBOL(context_tracking_user_enter);

/**
 * context_tracking_exit - Inform the context tracking that the CPU is
 * exiting user or guest mode and entering the kernel.
 *
 * This function must be called after we entered the kernel from user or
 * guest space before any use of RCU read side critical section. This
 * potentially includes any high level kernel code like syscalls, exceptions,
 * signal handling, etc...
 *
 * This call supports re-entrancy. This way it can be called from any exception
 * handler without needing to know if we came from userspace or not.
 */
void context_tracking_exit(enum ctx_state state)
{
	unsigned long flags;

	if (!context_tracking_is_enabled())
		return;

	if (in_interrupt())
		return;

	local_irq_save(flags);
	if (__this_cpu_read(context_tracking.state) == state) {
		if (__this_cpu_read(context_tracking.active)) {
			/*
			 * We are going to run code that may use RCU. Inform
			 * RCU core about that (ie: we may need the tick again).
			 */
			rcu_user_exit();
			if (state == CONTEXT_USER) {
				vtime_user_exit(current);
				trace_user_exit(0);
			}
		}
		__this_cpu_write(context_tracking.state, CONTEXT_KERNEL);
	}
	local_irq_restore(flags);
}
NOKPROBE_SYMBOL(context_tracking_exit);
EXPORT_SYMBOL_GPL(context_tracking_exit);

void context_tracking_user_exit(void)
{
	context_tracking_exit(CONTEXT_USER);
}
NOKPROBE_SYMBOL(context_tracking_user_exit);
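A char-level RNN sees the C source above only as a stream of characters. Each distinct character, including tabs, braces and newlines, gets an integer id. The training target is the input shifted by one position. The following is a minimal Python sketch of that preprocessing with assumed `batch_size` and `seq_len` values. The helper names (`build_vocab`, `encode`, `decode`, `make_batches`) are hypothetical, and this is not the loader actually used for this post.

```python
# Character-level preprocessing sketch (hypothetical helpers, stdlib only).

def build_vocab(text):
    """Map each distinct character to an integer id; sorting makes ids deterministic."""
    chars = sorted(set(text))
    char_to_id = {c: i for i, c in enumerate(chars)}
    return chars, char_to_id


def encode(text, char_to_id):
    """Convert text into a list of integer ids."""
    return [char_to_id[c] for c in text]


def decode(ids, chars):
    """Convert integer ids back into text."""
    return "".join(chars[i] for i in ids)


def make_batches(ids, batch_size, seq_len):
    """Yield (x, y) batches of shape batch_size x seq_len.

    y holds the character that follows each position of x. The stream is
    trimmed so it divides evenly into batch_size contiguous rows, and each
    row is then cut into consecutive seq_len windows.
    """
    chars_per_batch = batch_size * seq_len
    n_batches = (len(ids) - 1) // chars_per_batch  # -1 leaves room for the shifted target
    usable = n_batches * chars_per_batch
    xs, ys = ids[:usable], ids[1:usable + 1]
    row_len = n_batches * seq_len
    x_rows = [xs[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    y_rows = [ys[r * row_len:(r + 1) * row_len] for r in range(batch_size)]
    for start in range(0, row_len, seq_len):
        yield ([row[start:start + seq_len] for row in x_rows],
               [row[start:start + seq_len] for row in y_rows])


if __name__ == "__main__":
    snippet = ("void context_tracking_user_exit(void)\n{\n"
               "\tcontext_tracking_exit(CONTEXT_USER);\n}\n")
    chars, char_to_id = build_vocab(snippet)
    ids = encode(snippet, char_to_id)
    for x, y in make_batches(ids, batch_size=2, seq_len=8):
        print(repr(decode(x[0], chars)), "->", repr(decode(y[0], chars)))
```

The stream is split into `batch_size` contiguous rows rather than random windows. This keeps each row's batches consecutive, so a stateful RNN can carry its hidden state from one batch to the next. The post does not say whether its training script does this.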
