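ML with LoR, DT, and RF: training LoR, DT (CART), and RF models on the mushrooms dataset (22+1 columns, 6513+1611 rows) to predict whether a mushroom is poisonous (binary classification)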

Output results

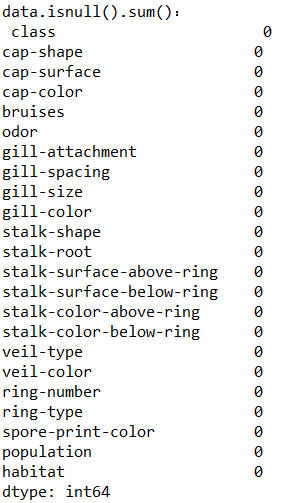

0. Dataset

After LabelEncoder

![]()
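The encoding step is not shown in the post. As a minimal sketch, assuming each categorical column is mapped to integer codes with its own `LabelEncoder`, it could look like the following. The helper name `label_encode_frame` and the tiny sample frame are hypothetical, used only for illustration.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder


def label_encode_frame(df):
    """Return a copy of df with every column mapped to integer codes,
    plus the fitted encoder for each column (hypothetical helper)."""
    encoded = df.copy()
    encoders = {}
    for col in encoded.columns:
        enc = LabelEncoder()
        # Classes are sorted, so 'e' -> 0 and 'p' -> 1 in the "class" column.
        encoded[col] = enc.fit_transform(encoded[col])
        encoders[col] = enc
    return encoded, encoders


# Hypothetical sample with single-letter categories, in the style of the mushrooms data.
sample = pd.DataFrame({
    "class": ["p", "e", "e", "p"],
    "cap-shape": ["x", "x", "b", "x"],
    "odor": ["p", "a", "l", "p"],
})

encoded, encoders = label_encode_frame(sample)
print(encoded)
```

Keeping the fitted encoders makes it possible to map the integer codes back to the original letters later with `inverse_transform`.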

1. LoR algorithm

![]()

![]()

LoR_model_GSCV.grid_scores_: [mean: 0.77012, std: 0.01349, params: {'C': 0.001, 'penalty': 'l1'},

mean: 0.86936, std: 0.01035, params: {'C': 0.001, 'penalty': 'l2'},

mean: 0.91229, std: 0.01022, params: {'C': 0.01, 'penalty': 'l1'},

mean: 0.91045, std: 0.00831, params: {'C': 0.01, 'penalty': 'l2'},

mean: 0.94707, std: 0.00853, params: {'C': 0.1, 'penalty': 'l1'},

mean: 0.93599, std: 0.00841, params: {'C': 0.1, 'penalty': 'l2'},

mean: 0.95984, std: 0.00670, params: {'C': 1, 'penalty': 'l1'},

mean: 0.94953, std: 0.00790, params: {'C': 1, 'penalty': 'l2'},

mean: 0.96553, std: 0.00531, params: {'C': 10, 'penalty': 'l1'},

mean: 0.95722, std: 0.00559, params: {'C': 10, 'penalty': 'l2'},

mean: 0.96646, std: 0.00516, params: {'C': 100, 'penalty': 'l1'},

mean: 0.96599, std: 0.00528, params: {'C': 100, 'penalty': 'l2'},

mean: 0.96661, std: 0.00513, params: {'C': 1000, 'penalty': 'l1'},

mean: 0.96646, std: 0.00564, params: {'C': 1000, 'penalty': 'l2'}]

LoR_model_GSCV.best_score_: 0.96661024773042

LoR_model_GSCV.best_params_: {'C': 1000, 'penalty': 'l1'}
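The LoR code itself is not included in the post. The grid above covers `C` from 0.001 to 1000 with `l1` and `l2` penalties, so a search of that shape might be sketched as below. The synthetic data, the `liblinear` solver, `cv=10`, and computing the AUC from predicted probabilities are all assumptions, not details confirmed by the source.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for the encoded mushrooms data (assumption).
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# Same grid as in the output above: 7 values of C times 2 penalties.
tuned_parameters = {
    "C": [0.001, 0.01, 0.1, 1, 10, 100, 1000],
    "penalty": ["l1", "l2"],
}

# liblinear is chosen here because it supports both l1 and l2 penalties.
LoR_model = LogisticRegression(solver="liblinear")
LoR_model_GSCV = GridSearchCV(LoR_model, tuned_parameters, cv=10)
LoR_model_GSCV.fit(X_train, y_train)

print("LoR_model_GSCV.best_score_:", LoR_model_GSCV.best_score_)
print("LoR_model_GSCV.best_params_:", LoR_model_GSCV.best_params_)

# ROC AUC on the held-out split, using the probability of the positive class.
y_prob = LoR_model_GSCV.predict_proba(X_test)[:, 1]
LoR_model_GSCV_auc_roc = roc_auc_score(y_test, y_prob)
print("LoR_model_GSCV_auc_roc:", LoR_model_GSCV_auc_roc)
```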

LoR_model_GSCV_auc_roc: 0.9739644970414202

2. DT algorithm

![]()
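The post gives no code or parameters for the DT step. As a rough sketch only, scikit-learn's `DecisionTreeClassifier` implements an optimized version of CART, so a baseline could be fitted as follows. The synthetic data, the Gini criterion, and the default tree settings are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the encoded mushrooms data (assumption).
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# CART-style tree: binary splits chosen by Gini impurity.
DT_model = DecisionTreeClassifier(criterion="gini", random_state=0)
DT_model.fit(X_train, y_train)

# Accuracy on the held-out split.
DT_accuracy = DT_model.score(X_test, y_test)
print("DT_model test accuracy:", DT_accuracy)
print("DT_model depth:", DT_model.get_depth())
```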

3. RF algorithm

![]()

![]()

RFC_model_GSCV grid_scores_: [mean: 0.99938, std: 0.00075, params: {'max_features': 'auto', 'min_samples_leaf': 10, 'n_estimators': 10},

mean: 0.99954, std: 0.00070, params: {'max_features': 'auto', 'min_samples_leaf': 10, 'n_estimators': 20},

……

mean: 0.97784, std: 0.01071, params: {'max_features': 'log2', 'min_samples_leaf': 80, 'n_estimators': 20},

mean: 0.98215, std: 0.00703, params: {'max_features': 'log2', 'min_samples_leaf': 80, 'n_estimators': 30},

mean: 0.98169, std: 0.00550, params: {'max_features': 'log2', 'min_samples_leaf': 90, 'n_estimators': 80},

mean: 0.98169, std: 0.00801, params: {'max_features': 'log2', 'min_samples_leaf': 90, 'n_estimators': 90}]

RFC_model_GSCV best_score_: 0.9998461301738729

RFC_model_GSCV best_params_: {'max_features': 'auto', 'min_samples_leaf': 10, 'n_estimators': 50}

RFC_model_GSCV_auc_roc: 1.0

Design approach

To be updated later...

Core code

To be updated later...

RF

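import time

import numpy as np

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import GridSearchCV

RFC_model = RandomForestClassifier()  # assumed; the original does not show this definition

tuned_parameters = {'min_samples_leaf': range(10,100,10),

'n_estimators' : range(10,100,10),

'max_features': ['auto','sqrt','log2'] }  # 'auto' is only accepted by scikit-learn < 1.3

starttime = time.perf_counter()  # assumed; the original does not show where starttime is set

RFC_model_GSCV = GridSearchCV(RFC_model, tuned_parameters,cv=10)

RFC_model_GSCV.fit(X_train,y_train)

endtime = time.perf_counter()  # time.clock() was removed in Python 3.8

print ('RFC_model_GSCV Training time:',endtime - starttime)

print('RFC_model_GSCV grid_scores_:', RFC_model_GSCV.grid_scores_)  # scikit-learn < 0.20 only; later versions expose cv_results_

print('RFC_model_GSCV best_score_:', RFC_model_GSCV.best_score_)

print('RFC_model_GSCV best_params_:', RFC_model_GSCV.best_params_)

y_prob = RFC_model_GSCV.predict_proba(X_test)[:,1]  # probability of the positive class

y_pred = np.where(y_prob > 0.5, 1, 0)  # threshold at 0.5 to get hard labels

print('RFC_model_GSCV test accuracy:', RFC_model_GSCV.score(X_test, y_test))  # score against the true labels, not y_pred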
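The listing above relies on `grid_scores_`, which was removed in scikit-learn 0.20, and on `max_features='auto'`, which was removed in 1.3. On a current installation, the same `mean / std / params` lines can be rebuilt from `cv_results_`. The sketch below uses a hypothetical helper, `format_grid_scores`, plus synthetic data and a deliberately small grid so that it runs quickly; none of these come from the post.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV


def format_grid_scores(search):
    """Rebuild grid_scores_-style lines from a fitted search (hypothetical helper)."""
    results = search.cv_results_
    return [
        "mean: %.5f, std: %.5f, params: %r" % (mean, std, params)
        for mean, std, params in zip(
            results["mean_test_score"],
            results["std_test_score"],
            results["params"],
        )
    ]


# Synthetic stand-in for the encoded mushrooms data (assumption).
X, y = make_classification(n_samples=200, n_features=8, random_state=0)

# Reduced grid for illustration: 2 x 2 x 2 = 8 combinations.
small_grid = {
    "min_samples_leaf": [10, 20],
    "n_estimators": [10, 20],
    "max_features": ["sqrt", "log2"],
}

search = GridSearchCV(RandomForestClassifier(random_state=0), small_grid, cv=3)
search.fit(X, y)

for line in format_grid_scores(search):
    print(line)
print("best_score_:", search.best_score_)
print("best_params_:", search.best_params_)
```

In the classifier, `'auto'` behaved the same as `'sqrt'`, so dropping it from the grid removes a duplicate setting without losing a candidate.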
