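This article is compiled from the lecture slides of Professor Li Chen, School of Software, Xi'an Jiaotong University. It is for study use only; please do not repost.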

Chapter mind map

Key characteristics of memory systems

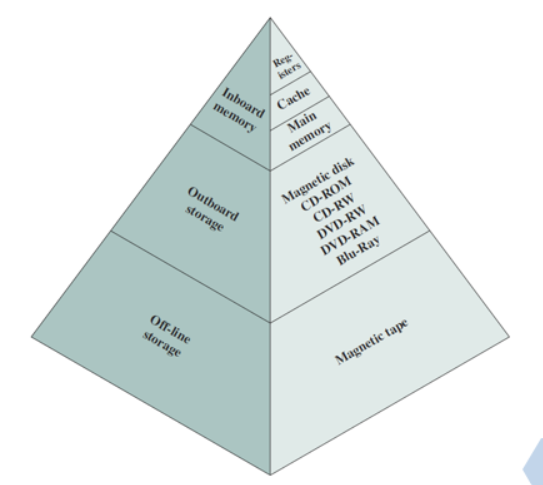

- Location: Internal, External, Offline

- Capacity: word length, number of words

- Unit of transfer: word, block

- Access method: sequential, direct, random, associative

- Performance: access time, access cycle, bandwidth

- Physical type: semiconductor, magnetic, optical, magneto-optical

- Physical characteristics: volatile/nonvolatile, erasable/nonerasable

- Organization: how bits are arranged into words

Classification based on location

- Internal: on the motherboard, including inside the CPU

- External: outside the motherboard

- Offline

Capacity

- Word size

- Internal memory: measured in words or bytes; common word lengths are 8, 16, and 32 bits

- External memory: in bytes

- Number of words

- How many words or Bytes

Unit of Transfer

- Internal

- Usually governed by data bus width

- External

- Usually a block which is much larger than a word

- Addressable unit

- The smallest location that can be uniquely addressed

- Normally this is the word

- Many systems also allow addressing at the byte level

- Address length and addressable units: with an address of $A$ bits, the number of addressable units is $N = 2^A$

Access Methods

- Sequential

  - Start at the beginning and read through in order

  - Access time depends on the location of the data and on the previous location

  - e.g. tape

- Direct

  - Individual blocks have a unique address

  - Access is by jumping to the vicinity and then searching sequentially

  - Access time depends on the location and on the previous location. Why does the previous location matter? The read/write head has to move from wherever the previous access left it, so the distance to the target varies.

  - e.g. disk, optical disk

- Random: address-based access

  - Individual addresses identify locations exactly

  - Access time is independent of location and of previous accesses

  - e.g. DRAM

- Associative: a kind of random access that locates data by comparing part of its content

  - A word is located by comparing a portion of its contents with a search pattern, for all words simultaneously

  - Access time is independent of location and of previous accesses

  - e.g. cache

Performance Factors of Memory

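- Access time: the time needed to get data out of memory. Different memories have different access times.

  - For random-access memory, access time runs from the instant the address is presented to the memory to the instant the data is available on the bus, i.e. the delay between issuing a request and receiving the data.

- Memory cycle time: access time + any additional time required before a second access can start

  - Used for random-access memory

- Transfer rate R: the amount of data transferred into or out of a memory unit per second

  - For random-access memory: $R = 1 / (\text{memory cycle time})$

  - For non-random-access memory: $T_N = T_A + \frac{N}{R}$, where $T_N$ is the average time to read or write $N$ bits, $T_A$ is the average access time, and $R$ is the transfer rate in bits per second. The formula adds the time needed to reach the data ($T_A$) to the time needed to stream $N$ bits at rate $R$ ($N/R$).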
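
The two transfer-rate relations above can be checked numerically. The following is a minimal sketch; the function names and the disk figures (10 ms access time, 100 Mbit/s) are hypothetical values chosen only for illustration.

```python
def transfer_time(access_time_s, n_bits, rate_bps):
    """Average time to read or write n_bits: T_N = T_A + N / R."""
    return access_time_s + n_bits / rate_bps


def ram_transfer_rate(cycle_time_s):
    """Random-access memory: R = 1 / (memory cycle time), in accesses per second."""
    return 1.0 / cycle_time_s


# Hypothetical device: 10 ms average access time, 100 Mbit/s transfer rate,
# reading one 4096-byte block.
t = transfer_time(10e-3, 4096 * 8, 100e6)
print(f"{t * 1e3:.3f} ms")

# Hypothetical RAM with a 100 ns cycle time.
print(f"{ram_transfer_rate(100e-9):.0f} accesses/s")
```

For a small transfer the access time dominates, which is why non-random-access devices move data in large blocks.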

Physical Types (material)

- Semiconductor (LSI/VLSI)

- RAM, ROM

- Magnetic

- Disk & Tape, mobile hard-disk

- Optical

- CD & DVD

Physical Characteristics

- Volatile / nonvolatile

- Erasable or not (ROM/EPROM)

- Power consumption

Memory Hierarchy

For memory, the questions we care about are:

- Capacity

- Speed

- Price

Locality of Reference

Principle of locality of reference

During program execution, the CPU tends to access instructions and data in clusters.

- Because:

- Programs typically contain many loops and subroutines, which produce repeated references

- Tables and arrays involve access to clustered data sets, which also produces repeated references

- Programs and data are usually stored sequentially, so the instructions or data to be accessed next are typically near the current instruction or data

Two types of locality:

- Temporal locality: if an item is referenced, it will tend to be referenced again soon

- Spatial locality: if an item is referenced, items whose addresses are close by tend to be referenced soon

Cache memory principles

Cache

- Small amount of fast memory

- Sits between normal main memory and CPU

- May be located on CPU chip or module

- Cache contains copies of sections of main memory

- Operates at or near the speed of the processor

- Main memory contains $2^n$ words (addressable units); every K words form a block

- Total blocks: $M=\frac{2^n}{K}$

- Cache contains C lines, a line contains K memory words

- A cache line = a memory block

- $C \ll M$

- At any time, at most C blocks can reside in the cache

- A line is not permanently dedicated to one particular block

- Each line has a tag that identifies which memory block it currently holds

- Many memory blocks can be mapped to the same cache line

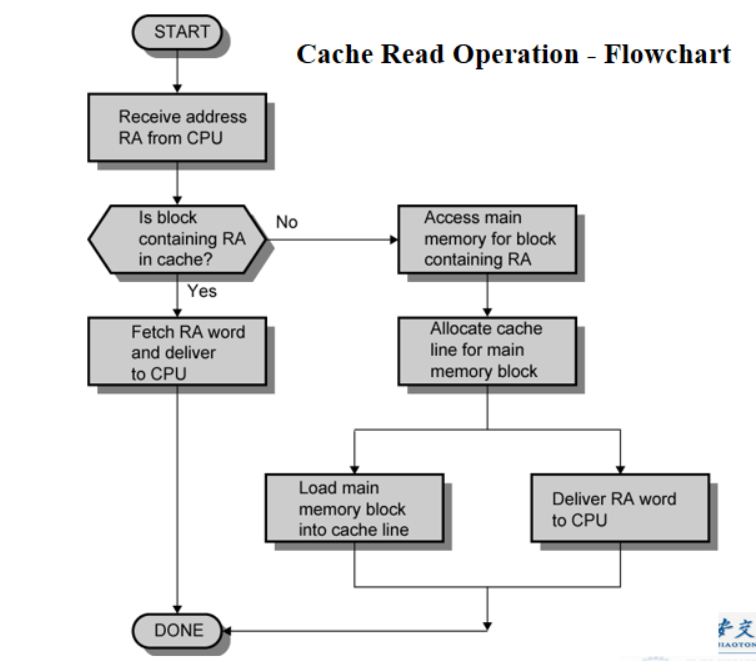

Cache operation

- Part of the contents of main memory is also held in the cache

- CPU requests contents of memory location

- Check cache for this data

- If present, get from cache (fast)

- If not present, read required block from main memory to cache

- And deliver the word to CPU

- Cache includes tags to identify which block of main memory is in each cache slot

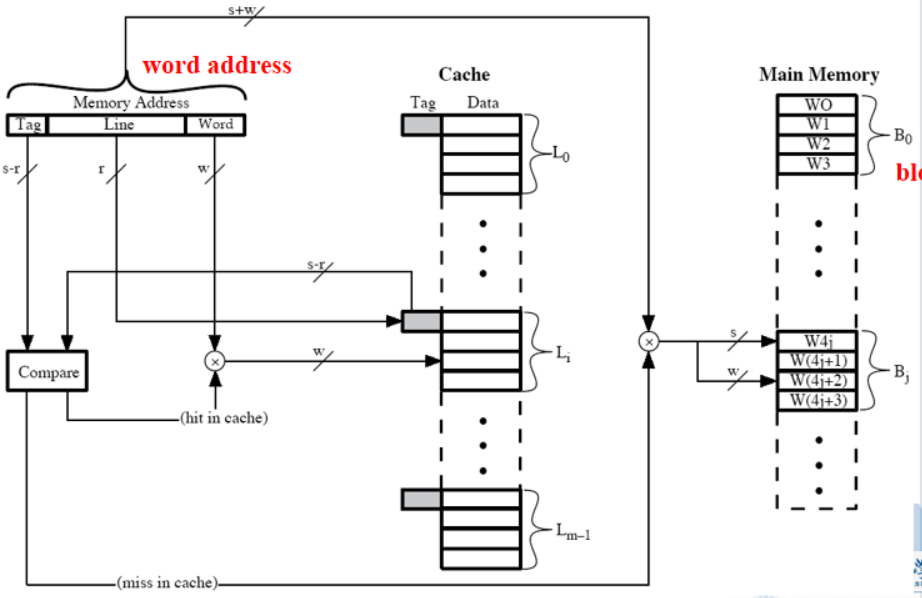

Typical Cache Organization

Cache Design

Key Techniques in Cache Design

- Size

- Mapping Function

- Replacement Algorithm

- Write Policy

- Block Size

- Number of Caches

- Address of Cache

Size

- Small size

- Cheap: the overall cost per bit stays close to that of main memory alone

- Low hit rate: the average access speed may even be slower than accessing main memory directly

- Large size

- High hit rate, close to 100%: the average access speed approaches that of the cache

- Costly

- More gates, so slightly slower than a small cache

- Occupies a large area of the CPU chip

- Trade-off between capacity, price, and speed

- No single optimum cache size; sizes from 1K to 512K words have all proved effective

Mapping function

- Mapping mechanism needed

- Mapping memory block to cache line

- Which memory block occupies which cache line

- Mapping function is implemented in hardware

- Mapping function determines cache structure

- Typical mapping functions:

- Direct Mapping

- Associative Mapping

- Set Associative Mapping

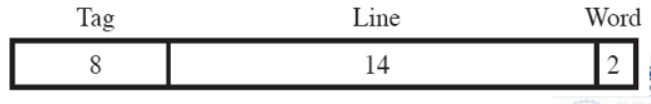

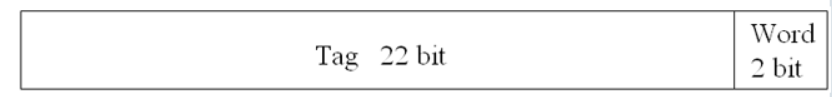

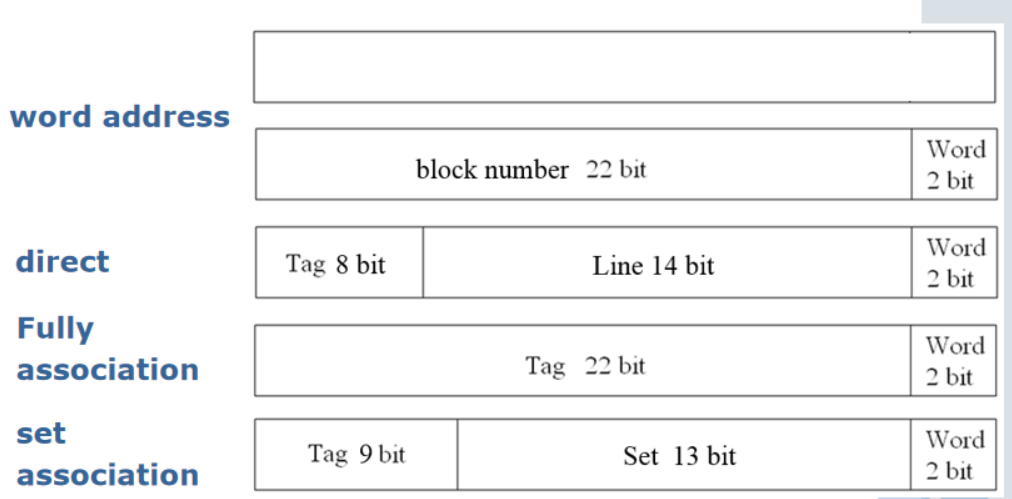

Assume:

- The cache size is 64 KB

- Each block contains 4 words of 1 byte each, so the cache has $16\text{K} = 2^{14}$ lines

- Main memory is 16 MB and is addressed by word, so it has $4\text{M} = 2^{22}$ blocks
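
The line and block counts in these assumptions follow from simple division. Here is a small sketch that recomputes them; the constant and variable names are made up for this illustration.

```python
CACHE_BYTES = 64 * 1024            # 64 KB cache
BLOCK_BYTES = 4                    # 4 one-byte words per block
MEMORY_BYTES = 16 * 1024 * 1024    # 16 MB main memory

cache_lines = CACHE_BYTES // BLOCK_BYTES        # C = 2**14
memory_blocks = MEMORY_BYTES // BLOCK_BYTES     # M = 2**22
address_bits = MEMORY_BYTES.bit_length() - 1    # 2**24 bytes need 24 address bits

print(cache_lines, memory_blocks, address_bits)
# On average, this many memory blocks compete for each cache line.
print(memory_blocks // cache_lines)
```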

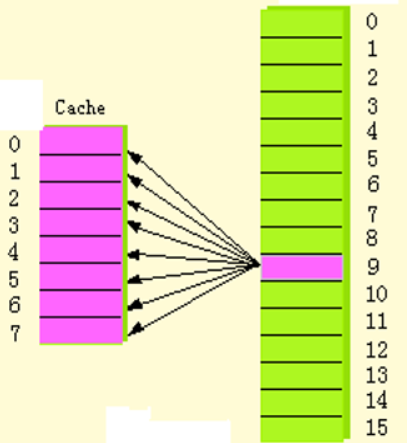

Direct Mapping

The simplest mapping, block to fixed line

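Each main memory block is assigned to a specific line in the cache: $i = j \bmod m$, where $i$ is the cache line number, $j$ is the main memory block number, and $m$ is the number of lines in the cache.

Blocks that map to the same cache line have the same low-order bits in their block numbers.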

Direct Mapping Implementation

The memory address is split into 3 parts:

- The low w bits identify the word within the block

- The middle r bits identify the cache line

- The remaining high-order bits form the tag, which identifies which memory block is in the line and therefore whether the line holds the requested data
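
As a sketch of this three-way split, the function below extracts the fields with shifts and masks. It assumes the field widths implied by the 64 KB cache / 16 MB memory example (w = 2, r = 14, leaving an 8-bit tag out of 24 address bits); `split_direct` and the sample address are hypothetical.

```python
WORD_BITS = 2    # w: 4 bytes per block
LINE_BITS = 14   # r: 2**14 cache lines
# The remaining 24 - 2 - 14 = 8 high-order bits are the tag.


def split_direct(addr):
    """Return (tag, line, word) for a 24-bit address."""
    word = addr & ((1 << WORD_BITS) - 1)
    line = (addr >> WORD_BITS) & ((1 << LINE_BITS) - 1)
    tag = addr >> (WORD_BITS + LINE_BITS)
    return tag, line, word


tag, line, word = split_direct(0x16339C)   # arbitrary sample address
print(hex(tag), hex(line), word)

# The line field is the block number modulo the number of lines.
block = 0x16339C >> WORD_BITS
assert line == block % (1 << LINE_BITS)
```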

Direct Mapping Cache Organization

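Lookup: first use the Line field to select the corresponding cache line, then check whether that line's tag equals the Tag field of the address.

- If they match, use the Word field of the address to select the word within the line; that word is the requested data.

- If they differ, the block containing the data is not in the cache. Use Tag + Line as the block number to fetch the block from main memory, select the word with the Word field, and store the whole block in the corresponding cache line.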
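
The lookup steps above can be sketched as a toy simulator. This is an illustration under simplifying assumptions, not a hardware model: main memory is a Python list indexed by word address, and the class name `DirectMappedCache` and its parameters are hypothetical.

```python
class DirectMappedCache:
    def __init__(self, memory, line_bits, word_bits):
        self.memory = memory
        self.line_bits = line_bits
        self.word_bits = word_bits
        self.tags = [None] * (1 << line_bits)   # one tag per line
        self.data = [None] * (1 << line_bits)   # one block per line
        self.hits = self.misses = 0

    def read(self, addr):
        word = addr & ((1 << self.word_bits) - 1)
        block = addr >> self.word_bits                 # block number = Tag + Line
        line = block & ((1 << self.line_bits) - 1)
        tag = block >> self.line_bits
        if self.tags[line] == tag:
            self.hits += 1
        else:
            self.misses += 1
            start = block << self.word_bits
            # Load the whole block into the one line it can occupy.
            self.data[line] = self.memory[start:start + (1 << self.word_bits)]
            self.tags[line] = tag
        return self.data[line][word]


memory = list(range(64))   # toy memory: the word at address a holds the value a
cache = DirectMappedCache(memory, line_bits=2, word_bits=2)
values = [cache.read(a) for a in (0, 1, 2, 3, 16, 0)]
print(values, cache.hits, cache.misses)
```

Addresses 0 and 16 belong to blocks 0 and 4, which both map to line 0 of this 4-line cache, so the final read of address 0 misses again.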

Direct Mapping Summary

s: Tag + Line bits; w: Word bits

- Address length = $(s + w)$ bits

- Number of addressable units = $2^{s+w}$ words or bytes

- Block size = line size = $2^w$ words or bytes

- Number of blocks in main memory = $2^{s+w} / 2^w = 2^s$

- Number of lines in cache = $m = 2^r$

- Size of tag = $(s - r)$ bits

Direct Mapping pros & cons

- Simple

- Inexpensive to implement

- Fixed location for given block

- If a program repeatedly accesses 2 blocks that map to the same line, the miss rate is very high (thrashing)
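
Thrashing is easy to reproduce with a trace of block numbers. The sketch below counts hits in a direct-mapped cache using $i = j \bmod m$; the function name and both traces are hypothetical.

```python
def direct_mapped_hits(block_trace, num_lines):
    """Count hits for a sequence of block numbers in a direct-mapped cache."""
    lines = [None] * num_lines
    hits = 0
    for block in block_trace:
        i = block % num_lines          # the only line this block may occupy
        if lines[i] == block:
            hits += 1
        else:
            lines[i] = block           # evict whatever was there
    return hits


conflicting = [0, 8] * 4   # blocks 0 and 8 both map to line 0 of an 8-line cache
friendly = [0, 9] * 4      # blocks 0 and 9 map to lines 0 and 1

print(direct_mapped_hits(conflicting, 8))
print(direct_mapped_hits(friendly, 8))
```

The conflicting trace never hits even though seven of the eight lines stay empty, while the friendly trace misses only on the first access to each block.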

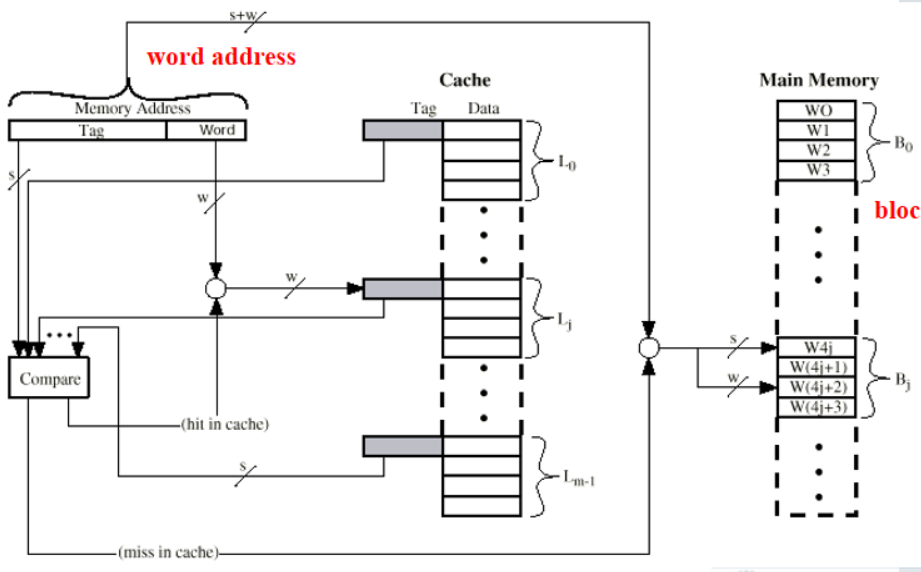

Fully Associative Mapping

- A main memory block can load into any line of cache

- Block and line have no fixed mapping relationship

- Memory address = tag + word

- Tag uniquely identifies block of memory

- Every line’s tag is examined for a match

Fully Associative Implementation

The memory address is split into 2 parts:

- The low w bits identify the word within the block

- The remaining s bits form the tag, which is the full block number

Fully Associative Cache Organization

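Lookup: compare the Tag field of the address against the tag of every line in the cache.

- If a match is found, use the Word field to select the word within that line.

- If no match is found, use the Tag field of the address to fetch the block from main memory, select the word with the Word field, and place the block in a cache line chosen by the replacement algorithm.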
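
A toy version of this lookup is sketched below. Real hardware compares all tags in parallel; here a dictionary keyed by tag stands in for that comparison. The class name is hypothetical, and FIFO is assumed as the replacement algorithm purely to keep the example short.

```python
class FullyAssociativeCache:
    def __init__(self, memory, num_lines, word_bits):
        self.memory = memory
        self.num_lines = num_lines
        self.word_bits = word_bits
        self.lines = {}    # tag -> block data; dicts keep insertion order
        self.hits = self.misses = 0

    def read(self, addr):
        word = addr & ((1 << self.word_bits) - 1)
        tag = addr >> self.word_bits            # the tag is the whole block number
        if tag in self.lines:
            self.hits += 1
        else:
            self.misses += 1
            if len(self.lines) == self.num_lines:
                oldest = next(iter(self.lines))   # FIFO victim (assumed policy)
                del self.lines[oldest]
            start = tag << self.word_bits
            self.lines[tag] = self.memory[start:start + (1 << self.word_bits)]
        return self.lines[tag][word]


memory = list(range(64))   # toy memory: the word at address a holds the value a
cache = FullyAssociativeCache(memory, num_lines=2, word_bits=2)
values = [cache.read(a) for a in (0, 32, 1, 33, 16, 0)]
print(values, cache.hits, cache.misses)
```

Blocks 0 and 8 (addresses 0 and 32) coexist here, whereas in a 2-line direct-mapped cache they would both compete for line 0.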

Associative Mapping Summary

s: Tag bits; w: Word bits

- Address length = $(s + w)$ bits

- Number of addressable units = $2^{s+w}$ words or bytes

- Block size = line size = $2^w$ words or bytes

- Number of blocks in main memory = $2^{s+w} / 2^w = 2^s$

- Number of lines in cache = not determined by the address format

- Size of tag = $s$ bits

Fully Associative Mapping pros & cons

- pros:

- A memory block can be mapped into any line of the cache

- Replacing is flexible

- cons :

- Parallel comparing circuit is needed

- Complex and expensive

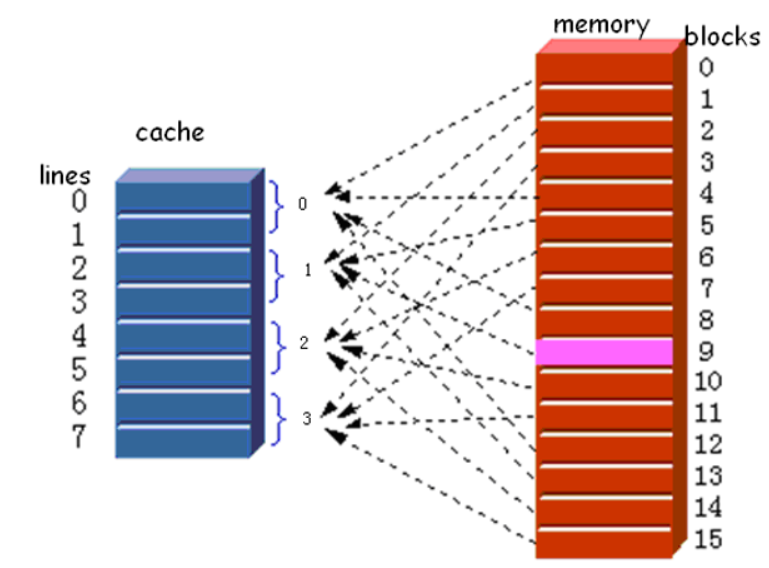

Set Associative Mapping

- Divide the cache into sets, each containing several lines; memory blocks are grouped by the set they map to

- Direct mapping is used between a memory block and its cache set

- Fully associative mapping is used within the set

- Set associative mapping = direct mapping + fully associative mapping

- A cache of m lines is divided into v sets with k lines per set, so

- $m = v \times k$

- Cache set number $i$ = memory block number $\bmod\ v$

- A given block may map to any line in its set

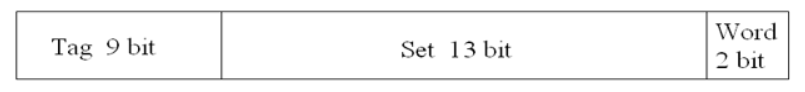

K-Way Set Associative Implementation

The memory address is split into 3 parts:

- The low w bits identify the word within the block

- The middle d bits identify the cache set

- The remaining high-order bits form the tag, which identifies which memory block is in a line and therefore whether the line holds the requested data

K Way Set Associative Cache Organization

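Lookup: first use the Set field to select the corresponding set in the cache, then check whether any line in that set has a tag equal to the Tag field of the address.

- If so, use the Word field of the address to select the word within that line; that word is the requested data.

- If not, the block containing the data is not in the cache. Use Tag + Set as the block number to fetch the block from main memory, select the word with the Word field, and store the whole block in a line of the corresponding set.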
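
The set associative lookup combines the two previous schemes, as the following sketch shows. The class name is hypothetical, and LRU is assumed as the replacement algorithm inside each set; an `OrderedDict` per set keeps lines ordered from least to most recently used.

```python
from collections import OrderedDict


class SetAssociativeCache:
    def __init__(self, memory, num_sets, ways, word_bits):
        self.memory = memory
        self.num_sets = num_sets      # v
        self.ways = ways              # k lines per set
        self.word_bits = word_bits    # w
        self.sets = [OrderedDict() for _ in range(num_sets)]   # tag -> block
        self.hits = self.misses = 0

    def read(self, addr):
        word = addr & ((1 << self.word_bits) - 1)
        block = addr >> self.word_bits
        index = block % self.num_sets         # set number = block number mod v
        tag = block // self.num_sets
        lines = self.sets[index]
        if tag in lines:                      # search only within the chosen set
            self.hits += 1
            lines.move_to_end(tag)            # mark as most recently used
        else:
            self.misses += 1
            if len(lines) == self.ways:
                lines.popitem(last=False)     # evict the LRU line (assumed policy)
            start = block << self.word_bits
            lines[tag] = self.memory[start:start + (1 << self.word_bits)]
        return lines[tag][word]


memory = list(range(64))   # toy memory: the word at address a holds the value a
cache = SetAssociativeCache(memory, num_sets=2, ways=2, word_bits=2)
values = [cache.read(a) for a in (0, 8, 0, 8, 16, 0)]
print(values, cache.hits, cache.misses)
```

Blocks 0 and 2 (addresses 0 and 8) share set 0 without evicting each other; only the third block mapped to that set (address 16) forces a replacement.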

Set Associative Mapping Summary

- Address length = $(s + w)$ bits

- Number of addressable units = $2^{s+w}$ words or bytes

- Block size = line size = $2^w$ words or bytes

- Number of blocks in main memory = $2^s$

- Number of lines in set = $k$

- Number of sets = $v = 2^d$

- Number of lines in cache = $kv = k \times 2^d$

- Size of tag = $(s - d)$ bits
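
These relations can be turned into a small calculator for the field widths. The sketch below assumes all sizes are powers of two; the function name `set_associative_fields` is hypothetical, and the sample numbers are the 64 KB cache / 16 MB memory figures used earlier.

```python
def set_associative_fields(address_bits, block_size, cache_lines, ways):
    """Return the tag / set / word field widths in bits (sizes must be powers of 2)."""
    w = (block_size - 1).bit_length()              # block size = 2**w
    s = address_bits - w                           # block number has s bits
    d = (cache_lines // ways - 1).bit_length()     # number of sets v = 2**d
    return {"tag": s - d, "set": d, "word": w}


# 24-bit addresses, 4-byte blocks, 2**14 lines.
print(set_associative_fields(24, 4, 2 ** 14, ways=2))         # 2-way
print(set_associative_fields(24, 4, 2 ** 14, ways=1))         # direct mapping
print(set_associative_fields(24, 4, 2 ** 14, ways=2 ** 14))   # fully associative
```

With k = 1 the set field is the line field of direct mapping, and with k equal to the number of lines the set field disappears, which gives the fully associative format.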

Summary

Replacement Algorithms

Direct mapping

- No choice

- Each block only maps to one line(fixed)

- Replace that line

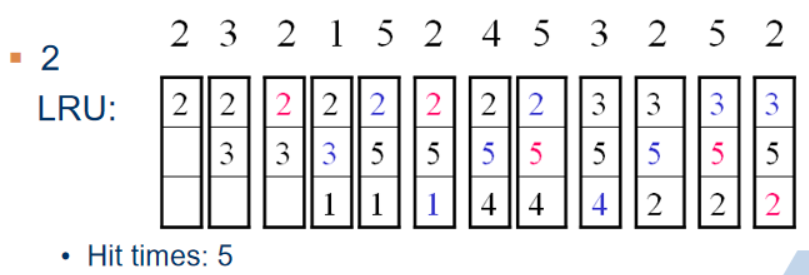

Associative & Set Associative

- The algorithm is implemented in hardware (for speed)

- Least recently used (LRU): replace the block that has gone longest without being referenced

- e.g. in a 2-way set associative cache, one USE bit (1/0) per line marks which of the 2 blocks was referenced more recently; the other one is the LRU block

- First in first out (FIFO)

- Replace the block that has been in the cache longest

- Least frequently used (LFU)

- Replace the block that has had the fewest hits

- Random: performance is almost the same as LRU

- Optimal: replace the line whose next access lies farthest in the future
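
To make the difference between LRU and FIFO concrete, here is a minimal sketch that counts hits for a trace of block numbers in a single set. The function name and the trace are hypothetical; hardware uses USE bits or counters rather than an ordered dictionary.

```python
from collections import OrderedDict


def count_hits(trace, capacity, policy):
    """policy is "LRU" or "FIFO"; trace is a sequence of block numbers."""
    lines = OrderedDict()
    hits = 0
    for block in trace:
        if block in lines:
            hits += 1
            if policy == "LRU":
                lines.move_to_end(block)    # a hit refreshes recency under LRU only
        else:
            if len(lines) == capacity:
                lines.popitem(last=False)   # evict the front: LRU block or oldest block
            lines[block] = True
    return hits


trace = [1, 2, 1, 3, 1, 2]
print(count_hits(trace, 2, "LRU"))
print(count_hits(trace, 2, "FIFO"))
```

On this trace LRU keeps the frequently reused block 1, while FIFO evicts it simply because it was loaded first.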

Write Policies

- Unlike reads, cache writes are more complex

- Data must stay consistent between the cache, main memory, and other caches

- Two cache write policies are commonly used in single-CPU systems:

- Write through

- Write back

Write through

- Definition: every write to the cache is written to main memory at the same time

- All writes go to main memory as well as to the cache

- Disadvantages:

- Generates a lot of write traffic, which may turn the bus into a bottleneck

- Slows down writes

Write back

- Write back: a write goes only to the cache and sets a modified flag on the written line; the line is written back to memory when it is replaced

- Updates are initially made in the cache only

- The update bit for the cache slot is set when an update occurs

- If a block is to be replaced, it is written to main memory only if its update bit is set

- Suitable for iterative operations and for systems in which the I/O modules are connected directly to the cache

- Disadvantages:

- Portions of main memory may be invalid (stale)

- The circuitry is complex

- The cache may become a bottleneck
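
The difference in memory traffic between the two policies can be sketched with a one-line cache. The function name and trace are hypothetical, and the sketch assumes that a write miss first loads the block into the cache before updating it.

```python
def memory_writes(trace, policy):
    """Count main-memory writes for a one-line cache; trace holds (op, block) pairs."""
    cached_block = None
    dirty = False                 # the update bit of the single line
    writes = 0
    for op, block in trace:
        if block != cached_block:                    # miss: the line is replaced
            if policy == "write-back" and dirty:
                writes += 1                          # flush the modified line first
            cached_block, dirty = block, False
        if op == "w":
            if policy == "write-through":
                writes += 1                          # every write also goes to memory
            else:
                dirty = True                         # only set the update bit
    return writes


# Five writes to block 0 (e.g. a loop counter), then a read of block 1.
trace = [("w", 0)] * 5 + [("r", 1)]
print(memory_writes(trace, "write-through"))
print(memory_writes(trace, "write-back"))
```

Repeated updates to the same block cost one memory write under write back, which is why it suits iterative operations; the price is that memory is stale until the line is replaced.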

Multiple CPUs

- Bus watching with write through

- Each cache controller monitors the address lines; if a location in shared memory is written and that data is also in its cache, it invalidates the cache entry.

- Hardware transparency

- Additional hardware is used. When one cache writes to memory, the corresponding entry in the other caches is updated as well.

- Noncacheable memory

- Multiple CPUs share a portion of main memory, and the shared contents are never copied into any cache.

(Author's note: I no longer remember exactly what these are.)
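
As a rough illustration of bus watching with write through, the sketch below models the bus as a plain list of caches and memory as a dictionary. Everything here (the class `SnoopingCache`, the address `0x10`) is hypothetical and greatly simplified compared with a real coherence protocol.

```python
class SnoopingCache:
    def __init__(self, bus):
        self.lines = {}       # address -> cached value
        self.bus = bus
        bus.append(self)      # attach this cache to the shared bus

    def read(self, memory, addr):
        if addr not in self.lines:           # miss: fetch from shared memory
            self.lines[addr] = memory[addr]
        return self.lines[addr]

    def write(self, memory, addr, value):
        self.lines[addr] = value
        memory[addr] = value                 # write through to shared memory
        for other in self.bus:
            if other is not self:
                # The other controllers see the write on the bus and
                # invalidate their copy of that location, if they have one.
                other.lines.pop(addr, None)


memory = {0x10: 1}
bus = []
a, b = SnoopingCache(bus), SnoopingCache(bus)
b.read(memory, 0x10)          # b now caches the old value 1
a.write(memory, 0x10, 2)      # b's copy is invalidated
print(0x10 in b.lines, b.read(memory, 0x10))
```

Because the write also reached memory, the invalidated cache simply refetches the new value on its next read.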

Cache line size vs. hit rate

- Principle of locality: data in the vicinity of a referenced word is likely to be used in the near future

- Data is transferred a block at a time

- Up to a point, increasing the line size increases the hit rate

- But if the line is too large, the hit rate decreases

- Replacement happens a whole line at a time

- The probability of using the newly fetched data becomes lower than the probability of reusing the data that was replaced

- The relationship between line size and hit rate depends on the locality characteristics of the program

- No optimum
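
The rise and fall of the hit rate can be reproduced with a small simulation. The sketch below assumes a fully associative LRU cache with a fixed total capacity of 16 words and a made-up trace that reads four arrays in an interleaved order; the function name and array base addresses are hypothetical.

```python
from collections import OrderedDict


def hit_rate(trace, capacity_words, line_size):
    """Fully associative LRU cache holding capacity_words words in total."""
    num_lines = capacity_words // line_size
    lines = OrderedDict()
    hits = 0
    for addr in trace:
        block = addr // line_size
        if block in lines:
            hits += 1
            lines.move_to_end(block)
        else:
            if len(lines) == num_lines:
                lines.popitem(last=False)    # evict the least recently used line
            lines[block] = True
    return hits / len(trace)


# Hypothetical trace: A[0], B[0], C[0], D[0], A[1], B[1], ... for four arrays.
bases = (0, 128, 256, 384)
trace = [base + i for i in range(8) for base in bases]

for size in (1, 2, 4, 8, 16):
    print(size, hit_rate(trace, capacity_words=16, line_size=size))
```

Larger lines first help because each miss prefetches neighbouring words, but once the cache holds fewer lines than there are active arrays, every fetch evicts a line that was about to be reused.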

Number of Caches

- Single cache vs multilevel cache

- On-chip cache (L1): short path to the CPU, fast, and reduces the frequency of bus accesses

- Off-chip cache (L2): accessed only when an L1 access misses

- Unified cache and split cache

- Unified cache (one cache holds both instructions and data):

- Stores data and instructions together, balancing the load between them automatically

- Higher hit rate

- Split cache (instructions and data are stored separately):

- Data cache and instruction cache

- Parallel operation

Vocabulary

- DRAM(Dynamic random-access memory):动态随机存取(访问)存储器

- ZIP Cartridge: ZIP活动磁盘

- Controller:控制器

- Capacity:容量

- Unit of transfer:传送单元

- Addressable unit:可寻址单元

- Sequential access:顺序访问

- Associative access: 关联访问

- Nonvolatile memory:恒态存储器

- WORM(write once read many):一写多读存储器

- MO(magneto-optical disk):磁光盘

- Set associative mapping:组关联映射

- Replacement Algorithm:替换算法

- FIFO(first-in- first- out):先进先出

- LRU(least recently used):最近最少使用

- Write through:写直达

- Retire unit:回收单元

- Consistency:一致性

- Coherency:一致性

Key points

- What is the memory hierarchy? What principle is it based on?

- Where can a block be placed in the cache?

- How is a block found in the cache?

- Which block is replaced on a cache miss?

- What write policies does the cache use?
