Model Training

Training is performed by passing parameters of spm_train to SentencePieceTrainer.train() function.

import sentencepiece as spm

# train sentencepiece model from `botchan.txt` and makes `m.model` and `m.vocab`

# `m.vocab` is just a reference. not used in the segmentation.

spm.SentencePieceTrainer.train('--input=examples/hl_data/botchan.txt --model_prefix=m --vocab_size=500')训练时可传的参数:

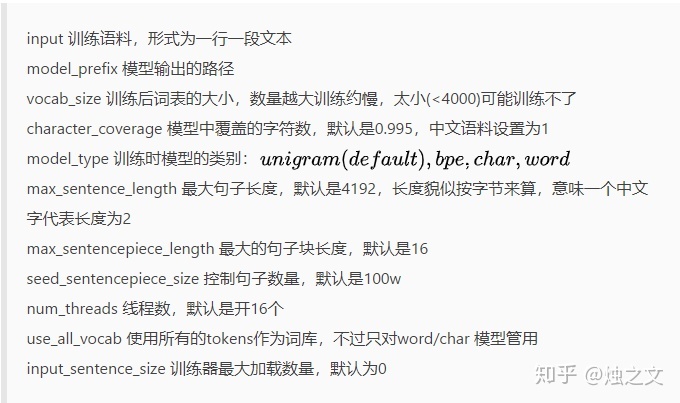

--input: one-sentence-per-line raw corpus file. No need to run tokenizer, normalizer or preprocessor. By default, SentencePiece normalizes the input with Unicode NFKC. You can pass a comma-separated list of files.--model_prefix: output model name prefix.<model_name>.modeland<model_name>.vocabare generated.--vocab_size: vocabulary size, e.g., 8000, 16000, or 32000--character_coverage: amount of characters covered by the model, good defaults are:0.9995for languages with rich character set like Japanese or Chinese and1.0for other languages with small character set.--model_type: model type. Choose fromunigram(default),bpe,char, orword. The input sentence must be pretokenized when usingwordtype.

Segmentation

# makes segmenter instance and loads the model file (m.model)

sp = spm.SentencePieceProcessor()

sp.load('m.model')

text = """

If you don’t have write permission to the global site-packages directory or don’t want to install into it, please try:

"""

# encode: text => id

print(sp.encode_as_pieces(text))

print(sp.encode_as_ids(text))

# decode: id => text

# print(sp.decode_pieces(['▁This', '▁is', '▁a', '▁t', 'est', 'ly']))

# print(sp.decode_ids([209, 31, 9, 375, 586, 34]))官方参考连接:

sentencepiece/README.md at master · google/sentencepiece · GitHub

1960

1960

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?