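I currently need to do a preliminary classification of point-cloud obstacles, so I want to use the KITTI labels to extract the points of individual obstacles, such as cars and pedestrians, from each full-frame point cloud.

The catch is that the 3D coordinates in the labels are not in the LiDAR frame: they are given in the rectified camera coordinate system, so we first have to convert them into the LiDAR (Velodyne) coordinate system,

then build an AABB (axis-aligned bounding box) from the length, width and height in the label,

and finally extract the points that fall inside the box and save them.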
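
The box test in the last two steps can be illustrated on a handful of synthetic points. This is only a minimal sketch: the helper name `crop_aabb` and all the numbers are made up for illustration, and the box centre is assumed to be already expressed in LiDAR coordinates, with z at the bottom face of the box.

```python
import numpy as np


def crop_aabb(points, min_corner, max_corner):
    """Return the rows of `points` whose xyz lies strictly inside the box."""
    xyz = points[:, :3]
    inside = np.all((xyz > min_corner) & (xyz < max_corner), axis=1)
    return points[inside]


# Four synthetic points in (x, y, z, reflectance) layout, like a KITTI .bin row
points = np.array([
    [10.0, 0.0, -1.0, 0.3],   # inside the box
    [10.5, 0.5, -0.5, 0.1],   # inside the box
    [30.0, 5.0, 0.0, 0.9],    # far away in x and y
    [10.0, 0.0, 2.0, 0.2],    # above the box
], dtype=np.float32)

# Hypothetical object: bottom centre plus length, width and height
center = np.array([10.0, 0.0, -1.5])
l, w, h = 4.0, 2.0, 1.5
min_corner = center - np.array([l / 2, w / 2, 0.0])
max_corner = center + np.array([l / 2, w / 2, h])

obj = crop_aabb(points, min_corner, max_corner)
print(obj.shape)
```

Only the first two points survive the crop. Because the box is axis-aligned, it ignores the heading of the object, so it is tight only when the object is roughly parallel to the LiDAR axes.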

The full code is as follows:

import os

import numpy as np


def get_kitti_object_cloud_v2():
    # Output directory for the per-object clouds; path needs to be changed
    save_object_cloud_path = r'D:\KITTI\Object\training\object_cloud'
    for img_id in range(7481):  # the KITTI object training split has 7481 frames
        lidar_path = r'D:\KITTI\Object\training\velodyne\%06d.bin' % img_id  # path needs to be changed
        label_path = r'D:\KITTI\Object\training\label_2\%06d.txt' % img_id  # path needs to be changed
        calib_path = r'D:\KITTI\Object\training\calib\%06d.txt' % img_id
        # Each LiDAR point is stored as (x, y, z, reflectance)
        points = np.fromfile(lidar_path, dtype=np.float32).reshape(-1, 4)
        labels = np.loadtxt(label_path,
                            dtype={'names': ('type', 'truncated', 'occluded', 'alpha', 'xmin', 'ymin', 'xmax', 'ymax', 'h', 'w', 'l', 'x', 'y', 'z', 'rotation_y'),
                                   'formats': ('S14', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float', 'float')})
        calibs = Calibration(calib_path)
        # A label file with a single object loads as a 0-d array; make it iterable
        labels = np.atleast_1d(labels)
        i = 0
        for label in labels:
            i += 1
            if label['type'] != b'DontCare':
                # Convert the label position from rect camera coordinates to LiDAR coordinates
                xyz = calibs.project_rect_to_velo(np.array([[label['x'], label['y'], label['z']]]))
                # Box position (centre in x and y, bottom face in z)
                x = xyz[0][0]
                y = xyz[0][1]
                z = xyz[0][2]
                # AABB: nearest and farthest corner.
                # rotation_y is ignored, so the box is only tight when the
                # object is roughly aligned with the LiDAR x axis.
                min_point_AABB = [x - label['l'] / 2, y - label['w'] / 2, z]
                max_point_AABB = [x + label['l'] / 2, y + label['w'] / 2, z + label['h']]
                # Keep only the LiDAR points inside that range
                x_filt = np.logical_and(
                    (points[:, 0] > min_point_AABB[0]), (points[:, 0] < max_point_AABB[0]))
                y_filt = np.logical_and(
                    (points[:, 1] > min_point_AABB[1]), (points[:, 1] < max_point_AABB[1]))
                z_filt = np.logical_and(
                    (points[:, 2] > min_point_AABB[2]), (points[:, 2] < max_point_AABB[2]))
                filt = np.logical_and(x_filt, y_filt)  # all conditions must hold
                filt = np.logical_and(filt, z_filt)  # all conditions must hold
                object_cloud = points[filt, :]  # apply the filter
                # Remap the labels; customise as needed
                if label['type'] in [b'Car', b'Van', b'Truck', b'Tram']:
                    adjust_label = 'vehicle'
                elif label['type'] in [b'Pedestrian', b'Person_sitting']:
                    adjust_label = 'Pedestrian'
                elif label['type'] in [b'Cyclist']:
                    adjust_label = 'Cyclist'
                elif label['type'] in [b'Misc']:
                    adjust_label = 'other'
                # Drop objects with no points or only 1-3 points; this could be even stricter
                if object_cloud.shape[0] <= 3:
                    print('filter failed...', img_id, adjust_label, i)
                    continue
                # os.path.join adds the separator that plain string concatenation would miss
                np.save(os.path.join(save_object_cloud_path, '%06d-%s-%d' % (img_id, adjust_label, i)), object_cloud)
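
The call to `calibs.project_rect_to_velo` above is what moves the label position into the LiDAR frame. As a rough illustration of what that conversion computes, here is a minimal sketch that inverts x_rect = R0_rect * Tr_velo_to_cam * x_velo by hand. The function name `rect_to_velo` is hypothetical and the calibration values are made up (a pure axis swap plus a small offset, with an identity rectification); they are not taken from a real KITTI calib file.

```python
import numpy as np


def rect_to_velo(pts_rect, R0, V2C):
    """Map nx3 rectified-camera points to the Velodyne frame.

    R0  : 3x3 rectifying rotation (reference camera -> rect camera)
    V2C : 3x4 rigid transform [R|t] (Velodyne -> reference camera)
    """
    pts_ref = pts_rect @ np.linalg.inv(R0).T   # undo the rectification
    R, t = V2C[:, :3], V2C[:, 3]
    return (pts_ref - t) @ R                   # R^T (p - t), written for row vectors


# Assumed calibration: camera x = -velo y, camera y = -velo z, camera z = velo x
V2C = np.array([[0.0, -1.0, 0.0, 0.0],
                [0.0, 0.0, -1.0, -0.08],
                [1.0, 0.0, 0.0, -0.27]])
R0 = np.eye(3)

p_velo = np.array([[10.0, 2.0, -1.0]])               # 10 m ahead, 2 m left, 1 m down
p_rect = (p_velo @ V2C[:, :3].T + V2C[:, 3]) @ R0.T  # forward: velo -> ref -> rect
p_back = rect_to_velo(p_rect, R0, V2C)               # inverse: rect -> velo

print(p_rect)
print(p_back)
```

The round trip returns the original LiDAR point, which is a quick sanity check worth running on real calibration data as well.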

The utility code used above is as follows:

import os

import numpy as np


class Calibration(object):
    ''' Calibration matrices and utils
        3d XYZ in <label>.txt are in rect camera coord.
        2d box xy are in image2 coord
        Points in <lidar>.bin are in Velodyne coord.

        y_image2 = P^2_rect * x_rect
        y_image2 = P^2_rect * R0_rect * Tr_velo_to_cam * x_velo
        x_ref = Tr_velo_to_cam * x_velo
        x_rect = R0_rect * x_ref

        P^2_rect = [f^2_u,  0,      c^2_u,  -f^2_u b^2_x;
                    0,      f^2_v,  c^2_v,  -f^2_v b^2_y;
                    0,      0,      1,      0]
                 = K * [1|t]

        image2 coord:
         ----> x-axis (u)
        |
        |
        v y-axis (v)

        velodyne coord:
        front x, left y, up z

        rect/ref camera coord:
        right x, down y, front z
        (ref is the camera frame before rectification; rect is ref rotated by R0_rect)

        Ref (KITTI paper): http://www.cvlibs.net/publications/Geiger2013IJRR.pdf

        TODO(rqi): do matrix multiplication only once for each projection.
    '''

    def __init__(self, calib_filepath, from_video=False):
        if from_video:
            calibs = self.read_calib_from_video(calib_filepath)
        else:
            calibs = self.read_calib_file(calib_filepath)
        # Projection matrix from rect camera coord to image2 coord
        self.P = calibs['P2']
        self.P = np.reshape(self.P, [3, 4])
        # Rigid transform from Velodyne coord to reference camera coord
        self.V2C = calibs['Tr_velo_to_cam']
        self.V2C = np.reshape(self.V2C, [3, 4])
        self.C2V = inverse_rigid_trans(self.V2C)
        # Rotation from reference camera coord to rect camera coord
        self.R0 = calibs['R0_rect']
        self.R0 = np.reshape(self.R0, [3, 3])
        # Camera intrinsics and extrinsics
        self.c_u = self.P[0, 2]
        self.c_v = self.P[1, 2]
        self.f_u = self.P[0, 0]
        self.f_v = self.P[1, 1]
        self.b_x = self.P[0, 3] / (-self.f_u)  # relative
        self.b_y = self.P[1, 3] / (-self.f_v)

    def read_calib_file(self, filepath):
        ''' Read in a calibration file and parse into a dictionary.
            Ref: https://github.com/utiasSTARS/pykitti/blob/master/pykitti/utils.py
        '''
        data = {}
        with open(filepath, 'r') as f:
            for line in f.readlines():
                line = line.rstrip()
                if len(line) == 0:
                    continue
                key, value = line.split(':', 1)
                # The only non-float values in these files are dates, which
                # we don't care about anyway
                try:
                    data[key] = np.array([float(x) for x in value.split()])
                except ValueError:
                    pass
        return data

    def read_calib_from_video(self, calib_root_dir):
        ''' Read calibration for camera 2 from video calib files.
            there are calib_cam_to_cam and calib_velo_to_cam under the calib_root_dir
        '''
        data = {}
        cam2cam = self.read_calib_file(os.path.join(calib_root_dir, 'calib_cam_to_cam.txt'))
        velo2cam = self.read_calib_file(os.path.join(calib_root_dir, 'calib_velo_to_cam.txt'))
        Tr_velo_to_cam = np.zeros((3, 4))
        Tr_velo_to_cam[0:3, 0:3] = np.reshape(velo2cam['R'], [3, 3])
        Tr_velo_to_cam[:, 3] = velo2cam['T']
        data['Tr_velo_to_cam'] = np.reshape(Tr_velo_to_cam, [12])
        data['R0_rect'] = cam2cam['R_rect_00']
        data['P2'] = cam2cam['P_rect_02']
        return data

    def cart2hom(self, pts_3d):
        ''' Input: nx3 points in Cartesian coordinates
            Output: nx4 points in homogeneous coordinates, made by appending a column of 1s
        '''
        n = pts_3d.shape[0]
        pts_3d_hom = np.hstack((pts_3d, np.ones((n, 1))))
        return pts_3d_hom

    # ===========================
    # ------- 3d to 3d ----------
    # ===========================
    def project_velo_to_ref(self, pts_3d_velo):
        pts_3d_velo = self.cart2hom(pts_3d_velo)  # nx4
        return np.dot(pts_3d_velo, np.transpose(self.V2C))

    def project_ref_to_velo(self, pts_3d_ref):
        pts_3d_ref = self.cart2hom(pts_3d_ref)  # nx4
        return np.dot(pts_3d_ref, np.transpose(self.C2V))

    def project_rect_to_ref(self, pts_3d_rect):
        ''' Input and Output are nx3 points '''
        return np.transpose(np.dot(np.linalg.inv(self.R0), np.transpose(pts_3d_rect)))

    def project_ref_to_rect(self, pts_3d_ref):
        ''' Input and Output are nx3 points '''
        # (3x3 . 3xn) -> 3xn, then transpose -> nx3
        return np.transpose(np.dot(self.R0, np.transpose(pts_3d_ref)))

    def project_rect_to_velo(self, pts_3d_rect):
        ''' Input: nx3 points in rect camera coord.
            This is the part I need: it converts the 3D label coordinates to LiDAR coordinates.
            Output: nx3 points in velodyne coord.
        '''
        pts_3d_ref = self.project_rect_to_ref(pts_3d_rect)
        return self.project_ref_to_velo(pts_3d_ref)

    def project_velo_to_rect(self, pts_3d_velo):
        pts_3d_ref = self.project_velo_to_ref(pts_3d_velo)
        return self.project_ref_to_rect(pts_3d_ref)

    # ===========================
    # ------- 3d to 2d ----------
    # ===========================
    def project_rect_to_image(self, pts_3d_rect):
        ''' Input: nx3 points in rect camera coord.
            Output: nx2 points in image2 coord.
        '''
        pts_3d_rect = self.cart2hom(pts_3d_rect)
        pts_2d = np.dot(pts_3d_rect, np.transpose(self.P))  # nx3
        pts_2d[:, 0] /= pts_2d[:, 2]
        pts_2d[:, 1] /= pts_2d[:, 2]
        return pts_2d[:, 0:2]

    def project_velo_to_image(self, pts_3d_velo):
        ''' Input: nx3 points in velodyne coord.
            Output: nx2 points in image2 coord.
        '''
        pts_3d_rect = self.project_velo_to_rect(pts_3d_velo)
        return self.project_rect_to_image(pts_3d_rect)

    # ===========================
    # ------- 2d to 3d ----------
    # ===========================
    def project_image_to_rect(self, uv_depth):
        ''' Input: nx3 first two channels are uv, 3rd channel
                   is depth in rect camera coord.
            Output: nx3 points in rect camera coord.
        '''
        n = uv_depth.shape[0]
        x = ((uv_depth[:, 0] - self.c_u) * uv_depth[:, 2]) / self.f_u + self.b_x
        y = ((uv_depth[:, 1] - self.c_v) * uv_depth[:, 2]) / self.f_v + self.b_y
        pts_3d_rect = np.zeros((n, 3))
        pts_3d_rect[:, 0] = x
        pts_3d_rect[:, 1] = y
        pts_3d_rect[:, 2] = uv_depth[:, 2]
        return pts_3d_rect

    def project_image_to_velo(self, uv_depth):
        pts_3d_rect = self.project_image_to_rect(uv_depth)
        return self.project_rect_to_velo(pts_3d_rect)

def rotx(t):
    ''' 3D Rotation about the x-axis. '''
    c = np.cos(t)
    s = np.sin(t)
    return np.array([[1, 0, 0],
                     [0, c, -s],
                     [0, s, c]])

def roty(t):
    ''' Rotation about the y-axis. '''
    c = np.cos(t)
    s = np.sin(t)
    return np.array([[c, 0, s],
                     [0, 1, 0],
                     [-s, 0, c]])

def rotz(t):
    ''' Rotation about the z-axis. '''
    c = np.cos(t)
    s = np.sin(t)
    return np.array([[c, -s, 0],
                     [s, c, 0],
                     [0, 0, 1]])

def transform_from_rot_trans(R, t):
    ''' Transformation matrix from rotation matrix and translation vector. '''
    R = R.reshape(3, 3)
    t = t.reshape(3, 1)
    return np.vstack((np.hstack([R, t]), [0, 0, 0, 1]))

def inverse_rigid_trans(Tr):

''' Inverse a rigid body transform matrix (3x4 as [R|t])

[R'|-R't; 0|1]

'''

inv_Tr = np.zeros_like(Tr) # 3x4

inv_Tr[0:3,0:3] = np.transpose(Tr[0:3,0:3])

inv_Tr[0:3,3] = np.dot(-np.transpose(Tr[0:3,0:3]), Tr[0:3,3])

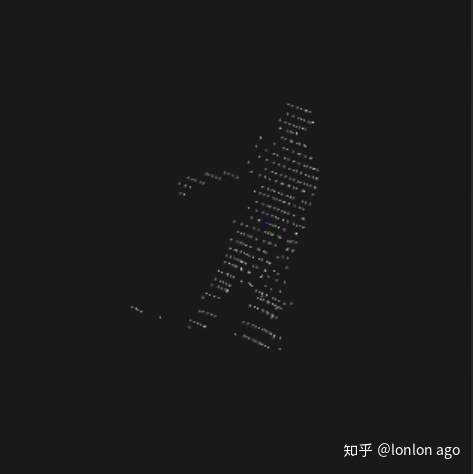

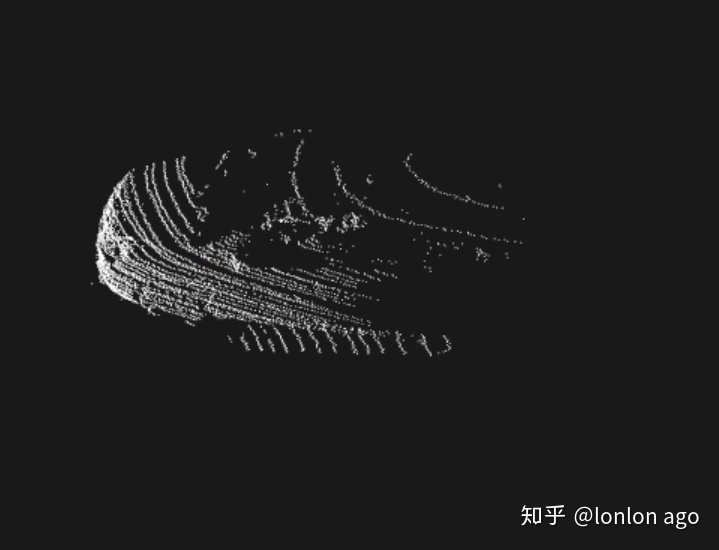

return inv_Tr最后单个障碍物的效果如图:
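
Before plotting a saved object, it can help to load the `.npy` file back and check its point count and extent. The following is a minimal sketch under stated assumptions: the helper name `describe_object_cloud` is hypothetical, and a small synthetic cloud written to a temporary directory stands in for a real extracted object.

```python
import os
import tempfile

import numpy as np


def describe_object_cloud(npy_path):
    """Load an (n, 4) object cloud and return its point count and xyz extent."""
    cloud = np.load(npy_path)
    xyz = cloud[:, :3]
    extent = xyz.max(axis=0) - xyz.min(axis=0)
    return cloud.shape[0], extent


# Synthetic stand-in for one saved object, in (x, y, z, reflectance) layout
demo = np.array([[8.0, -1.0, -1.5, 0.2],
                 [12.0, 1.0, 0.0, 0.4],
                 [10.0, 0.0, -1.0, 0.1]], dtype=np.float32)
path = os.path.join(tempfile.mkdtemp(), '000000-vehicle-1.npy')
np.save(path, demo)

n_points, extent = describe_object_cloud(path)
print(n_points, extent)
```

An extent much larger than the labelled length, width and height would suggest that the box picked up ground or neighbouring objects.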

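This article describes how to extract the point clouds of obstacles such as cars and pedestrians from the KITTI dataset. The label coordinates are first converted into the LiDAR coordinate system, an AABB is then built from the labelled length, width and height, and the points inside the box are extracted and saved. The code provided shows the resulting point cloud for a single obstacle.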
