Preface

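On a whim, a good friend and I teamed up for Datawhale's small OD (object detection) project. It is effectively my first CV project, and a chance to practice PyTorch and neural networks along the way. This is the blog post for the third task, which focuses on the loss function for object detection, i.e. the criterion we train with.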

I. Matching strategy

The matching strategy determines which target each prior bbox corresponds to. This task follows the principles below.

1. Principle one

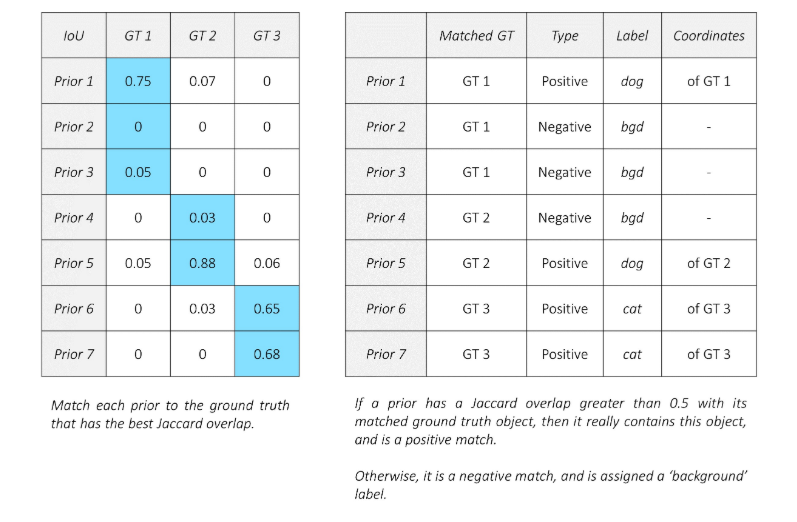

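Start from the ground truth boxes: for each ground truth box, find the prior bbox that has the highest IoU with it. This guarantees that every ground truth box is matched to a prior bbox. Conversely, a prior bbox that is not matched to any ground truth box can only be matched to the background, which makes it a negative sample. An image contains very few ground truth boxes but a large number of prior bboxes, so matching by the first principle alone would leave most prior bboxes as negatives, and the positive and negative samples would be severely imbalanced. Hence the second principle.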
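Since principle one rests entirely on IoU, here is a minimal sketch of it in plain Python (no tensors, so the arithmetic stays visible). I am assuming boxes in `(x_min, y_min, x_max, y_max)` form; `iou` and `best_prior_for_each_gt` are illustrative names of my own, not functions from the project.

```python
def iou(box_a, box_b):
    """IoU (Jaccard overlap) of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the intersection rectangle, clamped to zero when disjoint
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def best_prior_for_each_gt(gt_boxes, priors):
    """Principle one: index of the highest-IoU prior for every ground truth box."""
    return [max(range(len(priors)), key=lambda p: iou(gt, priors[p]))
            for gt in gt_boxes]


# Made-up boxes: one ground truth box and three priors
gt_boxes = [(0, 0, 4, 4)]
priors = [(0, 0, 2, 2), (0, 0, 4, 3), (5, 5, 6, 6)]
print([iou(gt_boxes[0], p) for p in priors])    # [0.25, 0.75, 0.0]
print(best_prior_for_each_gt(gt_boxes, priors))  # [1]
```

The second prior wins with an IoU of 0.75, and the third prior, which does not touch the ground truth box at all, would be left to the background.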

2. Principle two

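Start from the prior bboxes: each remaining unmatched prior bbox is tested against every ground truth box, and if the IoU between the two exceeds a threshold (typically 0.5), the prior bbox is matched to that ground truth box as well. A ground truth box may therefore be matched to several prior bboxes, which is fine. The reverse is not allowed: a prior bbox can match only one ground truth box, so if several ground truth boxes have an IoU above the threshold with the same prior bbox, the prior bbox is matched only to the one with the highest IoU.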

For example:

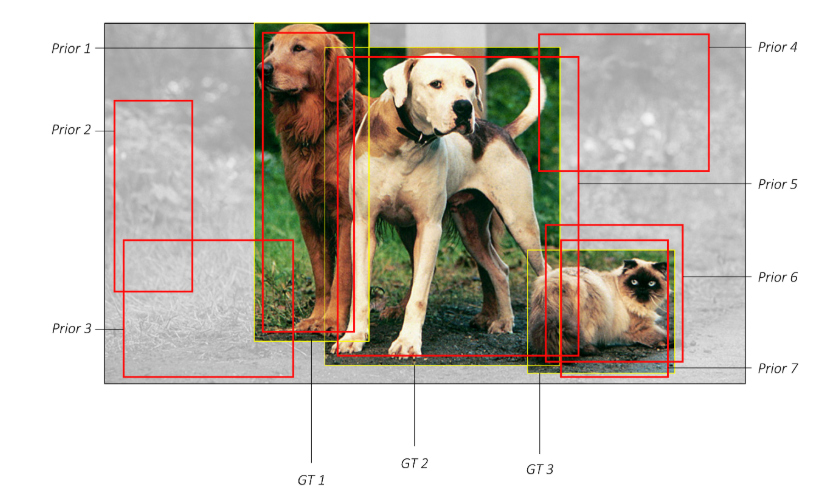

The figure has three ground truth objects and seven prior boxes, which are matched by following the steps above.
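To see both principles at work on numbers, here is a small plain-Python sketch with three ground truth boxes and seven priors. The IoU values are made up by me for illustration and are not the ones in the figure; `match_priors` is a hypothetical helper, and `-1` is my own marker for "background".

```python
def match_priors(overlap, threshold=0.5):
    """Match priors to ground truth boxes.

    overlap[g][p] is the IoU between ground truth g and prior p.
    Returns, for each prior, the index of its ground truth box or -1 for background.
    """
    n_objects = len(overlap)
    n_priors = len(overlap[0])

    # Principle two, first half: each prior looks at the ground truth it overlaps most
    object_for_each_prior = []
    overlap_for_each_prior = []
    for p in range(n_priors):
        best_g = max(range(n_objects), key=lambda g: overlap[g][p])
        object_for_each_prior.append(best_g)
        overlap_for_each_prior.append(overlap[best_g][p])

    # Principle one: every ground truth claims its best prior, whatever the IoU is
    for g in range(n_objects):
        best_p = max(range(n_priors), key=lambda p: overlap[g][p])
        object_for_each_prior[best_p] = g
        overlap_for_each_prior[best_p] = 1.0  # force it past the threshold

    # Principle two, second half: priors below the threshold become background
    return [g if o >= threshold else -1
            for g, o in zip(object_for_each_prior, overlap_for_each_prior)]


# Hypothetical IoU table: rows are 3 ground truth boxes, columns are 7 priors
overlap = [
    [0.60, 0.55, 0.10, 0.00, 0.00, 0.00, 0.05],
    [0.00, 0.20, 0.30, 0.40, 0.00, 0.00, 0.00],
    [0.00, 0.00, 0.00, 0.00, 0.20, 0.70, 0.65],
]
print(match_priors(overlap))  # [0, 0, -1, 1, -1, 2, 2]
```

Ground truth 0 and ground truth 2 each end up with two priors (principle two), while ground truth 1 never reaches an IoU of 0.5 and only gets prior 3 because principle one forces the match. Priors 2 and 4 stay with the background.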

Now let's look at the code.

```python
for i in range(batch_size):
    n_objects = boxes[i].size(0)

    overlap = find_jaccard_overlap(boxes[i], self.priors_xy)  # (n_objects, 441)

    # For each prior, find the object that has the maximum overlap
    overlap_for_each_prior, object_for_each_prior = overlap.max(dim=0)  # (441)

    # We don't want a situation where an object is not represented in our positive (non-background) priors -
    # 1. An object might not be the best object for all priors, and is therefore not in object_for_each_prior.
    # 2. All priors with the object may be assigned as background based on the threshold (0.5).

    # To remedy this -
    # First, find the prior that has the maximum overlap for each object.
    _, prior_for_each_object = overlap.max(dim=1)  # (N_o)

    # Then, assign each object to the corresponding maximum-overlap-prior. (This fixes 1.)
    object_for_each_prior[prior_for_each_object] = torch.LongTensor(range(n_objects)).to(device)

    # To ensure these priors qualify, artificially give them an overlap of greater than 0.5. (This fixes 2.)
    overlap_for_each_prior[prior_for_each_object] = 1.

    # Labels for each prior
    label_for_each_prior = labels[i][object_for_each_prior]  # (441)

    # Set priors whose overlaps with objects are less than the threshold to be background (no object)
    label_for_each_prior[overlap_for_each_prior < self.threshold] = 0  # (441)

    # Store
    true_classes[i] = label_for_each_prior

    # Encode center-size object coordinates into the form we regressed predicted boxes to
    true_locs[i] = cxcy_to_gcxgcy(xy_to_cxcy(boxes[i][object_for_each_prior]), self.priors_cxcy)  # (441, 4)
```
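The last line of the loop relies on `xy_to_cxcy` and `cxcy_to_gcxgcy`, which are not shown in this post. Below is a plain-Python sketch of what such an encoding commonly looks like for a single box. The scale factors 10 and 5 are my assumption (a common choice in SSD-style code), and `gcxgcy_to_cxcy` is a hypothetical inverse I added only to check the round trip; the project's tensor versions may differ.

```python
import math


def xy_to_cxcy(box):
    """(x_min, y_min, x_max, y_max) -> (c_x, c_y, w, h)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2, x_max - x_min, y_max - y_min)


def cxcy_to_gcxgcy(cxcy, prior_cxcy, center_scale=10.0, size_scale=5.0):
    """Encode a center-size box as offsets relative to a center-size prior.

    center_scale and size_scale are assumed values, not taken from the post.
    """
    cx, cy, w, h = cxcy
    p_cx, p_cy, p_w, p_h = prior_cxcy
    return (
        (cx - p_cx) / p_w * center_scale,  # center shift, in units of prior width
        (cy - p_cy) / p_h * center_scale,  # center shift, in units of prior height
        math.log(w / p_w) * size_scale,    # log ratio of widths
        math.log(h / p_h) * size_scale,    # log ratio of heights
    )


def gcxgcy_to_cxcy(gcxgcy, prior_cxcy, center_scale=10.0, size_scale=5.0):
    """Hypothetical inverse of cxcy_to_gcxgcy: recover the center-size box."""
    g_cx, g_cy, g_w, g_h = gcxgcy
    p_cx, p_cy, p_w, p_h = prior_cxcy
    return (
        g_cx * p_w / center_scale + p_cx,
        g_cy * p_h / center_scale + p_cy,
        math.exp(g_w / size_scale) * p_w,
        math.exp(g_h / size_scale) * p_h,
    )


# Made-up box and prior, in fractional image coordinates
box = xy_to_cxcy((0.25, 0.25, 0.75, 0.75))  # (0.5, 0.5, 0.5, 0.5)
prior = (0.375, 0.5, 0.5, 0.25)
encoded = cxcy_to_gcxgcy(box, prior)
decoded = gcxgcy_to_cxcy(encoded, prior)
print(encoded)
print(decoded)
```

The point of the encoding is that the regression target becomes an offset relative to the matched prior rather than an absolute position, so a prior that already fits its object well has targets close to zero.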

II. Loss function

In this part we look at how the loss function is defined.

$$L(x,c,l,g) = \frac{1}{N}\left(L_{conf}(x,c)+\alpha L_{loc}(x,l,g)\right)$$

Here $L_{conf}(x,c)$ is the confidence loss, $L_{loc}(x,l,g)$ is the localization loss, and $N$ is the number of prior bboxes matched to a GT (ground truth) box. If $N=0$ …
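As a rough illustration of how the two terms combine, here is a plain-Python sketch. Several things in it are my assumptions rather than the project's code: cross-entropy for the confidence term, Smooth L1 for the localization term (both as in the SSD paper), class `0` for background as in the matching code above, and returning 0 when no prior is positive, which is the SSD paper's convention. Which negative priors enter the confidence term is left to the caller.

```python
import math


def cross_entropy(logits, target):
    """Negative log-softmax of the target class, computed in a stable way."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def smooth_l1(pred, target):
    """Smooth L1 distance summed over the four box offsets."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d if d < 1.0 else d - 0.5
    return total


def multibox_loss(pred_locs, pred_scores, true_locs, true_classes, alpha=1.0):
    """L = (L_conf + alpha * L_loc) / N over the priors passed in.

    true_classes[p] == 0 marks a background prior; only positive priors
    contribute to L_loc, and N is the number of positive priors.
    """
    positives = [p for p, c in enumerate(true_classes) if c != 0]
    n = len(positives)
    if n == 0:
        return 0.0  # assumption: follows the SSD paper's convention for N = 0
    conf = sum(cross_entropy(s, c) for s, c in zip(pred_scores, true_classes))
    loc = sum(smooth_l1(pred_locs[p], true_locs[p]) for p in positives)
    return (conf + alpha * loc) / n


# One positive prior (class 1) and one background prior, two classes in total
pred_scores = [[0.0, 0.0], [0.0, 0.0]]
true_classes = [1, 0]
pred_locs = [[2.0, 0.0, 0.0, 0.5], [9.0, 9.0, 9.0, 9.0]]
true_locs = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]
print(multibox_loss(pred_locs, pred_scores, true_locs, true_classes))
```

In this toy call the background prior's wildly wrong box offsets do not matter, because only the positive prior is counted in the localization term. The confidence term, by contrast, is summed over both priors.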

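This post covers the matching strategy of the Datawhale OD project, including the principles that start from the ground truth and from the prior bbox, and how the imbalance between positive and negative samples is addressed. It then details how the loss function for object detection is defined, including the confidence loss and the localization loss, and explains the online hard example mining strategy that keeps training balanced and effective.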
