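Jetson Xavier NX User Manual

I. Common commands

(1) apt-get

apt-get downloads and installs software straight from the package repositories (an Internet connection is required). With the default sources, downloads are very slow; switching to a mirror inside China makes them much faster. apt-get can do much more than download and install software.

apt-get command reference: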

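sudo apt-get update  # refresh the package index

sudo apt-get upgrade  # upgrade all installed packages

sudo apt-get upgrade package  # upgrade a single package

sudo apt list --upgradable  # list packages that can be upgraded

sudo apt-get dist-upgrade  # upgrade all packages, installing or removing dependencies as needed (moving to a new Ubuntu release is done with do-release-upgrade)

sudo apt-get install package  # install a package

sudo apt-get remove package  # remove a package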
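To script the upgrade check, you can parse the output of `apt list --upgradable`. The sketch below assumes each upgradable package appears on one line as `name/suite new-version arch [upgradable from: old-version]`, which is how apt prints it on Ubuntu 18.04. apt itself warns that its CLI output is not a stable interface, so treat this as a convenience rather than a guarantee. The sample line and version strings are invented for illustration.

```python
import re
from typing import List, NamedTuple


class Upgradable(NamedTuple):
    name: str
    suite: str
    new_version: str
    arch: str
    old_version: str


# Assumed line format: name/suite new-version arch [upgradable from: old-version]
_LINE = re.compile(
    r"^(?P<name>[^/\s]+)/(?P<suite>\S+)\s+(?P<new>\S+)\s+(?P<arch>\S+)\s+"
    r"\[upgradable from: (?P<old>[^\]]+)\]$"
)


def parse_upgradable(text: str) -> List[Upgradable]:
    """Parse `apt list --upgradable` output; the header and other lines are skipped."""
    packages = []
    for line in text.splitlines():
        match = _LINE.match(line.strip())
        if match:
            packages.append(Upgradable(match.group("name"), match.group("suite"),
                                       match.group("new"), match.group("arch"),
                                       match.group("old")))
    return packages


# Hypothetical sample output (version strings invented for illustration).
SAMPLE = """Listing... Done
nvidia-l4t-core/stable 32.7.2-20220417024839 arm64 [upgradable from: 32.7.1-20220219090432]
"""

for pkg in parse_upgradable(SAMPLE):
    print(pkg.name, pkg.old_version, "->", pkg.new_version)
```

On the board, the text could come from `subprocess.run(["apt", "list", "--upgradable"], stdout=subprocess.PIPE, universal_newlines=True).stdout`. That form also works on the Python 3.6 shipped with Ubuntu 18.04.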

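(2) dpkg

Sometimes apt-get is not an option and you have to download the installer from the software's website. Most such installers are .deb files: open a terminal in the directory containing the file and install it with dpkg. dpkg command reference (package is the actual package or file name):

sudo dpkg -i package.deb: install a Debian package, such as a manually downloaded file.

dpkg -c package.deb: list the contents of package.deb.

dpkg -I package.deb: show the package information stored in package.deb.

sudo dpkg -r package: remove an installed package.

sudo dpkg -P package: purge an installed package. Unlike remove, which deletes only the data and executables, purge also deletes all configuration files.

dpkg -L package: list every file installed by package.

dpkg -s package: show information about an installed package.

sudo dpkg-reconfigure package: reconfigure an installed package, if it uses debconf (debconf provides a unified configuration interface for package installation).

dpkg -S file: find which installed package a file belongs to.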
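`dpkg -s` prints an installed package's control fields as `Field: value` lines, with continuation lines indented by a space. A small parser like the one below turns that output into a dictionary, which is handy for checking something like the installed `nvidia-jetpack` version from a script. This is a sketch, and the sample text is illustrative, not real output captured from a board.

```python
from typing import Dict, Optional


def parse_dpkg_status(text: str) -> Dict[str, str]:
    """Parse `dpkg -s <package>` output ("Field: value" lines) into a dict.

    Lines starting with whitespace continue the previous field (as in the
    multi-line Description field) and are joined with newlines.
    """
    fields = {}  # type: Dict[str, str]
    last_key = None  # type: Optional[str]
    for line in text.splitlines():
        if line[:1] in (" ", "\t") and last_key is not None:
            fields[last_key] += "\n" + line.strip()
        elif ":" in line:
            key, _, value = line.partition(":")
            last_key = key.strip()
            fields[last_key] = value.strip()
    return fields


# Hypothetical sample: real output has more fields and different values.
SAMPLE = """Package: nvidia-jetpack
Status: install ok installed
Version: 4.6-b199
Architecture: arm64
Description: NVIDIA Jetpack Meta Package
 Second line of the long description."""

info = parse_dpkg_status(SAMPLE)
print(info["Package"], info["Version"])
```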

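(3) Vim editor basics

i: enter insert mode and insert text at the cursor.

Esc: leave insert mode and return to command (normal) mode.

:w: save the file without quitting.

:q: quit Vim; you are warned if there are unsaved changes.

:wq or ZZ: save the file and quit Vim.

:q!: quit Vim without saving changes.

dd: delete the current line.

yy: copy (yank) the current line.

p: paste the copied content.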

(4) System information commands for NVIDIA Jetson boards

1. BSP (L4T) version: cat /etc/nv_tegra_release

2. JetPack version: sudo apt-cache show nvidia-jetpack

3. Linux kernel version: uname -a

4. CUDA version: ls /usr/local/ | grep cuda

5. TensorRT version: dpkg -l | grep TensorRT

6. cuDNN version: cat /usr/include/cudnn_version.h

7. Disable or enable the graphical desktop:

# disable the desktop
sudo systemctl set-default multi-user.target
sudo reboot

# enable the desktop
sudo systemctl set-default graphical.target
sudo reboot
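You can also read two of these version files from a script. This sketch makes two assumptions. First, that the first line of `/etc/nv_tegra_release` looks like `# R32 (release), REVISION: 6.1, ...`. Second, that `cudnn_version.h` contains `#define CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` lines, which is the cuDNN 8 layout. On the board you would pass in `open("/etc/nv_tegra_release").readline()` and the contents of `/usr/include/cudnn_version.h`. The inputs below are illustrative.

```python
import re
from typing import Optional, Tuple


def parse_l4t_release(first_line: str) -> Optional[Tuple[int, str]]:
    """Return (major release, revision) from the first line of /etc/nv_tegra_release."""
    match = re.search(r"R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)", first_line)
    if match is None:
        return None
    return int(match.group(1)), match.group(2)


def parse_cudnn_version(header_text: str) -> Optional[str]:
    """Build "major.minor.patch" from the CUDNN_* #define lines in cudnn_version.h."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(r"#define\s+%s\s+(\d+)" % name, header_text)
        if match is None:
            return None
        parts.append(match.group(1))
    return ".".join(parts)


# Illustrative inputs in the assumed formats (not copied from a real board).
release_line = "# R32 (release), REVISION: 6.1, GCID: 27863751, BOARD: t186ref, EABI: aarch64"
header = "#define CUDNN_MAJOR 8\n#define CUDNN_MINOR 2\n#define CUDNN_PATCHLEVEL 1\n"
print(parse_l4t_release(release_line))
print(parse_cudnn_version(header))
```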

II. CUDA environment variables and checking the version

III. Flashing the system image with SDK Manager

IV. Installing conda

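*All the files needed to install the PyTorch environment on the Jetson Xavier NX are on Baidu Netdisk and can be downloaded directly:*

Link: Baidu Netdisk, extraction code: 4taz. Reposted from: "Installing a PyTorch environment on Jetson Xavier NX (most complete and concise)", CSDN blog.

The bundle contains torch-1.8.0 and torchvision-0.9.0, and uses Miniforge3-4.12.0 in place of Miniconda. Note that Jetson NX-series boards do not support Anaconda3, so set up the environment with either Miniconda or the Miniforge included in this bundle.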

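Switch to the Tsinghua mirror

# Edit the sources.list file

sudo vim /etc/apt/sources.list  # Vim basics are covered at the start of this article

Replace its contents with the Tsinghua mirror entries below. These are for Ubuntu 18.04, whose codename is bionic; on 20.04, replace bionic with focal.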

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-updates main restricted universe multiverse

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-updates main restricted universe multiverse

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-security main restricted universe multiverse

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-security main restricted universe multiverse

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-backports main restricted universe multiverse

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic-backports main restricted universe multiverse

deb http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic main universe restricted

deb-src http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/ bionic main universe restricted

# Refresh the package index

sudo apt-get update
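Because only the codename differs between releases, the entries above can be generated instead of edited by hand. This is a sketch with two differences from the listing above: it uses all four components for the base suite too (the last two lines above omit multiverse), and it prints the text instead of writing `/etc/apt/sources.list` directly.

```python
MIRROR = "http://mirrors.tuna.tsinghua.edu.cn/ubuntu-ports/"
COMPONENTS = "main restricted universe multiverse"


def tuna_sources(codename: str) -> str:
    """Return sources.list text for the Tsinghua ubuntu-ports mirror.

    Only the release codename changes between Ubuntu versions
    (bionic = 18.04, focal = 20.04).
    """
    lines = []
    for suffix in ("-updates", "-security", "-backports", ""):
        for kind in ("deb", "deb-src"):
            lines.append("%s %s %s%s %s" % (kind, MIRROR, codename, suffix, COMPONENTS))
    return "\n".join(lines) + "\n"


# Save the output to a local file, review it, then copy it into place with
#   sudo cp sources.list /etc/apt/sources.list
print(tuna_sources("bionic"), end="")
```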

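1. Use cd to enter the folder containing the Miniforge3-4.12.0-0-Linux-aarch64 installer,

for example: cd NXSource

2. bash Miniforge3-4.12.0-0-Linux-aarch64.sh -b  # -b: batch mode, installs to ~/miniforge3 without prompting

3. ~/miniforge3/bin/conda init

4. Reopen the terminal and run conda -V to check the conda version.

5. Add the Tsinghua mirrors: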

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/bioconda/

conda config --set show_channel_urls yes

conda config --get channels

(1) Create the conda environment

# Run the following in a terminal

conda create --name pytorch python=3.6.9  # my Jetson runs Ubuntu 18.04, whose default Python is 3.6.9

conda activate pytorch

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

sudo -H pip3 install -U jetson-stats

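sudo jtop  # hardware monitor for the Jetson NX

jtop should now show the JetPack, Python, numpy, OpenCV, CUDA and cuDNN versions. However, "OpenCV with CUDA" still reads NO at this point; CUDA-accelerated OpenCV is set up later.

(2) Install torch (the PyTorch environment)

# Run the following in a terminal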

sudo apt-get install python3-pip libopenblas-base libopenmpi-dev libomp-dev

pip install Cython

pip install numpy==1.19.4

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple opencv-python pillow  # installing opencv-python is slow

# Note: cd into the directory containing torch-1.8.0-cp36-cp36m-linux_aarch64.whl first, otherwise pip will fail

for example: cd NXSource

pip install torch-1.8.0-cp36-cp36m-linux_aarch64.whl

sudo apt-get install libjpeg-dev zlib1g-dev libpython3-dev libavcodec-dev libavformat-dev libswscale-dev

cd ../

git clone --branch v0.9.0 https://gitee.com/rchen1997/torchvision torchvision

cd torchvision

export BUILD_VERSION=0.9.0

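python3 setup.py install --user  # install any package it reports as missing; if it offers to fix broken dependencies, follow its instructions; if that step fails with a permission error, prefix the command with sudo

cd ../

(3) Check that torch and torchvision are installed correctly

# In the pytorch environment, run: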

python

import torch

print(torch.cuda.is_available())

import torchvision

print(torchvision.__version__)

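exit()

If this fails with "Illegal instruction (core dumped)", read on.

When deploying a YOLO environment on a Jetson TX2 and installing various packages, you run into incompatibilities between packages, numpy in particular. When a Nano is flashed, the system ships with some essential libraries, including numpy 1.13.1, and this numpy matters a lot on the Jetson. This is where the conflict arises: installing pytorch-lightning insists on downloading the latest numpy 1.19.x. That is the crux of the problem. The built-in numpy 1.13.1 is not removed, so two numpy versions coexist, and other libraries that depend on numpy (such as cv2 and pytorch-lightning) get mixed up when they are called. As a result, python3 crashes with "Illegal instruction (core dumped)".

Fix:

If a newer numpy is listed, uninstall the newer numpy that came with torch and install an older version. Steps: activate the conda environment and run pip list, which shows every package in the environment with its version. Here numpy was 1.19.5, so replace it:

python -m pip install numpy==1.19.4  # replaces the installed numpy with 1.19.4

export OPENBLAS_CORETYPE=ARMV8  # enter this before each Python session, or set it permanently in a startup file such as ~/.bashrc (many online tutorials cover this)

This resolves both the illegal-instruction error and the numpy version problem. Re-enter Python and check torch and torchvision again.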
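To check whether two numpy installations really coexist, as described above, you can scan Python's module search path from inside the environment. This is a diagnostic sketch, not part of the fix. It only reports directories that contain a `numpy` package, in `sys.path` order; the first one listed is the one `import numpy` actually loads.

```python
import os
import sys
from typing import Iterable, List, Optional


def find_numpy_copies(search_path: Optional[Iterable[str]] = None) -> List[str]:
    """List every directory on the search path that contains a numpy package.

    Python imports the first match in sys.path order, so more than one hit
    means another copy (for example a system-wide one) is also present.
    """
    hits = []
    for entry in (sys.path if search_path is None else search_path):
        directory = entry or os.getcwd()  # "" in sys.path means the current directory
        if os.path.isfile(os.path.join(directory, "numpy", "__init__.py")):
            hits.append(directory)
    return hits


for location in find_numpy_copies():
    print("numpy package found in:", location)
```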

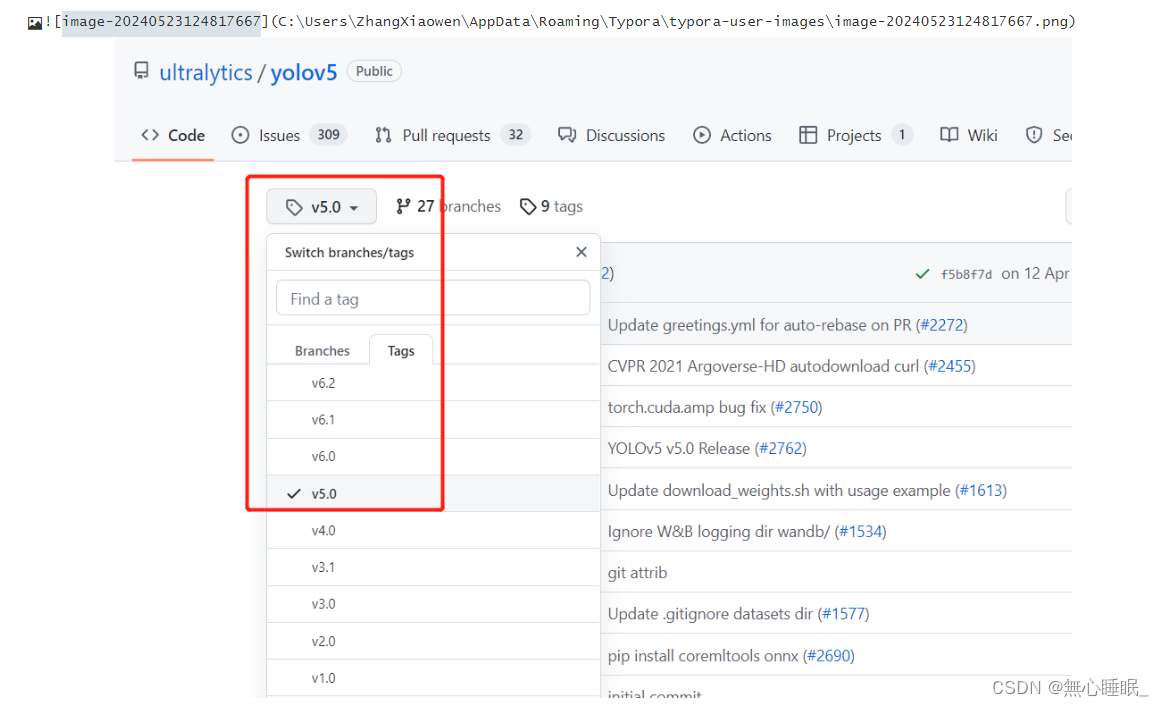

V. Downloading YOLOv5 and testing it

Go to the v5.0 tag of ultralytics/yolov5 on GitHub and download the tar or zip archive (the Jetson is an arm64 machine), or download it from the terminal:

git clone -b v5.0 https://github.com/ultralytics/yolov5.git

conda activate pytorch

cd yolov5  # enter the yolov5 folder

(pytorch) liu@liu:~/yolov5-5.0$ pip install -r requirements.txt  # note: by default this installs the latest opencv-python (4.9.0 at the time of writing)

About the opencv-python version: 4.9.0 is too new. When a camera is connected for real-time detection, it fails with:

Model Summary: 224 layers, 7266973 parameters, 0 gradients, 17.0 GFLOPS
WARNING: Environment does not support cv2.imshow() or PIL Image.show() image displays
OpenCV(4.9.0) /tmp/pip-install-zgse7tpx/opencv-python_658a2c36ed384d10bf9a386caf110135/opencv/modules/highgui/src/window.cpp:1272: error: (-2:Unspecified error) The function is not implemented. Rebuild the library with Windows, GTK+ 2.x or Cocoa support. If you are on Ubuntu or Debian, install libgtk2.0-dev and pkg-config, then re-run cmake or configure script in function 'cvShowImage'

Fix:

pip install opencv-python==4.5.3.56  # tested and confirmed to fix the problem; I believe any version close to the default 4.5.4 works

All the libraries YOLO needs are now installed.
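Before starting the camera demo, you can check whether the installed opencv-python build has a GUI backend for `cv2.imshow()`. This sketch looks for a `GTK+` entry in the text returned by `cv2.getBuildInformation()`. The layout of that report varies between OpenCV versions, so treat a `None` result as "no GTK+ line found" rather than a definitive answer.

```python
import re
from typing import Optional


def gtk_support(build_info: str) -> Optional[bool]:
    """Report whether an OpenCV build lists GTK+ GUI support.

    Returns True/False from the first "GTK+...: YES/NO" line of
    cv2.getBuildInformation(), or None if no such line exists.
    """
    match = re.search(r"^\s*GTK\+[^:\n]*:\s*(\S+)", build_info, re.MULTILINE)
    if match is None:
        return None
    return match.group(1).upper() == "YES"


try:
    import cv2
    print("OpenCV", cv2.__version__, "GTK+ support:", gtk_support(cv2.getBuildInformation()))
except ImportError:
    print("cv2 is not installed in this environment")
```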

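Download yolov5s.pt into the yolov5-5.0 folder and run detect.py to test. (Important: if you run python detect.py without it, the script automatically downloads the latest yolov5s.pt instead of the v5.0 one. The network structure of v5.0 differs from the latest version, so this fails.)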

(pytorch) liu@liu:~/yolov5-5.0$ wget https://github.com/ultralytics/yolov5/releases/download/v5.0/yolov5s.pt

(pytorch) liu@liu:~/yolov5-5.0$ python detect.py

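When it finishes, the terminal shows the directory where the annotated demo output was saved.

VI. Installing OpenCV with CUDA

I followed the steps from CSDN posts for two or three days without success, hitting a different error each time. Eventually I found a prebuilt CUDA-accelerated OpenCV 4.5.0 via Zhihu.

1. Download the OpenCV source

Download it from GitHub; either the ZIP or the tar archive works.

I chose opencv-4.5.4 and opencv_contrib-4.5.4; download and extract both.

2. Uninstall the existing OpenCV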

sudo apt-get purge libopencv* python-opencv

# check that it has been removed

pkg-config opencv --libs

pkg-config opencv --modversion

3. Install dependencies

sudo apt-get update

sudo apt-get install build-essential pkg-config

sudo apt-get install -y cmake libavcodec-dev libavformat-dev libavutil-dev \
libglew-dev libgtk2.0-dev libgtk-3-dev libjpeg-dev libpng-dev libpostproc-dev \
libswscale-dev libtbb-dev libtiff5-dev libv4l-dev libxvidcore-dev \
libx264-dev qt5-default zlib1g-dev libgl1 libglvnd-dev pkg-config \
libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev mesa-utils

sudo apt-get install python2.7-dev python3-dev python-numpy python3-numpy

4. Configure OpenCV

cd opencv-4.5.4

mkdir build

cd build

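sudo cmake-gui

5. Build and install OpenCV (run from a terminal in the build folder)

Before running cmake, manually download the files that cmake fails to fetch on its own: https://files.cnblogs.com/files/arxive/boostdesc_bgm.i%2Cvgg_generated_48.i%E7%AD%89.rar. Ubuntu cannot open .rar archives by default, so it is easiest to extract it on Windows, repack it as a zip, and copy it over on a USB drive.

After extracting, copy the source files it contains (boostdesc_bgm.i, vgg_generated_48.i and the others) into opencv_contrib/modules/xfeatures2d/src/.

Then open the build folder and run the cmake command below. (Note: this is a single command, not one line at a time; copy all of it into the terminal at once.)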

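cmake \
-D WITH_CUDA=ON \
-D CUDA_ARCH_BIN="7.2" \
-D WITH_CUDNN=ON \
-D OPENCV_DNN_CUDA=ON \
-D CUDNN_VERSION='8.2' \
-D CUDNN_INCLUDE_DIR='/usr/include/' \
-D CUDA_ARCH_PTX="" \
-D OPENCV_EXTRA_MODULES_PATH=../opencv_contrib-4.5.4/modules \
-D WITH_GSTREAMER=ON \
-D WITH_LIBV4L=ON \
-D BUILD_opencv_python3=ON \
-D BUILD_TESTS=OFF \
-D BUILD_PERF_TESTS=OFF \
-D BUILD_EXAMPLES=OFF \
-D CMAKE_BUILD_TYPE=RELEASE \
-D CMAKE_INSTALL_PREFIX=/usr/local \
..

Notes on the flags:

- Check CUDA_ARCH_BIN and CUDNN_VERSION for your board with jtop (7.2 is the compute capability of the Xavier NX).
- For OPENCV_EXTRA_MODULES_PATH, an absolute path to the contrib modules folder is safer; mine is /home/liu/opencv-4.5.4-opencv_contrib-4.5.4/modules.
- CMAKE_INSTALL_PREFIX is OpenCV's install location; the default /usr/local is fine.
- Keep the command free of comments and blank lines, because the trailing backslash must be the last character on each line.

Then build and install. This takes a long time, so be patient.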
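In the shell, stray text after a trailing backslash, or a blank line between continued lines, silently splits a long cmake command. One way to avoid that is to build the argument list in Python, then either print a single-line, shell-quoted command or run it with `subprocess`. This is a sketch with a subset of the flags above. The contrib path `/home/user/...` is a placeholder, and `-DNAME=value` is equivalent to the `-D NAME=value` form used above.

```python
import shlex
from typing import List, Sequence, Tuple

# A subset of the flags from the cmake command above; extend as needed.
# The contrib path is a placeholder: replace it with your own absolute path.
OPTIONS = [
    ("WITH_CUDA", "ON"),
    ("CUDA_ARCH_BIN", "7.2"),
    ("OPENCV_DNN_CUDA", "ON"),
    ("CUDA_ARCH_PTX", ""),
    ("OPENCV_EXTRA_MODULES_PATH", "/home/user/opencv_contrib-4.5.4/modules"),
    ("BUILD_opencv_python3", "ON"),
    ("CMAKE_BUILD_TYPE", "RELEASE"),
    ("CMAKE_INSTALL_PREFIX", "/usr/local"),
]


def cmake_args(options: Sequence[Tuple[str, str]], source_dir: str = "..") -> List[str]:
    """Build the argv list for one cmake invocation (run from the build folder)."""
    return ["cmake"] + ["-D%s=%s" % (key, value) for key, value in options] + [source_dir]


args = cmake_args(OPTIONS)
# Shell-safe, single-line form for copy-pasting into the terminal.
print(" ".join(shlex.quote(a) for a in args))
# Or run it directly:
#   import subprocess; subprocess.run(args, cwd="build", check=True)
```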

sudo make -j12  # after reaching 100% it still needs a while to finish; be patient

sudo make install  # this step also takes a while

6. Add environment variables (in a terminal):

sudo gedit /etc/ld.so.conf.d/opencv.conf

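Write the library path into the file. Unless you changed it during configuration, it is:

/usr/local/lib

Save it, then refresh the linker cache in the terminal:

sudo ldconfig

Add the pkg-config path:

sudo gedit /etc/bash.bashrc

# append these two lines at the end of the file, then save

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

export PKG_CONFIG_PATH

# reload the configuration in the terminal (you may need to reopen the terminal)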

source /etc/bash.bashrc

sudo updatedb

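Note: if you get the error

sudo: updatedb: command not found

install mlocate:

sudo apt-get install mlocate

The installation is now complete. Check the OpenCV version with jtop or with "pkg-config --modversion opencv4".

After a successful installation, the OpenCV CUDA entry on jtop's info page should show YES. (Although my OpenCV installed successfully, mine did not show YES.)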
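If jtop does not report YES, as happened in the note above, you can ask OpenCV directly from the Python environment you plan to use. This sketch relies on `cv2.cuda.getCudaEnabledDeviceCount()`. To my knowledge, that function is present even in builds without CUDA and returns 0 there. A value of 1 on the Xavier NX means the CUDA modules are usable from Python.

```python
from typing import Optional


def opencv_cuda_device_count() -> Optional[int]:
    """Number of CUDA devices OpenCV can use; None if cv2 cannot be imported.

    Builds without CUDA are expected to report 0 rather than fail.
    """
    try:
        import cv2
    except ImportError:
        return None
    try:
        return int(cv2.cuda.getCudaEnabledDeviceCount())
    except (AttributeError, cv2.error):
        return 0  # no usable cuda submodule in this build


count = opencv_cuda_device_count()
print("cv2 not importable" if count is None else "CUDA devices visible to OpenCV: %d" % count)
```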

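Another blog post covers installing through the CMake GUI: "Complete process for building and installing OpenCV on Jetson Xavier NX, with pitfalls" (CSDN blog).

Uninstalling a failed OpenCV installation

Building OpenCV with CUDA takes anywhere from a day to several days and is exhausting. Here is how to uninstall a failed OpenCV installation.

1. Check the installed OpenCV version

pkg-config --modversion opencv

2. Uninstall the existing OpenCV

Find the build folder you used when installing OpenCV (if it no longer exists, regenerate it by running CMake again). If CMake reports that a command cannot be found, update CMake to a newer version.

Then go into that build directory and uninstall: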

cd .../opencv-4.x.x/build

# uninstall

sudo make uninstall

cd .../opencv-4.x.x

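sudo rm -r build

Then delete the opencv-4.x.x folder you originally downloaded. Checking the OpenCV version again should now print No package 'opencv' found, which means the uninstall succeeded and you can move on to the next step.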
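`make uninstall` only works while the build folder exists. OpenCV's uninstall target reads the list of files that CMake recorded during `make install`, which is the `install_manifest.txt` file in the build folder. To inspect that list before deleting anything, this dry-run sketch reads the manifest and reports which of the listed files are still present. The manifest path is a placeholder. Directories are not removed, and actually deleting files under `/usr/local` needs root.

```python
import os
from typing import Iterable, List


def parse_manifest(text: str) -> List[str]:
    """Return the installed file paths listed in CMake's install_manifest.txt."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def remove_installed(paths: Iterable[str], dry_run: bool = True) -> List[str]:
    """Delete the given files; return those that were (or would be) removed.

    With dry_run=True (the default) nothing is deleted, only reported.
    """
    removed = []
    for path in paths:
        if os.path.lexists(path):
            if not dry_run:
                os.remove(path)
            removed.append(path)
    return removed


MANIFEST = "build/install_manifest.txt"  # placeholder: manifest inside your OpenCV build folder
if os.path.isfile(MANIFEST):
    with open(MANIFEST) as f:
        for path in remove_installed(parse_manifest(f.read())):
            print("would remove", path)
```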

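Below is a prebuilt OpenCV 4.5.0 compiled by an NVIDIA team; just download and unpack it. OpenCV-4.5.0-aarch64.tar.gz download: Baidu Netdisk, extraction code: i32f. (Thanks to 水花 for the blog post; without it I would still be configuring OpenCV.)

tar -zxvf OpenCV-4.5.0-aarch64.tar.gz  # extract into the home directory

cd OpenCV-4.5.0-aarch64

sudo dpkg -i *.deb  # install the packages directly

jtop  # check that it now shows YES

We now have CUDA-enabled OpenCV, torch and torchvision installed. Next comes real-time camera detection.

VII. Real-time detection with a USB camera

First check your OpenCV version; it must not be too new. When installing the requirements, the Jetson downloads opencv-python 4.9.0 by default, which makes cv2.imshow() fail, as mentioned above. Watch out for this.

Prepare a camera; either a USB or a CSI-2 camera works.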

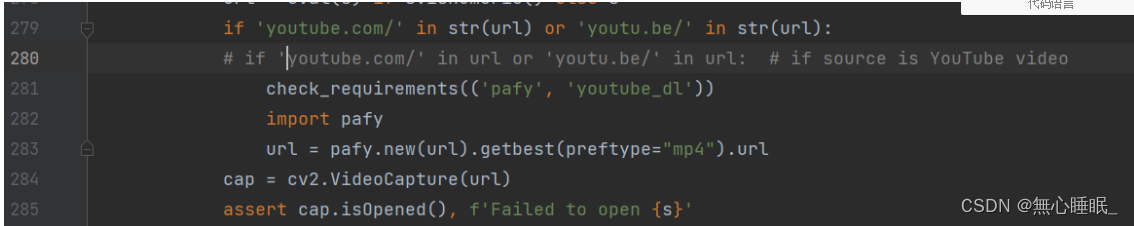

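Change line 280 of utils/datasets.py in the yolov5 folder to: if 'youtube.com/' in str(url) or 'youtu.be/' in str(url):

To display the live FPS, modify datasets.py and detect.py:

`datasets.py`: in `utils/datasets.py`, make the `__next__` method of the `LoadStreams` class return `self.fps`.

`detect.py`: use `cv2.putText` to draw the FPS on the current frame, and add `not` before `vid_cap` to avoid an error (because we now return only the FPS value, not the capture object), as shown below: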

# Stream results
if view_img:
    # show the current FPS, computed as 1 / (t2 - t1)
    cv2.putText(im0, "YOLOv5 FPS: {0}".format(float('%.3f' % (1 / (t2 - t1)))), (100, 50),
                cv2.FONT_HERSHEY_SIMPLEX, 1.5, (30, 144, 255), 3)
    cv2.imshow(str(p), im0)
    cv2.waitKey(1)  # 1 millisecond

# ... further down, where the output video writer is created:
if not vid_cap:  # 'not' added: vid_cap now carries an FPS number for streams, so streams take the else branch
    fps = vid_cap.get(cv2.CAP_PROP_FPS)
    w = int(vid_cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    h = int(vid_cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
else:  # stream
    fps, w, h = 30, im0.shape[1], im0.shape[0]
    save_path += '.mp4'
vid_writer = cv2.VideoWriter(save_path, cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))
vid_writer.write(im0)

Finally, run the following in the yolo (pytorch) environment:

python detect.py --source 0  # the first camera on a Jetson is usually index 0

With CUDA acceleration this runs at roughly 20-25 FPS, which still feels a bit laggy.
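The FPS shown above is the reciprocal of a single frame's processing time (t2 - t1), so it jumps around from frame to frame. For a steadier readout, one option is to smooth it with an exponential moving average; this is not what the modified detect.py does. The `FpsMeter` class below is a hypothetical helper: in detect.py you would create it once before the loop and pass `t2 - t1` to `update()`.

```python
from typing import Optional


class FpsMeter:
    """Smooths per-frame FPS readings with an exponential moving average."""

    def __init__(self, alpha: float = 0.1) -> None:
        self.alpha = alpha  # weight of the newest reading (0 < alpha <= 1)
        self.fps = None  # type: Optional[float]

    def update(self, frame_seconds: float) -> float:
        """Feed one frame's duration in seconds; return the smoothed FPS."""
        if frame_seconds <= 0:
            return self.fps or 0.0
        instant = 1.0 / frame_seconds
        if self.fps is None:
            self.fps = instant
        else:
            self.fps = (1 - self.alpha) * self.fps + self.alpha * instant
        return self.fps


meter = FpsMeter(alpha=0.1)
for dt in (0.040, 0.050, 0.045):  # example frame times in seconds
    print("%.1f FPS" % meter.update(dt))
```

A smaller `alpha` gives a smoother but slower-reacting number; `alpha=1` reproduces the unsmoothed per-frame value.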

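VIII. Installing PyCUDA (required before TensorRT acceleration)

I installed pycuda 2021.1. Avoid versions that are too new, or you will hit the same kind of problem: a mismatched pycuda version makes TensorRT acceleration fail.

1. Build and install from source

Official download page: https://pypi.org/project/pycuda/#history

tar xvzf pycuda-2021.1.tar.gz

cd pycuda-2021.1

python3 configure.py --cuda-root=/usr/local/cuda-10.2  # 1. cuda-10.2 is the name of your CUDA folder; change it to match your version

make -j4  # 2. compile with 4 cores; you can use more

sudo python3 setup.py install  # 3. it should report a successful installation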
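Once `setup.py install` finishes, a quick way to confirm that PyCUDA can talk to the GPU is to query the device. The sketch uses `pycuda.driver.init()`, `Device`, `compute_capability()`, `name()` and `total_memory()`. On a Xavier NX the compute capability should read 7.2, the same value passed to CUDA_ARCH_BIN when building OpenCV. Run it with the interpreter you installed into: a `sudo python3` install typically lands in the system Python, not the conda environment.

```python
from typing import Dict, Optional


def describe_cuda_device(index: int = 0) -> Optional[Dict[str, object]]:
    """Query a CUDA device through PyCUDA; None if PyCUDA or the GPU is unavailable."""
    try:
        import pycuda.driver as cuda
    except ImportError:
        return None
    try:
        cuda.init()
        device = cuda.Device(index)
        major, minor = device.compute_capability()
        return {
            "name": device.name(),
            "compute_capability": "%d.%d" % (major, minor),
            "total_memory_mb": device.total_memory() // (1024 * 1024),
        }
    except cuda.Error:
        return None


info = describe_cuda_device()
print(info)
```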

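IX. TensorRT model acceleration and real-time USB camera detection

1. Clone the projects

Only the YOLOv5 setup is covered here.

git clone -b v5.0 https://github.com/ultralytics/yolov5.git

git clone -b yolov5-v5.0 https://github.com/wang-xinyu/tensorrtx.git

The Jetson may be unable to clone these, for example because GitHub is unreachable without a proxy. In that case, clone them on a Windows machine and copy them over on a USB drive. Do not download them from anywhere else, since files may be missing.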

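2. Generate the engine file

1. Download yolov5s.pt into the weights folder of the yolov5 project (it was already downloaded for the demo test above).

2. Copy gen_wts.py from the tensorrtx/yolov5 folder into the yolov5 project.

3. Generate the yolov5s.wts file:

conda activate pytorch

cd /xxx/yolov5

# v5.0

python gen_wts.py -w yolov5s.pt -o yolov5s.wts  # generate the .wts weights file

# go to the tensorrtx/yolov5 folder

mkdir build

# copy yolov5s.wts from the yolov5 folder into tensorrtx/yolov5/build, and open a terminal in the build folder

cmake ..

make

sudo ./yolov5 -s yolov5s.wts yolov5s.engine s  # generates yolov5s.engine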
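The executable produced by the tensorrtx build can differ between checkouts; the troubleshooting note below suggests trying `yolov5_det` when `yolov5` is missing. The helper below is a hypothetical sketch, not part of tensorrtx. It picks whichever of the two executables exists in the build folder and assembles the same `-s <wts> <engine> s` arguments used above.

```python
import os
from typing import List, Optional

# Executable names mentioned in this guide, tried in order.
CANDIDATES = ("yolov5", "yolov5_det")


def find_builder(build_dir: str) -> Optional[str]:
    """Return the path of the first candidate executable in build_dir, or None."""
    for name in CANDIDATES:
        path = os.path.join(build_dir, name)
        if os.path.isfile(path) and os.access(path, os.X_OK):
            return path
    return None


def serialize_command(builder: str, wts: str, engine: str, variant: str = "s") -> List[str]:
    """argv that converts a .wts file into a TensorRT engine (variant s/m/l/x)."""
    return [builder, "-s", wts, engine, variant]


builder = find_builder(".")
if builder is None:
    print("no yolov5/yolov5_det executable here; check the cmake/make output")
else:
    print(" ".join(serialize_command(builder, "yolov5s.wts", "yolov5s.engine")))
    # To run it: subprocess.run(serialize_command(builder, ...), check=True)
```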

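Troubleshooting: "no such file" error when generating the engine

The cause is that the executable was never built, so it naturally cannot be found.

First, look back at the terminal output of the build. It should show a single "Built target yolov5" line (as in the screenshot above). If instead yolov5 is split into three parts, with three lines reporting a successful build, you may not have downloaded the official source (that was my problem); cloning directly from the URL above is the safer choice. If you are sure you have the official source, replace ./yolov5 with the following and try again:

sudo ./yolov5_det -s yolov5s.wts yolov5s.engine s

3. Running the accelerated model

1. Accelerated image detection

conda activate pytorch  # then go into the tensorrtx/yolov5 folder

python yolov5_trt.py

On the Jetson Xavier, running TensorRT-accelerated yolov5 can fail with ModuleNotFoundError: No module named 'tensorrt'.

Cause: TensorRT has not been symlinked into the environment, so the yolov5 script cannot find it.

Fix:

sudo find / -name 'tensorrt*'  # locate the TensorRT files

# go to the environment's site-packages directory (adjust the path to your own environment)

cd /home/nvidia/mambaforge/envs/yolov5/lib/python3.6/site-packages

# create the symlink

ln -s /usr/lib/python3.6/dist-packages/tensorrt  # if that fails, use: ln -s /usr/lib/python3.6/dist-packages/tensorrt/tensorrt.so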
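You can also create the same symlink from Python running inside the activated environment. This avoids guessing the environment's site-packages path (the `/home/nvidia/mambaforge/...` path above comes from a different setup). The sketch assumes the system TensorRT bindings live in `/usr/lib/python3.6/dist-packages/tensorrt`, and that the environment's Python is also 3.6, since the bindings are built for that version.

```python
import os
import sysconfig

# Location used in the steps above (JetPack 4.x, system Python 3.6).
SYSTEM_TENSORRT = "/usr/lib/python3.6/dist-packages/tensorrt"


def tensorrt_link_target(source: str, site_dir: str) -> str:
    """Path the symlink should have inside the environment's site-packages."""
    return os.path.join(site_dir, os.path.basename(source.rstrip("/")))


def link_tensorrt(source: str = SYSTEM_TENSORRT) -> str:
    """Symlink the system TensorRT package into the running interpreter's site-packages."""
    site_dir = sysconfig.get_paths()["purelib"]  # site-packages of the active (conda) Python
    target = tensorrt_link_target(source, site_dir)
    if not os.path.exists(source):
        raise FileNotFoundError(source)
    if not os.path.lexists(target):
        os.symlink(source, target)
    return target


if __name__ == "__main__" and os.path.exists(SYSTEM_TENSORRT):
    print("linked:", link_tensorrt())
```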

# run it again

python yolov5_trt.py

It should now run successfully.

2. Accelerated real-time camera detection

Only the Python implementation is given here; C++ versions can be found in other blog posts.

"""
An example that uses TensorRT's Python api to make inferences.
"""
import ctypes
import os
import shutil
import random
import sys
import threading
import time
import cv2
import numpy as np
import pycuda.autoinit
import pycuda.driver as cuda
import tensorrt as trt
import torch
import torchvision
import argparse

CONF_THRESH = 0.5
IOU_THRESHOLD = 0.4


def get_img_path_batches(batch_size, img_dir):
    ret = []
    batch = []
    for root, dirs, files in os.walk(img_dir):
        for name in files:
            if len(batch) == batch_size:
                ret.append(batch)
                batch = []
            batch.append(os.path.join(root, name))
    if len(batch) > 0:
        ret.append(batch)
    return ret

def plot_one_box(x, img, color=None, label=None, line_thickness=None):
    """
    description: Plots one bounding box on image img,
                 this function comes from YoLov5 project.
    param:
        x:      a box likes [x1,y1,x2,y2]
        img:    a opencv image object
        color:  color to draw rectangle, such as (0,255,0)
        label:  str
        line_thickness: int
    return:
        no return
    """
    tl = (
        line_thickness or round(0.002 * (img.shape[0] + img.shape[1]) / 2) + 1
    )  # line/font thickness
    color = color or [random.randint(0, 255) for _ in range(3)]
    c1, c2 = (int(x[0]), int(x[1])), (int(x[2]), int(x[3]))
    cv2.rectangle(img, c1, c2, color, thickness=tl, lineType=cv2.LINE_AA)
    if label:
        tf = max(tl - 1, 1)  # font thickness
        t_size = cv2.getTextSize(label, 0, fontScale=tl / 3, thickness=tf)[0]
        c2 = c1[0] + t_size[0], c1[1] - t_size[1] - 3
        cv2.rectangle(img, c1, c2, color, -1, cv2.LINE_AA)  # filled
        cv2.putText(
            img,
            label,
            (c1[0], c1[1] - 2),
            0,
            tl / 3,
            [225, 255, 255],
            thickness=tf,
            lineType=cv2.LINE_AA,
        )

class YoLov5TRT(object):
    """
    description: A YOLOv5 class that wraps TensorRT ops, preprocess and postprocess ops.
    """

    def __init__(self, engine_file_path):
        # Create a Context on this device,
        self.ctx = cuda.Device(0).make_context()
        stream = cuda.Stream()
        TRT_LOGGER = trt.Logger(trt.Logger.INFO)
        runtime = trt.Runtime(TRT_LOGGER)

        # Deserialize the engine from file
        with open(engine_file_path, "rb") as f:
            engine = runtime.deserialize_cuda_engine(f.read())
        context = engine.create_execution_context()

        host_inputs = []
        cuda_inputs = []
        host_outputs = []
        cuda_outputs = []
        bindings = []

        for binding in engine:
            print('binding:', binding, engine.get_binding_shape(binding))
            size = trt.volume(engine.get_binding_shape(binding)) * engine.max_batch_size
            dtype = trt.nptype(engine.get_binding_dtype(binding))
            # Allocate host and device buffers
            host_mem = cuda.pagelocked_empty(size, dtype)
            cuda_mem = cuda.mem_alloc(host_mem.nbytes)
            # Append the device buffer to device bindings.
            bindings.append(int(cuda_mem))
            # Append to the appropriate list.
            if engine.binding_is_input(binding):
                self.input_w = engine.get_binding_shape(binding)[-1]
                self.input_h = engine.get_binding_shape(binding)[-2]
                host_inputs.append(host_mem)
                cuda_inputs.append(cuda_mem)
            else:
                host_outputs.append(host_mem)
                cuda_outputs.append(cuda_mem)

        # Store
        self.stream = stream
        self.context = context
        self.engine = engine
        self.host_inputs = host_inputs
        self.cuda_inputs = cuda_inputs
        self.host_outputs = host_outputs
        self.cuda_outputs = cuda_outputs
        self.bindings = bindings
        self.batch_size = engine.max_batch_size

    def infer(self, raw_image):
        """Run one BGR frame (numpy array) through the engine.

        Returns the annotated frame and the inference time in seconds.
        """
        # Make self the active context, pushing it on top of the context stack.
        self.ctx.push()
        # Restore
        stream = self.stream
        context = self.context
        host_inputs = self.host_inputs
        cuda_inputs = self.cuda_inputs
        host_outputs = self.host_outputs
        cuda_outputs = self.cuda_outputs
        bindings = self.bindings
        # Do image preprocess
        batch_origin_h = []
        batch_origin_w = []
        batch_input_image = np.empty(shape=[self.batch_size, 3, self.input_h, self.input_w])
        input_image, image_raw, origin_h, origin_w = self.preprocess_image(raw_image)
        batch_origin_h.append(origin_h)
        batch_origin_w.append(origin_w)
        np.copyto(batch_input_image, input_image)
        batch_input_image = np.ascontiguousarray(batch_input_image)

        # Copy input image to host buffer
        np.copyto(host_inputs[0], batch_input_image.ravel())
        start = time.time()
        # Transfer input data to the GPU.
        cuda.memcpy_htod_async(cuda_inputs[0], host_inputs[0], stream)
        # Run inference.
        context.execute_async(batch_size=self.batch_size, bindings=bindings, stream_handle=stream.handle)
        # Transfer predictions back from the GPU.
        cuda.memcpy_dtoh_async(host_outputs[0], cuda_outputs[0], stream)
        # Synchronize the stream
        stream.synchronize()
        end = time.time()
        # Remove any context from the top of the context stack, deactivating it.
        self.ctx.pop()
        # Here we use the first row of output in that batch_size = 1
        output = host_outputs[0]
        # Do postprocess
        result_boxes, result_scores, result_classid = self.post_process(
            output, origin_h, origin_w)
        # Draw rectangles and labels on the original image
        for j in range(len(result_boxes)):
            box = result_boxes[j]
            plot_one_box(
                box,
                image_raw,
                label="{}:{:.2f}".format(
                    categories[int(result_classid[j])], result_scores[j]
                ),
            )
        return image_raw, end - start

    def destroy(self):
        # Remove any context from the top of the context stack, deactivating it.
        self.ctx.pop()

    def get_raw_image(self, image_path_batch):
        """
        description: Read an image from image path
        """
        for img_path in image_path_batch:
            yield cv2.imread(img_path)

    def get_raw_image_zeros(self, image_path_batch=None):
        """
        description: Ready data for warmup
        """
        for _ in range(self.batch_size):
            yield np.zeros([self.input_h, self.input_w, 3], dtype=np.uint8)

    def preprocess_image(self, raw_image):
        """
        description: Convert BGR image to RGB,
                     resize and pad it to target size, normalize to [0,1],
                     transform to NCHW format.
        param:
            raw_image: a BGR image as a numpy array (e.g. a camera frame)
        return:
            image:  the processed image
            image_raw: the original image
            h: original height
            w: original width
        """
        image_raw = raw_image
        h, w, c = image_raw.shape
        image = cv2.cvtColor(image_raw, cv2.COLOR_BGR2RGB)
        # Calculate width and height and paddings
        r_w = self.input_w / w
        r_h = self.input_h / h
        if r_h > r_w:
            tw = self.input_w
            th = int(r_w * h)
            tx1 = tx2 = 0
            ty1 = int((self.input_h - th) / 2)
            ty2 = self.input_h - th - ty1
        else:
            tw = int(r_h * w)
            th = self.input_h
            tx1 = int((self.input_w - tw) / 2)
            tx2 = self.input_w - tw - tx1
            ty1 = ty2 = 0
        # Resize the image with long side while maintaining ratio
        image = cv2.resize(image, (tw, th))
        # Pad the short side with (128,128,128); value must be passed by keyword,
        # since the 7th positional argument of copyMakeBorder is dst
        image = cv2.copyMakeBorder(
            image, ty1, ty2, tx1, tx2, cv2.BORDER_CONSTANT, value=(128, 128, 128)
        )
        image = image.astype(np.float32)
        # Normalize to [0,1]
        image /= 255.0
        # HWC to CHW format:
        image = np.transpose(image, [2, 0, 1])
        # CHW to NCHW format
        image = np.expand_dims(image, axis=0)
        # Convert the image to row-major order, also known as "C order":
        image = np.ascontiguousarray(image)
        return image, image_raw, h, w

    def xywh2xyxy(self, origin_h, origin_w, x):
        """
        description: Convert nx4 boxes from [x, y, w, h] to [x1, y1, x2, y2] where xy1=top-left, xy2=bottom-right
        param:
            origin_h:   height of original image
            origin_w:   width of original image
            x:          A boxes tensor, each row is a box [center_x, center_y, w, h]
        return:
            y:          A boxes tensor, each row is a box [x1, y1, x2, y2]
        """
        y = torch.zeros_like(x) if isinstance(x, torch.Tensor) else np.zeros_like(x)
        r_w = self.input_w / origin_w
        r_h = self.input_h / origin_h
        if r_h > r_w:
            y[:, 0] = x[:, 0] - x[:, 2] / 2
            y[:, 2] = x[:, 0] + x[:, 2] / 2
            y[:, 1] = x[:, 1] - x[:, 3] / 2 - (self.input_h - r_w * origin_h) / 2
            y[:, 3] = x[:, 1] + x[:, 3] / 2 - (self.input_h - r_w * origin_h) / 2
            y /= r_w
        else:
            y[:, 0] = x[:, 0] - x[:, 2] / 2 - (self.input_w - r_h * origin_w) / 2
            y[:, 2] = x[:, 0] + x[:, 2] / 2 - (self.input_w - r_h * origin_w) / 2
            y[:, 1] = x[:, 1] - x[:, 3] / 2
            y[:, 3] = x[:, 1] + x[:, 3] / 2
            y /= r_h

        return y

    def post_process(self, output, origin_h, origin_w):
        """
        description: postprocess the prediction
        param:
            output:     A tensor like [num_boxes,cx,cy,w,h,conf,cls_id, cx,cy,w,h,conf,cls_id, ...]
            origin_h:   height of original image
            origin_w:   width of original image
        return:
            result_boxes: final boxes, a boxes tensor, each row is a box [x1, y1, x2, y2]
            result_scores: final scores, a tensor, each element is the score corresponding to box
            result_classid: final classid, a tensor, each element is the classid corresponding to box
        """
        # Get the num of boxes detected
        num = int(output[0])
        # Reshape to a two dimensional ndarray
        pred = np.reshape(output[1:], (-1, 6))[:num, :]
        # to a torch Tensor
        pred = torch.Tensor(pred).cuda()
        # Get the boxes
        boxes = pred[:, :4]
        # Get the scores
        scores = pred[:, 4]
        # Get the classid
        classid = pred[:, 5]
        # Choose those boxes that score > CONF_THRESH
        si = scores > CONF_THRESH
        boxes = boxes[si, :]
        scores = scores[si]
        classid = classid[si]
        # Transform bbox from [center_x, center_y, w, h] to [x1, y1, x2, y2]
        boxes = self.xywh2xyxy(origin_h, origin_w, boxes)
        # Do nms
        indices = torchvision.ops.nms(boxes, scores, iou_threshold=IOU_THRESHOLD).cpu()
        result_boxes = boxes[indices, :].cpu()
        result_scores = scores[indices].cpu()
        result_classid = classid[indices].cpu()
        return result_boxes, result_scores, result_classid

class inferThread(threading.Thread):
    def __init__(self, yolov5_wrapper):
        threading.Thread.__init__(self)
        self.yolov5_wrapper = yolov5_wrapper

    def infer(self, frame):
        batch_image_raw, use_time = self.yolov5_wrapper.infer(frame)
        # for i, img_path in enumerate(self.image_path_batch):
        #     parent, filename = os.path.split(img_path)
        #     save_name = os.path.join('output', filename)
        #     # Save image
        #     cv2.imwrite(save_name, batch_image_raw[i])
        # print('input->{}, time->{:.2f}ms, saving into output/'.format(self.image_path_batch, use_time * 1000))
        return batch_image_raw, use_time


class warmUpThread(threading.Thread):
    def __init__(self, yolov5_wrapper):
        threading.Thread.__init__(self)
        self.yolov5_wrapper = yolov5_wrapper

    def run(self):
        # infer() takes a single image, so feed it one all-zero frame
        zeros = next(self.yolov5_wrapper.get_raw_image_zeros())
        image_raw, use_time = self.yolov5_wrapper.infer(zeros)
        print('warm_up->{}, time->{:.2f}ms'.format(image_raw.shape, use_time * 1000))

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument('--engine', type=str, default="build/yolov5s.engine", help='.engine path')
    parser.add_argument('--save', type=int, default=0, help='save?')
    opt = parser.parse_args()

    # load custom plugins
    PLUGIN_LIBRARY = "build/libmyplugins.so"
    engine_file_path = opt.engine
    ctypes.CDLL(PLUGIN_LIBRARY)

    # load coco labels
    categories = ["person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat",
                  "traffic light",
                  "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
                  "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase",
                  "frisbee",
                  "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard",
                  "surfboard",
                  "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
                  "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
                  "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard",
                  "cell phone",
                  "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors",
                  "teddy bear",
                  "hair drier", "toothbrush"]

    # a YoLov5TRT instance
    yolov5_wrapper = YoLov5TRT(engine_file_path)
    # camera index: change it to match your device (often 0)
    cap = cv2.VideoCapture(2)
    try:
        thread1 = inferThread(yolov5_wrapper)
        # run() has no target, so start()/join() return immediately;
        # inference is called synchronously in the loop below
        thread1.start()
        thread1.join()
        while True:
            ok, frame = cap.read()
            if not ok:
                # no frame: the camera is missing or was disconnected
                break
            img, t = thread1.infer(frame)
            fps = 1 / t  # based on inference time only
            imgout = cv2.putText(img, "FPS= %.2f" % fps, (0, 40), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 255, 0), 2)
            cv2.imshow("result", imgout)
            if cv2.waitKey(1) & 0xFF == ord('q'):  # 1 millisecond; press q to quit
                break
    finally:
        # destroy the instance
        cap.release()
        cv2.destroyAllWindows()
        yolov5_wrapper.destroy()

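Back up the original yolov5_trt.py somewhere else, create a new file with the same name, and paste the code above into it. Make sure the camera index in the code matches your camera (usually 0), then run it again:

python yolov5_trt.py

The FPS now reaches roughly 40-45. This completes the whole process, from flashing the board and setting up the environments to deploying a TensorRT-accelerated model. A line-by-line walkthrough of the yolov5 source code will follow later.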
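To make the preprocessing in `preprocess_image()` and the inverse mapping in `xywh2xyxy()` concrete, here is the same arithmetic as two standalone functions. This is a sketch: the 640x640 input size is an assumption, whereas the script itself reads the size from the engine. For a 1280x720 frame, the scale is 0.5, so the frame is resized to 640x360 and 140 rows of grey padding are added above and below. A box detected at network coordinates (320, 320, 64, 36) then maps back to (576, 324, 704, 396) in the original frame.

```python
from typing import Tuple


def letterbox_params(w: int, h: int, in_w: int = 640,
                     in_h: int = 640) -> Tuple[int, int, int, int, int, int]:
    """Resized size and padding (tw, th, tx1, tx2, ty1, ty2), as in preprocess_image()."""
    r_w, r_h = in_w / w, in_h / h
    if r_h > r_w:  # width is the limiting side: pad top and bottom
        tw, th = in_w, int(r_w * h)
        tx1 = tx2 = 0
        ty1 = int((in_h - th) / 2)
        ty2 = in_h - th - ty1
    else:  # height is the limiting side: pad left and right
        tw, th = int(r_h * w), in_h
        tx1 = int((in_w - tw) / 2)
        tx2 = in_w - tw - tx1
        ty1 = ty2 = 0
    return tw, th, tx1, tx2, ty1, ty2


def box_to_original(cx: float, cy: float, bw: float, bh: float, w: int, h: int,
                    in_w: int = 640, in_h: int = 640) -> Tuple[float, float, float, float]:
    """Map one network-space [cx, cy, w, h] box to [x1, y1, x2, y2] in the original
    image, using the same formulas as xywh2xyxy()."""
    r_w, r_h = in_w / w, in_h / h
    x1, y1, x2, y2 = cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2
    if r_h > r_w:
        pad = (in_h - r_w * h) / 2  # vertical padding added in preprocessing
        return x1 / r_w, (y1 - pad) / r_w, x2 / r_w, (y2 - pad) / r_w
    pad = (in_w - r_h * w) / 2  # horizontal padding added in preprocessing
    return (x1 - pad) / r_h, y1 / r_h, (x2 - pad) / r_h, y2 / r_h


# A 1280x720 camera frame fed to a 640x640 engine:
print(letterbox_params(1280, 720))
print(box_to_original(320, 320, 64, 36, 1280, 720))
```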
