Differentiable Renderer mesh_renderer

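mesh_renderer is a differentiable 3D mesh renderer for the TensorFlow framework. Its source code is available in Google's open-source organization on GitHub (https://github.com/google/tf_mesh_renderer), although it is not an official Google product. The core is written in C++. Python interfaces are provided in mesh_renderer.py and rasterize_triangles.py, and importing the TensorFlow ops defined in these two files adds differentiable rendering operations to a model.

The rasterizer takes as input the 3D vertex coordinates and, for each triangle, the ids of its three vertices. For every pixel of the rendered image it outputs the id of the triangle covering that pixel and the barycentric weights of that triangle's three vertices. The renderer also provides the derivatives of these per-pixel barycentric weights with respect to the vertex positions.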

Installation

Note that this renderer only supports Linux.

Build from source with Bazel: https://github.com/google/tf_mesh_renderer

Or install directly with pip:

```bash
pip install mesh_renderer
```

Python API

camera_utils.py

def look_at(eye, center, world_up):

"""Computes camera viewing matrices.

Functionality mimes gluLookAt (third_party/GL/glu/include/GLU/glu.h).

Args:

eye: 2-D float32 tensor with shape [batch_size, 3] containing the XYZ world

space position of the camera.输入相机在世界坐标系中的三维位置。

center: 2-D float32 tensor with shape [batch_size, 3] containing a position

along the center of the camera's gaze.输入相机中心向前方向上一点的坐标。

world_up: 2-D float32 tensor with shape [batch_size, 3] specifying the

world's up direction; the output camera will have no tilt with respect

to this direction.输入世界坐标系向上方向上的向量。

Returns:

A [batch_size, 4, 4] float tensor containing a right-handed camera

extrinsics matrix that maps points from world space to points in eye space.

输出世界坐标系转到相机坐标系的转换矩阵

"""

camera_matrices = tf.matmul(R, T)

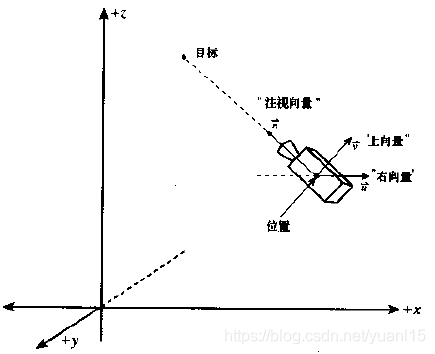

return camera_matriceslook_at函数是仿照openGL中的gluLookAt函数,用来计算世界坐标与相机坐标系的转换矩阵,最终是以齐次式来表达,返回矩阵camera_matrices为旋转矩阵R乘以位移矩阵T。

camera_matrices=R*T
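The body of look_at is omitted above, so here is a rough pure-Python sketch of the same kind of matrix for a single camera (no batch dimension). It assumes the standard gluLookAt construction: a side axis from forward × up, an up axis recomputed as side × forward, a rotation R whose third row is -forward (the camera looks down its negative z axis), and a translation T that moves eye to the origin. look_at_single and the underscore helpers are hypothetical names, not part of mesh_renderer, and this is not the library's implementation.

```python
import math


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def look_at_single(eye, center, world_up):
    """Returns the 4x4 world-to-eye matrix R @ T for one camera (sketch)."""
    forward = _normalize([c - e for c, e in zip(center, eye)])
    side = _normalize(_cross(forward, world_up))
    cam_up = _cross(side, forward)  # already unit length
    # R: rows are the camera axes; the camera looks down -z, hence -forward.
    r = [side + [0.0],
         cam_up + [0.0],
         [-f for f in forward] + [0.0],
         [0.0, 0.0, 0.0, 1.0]]
    # T: translates the camera position eye to the origin.
    t = [[1.0, 0.0, 0.0, -eye[0]],
         [0.0, 1.0, 0.0, -eye[1]],
         [0.0, 0.0, 1.0, -eye[2]],
         [0.0, 0.0, 0.0, 1.0]]
    return _matmul(r, t)
```

With eye = (0, 0, 5) looking at the origin and up = (0, 1, 0), the world origin is mapped to (0, 0, -5, 1): five units in front of the camera, along its negative z axis, as in OpenGL.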

```python
def perspective(aspect_ratio, fov_y, near_clip, far_clip):
    """Computes perspective transformation matrices.

    Functionality mimics gluPerspective (third_party/GL/glu/include/GLU/glu.h).

    Args:
        aspect_ratio: float value specifying the image aspect ratio
            (width/height).
        fov_y: 1-D float32 Tensor with shape [batch_size] specifying output
            vertical field of view in degrees.
        near_clip: 1-D float32 Tensor with shape [batch_size] specifying near
            clipping plane distance.
        far_clip: 1-D float32 Tensor with shape [batch_size] specifying far
            clipping plane distance.

    Returns:
        A [batch_size, 4, 4] float tensor that maps from right-handed points in
        eye space to left-handed points in clip space.
    """
    # ... (body omitted in this excerpt)
    return perspective_transform
```

perspective mimics OpenGL's gluPerspective function and returns the perspective projection matrix.
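Since the body of perspective is omitted, the sketch below writes out the standard gluPerspective matrix for a single camera in plain Python. It assumes that mesh_renderer's perspective builds this same matrix for each batch element. perspective_single and project are hypothetical helpers.

```python
import math


def perspective_single(aspect_ratio, fov_y, near_clip, far_clip):
    """4x4 gluPerspective-style projection matrix; fov_y is in degrees."""
    # cot(fov_y / 2): pi / 360 converts degrees to radians and halves the angle.
    f = 1.0 / math.tan(fov_y * math.pi / 360.0)
    depth_range = far_clip - near_clip
    return [
        [f / aspect_ratio, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, -(far_clip + near_clip) / depth_range,
         -2.0 * far_clip * near_clip / depth_range],
        [0.0, 0.0, -1.0, 0.0],  # w_clip = -z_eye
    ]


def project(m, p):
    """Applies m to the eye-space point (x, y, z, 1); returns NDC (x/w, y/w, z/w)."""
    x, y, z, w = [sum(m[i][j] * v for j, v in enumerate(list(p) + [1.0]))
                  for i in range(4)]
    return x / w, y / w, z / w
```

Points on the near and far planes land at NDC z = -1 and z = +1 respectively. This is the depth range that RasterizeTrianglesImpl later uses to clip pixels.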

```python
import tensorflow as tf


def transform_homogeneous(matrices, vertices):
    """Applies batched 4x4 homogeneous matrix transformations to 3-D vertices.

    The vertices are input and output as row-major, but are interpreted as
    column vectors multiplied on the right-hand side of the matrices. More
    explicitly, this function computes (MV^T)^T.
    Vertices are assumed to be xyz, and are extended to xyzw with w=1.

    Args:
        matrices: a [batch_size, 4, 4] tensor of matrices, e.g. the matrices
            that take the mesh from world space to clip space.
        vertices: a [batch_size, N, 3] tensor of xyz vertices.

    Returns:
        a [batch_size, N, 4] tensor of xyzw vertices (homogeneous clip-space
        coordinates when `matrices` includes the projection).

    Raises:
        ValueError: if matrices or vertices have the wrong number of dimensions.
    """
    if len(matrices.shape) != 3:
        raise ValueError(
            'matrices must have 3 dimensions (missing batch dimension?)')
    if len(vertices.shape) != 3:
        raise ValueError(
            'vertices must have 3 dimensions (missing batch dimension?)')
    # A column of ones appended to every vertex: (x, y, z) -> (x, y, z, 1).
    homogeneous_coord = tf.ones(
        [tf.shape(vertices)[0], tf.shape(vertices)[1], 1], dtype=tf.float32)
    vertices_homogeneous = tf.concat([vertices, homogeneous_coord], 2)
    # Row vectors times M^T, which equals (M V^T)^T.
    return tf.matmul(vertices_homogeneous, matrices, transpose_b=True)
```

transform_homogeneous multiplies the mesh vertices by a transformation matrix and returns the result, working entirely in homogeneous coordinates. homogeneous_coord is a column of ones that is concatenated to the vertices to make them homogeneous (w = 1). matrices is the combined transformation perspective_transform * R * T, built from the perspective, rotation and translation matrices described above.
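To make the (MV^T)^T convention concrete, here is a plain-Python analogue of transform_homogeneous for one batch element. transform_homogeneous_single is a hypothetical name. Each vertex gets w = 1 appended and is multiplied, as a column vector, by the matrix. The batched code above computes the same result with tf.matmul(..., transpose_b=True) on row-major vertices.

```python
def transform_homogeneous_single(matrix, vertices):
    """Plain-Python analogue of transform_homogeneous for one batch element.

    matrix: 4x4 nested list M; vertices: list of (x, y, z) tuples.
    Returns a list of (x, y, z, w) tuples, i.e. (M V^T)^T with w = 1 appended.
    """
    out = []
    for v in vertices:
        row = list(v) + [1.0]  # homogeneous coordinate w = 1
        # Entry i of M @ row is the dot product of row with the i-th row of M.
        out.append(tuple(sum(matrix[i][j] * row[j] for j in range(4))
                         for i in range(4)))
    return out
```

With a projection-style last row [0, 0, -1, 0], the output w equals -z. That is the value used later for the perspective divide.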

rasterize_triangles.py

The rasterization module rasterizes a 3D mesh and returns the attributes of each pixel after rasterization.

```python
def rasterize(world_space_vertices, attributes, triangles, camera_matrices,
              image_width, image_height, background_value):
    """Rasterizes a mesh and computes interpolated vertex attributes.

    Applies projection matrices and then calls rasterize_clip_space().

    Args:
        world_space_vertices: 3-D float32 tensor of xyz positions with shape
            [batch_size, vertex_count, 3], i.e. the mesh vertices in world
            space.
        attributes: 3-D float32 tensor with shape [batch_size, vertex_count,
            attribute_count]. Each vertex attribute is interpolated across the
            triangle using barycentric interpolation, giving per-pixel values.
        triangles: 2-D int32 tensor with shape [triangle_count, 3]. Each triplet
            should contain vertex indices describing a triangle such that the
            triangle's normal points toward the viewer if the forward order of
            the triplet defines a clockwise winding of the vertices. Gradients
            with respect to this tensor are not available.
        camera_matrices: 3-D float tensor with shape [batch_size, 4, 4]
            containing model-view-perspective projection matrices.
        image_width: int specifying desired output image width in pixels.
        image_height: int specifying desired output image height in pixels.
        background_value: a 1-D float32 tensor with shape [attribute_count].
            Pixels that lie outside all triangles take this value.

    Returns:
        A 4-D float32 tensor with shape [batch_size, image_height, image_width,
        attribute_count], containing the interpolated vertex attributes at each
        pixel.

    Raises:
        ValueError: An invalid argument to the method is detected.
    """
    clip_space_vertices = camera_utils.transform_homogeneous(
        camera_matrices, world_space_vertices)
    return rasterize_clip_space(clip_space_vertices, attributes, triangles,
                                image_width, image_height, background_value)
```

rasterize is the entry point for rasterization. It transforms the world-space mesh into clip space with camera_matrices and then rasterizes it onto the image plane.
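The sketch below traces one world-space point through the steps that rasterize relies on. It multiplies the point by a combined camera matrix (perspective_transform * R * T), divides by w, and maps NDC [-1, 1] to pixel coordinates using the same formula as the bounding-box code in RasterizeTrianglesImpl. The camera is hypothetical: a 90-degree field of view, placed at (0, 0, 5) and looking down -z, so R is the identity. The helper names are also hypothetical and chosen only for illustration.

```python
import math


def matmul4(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def world_to_pixel(camera_matrix, point, image_width, image_height):
    """Maps a world-space point to continuous pixel coordinates.

    Clip space via the combined camera matrix, then (as in
    RasterizeTrianglesImpl) divide by w and map NDC [-1, 1] to [0, size].
    """
    homogeneous = list(point) + [1.0]
    x, y, _, w = [sum(camera_matrix[i][j] * v for j, v in enumerate(homogeneous))
                  for i in range(4)]
    return ((x / w + 1.0) * 0.5 * image_width,
            (y / w + 1.0) * 0.5 * image_height)


# Hypothetical camera: gluPerspective-style projection (90 degrees, aspect 1,
# near 1, far 10) composed with a view that puts the camera at (0, 0, 5).
near, far = 1.0, 10.0
f = 1.0 / math.tan(math.radians(90.0) / 2.0)
projection = [[f, 0.0, 0.0, 0.0],
              [0.0, f, 0.0, 0.0],
              [0.0, 0.0, -(far + near) / (far - near),
               -2.0 * far * near / (far - near)],
              [0.0, 0.0, -1.0, 0.0]]
view = [[1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, -5.0],
        [0.0, 0.0, 0.0, 1.0]]
camera_matrix = matmul4(projection, view)  # perspective_transform * R * T
```

For a 64x64 image, the world origin lands at the image center (32, 32). The point (5, 0, 0) lies at the edge of the 90-degree frustum, so it lands on the right border (64, 32).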

```python
def rasterize_clip_space(clip_space_vertices, attributes, triangles,
                         image_width, image_height, background_value):
    """Rasterizes the input mesh expressed in clip-space (xyzw) coordinates.

    Interpolates vertex attributes using perspective-correct interpolation and
    clips triangles that lie outside the viewing frustum.

    Args:
        clip_space_vertices: 3-D float32 tensor of homogeneous vertices (xyzw)
            with shape [batch_size, vertex_count, 4].
        attributes: 3-D float32 tensor with shape [batch_size, vertex_count,
            attribute_count]. Each vertex attribute is interpolated across the
            triangle using barycentric interpolation.
        triangles: 2-D int32 tensor with shape [triangle_count, 3]. Each triplet
            should contain vertex indices describing a triangle such that the
            triangle's normal points toward the viewer if the forward order of
            the triplet defines a clockwise winding of the vertices. Gradients
            with respect to this tensor are not available.
        image_width: int specifying desired output image width in pixels.
        image_height: int specifying desired output image height in pixels.
        background_value: a 1-D float32 tensor with shape [attribute_count].
            Pixels that lie outside all triangles take this value (the
            background color).

    Returns:
        A 4-D float32 tensor with shape [batch_size, image_height, image_width,
        attribute_count], containing the interpolated vertex attributes at
        each pixel.

    Raises:
        ValueError: An invalid argument to the method is detected.
    """
    # ... (body omitted in this excerpt)
```

rasterize_clip_space rasterizes the mesh after it has been transformed into clip space. It iterates over the batch and rasterizes the samples one at a time by calling the C++ op rasterize_triangles. For each pixel, the op returns the barycentric weights of the covering triangle's vertices (barycentric_coords) and the index of that triangle (triangle_ids). Each pixel's attributes are then computed as the barycentric-weighted sum of the attributes of the triangle's three vertices.
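The body of rasterize_clip_space is omitted above. The following plain-Python sketch shows only the interpolation step described in the previous paragraph, for one image: it gathers the three vertex attributes of each pixel's triangle and weights them by the pixel's barycentric coordinates. interpolate_attributes is a hypothetical helper. Choosing the background with an all-zero-barycentrics test follows the convention documented for the C++ op's barycentric output, but it is a simplification; the library may blend in background_value differently.

```python
def interpolate_attributes(triangle_ids, barycentrics, triangles, attributes,
                           background_value):
    """Per-pixel barycentric interpolation of vertex attributes (one image).

    triangle_ids: per-pixel triangle id (0 where nothing was drawn).
    barycentrics: per-pixel (b0, b1, b2); all zero where nothing was drawn.
    triangles: list of (i0, i1, i2) vertex ids.
    attributes: per-vertex attribute lists (e.g. RGB colors).
    background_value: attribute list for pixels not covered by any triangle.
    """
    out = []
    for tri_id, bary in zip(triangle_ids, barycentrics):
        if not any(bary):
            # No triangle covers this pixel: use the background value.
            out.append(list(background_value))
            continue
        corners = [attributes[v] for v in triangles[tri_id]]
        out.append([sum(b * corner[k] for b, corner in zip(bary, corners))
                    for k in range(len(background_value))])
    return out
```

For example, with red, green and blue vertex colors, a pixel with weights (0.5, 0.25, 0.25) gets the color (0.5, 0.25, 0.25), and a pixel with all-zero weights gets background_value.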

C++ API

rasterize_triangles_impl.h

```cpp
#ifndef MESH_RENDERER_KERNELS_RASTERIZE_TRIANGLES_IMPL_H_
#define MESH_RENDERER_KERNELS_RASTERIZE_TRIANGLES_IMPL_H_

namespace tf_mesh_renderer {

// Copied from tensorflow/core/platform/default/integral_types.h
// to avoid making this file depend on tensorflow.
typedef int int32;
typedef long long int64;

// Computes the triangle id, barycentric coordinates, and z-buffer at each pixel
// in the image.
//
// vertices: A flattened 2D array with 4*vertex_count elements.
//     Each contiguous quadruplet is the XYZW location of the vertex with that
//     quadruplet's id. The coordinates are assumed to be OpenGL-style
//     clip-space (i.e., post-projection, pre-divide), where X points right,
//     Y points up, Z points away.
//     (Input: homogeneous vertex coordinates; points to 4*vertex_count floats.)
// triangles: A flattened 2D array with 3*triangle_count elements.
//     Each contiguous triplet is the three vertex ids indexing into vertices
//     describing one triangle with clockwise winding.
//     (Input: the vertex ids of each triangle; points to 3*triangle_count
//     ints.)
// triangle_count: The number of triangles stored in the array triangles.
// triangle_ids: A flattened 2D array with image_height*image_width elements.
//     At return, each pixel contains a triangle id in the range
//     [0, triangle_count). The id value is also 0 if there is no triangle
//     at the pixel. The barycentric_coordinates must be checked to
//     distinguish the two cases.
//     (Output: the id of the triangle covering each pixel after
//     rasterization; points to image_width*image_height ints.)
// barycentric_coordinates: A flattened 3D array with
//     image_height*image_width*3 elements. At return, contains the triplet of
//     barycentric coordinates at each pixel in the same vertex ordering as
//     triangles. If no triangle is present, all coordinates are 0.
//     (Output: the barycentric interpolation weights.)
// z_buffer: A flattened 2D array with image_height*image_width elements. At
//     return, contains the normalized device Z coordinates of the rendered
//     triangles.
void RasterizeTrianglesImpl(const float* vertices, const int32* triangles,
                            int32 triangle_count, int32 image_width,
                            int32 image_height, int32* triangle_ids,
                            float* barycentric_coordinates, float* z_buffer);

}  // namespace tf_mesh_renderer

#endif  // MESH_RENDERER_KERNELS_RASTERIZE_TRIANGLES_IMPL_H_
```

rasterize_triangles_impl.cc

```cpp
#include <algorithm>
#include <cmath>

#include "rasterize_triangles_impl.h"

namespace tf_mesh_renderer {

namespace {

// Takes the minimum of a, b, and c, rounds down, and converts to an integer
// in the range [low, high].
inline int ClampedIntegerMin(float a, float b, float c, int low, int high) {
  return std::min(
      std::max(static_cast<int>(std::floor(std::min(std::min(a, b), c))), low),
      high);
}

// Takes the maximum of a, b, and c, rounds up, and converts to an integer
// in the range [low, high].
inline int ClampedIntegerMax(float a, float b, float c, int low, int high) {
  return std::min(
      std::max(static_cast<int>(std::ceil(std::max(std::max(a, b), c))), low),
      high);
}

// Computes a 3x3 matrix inverse without dividing by the determinant.
// Instead, makes an unnormalized matrix inverse with the correct sign
// by flipping the sign of the matrix if the determinant is negative.
// By leaving out determinant division, the rows of M^-1 only depend on two out
// of three of the columns of M; i.e., the first row of M^-1 only depends on the
// second and third columns of M, the second only depends on the first and
// third, etc. This means we can compute edge functions for two neighboring
// triangles independently and produce exactly the same numerical result up to
// the sign. This in turn means we can avoid cracks in rasterization without
// using fixed-point arithmetic.
// See http://mathworld.wolfram.com/MatrixInverse.html
void ComputeUnnormalizedMatrixInverse(const float a11, const float a12,
                                      const float a13, const float a21,
                                      const float a22, const float a23,
                                      const float a31, const float a32,
                                      const float a33, float m_inv[9]) {
  m_inv[0] = a22 * a33 - a32 * a23;
  m_inv[1] = a13 * a32 - a33 * a12;
  m_inv[2] = a12 * a23 - a22 * a13;
  m_inv[3] = a23 * a31 - a33 * a21;
  m_inv[4] = a11 * a33 - a31 * a13;
  m_inv[5] = a13 * a21 - a23 * a11;
  m_inv[6] = a21 * a32 - a31 * a22;
  m_inv[7] = a12 * a31 - a32 * a11;
  m_inv[8] = a11 * a22 - a21 * a12;

  // The first column of the unnormalized M^-1 contains intermediate values for
  // det(M).
  const float det = a11 * m_inv[0] + a12 * m_inv[3] + a13 * m_inv[6];

  // Transfer the sign of the determinant.
  if (det < 0.0f) {
    for (int i = 0; i < 9; ++i) {
      m_inv[i] = -m_inv[i];
    }
  }
}
```
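As a quick numerical check of what ComputeUnnormalizedMatrixInverse returns, the Python port below can be used (unnormalized_inverse and matmul3 are hypothetical names). The result is the adjugate of M with the determinant's sign folded in, so M times it equals |det(M)| times the identity. Row 0 of the result also does not change when column 0 of M changes.

```python
def unnormalized_inverse(a11, a12, a13, a21, a22, a23, a31, a32, a33):
    """Python port of ComputeUnnormalizedMatrixInverse (row-major result)."""
    m_inv = [a22 * a33 - a32 * a23, a13 * a32 - a33 * a12, a12 * a23 - a22 * a13,
             a23 * a31 - a33 * a21, a11 * a33 - a31 * a13, a13 * a21 - a23 * a11,
             a21 * a32 - a31 * a22, a12 * a31 - a32 * a11, a11 * a22 - a21 * a12]
    # Cofactor expansion along the first row of M gives det(M).
    det = a11 * m_inv[0] + a12 * m_inv[3] + a13 * m_inv[6]
    if det < 0.0:
        m_inv = [-x for x in m_inv]  # transfer the sign of the determinant
    return m_inv


def matmul3(a, b):
    """Row-major product of two 3x3 matrices stored as flat lists of 9."""
    return [sum(a[3 * i + k] * b[3 * k + j] for k in range(3))
            for i in range(3) for j in range(3)]
```

In RasterizeTrianglesImpl the columns of M are the (x, y, w) clip coordinates of the three vertices. Row i, the edge function for vertex i, therefore depends only on the other two vertices, that is, on the edge opposite vertex i. Two triangles that share an edge compute that edge function from the same two vertices and get the same numerical result up to sign. This is the crack-free property the comment above describes.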

```cpp
// Computes the edge functions from M^-1 as described by Olano and Greer,
// "Triangle Scan Conversion using 2D Homogeneous Coordinates."
//
// This function combines equations (3) and (4). It first computes
// [a b c] = u_i * M^-1, where u_0 = [1 0 0], u_1 = [0 1 0], etc.,
// then computes edge_i = aX + bY + c
void ComputeEdgeFunctions(const float px, const float py, const float m_inv[9],
                          float values[3]) {
  for (int i = 0; i < 3; ++i) {
    const float a = m_inv[3 * i + 0];
    const float b = m_inv[3 * i + 1];
    const float c = m_inv[3 * i + 2];

    values[i] = a * px + b * py + c;
  }
}

// Determines whether the point p lies inside a front-facing triangle.
// Counts pixels exactly on an edge as inside the triangle, as long as the
// triangle is not degenerate. Degenerate (zero-area) triangles always fail the
// inside test.
bool PixelIsInsideTriangle(const float edge_values[3]) {
  // Check that the edge values are all non-negative and that at least one is
  // positive (triangle is non-degenerate).
  return (edge_values[0] >= 0 && edge_values[1] >= 0 && edge_values[2] >= 0) &&
         (edge_values[0] > 0 || edge_values[1] > 0 || edge_values[2] > 0);
}

}  // namespace
```
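The following Python port of ComputeEdgeFunctions and PixelIsInsideTriangle also includes the normalization step from RasterizeTrianglesImpl. It computes the barycentric coordinates of an NDC point for a triangle given in clip-space (x, y, w). The function names are hypothetical. The edge values are proportional to b_i / w, so dividing them by their sum yields barycentric coordinates in clip space, which are the perspective-correct ones.

```python
def unnormalized_inverse(m):
    """Adjugate of a row-major 3x3 matrix (flat list of 9), sign-fixed."""
    a11, a12, a13, a21, a22, a23, a31, a32, a33 = m
    inv = [a22 * a33 - a32 * a23, a13 * a32 - a33 * a12, a12 * a23 - a22 * a13,
           a23 * a31 - a33 * a21, a11 * a33 - a31 * a13, a13 * a21 - a23 * a11,
           a21 * a32 - a31 * a22, a12 * a31 - a32 * a11, a11 * a22 - a21 * a12]
    det = a11 * inv[0] + a12 * inv[3] + a13 * inv[6]
    return [-x for x in inv] if det < 0.0 else inv


def edge_functions(px, py, m_inv):
    """Port of ComputeEdgeFunctions: values[i] = a * px + b * py + c."""
    return [m_inv[3 * i] * px + m_inv[3 * i + 1] * py + m_inv[3 * i + 2]
            for i in range(3)]


def pixel_is_inside(values):
    """Port of PixelIsInsideTriangle: all >= 0 and at least one > 0."""
    return all(v >= 0 for v in values) and any(v > 0 for v in values)


def barycentrics_at(px, py, verts):
    """verts: three clip-space (x, y, w) tuples. Returns (b0, b1, b2) or None."""
    (x0, y0, w0), (x1, y1, w1), (x2, y2, w2) = verts
    # Columns of M are the vertices, as in RasterizeTrianglesImpl.
    m_inv = unnormalized_inverse([x0, x1, x2, y0, y1, y2, w0, w1, w2])
    b_over_w = edge_functions(px, py, m_inv)
    if not pixel_is_inside(b_over_w):
        return None
    one_over_w = sum(b_over_w)
    return tuple(b / one_over_w for b in b_over_w)
```

For a triangle whose vertices all have w = 1, the result is ordinary 2D barycentric coordinates: the centroid gets 1/3 for each vertex. With unequal w, for example vertices (-1, -1, 1), (2, -2, 2) and (-1, 1, 1), the clip-space point 0.25·v0 + 0.5·v1 + 0.25·v2 projects to NDC (1/3, -2/3). The function returns (0.25, 0.5, 0.25) there, not the screen-space weights (1/6, 2/3, 1/6).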

```cpp
void RasterizeTrianglesImpl(const float* vertices, const int32* triangles,
                            int32 triangle_count, int32 image_width,
                            int32 image_height, int32* triangle_ids,
                            float* barycentric_coordinates, float* z_buffer) {
  const float half_image_width = 0.5 * image_width;
  const float half_image_height = 0.5 * image_height;
  float unnormalized_matrix_inverse[9];
  float b_over_w[3];

  for (int32 triangle_id = 0; triangle_id < triangle_count; ++triangle_id) {
    // Offsets of the triangle's three vertices in `vertices` (4 floats each).
    const int32 v0_x_id = 4 * triangles[3 * triangle_id];
    const int32 v1_x_id = 4 * triangles[3 * triangle_id + 1];
    const int32 v2_x_id = 4 * triangles[3 * triangle_id + 2];

    // Clip-space w coordinates of the three vertices.
    const float v0w = vertices[v0_x_id + 3];
    const float v1w = vertices[v1_x_id + 3];
    const float v2w = vertices[v2_x_id + 3];
    // Early exit: if all w < 0, triangle is entirely behind the eye.
    if (v0w < 0 && v1w < 0 && v2w < 0) {
      continue;
    }

    const float v0x = vertices[v0_x_id];
    const float v0y = vertices[v0_x_id + 1];
    const float v1x = vertices[v1_x_id];
    const float v1y = vertices[v1_x_id + 1];
    const float v2x = vertices[v2_x_id];
    const float v2y = vertices[v2_x_id + 1];

    ComputeUnnormalizedMatrixInverse(v0x, v1x, v2x, v0y, v1y, v2y, v0w, v1w,
                                     v2w, unnormalized_matrix_inverse);

    // Initialize the bounding box to the entire screen.
    int left = 0, right = image_width, bottom = 0, top = image_height;
    // If the triangle is entirely in front of the camera (all w > 0), project
    // the vertices to pixel coordinates and find the triangle bounding box
    // enlarged to the nearest integer and clamped to the image boundaries.
    if (v0w > 0 && v1w > 0 && v2w > 0) {
      // Perspective divide to NDC, then map NDC [-1, 1] to pixel
      // coordinates [0, image size].
      const float p0x = (v0x / v0w + 1.0) * half_image_width;
      const float p1x = (v1x / v1w + 1.0) * half_image_width;
      const float p2x = (v2x / v2w + 1.0) * half_image_width;
      const float p0y = (v0y / v0w + 1.0) * half_image_height;
      const float p1y = (v1y / v1w + 1.0) * half_image_height;
      const float p2y = (v2y / v2w + 1.0) * half_image_height;
      left = ClampedIntegerMin(p0x, p1x, p2x, 0, image_width);
      right = ClampedIntegerMax(p0x, p1x, p2x, 0, image_width);
      bottom = ClampedIntegerMin(p0y, p1y, p2y, 0, image_height);
      top = ClampedIntegerMax(p0y, p1y, p2y, 0, image_height);
    }

    // Iterate over each pixel in the bounding box.
    for (int iy = bottom; iy < top; ++iy) {
      for (int ix = left; ix < right; ++ix) {
        // Pixel center in NDC.
        const float px = ((ix + 0.5) / half_image_width) - 1.0;
        const float py = ((iy + 0.5) / half_image_height) - 1.0;
        const int pixel_idx = iy * image_width + ix;

        ComputeEdgeFunctions(px, py, unnormalized_matrix_inverse, b_over_w);
        if (!PixelIsInsideTriangle(b_over_w)) {
          continue;
        }

        // Normalize the edge values (proportional to b_i / w) into
        // barycentric coordinates.
        const float one_over_w = b_over_w[0] + b_over_w[1] + b_over_w[2];
        const float b0 = b_over_w[0] / one_over_w;
        const float b1 = b_over_w[1] / one_over_w;
        const float b2 = b_over_w[2] / one_over_w;

        const float v0z = vertices[v0_x_id + 2];
        const float v1z = vertices[v1_x_id + 2];
        const float v2z = vertices[v2_x_id + 2];
        // Since we computed an unnormalized w above, we need to recompute
        // a properly scaled clip-space w value and then divide clip-space z
        // by that.
        const float clip_z = b0 * v0z + b1 * v1z + b2 * v2z;
        const float clip_w = b0 * v0w + b1 * v1w + b2 * v2w;
        const float z = clip_z / clip_w;

        // Skip the pixel if it is farther than the current z-buffer pixel or
        // beyond the near or far clipping plane.
        if (z < -1.0 || z > 1.0 || z > z_buffer[pixel_idx]) {
          continue;
        }

        triangle_ids[pixel_idx] = triangle_id;
        z_buffer[pixel_idx] = z;
        barycentric_coordinates[3 * pixel_idx + 0] = b0;
        barycentric_coordinates[3 * pixel_idx + 1] = b1;
        barycentric_coordinates[3 * pixel_idx + 2] = b2;
      }
    }
  }
}

}  // namespace tf_mesh_renderer
```

The rasterizer uses the z-buffer algorithm to determine which triangle is visible at each pixel and computes that pixel's perspective-correct barycentric coordinates. The Python side (rasterize_clip_space) then uses these weights to interpolate the vertex attributes and obtain each pixel's attribute values.
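To experiment with the algorithm without building the TensorFlow op, here is a slow pure-Python port of RasterizeTrianglesImpl that follows the C++ loop step by step. The function names are hypothetical. One assumption is made explicit. RasterizeTrianglesImpl never initializes its output buffers: it only compares against and overwrites z_buffer. The port therefore allocates the buffers itself, with ids and barycentrics set to 0 and depth set to 1.0 (the far plane in NDC). We assume the calling TensorFlow kernel does something equivalent.

```python
import math


def _adjugate_sign_fixed(a11, a12, a13, a21, a22, a23, a31, a32, a33):
    """Same as ComputeUnnormalizedMatrixInverse, returning a flat list."""
    inv = [a22 * a33 - a32 * a23, a13 * a32 - a33 * a12, a12 * a23 - a22 * a13,
           a23 * a31 - a33 * a21, a11 * a33 - a31 * a13, a13 * a21 - a23 * a11,
           a21 * a32 - a31 * a22, a12 * a31 - a32 * a11, a11 * a22 - a21 * a12]
    det = a11 * inv[0] + a12 * inv[3] + a13 * inv[6]
    return [-x for x in inv] if det < 0.0 else inv


def rasterize_triangles(vertices, triangles, image_width, image_height):
    """Pure-Python port of RasterizeTrianglesImpl (slow; for illustration).

    vertices: flat list of clip-space x, y, z, w (4 floats per vertex).
    triangles: flat list of vertex ids (3 per triangle).
    Returns (triangle_ids, barycentric_coordinates, z_buffer) as flat lists.
    """
    n = image_width * image_height
    triangle_ids = [0] * n
    barycentric_coordinates = [0.0] * (3 * n)
    z_buffer = [1.0] * n  # assumed initialization: the far plane in NDC
    half_w, half_h = 0.5 * image_width, 0.5 * image_height
    for tri in range(len(triangles) // 3):
        ids = [4 * triangles[3 * tri + k] for k in range(3)]
        xs = [vertices[i] for i in ids]
        ys = [vertices[i + 1] for i in ids]
        zs = [vertices[i + 2] for i in ids]
        ws = [vertices[i + 3] for i in ids]
        if all(w < 0 for w in ws):  # entirely behind the eye
            continue
        m_inv = _adjugate_sign_fixed(*xs, *ys, *ws)
        left, right, bottom, top = 0, image_width, 0, image_height
        if all(w > 0 for w in ws):  # clamp the loop to the bounding box
            pxs = [(x / w + 1.0) * half_w for x, w in zip(xs, ws)]
            pys = [(y / w + 1.0) * half_h for y, w in zip(ys, ws)]
            left = min(max(math.floor(min(pxs)), 0), image_width)
            right = min(max(math.ceil(max(pxs)), 0), image_width)
            bottom = min(max(math.floor(min(pys)), 0), image_height)
            top = min(max(math.ceil(max(pys)), 0), image_height)
        for iy in range(bottom, top):
            for ix in range(left, right):
                px = (ix + 0.5) / half_w - 1.0  # pixel center in NDC
                py = (iy + 0.5) / half_h - 1.0
                b_over_w = [m_inv[3 * i] * px + m_inv[3 * i + 1] * py
                            + m_inv[3 * i + 2] for i in range(3)]
                if not (all(v >= 0 for v in b_over_w)
                        and any(v > 0 for v in b_over_w)):
                    continue
                one_over_w = sum(b_over_w)
                b = [v / one_over_w for v in b_over_w]
                clip_z = sum(bi * zi for bi, zi in zip(b, zs))
                clip_w = sum(bi * wi for bi, wi in zip(b, ws))
                z = clip_z / clip_w
                pixel = iy * image_width + ix
                if z < -1.0 or z > 1.0 or z > z_buffer[pixel]:
                    continue  # clipped, or hidden behind a nearer triangle
                triangle_ids[pixel] = tri
                z_buffer[pixel] = z
                barycentric_coordinates[3 * pixel:3 * pixel + 3] = b
    return triangle_ids, barycentric_coordinates, z_buffer
```

For example, rasterize two triangles into a 4x4 image: a near triangle covering the lower-left half, and a farther triangle covering the whole image. The near triangle's id remains in the lower-left pixels and the far triangle's id appears elsewhere, which is the z-buffer behavior described above.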

Appendix

Transforming world coordinates to view (eye) coordinates

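In OpenGL, gluLookAt(eye, center, up) places the camera at eye and points its line of sight at center. up specifies the world's up direction, and the camera is oriented so that it has no tilt with respect to it. mesh_renderer's look_at takes the same parameters.

Camera position: C = eye = (Cx, Cy, Cz)

Viewing direction: N = center - eye = (Nx, Ny, Nz)

Camera x axis: U = N × up

Camera up axis: V = U × N (recomputed from U and N so that the three axes are mutually perpendicular)

After the direction vectors are normalized, C, U, V and N define the view coordinate system. Note that OpenGL and mesh_renderer's look_at use -N rather than N as the camera's z axis, because the camera looks down its negative z axis; the derivation below keeps N.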

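The goal is to convert a point p = (objx, objy, objz) in world coordinates into camera coordinates (obju, objv, objn). The coordinates of p in the uvn frame are its components along the u, v and n axes, and the component of a vector along a unit vector is their dot product. The coordinates are therefore obtained by taking the vector from the camera position C = eye (not center) to p and dotting it with U, V and N: obju = (p - C)·U, objv = (p - C)·V, objn = (p - C)·N.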

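In homogeneous row-vector form this is

$$
(obj_u,\ obj_v,\ obj_n,\ 1) = (obj_x,\ obj_y,\ obj_z,\ 1)
\begin{pmatrix}
1 & 0 & 0 & 0\\
0 & 1 & 0 & 0\\
0 & 0 & 1 & 0\\
-C_x & -C_y & -C_z & 1
\end{pmatrix}
\begin{pmatrix}
U_x & V_x & N_x & 0\\
U_y & V_y & N_y & 0\\
U_z & V_z & N_z & 0\\
0 & 0 & 0 & 1
\end{pmatrix}
$$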
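A small numerical check of this formula follows. The first hypothetical helper computes the three dot products (p - C)·U, (p - C)·V, (p - C)·N directly. The second multiplies the homogeneous row vector by the translation matrix and then the rotation matrix, exactly as written above. look_at uses column vectors instead, so its camera_matrices = R * T corresponds to the transpose of this row-vector product, with -N in place of N as noted earlier.

```python
def world_to_uvn(p, c, u, v, n):
    """(obju, objv, objn) as dot products of (p - C) with the axes U, V, N."""
    d = [pi - ci for pi, ci in zip(p, c)]
    return tuple(sum(di * ai for di, ai in zip(d, axis)) for axis in (u, v, n))


def world_to_uvn_matrix(p, c, u, v, n):
    """Row vector (p, 1) times the translation matrix, then the rotation."""
    translate = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0],
                 [-c[0], -c[1], -c[2], 1]]
    rotate = [[u[0], v[0], n[0], 0], [u[1], v[1], n[1], 0],
              [u[2], v[2], n[2], 0], [0, 0, 0, 1]]
    row = list(p) + [1]
    for m in (translate, rotate):
        row = [sum(row[k] * m[k][j] for k in range(4)) for j in range(4)]
    return tuple(row[:3])
```

For a camera at C = (0, 0, 5) looking toward the origin (N = (0, 0, -1)), the point (1, 2, 3) gets objn = 2: it lies two units in front of the camera.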

References

Unsupervised Training for 3D Morphable Model Regression. Kyle Genova, Forrester Cole, Aaron Maschinot, Aaron Sarna, Daniel Vlasic, and William T. Freeman. CVPR 2018, pp. 8377-8386.

Triangle Scan Conversion using 2D Homogeneous Coordinates. Marc Olano and Trey Greer. HWWS 1997.
